Computation: Cleaner, Faster, Seamless, and Higher Quality
Alan Edelman
Massachusetts Institute of Technology


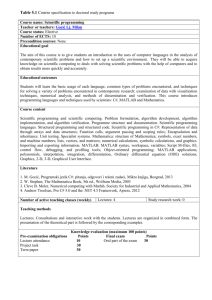

Unique Opportunity
• Raise the bar for all functions:
  – Linear Algebra!
  – Elementary Functions
  – Special Functions
  – Hard Functions: Optimization/Diff Eqs
  – Combinatorial and Set Functions
  – 0-d, 1-d, n-d arrays, empty arrays
  – Inf, Not Available (NaN)
  – Types (integer, double, complex) and embeddings
    • (real inside complex, matrices inside n-d arrays)
• Methodology
  – Work in Progress: Create a web experience that guides and educates users in the amount of time that it takes to say Wikipedia
  – Futuristic?: The Semantic Web and Other Automations?

What the world understands about floating point:
• Typical user: In grade school, math was either right or wrong. Getting it “wrong” meant: bad boy/bad girl. (Very shameful!)
• By extension, if a computer gets it wrong: “Shame, Bad computer! Bad programmer!”
• People can understand a bad digit or two in the insignificant part at the end. We might say “small forward error,” but they think of something more like fuzzy measurement error.

The Linear Algebra World
• Some of the world’s best practices.
• Still inconsistent, still complicated.
• You can never go wrong reading a book by someone named Nick, a paper or key example by Velvel, or all the good stuff on error bounds in LAPACK and in papers from our community.

Landscape of Environments
• Traditional Languages plus libraries
  – (Fortran, C, C++, Java?)
• Proprietary Environments
  – MATLAB®, Mathematica, Maple
• Freely Available Open Source
  – Scilab, Python (SciPy) (Enthought!), R, Octave
• Other Major Players
  – Excel, Labview, PVWave

Computing Environments: what’s appropriate?
• “Good” numerics?
  – Who defines good?
  – How do we evaluate?
• Seamless portability?
  – Rosetta stone?
  – “Consumer Reports?”
• Easy to learn?
• Good documentation?
• Proprietary or Free?
  – “Download, next, next, next, enter, and compute?”

Numerical Errors: What’s appropriate?
• The exact answer?
• What MATLAB® gives!
• float(f(x))??? (e.g. some trig libraries)
• Small Forward Error
• Small Backward Error
• Nice Mathematical Properties
• What people expect, right or wrong
• Consistent?
• Nice mathematics?

Simple Ill Conditioning
• Matrix:

    >> a=magic(4);inv(a)
    Warning: Matrix is close to singular or badly scaled.
             Results may be inaccurate. RCOND = 1.306145e-17.

• Statistics Function: “erfinv”

    erfinv(1-eps)
      Exact:  5.8050186831934533001812
      MATLAB: 5.805365688510648

  – erfinv(1-eps-eps/2) is exactly 5.77049185355333287054782
  – erfinv(1-eps+eps/2) is exactly 5.86358474875516792720766
  – (1-eps-eps/2 and 1-eps+eps/2 are the floating-point neighbors of 1-eps)
  – condition number is O(1e14)

Some Conclusions?
• MATLAB® should have had a small forward error, and it’s been bad?
• Nobody would ever ask for erfinv at such a strange argument?
• MATLAB® should have been consistent and warned us, as it so nicely does with “inv”, which raises the bar high, but it has let us down on all the other functions?
• We have a good backward error, so we need not worry?
• Users are in either extremely bad shape or extremely good shape anyway:
  – Have such huge problems due to ill-conditioning that they should have formulated the problem completely differently (as in, they should have asked for the pseudospectra, not the spectra!)
  – The ill-conditioning is not a problem because later they apply the inverse function or project onto a subspace where it doesn’t matter

Discontinuity
• octave:> atan(1+i*2^64*(1+[0 eps]))

    ans =
       1.5708  -1.5708

• Exact? tan(x+pi) is tan(x), after all, so is it okay to return atan(x) + pi * any random integer?
• There is an official atan, with a definition on the branch cuts ±i(1, ∞). It is continuous everywhere else!
• MATLAB: atan(i*2^509) is pi/2 and atan(i*2^510) is –pi/2;
  atan(1+i*2^509) is pi/2, as is atan(1+i*2^510)

QR?
• No different from atan
• Good enough to take atan+pi*(random integer?)
• Is it okay to take Q*diag(random(signs?))?
  – There is an argument to avoid gratuitous discontinuity! Just like atan
  – Many people take [Q,R]=qr(randn(n)) to get uniformly distributed orthogonal matrices! But this is broken because Q is only piecewise continuous.
  – Backward error analysis has a subtlety. We guarantee that [Q,R] is “a” QR decomposition of a nearby matrix, not “the” QR decomposition of a nearby matrix. Even without roundoff, there may be no algorithm that gives “THIS” QR for the rounded output.
• Is it okay to have any QR for rank deficiency?

Snap to grid?
• sin(float(pi) * n) = 0??? vs. float(sin(float(pi)*n))
• Determinant of a matrix of integers?
  – Should it be an integer?
  – It is in MATLAB! Cleve said so online. But is it right?
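
Both “Snap to grid?” questions can be probed in a few lines. The following Python sketch is an illustration only, not code from the talk.

`math.sin` passes through to the platform's C library, so `math.sin(math.pi)` returns the sine of the double nearest π. That value is about 1.22e-16 (roughly π minus float(π)), not 0.

For determinants, the sketch uses two hypothetical helpers:
• `exact_det` uses Bareiss fraction-free elimination over Python integers, which is our choice of exact method.
• `float_det` uses LU with partial pivoting in doubles.

They are compared on a random ±1 matrix generated with an arbitrary seed.

One check needs no software at all. Subtract the first row of an n×n ±1 matrix from every other row. Those n−1 rows then contain only 0 or ±2 entries, so the determinant is divisible by 2^(n−1). For n = 27 it must be a multiple of 2^26. An odd result such as 839466457497601 therefore cannot be the exact determinant.

```python
import math
import random


def exact_det(a: list[list[int]]) -> int:
    """Exact integer determinant by Bareiss fraction-free elimination."""
    m = [row[:] for row in a]
    n = len(m)
    sign, prev = 1, 1
    for k in range(n - 1):
        if m[k][k] == 0:
            # Swap in a row with a nonzero pivot; if none exists, det = 0.
            for i in range(k + 1, n):
                if m[i][k] != 0:
                    m[k], m[i] = m[i], m[k]
                    sign = -sign
                    break
            else:
                return 0
        for i in range(k + 1, n):
            for j in range(k + 1, n):
                # Sylvester's identity guarantees this division is exact.
                m[i][j] = (m[i][j] * m[k][k] - m[i][k] * m[k][j]) // prev
        prev = m[k][k]
    return sign * m[n - 1][n - 1]


def float_det(a: list[list[int]]) -> float:
    """Determinant via LU with partial pivoting in IEEE double precision."""
    m = [[float(x) for x in row] for row in a]
    n = len(m)
    det = 1.0
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        if m[p][k] == 0.0:
            return 0.0
        if p != k:
            m[k], m[p] = m[p], m[k]
            det = -det
        det *= m[k][k]
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k + 1, n):
                m[i][j] -= f * m[k][j]
    return det


# sin of the double nearest pi is not 0; it is approximately pi - float(pi).
print(math.sin(math.pi))

# A random 27x27 matrix of +/-1 entries (fixed seed so the run is repeatable).
rng = random.Random(0)
a27 = [[rng.choice((-1, 1)) for _ in range(27)] for _ in range(27)]
exact = exact_det(a27)
approx = float_det(a27)
print(exact, exact % 2**26 == 0)      # exact value; always divisible by 2**26
print(approx, round(approx) - exact)  # rounding the float need not recover it
```

MATLAB returns an integer here. If it does so by rounding a floating-point result (an assumption about its implementation), the exact value is recovered only when the accumulated roundoff stays below 0.5. That is not guaranteed at magnitudes near 1e15.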

    >> a=sign(randn(27)),det(a)
    a =
       (27×27 matrix with random ±1 entries)
    ans =
       839466457497601

MPI Based Libraries
Typical sentence: … we enjoy using parallel computing libraries such as Scalapack
• What else?
  … you know, such as scalapack
• And …? Well, there is scalapack
• (petsc, superlu, mumps, trilinos, …)
• Very few users, still many bugs, immature
• Highly Optimized Libraries? Yes and No

Star-P

Some of the hardest parallel computing problems (not what you think)
• A: row distributed array (or worse)
• B: column distributed array (or worse)
• C=A+B
• Indexing: C=A(I,J)

LU Example

Scientific Software
• Proprietary:
  – MATLAB, Mathematica, Maple
• Open Source
  – Octave and Scilab
  – Python and R
• Also:
  – IDL, PVWave, Labview
• What should I use and what’s the difference anyway?
• Seamless: how to avoid the tendency to get stuck with one package and believe it’s the best
• Seamless: portability
• Higher Quality: standards, accuracy

Documentation I would like
• Tell me on page 1 how to type in a 2x2 matrix such as

      1     2
      1+i   nan

A Factor of 10 or so in MATLAB
Loop version:

    function [funct]=obj_func(v,T)
    % v = [sigalpha T0 beta f]; T is a column vector
    sigalpha=v(1); T0=v(2); beta=v(3); f=v(4);
    R=0.008314;
    aN=sigalpha*f/2;
    aP=sigalpha*(2-f)./2;
    H=[-1000:2:1000]';
    [Hr Hc]=size(H);
    G0=zeros(Hr,1);
    % G0 = -2*beta*x^2 + abs(beta)*x^4 with x = H/aN (H<0) or x = H/aP (H>=0)
    for i=1:Hr
        if H(i)<0
            G0(i)=-2*beta*(H(i)./aN).^2+abs(beta)*(H(i)./aN).^4;
        else
            G0(i)=-2*beta*(H(i)./aP).^2+abs(beta)*(H(i)./aP).^4;
        end
    end
    % normalized Boltzmann weights at T0
    sth=exp(-G0./(R*T0));
    PT0=sth./(trapz(sth*(H(2)-H(1))));
    [Tr Tc]=size(T);
    % reweight to each T(i) and form (<H^2> - <H>^2)/(R*T(i)^2)
    for i=1:Tr
        sth2=PT0.*exp(-(1/R)*((1/T(i))-(1/T0)).*H);
        PT(:,i)=sth2./(trapz(sth2*(H(2)-H(1))));
        Hi(i)=(1./(trapz(sth2*(H(2)-H(1)))))*trapz(H.*sth2*(H(2)-H(1)));
        H2(i)=(1./(trapz(sth2*(H(2)-H(1)))))*trapz((H.^2).*sth2*(H(2)-H(1)));
        Cpex(i)=(H2(i)-Hi(i).^2)./(R.*(T(i)^2));
    end
    funct=Cpex';

Vectorized version:

    function [funct]=obj_func(v,T)
    beta=v(3); f=v(4); R=0.008314;
    H=[-1000:2:1000]';
    % HH = H/aN for H<0 and H/aP for H>=0, with no loop
    HH=2*[[-1000:2:-2]'/f; [0:2:1000]'/(2-f)]/v(1);
    G0=-2*beta*HH.^2 + abs(beta)*HH.^4;
    sth=exp(-G0/(R*v(2)));
    PT0=sth/sum(sth);          % sum replaces trapz
    % row i of EE reweights from T0=v(2) to T(i); matvecs replace the T loop
    EE=exp((1/v(2)-1./T)*H'/R);
    tt=EE*PT0;
    Hi=(EE*(H.*PT0))./tt; H2=(EE*(H.^2.*PT0))./tt;
    funct=(H2-Hi.^2)./(R*T.^2);

• Life is too short to write “for loops”
• trapz is just a “sum” if the integrand is 0 at the boundaries
• H(2)-H(1) is never needed (it cancels out)
• Sums are sometimes dot products
• Dot products are sometimes matvecs

Higher Quality

New Standards for Quality of Computation
• Associative Law: (a+b)+c=a+(b+c)
• Not true in roundoff
• Mostly didn’t matter in serial
• Parallel computation reorganizes computation
• Lawyers get very upset!

Accuracy: Sine Function Extreme Argument
sin(2^64)=0.0235985099044395586343659…
One IEEE double ulp higher: sin(2^64+4096)=0.6134493700154282634382759…, over 651 wavelengths higher. 2^64 is a good test case.

    Package              Command                        Result
    Maple 10             evalhf(sin(2^64));             0.0235985099044395581
    Mathematica 6.0      Print[N[Sin[2^64]]];           0.312821*
    Mathematica 6.0      Print[N[Sin[2^64],20]];        0.023598509904439558634
    MATLAB               sin(2^64)                      0.023598509904440
    MATLAB               sin(single(2^64))              0.0235985
    Octave 2.1.72        sin(2^64)                      0.312821314503348*
    Python 2.5 (NumPy)   sin(2**64)                     0.24726064630941769**
    R 2.4.0              format(sin(2^64),digits=17)    0.24726064630941769**
    Scilab               format(25);sin(2^64)           0.0235985099044395581214

Note: in Maple, evalf(sin(2^64)) does not accurately use 2^64 but rather computes evalf(sin(evalf(2^64,10))).
*Mathematica and Python have been observed to give the correct answer on certain 64-bit Linux machines.
**The Python and R numbers above were taken with Windows XP. The .3128 number was seen with Python on a 32-bit Linux machine. The correct answer was seen with R on a sun4 machine.
It’s very likely that MMA’s default N, Octave, Python, and R pass through to various libraries, such as LIBM and MSVCRT.DLL.

Notes:
• Maple and Mathematica use both hardware floating point and custom vpa (variable precision arithmetic)
• MATLAB discloses its use of FDLIBM
• MATLAB and extra-precision Maple and Mathematica can claim small forward error
• All others can claim small backward error
• Excel doesn’t compute 2^64 accurately, and its sin returns an error
• Google gives 0.312821315
• Ruby on http://tryruby.hobix.com/ gives -0.35464… for Math.sin(2**64). On a sun4, Ruby gave the correct answer.
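
The spread of answers in the table comes from argument reduction. 2^64 has to be reduced modulo 2π using far more digits of π than a double carries. The following Python sketch shows one way to do that; it is not how any of the listed packages work internally.

It reduces the integer argument with the standard `decimal` module, computing π with the recipe from the `decimal` documentation. It then calls `math.sin` only on the small reduced argument. The helper names `decimal_pi`, `reduce_mod_2pi` and `sin_big` are hypothetical. The working precision of 60 digits is an assumption; it is ample for integers near 2^64, whose quotient by 2π has only 19 digits. The last lines also evaluate the relative condition number abs(x·cos(x)/sin(x)) at x = 2^64.

```python
import math
from decimal import Decimal, localcontext


def decimal_pi(digits: int) -> Decimal:
    """pi to `digits` significant digits (recipe from the decimal module docs)."""
    with localcontext() as ctx:
        ctx.prec = digits + 2  # guard digits for the series
        three = Decimal(3)
        lasts, t, s, n, na, d, da = 0, three, 3, 1, 0, 0, 24
        while s != lasts:
            lasts = s
            n, na = n + na, na + 8
            d, da = d + da, da + 32
            t = (t * n) / d
            s += t
        ctx.prec = digits
        return +s  # unary plus rounds to the final precision


def reduce_mod_2pi(x: int, digits: int = 60) -> float:
    """Return x mod 2*pi as a double, reducing with `digits` digits of pi."""
    with localcontext() as ctx:
        ctx.prec = digits
        two_pi = 2 * decimal_pi(digits)
        # Decimal(x) is exact for an int, and % is computed exactly;
        # for x >= 0 the remainder lies in [0, 2*pi).
        return float(Decimal(x) % two_pi)


def sin_big(x: int) -> float:
    """sin of a large integer argument via high-precision reduction."""
    return math.sin(reduce_mod_2pi(x))


print(sin_big(2**64))         # about 0.0235985099044395586
print(sin_big(2**64 + 4096))  # one double ulp higher: about 0.61344937001542826
print(math.sin(2.0**64))      # whatever this platform's C library returns

# Relative condition number abs(x*cos(x)/sin(x)) at x = 2**64.
r = reduce_mod_2pi(2**64)
cond = 2**64 * abs(math.cos(r) / math.sin(r))
print(cond)  # roughly 8e20
```

The `math.sin(2.0**64)` line reports the local library's answer. On a platform with careful argument reduction it should agree with `sin_big`; elsewhere it may reproduce one of the wrong values in the table.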
Relative Backward Error <= (2pi/2^64) ≈ 3e-19
Relative Condition Number = abs(cos(2^64)dx/sin(2^64))/(dx/2^64) ≈ 8e20
One can see accuracy drainage going back to around 2^21 or so, by powers of 2.

Coverage: Sine Function Complex Support
• All packages seem good:

    Maple:        evalf(sin(I))
    Mathematica:  N[Sin[I]]
    MATLAB:       sin(i)
    Octave:       sin(i) (same as MATLAB)
    Numpy:        sin(1j)
    R:            sin(1i)
    Ruby:         require 'complex'; Complex::I; Math::sin(Complex::I)
    Scilab:       sin(%i)
    EXCEL:        =IMSIN(COMPLEX(0,1))

• Note the special command in Excel

Coverage: Erf Function Complex Support
python/scipy is the only numerical package that handles it

    Maple:         evalf(erf(I));   1.650425759 I
    Mathematica:   N[Erf[I]]        0. + 1.65043 I
    MATLAB:        erf(i)           ??? Input must be real.
    Octave:        erf(i)           error: erf: unable to handle complex argument
    Python/Scipy:  erf(1j)          1.6504257588j
    R:             pnorm(1i)        Error in pnorm … Non-numeric argument
    Scilab:        erf(%i)          !--error 52 argument must be a real …

Acceptable or not?
• tan(z+kπ)=tan(z)
• atan officially discontinuous on branch cut
• atan discontinuous off branch cut?
• MATLAB does it
• MATLAB highly inaccurate in some zones
• Python, octave, R worse in some ways
• Scilab very good
• QR does it? (unacceptable in my opinion)

Clones
• Just because Octave is a MATLAB “wannabe” (want to be) doesn’t mean they are the same
• Better in some ways, worse in other ways

Type Casting
• Mathematica and Maple, typecast as “the symbolic languages,” have excellent numerics
• MATLAB, “the matrix laboratory,” uses the same LAPACK and math libraries as everyone else
• MATLAB has 1500 functions, some terrific, some trivial

Optimization
• Would love to see the “Consumer Reports Style” Article