Solving the Maxwell Eigenproblem

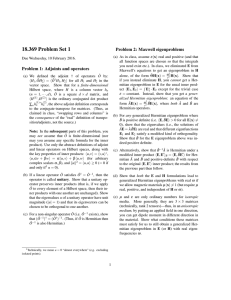

Finite cell → discrete eigenvalues $\omega_n$

Want to solve for $\omega_n(\mathbf{k})$, and plot vs. "all" $\mathbf{k}$ for "all" $n$:

$$(\nabla + i\mathbf{k}) \times \frac{1}{\varepsilon} (\nabla + i\mathbf{k}) \times \mathbf{H}_n = \frac{\omega_n^2}{c^2} \mathbf{H}_n$$

constraint: $(\nabla + i\mathbf{k}) \cdot \mathbf{H} = 0$

where the field is $\mathbf{H}(x,y)\, e^{i(\mathbf{k}\cdot\mathbf{x} - \omega t)}$

[figure: band diagram, with the TM bands and the photonic band gap marked]

1. Limit range of k: irreducible Brillouin zone
2. Limit degrees of freedom: expand H in finite basis
3. Efficiently solve eigenproblem: iterative methods

Solving the Maxwell Eigenproblem: 2a

Limit degrees of freedom: expand H in finite basis (N)

$$|\mathbf{H}\rangle = \mathbf{H}(\mathbf{x}_t) = \sum_{m=1}^{N} h_m \mathbf{b}_m(\mathbf{x}_t)$$

solve: $\hat{A}\,|\mathbf{H}\rangle = \omega^2 |\mathbf{H}\rangle$

finite matrix problem: $A h = \omega^2 B h$

$$\langle \mathbf{f} | \mathbf{g} \rangle = \int \mathbf{f}^* \cdot \mathbf{g}, \qquad A_{ml} = \langle \mathbf{b}_m | \hat{A} | \mathbf{b}_l \rangle, \qquad B_{ml} = \langle \mathbf{b}_m | \mathbf{b}_l \rangle$$

Solving the Maxwell Eigenproblem: 2b

The basis must satisfy the constraint: $(\nabla + i\mathbf{k}) \cdot \mathbf{H} = 0$

Planewave (FFT) basis
- $\mathbf{H}(\mathbf{x}_t) = \sum_{\mathbf{G}} \mathbf{H}_{\mathbf{G}}\, e^{i\mathbf{G}\cdot\mathbf{x}_t}$
- constraint: $\mathbf{H}_{\mathbf{G}} \cdot (\mathbf{G} + \mathbf{k}) = 0$
- uniform "grid," periodic boundaries, simple code, $O(N \log N)$

Finite-element basis
- constraint, boundary conditions: Nédélec elements [ Nédélec, Numerische Math. 35, 315 (1980) ]
- nonuniform mesh, more arbitrary boundaries, complex code & mesh, $O(N)$

[ figure: Peyrilloux et al., J. Lightwave Tech. 21, 536 (2003) ]

Solving the Maxwell Eigenproblem: 3a

Efficiently solve eigenproblem: iterative methods

$$A h = \omega^2 B h$$

Slow way: compute A & B, ask LAPACK for eigenvalues
- requires $O(N^2)$ storage, $O(N^3)$ time

Faster way:
- start with initial guess eigenvector $h_0$
- iteratively improve
- $O(Np)$ storage, ~ $O(Np^2)$ time for p eigenvectors (p smallest eigenvalues)

Many iterative methods:
- Arnoldi, Lanczos, Davidson, Jacobi-Davidson, …, Rayleigh-quotient minimization

For Hermitian matrices, the smallest eigenvalue $\omega_0$ minimizes ("variational theorem"):

$$\omega_0^2 = \min_h \frac{h' A h}{h' B h}$$

minimize by preconditioned conjugate-gradient (or…)

Solving the Maxwell Eigenproblem: 3c

Efficiently solve eigenproblem: iterative methods (minimizing function of $10^4$–$10^8$+ variables!)

$$\omega_0^2 = \min_h \frac{h' A h}{h' B h} = f(h)$$

- Steepest-descent: minimize $f(h + \alpha \nabla f)$ over $\alpha$ … repeat
- Conjugate-gradient: minimize $f(h + \alpha d)$, where d is $\nabla f$ + (stuff): conjugate to previous search dirs
- Preconditioned steepest descent: minimize $f(h + \alpha d)$, where d = (approximate $A^{-1}$) $\nabla f$ ~ Newton's method
- Preconditioned conjugate-gradient: minimize $f(h + \alpha d)$, where d is (approximate $A^{-1}$) [$\nabla f$ + (stuff)]

The Iteration Scheme is Important (minimizing function of ~40,000 variables)

[figure: % error vs. # iterations (log-log) for three schemes: no preconditioning; no conjugate-gradient; preconditioned conjugate-gradient]

The Boundary Conditions are Tricky

- $E_\parallel$ is continuous
- $E_\perp$ is discontinuous ($D_\perp = \varepsilon E_\perp$ is continuous)

Any single scalar ε fails: (mean D) ≠ (any ε) (mean E)

Use a tensor ε:
- $E_\parallel$ components: $\langle \varepsilon \rangle$
- $E_\perp$ component: $\langle \varepsilon^{-1} \rangle^{-1}$

The ε-averaging is Important

[figure: % error vs. resolution (pixels/period) (log-log) for three schemes: no averaging; backwards averaging; tensor averaging]

Correct averaging changes the order of convergence from $\Delta x$ to $\Delta x^2$ (similar effects in other E&M numerics & analyses).
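The statement that no single scalar ε works at an interface can be checked with a few lines of arithmetic. The sketch below is only an illustration under stated assumptions: one pixel split by a flat interface into two materials, with hypothetical values ε = 12 and ε = 1 and a fill fraction of 0.5 (none of these numbers come from the slides), and a made-up helper name `effective_epsilon`. It builds the piecewise fields directly from the continuity conditions and compares (mean D)/(mean E) for the two polarizations.

```python
def mean(values, weights):
    """Weighted average over the sub-regions of one pixel."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

def effective_epsilon(eps, fractions):
    """Return (eps_parallel, eps_perp) for a pixel made of flat layers.

    eps_parallel = <eps>        (E parallel to the interface is continuous)
    eps_perp     = 1 / <1/eps>  (D perpendicular to the interface is continuous)
    """
    eps_par = mean(eps, fractions)
    eps_perp = 1.0 / mean([1.0 / e for e in eps], fractions)
    return eps_par, eps_perp

# Hypothetical pixel: half eps = 12, half eps = 1 (illustrative values only).
eps = [12.0, 1.0]
fractions = [0.5, 0.5]
eps_par, eps_perp = effective_epsilon(eps, fractions)

# Parallel polarization: E is the same in both layers, D = eps * E jumps.
E_par = [1.0, 1.0]
D_par = [e * E for e, E in zip(eps, E_par)]
ratio_par = mean(D_par, fractions) / mean(E_par, fractions)

# Perpendicular polarization: D is the same in both layers, E = D / eps jumps.
D_perp = [1.0, 1.0]
E_perp = [D / e for D, e in zip(D_perp, eps)]
ratio_perp = mean(D_perp, fractions) / mean(E_perp, fractions)

print("parallel:      (mean D)/(mean E) =", ratio_par, " tensor entry =", eps_par)
print("perpendicular: (mean D)/(mean E) =", ratio_perp, " tensor entry =", eps_perp)
```

The two ratios differ, so a scalar that is right for one polarization is wrong for the other; the arithmetic mean and the inverse of the mean inverse are the two entries the tensor needs.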
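The Rayleigh-quotient minimization by preconditioned conjugate-gradient named in the iterative-methods slides can be sketched on a toy problem. Everything specific here is an assumption made for illustration, not the slides' implementation: a small tridiagonal matrix stands in for A, B is taken as the identity (as for an orthonormal basis), the preconditioner is just the inverse diagonal of A, the "(stuff)" term uses the Polak-Ribière formula, and all function names are hypothetical. A is only ever applied to vectors, never stored as a dense matrix.

```python
import math

N = 30  # hypothetical problem size, tiny so that pure Python stays fast

def apply_A(h):
    """Matrix-free A*h for a toy symmetric A: diagonal 2+i, off-diagonals -1."""
    out = []
    for i in range(N):
        v = (2.0 + i) * h[i]
        if i > 0:
            v -= h[i - 1]
        if i < N - 1:
            v -= h[i + 1]
        out.append(v)
    return out

def apply_K(r):
    """Assumed preconditioner: inverse of A's diagonal, a crude 'approximate A^-1'."""
    return [r[i] / (2.0 + i) for i in range(N)]

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def normalized(x):
    n = math.sqrt(dot(x, x))
    return [a / n for a in x]

def smallest_eigenpair(precondition=True, tol=1e-8, max_iter=2000):
    """Minimize f(h) = h'Ah / h'h (B = identity here) by nonlinear CG."""
    h = normalized([1.0] * N)            # initial guess h0
    d_prev = Kr_prev = None
    rKr_prev = 1.0
    for it in range(1, max_iter + 1):
        Ah = apply_A(h)
        lam = dot(h, Ah)                 # Rayleigh quotient, since h'h = 1
        r = [x - lam * y for x, y in zip(Ah, h)]   # residual, proportional to grad f
        if math.sqrt(dot(r, r)) < tol:
            break
        Kr = apply_K(r) if precondition else r
        rKr = dot(r, Kr)
        d = [-x for x in Kr]             # (preconditioned) steepest-descent direction
        if d_prev is not None:
            # Polak-Ribiere "(stuff)" term, reset to 0 when it would be negative
            beta = max(0.0, (rKr - dot(r, Kr_prev)) / rKr_prev)
            trial = [x + beta * y for x, y in zip(d, d_prev)]
            if dot(r, trial) < 0.0:      # keep it only if it is still a descent direction
                d = trial
        hd = dot(h, d)
        d = [x - hd * y for x, y in zip(d, h)]     # make d orthogonal to h
        # Exact line minimization of
        # f(h + alpha*d) = (lam + 2*alpha*b + alpha^2*c) / (1 + alpha^2*s)
        b, c, s = dot(r, d), dot(d, apply_A(d)), dot(d, d)
        bq = c - lam * s
        alpha = -2.0 * b / (bq + math.sqrt(bq * bq + 4.0 * b * b * s))
        h = normalized([x + alpha * y for x, y in zip(h, d)])
        d_prev, Kr_prev, rKr_prev = d, Kr, rKr
    return lam, h, it

lam_pc, h_pc, n_pc = smallest_eigenpair(precondition=True)
lam_plain, h_plain, n_plain = smallest_eigenpair(precondition=False)
print("preconditioned CG:  ", lam_pc, "after", n_pc, "iterations")
print("unpreconditioned CG:", lam_plain, "after", n_plain, "iterations")
```

Storage is a handful of length-N vectors, in line with the O(Np) figure for p = 1. Passing `precondition=False` gives plain conjugate-gradient, so the two printed iteration counts offer a small-scale analogue of the comparison in the convergence plot; how large the gap is depends heavily on the matrix and on how good the approximate inverse is.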