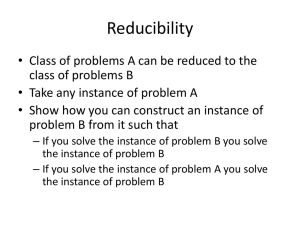

Reducibility and Completeness
