Document 10469317

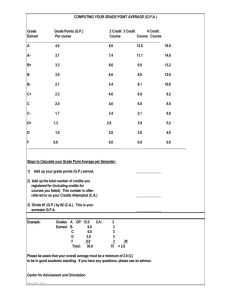
