3D Pose from 3 Corresponding Points under Weak-Perspective Projection

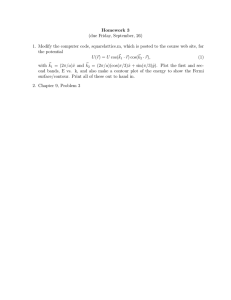
