Speaker Turns from Between-Channel Differences

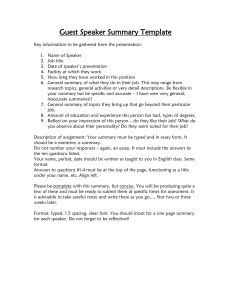

Dan Ellis & Jerry Liu

LabROSA (Columbia) & ICSI

• Between-channel features

• Timing-difference-based system

• Evaluation and results

NIST Meetings - Dan Ellis

Columbia University

2004-05-17

Meeting Turn Information

• Multiple mic recordings carry information on speaker turns

every voice reaches every mic... (?)

... but with differing coupling filters (delays, gains)

• Find turns with minimal assumptions

e.g. ad-hoc sensor setups (multiple PDAs)

differences to remove effect of source signal

- no spectral models, < 1xRT
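The reason between-channel differences remove the effect of the source can be written out as a short derivation. The symbols here are assumptions for illustration: $s$ is one talker's signal, $h_i$ the coupling filter from that talker to mic $i$, and $m_i$ the signal recorded at mic $i$.

```latex
\begin{align}
  m_i[n] &= (h_i * s)[n]
  \quad\Longrightarrow\quad
  M_i(\omega) = H_i(\omega)\, S(\omega) \\
  \frac{M_i(\omega)}{M_j(\omega)} &= \frac{H_i(\omega)}{H_j(\omega)}
  \qquad \text{(independent of } S\text{)} \\
  \intertext{For a pure gain-and-delay coupling $h_i[n] = g_i\,\delta[n - d_i]$:}
  \text{level difference} &= 20 \log_{10}\frac{g_i}{g_j}\ \text{dB},
  \qquad
  \text{timing difference} = d_i - d_j\ \text{samples}
\end{align}
```

Under this idealization, both cues depend only on where the talker sits relative to the mics, which is why no spectral model of the speech is needed.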

Between-channel cues: Timing (ITD) & Level

[Figure: five panels against time/s (125 to 175 s): speaker activity (ground truth); timing differences (ITD) as xcorr peak lags in skew/samples (2 mic pairs, 250 ms window, 5-pt median filter); peak normalized cross-correlation coefficient r; per-channel energy in dB; between-channel energy differences in dB.]

Level Cues

• Level at each mic relative to average of all

• Project nmic x nfreq arrays onto PCA

• Compare to ground truth ... poor separation

[Figure: mean and standard deviation of the per-speaker coupling matrices; per-speaker projections onto PCA dimensions 1 and 2, one panel each for Speaker 1 to Speaker 6 frames.]
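The first Level Cues bullet (level at each mic relative to the average of all) can be sketched as follows. This is a simplified broadband version under stated assumptions: the slides work with nmic x nfreq arrays and a PCA projection, neither of which is shown here, and the function name `level_cues_db` and the toy gains are hypothetical.

```python
import math

def level_cues_db(frames):
    """frames: one list of samples per mic, all from the same time window.
    Returns each mic's energy in dB minus the average dB level over all mics."""
    eps = 1e-12  # avoids log(0) on a silent channel
    levels = [10.0 * math.log10(sum(x * x for x in ch) / len(ch) + eps)
              for ch in frames]
    mean_level = sum(levels) / len(levels)
    return [lv - mean_level for lv in levels]

# Toy example: one source reaches three mics with gains 1.0, 0.5 and 0.25.
source = [math.sin(0.3 * n) for n in range(400)]
mics = [[g * s for s in source] for g in (1.0, 0.5, 0.25)]
cues = level_cues_db(mics)
```

Because every mic sees the same source, the relative levels reflect only the gains: halving the gain lowers the cue by about 6 dB regardless of how loud the talker is.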

Timing Cues (ITD)

• Cross-correlation between two 2-mic pairs

• Compare to ground-truth...

[Figure: ITD scatter plots for meeting ICSI0, Skew34 / ms vs. Skew12 / ms: all frames, plus separate panels for Speaker 1 to Speaker 7 frames.]

• Promising, but still ambiguous (overlaps)
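A minimal sketch of the timing cue, assuming pure delays and a noise-like source: each mic pair yields one cross-correlation peak lag, and the two pairs together give a 2-D ITD point (Skew12, Skew34). The helper names, the delays, and the ±20-sample search range are illustrative choices, not values from the slides.

```python
import random

def xcorr_peak_lag(a, b, max_lag):
    """Lag (in samples) that maximizes sum_n a[n] * b[n + lag]."""
    best_lag, best_val = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        val = 0.0
        for n in range(len(a)):
            k = n + lag
            if 0 <= k < len(b):
                val += a[n] * b[k]
        if val > best_val:
            best_lag, best_val = lag, val
    return best_lag

def delayed(sig, d):
    """Delay sig by d samples, zero-padding at the start."""
    return [0.0] * d + sig[:len(sig) - d]

rng = random.Random(0)
source = [rng.uniform(-1.0, 1.0) for _ in range(400)]

# Hypothetical arrival delays (in samples) of one talker at four mics.
mic1, mic2 = delayed(source, 0), delayed(source, 7)
mic3, mic4 = delayed(source, 5), delayed(source, 2)

# One ITD coordinate per mic pair.
itd_point = (xcorr_peak_lag(mic1, mic2, 20), xcorr_peak_lag(mic3, mic4, 20))
```

With a single talker, the point recovers the delay differences exactly; overlapping talkers produce competing peaks, which is one source of the ambiguity noted above.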


Pre-whitening for ITD

• Inverse-filter by 12-pole LPC models (32 ms windows) to remove local resonances

• Filter out noise < 500 Hz, > 6 kHz

• Then cross-correlate...

[Figure: short-time xcorr (lag / samps vs. time / sec, 1220 to 1240 s) for raw signals and for whitened+filtered signals, each shown above the speaker ground truth (spkr ID vs. time / sec).]
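The LPC inverse-filtering step can be sketched with a plain Levinson-Durbin recursion. This is an illustration under assumptions, not the authors' code: the 512-sample window corresponds to 32 ms only if the sample rate is 16 kHz, the resonant toy signal is synthetic, and the 500 Hz to 6 kHz band-pass is left out.

```python
import random

def autocorr(x, max_lag):
    """Autocorrelation r[0..max_lag] of one analysis window."""
    return [sum(x[n] * x[n + k] for n in range(len(x) - k))
            for k in range(max_lag + 1)]

def lpc(x, order=12):
    """Levinson-Durbin recursion. Returns a[0..order] with a[0] = 1, so the
    prediction error is e[n] = sum_k a[k] * x[n - k]."""
    r = autocorr(x, order)
    a = [1.0] + [0.0] * order
    if r[0] == 0.0:          # silent window: nothing to whiten
        return a
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] + sum(a[j] * r[i - j] for j in range(1, i))
        k = -acc / err       # reflection coefficient
        new_a = a[:]
        for j in range(1, i):
            new_a[j] = a[j] + k * a[i - j]
        new_a[i] = k
        a = new_a
        err *= 1.0 - k * k
    return a

def whiten(x, order=12):
    """Inverse-filter the window with its own LPC model."""
    a = lpc(x, order)
    return [sum(a[k] * x[n - k] for k in range(min(n, order) + 1))
            for n in range(len(x))]

def energy(v):
    return sum(s * s for s in v)

# Toy window: noise driven through a strong two-pole resonance.
rng = random.Random(0)
x = []
for n in range(512):
    prev1 = x[-1] if n >= 1 else 0.0
    prev2 = x[-2] if n >= 2 else 0.0
    x.append(1.6 * prev1 - 0.9 * prev2 + rng.uniform(-1.0, 1.0))

residual = whiten(x)
ratio = energy(residual) / energy(x)  # well below 1 once the resonance is removed
```

Removing the resonance flattens the spectrum, so the subsequent cross-correlation has a sharper peak instead of a broad ridge dominated by the resonant frequency.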

Dynamic Programming for peak continuity

• How can we exclude ITD outliers?

• Consider top N cross-corr peaks, optimize combined peak height + step cost

[Figure: short-time xcorr, lag / samples vs. time / sec; blue = all peaks; red = top peak; green = dp choice.]

• Helps and hurts...
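One way to read "optimize combined peak height + step cost" is a Viterbi-style search over the top N peaks in each frame. The sketch below assumes a linear penalty on lag jumps with a hypothetical weight `step_weight`; the slides do not give the actual cost function.

```python
def dp_peak_track(candidates, step_weight=0.05):
    """candidates[t] is a list of (lag, height) pairs for frame t.
    Picks one lag per frame, maximizing the sum of peak heights minus
    step_weight * |lag change| between consecutive frames."""
    score = [h for _, h in candidates[0]]
    back = []
    for t in range(1, len(candidates)):
        prev = candidates[t - 1]
        new_score, ptr = [], []
        for lag, h in candidates[t]:
            # Best predecessor for this candidate, including the jump penalty.
            trans = [score[j] - step_weight * abs(lag - prev[j][0])
                     for j in range(len(prev))]
            best_j = max(range(len(prev)), key=lambda j: trans[j])
            new_score.append(trans[best_j] + h)
            ptr.append(best_j)
        score = new_score
        back.append(ptr)
    # Trace back from the best final candidate.
    j = max(range(len(score)), key=lambda i: score[i])
    path = [j]
    for ptr in reversed(back):
        j = ptr[j]
        path.append(j)
    path.reverse()
    return [candidates[t][j][0] for t, j in enumerate(path)]

# Toy example: in frame 2 the highest peak (-70) is an outlier.
frames = [
    [(10, 0.9), (-40, 0.3)],
    [(11, 0.8), (60, 0.4)],
    [(-70, 0.85), (10, 0.8)],
    [(9, 0.9), (-30, 0.2)],
]
top_peak = [max(c, key=lambda p: p[1])[0] for c in frames]
tracked = dp_peak_track(frames)
```

Here `top_peak` follows the outlier while `tracked` stays on the continuous path. The same continuity pressure can also hold the track on the old lag after a genuine speaker change, consistent with the "helps and hurts" remark.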

System overview

[Block diagram: each of Chan 1 to Chan 4 passes through pre-emphasis (HPF), LPC whitening, and a 500-6000 Hz BPF. Cross-correlation of each channel pair (1-2 and 3-4) gives peak lags and normalized peak values. These feed "good" frame selection, then spectral clustering (clusters), then classification (labels).]

• Many noisy points remain in ITD...

• Models based on “good” (high-r) frames

• Spectral clustering to identify speakers

• Then classify all frames by (single) best match


Choosing “Good” Frames

• Correlation coef. r ~ channel similarity:

$$r_{ij}[\ell] = \frac{\sum_n m_i[n] \cdot m_j[n + \ell]}{\sqrt{\sum m_i^2 \, \sum m_j^2}}$$

• Select frames with r in top 50% in both pairs

about 35% of points

[Figure: ITD scatter plots, Skew34 / samples vs. Skew12 / samples: all points, and high-correlation points (435/1201).]

• Cleaner basis for models
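The normalized correlation coefficient and the "top 50% in both pairs" rule can be sketched as below. The function names and the toy r values are hypothetical, and the threshold is taken as the value at the halfway rank, which is one reasonable reading of "top 50%".

```python
import math

def norm_xcorr(mi, mj, lag):
    """r_ij[lag] = sum_n mi[n] * mj[n + lag] / sqrt(sum mi^2 * sum mj^2)."""
    num = sum(mi[n] * mj[n + lag]
              for n in range(len(mi)) if 0 <= n + lag < len(mj))
    den = math.sqrt(sum(x * x for x in mi) * sum(x * x for x in mj))
    return num / den if den > 0.0 else 0.0

def top_half_threshold(values):
    """Smallest value that still ranks in the top half."""
    ranked = sorted(values, reverse=True)
    return ranked[max(len(ranked) // 2 - 1, 0)]

def select_good_frames(r12, r34):
    """Indices of frames whose peak r is in the top 50% for BOTH mic pairs."""
    t12, t34 = top_half_threshold(r12), top_half_threshold(r34)
    return [t for t in range(len(r12)) if r12[t] >= t12 and r34[t] >= t34]

# Toy per-frame peak correlations for the two mic pairs.
r12 = [0.9, 0.2, 0.8, 0.4, 0.7, 0.1]
r34 = [0.8, 0.9, 0.3, 0.2, 0.6, 0.7]
good = select_good_frames(r12, r34)
```

Requiring both pairs to pass keeps fewer than half of the frames, since a frame can rank high in one pair and low in the other.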

Spectral clustering

• Eigenvectors of “affinity matrix” A to pick out similar points:

$$a_{mn} = \exp\{-\|x[m] - x[n]\|^2 / 2\sigma^2\}$$

[Figure: affinity matrix A (point index vs. point index); first 12 eigenvectors (normalized) vs. point index.]

• Ad-hoc mapping to clusters

Number of clusters K from eigenvalues ≈ points
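A small NumPy sketch of the affinity matrix and its leading eigenvectors follows. The affinity formula is the one on the slide; the rule for picking K (eigenvalues above half the largest) and the point-to-cluster mapping (largest-magnitude entry among the top K eigenvectors) are hypothetical stand-ins for the ad-hoc heuristics the slide mentions, and the toy points and `sigma=5.0` are made up.

```python
import numpy as np

def affinity_matrix(x, sigma):
    """a_mn = exp(-||x[m] - x[n]||^2 / (2 sigma^2)) for the rows of x."""
    d2 = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    return np.exp(-d2 / (2.0 * sigma ** 2))

# Toy 2-D ITD points (Skew12, Skew34) forming two tight groups.
x = np.array([[0.0, 0.0], [1.0, 0.5], [0.5, 1.0],
              [-60.0, -40.0], [-61.0, -39.0], [-59.5, -40.5]])

A = affinity_matrix(x, sigma=5.0)

# A is symmetric, so eigh applies; reorder to descending eigenvalues.
eigvals, eigvecs = np.linalg.eigh(A)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Hypothetical heuristic: count eigenvalues above half the largest one.
k = int(np.sum(eigvals > 0.5 * eigvals[0]))

# Hypothetical mapping: each point joins the eigenvector where it is largest.
labels = np.argmax(np.abs(eigvecs[:, :k]), axis=1)
```

For well-separated groups A is close to block diagonal, so each leading eigenvector is concentrated on one group. Changing `sigma` changes how many blocks appear.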


Speaker Models & Classification

• Actual clusters depend on σ and K heuristic

• Fit Gaussians to each cluster, assign that class to all frames within radius

or: consider each dimension independently, choose best

[Figure: three ITD scatter plots for ICSI0: good points; all points labeled by nearest class; all points labeled by closest dimension.]
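The two classification rules can be sketched with diagonal Gaussians, which is an assumption since the slides do not state the covariance form. The `radius` of 3 standard deviations, the 1-sample floor on the standard deviation, and the function names are hypothetical.

```python
import math

def fit_diag_gaussians(points, labels):
    """Per-cluster mean and standard deviation in each ITD dimension."""
    models = {}
    for lab in set(labels):
        pts = [p for p, l in zip(points, labels) if l == lab]
        dims = range(len(pts[0]))
        mean = [sum(p[d] for p in pts) / len(pts) for d in dims]
        # Floor the spread at 1 sample so tiny clusters stay usable.
        std = [max(math.sqrt(sum((p[d] - mean[d]) ** 2 for p in pts) / len(pts)), 1.0)
               for d in dims]
        models[lab] = (mean, std)
    return models

def classify_nearest(point, models, radius=3.0):
    """Nearest class by normalized distance; None if outside the radius."""
    best_lab, best_dist = None, float("inf")
    for lab, (mean, std) in models.items():
        dist = math.sqrt(sum(((x - m) / s) ** 2
                             for x, m, s in zip(point, mean, std)))
        if dist < best_dist:
            best_lab, best_dist = lab, dist
    return best_lab if best_dist <= radius else None

def classify_best_dimension(point, models):
    """Treat each dimension independently and keep the single best match."""
    best = min((abs(x - m) / s, lab)
               for lab, (mean, std) in models.items()
               for x, m, s in zip(point, mean, std))
    return best[1]

# "Good" frames and their cluster labels (toy values).
good_points = [(0, 0), (2, 1), (-2, -1), (-60, -40), (-62, -41), (-58, -39)]
good_labels = [0, 0, 0, 1, 1, 1]
models = fit_diag_gaussians(good_points, good_labels)

# A frame whose second ITD coordinate is an outlier.
noisy = (-60, 25)
```

`classify_nearest(noisy, models)` leaves the frame unlabeled, while `classify_best_dimension(noisy, models)` assigns it to cluster 1 on the strength of the first coordinate alone.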

Evaluation issues

• Correspondence of results to ground truth

greedy selection – choose by highest overlap

• If you guess just 1 speaker is always active...

upper bound on error (dep. dominant speaker)

• If you report only 1 speaker/time frame

lower bound on error (dep. overlap)

| Spkr Err% | Guess1 | Only1 |
|---|---|---|
| LDC1 | 52.7% | 11.7% |
| LDC2 | 60.1% | 24.9% |
| NIST1 | 37.3% | 16.7% |
| NIST2 | 77.9% | 18.9% |
| ICSI1 | 69.8% | 12.2% |
| ICSI2 | 62.5% | 16.7% |
| CMU1 | 54.0% | 20.0% |
| CMU2 | 37.4% | 10.0% |
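The greedy correspondence and the Guess1 baseline can be sketched on frame-level labels. This is a simplification under assumptions: it allows one speaker per frame and counts every mismatched frame equally, so it is not the official scoring used for the numbers reported here, and the toy label sequences are made up.

```python
from collections import Counter

def greedy_map(ref, hyp):
    """Map system cluster ids to reference speakers by repeatedly taking
    the unused (cluster, speaker) pair with the highest frame overlap."""
    overlap = Counter((h, r) for h, r in zip(hyp, ref)
                      if h is not None and r is not None)
    mapping, used = {}, set()
    for (h, r), _ in overlap.most_common():
        if h not in mapping and r not in used:
            mapping[h] = r
            used.add(r)
    return mapping

def frame_error(ref, hyp, mapping):
    """Fraction of frames whose mapped system label differs from the reference."""
    wrong = sum(1 for h, r in zip(hyp, ref) if mapping.get(h) != r)
    return wrong / len(ref)

def guess1_error(ref):
    """Error when the dominant reference speaker is reported for every frame."""
    dominant = Counter(ref).most_common(1)[0][0]
    return sum(1 for r in ref if r != dominant) / len(ref)

ref = ["A", "A", "A", "B", "B", "C", "A", "A"]
hyp = [1, 1, 1, 2, 2, 2, 1, 3]

mapping = greedy_map(ref, hyp)
err = frame_error(ref, hyp, mapping)
guess1 = guess1_error(ref)
```

In this toy case cluster 3 stays unmapped because speaker A is already taken, so its frame counts as an error. That is how a speaker split across two clusters hurts the score.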

Dev-set results

[Figure: bar chart of Spkr Err % (0 to 100) for the eight dev-set meetings (CMU, ICSI, NIST, LDC), showing ins, del, and err for the system alongside the Guess1 and Only1 reference levels.]

• ‘Best dimension’ + median filter

• Worse than Guess1 in 4 out of 8 cases!

many deletions of outlier points


Performance Analysis

• Compare reference & system activity maps:

system misses quiet speakers 2,3,4 (deletions)

system splits speaker 6 (deletions+insertions)

many short gaps (deletions)


Future work

• Use more channels

can add mic pairs → more ITD dimensions

select pairs with best average correlation r

• Classify on partial data

classify each dimension separately & vote? (8%...)

throw out dimensions with lower r

• Merge clusters based on source spectrum

look at distribution of MFCCs in each cluster;

reunites speakers who move...
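The "classify each dimension separately & vote" idea, combined with throwing out dimensions with lower r, might look like the following. Since this is listed as future work, everything here is speculative: the function name, the `min_r` cutoff, and the majority-vote rule are assumptions.

```python
from collections import Counter

def vote_over_dimensions(per_dim_labels, per_dim_r, min_r=0.0):
    """per_dim_labels[d] is the class chosen from ITD dimension d alone;
    per_dim_r[d] is that mic pair's peak correlation for the frame.
    Dimensions with r below min_r are ignored; the rest vote."""
    kept = [lab for lab, r in zip(per_dim_labels, per_dim_r) if r >= min_r]
    if not kept:
        return None  # no reliable dimension for this frame
    return Counter(kept).most_common(1)[0][0]

# Three mic pairs, two of which agree.
label = vote_over_dimensions([2, 2, 5], [0.8, 0.7, 0.9])
```

Adding mic pairs increases the number of voters, so a single unreliable ITD dimension is less likely to decide the label.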
