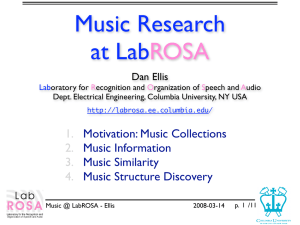

What can we Learn from Large Music Databases? Dan Ellis


Laboratory for Recognition and Organization of Speech and Audio
Dept. Electrical Engineering, Columbia University, NY USA
dpwe@ee.columbia.edu

1. Learning Music
2. Music Similarity
3. Melody, Drums, Event extraction
4. Conclusions

Learning from Music - Ellis 2004-12-18 p. 1 /24

Learning from Music
• A lot of music data available: e.g. 60 GB of MP3 ≈ 1000 hr of audio / 15k tracks
• What can we do with it? An implicit definition of ‘music’
• Quality vs. quantity: speech recognition lesson: 10x data, 1/10th annotation, twice as useful
• Motivating applications: music similarity / classification; computer (assisted) music generation; insight into music

Ground Truth Data
• A lot of unlabeled music data available; manual annotation is much rarer
[Figure: spectrogram (0 to 7000 Hz, 0:02 to 0:18) of aimee.wav with hand-annotated ‘vox’ and ‘mus’ segments]
• Unsupervised structure discovery possible, but labels help to indicate what you want
• Weak annotation sources: artist-level descriptions; symbol sequences without timing (MIDI); errorful transcripts
• Evaluation requires ground truth: the limiting factor in Music IR evaluations?

Talk Roadmap
[Figure: roadmap diagram leading from Music audio through Anchor models, Semantic bases, Melody extraction, Drums extraction and Event extraction to Similarity/recommendation, Fragment clustering, Eigenrhythms and Synthesis/generation (?)]

1. Music Similarity Browsing (with Adam Berenzweig)
• Musical information overload: record companies filter/categorize music; an automatic system would be less odious
• Connecting audio and preference: map to a ‘semantic space’?
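A minimal sketch of the anchor-space comparison, under stated assumptions: frames are mapped to posteriors p(a_k|x) by n anchor classifiers, each song is summarized by the distribution of its anchor vectors, and songs are compared by a divergence between those distributions. The slides use GMMs with KL divergence or EMD; to keep the example short, this sketch swaps in a single diagonal-covariance Gaussian per song, whose symmetrized KL divergence has a closed form. The anchor classifiers are hypothetical stand-ins (a softmax over fixed random linear projections), not trained genre models.

```python
import numpy as np

def to_anchor_space(frames, weights, bias):
    """Map (T, d) feature frames to (T, n) anchor posteriors p(a_k | x).

    Hypothetical stand-in for trained anchor classifiers: a softmax over
    linear scores, so each row is a distribution over the n anchors.
    """
    scores = frames @ weights + bias
    scores = scores - scores.max(axis=1, keepdims=True)   # numerical stability
    expd = np.exp(scores)
    return expd / expd.sum(axis=1, keepdims=True)

def fit_diag_gaussian(x, floor=1e-6):
    """One diagonal Gaussian per song (a simplification of the slides' GMM)."""
    return x.mean(axis=0), x.var(axis=0) + floor

def kl_diag(m1, v1, m2, v2):
    """KL(N(m1, v1) || N(m2, v2)) for diagonal Gaussians, in nats."""
    return 0.5 * np.sum(np.log(v2 / v1) + (v1 + (m1 - m2) ** 2) / v2 - 1.0)

def song_distance(frames_i, frames_j, weights, bias):
    """Symmetrized KL between the anchor-space models of two songs."""
    gi = fit_diag_gaussian(to_anchor_space(frames_i, weights, bias))
    gj = fit_diag_gaussian(to_anchor_space(frames_j, weights, bias))
    return kl_diag(*gi, *gj) + kl_diag(*gj, *gi)

rng = np.random.default_rng(0)
d, n = 20, 12                          # feature dims and number of anchors (assumed)
W = rng.normal(size=(d, n)) / np.sqrt(d)
b = rng.normal(size=n)
song_a = rng.normal(0.0, 1.0, size=(500, d))
song_b = rng.normal(0.0, 1.0, size=(500, d))   # same distribution as song_a
song_c = rng.normal(1.5, 1.0, size=(500, d))   # shifted distribution
print(song_distance(song_a, song_b, W, b), song_distance(song_a, song_c, W, b))
```

With trained anchors, `W` and `b` would be replaced by real classifiers; a GMM version would need a sampled or approximate KL, since the KL divergence between two Gaussian mixtures has no closed form.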
[Figure: anchor-space similarity pipeline. Audio input (class i) and audio input (class j) are each converted to anchor space, an n-dimensional vector (p(a1|x), p(a2|x), …, p(an|x)); each is modeled with a GMM, and the two models are compared in a similarity computation (KL-d, EMD, etc.)]

Anchor Space
• Frame-by-frame high-level categorizations: compare to raw features?
[Figure: Madonna vs. Bowie frames plotted in anchor-space features (Electronica vs. Country) and in cepstral features (third vs. fifth cepstral coefficient)]
• Properties in distributions? Dynamics?

‘Playola’ Similarity Browser

Semantic Bases (Brian Whitman)
• What should the ‘anchor’ dimensions be? Hand-chosen genres? ✗ Somehow choose automatically
• “Community metadata”: use the Web to get words/phrases that are informative about artists and that can be predicted from audio

a indicates the number of frames in which a term classifier positively agrees with the truth value (both classifier and truth say a frame is ‘funky,’ for example). b indicates the number of frames in which the term classifier indicates a negative term association but the truth value indicates a positive association (the classifier says a frame is not ‘funky,’ but truth says it is). The value c is the number of frames in which the term classifier predicts a positive association but the truth is negative, and the value of d is the number of frames in which the term classifier and truth agree on a negative association. We wish to maximize a and d as correct classifications; by contrast, random guessing by the classifier would give the same ratio of classifier labels regardless of ground truth, i.e. a/b ≈ c/d. With N = a + b + c + d, the …

• Refine classifiers to below artist level, e.g. by EM?
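The excerpt's own formula is cut off, so the following is only one plausible way to turn the a, b, c, d counts into "bits of information", labeled here as an assumption: the mutual information between classifier output and truth, which equals the KL divergence between the observed joint distribution and the product of its marginals. It is zero exactly when the classifier behaves like random guessing (a/b = c/d). Its values are not claimed to reproduce the K-L bits listed in Table 2.

```python
import math

def kl_bits(a, b, c, d):
    """Mutual information (bits) between a term classifier's frame labels and truth.

    a: classifier +, truth +    b: classifier -, truth +
    c: classifier +, truth -    d: classifier -, truth -
    Computed as KL(joint || product of marginals); 0 when a/b == c/d.
    (Assumed measure; not necessarily the exact one used in the source.)
    """
    n = a + b + c + d
    cls_pos, cls_neg = (a + c) / n, (b + d) / n    # classifier marginals
    tru_pos, tru_neg = (a + b) / n, (c + d) / n    # truth marginals
    cells = [
        (a, cls_pos * tru_pos),
        (b, cls_neg * tru_pos),
        (c, cls_pos * tru_neg),
        (d, cls_neg * tru_neg),
    ]
    # Empty cells contribute 0 (the limit of p * log p as p -> 0).
    return sum((k / n) * math.log2((k / n) / q) for k, q in cells if k > 0)

print(kl_bits(30, 70, 60, 140))   # a/b == c/d: random guessing, about 0 bits
print(kl_bits(50, 0, 0, 50))      # perfect agreement on balanced data: 1 bit
```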
| Adjective term | K-L bits | Noun-phrase term | K-L bits |
|---|---|---|---|
| aggressive | 0.0034 | reverb | 0.0064 |
| softer | 0.0030 | the noise | 0.0051 |
| synthetic | 0.0029 | new wave | 0.0039 |
| punk | 0.0024 | elvis costello | 0.0036 |
| sleepy | 0.0022 | the mud | 0.0032 |
| funky | 0.0020 | his guitar | 0.0029 |
| noisy | 0.0020 | guitar bass and drums | 0.0027 |
| angular | 0.0016 | instrumentals | 0.0021 |
| acoustic | 0.0015 | melancholy | 0.0020 |
| romantic | 0.0014 | three chords | 0.0019 |

Table 2. Selected top-performing models of adjective and noun phrase terms used to predict new reviews of music, with their corresponding bits of information from the K-L distance measure.

2. Transcription as Classification (with Graham Poliner)
• Signal models typically used for transcription: harmonic spectrum, superposition
• But ... trade domain knowledge for data: transcription as a pure classification problem; a single N-way discrimination for “melody”, per-note classifiers for polyphonic transcription
[Figure: Audio → trained classifier → p("C0"|Audio), p("C#0"|Audio), p("D0"|Audio), p("D#0"|Audio), p("E0"|Audio), p("F0"|Audio), …]

Classifier Transcription Results
• Trained on MIDI syntheses (32 songs): SMO SVM (Weka)
• Tested on ISMIR MIREX 2003 set: foreground/background separation

Frame-level pitch concordance:

| System | “jazz3” | overall |
|---|---|---|
| fg+bg | 71.5% | 44.3% |
| just fg | 56.1% | 45.4% |

Forced-Alignment of MIDI (with Rob Turetsky)
• MIDI is a handy description of music: notes, instruments, tracks, used to drive synthesis
• Align MIDI ‘replicas’ to get ground truth for audio: estimate the time-warp relation
[Figure: "Don't you want me" (Human League), verse 1: spectrograms (freq / kHz) of the original and the MIDI replica, and the aligned MIDI notes (MIDI #) against time / sec]

3. Melody Clustering (with Graham Poliner)
• Goal: find ‘fragments’ that recur in melodies .. across a large music database ..
.. trade data for model sophistication
[Figure: Training data → Melody extraction → 5-second fragments → VQ clustering → Top clusters]
• Data sources: pitch tracker, or MIDI training data
• Melody fragment representation: DCT(1:20), removes average, smooths detail

Melody clustering results
• Clusters match underlying contour
• Finds some similarities: e.g. Pink + Nsync

4. Eigenrhythms: Drum Pattern Space (with John Arroyo)
• Pop songs built on a repeating “drum loop”: variations on a few bass, snare, hi-hat patterns
• Eigen-analysis (or ...) to capture variations? By analyzing lots of (MIDI) data, or from audio
• Applications: music categorization; “beat box” synthesis; insight

Aligning the Data
• Need to align patterns prior to modeling: tempo (stretch) by inferring BPM & normalizing; downbeat (shift) by correlating against a ‘mean’ template

Eigenrhythms (PCA)
• Need 20+ eigenvectors for good coverage of 100 training patterns (1200 dims)
• Eigenrhythms both add and subtract

Posirhythms (NMF)
[Figure: Posirhythms 1 to 6, each showing hi-hat (HH), snare (SN) and bass drum (BD) weights across 4 beats (0 to 400 samples)]
• Nonnegative: only adds beat-weight
• Capturing some structure

Eigenrhythms for Classification
• Projections in eigenspace / LDA space
[Figure: PCA(1,2) projection (16% corr) and LDA(1,2) projection (33% corr) of drum patterns from 10 genres: blues, country, disco, hiphop, house, newwave, rock, pop, punk, rnb]
• 10-way genre classification (nearest neighbor): PCA3: 20% correct; LDA4: 36% correct
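The alignment and eigen-analysis steps can be sketched as below, under assumptions: each drum pattern is an (instruments × samples) array, with 3 instruments × 400 samples giving the slides' 1200 dimensions; tempo has already been normalized, so only the downbeat shift is estimated, by correlating circular shifts against the mean template; PCA is computed with an SVD; and genre is predicted by nearest neighbor in the projected space. The toy data and function names are hypothetical, and the LDA variant is not shown.

```python
import numpy as np

def align_downbeat(patterns, n_iter=3):
    """Circularly shift each (n_inst, n_samp) pattern to best match the mean.

    Assumes tempo is already normalized; only the downbeat offset is estimated.
    """
    aligned = patterns.copy()
    n_samp = patterns.shape[2]
    for _ in range(n_iter):
        template = aligned.mean(axis=0)          # current 'mean' template
        for i, p in enumerate(patterns):
            # Correlation of every circular shift of p with the template.
            scores = [np.sum(np.roll(p, s, axis=1) * template) for s in range(n_samp)]
            aligned[i] = np.roll(p, int(np.argmax(scores)), axis=1)
    return aligned

def eigenrhythms(patterns, k):
    """PCA by SVD of the flattened patterns: (mean, top-k basis, projections)."""
    X = patterns.reshape(len(patterns), -1)
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    basis = vt[:k]                               # each row is one eigenrhythm
    return mean, basis, (X - mean) @ basis.T

def nearest_neighbor(train_proj, train_labels, query_proj):
    """Label of the closest training pattern in the projected space."""
    dists = np.linalg.norm(train_proj - query_proj, axis=1)
    return train_labels[int(np.argmin(dists))]

rng = np.random.default_rng(0)

# Downbeat alignment: shifted copies of one toy pattern (3 drums x 48 steps).
base = rng.random((3, 48))
shifted = np.stack([np.roll(base, s, axis=1) for s in (0, 5, 17, 30)])
aligned = align_downbeat(shifted)
print(all(np.allclose(aligned[0], a) for a in aligned))

# Eigen-analysis + nearest neighbor on two hypothetical toy 'genres'.
genres = rng.random((2, 3, 48))
labels = np.array([0] * 10 + [1] * 10)
train = np.stack([genres[g] + 0.05 * rng.standard_normal((3, 48)) for g in labels])
mean, basis, proj = eigenrhythms(train, k=3)
query = genres[1] + 0.05 * rng.standard_normal((3, 48))
q = (query.ravel() - mean) @ basis.T
print(nearest_neighbor(proj, labels, q))
```

Iterating the alignment lets the template sharpen as the patterns line up; an FFT-based circular cross-correlation would be faster for full 400-sample patterns.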
Eigenrhythm BeatBox
• Resynthesize rhythms from eigen-space

5. Event Extraction
• Music often contains many repeated events: notes, drum sounds; but usually overlapped...
• Vector quantization finds common patterns:
[Figure: Training data → Event dictionary → Find alignments → Combine & re-estimate]
• Representation... aligning/matching... how much coverage required?

Drum Track Extraction (with Ron Weiss, after Yoshii et al. ’04)
• Initialize dictionary with bass drum, snare
• Match only on a few spectral peaks: narrowband energy most likely to avoid overlap
• Median filter to re-estimate template, after normalizing amplitudes: can pick up partials from common notes

Generalized Event Detection (with Michael Mandel)
• Based on ‘Shazam’ audio fingerprints (Wang ’03): relative timing of F1-F2-ΔT triples discriminates pieces; narrowband features to avoid collision (again)
[Figure: two spectrograms, frequency / Hz (0 to 5000) against time / sec (about 160 to 180)]
• Fingerprint events, not recordings: choose top triples, look for repeats; rank reduction of the triples × time matrix

Event detection results
• Procedure: find hash triples; cluster them; patterns in hash co-occurrence = events?
[Figure: event detection results]

Conclusions
[Figure: the roadmap diagram from the Talk Roadmap slide]
• Lots of data + noisy transcription + weak clustering ⇒ musical insights?
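A sketch of the F1-F2-ΔT hashing idea from the Generalized Event Detection slide, assuming spectral peaks have already been picked as (frame, frequency-bin) pairs: each peak is paired with a few later peaks inside a target window, and triples that recur at different times are kept as candidate events. The window sizes, fan-out and function names are illustrative assumptions, and the rank reduction of the triples × time matrix is not shown.

```python
from collections import defaultdict

def landmark_triples(peaks, max_dt=32, max_df=20, fan_out=3):
    """Pair spectral peaks into (f1, f2, dt) hashes, Shazam-style.

    peaks: iterable of (frame, freq_bin) pairs, e.g. from a local-max picker.
    Returns a list of ((f1, f2, dt), t1), where t1 is the anchor peak's time.
    """
    peaks = sorted(peaks)
    out = []
    for i, (t1, f1) in enumerate(peaks):
        paired = 0
        for t2, f2 in peaks[i + 1:]:
            dt = t2 - t1
            if dt > max_dt:
                break                        # peaks are time-sorted
            if dt > 0 and abs(f2 - f1) <= max_df:
                out.append(((f1, f2, dt), t1))
                paired += 1
                if paired == fan_out:
                    break
    return out

def recurring_triples(triples, min_count=2):
    """Triples seen at >= min_count distinct times: candidate repeated events."""
    times = defaultdict(set)
    for h, t in triples:
        times[h].add(t)
    return {h: sorted(ts) for h, ts in times.items() if len(ts) >= min_count}

# A toy 3-peak "event" at frame 0, repeated at frame 100, plus one stray peak.
peaks = [(0, 10), (4, 15), (9, 12), (50, 40), (100, 10), (104, 15), (109, 12)]
print(recurring_triples(landmark_triples(peaks)))
# {(10, 15, 4): [0, 100], (10, 12, 9): [0, 100], (15, 12, 5): [4, 104]}
```

Because only relative frequency and timing enter the hash, the same event is matched wherever it recurs in time.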
Approaches to Chord Transcription (with Alex Sheh)
• Note transcription, then note→chord rules: like labeling chords in MIDI transcripts
• Spectrum→chord rules: i.e. find harmonic peaks, use knowledge of likely notes in each chord
• Trained classifier: don’t use any “expert knowledge”; instead, learn patterns from labeled examples
• Train ASR HMMs with chords ≈ words

Chord Sequence Data Sources
• All we need are the chord sequences for our training examples: Hal Leonard “Paperback Song Series”, manually retyped for 20 songs (“Beatles for Sale”, “Help”, “Hard Day’s Night”); hand-align chords for 2 test examples
[Figure: spectrogram (freq / kHz, 0 to 5; time / sec, 0 to 25) of The Beatles - Hard Day's Night, with its retyped chord sequence]
The Beatles - A Hard Day's Night: G Cadd9 G F6 G Cadd9 G F6 G C D G C9 G G Cadd9 G F6 G Cadd9 G F6 G C D G C9 G Bm Em Bm G Em C D G Cadd9 G F6 G Cadd9 G F6 G C D G C9 G D G C7 G F6 G C7 G F6 G C D G C9 G Bm Em Bm G Em C D G Cadd9 G F6 G Cadd9 G F6 G C D G C9 G C9 G Cadd9 Fadd9

Chord Results
• Recognition weak, but forced-alignment OK

Frame-level accuracy (random ~3%):

| Feature | Recognition | Alignment |
|---|---|---|
| MFCC | 8.7% | 22.0% |
| PCP_ROT | 21.7% | 76.0% |

• MFCCs are poor (can overtrain); PCPs better (ROT helps generalization)
[Figure: Beatles - Beatles For Sale - Eight Days a Week (4096pt): pitch-class features from 16.27 to 24.84 sec, with true, aligned and recognized chord labels]

What did the models learn?
• Chord model centers (means) indicate chord ‘templates’
[Figure: PCP_ROT family model means (train18) for DIM, DOM7, MAJ, MIN and MIN7 chords across pitch classes C to B (for C-root chords)]
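The backup slides train HMMs on PCP features; as a much simpler stand-in, the sketch below labels a single 12-bin PCP frame by template matching, rotating the frame to each candidate root (in the spirit of PCP_ROT) and scoring it against binary C-root templates for the five chord families plotted (MAJ, MIN, DOM7, MIN7, DIM). The binary templates are textbook interval sets, not the learned model means, and no HMM sequence modeling is included; the function names are hypothetical.

```python
import numpy as np

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
# Chord families from the slides, as semitone intervals above the root.
FAMILIES = {
    "MAJ": (0, 4, 7),
    "MIN": (0, 3, 7),
    "DOM7": (0, 4, 7, 10),
    "MIN7": (0, 3, 7, 10),
    "DIM": (0, 3, 6),
}

def c_root_template(intervals):
    """Unit-norm binary PCP template for a chord rooted on C."""
    t = np.zeros(12)
    t[list(intervals)] = 1.0
    return t / np.linalg.norm(t)

def classify_pcp(pcp):
    """Return (root, family) whose template best matches a 12-bin PCP frame."""
    pcp = np.asarray(pcp, dtype=float)
    pcp = pcp / (np.linalg.norm(pcp) + 1e-12)
    best, best_score = None, -np.inf
    for root in range(12):
        # Rotate so that `root` lands on bin 0, i.e. express the PCP relative to it.
        rotated = np.roll(pcp, -root)
        for name, intervals in FAMILIES.items():
            score = rotated @ c_root_template(intervals)   # cosine similarity
            if score > best_score:
                best, best_score = (PITCH_CLASSES[root], name), score
    return best

g_major = np.zeros(12)
g_major[[7, 11, 2]] = 1.0        # G, B, D
print(classify_pcp(g_major))     # ('G', 'MAJ')
```

Rotating the observation rather than storing 60 separate templates mirrors the idea of sharing one model per chord family across all roots.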