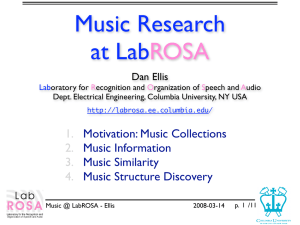

Extracting and Using Music Audio Information

Dan Ellis

Laboratory for Recognition and Organization of Speech and Audio

Dept. Electrical Engineering, Columbia University, NY USA

http://labrosa.ee.columbia.edu/

1. Motivation: Music Collections

2. Music Information

3. Music Similarity

4. Music Structure Discovery

Music Audio Information - Ellis

2007-11-02

p. 1 /42

LabROSA Overview

[Diagram: LabROSA research areas. Labels: Information Extraction; Machine Learning; Signal Processing; Music; Speech; Environment; Recognition; Separation; Retrieval]

1. Managing Music Collections

• A lot of music data available

e.g. 60G of MP3 ≈ 1000 hr of audio, 15k tracks

• Management challenge

how can computers help?

• Application scenarios

personal music collection

discovering new music

“music placement”


Learning from Music

• What can we infer from 1000 h of music?

common patterns

sounds, melodies, chords, form

what is and what isn’t music

[Figure: Scatter of PCA(3:6) of 12x16 beatchroma]

• Applications

Data driven musicology?

modeling/description/coding

computer generated music

curiosity...


The Big Picture

[Diagram linking: Music audio; Low-level features; Melody and notes; Key and chords; Tempo and beat; Classification and Similarity (browsing, discovery, production); Music Structure Discovery (modeling, generation, curiosity)]

.. so far

2. Music Information

• How to represent music audio?

• Audio features

spectrogram, MFCCs, bases

[Figure: spectrogram, Frequency vs. Time]

• Musical elements

notes, beats, chords, phrases

requires transcription

• Or something in between?

optimized for a certain task?

Transcription as Classification

• Exchange signal models for data

Poliner & Ellis ‘05,’06,’07

transcription as pure classification problem:

feature representation → feature vector → classification posteriors → HMM smoothing

Training data and features:

• MIDI, multi-track recordings, playback piano, & resampled audio (less than 28 mins of train audio).

• Normalized magnitude STFT.

Classification:

• N binary SVMs (one for each note).

• Independent frame-level classification on 10 ms grid.

• Distance to class boundary as posterior.

Temporal Smoothing:

• Two-state (on/off) independent HMM for each note. Parameters learned from training data.

• Find Viterbi sequence for each note.
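The temporal-smoothing step can be sketched as a two-state Viterbi decode over one note's frame posteriors. This is a minimal illustration, not the authors' code: the name `viterbi_on_off`, the default transition probabilities, and the use of the posterior directly as an emission score are all assumptions (the slide says the HMM parameters are learned from training data).

```python
import math


def viterbi_on_off(posteriors, p_stay_off=0.9, p_stay_on=0.9, prior_on=0.5):
    """Return the most likely 0/1 (off/on) state per frame for one note.

    posteriors: per-frame estimates of P(note on), e.g. on a 10 ms grid.
    Transition and prior values are placeholders, not learned parameters.
    """
    if not posteriors:
        return []
    eps = 1e-12
    log_trans = [
        [math.log(p_stay_off), math.log(1.0 - p_stay_off)],  # from "off"
        [math.log(1.0 - p_stay_on), math.log(p_stay_on)],    # from "on"
    ]

    def log_emit(p):
        p = min(max(p, eps), 1.0 - eps)
        return [math.log(1.0 - p), math.log(p)]

    emit = log_emit(posteriors[0])
    score = [math.log(1.0 - prior_on) + emit[0], math.log(prior_on) + emit[1]]
    backptrs = []
    for p in posteriors[1:]:
        emit = log_emit(p)
        new_score, ptr = [], []
        for state in (0, 1):
            cands = [score[prev] + log_trans[prev][state] for prev in (0, 1)]
            best_prev = 0 if cands[0] >= cands[1] else 1
            ptr.append(best_prev)
            new_score.append(cands[best_prev] + emit[state])
        backptrs.append(ptr)
        score = new_score

    # Backtrace from the better final state.
    state = 0 if score[0] >= score[1] else 1
    path = [state]
    for ptr in reversed(backptrs):
        state = ptr[state]
        path.append(state)
    path.reverse()
    return path
```

With these placeholder settings a single weak frame inside a note is bridged and an isolated one-frame spike is suppressed; one such decode would be run independently per note.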

Polyphonic Transcription

• Real music excerpts + ground truth

MIREX 2007

Frame-level transcription

Estimate the fundamental frequency of all notes present on a 10 ms grid

[Figure: bar chart of frame-level Precision, Recall, Acc, Etot, Esubs, Emiss, Efa]

Note-level transcription

Group frame-level predictions into note-level transcriptions by estimating onset/offset

[Figure: bar chart of note-level Precision, Recall, Ave. F-measure, Ave. Overlap]

Beat Tracking

Ellis ’06,’07

• Goal: One feature vector per ‘beat’ (tatum)

for tempo normalization, efficiency

• “Onset Strength Envelope”

$\sum_f \max(0, \mathrm{diff}_t(\log |X(t, f)|))$

[Figure: mel spectrogram (freq / mel vs. time / sec) with onset strength envelope]

• Autocorr. + window → global tempo estimate

[Figure: windowed autocorrelation vs. lag / 4 ms samples, annotated 168.5 BPM]
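The onset strength envelope and the windowed-autocorrelation tempo estimate can be sketched in a few lines. This is a hedged illustration using plain Python lists: the function names, the 120 BPM window centre and the one-octave window width are assumptions, not values taken from the slides.

```python
import math


def onset_envelope(mag_frames, floor=1e-10):
    """O(t) = sum over bands of max(0, log|X(t,f)| - log|X(t-1,f)|); O(0) = 0.

    mag_frames: list of frames, each a list of band magnitudes (e.g. mel bands).
    """
    env = [0.0]
    for prev, cur in zip(mag_frames, mag_frames[1:]):
        rise = 0.0
        for a, b in zip(prev, cur):
            rise += max(0.0, math.log(max(b, floor)) - math.log(max(a, floor)))
        env.append(rise)
    return env


def global_tempo_bpm(env, frame_rate_hz, center_bpm=120.0, width_octaves=1.0):
    """Pick the autocorrelation lag with the largest window-weighted value."""
    center_lag = 60.0 * frame_rate_hz / center_bpm
    best_lag, best_score = None, -math.inf
    for lag in range(1, len(env) // 2):
        ac = sum(env[i] * env[i + lag] for i in range(len(env) - lag))
        # Log-Gaussian weighting over lag: an assumed form of the "window".
        w = math.exp(-0.5 * (math.log2(lag / center_lag) / width_octaves) ** 2)
        if ac * w > best_score:
            best_lag, best_score = lag, ac * w
    if best_lag is None:
        raise ValueError("envelope too short to estimate tempo")
    return 60.0 * frame_rate_hz / best_lag
```

The slide's lag axis is in 4 ms samples, which would correspond to `frame_rate_hz=250`; the weighting keeps the estimate from jumping to half or double the perceived tempo.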

Beat Tracking

• Dynamic Programming finds beat times $\{t_i\}$

optimizes $\sum_i O(t_i) + \sum_i W((t_{i+1} - t_i - \tau_p)/\beta)$

where $O(t)$ is onset strength envelope (local score)

$W(t)$ is a log-Gaussian window (transition cost)

$\tau_p$ is the default beat period per measured tempo

incrementally find best predecessor at every time

backtrace from largest final score to get beats

$C^*(t) = \gamma\, O(t) + (1-\gamma) \max_{\tau} \{ W((\tau - \tau_p)/\beta)\, C^*(\tau) \}$

$P(t) = \arg\max_{\tau} \{ W((\tau - \tau_p)/\beta)\, C^*(\tau) \}$

[Figure: onset strength O(t) and best cumulative score C*(t) at time t, with candidate predecessor τ]
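A compact sketch of the dynamic-programming search follows. It uses an additive log-domain score (local onset strength plus a squared-log-ratio penalty on the inter-beat gap) rather than the γ-weighted form on the slide, so treat it as an illustrative variant under stated assumptions: `track_beats`, `alpha`, and the search range of half to twice the period are hypothetical choices.

```python
import math


def track_beats(onset_env, period, alpha=100.0):
    """Return beat frame indices that balance onset strength and regular spacing.

    onset_env: onset strength per frame; period: target beat period in frames.
    """
    n = len(onset_env)
    if n == 0:
        return []
    lo = max(1, round(period / 2))   # shortest inter-beat gap considered
    hi = max(lo, round(period * 2))  # longest inter-beat gap considered
    score = [float(v) for v in onset_env]  # becomes the best cumulative score
    backlink = [-1] * n                    # best predecessor, -1 = chain start
    for t in range(n):
        best_val, best_tau = 0.0, -1
        for tau in range(max(0, t - hi), t - lo + 1):
            # Log-Gaussian-style transition cost: zero when the gap equals period.
            penalty = -alpha * math.log((t - tau) / period) ** 2
            cand = score[tau] + penalty
            if cand > best_val:
                best_val, best_tau = cand, tau
        score[t] += best_val
        backlink[t] = best_tau
    # Backtrace from the largest cumulative score.
    t = max(range(n), key=score.__getitem__)
    beats = []
    while t >= 0:
        beats.append(t)
        t = backlink[t]
    return beats[::-1]
```

Because each frame inherits the best score of a well-spaced predecessor, a stretch with no onsets still carries a path through it, which is the gap-bridging behaviour of a non-causal search.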

Beat Tracking

• DP will bridge gaps (non-causal)

there is always a best path ...

[Figure: Alanis Morissette - All I Want - gap + beats; freq / Bark band vs. time / sec]

• 2nd place in MIREX 2006 Beat Tracking

compared to McKinney & Moelants human data

[Figure: test 2 (Bragg) - McKinney + Moelants Subject data; freq / Bark band and Subject # vs. time / s]

Chroma Features

• Chroma features convert spectral energy

into musical weights in a canonical octave

i.e. 12 semitone bins
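As a rough illustration of folding spectral energy into 12 semitone bins, the sketch below assigns each STFT bin to its nearest equal-tempered pitch class. This is a simplification under stated assumptions (A4 = 440 Hz tuning, hard nearest-semitone assignment, bin 0 = C); it is not the instantaneous-frequency ("IF chroma") method shown in the figure, and `chroma_from_spectrum` is a hypothetical name.

```python
import math


def chroma_from_spectrum(magnitudes, sample_rate, n_fft, a4_hz=440.0, min_hz=27.5):
    """Sum STFT bin magnitudes into 12 pitch-class bins (index 0 = C, 9 = A)."""
    chroma = [0.0] * 12
    for k, mag in enumerate(magnitudes):
        freq = k * sample_rate / n_fft
        if freq < min_hz:
            continue  # skip DC and sub-audio bins (also avoids log of zero)
        midi = 69.0 + 12.0 * math.log2(freq / a4_hz)
        chroma[round(midi) % 12] += mag
    return chroma
```

Energy at 440 Hz and 880 Hz lands in the same bin, which is the "canonical octave" idea: octave information is discarded and only pitch class is kept.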

[Figure: chroma and IF chroma of a piano scale; chroma bins A-G vs. time / sec and time / frames]

• Can resynthesize as “Shepard Tones”

all octaves at once

[Figure: 12 Shepard tone spectra (level / dB vs. freq / Hz); spectrograms of Piano chromatic scale and Shepard tone resynth (freq / kHz vs. time / sec)]

Key Estimation

• Covariance of chroma reflects key

• Normalize by transposing for best fit

single Gaussian model of one piece

find ML rotation of other pieces

model all transposed pieces

iterate until convergence

Ellis ICASSP ’07

[Figure: aligned chroma for Taxman, Eleanor Rigby, I'm Only Sleeping, Love You To, She Said She Said, Good Day Sunshine, And Your Bird Can Sing, Yellow Submarine, and the Aligned Global model; axes labelled by chroma bins A-G]
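The "find ML rotation" step can be sketched as follows: fit a Gaussian to one piece's chroma frames, then try all 12 circular shifts of another piece and keep the one with the highest likelihood. For brevity this sketch uses a diagonal-covariance Gaussian, which is an assumption; the slide's point that covariance reflects key suggests a full covariance in the original. All function names are hypothetical.

```python
import math


def rotate(frame, r):
    """Circularly shift a chroma vector up by r semitones."""
    r %= len(frame)
    return frame[-r:] + frame[:-r]


def fit_diag_gaussian(frames, var_floor=1e-4):
    """Per-bin mean and (floored) variance of a list of chroma frames."""
    n, d = len(frames), len(frames[0])
    mean = [sum(f[i] for f in frames) / n for i in range(d)]
    var = [max(sum((f[i] - mean[i]) ** 2 for f in frames) / n, var_floor)
           for i in range(d)]
    return mean, var


def log_likelihood(frames, model):
    mean, var = model
    total = 0.0
    for f in frames:
        for x, m, v in zip(f, mean, var):
            total += -0.5 * (math.log(2.0 * math.pi * v) + (x - m) ** 2 / v)
    return total


def best_rotation(frames, model):
    """Return the shift (0-11 semitones) that best aligns frames to the model."""
    return max(range(12),
               key=lambda r: log_likelihood([rotate(f, r) for f in frames], model))
```

Iterating would then refit the model on all pieces after rotating each by its best shift, and repeat until the shifts stop changing.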

Chord Transcription

Sheh & Ellis ’03

• “Real Books” give chord transcriptions

but no exact timing

.. just like speech transcripts

# The Beatles - A Hard Day's Night

#

G Cadd9 G F6 G Cadd9 G F6 G C D G C9 G

G Cadd9 G F6 G Cadd9 G F6 G C D G C9 G

Bm Em Bm G Em C D G Cadd9 G F6 G Cadd9 G

F6 G C D G C9 G D

G C7 G F6 G C7 G F6 G C D G C9 G Bm Em Bm

G Em C D

G Cadd9 G F6 G Cadd9 G F6 G C D G C9 G

C9 G Cadd9 Fadd9

• Use EM to simultaneously

learn and align chord models

[Figure: EM training loop, shown with the speech-recognition analogy. Model inventory: ae1, ae2, ae3, dh1, dh2. Labelled training data: “dh ax k ae t”, “s ae t aa n”. Uniform initialization alignments; initialization parameters $\Theta_{init}$. Repeat until convergence:

E-step: probabilities $p(q_n^i \mid X_1^N, \Theta_{old})$ of unknowns

M-step: maximize via parameters, $\Theta : \max E[\log p(X, Q \mid \Theta)]$]

Chord Transcription

Frame-level Accuracy

Feature    Recog.   Alignment
MFCC       8.7%     22.0%
PCP_ROT    21.7%    76.0%
(random ~3%)

MFCCs are poor (can overtrain)

PCPs better (ROT helps generalization)

[Figure: Beatles - Beatles For Sale - Eight Days a Week (4096pt); pitch class (A to G#) vs. time / sec (16.27 to 24.84), intensity scale 0 to 120; chord label tracks: true, align, recog (labels include E, G, D, Bm, Am, Em7)]

• Needed more training data...

3. Music Similarity

• The most central problem...

motivates extracting musical information

supports real applications (playlists, discovery)

• But do we need content-based similarity?

compete with collaborative filtering

compete with fingerprinting + metadata

• Maybe ... for the Future of Music

connect listeners directly to musicians


Discriminative Classification

Mandel & Ellis ‘05

• Classification as a proxy for similarity

• Distribution models...

[Diagram: training MFCCs → per-artist GMMs (Artist 1, Artist 2); test song → KL to each artist model → Min KL → Artist]

• vs. SVM

[Diagram: training MFCCs → song features (Artist 1, Artist 2) → distances (D) → DAG SVM → Artist; test song]

Segment-Level Features

• Statistics of spectra and envelope define a point in feature space

for SVM classification, or Euclidean similarity...

Mandel & Ellis ‘07

[Poster excerpt, partly cropped: “LabROSA’s audio music similarity and classification system”, Michael I Mandel and Daniel P W Ellis, LabROSA · Dept of Electrical Engineering · Columbia University, New York, {mim,dpwe}@ee.columbia.edu]

1. Feature Extraction

…ures are the same as Mandel and Ellis (2005), mean and covariance of MFCCs

…tures are similar to those in Rauber et al. (2002)

…ut into overlapping 10-second clips

… mel spectrum of each clip, averaging clips’ features

… mel frequencies together to get magnitude bands for low, …

2. Similarity and Classification

• We use a DAG-SVM for n-way classification of songs (Platt et al., 2000)

• The distance between songs is the Euclidean distance between their feature vectors

• During testing, some songs were a top similarity match for many songs

• Re-normalizing each song’s feature vector avoided this problem

3. Results

[Figure: Audio Music Similarity and Audio Classification result charts]

MIREX’07 Results

• One system for similarity and classification

[Figure: Audio Music Similarity scores (Greater0, Psum, Fine, WCsum, SDsum, Greater1) for systems PS, GT, LB, CB1, TL1, ME, TL2, CB2, CB3, BK1, PC, BK2]

PS = Pohle, Schnitzer; GT = George Tzanetakis; LB = Barrington, Turnbull, Torres, Lanckriet; CB = Christoph Bastuck; TL = Lidy, Rauber, Pertusa, Iñesta; ME = Mandel, Ellis; BK = Bosteels, Kerre; PC = Paradzinets, Chen

[Figure: Audio Classification accuracy (Genre ID Hierarchical, Genre ID Raw, Mood ID, Composer ID, Artist ID) for systems IMsvm, MEspec, ME, TL, GT, IMknn, KL, CL, GH]

IM = IMIRSEL M2K; ME = Mandel, Ellis; TL = Lidy, Rauber, Pertusa, Iñesta; GT = George Tzanetakis; KL = Kyogu Lee; CL = Laurier, Herrera; GH = Guaus, Herrera

Active-Learning Playlists

• SVMs are well suited to “active learning”

solicit labels on items closest to current boundary

• Automatic player with “skip” = Ground truth data collection

active-SVM automatic playlist generation
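The "solicit labels on items closest to current boundary" rule can be sketched as a selection over signed decision values. The SVM itself is assumed to be trained elsewhere; `pick_queries` and its arguments are hypothetical names for illustration.

```python
def pick_queries(decision_values, labeled, n_queries=1):
    """Choose the unlabeled items whose SVM decision values are nearest zero.

    decision_values: dict mapping item id -> signed distance to the boundary.
    labeled: set of item ids that already have a user label.
    """
    candidates = [item for item in decision_values if item not in labeled]
    candidates.sort(key=lambda item: abs(decision_values[item]))
    return candidates[:n_queries]
```

In a player with a "skip" button, each play or skip would supply a label for the queried track, after which the classifier is retrained and the selection repeated.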

Cover Song Detection

Ellis & Poliner ‘07

• “Cover Songs” = reinterpretation of a piece

different instrumentation, character

no match with “timbral” features

[Figure: spectrograms of verse 1 of Let It Be by The Beatles and by Nick Cave; freq / kHz vs. time / sec]

• Need a different representation!

beat-synchronous chroma features

[Figure: Beat-sync chroma features for both versions; chroma (A-G) vs. beats]

Beat-Synchronous Chroma Features

• Beat + chroma features / 30ms frames

→ average chroma within each beat

compact; sufficient?
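Averaging chroma within each beat can be sketched as below. The function name and the convention that each beat interval is half-open (start inclusive, end exclusive) are assumptions for illustration.

```python
def beat_sync_chroma(chroma_frames, frame_times, beat_times):
    """Average the chroma frames that fall between consecutive beat times.

    chroma_frames: list of chroma vectors, one per analysis frame.
    frame_times: time in seconds of each frame; beat_times: sorted beat times.
    Returns one averaged chroma vector per inter-beat interval.
    """
    n_bins = len(chroma_frames[0]) if chroma_frames else 0
    out = []
    for start, end in zip(beat_times, beat_times[1:]):
        segment = [f for f, t in zip(chroma_frames, frame_times) if start <= t < end]
        if segment:
            out.append([sum(col) / len(segment) for col in zip(*segment)])
        else:
            out.append([0.0] * n_bins)  # no frames fell inside this beat
    return out
```

The result has one column per beat regardless of tempo, which is what makes two performances at different speeds directly comparable.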


Matching: Global Correlation

• Cross-correlate entire beat-chroma matrices

... at all possible transpositions

implicit combination of match quality and duration

[Figure: beat-chroma matrices (chroma bins A-G vs. beats @281 BPM) for Elliott Smith - Between the Bars and Glen Phillips - Between the Bars; Cross-correlation shown as skew / semitones (-5 to +5) vs. skew / beats]

• One good matching fragment is sufficient...?
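Cross-correlating two beat-chroma matrices at every beat skew and every transposition can be sketched directly. This version is a plain, unnormalized correlation over lists of 12-element beat columns; any normalization or filtering used in the actual system is omitted, and `best_match` is a hypothetical name.

```python
import math


def rotate(v, r):
    """Circularly shift a chroma vector up by r semitones."""
    r %= len(v)
    return v[-r:] + v[:-r]


def best_match(a, b):
    """Return (score, transposition, lag) maximizing the cross-correlation.

    a, b: lists of 12-element chroma columns, one per beat.
    lag is the beat index in `a` that aligns with the first beat of `b`.
    """
    best = (-math.inf, 0, 0)
    for r in range(12):
        b_rot = [rotate(col, r) for col in b]
        for lag in range(-(len(b) - 1), len(a)):
            score = 0.0
            for j, col_b in enumerate(b_rot):
                i = j + lag
                if 0 <= i < len(a):
                    score += sum(x * y for x, y in zip(a[i], col_b))
            if score > best[0]:
                best = (score, r, lag)
    return best
```

Because the score is a sum over overlapping beats, a long good match outscores a short perfect one, which is the implicit combination of match quality and duration noted on the slide.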

MIREX 06 Results

• Cover song contest

30 songs x 11 versions of each (!)

(data has not been disclosed)

8 systems compared (4 cover song + 4 similarity)

• Found 761/3300 = 23% recall

next best: 11%

guess: 3%

[Figure: MIREX 06 Cover Song Results: # Covers retrieved per song per system. Rows: song-set 1 to 30 (each row is one query song). Columns: systems CS, DE, KL1, KL2, KWL, KWT, LR, TP (cover song systems and similarity systems). Scale: # true covers in top 10 (correct matches retrieved, 0 to 8)]

Cross-Correlation Similarity

• Use cover-song approach to find similarity

e.g. similar note/instrumentation sequence

may sound very similar to judges

• Numerous variants

try on chroma (melody/harmony)

and MFCCs (timbre)

try full search (xcorr)

or landmarks (indexable)

compare to random,

segment-level stats

• Evaluate by subjective tests

modeled after MIREX similarity


Cross-Correlation Similarity

• Human web-based judgments

binary judgments for speed

6 users x 30 queries x 10 candidate returns

[Paper excerpt, left column cropped in the slide:] … combined score evaluated only at the reference boundary of section 2.1. To these, we added three additional hits from a more conventional feature statistics system using (6) MFCC mean and covariance (as in [2]), (7) subband rhythmic features (modulation spectra, similar to [10]), and (8) a simple summation of the normalized scores under these two measures. Finally, we added two randomly-chosen clips to bring …

Table 1. Results of the subjective similarity evaluation. Counts are the number of times the best hit returned by each algorithm was rated as similar by a human rater. Each algorithm provided one return for each of 30 queries, and was judged by 6 raters, hence the counts are out of a maximum possible of 180.

Algorithm                       Similar count
(1) Xcorr, chroma               48/180 = 27%
(2) Xcorr, MFCC                 48/180 = 27%
(3) Xcorr, combo                55/180 = 31%
(4) Xcorr, combo + tempo        34/180 = 19%
(5) Xcorr, combo at boundary    49/180 = 27%
(6) Baseline, MFCC              81/180 = 45%
(7) Baseline, rhythmic          49/180 = 27%
(8) Baseline, combo             88/180 = 49%
Random choice 1                 22/180 = 12%
Random choice 2                 28/180 = 16%

• Cross-correlation inferior to baseline...

... but is getting somewhere, even with ‘landmark’

Cross-Correlation Similarity

• Results are not overwhelming

.. but database is only a few thousand clips


“Anchor Space”

Berenzweig & Ellis ‘03

• Acoustic features describe each song

.. but from a signal, not a perceptual, perspective

.. and not the differences between songs

• Use genre classifiers to define new space

prototype genres are “anchors”

[Diagram: Audio Input (Class i) and Audio Input (Class j) → Anchor models → Conversion to Anchorspace: n-dimensional vector in "Anchor Space", [p(a1|x), p(a2|x), ..., p(an|x)] → GMM Modeling → Similarity Computation (KL-d, EMD, etc.)]

“Anchor Space”

• Frame-by-frame high-level categorizations

compare to raw features?

[Figure: scatter plots of madonna vs. bowie frames. Cepstral Features: third cepstral coef vs. fifth cepstral coef. Anchor Space Features: Electronica vs. Country]

properties in distributions? dynamics?

‘Playola’ Similarity Browser


Ground-truth data

• Hard to evaluate Playola’s ‘accuracy’

Ellis et al, ‘02

user tests...

ground truth?

• “Musicseer” online survey/game:

ran for 9 months in 2002

> 1,000 users, > 20k judgments

http://labrosa.ee.columbia.edu/projects/musicsim/

“Semantic Bases”

• Describe segment in human-relevant terms

e.g. anchor space, but more so

• Need ground truth...

what words do people use?

• MajorMiner game:

400 users

7500 unique tags

70,000 taggings

2200 10-sec clips used

• Train classifiers...

4. Music Structure Discovery

• Use the many examples to map out the

“manifold” of music audio

... and hence define the subset that is music

[Figure: 32GMMs on 1000 MFCC20s; artist models (aerosmith, beatles, bryan_adams, creedence_clearwater_revival, dave_matthews_band, depeche_mode, fleetwood_mac, garth_brooks, genesis, green_day, madonna, metallica, pink_floyd, queen, rolling_stones, roxette, tina_turner, u2) vs. test tracks; color scale x 10^4, -7 to -2.5]

• Problems

alignment/registration of data

factoring & abstraction

separating parts?

Eigenrhythms: Drum Pattern Space

Ellis & Arroyo ‘04

• Pop songs built on repeating “drum loop”

variations on a few bass, snare, hi-hat patterns

• Eigen-analysis (or ...) to capture variations?

by analyzing lots of (MIDI) data, or from audio

• Applications

music categorization

“beat box” synthesis

insight


Aligning the Data

• Need to align patterns prior to modeling...

tempo (stretch): by inferring BPM & normalizing

downbeat (shift): correlate against ‘mean’ template

Eigenrhythms (PCA)

• Need 20+ Eigenvectors for good coverage

of 100 training patterns (1200 dims)

• Eigenrhythms both add and subtract


Posirhythms (NMF)

[Figure: Posirhythm 1 to Posirhythm 6, each with hi-hat (HH), snare (SN) and bass drum (BD) rows; x-axis in samples (@ 2…) and beats (@ 120…), beats 1 to 4]

• Nonnegative: only adds beat-weight

• Capturing some structure

Eigenrhythm BeatBox

• Resynthesize rhythms from eigen-space


Melody Clustering

• Goal: Find ‘fragments’ that recur in melodies

.. across large music database

.. trade data for model sophistication

Training data → Melody extraction → 5 second fragments → VQ clustering → Top clusters

• Data sources

pitch tracker, or MIDI training data

• Melody fragment representation

DCT(1:20) - removes average, smoothes detail
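The fragment representation can be sketched with a direct DCT-II. One assumption is made loudly here: "DCT(1:20)" is read as keeping coefficients 1 to 20 in zero-based indexing, i.e. dropping coefficient 0 (the average) and everything above 20 (fine detail). The names `dct_ii` and `melody_descriptor` are hypothetical.

```python
import math


def dct_ii(x):
    """Unnormalized DCT-II of a sequence, computed directly (O(n^2))."""
    n = len(x)
    return [sum(x[i] * math.cos(math.pi / n * (i + 0.5) * k) for i in range(n))
            for k in range(n)]


def melody_descriptor(pitch_track, first=1, last=20):
    """Keep DCT coefficients first..last of a pitch contour.

    Dropping coefficient 0 removes the average pitch, so the descriptor is
    unchanged by transposition; dropping high coefficients smooths detail.
    """
    return dct_ii(pitch_track)[first:last + 1]
```

Under this reading, two fragments with the same contour in different keys map to the same vector, so VQ clustering groups them by shape rather than by absolute pitch.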


Melody Clustering

• Clusters match underlying contour:

• Some interesting matches:

e.g. Pink + Nsync

Beat-Chroma Fragment Codebook

• Idea: Find the very popular music fragments

e.g. perfect cadence, rising melody, ...?

• Clustering a large enough database should

reveal these

but: registration of phrase boundaries, transposition

• Need to deal with really large datasets

e.g. 100k+ tracks, multiple landmarks in each

but: Locality Sensitive Hashing can help - quickly finds ‘most’ points in a certain radius

• Experiments in progress...

Conclusions

[Diagram linking: Music audio; Low-level features; Melody and notes; Key and chords; Tempo and beat; Classification and Similarity (browsing, discovery, production); Music Structure Discovery (modeling, generation, curiosity)]

• Lots of data + noisy transcription + weak clustering ⇒ musical insights?