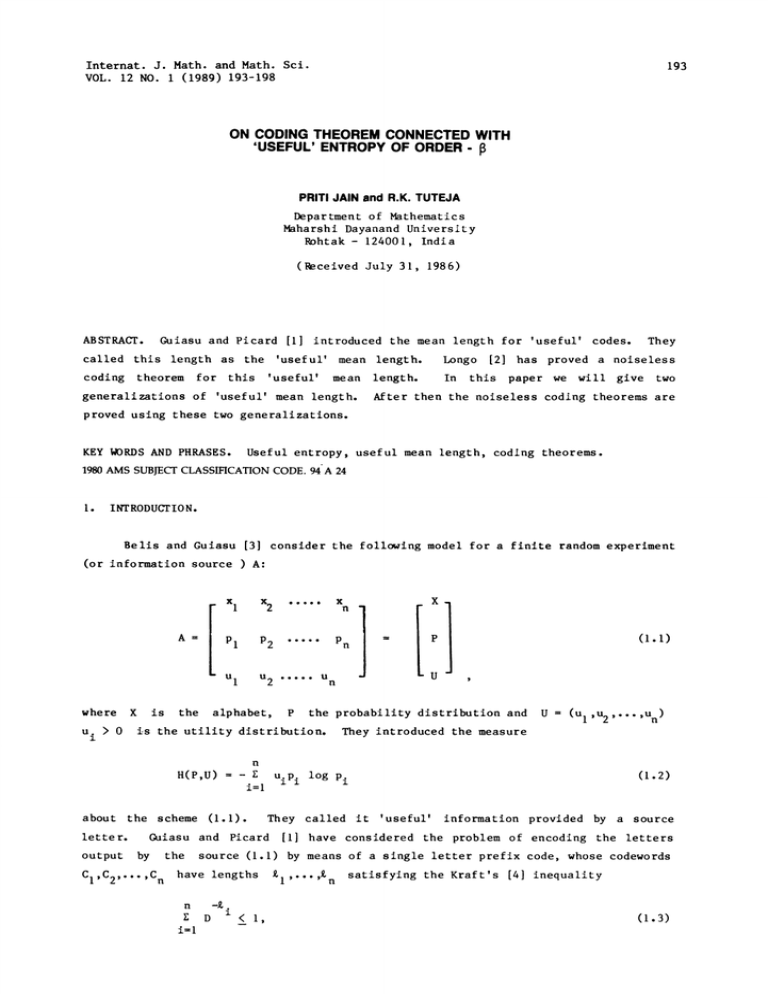

Internat. J. Math. and Math. Sci. VOL. 12 NO. 1 (1989) 193-198

ON CODING THEOREM CONNECTED WITH 'USEFUL' ENTROPY OF ORDER-$\beta$

PRITI JAIN and R.K. TUTEJA
Department of Mathematics, Maharshi Dayanand University, Rohtak 124001, India

(Received July 31, 1986)

ABSTRACT. Guiasu and Picard [1] introduced the mean length for 'useful' codes. They called this length the 'useful' mean length. Longo [2] has proved a noiseless coding theorem for this 'useful' mean length. In this paper we give two generalizations of the 'useful' mean length. The noiseless coding theorems are then proved using these two generalizations.

KEY WORDS AND PHRASES. Useful entropy, useful mean length, coding theorems.
1980 AMS SUBJECT CLASSIFICATION CODE. 94A24.

1. INTRODUCTION.

Belis and Guiasu [3] consider the following model for a finite random experiment (or information source) $A$:

$$A = \begin{bmatrix} x_1 & x_2 & \cdots & x_n \\ p_1 & p_2 & \cdots & p_n \\ u_1 & u_2 & \cdots & u_n \end{bmatrix}, \qquad u_i > 0, \tag{1.1}$$

where $X = (x_1, x_2, \ldots, x_n)$ is the alphabet, $P = (p_1, p_2, \ldots, p_n)$ the probability distribution and $U = (u_1, u_2, \ldots, u_n)$ is the utility distribution. They introduced the measure

$$H(P,U) = -\sum_{i=1}^{n} u_i p_i \log p_i \tag{1.2}$$

about the scheme (1.1). They called it the 'useful' information provided by a source letter.

Guiasu and Picard [1] have considered the problem of encoding the letters output by the source (1.1) by means of a single letter prefix code, whose codewords $C_1, C_2, \ldots, C_n$ have lengths $\ell_1, \ell_2, \ldots, \ell_n$ satisfying Kraft's [4] inequality

$$\sum_{i=1}^{n} D^{-\ell_i} \le 1, \tag{1.3}$$

where $D$ is the size of the code alphabet. They defined the following quantity

$$L(U) = \frac{\sum_{i=1}^{n} \ell_i u_i p_i}{\sum_{i=1}^{n} u_i p_i} \tag{1.4}$$

and called it the 'useful' mean length of the code. They also derived a lower bound for it.

In this communication two generalizations of (1.4) are studied, and bounds for these generalizations are obtained in terms of the 'useful' entropy of type $\beta$, which is given by

$$H^{\beta}(P,U) = \frac{1}{2^{1-\beta}-1} \sum_{i=1}^{n} u_i p_i \left( p_i^{\beta-1} - 1 \right), \qquad \beta > 0,\ \beta \ne 1, \tag{1.5}$$

under the condition

$$\sum_{i=1}^{n} u_i D^{-\ell_i} \le \sum_{i=1}^{n} u_i p_i, \tag{1.6}$$

which is the generalization of Kraft's inequality (1.3).

2.
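TWO GENERALIZATIONS OF 'USEFUL' MEAN LENGTH AND THE CODING THEOREMS.

Let us introduce the measure of length:

$$L^{1}_{\beta}(U) = \frac{1}{(2^{1-\beta}-1)\log D} \left[ \left( \frac{\sum_{i=1}^{n} u_i p_i D^{\ell_i (1-\beta)/\beta}}{\sum_{i=1}^{n} u_i p_i} \right)^{\beta} - 1 \right], \qquad \beta > 0,\ \beta \ne 1. \tag{2.1}$$

It is easy to see that $\lim_{\beta \to 1} L^{1}_{\beta}(U) = L(U)$. In the following theorem we obtain a lower bound for (2.1) in terms of $H^{\beta}(P,U)$.

THEOREM 1. If $\ell_1, \ell_2, \ldots, \ell_n$ denote the lengths of a code satisfying (1.6), then

$$L^{1}_{\beta}(U) \ge \frac{H^{\beta}(P,U)}{\bar{U} \log D}, \qquad \beta > 0,\ \beta \ne 1, \tag{2.2}$$

where $\bar{U} = \sum_{i=1}^{n} u_i p_i$, with equality iff

$$D^{-\ell_i} = \frac{p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i^{\beta} \big/ \sum_{i=1}^{n} u_i p_i}. \tag{2.3}$$

PROOF. By Hölder's inequality

$$\left( \sum_{i=1}^{n} a_i^{p} \right)^{1/p} \left( \sum_{i=1}^{n} b_i^{q} \right)^{1/q} \le \sum_{i=1}^{n} a_i b_i, \tag{2.4}$$

where $p^{-1} + q^{-1} = 1$, $p < 1$ and $a_i, b_i > 0$. Put

$$p = \frac{\beta-1}{\beta}, \qquad q = 1-\beta, \qquad a_i = \left( \frac{u_i p_i}{\sum_{i=1}^{n} u_i p_i} \right)^{\beta/(\beta-1)} D^{-\ell_i}, \qquad b_i = \left( \frac{u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right)^{1/(1-\beta)}$$

in (2.4); we get

$$\left[ \frac{\sum_{i=1}^{n} u_i p_i D^{\ell_i (1-\beta)/\beta}}{\sum_{i=1}^{n} u_i p_i} \right]^{-\beta/(1-\beta)} \left[ \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right]^{1/(1-\beta)} \le \frac{\sum_{i=1}^{n} u_i D^{-\ell_i}}{\sum_{i=1}^{n} u_i p_i}. \tag{2.5}$$

Using (1.6) in (2.5), we get

$$\left[ \frac{\sum_{i=1}^{n} u_i p_i D^{\ell_i (1-\beta)/\beta}}{\sum_{i=1}^{n} u_i p_i} \right]^{-\beta/(1-\beta)} \le \left[ \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right]^{1/(\beta-1)}. \tag{2.6}$$

Let $0 < \beta < 1$. Raising both sides of (2.6) to the power $(\beta - 1)$, we get

$$\left[ \frac{\sum_{i=1}^{n} u_i p_i D^{\ell_i (1-\beta)/\beta}}{\sum_{i=1}^{n} u_i p_i} \right]^{\beta} \ge \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i}.$$

Since $2^{1-\beta} - 1 > 0$ for $0 < \beta < 1$, a simple manipulation proves (2.2) for $0 < \beta < 1$. The proof for $1 < \beta < \infty$ follows on the same lines.

It is clear that equality in (2.2) holds iff

$$D^{-\ell_i} = \frac{p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i^{\beta} \big/ \sum_{i=1}^{n} u_i p_i}, \tag{2.7}$$

which implies that

$$\ell_i = -\beta \log_D p_i + \log_D \left( \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right). \tag{2.8}$$

Hence it is always possible to have a code satisfying

$$-\beta \log_D p_i + \log_D \left( \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right) \le \ell_i < -\beta \log_D p_i + \log_D \left( \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right) + 1, \tag{2.9}$$

which is equivalent to

$$p_i^{-\beta} \left( \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right) \le D^{\ell_i} < D\, p_i^{-\beta} \left( \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right). \tag{2.10}$$

PARTICULAR CASE. Let $u_i = 1$ for each $i$ and $D = 2$, that is, the codes are binary; then (2.2) reduces to the result proved by Van der Lubbe [5].

In the following theorem, we give an upper bound for $L^{1}_{\beta}(U)$ in terms of $H^{\beta}(P,U)$.

THEOREM 2. By properly choosing the lengths $\ell_1, \ell_2, \ldots, \ell_n$ in the code of Theorem 1, $L^{1}_{\beta}(U)$ can be made to satisfy the following inequality:

$$L^{1}_{\beta}(U) < D^{1-\beta}\, \frac{H^{\beta}(P,U)}{\bar{U} \log D} + \frac{D^{1-\beta} - 1}{(2^{1-\beta}-1) \log D}, \qquad \beta > 0,\ \beta \ne 1. \tag{2.11}$$

PROOF. From (2.10), we have

$$D^{\ell_i} < D\, p_i^{-\beta} \left( \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right). \tag{2.12}$$

Let $0 < \beta < 1$. Raising both sides of (2.12) to the power $(1-\beta)/\beta > 0$, we get

$$D^{\ell_i (1-\beta)/\beta} < D^{(1-\beta)/\beta}\, p_i^{\beta-1} \left( \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} \right)^{(1-\beta)/\beta}. \tag{2.13}$$

Multiplying both sides of (2.13) by $u_i p_i \big/ \sum_{i=1}^{n} u_i p_i$, summing over $i$, and then raising both sides to the power $\beta$, we get

$$\left[ \frac{\sum_{i=1}^{n} u_i p_i D^{\ell_i (1-\beta)/\beta}}{\sum_{i=1}^{n} u_i p_i} \right]^{\beta} < D^{1-\beta}\, \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i}. \tag{2.14}$$

Since $2^{1-\beta} - 1 > 0$ for $0 < \beta < 1$, a simple manipulation proves the theorem for $0 < \beta < 1$. For $1 < \beta < \infty$, the proof follows on the same lines.

PARTICULAR CASE. Let $u_i = 1$ for each $i$ and $D = 2$, that is, the codes are binary codes; then (2.11) reduces to the result proved by Van der Lubbe [5].

REMARK. When $\beta \to 1$, (2.2) and (2.11) give

$$\frac{H(P,U)}{\bar{U} \log D} \le L(U) < \frac{H(P,U)}{\bar{U} \log D} + 1, \tag{2.15}$$

where $L(U)$ is the 'useful' mean length function (1.4). Longo [2] gave the lower and upper bounds on $L(U)$ as follows:

$$\frac{H(P,U) - \overline{u \log u} + \bar{u} \log \bar{u}}{\bar{u} \log D} \le L(U) < \frac{H(P,U) - \overline{u \log u} + \bar{u} \log \bar{u}}{\bar{u} \log D} + 1, \tag{2.16}$$

where the bar means the value with respect to the probability distribution $P = (p_1, \ldots, p_n)$. Since $x \log x$ is a convex function, the inequality $\overline{u \log u} \ge \bar{u} \log \bar{u}$ holds, and therefore $H(P,U)/\bar{u}$ does not seem to be as basic in (2.16) as in (2.15).

Now we define another measure of length related to $H^{\beta}(P,U)$. We define the measure of length $L^{2}_{\beta}(U)$ by

$$L^{2}_{\beta}(U) = \frac{1}{(2^{1-\beta}-1)\log D} \left[ \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i^{\beta} D^{\ell_i (\beta-1)}} - 1 \right], \qquad \beta > 0,\ \beta \ne 1. \tag{2.17}$$

It is easy to see that $\lim_{\beta \to 1} L^{2}_{\beta}(U) = L(U)$. In the following theorem we obtain the lower bound for $L^{2}_{\beta}(U)$ in terms of $H^{\beta}(P,U)$.

THEOREM 3. If $\ell_1, \ell_2, \ldots, \ell_n$ denote the lengths of a code satisfying (1.6), then

$$L^{2}_{\beta}(U) \ge \frac{H^{\beta}(P,U)}{\bar{U} \log D}, \qquad \beta > 0,\ \beta \ne 1, \tag{2.18}$$

where $\bar{U} = \sum_{i=1}^{n} u_i p_i$, with equality if and only if

$$D^{-\ell_i} = p_i. \tag{2.19}$$

PROOF. Let $0 < \beta < 1$.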
By using Hölder's inequality and (1.6) it easily follows that

$$\sum_{i=1}^{n} u_i p_i^{\beta} D^{\ell_i (\beta-1)} \le \sum_{i=1}^{n} u_i p_i. \tag{2.20}$$

Obviously (2.20) implies

$$\left[ \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i^{\beta} D^{\ell_i (\beta-1)}} - 1 \right] \ge \left[ \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i} - 1 \right]. \tag{2.21}$$

Since $2^{1-\beta} - 1 > 0$ whenever $0 < \beta < 1$, a simple manipulation proves (2.18). The proof for $1 < \beta < \infty$ follows on the same lines.

It is clear that the equality in (2.18) is true if and only if

$$D^{-\ell_i} = p_i, \tag{2.22}$$

which implies that

$$\ell_i = \log_D (1/p_i). \tag{2.23}$$

Thus it is always possible to have a code word satisfying the requirement

$$\log_D \frac{1}{p_i} \le \ell_i < \log_D \frac{1}{p_i} + 1, \tag{2.24}$$

which is equivalent to

$$\frac{1}{p_i} \le D^{\ell_i} < \frac{D}{p_i}. \tag{2.25}$$

PARTICULAR CASE. Let $u_i = 1$ for each $i$ and $D = 2$; then (2.18) reduces to the result proved by Nath and Mittal [6].

Next we obtain a result giving the upper bound to the 'useful' mean length $L^{2}_{\beta}(U)$.

THEOREM 4. By properly choosing the lengths $\ell_1, \ell_2, \ldots, \ell_n$ in the code of Theorem 3, $L^{2}_{\beta}(U)$ can be made to satisfy the following inequality:

$$L^{2}_{\beta}(U) < D^{1-\beta}\, \frac{H^{\beta}(P,U)}{\bar{U} \log D} + \frac{D^{1-\beta} - 1}{(2^{1-\beta}-1) \log D}, \qquad \beta > 0,\ \beta \ne 1. \tag{2.26}$$

PROOF. From (2.25), we have $p_i D^{\ell_i} < D$. Consequently, for $0 < \beta < 1$,

$$p_i^{\beta} D^{\ell_i (\beta-1)} > D^{\beta-1} p_i. \tag{2.27}$$

Multiplying both sides by $u_i$ and then summing over $i$ and using (1.6), we get

$$\sum_{i=1}^{n} u_i p_i^{\beta} D^{\ell_i (\beta-1)} > D^{\beta-1} \sum_{i=1}^{n} u_i p_i. \tag{2.28}$$

Obviously (2.28) implies that

$$\frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i^{\beta} D^{\ell_i (\beta-1)}} < D^{1-\beta}\, \frac{\sum_{i=1}^{n} u_i p_i^{\beta}}{\sum_{i=1}^{n} u_i p_i}. \tag{2.29}$$

Since $2^{1-\beta} - 1 > 0$ for $0 < \beta < 1$, (2.29) implies (2.26). For $\beta > 1$ the inequalities (2.27)-(2.29) are reversed and $2^{1-\beta} - 1 < 0$, so (2.26) follows on the same lines.

PARTICULAR CASE. Let $u_i = 1$ for each $i$ and $D = 2$; then (2.26) reduces to the result proved by Nath and Mittal [6].

REFERENCES

1. GUIASU, S. and PICARD, C.F. Borne Inférieure de la Longueur Utile de Certains Codes, C.R. Acad. Sci. Paris 273 (1971), 248-251.
2. LONGO, G. A Noiseless Coding Theorem for Sources Having Utilities, SIAM J. Appl. Math. 30 (4), (1976).
3. BELIS, M. and GUIASU, S. A Quantitative-Qualitative Measure of Information in Cybernetic Systems, IEEE Trans. Information Theory, IT-14 (1968), 593-594.
4. KRAFT, L.G. A Device for Quantizing Grouping and Coding Amplitude Modulated Pulses, M.S. Thesis, Electrical Engineering Department, MIT (1949).
5. VAN der LUBBE, J.C.A. On Certain Coding Theorems for the Information of Order $\alpha$ and Type $\beta$, in Information Theory, Statistical Functions, Random Processes, Transactions of the 8th Prague Conference, C, Prague (1978), 253-266.
6. NATH, P. and MITTAL, D.P. A Generalization of Shannon's Inequality and Its Application in Coding Theory, Information and Control 23 (1973), 438-445.
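
NUMERICAL ILLUSTRATION. The bounds of Theorems 1 and 2 can be checked numerically. The sketch below is not part of the original argument. It assumes that $\log$ denotes the base-2 logarithm, that (1.5) reads $H^{\beta}(P,U) = (2^{1-\beta}-1)^{-1} \sum u_i p_i (p_i^{\beta-1} - 1)$, and that (2.1) reads $L^{1}_{\beta}(U) = [(2^{1-\beta}-1)\log D]^{-1} [(\sum u_i p_i D^{\ell_i(1-\beta)/\beta} / \sum u_i p_i)^{\beta} - 1]$. The function names and the three-letter source are hypothetical and chosen only for illustration.

```python
import math

def useful_entropy(p, u, beta):
    """H^beta(P,U) under the assumed reading of (1.5)."""
    total = sum(ui * pi * (pi ** (beta - 1) - 1) for pi, ui in zip(p, u))
    return total / (2 ** (1 - beta) - 1)

def code_lengths(p, u, beta, D):
    """Smallest integer lengths satisfying the two-sided condition (2.9)."""
    ubar = sum(ui * pi for pi, ui in zip(p, u))
    s = sum(ui * pi ** beta for pi, ui in zip(p, u)) / ubar
    return [math.ceil(-beta * math.log(pi, D) + math.log(s, D)) for pi in p]

def mean_length_1(p, u, lengths, beta, D):
    """L^1_beta(U) under the assumed reading of (2.1), with base-2 log D."""
    ubar = sum(ui * pi for pi, ui in zip(p, u))
    a = sum(ui * pi * D ** (li * (1 - beta) / beta)
            for pi, ui, li in zip(p, u, lengths)) / ubar
    return (a ** beta - 1) / ((2 ** (1 - beta) - 1) * math.log2(D))

# Hypothetical source: probabilities, utilities, order beta, code alphabet size D.
p = [0.5, 0.3, 0.2]
u = [1.0, 2.0, 3.0]
beta, D = 0.5, 2

ubar = sum(ui * pi for pi, ui in zip(p, u))
lengths = code_lengths(p, u, beta, D)
mean = mean_length_1(p, u, lengths, beta, D)

# Lower bound (2.2) and upper bound (2.11).
lower = useful_entropy(p, u, beta) / (ubar * math.log2(D))
upper = (D ** (1 - beta) * lower
         + (D ** (1 - beta) - 1) / ((2 ** (1 - beta) - 1) * math.log2(D)))

# Left-hand side of the generalized Kraft inequality (1.6).
kraft_lhs = sum(ui * D ** (-li) for ui, li in zip(u, lengths))

print(lengths, lower, mean, upper)
```

For this source the chosen lengths satisfy (1.6), and the computed value of $L^{1}_{\beta}(U)$ falls between the two bounds, as Theorems 1 and 2 predict. Values of $p_i$ for which the left side of (2.9) is an exact integer may be affected by floating-point rounding in `math.ceil`.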