WEAK CONVERGENCE OF A DIRICHLET-MULTINOMIAL PROCESS

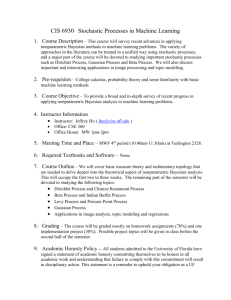

advertisement

Georgian Mathematical Journal

Volume 10 (2003), Number 2, 319–324


PIETRO MULIERE AND PIERCESARE SECCHI

Abstract. We present a random probability distribution which approximates, in the sense of weak convergence, the Dirichlet process and supports

a Bayesian resampling plan called a proper Bayesian bootstrap.

2000 Mathematics Subject Classification: 62G09, 60B10.

Key words and phrases: Dirichlet process, proper Bayesian bootstrap.

1. Introduction

The purpose of this paper is to throw light on a random probability distribution called the Dirichlet-multinomial process that approximates, in the sense

of weak convergence, the Dirichlet process. A Dirichlet-multinomial process is

a particular mixture of Dirichlet processes: in two previous works [11, 12] we

showed that the process supports a Bayesian resampling plan which we called a

proper Bayesian bootstrap suitable for approximating the distribution of functionals of the Dirichlet process and therefore being of interest in the context of

Bayesian nonparametric inference.

Under different names, variants of the Dirichlet-multinomial model have been

recently considered by other authors: see, for instance, [7] and the references

therein. In fact, it has been pointed out that the Dirichlet-multinomial model is equivalent to Fisher’s species sampling model [5], recently reconsidered by Pitman among those extending the Blackwell and MacQueen urn scheme [13]. However, none of these works alludes to a connection between the Dirichlet-multinomial model and Bayesian bootstrap resampling plans. Recent applications of our proper Bayesian bootstrap include those in [3] for the approximation

of the posterior distribution of the overflow rate in discrete-time queueing models.

In Section 2 we define the Dirichlet-multinomial process and we show that it

can be used to approximate a Dirichlet process. Section 3 is dedicated to the

proper Bayesian bootstrap algorithm and its connections with the Dirichlet-multinomial process.

2. A Convergence Result

Let $\mathcal{P}$ be the class of probability measures defined on the Borel σ-field $\mathcal{B}$ of $\mathbb{R}$; for simplicity we work with $\mathbb{R}$, but all the arguments below still hold if $\mathbb{R}$ is replaced by a separable metric space. Endow $\mathcal{P}$ with the topology of weak convergence and write $\sigma(\mathcal{P})$ for the Borel σ-field in $\mathcal{P}$. With these assumptions $\mathcal{P}$ becomes a separable and complete metric space [14].

ISSN 1072-947X / $8.00 / © Heldermann Verlag www.heldermann.de

A useful random probability measure $P \in \mathcal{P}$ is the Dirichlet process introduced by Ferguson [4]. When $\alpha$ is a finite, nonnegative, nonnull measure on $(\mathbb{R}, \mathcal{B})$ and $P$ is a Dirichlet process with parameter $\alpha$, we write $P \in D(\alpha)$. We want to define a random element of $\mathcal{P}$ that is a mixture of Dirichlet processes; according to [1] we thus need to specify a transition measure and a mixing distribution.

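Given $w > 0$, let $\alpha_w : \mathcal{P} \times \mathcal{B} \to [0, +\infty)$ be defined by setting, for every $P \in \mathcal{P}$ and $B \in \mathcal{B}$,

\[ \alpha_w(P, B) = w P(B). \]

The function $\alpha_w$ is a transition measure. Indeed, for every $P \in \mathcal{P}$, $\alpha_w(P, \cdot)$ is a finite, nonnegative and nonnull measure on $(\mathbb{R}, \mathcal{B})$ whereas, for every $B \in \mathcal{B}$, $\alpha_w(\cdot, B)$ is measurable on $(\mathcal{P}, \sigma(\mathcal{P}))$ since $\sigma(\mathcal{P})$ is the smallest σ-field in $\mathcal{P}$ such that the function $P \mapsto P(B)$ is measurable, for every $B \in \mathcal{B}$.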

Given a probability distribution $P_0$, let $X_1^*, \dots, X_m^*$ be an i.i.d. sample of size $m > 0$ from $P_0$. Let $P_m^* \in \mathcal{P}$ be the empirical distribution of $X_1^*, \dots, X_m^*$, defined by

\[ P_m^* = \frac{1}{m} \sum_{i=1}^{m} \delta_{X_i^*}, \]

where $\delta_x$ denotes the point mass at $x$. Write $H_m^*$ for the distribution of $P_m^*$ on $(\mathcal{P}, \sigma(\mathcal{P}))$.

Roughly, the following definition introduces a process $P$ such that, conditionally on $P_m^*$, $P \in D(wP_m^*)$.

Definition 2.1. A random element $P \in \mathcal{P}$ is called a Dirichlet-multinomial process with parameters $(m, w, P_0)$, written $P \in DM(m, w, P_0)$, if it is a mixture of Dirichlet processes on $(\mathbb{R}, \mathcal{B})$ with mixing distribution $H_m^*$ and transition measure $\alpha_w$.

Remark 2.2. We call the process $P$ defined above Dirichlet-multinomial since, as will be seen in a moment, given any finite measurable partition $B_1, \dots, B_k$ of $\mathbb{R}$, the distribution of $(P(B_1), \dots, P(B_k))$ is a mixture of Dirichlet distributions with multinomial weights. This process must not be confused with the Dirichlet-multinomial point process of Lo [9, 10], whose marginal distributions are mixtures of multinomial distributions with Dirichlet weights.

It follows from the definition that if $P \in DM(m, w, P_0)$, then for every finite measurable partition $B_1, \dots, B_k$ of $\mathbb{R}$ and $(y_1, \dots, y_k) \in \mathbb{R}^k$,

\[ \Pr\bigl(P(B_1) \le y_1, \dots, P(B_k) \le y_k\bigr) = \int_{\mathcal{P}} D\bigl(y_1, \dots, y_k \mid \alpha_w(u, B_1), \dots, \alpha_w(u, B_k)\bigr)\, dH_m^*(u), \]

where $D(y_1, \dots, y_k \mid \alpha_1, \dots, \alpha_k)$ denotes the Dirichlet distribution function with parameters $(\alpha_1, \dots, \alpha_k)$ evaluated at $(y_1, \dots, y_k)$. With different notation, we may say that the vector $(P(B_1), \dots, P(B_k))$ has distribution

\[ \mathrm{Dirichlet}\Bigl(w\frac{M_1}{m}, \dots, w\frac{M_k}{m}\Bigr) \bigwedge_{(M_1, \dots, M_k)} \mathrm{multinomial}\bigl(m, (P_0(B_1), \dots, P_0(B_k))\bigr); \]

i.e., a mixture of Dirichlet distributions with multinomial weights.
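The mixture representation above translates directly into a two-stage sampler. The following is a minimal sketch, assuming NumPy is available; the function name `sample_dm_partition` and the numerical values of $m$, $w$ and the cell probabilities are illustrative choices and do not come from the paper. Cells with $M_j = 0$ receive mass zero, which is the degenerate case of the Dirichlet distribution.

```python
import numpy as np

def sample_dm_partition(m, w, p0_cells, size, rng):
    """Draw `size` vectors (P(B_1), ..., P(B_k)) for P in DM(m, w, P0).

    p0_cells holds (P0(B_1), ..., P0(B_k)); the name is hypothetical.
    """
    p0_cells = np.asarray(p0_cells, dtype=float)
    # Multinomial stage: M_j counts how many of the m draws from P0 fall in B_j.
    counts = rng.multinomial(m, p0_cells, size=size)
    # Dirichlet stage: normalized independent Gamma(w * M_j / m, 1) variables.
    shapes = w * counts / m
    gammas = np.zeros_like(shapes)
    positive = shapes > 0          # cells with M_j = 0 keep mass exactly 0
    gammas[positive] = rng.gamma(shape=shapes[positive], scale=1.0)
    return gammas / gammas.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
draws = sample_dm_partition(m=200, w=4.0, p0_cells=[0.2, 0.3, 0.5],
                            size=20000, rng=rng)
print(draws.mean(axis=0))  # Monte Carlo estimate of (P0(B_1), P0(B_2), P0(B_3))
```

Each row of `draws` is one realization of the vector of cell probabilities; the column means estimate the cell probabilities under $P_0$.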

For our purposes, the introduction of the Dirichlet-multinomial process is

justified by the following theorem.

Theorem 2.3. For every $m > 0$, let $P_m \in \mathcal{P}$ be a Dirichlet-multinomial process with parameters $(m, w, P_0)$. Then, as $m \to \infty$, $P_m$ converges in distribution to a Dirichlet process with parameter $wP_0$.

The result appears in [11] as well as in [13]. See also [8]. For ease of reference

we sketch a simple argument, inspired by [16], that we consider as a nice didactic

illustration of Prohorov’s Theorem.

Proof. Given any finite measurable partition $B_1, \dots, B_k$ of $\mathbb{R}$, the distribution of the vector $(P_m(B_1), \dots, P_m(B_k))$ converges weakly to a Dirichlet distribution with parameters $(wP_0(B_1), \dots, wP_0(B_k))$ as $m \to \infty$. In order to prove that $P_m$ converges weakly to a Dirichlet process with parameter $wP_0$ it is therefore enough to show that the sequence of measures induced on $(\mathcal{P}, \sigma(\mathcal{P}))$ by the processes $P_m$, $m = 1, 2, \dots$, is tight. Given $\epsilon > 0$, let $K_r$, $r = 1, 2, \dots$, be a compact subset of $\mathbb{R}$ such that $P_0(K_r^c) \le \epsilon / r^3$ and define

\[ M_r = \Bigl\{ P \in \mathcal{P} : P(K_r^c) \le \frac{1}{r} \Bigr\}. \]

The set $M = \bigcap_{r=1}^{\infty} M_r$ is compact in $\mathcal{P}$. For $m = 1, 2, \dots$ and $r = 1, 2, \dots$, $\mathrm{E}[P_m(K_r^c)] = P_0(K_r^c)$ and thus, by Markov’s inequality,

\[ \Pr\Bigl( P_m(K_r^c) > \frac{1}{r} \Bigr) \le r P_0(K_r^c) \le \frac{\epsilon}{r^2}. \]

Hence, for every $m = 1, 2, \dots$,

\[ \Pr(P_m \in M) \ge 1 - \sum_{r=1}^{\infty} \Pr\Bigl( P_m(K_r^c) > \frac{1}{r} \Bigr) \ge 1 - \epsilon \sum_{r=1}^{\infty} \frac{1}{r^2}. \]

Since the series converges and $\epsilon$ is arbitrary, the sequence is tight. □
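The finite-dimensional convergence invoked at the start of the proof can be checked by hand on second moments. The following worked computation is a sketch added for illustration only. It takes a single Borel set $B$ with $p = P_0(B)$ and writes $M$ for the number of sample points $X_i^*$ falling in $B$ (both symbols are introduced here), so that $M$ is binomial$(m, p)$ and, given $M$, $P_m(B)$ has a Beta$(wM/m, w(1 - M/m))$ distribution, degenerate at 0 or 1 when $M = 0$ or $M = m$.

```latex
\begin{align*}
\mathrm{E}[P_m(B)] &= \mathrm{E}[M/m] = p, \\
\mathrm{Var}(P_m(B))
  &= \mathrm{E}\bigl[\mathrm{Var}(P_m(B) \mid M)\bigr]
   + \mathrm{Var}\bigl(\mathrm{E}[P_m(B) \mid M]\bigr) \\
  &= \frac{1}{w+1}\,\mathrm{E}\Bigl[\frac{M}{m}\Bigl(1 - \frac{M}{m}\Bigr)\Bigr]
   + \frac{p(1-p)}{m} \\
  &= \frac{p(1-p)}{w+1}\Bigl(1 - \frac{1}{m}\Bigr) + \frac{p(1-p)}{m}
   = p(1-p)\,\frac{m+w}{m(w+1)}.
\end{align*}
```

As $m \to \infty$ the variance tends to $p(1-p)/(w+1)$, which is the variance of $P(B)$ when $P \in D(wP_0)$, in agreement with Theorem 2.3.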

3. Connections with the Proper Bayesian Bootstrap

Let $T : \mathcal{P} \to \mathbb{R}$ be a measurable function and $P \in D(wP_0)$ with $w > 0$, $P_0 \in \mathcal{P}$. It is often difficult to work out analytically the distribution of $T(P)$ even when $T$ is a simple statistical functional like the mean [6, 2]. However, when $P_0$

is discrete with finite support one may produce a reasonable approximation of

the distribution of T (P ) by a Monte Carlo procedure that obtains i.i.d. samples

from D(wP0 ). If P0 is not discrete, we propose to approximate the distribution of

T (P ) by the distribution of T (Pm ), where Pm is a Dirichlet-multinomial process

with parameters (m, w, P0 ) and m is large enough.

Of course, since the Continuous Mapping Theorem does not apply to every

function T, the fact that Pm converges in distribution to P does not always


imply that the distribution of T (Pm ) is close to that of T (P ). However, we

proved in [12] that this is in fact the case when T belongs to a large class of

linear functionals or when T is a quantile. In [12] we also tested by means of a

few numerical examples a bootstrap algorithm that generates an approximation

of the distribution of T (P ) in the following steps:

(1) Generate an i.i.d. sample $X_1^*, \dots, X_m^*$ from $P_0$.

(2) Generate an i.i.d. sample $V_1, \dots, V_m$ from a Gamma$(w/m, 1)$ distribution.

(3) Compute $T(P_m)$, where $P_m \in \mathcal{P}$ is defined by

\[ P_m = \frac{1}{\sum_{i=1}^{m} V_i} \sum_{i=1}^{m} V_i \, \delta_{X_i^*}. \]

(4) Repeat steps (1)–(3) $s$ times and approximate the distribution of $T(P)$ with the empirical distribution of the values $T_1, \dots, T_s$ generated at step (3).

It is easily seen that the probability distribution Pm generated in step (3)

is in fact a trajectory of the Dirichlet-multinomial process with parameters

(m, w, P0 ). We may therefore conclude that the previous algorithm aims at approximating the distribution of T (P ) by the distribution of T (Pm ), where Pm ∈

DM (m, w, P0 ), and approximates the latter by means of the empirical distribution of the values T1 , . . . , Ts generated in step (3).
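Steps (1)–(4) can be sketched in a few lines of code. The block below is a minimal illustration, assuming NumPy; the function names, the callback signatures, the choice of a standard normal $P_0$, the mean as the functional $T$, and the values of $m$, $w$ and $s$ are all assumptions made for the example and are not taken from [12].

```python
import numpy as np

def proper_bayesian_bootstrap(sample_p0, functional, m, w, s, rng):
    """Return s draws T_1, ..., T_s of T(P_m) with P_m in DM(m, w, P0).

    sample_p0(size, rng) -> `size` i.i.d. draws from P0 (hypothetical signature)
    functional(atoms, weights) -> T evaluated at the discrete distribution
    putting mass weights[i] on atoms[i] (hypothetical signature)
    """
    values = np.empty(s)
    for j in range(s):
        atoms = sample_p0(m, rng)                       # step (1)
        v = rng.gamma(shape=w / m, scale=1.0, size=m)   # step (2)
        weights = v / v.sum()                           # step (3): P_m
        values[j] = functional(atoms, weights)
    return values                                       # step (4)

def weighted_mean(atoms, weights):
    """The mean functional T(P) = integral of x dP(x) for a discrete P."""
    return float(np.dot(weights, atoms))

rng = np.random.default_rng(0)
draws = proper_bayesian_bootstrap(
    sample_p0=lambda size, rng: rng.standard_normal(size),
    functional=weighted_mean, m=500, w=5.0, s=2000, rng=rng)
print(draws.mean(), draws.var())
```

The empirical distribution of `draws` plays the role of the values $T_1, \dots, T_s$; any other functional of a weighted discrete distribution, such as a weighted quantile, could be passed in place of `weighted_mean`.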

Remark 3.1. Step (1) is unnecessary when $P_0$ is discrete with finite support $\{z_1, \dots, z_m\}$ and $P_0(z_i) = p_i$, $i = 1, \dots, m$, with $\sum_{i=1}^{m} p_i = 1$. In fact, in this case one may generate at step (3) a trajectory of $P \in D(wP_0)$ by taking

\[ P_m = \frac{1}{\sum_{i=1}^{m} V_i} \sum_{i=1}^{m} V_i \, \delta_{z_i}, \]

where $V_1, \dots, V_m$ are independent and $V_i$ has distribution Gamma$(wp_i, 1)$, $i = 1, \dots, m$.
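The construction in Remark 3.1 amounts to normalizing independent gamma variables. A minimal sketch follows, assuming NumPy and strictly positive $p_i$; the function name and the three-point support used in the example are illustrative assumptions.

```python
import numpy as np

def sample_dp_finite_support(probs, w, size, rng):
    """Draw `size` trajectories of P in D(w P0) for P0 = sum_i p_i delta_{z_i}.

    Row j of the result holds the masses (P(z_1), ..., P(z_m)) of trajectory j.
    All entries of probs are assumed strictly positive.
    """
    probs = np.asarray(probs, dtype=float)
    # Independent V_i with distribution Gamma(w * p_i, 1), one row per trajectory.
    v = rng.gamma(shape=w * probs, scale=1.0, size=(size, probs.size))
    return v / v.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
support = np.array([0.0, 1.0, 2.0])   # z_1, z_2, z_3 (example values)
probs = np.array([0.2, 0.3, 0.5])     # p_1, p_2, p_3 (example values)
weights = sample_dp_finite_support(probs, w=4.0, size=20000, rng=rng)
means = weights @ support             # T(P) for the mean functional
print(means.mean())                   # Monte Carlo estimate of E[T(P)]
```

Here no resampling from $P_0$ is needed: only the weights are random, and the functional is evaluated on the fixed support.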

We call the algorithm (1)–(4) the proper Bayesian bootstrap. To understand

the reason for this name consider the following situation. A sample X1 , . . . , Xn

from a process P ∈ D(kQ0 ), with k > 0 and Q0 ∈ P, has been observed and

the problem is to compute the posterior distribution of T (P ) where T is a

given statistical functional. Ferguson [4] proved that the posterior distribution of $P$ is again a Dirichlet process with parameter $kQ_0 + \sum_{i=1}^{n} \delta_{X_i}$. In order to approximate the posterior distribution of $T(P)$ our algorithm generates an i.i.d. sample $X_1^*, \dots, X_m^*$ from

\[ \frac{k}{k+n}\, Q_0 + \frac{n}{k+n} \Bigl( \frac{1}{n} \sum_{i=1}^{n} \delta_{X_i} \Bigr) \]

and then, in step (3), produces a trajectory of a process that, given $X_1^*, \dots, X_m^*$, is Dirichlet with parameter $(k+n)\, m^{-1} \sum_{i=1}^{m} \delta_{X_i^*}$ and evaluates $T$ with respect to this trajectory. The algorithm is therefore a bootstrap procedure since it

samples from a mixture of the empirical distribution function generated by


X1 , . . . , Xn and Q0 which, together with the weight k, elicits the prior opinions

relative to P. Because it takes into account prior opinions by means of a proper

distribution function, the procedure was termed proper.

The name proper Bayesian bootstrap also distinguishes the algorithm from

the Bayesian bootstrap of Rubin [15] that approximates the posterior distribution of $T(P)$ by means of the distribution of $T(Q)$ with $Q \in D(\sum_{i=1}^{n} \delta_{X_i})$. We

already noticed in the previous work [12] that there are no proper priors for P

which support Rubin’s approximation and that the proper Bayesian bootstrap

essentially becomes the Bayesian bootstrap of Rubin when k tends to 0 or n is

very large.
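For the posterior setting just described, step (1) draws from the mixture of $Q_0$ and the empirical distribution of the data, and the total mass of the Dirichlet parameter is $k + n$. The sketch below, assuming NumPy, illustrates this; the function names, the standard normal $Q_0$, the toy data set and the values of $k$, $m$ and $s$ are all hypothetical choices made for the example.

```python
import numpy as np

def posterior_proper_bootstrap(data, sample_q0, functional, k, m, s, rng):
    """Approximate the posterior law of T(P) given data, prior P in D(k Q0).

    sample_q0(size, rng) -> `size` i.i.d. draws from Q0 (hypothetical signature)
    functional(atoms, weights) -> T at the weighted discrete distribution
    """
    data = np.asarray(data, dtype=float)
    n = data.size
    values = np.empty(s)
    for j in range(s):
        # Step (1): each X_i^* comes from Q0 with probability k / (k + n),
        # otherwise it is resampled from the observed X_1, ..., X_n.
        from_prior = rng.random(m) < k / (k + n)
        atoms = rng.choice(data, size=m, replace=True)
        atoms[from_prior] = sample_q0(int(from_prior.sum()), rng)
        # Steps (2)-(3): gamma weights with total Dirichlet mass w = k + n.
        v = rng.gamma(shape=(k + n) / m, scale=1.0, size=m)
        values[j] = functional(atoms, v / v.sum())
    return values

def weighted_mean(atoms, weights):
    return float(np.dot(weights, atoms))

rng = np.random.default_rng(0)
data = np.array([1.0, 2.0, 3.0, 4.0, 5.0] * 4)   # toy sample, n = 20
post = posterior_proper_bootstrap(
    data, sample_q0=lambda size, rng: rng.standard_normal(size),
    functional=weighted_mean, k=5.0, m=500, s=2000, rng=rng)
print(post.mean())  # estimates the posterior mean n * xbar / (k + n) = 2.4 here
```

Letting $k$ tend to 0 in this sketch removes the draws from $Q_0$, so that only the observed data are resampled, which matches the remark above about Rubin's Bayesian bootstrap.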

Acknowledgements

We thank an anonymous referee for his helpful comments that greatly improved the paper. Both authors were financially supported by the Italian

MIUR’s research project Metodi Bayesiani nonparametrici e loro applicazioni.

References

1. C. Antoniak, Mixtures of Dirichlet processes with applications to Bayesian nonparametric problems. Ann. Statist. 2(1974), 1152–1174.

2. D. M. Cifarelli and E. Regazzini, Distribution functions of means of Dirichlet process.

Ann. Statist. 18(1990), No. 1, 429–442.

3. P. L. Conti, Bootstrap approximations for Bayesian analysis of Geo/G/1 discrete time

queueing models. J. Statist. Plann. Inference, 2002 (in press).

4. T. S. Ferguson, A Bayesian analysis of some nonparametric problems. Ann. Statist.

1(1973), No. 2, 209–230.

5. R. A. Fisher, A. S. Corbet, and C. B. Williams, The relation between the number

of species and the number of individuals in a random sample of animal population. J.

Animal Ecology 12(1943), 42–58.

6. R. C. Hannum, M. Hollander, and N. A. Langberg, Distributional results for

random functionals of a Dirichlet process. Ann. Prob. 9(1981), 665–670.

7. H. Ishwaran and L. F. James, Gibbs sampling methods for stick-breaking priors. J.

Amer. Statist. Assoc. 96(2001), 161–173.

8. H. Ishwaran and M. Zarepour, Exact and approximate sum-representations for the

Dirichlet process. Canad. J. Statist. 30(2002), 269–283.

9. A. Y. Lo, Bayesian statistical inference for sampling a finite population. Ann. Statist.

14(1986), No. 3, 1226–1233.

10. A. Y. Lo, A Bayesian bootstrap for a finite population. Ann. Statist. 16(1988), No. 4,

1684–1695.

11. P. Muliere and P. Secchi, A note on a proper Bayesian bootstrap. Technical Report

#18, Dip. di Economia Politica e Metodi Quantitativi, Università di Pavia, 1995.

12. P. Muliere and P. Secchi, Bayesian nonparametric predictive inference and bootstrap

techniques. Ann. Inst. Statist. Math. 48(1996), No. 4, 663–673.

13. J. Pitman, Some developments of the Blackwell–MacQueen urn scheme. Statistics, probability and game theory, 245–267, IMS Lecture Notes Monogr. Ser., 30, Inst. Math. Statist.,

Hayward, CA, 1996.


14. Yu. V. Prohorov, Convergence of random processes and limit theorems in probability

theory. Theory Probab. Appl. 1(1956), 157–214.

15. D. B. Rubin, The Bayesian bootstrap. Ann. Statist. 9(1981), No. 1, 130–134.

16. J. Sethuraman and R. C. Tiwari, Convergence of Dirichlet measures and the interpretation of their parameter. Statistical decision theory and related topics, III, Vol. 2 (West

Lafayette, Ind., 1981), 305–315, Academic Press, New York–London, 1982.

(Received 3.10.2002; revised 23.01.2003)

Authors’ addresses:

Pietro Muliere

Università L. Bocconi

Istituto di Metodi Quantitativi

Viale Isonzo 25, 20135 Milano

Italy

E-mail: pietro.muliere@uni-bocconi.it

Piercesare Secchi

Politecnico di Milano

Dipartimento di Matematica

Piazza Leonardo da Vinci 32, 20132 Milano

Italy

E-mail: secchi@mate.polimi.it