Outline Introduction Graph Construction Graph Learning

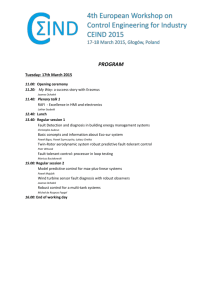

advertisement

Outline

Introduction

Graph Construction

Graph Learning

Robust Multi-Class Graph Transduction (RMGT)

Experiments

Robust Multi-Class Transductive Learning with Graphs

Wei Liu and Shih-Fu Chang

Columbia University

June 19, 2009

What is Semi-Supervised Learning (SSL)?

- In the narrow sense, SSL refers particularly to semi-supervised classification using labeled data and unlabeled data, which often includes transductive and inductive cases.

Figure: Narrow-sense semi-supervised learning. (Diagram labels: seen data marked + and −, unseen data, transductive learning, inductive learning.)

What is Semi-Supervised Learning (SSL)?

- In the wide sense, SSL covers all learning tasks where prior knowledge about a few data points is known and knowledge about the remaining data can be inferred. The knowledge may be labels, response values, vector representations, or pairwise relations.

Figure: Wide-sense semi-supervised learning. (Diagram labels: regression, clustering.)

Survey and Book

Xiaojin Zhu. Semi-Supervised Learning Literature Survey, Computer Sciences Technical Report 1530, University of Wisconsin-Madison, 2005.

Olivier Chapelle, Bernhard Schölkopf, and Alexander Zien. Semi-Supervised Learning, MIT Press, 2006.

Binary-Class SSL Setting

- A data set $X = \{x_1, \cdots, x_l, \cdots, x_n\} \subset \mathbb{R}^d$ in which the first $l$ samples are labeled and the remaining $u = n - l$ ones are unlabeled. Prior labels are saved in $y \in \mathbb{R}^n$ such that $y_i \in \{1, -1\}$ if $x_i$ is labeled and $y_i = 0$ if unlabeled. Use the graph Laplacian matrix $L$ or its normalized variant $\bar{L}$ to infer the overall labeling $f \in \mathbb{R}^n$.

- Graph Laplacian: $L = D - W$, where $W$ is the weight matrix of the graph $G(V, E, W)$ built on the dataset $X$, and $D_{ii} = \sum_j W_{ij}$.

- Normalized Graph Laplacian: $\bar{L} = D^{-1/2} L D^{-1/2}$.
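The two Laplacian definitions above can be sketched in a few lines of NumPy. This is only an illustration: the function name `graph_laplacians` and the small example weight matrix are assumptions, not part of the slides, and the sketch assumes every node has positive degree.

```python
import numpy as np

def graph_laplacians(W):
    """Return (L, L_norm) for a symmetric weight matrix W.

    L      = D - W                   (graph Laplacian)
    L_norm = D^{-1/2} L D^{-1/2}     (normalized graph Laplacian)
    Assumes every node has positive degree.
    """
    d = W.sum(axis=1)                      # degrees D_ii = sum_j W_ij
    L = np.diag(d) - W
    d_inv_sqrt = 1.0 / np.sqrt(d)
    L_norm = d_inv_sqrt[:, None] * L * d_inv_sqrt[None, :]
    return L, L_norm

# Hypothetical 3-node weighted graph.
W = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 2.0],
              [0.0, 2.0, 0.0]])
L, L_norm = graph_laplacians(W)
```

Each row of `L` sums to zero, and the diagonal of `L_norm` is all ones, which is a quick sanity check on any graph built this way.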

State of the Art

- Label Propagation: the key is the Laplacian-shaped regularizer.

Gaussian Fields and Harmonic Functions (GFHF), Zhu et al. 2003:

$$\min_f \; f^T L f \quad \text{s.t.} \quad f_l = y_l$$

Local and Global Consistency (LGC), Zhou et al. 2004:

$$\min_f \; \|f - y\|^2 + \mu f^T \bar{L} f$$

Quadratic Criterion (QC), Bengio et al. 2006:

$$\min_f \; \|f_l - y_l\|^2 + \mu f^T L f + \mu\epsilon \|f\|^2$$
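For intuition, the GFHF problem has the well-known harmonic solution $f_u = -L_{uu}^{-1} L_{ul} y_l$ when the labeled points are ordered first. The sketch below assumes NumPy, an invertible $L_{uu}$, and a hypothetical 5-node chain graph; the function name `gfhf` is illustrative.

```python
import numpy as np

def gfhf(W, y_l):
    """Harmonic solution of min f^T L f s.t. f_l = y_l.

    The first len(y_l) nodes of W are the labeled ones.
    Returns the predicted values f_u on the unlabeled nodes.
    """
    l = len(y_l)
    L = np.diag(W.sum(axis=1)) - W
    L_uu = L[l:, l:]
    L_ul = L[l:, :l]
    return np.linalg.solve(L_uu, -L_ul @ y_l)

# Chain A - m1 - m2 - m3 - B with unit weights.
# Node order: A (label +1), B (label -1), then m1, m2, m3.
W = np.zeros((5, 5))
for i, j in [(0, 2), (2, 3), (3, 4), (4, 1)]:
    W[i, j] = W[j, i] = 1.0
y_l = np.array([1.0, -1.0])
f_u = gfhf(W, y_l)   # linear interpolation between the two end labels
```

On this chain the unlabeled values interpolate linearly between the two labeled ends, so the sign of `f_u` splits the chain at its midpoint.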

Remarks

1. All these methods are closely related. I found that GFHF (Zhu et al.) gives more robust performance because it uses a hard constraint and has no trade-off parameters.

2. All these methods depend heavily on graph structures.

3. All these methods naturally generalize to multi-class problems.

Motivation

1. "Several graph-based methods listed here are similar to each other. They differ in the particular choice of the loss function and the regularizer. We believe it is more important to construct a good graph than to choose among the methods. However graph construction, as we will see later, is not a well studied area." (X. Zhu, the SSL survey, 2005.)

2. The two most commonly used kinds of graphs are the k-NN graph and the h-neighborhood graph. Empirically, a weighted k-NN graph with small k tends to perform better.

A Simple Toy Problem–Noisy Two Moons

Figure: Noisy two moons given two labeled points (legend: unlabeled, noise, labeled +1, labeled −1). We only have ground truth labels for the points on the two moons, so we evaluate classification performance on these on-manifold points.

A Simple Toy Problem–Noisy Two Moons

Figure: Error rates over unlabeled points. (a) LGC with 13.55% error rate using a 10-NN graph; (b) GFHF with 14.21% error rate using a 10-NN graph; (c) GFHF with zero error rate using a symmetry-favored 10-NN graph.

Illumination

- With the traditional k-NN graph, both LGC and GFHF make many errors, but GFHF achieves perfect results with the proposed symmetry-favored k-NN graph. This illustrates that graph quality is critical to SSL: the same SSL method can give very different results under different graph construction schemes.

k-NN Graph

- Let us define an asymmetric $n \times n$ matrix:

$$A_{ij} = \begin{cases} \exp\left(-\dfrac{d(x_i, x_j)^2}{\sigma^2}\right), & \text{if } j \in N_i \\ 0, & \text{otherwise} \end{cases} \qquad (1)$$

where the set $N_i$ saves the indexes of the $k$ nearest neighbors of point $x_i$ and $d(x_i, x_j)$ is some distance measure (e.g. Euclidean distance) between $x_i$ and $x_j$.

- The parameter $\sigma$ is empirically estimated by $\sigma = \sum_{i=1}^n d(x_i, x_{i_k})/n$, where $x_{i_k}$ is the $k$-th nearest neighbor of $x_i$. This estimate proved simple and sufficiently effective.
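A minimal sketch of eq. (1) and the $\sigma$ estimate, assuming NumPy, Euclidean distance, and that a point is never counted as its own neighbor (the slides do not state this explicitly). The function name `knn_affinity` and the toy 1-D data are illustrative only.

```python
import numpy as np

def knn_affinity(X, k):
    """Asymmetric k-NN affinity matrix A of eq. (1).

    Returns (A, nbrs, sigma), where nbrs[i] holds the index set N_i and
    sigma is the mean distance from each point to its k-th neighbor.
    """
    n = X.shape[0]
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))     # Euclidean d(x_i, x_j)
    np.fill_diagonal(dist, np.inf)              # exclude self (assumption)
    nbrs = np.argsort(dist, axis=1)[:, :k]      # k nearest neighbors N_i
    sigma = dist[np.arange(n), nbrs[:, k - 1]].mean()
    A = np.zeros((n, n))
    rows = np.repeat(np.arange(n), k)
    cols = nbrs.ravel()
    A[rows, cols] = np.exp(-dist[rows, cols] ** 2 / sigma ** 2)
    return A, nbrs, sigma

# Toy 1-D data set; the last point is far from the rest.
X = np.array([[0.0], [1.0], [2.5], [4.5], [9.0]])
A, nbrs, sigma = knn_affinity(X, k=2)
```

In this toy set the isolated point at 9.0 lists 4.5 among its two nearest neighbors, but not the other way round, so `A` is asymmetric as the slide describes.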

k-NN sGraph

- Let us define a symmetric $n \times n$ matrix:

$$W_{ij} = \begin{cases} A_{ij} + A_{ji}, & \text{if } j \in N_i \text{ and } i \in N_j \\ A_{ji}, & \text{if } j \notin N_i \text{ and } i \in N_j \\ A_{ij}, & \text{otherwise} \end{cases} \qquad (2)$$

Obviously, $W = A + A^T$ and $W$ is symmetric with $W_{ii} = 0$ (to avoid self loops). This weighting scheme favors the symmetric edges $\langle x_i, x_j \rangle$ such that $x_i$ is in the neighborhood of $x_j$ and $x_j$ is simultaneously in the neighborhood of $x_i$.
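To make the doubling of mutual edges concrete, the sketch below compares $W = A + A^T$ with the $\max\{A, A^T\}$ weighting of a traditional k-NN graph on a hypothetical 3-node, 1-NN affinity matrix. It assumes NumPy; the function name `sgraph` and the numbers in `A` are made up for illustration.

```python
import numpy as np

def sgraph(A):
    """Symmetry-favored weights of eq. (2): W = A + A^T with zero diagonal."""
    W = A + A.T
    np.fill_diagonal(W, 0.0)
    return W

# Hypothetical 1-NN affinities: nodes 0 and 1 pick each other (mutual edge),
# node 2 picks node 1 but is not picked back (one-directional edge).
A = np.array([[0.0, 0.9, 0.0],
              [0.9, 0.0, 0.0],
              [0.0, 0.4, 0.0]])

W_s = sgraph(A)                # k-NN sGraph
W_max = np.maximum(A, A.T)     # traditional symmetrization
```

The mutual edge (0, 1) gets weight 1.8 in `W_s` versus 0.9 in `W_max`, while the one-directional edge (1, 2) keeps weight 0.4 in both.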

Remark

1. The weights of the symmetric edges are doubled explicitly, on the reasonable consideration that two points connected by a symmetric edge are likely to lie on the same submanifold.

2. In contrast, the weighting scheme adopted by traditional k-NN graphs treats all edges in the same manner and defines the weighted adjacency matrix as $\max\{A, A^T\}$.

3. We call the graph constructed through eq. (2) the symmetry-favored k-NN graph, or k-NN sGraph for short. The proposed graph is relatively robust to noise as it reinforces the similarities between points on manifolds.

Comparison

Figure: 2-NN graph versus 2-NN sGraph. Thicker edges represent larger edge weights.

Graph Laplacian

- Given the constructed graph $G(V, E, W)$, the smooth semi-norm used in most graph-based approaches is

$$\|f\|_G^2 = \frac{1}{2} \sum_{i,j=1}^n (f(v_i) - f(v_j))^2 W_{ij} = f^T L f,$$

which gives rise to the graph Laplacian matrix

$$L = D - W. \qquad (3)$$

- The degree matrix $D \in \mathbb{R}^{n \times n}$ is a diagonal matrix such that $D_{ii} = \sum_{j=1}^n W_{ij}$. $D_{ii}$ approximates the local density of the neighborhood at $x_i$.
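The identity between the pairwise form of the semi-norm and the quadratic form $f^T L f$ is easy to check numerically. The snippet below is a sanity check on a random symmetric weight matrix, assuming NumPy; all variable names and the random data are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 6

# Random symmetric nonnegative weights with no self loops.
B = rng.random((n, n))
W = (B + B.T) / 2
np.fill_diagonal(W, 0.0)

f = rng.standard_normal(n)          # arbitrary function on the nodes

D = np.diag(W.sum(axis=1))          # degree matrix
L = D - W                           # graph Laplacian, eq. (3)

# (1/2) * sum_{i,j} (f_i - f_j)^2 W_ij
pairwise = 0.5 * np.sum(W * (f[:, None] - f[None, :]) ** 2)
# f^T L f
quadratic = f @ L @ f
```

The two numbers agree up to floating-point error for any symmetric `W`, which is why minimizing $f^T L f$ penalizes label differences across heavily weighted edges.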

Doubly-Stochastic Matrix

- Theorem 1 (in paper) implies that the smooth norm emphasizes neighborhoods of high density (large $D_{ii}$). However, sampling is usually not uniform in practice, so over-emphasizing high-density neighborhoods may occlude the information in sparse regions.

Figure: Non-uniform sampling.

Doubly-Stochastic Matrix

- To fully exploit the power of unlabeled data, no unlabeled point should be treated as lying in a sparse region. We therefore enforce the equal-degree constraint $D_{ii} = 1$ by setting $W\mathbf{1} = \mathbf{1}$, which, together with symmetry and nonnegativity, makes the adjacency matrix $W$ a doubly-stochastic matrix.

How to learn?

- We try to learn $W$ from training data without any presumed functional form. We only assume that $W$ is close to the initial $W_0$ calculated via eq. (2).

- We can infuse semi-supervised information into $W$. Consider the pair set

$$\mathcal{T} = \{(i, j) \mid i = j \text{ or } (x_i, x_j) \text{ differ in labels}\}$$

and define its matrix form $\mathbf{T}$. In particular, we require $W_{ij} = 0$ for $(i, j) \in \mathcal{T}$, or equivalently $\sum_{(i,j) \in \mathcal{T}} W_{ij} = 0$ since $W_{ij} \ge 0$. This constraint is intuitive since it removes self loops and erroneous edges.
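One way to materialize the pair set as a matrix is a 0/1 indicator mask. The sketch below assumes that encoding, plus a label vector in which `-1` marks unlabeled points; both conventions and the function name `differently_labeled_mask` are assumptions for illustration, since the slides do not spell out the matrix form.

```python
import numpy as np

def differently_labeled_mask(labels):
    """0/1 matrix with ones on the diagonal and on every pair of labeled
    points whose labels differ. Unlabeled points are marked with -1."""
    labels = np.asarray(labels)
    known = labels >= 0
    both_known = known[:, None] & known[None, :]
    differ = labels[:, None] != labels[None, :]
    T = both_known & differ
    np.fill_diagonal(T, True)          # self loops (i = j) are always in T
    return T.astype(float)

# Points 0 and 3 are in class 0, point 1 is in class 1, point 2 is unlabeled.
T = differently_labeled_mask([0, 1, -1, 0])
```

Here the mask flags the diagonal plus the pairs (0, 1) and (1, 3) in both orders; the same-class pair (0, 3) and every pair involving the unlabeled point 2 are left free.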

Learning W

- We formulate learning a doubly-stochastic $W$ subject to the differently labeled information $\mathcal{T}$ as follows:

$$\min_W \; G(W) = \frac{1}{2} \|W - W_0\|_F^2 \quad \text{s.t.} \quad \sum_{(i,j) \in \mathcal{T}} W_{ij} = 0, \; W\mathbf{1} = \mathbf{1}, \; W = W^T, \; W \ge 0 \qquad (4)$$

where $\|\cdot\|_F$ stands for the Frobenius norm. Eq. (4) is an instance of quadratic programming (QP).

Learning W

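- For efficient computation, we divide this QP problem into two convex sub-problems

$$\min_W \; G(W) = \frac{1}{2} \|W - W_0\|_F^2 \quad \text{s.t.} \quad \sum_{(i,j) \in \mathcal{T}} W_{ij} = 0, \; W\mathbf{1} = \mathbf{1}, \; W = W^T \qquad (5)$$

and

$$\min_W \; G(W) = \frac{1}{2} \|W - W_0\|_F^2 \quad \text{s.t.} \quad W \ge 0 \qquad (6)$$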

Learning W

- We find a simple solution to the sub-problem eq. (6): $W = \lceil W_0 \rceil_{\ge 0}$, in which the operator $\lceil W_0 \rceil_{\ge 0}$ zeros out all negative entries of $W_0$. The operator is essentially a conic subspace projection operator.

- We solve the sub-problem eq. (5):

$$W = P(W_0, \mathbf{T}) = W_0 - \left(t_0 + \frac{2 \cdot \mathbf{1}^T \mathbf{T} \mu_0}{|\mathcal{T}|}\right) \mathbf{T} + \mu_0 \mathbf{1}^T + \mathbf{1} \mu_0^T, \qquad (7)$$

where $P(W_0, \mathbf{T})$ behaves as an affine subspace projection operator. $t_0$ and $\mu_0$ are also computed based on $W_0$.

Successive Projection

- We tackle the original QP problem eq. (4) by successive projection using the two subspace projection operators.

- Von Neumann's successive projection lemma: the successive alternating projection process converges onto the intersection of the affine and conic subspaces. The lemma ensures that alternately solving the sub-problems eq. (5) and eq. (6) is theoretically guaranteed to converge to the globally optimal solution of the target problem eq. (4).

Algorithm 1. Doubly-Stochastic Adjacency Matrix Learning

INPUT: the initial adjacency matrix $W_0$,
       the differently labeled information $\mathbf{T}$,
       the maximum iteration number MaxIter.

LOOP: for $m = 1, \cdots,$ MaxIter
       $W_m = P(W_{m-1}, \mathbf{T})$
       if $W_m \ge 0$, stop LOOP;
       else $W_m = \lceil W_m \rceil_{\ge 0}$.

OUTPUT: $W = W_m$.
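The loop in Algorithm 1 can be sketched as follows. This is a hedged illustration, not the authors' implementation: because the slides do not define the quantities used in eq. (7), the affine projection here is obtained by solving a small linear system for the Lagrange multipliers of the constraints in eq. (5), assuming a symmetric input and a symmetric 0/1 mask. The function names, the tolerance `tol`, and the random test graph are all assumptions.

```python
import numpy as np

def affine_projection(W, T):
    """Project symmetric W onto {X = X^T, X 1 = 1, sum over T of X_ij = 0}.

    The projection has the form X = W - lam * T + mu 1^T + 1 mu^T; the
    multipliers (mu, lam) solve an (n + 1) x (n + 1) linear system.
    """
    n = W.shape[0]
    ones = np.ones(n)
    t_row = T @ ones                               # T 1
    K = np.zeros((n + 1, n + 1))
    K[:n, :n] = n * np.eye(n) + np.ones((n, n))    # row-sum constraint
    K[:n, n] = -t_row
    K[n, :n] = 2.0 * t_row                         # sum-over-T constraint
    K[n, n] = -T.sum()                             # |T| for a 0/1 mask
    rhs = np.concatenate([ones - W @ ones, [-(T * W).sum()]])
    sol = np.linalg.solve(K, rhs)
    mu, lam = sol[:n], sol[n]
    return W - lam * T + np.outer(mu, ones) + np.outer(ones, mu)

def learn_doubly_stochastic(W0, T, max_iter=1000, tol=1e-9):
    """Alternate the affine projection and the nonnegative clamp."""
    W = W0.copy()
    for _ in range(max_iter):
        W = affine_projection(W, T)
        if W.min() >= -tol:          # W >= 0 up to tolerance: stop
            break
        W = np.maximum(W, 0.0)       # zero out negative entries
    return W

# Hypothetical 6-node graph; nodes 0 and 3 carry different labels.
rng = np.random.default_rng(0)
n = 6
B = rng.random((n, n))
W0 = B + B.T
np.fill_diagonal(W0, 0.0)
T = np.eye(n)
T[0, 3] = T[3, 0] = 1.0
W = learn_doubly_stochastic(W0, T, max_iter=20000)
```

The tolerance replaces the exact test $W_m \ge 0$ because floating-point round-off leaves tiny negative entries where exact arithmetic would give zero.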

Two Rings Toy Problem

Figure: Two rings toy data.

Two Rings Toy Problem

Figure: (a) k-NN graph; (b) b-matching graph, with k = 10. The b-matching graph is a regular graph where each node has k adjacent nodes.

Two Rings Toy Problem

Figure: (c) unit-degree graph; (d) unit-degree graph given two labeled points. These two graphs have doubly-stochastic matrices learned based on the 10-NN sGraph. The former doesn't use the differently labeled information $\mathcal{T}$ (good enough!), while the latter does.

Merits of Doubly-Stochastic Matrix

- It offers a nonparametric form for $W$, flexibly representing data lying in compact clusters or intrinsic low-dimensional submanifolds.

- It is highly robust to noise: for example, when a noisy sample $x_j$ invades the neighborhood of $x_i$, the unit-degree constraint makes the weight $W_{ij}$ small compared to the weights between $x_i$ and its closer neighbors.

- It provides the "balanced" graph Laplacian with which the smooth norm penalizes label prediction functions on each sample (node) uniformly, resulting in uniform label propagation.

Goal

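- Solve for a soft label matrix $F \in \mathbb{R}^{n \times c}$ for any multi-class SSL task.

Figure: Provided $Y_l$, infer $F_u$. The soft label matrix is partitioned by rows as $F = [F_l; F_u]$, where $F_l$ corresponds to the known class assignment $Y_l$ and $F_u$ is unknown, and by columns as $F = [F_{\cdot 1}, F_{\cdot 2}, \ldots, F_{\cdot c}]$, with one column accounting for each class.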

Multi-Class Constraints

- It suffices to suppose the class posteriors for the labeled data to be $p(C_k|x_i) = Y_{ik} = 1$ if $x_i \in C_k$ and $p(C_k|x_i) = Y_{ik} = 0$ otherwise. Importantly, if we knew the class priors $\omega = [p(C_1), \cdots, p(C_c)]^T$ ($\omega^T \mathbf{1}_c = 1$) and regarded the soft labels $F_{ik}$ as $p(C_k|x_i)$, we would have the equation

$$\frac{\mathbf{1}^T F_{\cdot k}}{n} \simeq \sum_{i=1}^n \frac{p(C_k|x_i)}{n} = \sum_{i=1}^n p(x_i)\, p(C_k|x_i) = p(C_k) \qquad (8)$$

where the marginal probability density $p(x_i) \propto D_{ii} = 1$ is assumed to be $1/n$. Eq. (8) induces a hard constraint $\mathbf{1}^T F = n\omega^T$ ($F^T \mathbf{1} = n\omega$).

Multi-Class Label Propagation

- To address multi-class problems, our motivation is to let the soft labels $F_{ik}$ carry the main properties of $p(C_k|x_i)$. Hence, we impose two hard constraints $F^T \mathbf{1} = n\omega$ and $F \mathbf{1}_c = \mathbf{1}$ (due to $\sum_k p(C_k|x_i) = 1$; $\mathbf{1}_c$ is a $c$-dimensional 1-entry vector) to obtain a constrained multi-class label propagation:

$$\min_F \; \mathrm{tr}(F^T L F) \quad \text{s.t.} \quad F_l = Y_l, \; F \mathbf{1}_c = \mathbf{1}, \; F^T \mathbf{1} = n\omega \qquad (9)$$

Multi-Class Label Propagation

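- Eq. (9) reduces to

$$\min_{F_u} \; Q(F_u) = \mathrm{tr}(F_u^T L_{uu} F_u) + 2\,\mathrm{tr}(F_u^T L_{ul} Y_l) \quad \text{s.t.} \quad F_u \mathbf{1}_c = \mathbf{1}_u, \; F_u^T \mathbf{1}_u = n\omega - Y_l^T \mathbf{1}_l \qquad (10)$$

where $L_{uu}$ and $L_{ul}$ are sub-matrices of $L = \begin{bmatrix} L_{ll} & L_{lu} \\ L_{ul} & L_{uu} \end{bmatrix}$, and $\mathbf{1}_l$ and $\mathbf{1}_u$ are $l$- and $u$-dimensional 1-entry vectors, respectively.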

Multi-Class Label Propagation

- Theorem 2 (in paper) shows a closed-form solution to eq. (10). Unlike existing label propagation methods, the formulated multi-class label propagation succeeds in incorporating class priors.
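The slides do not reproduce Theorem 2, so the sketch below is not quoted from the paper. It works out one closed form directly from the Lagrangian conditions of eq. (10), under the assumptions that $L_{uu}$ is invertible, each row of $Y_l$ is one-hot, and $\omega$ sums to one: start from the harmonic solution $F_0 = -L_{uu}^{-1} L_{ul} Y_l$, whose rows already sum to one, and add a rank-one correction along $L_{uu}^{-1}\mathbf{1}_u$ that fixes the column sums. The function name and the random test graph are illustrative.

```python
import numpy as np

def multiclass_label_propagation(W, Y_l, omega):
    """Solve eq. (10) for F_u; the first l nodes of W are the labeled ones.

    F_u = F0 + z (r - F0^T 1_u)^T / (1_u^T z), with
    F0 = -L_uu^{-1} L_ul Y_l, z = L_uu^{-1} 1_u, r = n*omega - Y_l^T 1_l.
    """
    n = W.shape[0]
    l = Y_l.shape[0]
    L = np.diag(W.sum(axis=1)) - W
    L_uu, L_ul = L[l:, l:], L[l:, :l]
    ones_u = np.ones(n - l)
    F0 = np.linalg.solve(L_uu, -L_ul @ Y_l)    # harmonic part
    z = np.linalg.solve(L_uu, ones_u)          # L_uu^{-1} 1_u
    r = n * omega - Y_l.sum(axis=0)            # required column sums of F_u
    return F0 + np.outer(z, r - F0.sum(axis=0)) / (ones_u @ z)

# Hypothetical example: 6 nodes, 2 classes, one labeled point per class.
rng = np.random.default_rng(1)
n, c = 6, 2
B = rng.random((n, n))
W = B + B.T
np.fill_diagonal(W, 0.0)
Y_l = np.eye(c)
omega = np.array([0.5, 0.5])
F_u = multiclass_label_propagation(W, Y_l, omega)
pred = F_u.argmax(axis=1)                      # class of each unlabeled point
```

Both hard constraints hold by construction: every row of `F_u` sums to one, and the column sums equal $n\omega - Y_l^T \mathbf{1}_l$. Entries of `F_u` are not forced to be nonnegative, so they are best read as scores rather than probabilities.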

Flowchart of RMGT

Figure: The RMGT algorithm. (Flowchart components: input feature vectors and prior labels; k-NN sGraph; doubly-stochastic adjacency matrix learning; unit-degree graph; multi-class label propagation; global classification.)

Experimental Setup

Data            #Features   #Samples   #Classes
USPS (test)     256         2007       10
FRGC (subset)   4608        3160       316

Figure: Digit and face images.

RMGT: without graph adjacency matrix learning.

RMGT(W): with graph adjacency matrix learning.

Performance Curves

Figure: Performance curves. USPS: error rate versus number of labeled samples (20 to 100). FRGC: recognition rate versus number of labeled samples/100 (3 to 10). Compared methods: LGC, SGT, GFHF+CMN, RMGT, RMGT(W).

Conclusions

- All compared SSL algorithms achieve performance gains when switching from k-NN graphs to k-NN sGraphs.

- RMGT performs better than the other methods, demonstrating the success of multi-class label propagation with class priors.

- RMGT(W) is significantly superior to the others, showing that the proposed graph learning technique (doubly-stochastic adjacency matrix learning) boosts graph-based SSL performance.

Thanks!

For any questions, please email wliu@ee.columbia.edu.
