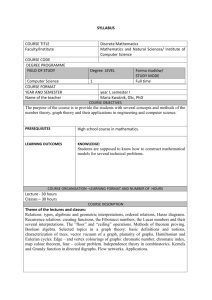

Probability and combinatorics Michael Anshelevich May 1, 2012 Texas A&M University


Probability spaces.

$(\Lambda, \mathcal{M}, P)$ = measure space.

Probability space: $P$ a probability measure, $P(\Lambda) = 1$.

Algebra $\mathcal{A} = L^\infty(\Lambda, P)$ of bounded random variables.

$E[X] = \int_\Lambda X \, dP$ = expectation functional on $\mathcal{A}$.

For each real-valued $X$, have $\mu_X$ = probability measure on $\mathbb{R}$ defined by

$$\int_{\mathbb{R}} f(x) \, d\mu_X(x) = \int_\Lambda f(X) \, dP = E[f(X)]$$

for $f \in C_0(\mathbb{R})$.

$\mu_X$ = distribution of $X$.

Independence.

More generally, if $X_1, X_2, \ldots, X_n$ are random variables,

$\mu_{X_1, X_2, \ldots, X_n}$ = measure on $\mathbb{R}^n$ = joint distribution.

Definition. $X$, $Y$ are independent if

$$\mu_{X,Y} = \mu_X \otimes \mu_Y \quad \text{(product measure)},$$

i.e.

$$E[f(X) g(Y)] = E[f(X)] \, E[g(Y)].$$

Remark. If $X$, $Y$ are independent, then

$$\int f(t) \, d\mu_{X+Y}(t) = \int f(x+y) \, d\mu_{X,Y}(x,y) = \int f(x+y) \, d(\mu_X \otimes \mu_Y)(x,y) = \int f(t) \, d(\mu_X * \mu_Y)(t).$$

So in this case, $\mu_{X+Y} = \mu_X * \mu_Y$.
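For finitely supported distributions the convolution in the remark is a finite double sum. The following is a minimal sketch, assuming a distribution is stored as a dictionary `{value: probability}`; the helper name `convolve` and the dice example are illustrative and not part of the slides.

```python
from fractions import Fraction

def convolve(mu, nu):
    """Convolution of two finitely supported laws given as {value: probability}.

    For independent X ~ mu and Y ~ nu this is the law of X + Y.
    """
    result = {}
    for x, p in mu.items():
        for y, q in nu.items():
            result[x + y] = result.get(x + y, 0) + p * q
    return result

# Illustrative example: one fair die, then the sum of two independent dice.
die = {k: Fraction(1, 6) for k in range(1, 7)}
two_dice = convolve(die, die)
```

Here `two_dice[7]` is the probability that two independent fair dice sum to 7, and the values of `two_dice` sum to 1.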

Fourier transform.

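Definition. Fourier transform

$$F_X(\theta) = \int e^{i\theta x} \, d\mu_X(x) = E[e^{i\theta X}].$$

Lemma. If $X$, $Y$ are independent,

$$F_{X+Y}(\theta) = F_X(\theta) F_Y(\theta).$$

Proof.

$$E[e^{i\theta(X+Y)}] = E[e^{i\theta X} e^{i\theta Y}] = E[e^{i\theta X}] \, E[e^{i\theta Y}].$$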
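The lemma can be checked numerically for finitely supported distributions. This is a rough sketch under the assumption that a law is a dictionary `{value: probability}`; the names `char_fn` and `convolve`, the coin and die examples, and the value of `theta` are all illustrative choices.

```python
import cmath

def char_fn(mu, theta):
    """F_X(theta) = E[exp(i * theta * X)] for a finitely supported law mu."""
    return sum(p * cmath.exp(1j * theta * x) for x, p in mu.items())

def convolve(mu, nu):
    """Law of X + Y for independent X ~ mu, Y ~ nu."""
    result = {}
    for x, p in mu.items():
        for y, q in nu.items():
            result[x + y] = result.get(x + y, 0) + p * q
    return result

coin = {-1: 0.5, 1: 0.5}               # symmetric +-1 coin
die = {k: 1 / 6 for k in range(1, 7)}  # fair die
theta = 0.7                            # arbitrary test frequency

lhs = char_fn(convolve(coin, die), theta)          # F_{X+Y}(theta)
rhs = char_fn(coin, theta) * char_fn(die, theta)   # F_X(theta) * F_Y(theta)
```

Up to floating-point rounding, `lhs` and `rhs` agree.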

Combinatorics.

$$F_X(\theta) = \int e^{i\theta x} \, d\mu_X(x) = \sum_{n=0}^\infty \frac{(i\theta)^n}{n!} m_n(X),$$

$$m_n = \int x^n \, d\mu_X(x) = E[X^n].$$

$\{m_0, m_1, m_2, \ldots\}$ = moments of $X$.

For $X$, $Y$ independent, $m_n(X + Y)$ is complicated. But:

$$F_{X+Y}(\theta) = F_X(\theta) F_Y(\theta),$$

$$\log F_{X+Y}(\theta) = \log F_X(\theta) + \log F_Y(\theta).$$

Denote $\ell_X(\theta) = \log F_X(\theta)$.

Cumulants.

$$\ell_X(\theta) = \sum_{n=1}^\infty \frac{(i\theta)^n}{n!} c_n,$$

$\{c_1, c_2, c_3, \ldots\}$ = cumulants of $X$.

For $X$, $Y$ independent, $c_n(X + Y) = c_n(X) + c_n(Y)$.

Relation between $\{m_n\}$, $\{c_n\}$?

A set partition:

$$\{(1, 3, 4), (2, 7), (5), (6)\} \in \mathcal{P}(7).$$
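To get a feel for the size of $\mathcal{P}(n)$, set partitions can be enumerated recursively. The sketch below is one possible enumeration (the function name `set_partitions` is an assumption of this example): the first element either joins one of the blocks of a partition of the remaining elements or forms a new block.

```python
def set_partitions(elements):
    """Yield every partition of the list `elements` as a list of blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing block in turn ...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ... or into a new block of its own.
        yield [[first]] + partition

# Number of partitions of {1, ..., n} for n = 0, ..., 7 (the Bell numbers).
partition_counts = [
    sum(1 for _ in set_partitions(list(range(1, n + 1)))) for n in range(8)
]
# partition_counts == [1, 1, 2, 5, 15, 52, 203, 877]
```

In particular $\mathcal{P}(7)$ has 877 elements, one of which is the partition shown above.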

Moment-cumulant formula.

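Proposition.

$$m_n = \sum_{\pi \in \mathcal{P}(n)} \prod_{B \in \pi} c_{|B|}.$$

$m_1 = c_1$, $\quad c_1 = m_1$ (mean)

$m_2 = c_2 + c_1^2$, $\quad c_2 = m_2 - m_1^2$ (variance)

$m_3 = c_3 + 3 c_2 c_1 + c_1^3$, $\quad c_3 = m_3 - 3 m_2 m_1 + 2 m_1^3$

$$\ell = \log F, \qquad F = e^\ell, \qquad F' = \ell' F.$$

$$m_{n+1} = \sum_{k=0}^n \binom{n}{k} c_{k+1} m_{n-k}.$$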
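The partition sum and the recursion can be compared directly on small cases. The sketch below assumes cumulants are passed as a list `c` with `c[k]` holding $c_k$ (index 0 unused); the function names and the two test sequences are illustrative. It uses the standard facts that a Poisson variable with parameter 1 has all cumulants equal to 1 and that a centered Gaussian has only $c_2 \neq 0$.

```python
from math import comb, prod

def set_partitions(elements):
    """Yield every partition of the list `elements` as a list of blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        yield [[first]] + partition

def moments_by_partitions(c, n_max):
    """m_n as the sum over set partitions of the product of c_{|B|} over blocks B."""
    moments = [1]  # m_0 = 1
    for n in range(1, n_max + 1):
        total = 0
        for partition in set_partitions(list(range(n))):
            total += prod(c[len(block)] for block in partition)
        moments.append(total)
    return moments

def moments_by_recursion(c, n_max):
    """m_{n+1} = sum_{k=0}^{n} C(n, k) c_{k+1} m_{n-k}, starting from m_0 = 1."""
    m = [1]
    for n in range(n_max):
        m.append(sum(comb(n, k) * c[k + 1] * m[n - k] for k in range(n + 1)))
    return m

poisson_cumulants = [0, 1, 1, 1, 1, 1, 1]   # c_k = 1 for all k >= 1
gaussian_cumulants = [0, 0, 2, 0, 0, 0, 0]  # only c_2 = v = 2 is nonzero

poisson_moments = moments_by_recursion(poisson_cumulants, 6)
gaussian_moments = moments_by_recursion(gaussian_cumulants, 6)
# poisson_moments == [1, 1, 2, 5, 15, 52, 203]
# gaussian_moments == [1, 0, 2, 0, 12, 0, 120]
```

Both functions return the same lists on these inputs; the partition sum grows quickly with `n_max`, so the recursion is the practical choice for larger orders.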

Central limit theorem.

Theorem. Let $\{X_n : n \in \mathbb{N}\}$ be independent, identically distributed, with mean 0 and variance $v$:

$$E[X_n] = 0, \qquad E[X_n^2] = v.$$

Let

$$S_n = \frac{X_1 + X_2 + \ldots + X_n}{\sqrt{n}}.$$

Then the moments of $S_n$ converge to the moments of the normal distribution $N(0, v)$.

Central limit theorem.

Proof. For each $k$,

$$c_k(\alpha X) = \alpha^k c_k(X).$$

$$c_k(S_n) = c_k\!\left(\frac{X_1 + X_2 + \ldots + X_n}{\sqrt{n}}\right) = \frac{n}{(\sqrt{n})^k} \, c_k(X_1).$$

($k = 1$) $c_1(X_1) = 0$, $c_1(S_n) = 0$.

($k = 2$) $c_2(X_1) = v$, $c_2(S_n) = v$.

($k > 2$) $\frac{n}{n^{k/2}} \to 0$, $c_k(S_n) \to 0$.

In the limit, get whichever distribution has

$$c_k = \begin{cases} v, & k = 2, \\ 0, & \text{otherwise.} \end{cases}$$

Check: normal distribution. Note $m_n = \sum_{\pi \in \mathcal{P}_2(n)} v^{n/2}$.
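The limiting moments count pair partitions (partitions into blocks of size two), and the convergence can be watched on a concrete case. The sketch below is illustrative only: it assumes the $X_i$ are fair $\pm 1$ coins (so $v = 1$), and the function names are made up for this example. For that choice the fourth moment of $S_n$ works out to $3 - 2/n$, which tends to $3 = |\mathcal{P}_2(4)| \, v^2$.

```python
from fractions import Fraction
from math import comb

def pair_partitions(elements):
    """Yield every partition of the list `elements` into blocks of size two."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for i, partner in enumerate(rest):
        remaining = rest[:i] + rest[i + 1:]
        for pairing in pair_partitions(remaining):
            yield [(first, partner)] + pairing

def normal_moment(n, v):
    """m_n of N(0, v): (number of pair partitions of n points) * v^(n/2)."""
    if n % 2:
        return 0
    count = sum(1 for _ in pair_partitions(list(range(n))))
    return count * v ** (n // 2)

def coin_sum_moment(k, n):
    """Exact E[S_n^k] for even k, where S_n = (X_1 + ... + X_n) / sqrt(n)
    and the X_i are independent fair +-1 coins (an assumption of this sketch)."""
    # X_1 + ... + X_n equals 2j - n with probability C(n, j) / 2^n.
    raw = sum(Fraction(comb(n, j), 2 ** n) * (2 * j - n) ** k for j in range(n + 1))
    return raw / Fraction(n) ** (k // 2)
```

For example, `coin_sum_moment(4, 10)` equals `Fraction(14, 5)`, while `normal_moment(4, 1)` equals 3.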

Operators.

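$H$ = real Hilbert space, e.g. $\mathbb{R}^n$.

$H_{\mathbb{C}}$ = its complexification ($\mathbb{C}^n$).

$H_{\mathbb{C}}^{\otimes n} = H_{\mathbb{C}} \otimes H_{\mathbb{C}} \otimes \ldots \otimes H_{\mathbb{C}}$

= symmetric tensor product

= $\mathrm{Span}(\{h_1 \otimes h_2 \otimes \ldots \otimes h_n, \text{ order immaterial}\})$

with the inner product

$$\langle h_1 \otimes \ldots \otimes h_n, g_1 \otimes \ldots \otimes g_n \rangle = \sum_{\sigma \in \mathrm{Sym}(n)} \langle h_1, g_{\sigma(1)} \rangle \ldots \langle h_n, g_{\sigma(n)} \rangle$$

(degenerate inner product).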

Creation and annihilation operators.

Symmetric Fock space

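$$\mathcal{F}(H_{\mathbb{C}}) = \bigoplus_{n=0}^\infty H_{\mathbb{C}}^{\otimes n} = \mathbb{C}\Omega \oplus H_{\mathbb{C}} \oplus H_{\mathbb{C}}^{\otimes 2} \oplus H_{\mathbb{C}}^{\otimes 3} \oplus \ldots,$$

$\Omega$ = vacuum vector.

For $h \in H$, define $a_h^+$, $a_h^-$ on $\mathcal{F}(H_{\mathbb{C}})$:

$$a_h^+(f_1 \otimes \ldots \otimes f_n) = h \otimes f_1 \otimes \ldots \otimes f_n,$$

$$a_h^-(f_1 \otimes \ldots \otimes f_n) = \sum_{i=1}^n \langle f_i, h \rangle \, f_1 \otimes \ldots \otimes \hat{f}_i \otimes \ldots \otimes f_n,$$

$$a_h^-(f) = \langle f, h \rangle \, \Omega,$$

creation and annihilation operators.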

Operator algebra.

Check: $a_h^- = (a_h^+)^*$ adjoint.

So $X_h = a_h^+ + a_h^-$ = self-adjoint.

$a^+$, $a^-$ do not commute:

$$a_h^- a_g^+ - a_g^+ a_h^- = \langle g, h \rangle.$$

But $X_h$, $X_g$ commute.

$\mathcal{A} = \mathrm{Alg}\{X_h : h \in H\}$ = commutative algebra.

Define the expectation functional on it by

$$E[A] = \langle A\Omega, \Omega \rangle.$$

$(\mathcal{A}, E)$ = probability space.
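In the simplest case these operators can be written out explicitly. The sketch below assumes $H = \mathbb{R}$ with a unit vector $h$, so that the Fock space has basis $e_n = h^{\otimes n}$ ($e_0 = \Omega$) and a vector is a list of coefficients. Under that assumption the definitions reduce to $a^+ e_n = e_{n+1}$ and $a^- e_n = n \, e_{n-1}$, with $a^- \Omega = 0$ taken as the convention for the empty sum. All function names are illustrative.

```python
def create(x):
    """a^+: e_n -> e_{n+1} (tensor with h on the left)."""
    return [0] + list(x)

def annihilate(x):
    """a^-: e_n -> n * e_{n-1} (n terms, each with <h, h> = 1); e_0 -> 0."""
    return [n * x[n] for n in range(1, len(x))] or [0]

def add(x, y):
    """Coefficient-wise sum of two vectors of possibly different lengths."""
    size = max(len(x), len(y))
    x = list(x) + [0] * (size - len(x))
    y = list(y) + [0] * (size - len(y))
    return [a + b for a, b in zip(x, y)]

def field_operator(x):
    """X_h = a^+ + a^- applied to the vector x."""
    return add(create(x), annihilate(x))

def commutator(x):
    """(a^- a^+ - a^+ a^-) x, which should equal <h, h> x = x."""
    return add(annihilate(create(x)), [-c for c in create(annihilate(x))])

def expectation(power):
    """E[X_h^power] = <X_h^power Omega, Omega>: the e_0 coefficient of X_h^power Omega."""
    vec = [1]  # Omega = e_0
    for _ in range(power):
        vec = field_operator(vec)
    return vec[0]
```

With these definitions `commutator([2, 0, 5])` returns `[2, 0, 5]`, and `[expectation(k) for k in range(7)]` gives `[1, 0, 1, 0, 3, 0, 15]`, the moments of $N(0, 1)$.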

Wick formula.

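$$E[X_{h_1} X_{h_2} \ldots X_{h_n}] = \left\langle (a_{h_1}^+ + a_{h_1}^-)(a_{h_2}^+ + a_{h_2}^-) \ldots (a_{h_n}^+ + a_{h_n}^-)\Omega, \Omega \right\rangle = \sum_{\pi \in \mathcal{P}_2(n)} \prod_{(i,j) \in \pi} \langle h_i, h_j \rangle.$$

Therefore:

$$c_k(X_h) = \begin{cases} \|h\|^2, & k = 2, \\ 0, & \text{otherwise,} \end{cases}$$

and so $X_h \sim N(0, \|h\|^2)$.

If $h \perp g$, then $X_h$, $X_g$ are independent.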
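The right-hand side of the Wick formula is a finite sum that can be evaluated directly. The following is a minimal sketch, assuming $H = \mathbb{R}^d$ with vectors given as tuples of numbers; the names `pair_partitions`, `inner` and `wick_expectation` and the sample vectors are illustrative.

```python
def pair_partitions(elements):
    """Yield every partition of the list `elements` into blocks of size two."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for i, partner in enumerate(rest):
        remaining = rest[:i] + rest[i + 1:]
        for pairing in pair_partitions(remaining):
            yield [(first, partner)] + pairing

def inner(h, g):
    """Standard inner product on R^d."""
    return sum(a * b for a, b in zip(h, g))

def wick_expectation(vectors):
    """E[X_{h_1} ... X_{h_n}] as the sum over pair partitions of products <h_i, h_j>."""
    total = 0
    for pairing in pair_partitions(list(range(len(vectors)))):
        term = 1
        for i, j in pairing:
            term *= inner(vectors[i], vectors[j])
        total += term
    return total

h = (1, 2)                 # ||h||^2 = 5
e1, e2 = (1, 0), (0, 1)    # orthogonal unit vectors

fourth_moment = wick_expectation([h, h, h, h])   # 3 * ||h||^4 = 75
mixed = wick_expectation([e1, e1, e2, e2])       # E[X_{e1}^2] * E[X_{e2}^2] = 1
```

The value of `mixed` factorizes as expected for independent $X_{e_1}$, $X_{e_2}$, and any product of an odd number of factors has expectation 0 because no pair partition exists.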
