This article appeared in a journal published by Elsevier. The... copy is furnished to the author for internal non-commercial research

advertisement

This article appeared in a journal published by Elsevier. The attached

copy is furnished to the author for internal non-commercial research

and education use, including for instruction at the authors institution

and sharing with colleagues.

Other uses, including reproduction and distribution, or selling or

licensing copies, or posting to personal, institutional or third party

websites are prohibited.

In most cases authors are permitted to post their version of the

article (e.g. in Word or Tex form) to their personal website or

institutional repository. Authors requiring further information

regarding Elsevier’s archiving and manuscript policies are

encouraged to visit:

http://www.elsevier.com/copyright

Author's personal copy

Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

Contents lists available at ScienceDirect

Journal of Statistical Planning and Inference

journal homepage: w w w . e l s e v i e r . c o m / l o c a t e / j s p i

Asymptotic properties of nonparametric M-estimation for mixing

functional data

Jia Chen∗ , Lixin Zhang

Department of Mathematics, Zhejiang University, Yuquan Campus, Hangzhou 310027, China

A R T I C L E

I N F O

A B S T R A C T

We investigate the asymptotic behavior of a nonparametric M-estimator of a regression function for stationary dependent processes, where the explanatory variables take values in some

abstract functional space. Under some regularity conditions, we give the weak and strong

consistency of the estimator as well as its asymptotic normality. We also give two examples

of functional processes that satisfy the mixing conditions assumed in this paper. Furthermore,

a simulated example is presented to examine the finite sample performance of the proposed

estimator.

© 2008 Elsevier B.V. All rights reserved.

Article history:

Received 19 February 2006

Received in revised form

20 May 2007

Accepted 1 May 2008

Available online 22 May 2008

MSC:

primary 62N02

62G09

secondary 62E20

Keywords:

-Mixing

Asymptotic normality

Consistency

Functional data

Nonparametric M-estimation

1. Introduction

The nonparametric regression techniques in modelling economic and financial time series data have gained a lot of attention

during the last two decades. Both estimation and specification testing have been systematically examined for real-valued i.i.d.

or weakly dependent stationary processes. See, for example, Robinson (1989), Härdle et al. (1997), Fan and Yao (2003) and the

references therein.

In this paper, we consider the case of mixing functional data. Let {Xi , Yi }∞

be a stationary process, where Yi are real-valued

i=1

random variables and Xi take values in some abstract functional space H (e.g. Banach or Hilbert space) with norm · and

the deduced distance d(u, v) = u − v, u, v ∈ H. In recent years, there is increasing interest in studying the limit theorems for

functional data. For example, Araujo and Gine (1980) and Vakashnia et al. (1987) extended certain probability limit theorems

to Banach-space-valued random variables. Gasser et al. (1998) considered density and mode estimation for Xi taking values in a

normed vector space. Ferraty and Vieu (2002) studied nonparametric regression estimation, and Ramsay and Silverman (2002)

gave an introduction to the basic methods of functional data analysis and provided diverse case studies in several areas including

criminology, economics and neurophysiology.

In this paper, we consider the following regression functional:

m(x) = E(Y1 |X1 = x),

∗

x ∈ H,

Corresponding author.

E-mail address: chenjiazju@yahoo.com.cn (J. Chen).

0378-3758/$ - see front matter © 2008 Elsevier B.V. All rights reserved.

doi:10.1016/j.jspi.2008.05.007

(1.1)

Author's personal copy

534

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

assuming that E|Y1 | < ∞. Masry (2005) established the asymptotic normality of the Nadaraya–Watson (NW) kernel estimator

m∗n (x) of m(x), which is defined by

m∗n (x) =

n

Yi K

i=1

d(x, Xi )

hn

n

i=1

K

d(x, Xi )

,

hn

(1.2)

where K(·) is a kernel function and hn is the bandwidth, which tends to zero as n tends to infinity. The NW estimator m∗n (x) can

be seen as a local least squares estimator, since it minimizes

n

K

i=1

d(x, Xi )

(Yi − )2

hn

with respect to .

Although NW estimators are central in nonparametric regression, it is known that they are not robust due to the fact that NW

estimators can be considered as local least squares estimators and the least squares estimators are not robust. For instance, they

are sensitive to outliers and do not perform well when the errors are heavy-tailed. However, outliers or aberrant observations are

observed very often in econometrics and finance as well as in many other applied fields. A treatment of outliers is an important

step in highlighting features of a data set. So in order to attenuate the lack of robustness of NW estimators, M-type regression

estimators are natural candidates for achieving desirable robustness properties. In this paper, we investigate the asymptotic

properties of the nonparametric M-estimator mn (x), which is implicitly defined as the solution to the following problem: find

∈ R to minimize

n

d(x, Xi )

K

(Yi − )

(1.3)

hn

i=1

or to satisfy the equation

n

K

i=1

d(x, Xi )

(Yi − ) = 0,

hn

(1.4)

where (·) is a given outlier-resistant loss function defined in R and (·) is its derivative. If the relevant function is (y)=y, then

the estimator mn (x) becomes the NW estimator m∗n (x). If (y) = sign(y), then the resulting estimator of (1.4) is a nonparametric

least absolute distance estimator of m(x). Many examples of choices of can be found in the book of Serfling (1980).

There is much literature on M-estimation for real-valued data. For example, Huber (1964) studied M-estimation of a location

parameter and obtained the asymptotic theory for the proposed estimator. Härdle (1984) established weak and strong consistency

as well as asymptotic normality of a nonparametric regression M-estimator for i.i.d. observations. Härdle (1989) considered the

asymptotic maximal deviation of the nonparametric M-smoother mn (x) towards the regression curve m(x), i.e. sup0 x 1 |mn (x)−

m(x)|. Boente and Fraiman (1989) proposed nonparametric M-estimators for regression and autoregression of mixing processes.

Beran (1991) studied M-estimation of a local parameter for Gaussian long-memory processes and Beran et al. (2003) investigated

the behavior of a nonparametric kernel M-estimator for a fixed design model with long-memory errors.

To the best of our knowledge, however, nonparametric M-estimation has not been developed for functional data. The purpose

of this paper is to investigate the nonparametric M-estimator mn (x), which is defined as the solution of Eq. (1.4), and to obtain its

weak and strong consistency as well as its asymptotic normality under -mixing dependence assumptions. From the asymptotic

normality, the asymptotic mean and variance of the error mn (x) − m(x) can be got.

The rest of the paper is organized as follows. In Section 2, we present the assumptions and main results. The derivations of the

main results are given in Section 3. Section 4 includes some examples of functional processes that satisfy the mixing conditions

in this paper as well as a simulated example. The simulation results show that the proposed M-estimator works well in practice.

Some useful lemmas are given in the Appendix.

2. Assumptions and main results

A stationary process {Xi , Yi }∞

is called -mixing if

i=1

(n) → 0 as n → ∞,

where

(i) =

sup

∞

A∈F0

−∞ ,B∈Fi

|P(AB) − P(A)P(B)|,

where Fba is the -algebra generated by {Xi , Yi }bi=a . -Mixing dependence is satisfied by many time series models (see Pham and

Tran, 1985 for more details). Hence, it is assumed by many authors in nonparametric inference for real-valued time series,

Author's personal copy

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

535

say Roussas (1990), Masry and Fan (1997) and Cai (2002). For limit theorems for -mixing sequences, we refer to Lin and Lu

(1996) and the references therein.

Define Di = d(x, Xi ) and i = Yi − m(Xi ). We first give the assumptions that are necessary in deriving the asymptotic properties

of the nonparametric M-estimator mn (x).

A1. K(·) is a nonnegative and bounded kernel function with compact support, say [0, 1].

A2. (·) is a convex function. Let (·) be the derivative of (·), then it is monotone and continuous almost everywhere.

A3. (i) F(u, x) := P(D1 u) = (u)f1 (x) as u → 0, where (0) = 0 and (u) is absolutely continuous in a neighborhood of 0 and

f1 : H −→ R is a nonnegative function;

(ii) supij P(Di u, Dj u) (u)f2 (x) as u → 0, where (u) → 0 as u → 0 and (hn )/ 2 (hn ) is bounded, f2 (·) is a nonnegative

function defined in H;

(iii) For i = 1, 2, Ii (hn ) := (hn / (hn )) 01 K i (v) (hn v) dv → Ki as n → ∞, whereK1 and K2 are two positive constants.

A4. Uniformly for s in a neighborhood of s = m(x),

(i) There exists a function 1 (·) defined in H, such that as |u| → 0,

E[(1 + u)|X1 = z] = 1 (z)u + o(u),

where 1 (z) is continuous in a neighborhood of x and 1 (x)0;

(ii) E|(Y1 − s)|r < ∞ for some r > 2 and if we let g1 (z, s) = E[|(Y1 − s)|2 |X1 = z] and g2 (z, s) = E[|(Y1 − s)|r |X1 = z], then g1 (z, s)

and g2 (z, s) are continuous in a neighborhood of x with respect to z. Furthermore, we have, for z in a neighborhood of x,

max{E[((1 + u) − (1 ))2 |X1 = z], E[|(1 + u) − (1 )|r |X1 = z]} 2 (|u|),

where 2 (u) is continuous at u = 0 with 2 (0) = 0;

(iii) gi,j (x1 , x2 ; s) = E[(Yi − s)(Yj − s)|Xi = x1 , Xj = x2 ], ij, is uniformly bounded in a neighborhood of (x1 , x2 ) = (x, x).

A5. |m(z) − m(x)| Cd (z, x) for z in a neighborhood of x, where is some positive constant.

∞ l [(l)]1−2/r < ∞, > 1 − 2/r.

A6.

l=1

For simplicity, we will write h instead of hn in the rest of the paper.

Remark 1. A1 is standard in nonparametric kernel estimation except that K(·) is a one-sided kernel on [0, 1]. This is because K(·)

takes the form K(d(x, Xi )/h) in our paper and d(x, Xi ) 0. Therefore, we assume that K(·) has a compact support [0, 1] as Masry

(2005). In A2, we impose some mild restrictions on (·). In Härdle (1984, 1989), (·) is assumed to be monotone, antisymmetric,

bounded and twice continuously differentiable. With contrast to their assumption, A2 is much milder and covers some wellknown special cases such as the least squares estimate (LSE), the least absolute distance estimate (LADE) and the mixed LSE and

LADE. Assumptions A3, A5 and A6 are the same as Conditions 1, 3 and 4 in Masry (2005). Gasser et al. (1998) also used the

condition F(u, x) = (u)f1 (x) as u → 0, and refer to f1 (·) as the functional probability density of X. For an insight of A3, we take

H=Rd as an example and assume that f1 (·) is the probability density function of X1 . Then, F(u, x) = C1 ud f1 (x) as u → 0, where

C1 is the volume of the unit ball in Rd . Let f2 (x) = supij fi,j (x, x), where fi,j (x, x) is the joint density of Xi and Xj at (x, x). Then,

supij P(Di u, Dj u) (C1 ud )2 f2 (x) as u → 0. So in this case, (u) = C1 ud and (u) = O(2 (u)) as u → 0. Furthermore, by an

elementary calculation, we know that Ii (h) = d 01 K i (v)vd−1 dv for i = 1, 2, which is obviously finite. Masry (2005) also gave an

alternative condition on F(u, x0 ) (i.e. 0 < c3 (h)f1 (x) F(h, x) c4 (h)f1 (x)) when calculating the variance of the proposed NW

estimator. A4 imposes some uniform continuity assumptions on certain conditional moments of (i + ·) and A4 (i) and (ii) are

similar to (M3) and (M4) in Bai et al. (1992). Assumption A6 concerns with the decaying rate of the mixing coefficient, which is

related to the moment order r in A4.

Our main results are stated as follows.

Theorem 1. Suppose that m(x) is an isolated root of E[(Y1 − )|X1 = x] = 0 and A1–A6 hold. Moreover, the bandwidth h satisfies

h → 0 and n(h) → ∞. Then, we have

P

mn (x) −→ m(x),

n → ∞.

(2.1)

Theorem 2. Assume that the assumptions of Theorem 1 hold. Besides, there exists a sequence of positive constants {An }, such that

An → ∞ and the quantities An , h and (l) satisfy

∞

n=1

A−r

n < ∞,

∞ An 1/2 1/2

n ([n1/2 ]) < ∞,

(h)

n=1

n2 (h)

A2n log2 n

→ ∞.

(2.2)

Author's personal copy

536

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

Then,

a.s.

mn (x) −→ m(x),

n → ∞.

(2.3)

Remark 2. To gain an insight as to what kind of h, (l) and An satisfy the assumptions above, let us suppose that An =

n1/2 (h)(log n)−2 , (l) = O(l− ), where > max{ 72 , ( + 1)/(1 − 2/r)} and h is chosen such that (h) = O(n−1/2(1/2−1/r) ). Then,

(2.2) and A6 hold.

Theorem 3. Assume that the conditions of Theorem 1 hold. Besides, there exists a sequence of positive integers {vn }, such that vn → ∞,

vn = o((n(h))1/2 ) and (n/ (h))1/2 (vn ) → 0 as n → ∞. Then, for all x that satisfy 1 (x)f1 (x)0, we have

d

(n(h))1/2 (mn (x) − m(x) − n ) −→ N(0, 21 (x)),

(2.4)

where

2 (m(x))

n =

Bn

,

1 (x)f1 (x)K1

Bn =

1

E[1 (Y1 − m(x))] = O(h ),

(h)

21 (x) =

(

1 (x)f1 (x)K1 )2

,

i = K

d(x, Xi )

,

h

2 (s) = g1 (x, s)f1 (x)K2

and K1 , K2 are defined in A3 and g1 (x, s) is defined in A4.

Remark 3. In Masry (2005), a smoothness condition on the regression function m(x) is also imposed, that is |m(u)−m(v)| Cd (u, v)

for all u, v ∈ H for some > 0, and the asymptotic normality of the NW type estimator m∗n (x) is given as

d

(n(h))1/2 (m∗n (x) − m(x) − B∗n ) → N(0, ∗ (x)),

where the bias term B∗n = O(h ), which is of the same order as n in (2.4).

3. Proofs of the main results

In order to avoid unnecessary repetitions, we suppose that all limits are taken as n tends to infinity except special notations

and the limits in the proofs of Theorems 1 and 2 hold uniformly in a neighborhood of s = m(x).

Proof of Theorem 1. Define i = K(d(x, Xi )/h), Hn (x, s) = (1/n(h)) ni=1 i (Yi − s) and H(x, s) = E[(Y1 − s)|X1 = x]f1 (x)K1 ,

where 1 (x) is defined in A4. We first prove

P

Hn (x, s) −→ H(x, s)

(3.1)

and then prove (2.1) by the monotonity and continuity of the function (·). We prove (3.1) by verifying the following two

statements:

P

Hn (x, s) − EHn (x, s) −→ 0

(3.2)

EHn (x, s) −→ H(x, s).

(3.3)

and

The validity of (3.3) is obvious by an elementary calculation. In fact, by A1, A3 and A4,

EHn (x, s) =

=

1

(h)

1

(h)

E1 (Y1 − s)

E(1 E[(Y1 − s)|X1 ])

= E[(Y1 − s)|X1 = x](1 + o(1))

1

E1

1

u

F(du, x)

= E[(Y1 − s)|X1 = x](1 + o(1))

K

(h)

h

1

h

= E[(Y1 − s)|X1 = x](1 + o(1))

f1 (x)

K(v) (hv) dv

(h)

0

−→E[(Y1 − s)|X1 = x]f1 (x)K1 = H(x, s).

(h)

(3.4)

Author's personal copy

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

537

To prove (3.2), we first show

n(h)Var[Hn (x, s)] −→ 2 (s),

(3.5)

where 2 (s) = g1 (x, s)f1 (x)K2 . Let Zn,i (s) = i (Yi − s), then

Hn (x, s) =

n

1 Zn,i (s)

n(h)

i=1

and

(n(h))1/2 (Hn (x, s) − EHn (x, s)) = 1

n

(Zn,i (s) − EZ n,i (s)).

n(h) i=1

(3.6)

Notice that

n(h)Var[Hn (x, s)] =

1

1

Var(Zn,1 ) +

(h)

n(h)

n

i,j=1 ij

Cov(Zn,i , Zn,j ).

(3.7)

By (3.4) and the definition of Zn,1 (s), we have

EZ n,1 (s) = O((h)).

(3.8)

By A5, we know that when d(X1 , x) h, m(X1 ) = m(x) + O(h ). Hence,

Var(Zn,1 ) = E[1 (Y1 − s)]2 + O(2 (h))

= E(21 E[2 (Y1 − s)|X1 ]) + O(2 (h))

1

= g1 (x, s)(1 + o(1))hf 1 (x)

K 2 (v) (hv) dv + O(2 (h)).

0

This, in conjunction with A3(iii) and (h) → 0, gives

1

(h)

Var(Zn,1 ) → 2 (s).

(3.9)

Next, we will show

n

i,j=1 ij

|Cov(Zn,i , Zn,j )| = o(n(h)).

(3.10)

Let 0 < an < n be a sequence of positive integers that tends to infinity and its value will be specified later. Then,

n

i,j=1 ij

|Cov(Zn,i , Zn,j )| 0<|i−j| an

|Cov(Zn,i , Zn,j )| +

|Cov(Zn,i , Zn,j )|

|i−j|>an

=: J1 + J2 .

(3.11)

In view of (3.8), A1, A3(ii) and A4(iii), we have

J1 =

0<|i−j| an

0<|i−j| an

0<|i−j| an

[|EZ n,i Zn,j | + (EZ n,1 )2 ]

[|Egi,j (Xi , Xj ; s)i j | + O(2 (h))]

[CP(Di h, Dj h) + O(2 (h))]

Cnan [f2 (x)(h) + O(2 (h))],

where C denotes a positive constant that may take different values in different places.

(3.12)

Author's personal copy

538

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

As for the covariances in J2 , we employ A4 and Corollary A.2 (Davydov's lemma) in Hall and Heyde (1980) and get

|Cov(Zn,i , Zn,j )| 8{E|(Y1 − s)1 |r }2/r [(|i − j|)]1−2/r

C{P(D1 h)}2/r [(|i − j|)]1−2/r

C{f1 (x)(h)}2/r [(|i − j|)]1−2/r .

Hence,

J2 C

{f1 (x)(h)}2/r [(|i − j|)]1−2/r

|i−j|>an

∞

Cn{f1 (x)(h)}2/r

[(l)]1−2/r

l=an +1

a

n

Cn{f1 (x)(h)}2/r

∞

l [(l)]1−2/r .

(3.13)

l=an +1

Now we choose an = [((h))−(1−2/r)/ ], then by (3.12) and (3.13), we have

J1 Cn((h))2−(1−2/r)/ and

∞

J2 Cn(h)

l [(l)]1−2/r .

l=an +1

By A6, we have

J1 = o(n(h))

(3.14)

J2 = o(n(h)).

(3.15)

and

Combining (3.11), (3.14) and (3.15), we get (3.10). By (3.7), (3.9) and (3.10), we know that (3.5) is true. Therefore,

1

= oP (1).

Hn (x, s) − EHn (x, s) = OP n(h)

This implies that (3.2) holds. Thus we have proved (3.1).

The remaining proof of Theorem 1 is rather standard in the context of M-estimation and we omit it here. For details, see Huber

(1964). Proof of Theorem 2. We first prove

a.s.

Hn (x, s) − EHn (x, s) −→ 0,

n → ∞,

(3.16)

a.s.

P

then the rest of the proof of mn (x) −→ m(x) is similar to that of mn (x) → m(x). To prove (3.16), we first employ a truncation method.

Let {An }↑∞ be the sequence that satisfies (2.2) in Theorem 2 and denote

A = A (Y − s) ,

Zn,i

i

i

HnA (x, s) =

n

1 A

Zn,i ,

n(h)

i=1

where A (y) = (y)I(|(y)| An ). Then,

A

|Hn (x, s) − EHn (x, s)| |Hn (x, s) − HnA (x, s)| + |HnA (x, s) − EHA

n (x, s)| + |EHn (x, s) − EHn (x, s)|.

Since

∞

−r

n=1 An < ∞, we have, for any > 0,

∞

n=1

P(|(Yn − s)| > An ) ∞

n=1

−r E|r (Y1 − s)|A−r

n < ∞.

(3.17)

Author's personal copy

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

539

By Theorem 4.2.1 in Galambos (1978), we know that

P

max {|(Yi − s)|} > An i.o. = 0.

1in

Therefore, for n sufficiently large,

Hn (x, s) = HnA (x, s) a.s.

(3.18)

Besides, by A3(iii), A4(ii), r > 2 and An → ∞, we have

|EHA

n (x, s) − EHn (x, s)| 1

(h)

An1−r

An1−r

E[|(Y1 − s)|I(|(Y1 − s)| > An )1 ]

1

(h)

1

(h)

E[|(Y1 − s)|r I(|(Y1 − s)| > An )1 ]

E[|(Y1 − s)|r 1 ]

= g2 (x, s)(1 + o(1))An1−r

1

(h)

= g2 (x, s)(1 + o(1))An1−r f1 (x)

E1

1

h

K(v) (hv) dv

(h) 0

→0.

(3.19)

A / (h)| A / (h), we now apply Lemma 1 in the Appendix and get for each q = 1, . . . , [n/2],

As |Zn,i

n

⎛

⎞

n

A

A

Z

Z

n,i

n,i ⎝

> n⎠

−E

P(|HnA − EHA

n | > ) = P (h) i=1 (h)

n

2 q

4An 1/2

,

4 exp − 2

q

+ 22 1 +

(h)

2q

8v (q)

(3.20)

where v2 (q) = 22 (q)/p2 + An /(2(h)), p = [n/2q] and

⎛

⎞

A

A

Zn,[(j+1)p]−[jp]

Zn,1

2

⎠,

+ ··· +

(q) = max Var ⎝

(h)

(h)

0 j 2q−1

which, by (3.5), is bounded above by Cp/ (h). If we choose q = [n1/2 ], then (3.20) is bounded above by

4 exp{−Cn1/2 (h)/An } + C

By n2 (h)/A2n log2 n → ∞ and

∞

An 1/2 1/2

n ([n1/2 ]).

(h)

∞

n=1 (An / (h))

1/2 1/2

n ([n1/2 ]) < ∞, we know

P(|HnA − EHA

n | > ) < ∞,

n=1

which, by the Borel–Cantelli lemma, implies HnA − EHA

n → 0 a.s. Combining this with (3.17)–(3.19), we get (3.16). A

A

Remark 4. Notice that if we choose q?n1/2 ( i.e. q/n1/2 → ∞), then by (3.20) we know that in order ∞

n=1 P(|Hn − EHn | > ) < ∞,

we need more rigid conditions on the mixing coefficient (l) but weaker conditions on the bandwidth h. For example, if

we choose q = [n2/3 ], then in order that the last term in (3.20) is summable, it suffices that n((h)/An log n)3/2 → ∞ and

∞

1/2 2/3

n ([n1/3 ]) < ∞. Hence, there is a tradeoff between the bandwidth h and the mixing coefficient (l). In the

n=1 (An / (h))

proof of Theorem 2, we just choose q = [n1/2 ].

Proof of Theorem 3. We first prove that for any c > 0,

n

i [(Yi − s) − (Yi − m(x)) + (Yi − m(x))(s − m(x))]

sup

1/2

(n(h)) |s−m(x)| c i=1

1

2

− (m(x) − s) 1 (x)f1 (x)K1 n(h) = oP (1).

2

Author's personal copy

540

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

As (·) is convex, we have

i |(Yi − s) − (Yi − m(x)) + (Yi − m(x))(s − m(x))|

i |s − m(x)||(Yi − s) − (Yi − m(x))|

= i |s − m(x)||(i + m(Xi ) − s) − (i + m(Xi ) − m(x))|

i |s − m(x)|[|(i + m(Xi ) − s) − (i )| + |(i + m(Xi ) − m(x)) − (i )|].

(3.21)

By A5, we know that when (n(h))1/2 |s − m(x)| c and d(x, Xi ) h, we have

|m(Xi ) − s| |m(Xi ) − m(x)| + |m(x) − s| Ch + (n(h))−1/2 c

(3.22)

|m(Xi ) − m(x)| Ch .

(3.23)

and

Let ni = i |(i + m(Xi ) − m(x)) − (i )|, then by Theorem 17.2.2 in Ibragimov and Linnik (1971) and A6, we have

⎞

⎛

n

n

ni ⎠ nE2n1 + Cn

|Cov(n1 , ni )|

Var ⎝

i=1

i=2

n

nE2n1 + Cn [(i)]1−2/r (E|n1 |r )2/r

i=1

2

nEn1 + Cn(E|n1 |r )2/r .

(3.24)

Furthermore, by A3, A4 and (3.23), we have

E2n1 = E[E(2n1 |X1 )] CE[21 2 (|m(X1 ) − m(x)|)] C (h)

2 (h ).

Similarly, we have

E|n1 |r C (h)

2 (h ).

Therefore, by (3.24), we know that

⎞

⎛

n

ni ⎠ = O(n(h)

2 (h )) = o(n(h)).

Var ⎝

(3.25)

i=1

If we let ni = |(i + m(Xi ) − s) − (i )|, then by (3.22), we can similarly prove

⎛

⎞

n

Var ⎝

ni ⎠ = O(n(h)

2 (h + (n(h))−1/2 )) = o(n(h)).

(3.26)

i=1

From (3.21), (3.25) and (3.26), we know that the following equation holds uniformly for (n(h))1/2 |s − m(x)| c,

⎛

⎞

n

Var ⎝

i [(Yi − s) − (Yi − m(x)) + (Yi − m(x))(s − m(x))]⎠ = o((s − m(x))2 n(h)) = o(1).

i=1

This implies that

n

{i [(Yi − s) − (Yi − m(x)) + (Yi − m(x))(s − m(x))]

i=1

− E(i [(Yi − s) − (Yi − m(x)) + (Yi − m(x))(s − m(x))])}

= oP (1)

(3.27)

uniformly for (n(h))1/2 |s − m(x)| c. Moreover, by Lemma 1 in Bai et al. (1992), we have

E[(1 + u) − (1 )|X1 = z] = 12 1 (z)u2 + o(u2 )

as |u| → 0.

Author's personal copy

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

541

By (3.22) and (3.23), we know that

n

E(i [(Yi − s) − (Yi − m(x))]) = nE(1 E[(Yi − s) − (Yi − m(x))|X1 ])

i=1

1

nE(1 1 (X1 )[(m(Xi ) − s)2 − (m(X1 ) − m(x))2 ])(1 + o(1))

2

1

= (m(x) − s)nE[1 1 (X1 )(2m(X1 ) − s − m(x))](1 + o(1))

2

=

(3.28)

holds uniformly for (n(h))1/2 |s − m(x)| c. On the other hand,

(s − m(x))

n

E[i (Yi − m(x))] = (s − m(x))nE[1 1 (X1 )(m(X1 ) − m(x))](1 + o(1)).

(3.29)

i=1

Combining (3.28) with (3.29), we get

E

n

(i [(Yi − s) − (Yi − m(x)) + (Yi − m(x))(s − m(x))])

i=1

1

(m(x) − s)2 nE[1 1 (X1 )](1 + o(1))

2

1

= (m(x) − s)2 1 (x)f1 (x)K1 n(h)(1 + o(1)).

2

=

(3.30)

By (3.27) and (3.30), we know that

n

1

2

= oP (1)

[

(Y

−

s)

−

(Y

−

m(x))

+

(Y

−

m(x))(s

−

m(x))]

−

(x)f

(x)K

n

(h)

(m(x)

−

s)

i

i

i

i

1

1

1

2

i=1

holds uniformly for (n(h))1/2 |s − m(x)| c. As

n

i=1 i [(Yi − s) − (Yi − m(x)) + (Yi − m(x))(s − m(x))] is convex in s, and

1 (m(x) − s)2 (x)f (x)K n(h) is continuous and convex in s, by Theorem 10.8 of Rockafellar (1970), we have

1

1

1

2

n

sup

i [(Yi − s) − (Yi − m(x)) + (Yi − m(x))(s − m(x))]

1/2

(n(h)) |s−m(x)| c i=1

1

2

− (m(x) − s) 1 (x)f1 (x)K1 n(h) = oP (1).

2

By arguments similar to the proof of (3.31) and by Theorem 2.5.7 of Rockafellar (1970), we have

n

1

sup

i [(Yi − s) − (Yi − m(x))]

n(h) i=1

(n(h))1/2 |s−m(x)| c + n(h)

1 (x)f1 (x)K1 (s − m(x)) = oP (1).

(3.31)

(3.32)

Denote n = mn (x) − m(x) and

n =

n

1

(

(x)f1 (x)K1 )−1

i (Yi − m(x)) = (

1 (x)f1 (x)K1 )−1 Hn (x, m(x)).

n(h) 1

i=1

Using the same technique as that used in the proof of Theorem 2.4 in Bai et al. (1992), we obtain

n(h)|n − n | = oP (1).

(3.33)

Hence, in order to prove Theorem 3, it remains for us to prove

d

(n(h))1/2 (Hn (x, m(x)) − EHn (x, m(x))) −→ N(0, 2 (m(x))),

(3.34)

EHn (x, m(x)) = Bn = O(h ).

(3.35)

and

Author's personal copy

542

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

Eq. (3.35) is obvious. In fact

|Bn | 1

(h)

1

=

(h)

E(1 |E[(Y1 − m(x))|X1 ]|)

E(1 |

1 (X1 )(m(X1 ) − m(x))|)

Ch |

1 (x)|f1 (x)

= O(h ).

1

h

K(u) (hu) du

(h) 0

Next, we will prove (3.34). Let Zn,i (m(x)) = (1/ (h))(Zn,i (m(x)) − EZ n,i (m(x))), then

n

1 Zn,i (m(x)).

(n(h))1/2 (Hn (x, m(x)) − EHn (x, m(x))) = √

n

i=1

For simplicity, we will write Zn,i instead of Zn,i (m(x)). It is easy to see that (3.34) is equivalent to

n

1 d

Zn,i −→ N(0, 2 (m(x))).

√

n

(3.36)

i=1

To show this, we adopt the small- and large-block technique. Namely, partition {1, . . . , n} into 2kn + 1 subsets with small-block

of size v = vn , which is defined in Theorem 3, and large-block of size u = un and kn = [n/(un + vn )], where [a] denotes the integer

part of a. For the choice of un , note that by the conditions in Theorem 3, there exists a sequence of positive integers {qn } such that

qn → ∞, qn vn = o((n(h))1/2 ) and qn (n/ (h))1/2 (vn ) → 0. Let un = [(n(h))1/2 /qn ], then

vn

→ 0,

un

un

→ 0,

n

un

(n(h))

1/2

→ 0,

n

(vn ) → 0.

un

(3.37)

Define

j(u+v)+u

j =

Zn,i ,

j = 0, . . . , kn − 1,

i=j(u+v)+1

(j+1)(u+v)

j =

Zn,i ,

j = 0, . . . , kn − 1

i=j(u+v)+u+1

and

n

kn =

Zn,i ,

i=kn (u+v)+1

then

n

i=1 Zn,i =

kn −1

j=0

j +

kn −1

j=0

j + kn =: Qn1 + Qn2 + Qn3 . We will show (3.36) via

1 2

EQ → 0,

n n2

1 2

EQ → 0,

n n3

k

n −1

−1/2

−1/2

E exp(itn

Q

)

−

E

exp(itn

)

n1

j → 0,

j=0

(3.38)

(3.39)

(3.40)

kn −1

1 E2j → 2 (m(x))

n

(3.41)

kn −1

√

1 E2j I(|j | (m(x)) n) → 0

n

(3.42)

j=0

and

j=0

for any > 0.

Author's personal copy

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

543

As for (3.38) and (3.39), we only prove (3.38), since the proof of (3.39) is similar. By stationarity,

EQ 2n2 = kn E20 +

k

n −1

i,j=0 ij

Cov(i , j )

=: I1 + I2 .

(3.43)

By (3.37), the proofs of (3.9) and (3.10) and the definition of kn , we know

⎤

⎡

v

2

I1 = kn ⎣vEZ +

Cov(Zn,i , Z )⎦

n,1

n,j

i,j=1 ij

= kn [v(2 (m(x)) + o(1)) + o(v)]

= kn v[2 (m(x)) + o(1)] = o(n).

(3.44)

Note that

k

n −1

|I2 | (i+1)(u+v)

(j+1)(u+v)

i,j=0 ij l1 =i(u+v)+u+1 l2 =j(u+v)+u+1

|Cov(

Zn,l , Zn,l )|.

1

2

For l1 ∈ {i(u + v) + u + 1, i(u + v) + u + 2, . . . , (i + 1)(u + v)} and l2 ∈ {j(u + v) + u + 1, j(u + v) + u + 2, . . . , (j + 1)(u + v)}, ij, it is

obvious that |l1 − l2 | u. Hence,

|I2 | n

i,j=1 |j−i| u

|Cov(

Zn,i , Zn,j )| = o(n).

(3.45)

In view of (3.43)–(3.45), (3.38) holds.

As for (3.40), by Lemma 2 in the Appendix, we know that

k

n −1

−1/2

−1/2

E exp(itn

Q

)

−

E

exp(itn

)

n1

j 16kn (vn ),

j=0

which tends to zero by (3.37).

A simple computation gives

kn −1

k n un 2

1 [ (m(x)) + o(1)],

E2j =

n

n

j=0

which implies (3.41) by kn un /n → 1.

It remains for us to prove (3.42). As is not necessarily bounded, we employ a truncation method. Denote L (·)=(·)I(|(·)| L)

Z L = (Z L − EZ L )/ (h) and L , j = 0, . . . , kn − 1, be large-block with Zn,i replaced by Z L . By

and define Z L = i (Yi − m(x)), L

n,i

n,i

n,i

n,i

the boundedness of K(·), we have

|Lj | C un

(h)

j

√

= o( n).

n,i

(3.46)

Let 2L (s) = E[2L (Y1 − s)|X1 = x]K2 f1 (x), then by (3.46), we have

k−1

√

1 L2

Ej I(|Lj | L n) → 0

n

for any > 0.

j=0

It is obvious that (3.38)–(3.41) still hold when we substitute with L in each expression. As a result,

kn −1

1 d

Lj −→ N(0, 2L (m(x))).

√

n

j=0

L

Let j = j − Lj . Reminding (3.47), we only need to show

⎞2

⎛

kn −1

L

1 ⎝

j ⎠ → 0

E

n

j=0

(3.47)

Author's personal copy

544

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

as first n → ∞ and then L → ∞. In fact, a calculation similar to the proof of (3.38) gives

⎛

⎞2

kn −1

kn −1

L

L

L L

1 ⎝

k

1 E

j ⎠ = n E(1 )2 +

Cov(i , j )

n

n

n

j=0

i,j=0 ij

2

kn u

E[L (Y1 − m(x))|X1 = x]f1 (x)K2 (1 + o(1)) + o(1)

=

n

2

n→∞

−→ f1 (x)K2 E[L (Y1 − m(x))|X1 = x]

L→∞

−→ 0,

where L = − L . Thus, the proof of Theorem 3 is completed. Remark 5. Beran et al. (2003) studied the following fixed design model

yi = g(ti ) + i ,

ti =

i

, i = 1, 2, . . . , n

n

with long-memory errors i and got the order O(h2 ) for the bias of the proposed nonparametric M-estimator g(t). In their paper,

1

K(·) was assumed to be a symmetric kernel on [−1, 1], so −1

uK(u) du = 0. After applying the third order Taylor expansion to g(ti ),

g(t) − g(t). However, as H is an abstract functional space in our paper, we do not apply Taylor expansion

O(h2 ) was obtained for E

directly to m(Xi ). By imposing the smoothness condition A5 on the regression function m(·), we get

1

h

K(u) (hu) du = O(h ).

Emn (x) − m(x) = n = O(Bn ) = O h 1 (x)f1 (x)

(h) 0

4. Examples and numerical implementation

We first give two examples that satisfy the -mixing conditions assumed in this paper. The first one is a multivariate process

and the second one is a sequence of random curves (or functions).

Example 1. Let H = Rd . For x = (x1 , . . . , xd ) ∈ Rd , x is defined as x =

x21 + · · · + x2d , which is the Euclid norm of x. For any

d × d matrix A, A = supx=1 Ax. Suppose that {Xt } is a multivariate MA(∞) process defined by

Xt =

∞

Ai t−i ,

(4.1)

i=0

where Ai are d × d matrices and An → 0 exponentially fast as n → ∞, t are i.i.d. Rd -valued random vectors with mean 0. If

the probability density function of t exists (such as multivariate normal, exponential, and uniform distribution), then by Pham

and Tran (1985), {Xt } is -mixing with (n) → 0 exponentially fast. It can be easily verified that A5 holds. If we choose An such

∞

2

1/2 1/2

−r

2

2

that ∞

n ([n1/2 ]) < ∞. So the conditions in (2.2) are satisfied.

n=1 An < ∞ and n (h)/An log n → ∞, then

n=1 (An / (h))

Besides, if we let (h) = O(n− ), 0 < < 1, and v = O(n(1−)/3 ), then (n/ (h))1/2 (v ) → 0, which implies that the assumptions

n

concerning the -mixing coefficient in Theorem 3 are satisfied.

n

Example 2. Assume that {Vt } is a real-valued autoregressive moving average (ARMA) process, then it admits a Markovian

representation

Vi = HZ i ,

Zi = FZ i−1 + Gei ,

(4.2)

where Zi are random variables, H, F, G are appropriate constants and et are i.i.d. random variables with density function g(·). If

|F| < 1, E|e1 | < ∞ and |g(x) − g(x − )| dx = O(|| ) for some > 0 and > 0, then by Pham and Tran (1985), {Vt } is an -mixing

process with (n) → 0 at an exponential rate. Let Xi (t) = h(Vi t), where h(·) is some real-valued function, then {Xi (t)} is a sequence

of -mixing random curves with exponentially decaying mixing rate. Hence, for suitably chosen h and vn , the -mixing conditions

in Theorem 3 hold.

Although there are cases of time series that are mixing, the mixing condition is difficult to check in general. So in the context

of asymptotic theory of statistics, it is usually a common practice to assume the mixing property as a precondition.

Numerical implementation: Next, we will give a numerical example to show the efficiency of the M-estimation discussed in

this paper. We choose H = C[0, 1], which is the space composed of all of the continuous functions defined in [0, 1]. We consider

Author's personal copy

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

545

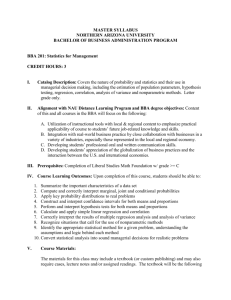

Table 1

The average MADE of mn and m∗n

Error distribution

n

mn

m∗n

(i)

(i)

(ii)

(ii)

(iii)

(iii)

300

800

300

800

300

800

0.1073 (0.0102)

0.0919 (0.0068)

0.1206 (0.0121)

0.0997 (0.0081)

0.2843 (0.0636)

0.3122 (0.0618)

0.2152 (0.0221)

0.1967 (0.0183)

0.5029 (0.0510)

0.4308 (0.0385)

6.3254 (12.3969)

6.4584 (11.0093)

the random curves Xi (t) = exp(2Wi t), 0 t 1, where Wi are random variables generated by

Wi = 0.1Wi−1 + ei ,

ei ∼ N(0, 1).

By Example 2, we know that {Xi (t)} is a sequence of -mixing random curves with exponentially decaying mixing rate.

Consider the following model:

Yi = m(Xi ) + i ,

m(x) = 4

1

(x (t))2 dt,

(4.3)

0

which was also used in Laksaci et al. (2007). In view of the smoothness of the random curves Xi (t), we choose the distance in H

as d(x, y) = ( 01 (x (t) − y (t))2 dt)1/2 for x = x(t) ∈ H and y = y(t) ∈ H. For more discussion on the choices of distance in functional

nonparametric estimation, see Ferraty and Vieu (2006).

In this example, we apply the quadratic kernel function K(u) = 32 (1 − u2 )I(0 u 1). In order to compare the performance of

the proposed M-estimator mn with the NW estimator m∗n , we consider the following different distributions of the errors i ,

(i) standard normal distribution: i ∼ N(0, 1);

(ii) contaminated errors: i ∼ 0.4N(0, 22 ) + 0.9N(0, 1);

(iii) Cauchy distribution: i ∼ C[0, 1].

Furthermore, we choose (y) = max{−1.75, min{y, 1.75}}, which is Huber's function with c = 1.75.

A difficult problem in simulation is the choice of a proper bandwidth. Here, we will apply a simple method to choose the

bandwidth. For a predetermined sequence of h's from a wide range, say from 0.05 to 1 with an increment 0.02, we choose the

bandwidth to minimize the mean absolute deviation error (MADE), which is defined in (4.4).

As it may be not easy to find the solution to (1.4), we apply an iterative procedure to obtain mn . For any original value 0 ,

calculate t iteratively by

⎞−1

n

n

t = t−1 + ⎝ (Yi − t−1 )i ⎠

(Yi − t−1 )i .

⎛

i=1

i=1

This procedure terminates at t0 when |t0 − t0 −1 | < 1 × 10−4 or t0 = 500, then we let mn = t0 . We compare the MADE of mn

with that of the NW estimator m∗n . The MADEs of mn and m∗n are defined as

n

1

|mn (Xi ) − m(Xi )|

n

i=1

and

n

1 ∗

|mn (Xi ) − m(Xi )|,

n

(4.4)

i=1

respectively. The simulation data consist of 500 replications of sample sizes n = 300 and 800. The results are reported in Table 1.

From Table 1, we know that the proposed M-estimator mn is more robust than the NW estimator m∗n . This is more apparent

when the errors i are heavy-tailed (distribution (iii)). So when dealing with outliers or aberrant observations, mn performs better

than the NW estimator m∗n .

Acknowledgments

We show our gratitude to the referees for their constructive comments, which help greatly to improve the presentation of

this paper. We would also like to thank Dr. Li, D.G. for his help in the completion of this paper. This work was supported by the

National Natural Science Foundation of China (Grant no. 10771192).

Appendix

The first lemma follows by Theorem 1.3 of Bosq (1998) and the second lemma is due to Volkonskii and Rozanov (1959).

Author's personal copy

546

J. Chen, L. Zhang / Journal of Statistical Planning and Inference 139 (2009) 533 -- 546

Lemma 1. Suppose that {Xt } is a stationary -mixing process with mean 0 and Sn =

each q = 1, . . . , [n/2] and > 0,

P(|Sn | > n) 4 exp −

2 q

8v2 (q)

n

t=1 Xt . If P(|Xt | b) = 1, t = 1, . . . , n, then for

n

4b 1/2

,

q

+ 22 1 +

2q

where v2 (q) = 22 (q)/p2 + b/2, p = n/(2q), and

2 (q) =

max

0 j 2q−1

E{([jp] + 1 − jp)X1 + X2

+ · · · + X[(j+1)p]−[jp] + (jp + p − [jp + p])X[(j+1)p]−[jp]+1 }2 .

Lemma 2. Let V1 , . . . , VL be -mixing random variables that can take values in the complex space and are measurable with respect to

j

j

the -algebras Fi1 , . . . , FiL , respectively, with 1 i1 < j1 < i2 < · · · < jL n, il+1 − jl w 1 for 1 l L − 1. Assume that |Vj | 1 for

L

1

j = 1, . . . , L, then

⎛

⎞

L

L

E ⎝

⎠−

V

E(V

)

j

j 16(L − 1)(w),

j=1

j=1

where (w) is the mixing coefficient.

References

Araujo, A., Gine, E., 1980. The Central Limit Theorem for Real and Banach Valued Random Variables. Wiley, New York.

Bai, Z.D., Rao, C.R., Wu, Y., 1992. M-estimation of multivariate linear regression parameters under a convex discrepancy function. Statist. Sinica 2, 237–254.

Beran, J., 1991. M estimators of location for Gaussian and related processes with slowly decaying serial correlations. J. Amer. Statist. Assoc. 86, 704–707.

Beran, J., Ghosh, S., Sibbertsen, P., 2003. Nonparametric M-estimation with long-memory errors. J. Statist. Plann. Inference 117, 199–205.

Boente, G., Fraiman, R., 1989. Robust nonparametric regression estimation for dependent observations. Ann. Statist. 17, 1242–1256.

Bosq, D., 1998. Nonparametric Statistics for Stochastic Processes: Estimation and Prediction. Lecture Notes in Statistics, second ed., vol. 110. Springer, Berlin.

Cai, Z., 2002. Regression quantiles for time series. Econom. Theory 18, 169–192.

Fan, J., Yao, Q., 2003. Nonlinear Time Series. Springer, New York.

Ferraty, F., Vieu, P., 2002. Nonparametric models for functional data, with application in regression, time series prediction and curve discrimination. Presented at

the International Conference on Advances and Trends in Nonparametric Statistics, Crete, Greece.

Ferraty, F., Vieu, P., 2006. Nonparametric Functional Data Analysis. Springer Series in Statistics. Springer, New York.

Galambos, J., 1978. The Asymptotic Theory of Extreme Order Statistics. Wiley, New York.

Gasser, T., Hall, P., Presnell, B., 1998. Nonparametric estimation of the mode of a distribution of random curves. J. Roy. Statist. Soc. Ser. B 60, 681–691.

Hall, P., Heyde, C.C., 1980. Martingale Limit Theory and its Applications. Academic Press, New York.

Härdle, W., 1984. Robust regression function estimation. J. Multivariate Anal. 14, 169–180.

Härdle, W., 1989. Asymptotic maximal derivation of M-smoothers. J. Multivariate Anal. 29, 163–179.

Härdle, W., Lütkepohl, H., Chen, R., 1997. A review of nonparametric time series analysis. Internat. Statist. Rev. 21, 49–72.

Huber, P.J., 1964. Robust estimation of a location parameter. Ann. Math. Statist. 35, 73–101.

Ibragimov, I.A., Linnik, Yu.V., 1971. Independent and Stationary Sequences of Random Variables. Wolters, Groningen.

Laksaci, A., Lemdani, M., Ould-Sa¨d, E., 2007. L1 -norm kernel estimator of conditional quantile for functional regressors: consistency and asymptotic normality.

Manuscript.

Lin, Z., Lu, C., 1996. Limit Theory for Mixing Dependent Random Variables. Science Press, Kluwer Academic Publisher, Beijing.

Masry, E., 2005. Nonparametric regression estimation for dependent functional data: asymptotic normality. Stochastic Process. Appl. 115, 155–177.

Masry, E., Fan, J., 1997. Local polynomial estimation of regression functions for mixing processes. Scand. J. Statist. 24, 165–179.

Pham, T.D., Tran, L.T., 1985. Some mixing properties of time series models. Stochastic Process. Appl. 19, 297–303.

Ramsay, J., Silverman, B., 2002. Applied Functional Data Analysis: Methods and Case Studies. Springer, New York.

Robinson, P.M., 1989. Hypothesis testing in semiparametric and nonparametric models for econometric time series. Rev. Econom. Stud. 56, 511–534.

Rockafellar, R.T., 1970. Convex Analysis. Princeton University Press, Princeton.

Roussas, G.G., 1990. Nonparametric regression estimation under mixing conditions. Stochastic Process. Appl. 36, 107–116.

Serfling, R.J., 1980. Approximation Theorems of Mathematical Statistics. Wiley, New York.

Vakashnia, N.N., Tarieladze, V.I., Chobanyan, S.A., 1987. Probability Distributions on Banach Spaces. D. Reidel Publishing Co., Boston.

Volkonskii, V.A., Rozanov, Yu.A., 1959. Some limit theorems for random functions. Theory Probab. Appl. 4, 178–197.