ARTICLE IN PRESS

Journal of Statistical Planning and Inference


www.elsevier.com/locate/jspi

A generalized drop-the-loser rule for multi-treatment clinical trials

Ruibo Sun$^{a}$, Siu Hung Cheung$^{b,\ast,1}$, Li-Xin Zhang$^{c,2}$

a Department of Statistics, Nanjing University of Finance and Economics, Nanjing 210046, PR China

b Department of Statistics, The Chinese University of Hong Kong, Shatin, Hong Kong

c Department of Mathematics, Zhejiang University, Hangzhou 310028, PR China

Received 22 November 2005; accepted 28 June 2006

Abstract

Urn models are popular for response adaptive designs in clinical studies. Among different urn models, Ivanova’s drop-the-loser rule is capable of producing superior adaptive treatment allocation schemes. Ivanova [2003. A play-the-winner-type urn model with reduced variability. Metrika 58, 1–13] obtained the asymptotic normality only for two treatments. Recently, Zhang et al. [2007. Generalized drop-the-loser urn for clinical trials with delayed responses. Statist. Sinica, in press] extended the drop-the-loser rule to tackle more general circumstances. However, their discussion is also limited to two treatments. In this paper, the drop-the-loser rule is generalized to multi-treatment clinical trials, and delayed responses are allowed. Moreover, the rule can be used to target any desired pre-specified allocation proportion. Asymptotic properties, including strong consistency and asymptotic normality, are also established for general multi-treatment cases.

© 2006 Elsevier B.V. All rights reserved.

MSC: 62L12; 62P10; 60F15; 60G10; 60F05

Keywords: Response adaptive design; Play-the-winner rule; Delayed response; Strong consistency; Asymptotic normality

1. Introduction

In clinical studies, it is common for subjects to arrive sequentially and for each subject to be assigned randomly to one of $K$ available treatments. Response adaptive designs are useful randomization tools that formulate treatment allocation as a function of previous responses. One major purpose of response adaptive designs is to develop treatment allocation schemes in which more subjects receive the treatments that have performed better thus far in the study. At the same time, it is important to maintain an adequate level of randomness in the allocation process to provide a solid foundation for statistical inference.

A large class of response adaptive designs is generated from urn models. An urn contains $K$ different types of balls, representing $K$ different treatments. When a subject arrives, a ball is drawn at random with replacement. If the ball is of type $k$, the subject receives treatment $k$. The responses of subjects after treatment play an essential role in updating the urn. The basic strategy is to “reward” successful treatments with more balls.

∗ Corresponding author.

E-mail address: shcheung@sta.cuhk.edu.hk (S.H. Cheung).

1 Research supported by a grant from the Research Grants Council of the Hong Kong Special Administrative Region (CUHK400204).

2 Work partially supported by the National Science Foundation of China (10471126).

0378-3758/$ - see front matter © 2006 Elsevier B.V. All rights reserved.

doi:10.1016/j.jspi.2006.06.039

Please cite this article as: Ruibo Sun et al., A generalized drop-the-loser rule for multi-treatment clinical trials, Journal of Statistical Planning and Inference (2006), doi: 10.1016/j.jspi.2006.06.039


An early important urn model for adaptive design was proposed by Wei and Durham (1978). For two treatments ($K = 2$), they used the randomized play-the-winner (RPW) rule for treatment allocation. Under the RPW rule, the urn is updated at each stage by adding one ball of the same treatment type if the subject's response to the treatment is a success, and one ball of the opposite treatment type if the response is a failure. Wei (1979) generalized the RPW rule to multi-treatment cases ($K > 2$) and developed the generalized Pólya urn (GPU) model. For the GPU, the urn is updated at each stage by adding one ball of the same treatment type if the response is a success, and $1/(K-1)$ ball of each of the other $K - 1$ treatment types if the response is a failure.
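The GPU update described above can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper: the urn is a list of (possibly fractional) ball counts indexed from 0, the initial composition is an assumed one ball per type, and the names `gpu_update` and `simulate_gpu` are hypothetical. With $K = 2$ the failure branch adds one whole ball of the opposite type, which is the RPW rule.

```python
import random


def gpu_update(urn, k, success):
    """Return the urn after treating one subject with treatment k (0-based index).

    Success: add one ball of type k.
    Failure: add 1/(K - 1) ball to each of the other K - 1 types.
    """
    K = len(urn)
    new_urn = list(urn)
    if success:
        new_urn[k] += 1.0
    else:
        for j in range(K):
            if j != k:
                new_urn[j] += 1.0 / (K - 1)
    return new_urn


def simulate_gpu(success_probs, n, initial_balls=1.0, seed=0):
    """Allocate n subjects with the GPU; returns the number assigned to each treatment."""
    rng = random.Random(seed)
    K = len(success_probs)
    urn = [initial_balls] * K
    counts = [0] * K
    for _ in range(n):
        # Draw with replacement: type k has probability urn[k] / sum(urn).
        k = rng.choices(range(K), weights=urn, k=1)[0]
        counts[k] += 1
        success = rng.random() < success_probs[k]
        urn = gpu_update(urn, k, success)
    return counts
```

Each response adds exactly one ball in total, so after $m$ subjects the urn holds $K$ times the initial count plus $m$ balls.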

A useful randomized version of the GPU, called the randomized Pólya urn (RPU), was proposed by Durham et al. (1998). Unlike the GPU, the RPU design only rewards successful treatments with balls: a success generates a new ball of the same treatment type, whereas the urn remains unchanged if the response is a failure. For the RPU, the urn process can be embedded in the family of continuous-time pure birth processes with linear birth rate (Yule processes). This embedding enables the derivation of important limiting properties of the urn process (Ivanova and Flournoy, 2001).

Within the framework of embedding the urn process in a continuous-time birth and death process (Ivanova et al., 2000; Ivanova and Flournoy, 2001), Ivanova (2003) formulated the drop-the-loser (DL) urn and established asymptotic normality for the special case of two treatments. Among various urn models, the DL rule is reported to produce superior treatment allocation results in terms of reducing both the number of failures and the variability of the randomization procedure (Rosenberger and Hu, 2004). The DL rule is discussed in Section 2.

To cover a wider spectrum of clinical applications, such as delayed responses and pre-specified treatment allocation targets, Zhang et al. (2007) extended the DL rule. However, their discussion of the generalized drop-the-loser (GDL) rule is limited to two treatments ($K = 2$). The main objective of this paper is to generalize the GDL rule to multi-treatment cases with $K > 2$ and to establish its asymptotic properties. The allocation scheme of the GDL rule is given in Section 3. Its asymptotic properties, including strong consistency and asymptotic normality, are established in Section 4. In particular, the asymptotic normality of Ivanova’s DL rule is obtained for general multi-treatment cases. Simulation studies of the treatment allocation proportions are presented in Section 5, and Section 6 concludes the paper.

2. Drop-the-loser rule

We first outline the mechanism of the DL rule. Consider an urn that contains $K + 1$ different types of balls. Balls of types $1, 2, \ldots, K$ are called treatment balls and represent the $K$ treatments. Balls of type 0 are called immigration balls. Initially, there are $Z_{0,k}$ balls of type $k$ ($k = 0, 1, \ldots, K$) in the urn. Let $Z_0 = (Z_{0,0}, Z_{0,1}, \ldots, Z_{0,K})$ be the initial urn composition; after $m$ draws, the urn composition changes to $Z_m = (Z_{m,0}, Z_{m,1}, \ldots, Z_{m,K})$. When a subject arrives, one ball is drawn at random from the urn without replacement. If a treatment ball of type $k$ is drawn, the subject receives treatment $k$, and the response is observed after the subject is treated. If the response is a success, the ball (of type $k$) is replaced, so the urn composition remains unchanged: $Z_{m+1} = Z_m$. If the response is a failure, the ball is not replaced, so $Z_{m+1,k} = Z_{m,k} - 1$ and $Z_{m+1,j} = Z_{m,j}$ for $j \neq k$.

If an immigration ball is selected, no subject is treated, and the ball is returned to the urn together with $K$ additional treatment balls, one of each treatment type. Thus, $Z_{m+1,0} = Z_{m,0}$ and $Z_{m+1,k} = Z_{m,k} + 1$, $k = 1, 2, \ldots, K$.

The function of the immigration ball is to prevent the extinction of any type of treatment ball.
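The DL mechanism just described can be simulated directly. The sketch below assumes immediate responses and, by default, an initial urn with one ball of every type (both are illustrative choices, since the rule allows any $Z_0$); `simulate_dl` is a hypothetical name, and position 0 of the urn list is the immigration type.

```python
import random


def simulate_dl(success_probs, n, initial_urn=None, seed=0):
    """Simulate Ivanova's drop-the-loser urn with immediate responses.

    success_probs: P_1, ..., P_K (list positions 0..K-1).
    initial_urn: [Z_{0,0}, Z_{0,1}, ..., Z_{0,K}]; position 0 is the immigration type.
    Returns the allocation counts N_1, ..., N_K after n subjects.
    """
    rng = random.Random(seed)
    K = len(success_probs)
    urn = list(initial_urn) if initial_urn is not None else [1] * (K + 1)
    if urn[0] <= 0:
        raise ValueError("need at least one immigration ball")
    counts = [0] * K
    treated = 0
    while treated < n:
        drawn = rng.choices(range(K + 1), weights=urn, k=1)[0]
        if drawn == 0:
            # Immigration ball: return it and add one ball of every treatment type.
            for j in range(1, K + 1):
                urn[j] += 1
            continue
        counts[drawn - 1] += 1
        treated += 1
        if rng.random() >= success_probs[drawn - 1]:
            # Failure: the drawn treatment ball is not replaced.
            urn[drawn] -= 1
        # Success: the ball goes back, so the urn is unchanged.
    return counts
```

For a long run with success probabilities (.8, .7, .6), the allocation proportions should settle near values proportional to $1/Q_k$, that is, about (.462, .308, .231), in line with Ivanova's limit.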

With respect to the treatment allocation mechanism, there is one major difference between the DL rule and the RPU. Under the DL rule, successful treatments receive no reward, but failed treatments are penalized by losing a corresponding ball from the urn. In contrast, the RPU procedure rewards successful treatments without penalizing the losers. As a result, the allocation proportions of the RPU may converge to extreme limits. For instance, if one treatment has a considerably higher efficacy, its allocation proportion converges to 1, whereas the allocation proportions of the other, inferior treatments converge to 0.

The variability of allocation proportions should be an important consideration in choosing among response adaptive designs. In clinical studies, one concern is to increase the power to discriminate between the efficacies of different treatments. Hu and Rosenberger (2003) showed that the power is a function of the limiting allocation proportions. For given allocation proportions, the asymptotic power is a decreasing function of the variability of the allocation proportions.


Therefore, response adaptive designs with smaller variability have an advantage, especially when higher power for statistical inference is desired. Striking a balance between increasing the power of statistical inference and the ethical goal of providing more patients with the better treatment is a difficult task. More comprehensive discussions of this issue are given by Rosenberger et al. (2001) and Rosenberger and Lachin (2002).

The DL rule has been shown to be a superior urn model in terms of reducing the number of failures and the variability. However, it has some drawbacks. First, it can only target one particular allocation proportion. Let $N_k(t)$ be the number of subjects assigned to treatment $k$ up to time $t$. Ivanova (2003) proved that the allocation proportion of each treatment $k$ ($k = 1, \ldots, K$) converges to a limit, namely,

$$
\frac{N_k(t)}{N_1(t) + \cdots + N_K(t)} \xrightarrow{P} \frac{1/Q_k}{1/Q_1 + \cdots + 1/Q_K} \quad \text{as } t \to \infty, \tag{1}
$$

where $P_k$ and $Q_k = 1 - P_k$ are the probabilities of success and failure of treatment $k$, respectively. Ivanova also established the asymptotic normality for the special case $K = 2$. However, other allocation targets are sometimes desired. For instance, for $K = 2$, Rosenberger et al. (2001) examined the target allocation proportion

$$
\frac{\sqrt{P_k}}{\sqrt{P_1} + \sqrt{P_2}} \tag{2}
$$

for treatment $k$, $k = 1, 2$, which minimizes the expected number of failures subject to a fixed variance of the estimator of the treatment difference.

Besides the inability to target more than one particular allocation proportion, the DL rule may produce large disparities between the target allocation proportion and the sample allocation proportion when responses are not available before the next subject is allocated (Zhang et al., 2007). Delayed responses are very common in clinical studies, so a procedure that copes with them is of practical importance. Finally, the DL rule cannot handle non-dichotomous responses.

Zhang et al. (2007) provided a modification of the DL rule that widens its range of application so that the above-mentioned problems can be tackled. However, their GDL rule only covers the case $K = 2$. In this paper, we extend the GDL rule to $K > 2$ treatments.

3. Generalized drop-the-loser rule

In this section, we describe the GDL rule for $K \ge 2$ treatments. For $K = 2$, the proposed procedure reduces to the rule of Zhang et al. (2007). Consider an urn that contains balls of $K + 1$ types. Balls of types $1, \ldots, K$ represent treatments, and balls of type 0 are immigration balls. At each stage, balls are drawn at random. We start with $Z_{0,k}$ ($> 0$) balls of type $k$, $k = 0, \ldots, K$. Now, $Z_0 = (Z_{0,0}, \ldots, Z_{0,K})$ is the initial urn composition and $Z_m = (Z_{m,0}, \ldots, Z_{m,K})$ is the urn composition after $m$ draws. Denote $Z^+_{m,k} = \max(0, Z_{m,k})$, $k = 0, 1, \ldots, K$, and $Z^+_m = (Z^+_{m,0}, \ldots, Z^+_{m,K})$. This is a slight adjustment for the case in which the number of balls of a particular type becomes negative (ball counts need not be integers under this rule).

When a subject arrives to be assigned to a treatment, a ball is drawn at random: a ball of type $k$ is drawn with probability $Z^+_{m,k}/|Z^+_m|$, $k = 0, 1, \ldots, K$, where $|Z^+_m| = \sum_{k=0}^{K} Z^+_{m,k}$. Consequently, ball types with negative counts have no chance of being selected.
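The truncated selection probabilities can be computed as in the following sketch. The helper names `draw_probabilities` and `draw_type` are hypothetical; the urn is a list $[Z_{m,0}, \ldots, Z_{m,K}]$ whose entries may be fractional or negative.

```python
import random


def draw_probabilities(urn):
    """Selection probabilities Z+_{m,k} / |Z+_m| for k = 0..K (position 0 = immigration)."""
    positive = [max(0.0, z) for z in urn]
    total = sum(positive)
    if total <= 0:
        raise ValueError("no ball type has a positive count")
    return [z / total for z in positive]


def draw_type(urn, rng):
    """Draw a ball type; types with negative (or zero) counts cannot be selected."""
    return rng.choices(range(len(urn)), weights=draw_probabilities(urn), k=1)[0]
```

For example, the urn $(1, -0.5, 2, 1)$ gives probabilities $(0.25, 0, 0.5, 0.25)$, so type 1 is never drawn until immigration brings its count above zero.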

If the selected ball is of type 0 (i.e., an immigration ball), no subject is treated and the ball is returned to the urn. Furthermore, $a_{m,k}$ ($\ge 0$) balls of type $k$, $k = 1, \ldots, K$, are added to the urn. This procedure is repeated until a treatment ball is drawn. We denote $A_m = a_{m,1} + \cdots + a_{m,K}$.

If a treatment ball is drawn (say, of type $k$, for some $k = 1, \ldots, K$), the newly arrived subject is given treatment $k$ and the ball is not replaced immediately. Balls are then added according to the observed treatment response of the subject. Denote the response of this subject to treatment $k$ by $\xi_{m,k}$. The response $\xi_{m,k}$ may not be available before the arrival of the next subject; in fact, it may become available only after several (a random number of) new arrivals. After $\xi_{m,k}$ is observed, $D_{m,k}$ ($\ge 0$) balls of type $k$ are added to the urn, where $D_{m,k}$ is a function of $\xi_{m,k}$.


Under the GDL rule, the number of immigration balls remains unchanged. The number of treatment balls added to the urn depends on

(a) the value of $a_{m,k}$ when an immigration ball is drawn from the urn, and

(b) the value of $D_{m,k}$ when a treatment ball is selected.

After $n$ subjects are treated, let $N_{n,k}$ be the number of subjects assigned to treatment $k$, $k = 1, \ldots, K$. We are interested in the statistical behavior of the proportions $N_{n,k}/n$, $k = 1, \ldots, K$.

Denote $p_k = E[D_{m,k}]$, $k = 1, \ldots, K$. We assume that $0 \le p_k < 1$ and $a_{m,k} \to a_k > 0$, $k = 1, \ldots, K$. Under suitable conditions (stated in Section 4), we have

$$
\frac{N_{n,k}}{n} \to v_k := \frac{a_k/q_k}{a_1/q_1 + \cdots + a_K/q_K} \quad \text{a.s.}, \quad k = 1, \ldots, K, \tag{3}
$$

where $q_k = 1 - p_k$, $k = 1, \ldots, K$. The convergence property (3) was proved by Zhang et al. (2007) for the two-treatment case. With equal limiting immigration rates ($a_1 = a_2 = \cdots = a_K$), and with $D_{m,k} = 1$ if the response is a success and $D_{m,k} = 0$ if it is a failure, we have $p_k = P_k$ and $q_k = Q_k$, so the limiting proportions $v_k$, $k = 1, \ldots, K$, reduce to those of the DL rule in (1).
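The limit (3) is easy to evaluate numerically. The sketch below (the function name `gdl_limit` is hypothetical) computes $v_k$ from given $a_k$ and $p_k$. With equal $a_k$ and $p_k = P_k$ it reproduces the DL limit; for $(P_1, P_2, P_3) = (.8, .7, .6)$ this gives the targets .462 and .308 listed in Table 1.

```python
def gdl_limit(a, p):
    """Limiting allocation proportions v_k = (a_k/q_k) / sum_j (a_j/q_j), as in (3).

    a: limiting immigration rates a_k > 0; p: p_k = E[D_{m,k}] with 0 <= p_k < 1.
    """
    if any(not (0 <= pk < 1) for pk in p):
        raise ValueError("need 0 <= p_k < 1 for every treatment")
    weights = [ak / (1.0 - pk) for ak, pk in zip(a, p)]
    s = sum(weights)
    return [w / s for w in weights]
```

Setting every $p_k = 0$ (the choice $D_{m,k} = 0$ discussed next) makes $v_k$ simply proportional to $a_k$.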

Given dichotomous outcomes, as discussed by Zhang et al. (2007), an alternative and convenient approach to achieving pre-specified limiting allocation proportions $v_k$, $k = 1, \ldots, K$, is to set

(a) $a_k = C_0 v_k$, where $C_0$ is a constant, and

(b) $D_{m,k} = 0$.

Since $D_{m,k} = 0$ gives $p_k = 0$ and $q_k = 1$, the limit in (3) becomes $a_k/(a_1 + \cdots + a_K) = v_k$, because the $v_k$ sum to one. With this allocation scheme, a selected treatment ball of type $k$ is not replaced, regardless of whether the response is a success or a failure. If the selected ball is of type 0 (i.e., an immigration ball), no subject is treated and the ball is returned to the urn together with $a_{m,k}$ ($\ge 0$) balls of type $k$, $k = 1, \ldots, K$. This procedure is repeated until a treatment ball is drawn.

The choice of $C_0$ is discussed in Section 5. As an example, consider a natural extension of the allocation target (2) to $K > 2$ treatments. If the desired allocation proportions are

$$
v_k = \frac{\sqrt{P_k}}{\sqrt{P_1} + \cdots + \sqrt{P_K}}, \quad k = 1, \ldots, K, \tag{4}
$$

then the immigration rates are chosen as

$$
a_k(P_1, \ldots, P_K) = C_0 \, \frac{\sqrt{P_k}}{\sqrt{P_1} + \cdots + \sqrt{P_K}}, \quad k = 1, \ldots, K. \tag{5}
$$
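Targets (4) and rates (5) translate directly into code. In this sketch the names `sqrt_target` and `immigration_rates` are hypothetical, and the true $P_k$ are passed in for illustration; in an actual trial they would be replaced by estimates.

```python
import math


def sqrt_target(P):
    """Target allocation (4): v_k = sqrt(P_k) / sum_j sqrt(P_j)."""
    roots = [math.sqrt(pk) for pk in P]
    s = sum(roots)
    return [r / s for r in roots]


def immigration_rates(P, c0):
    """Immigration rates (5): a_k = C0 * sqrt(P_k) / sum_j sqrt(P_j)."""
    return [c0 * v for v in sqrt_target(P)]
```

With $C_0 = 2$ this is the choice $a_k = 2v_k$ labelled GDL (2) in Table 2; for $(P_1, P_2, P_3) = (.8, .7, .6)$ the first two targets round to .357 and .334, matching the $v_1$ and $v_2$ columns there.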

Because the parameters $P_1, \ldots, P_K$ are unknown in practice, Bayesian estimates can be employed: $P_k$ is replaced by $\hat{P}_{m,k}$, the current estimate of $P_k$ at step $m$, $k = 1, \ldots, K$. Therefore, $a_{m,k} = a_k(\hat{P}_{m,1}, \ldots, \hat{P}_{m,K})$ is a function of $\hat{P}_{m,1}, \ldots, \hat{P}_{m,K}$. We propose the following estimate:

$$
\hat{P}_{m,k} = \frac{(\text{number of observed successes on treatment } k) + 1}{(\text{number of observed outcomes on treatment } k) + 2}, \tag{6}
$$

which is the Bayesian estimate of $P_k$ under a uniform prior distribution, $k = 1, \ldots, K$. If the beta distribution $\mathrm{Beta}(\alpha, \beta)$ is used as the prior distribution instead, the 1 in the numerator is replaced by $\alpha$ and the 2 in the denominator by $\alpha + \beta$. The constants $\alpha$ and $\beta$ can be estimated from earlier trials.
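A minimal sketch of estimate (6) and its Beta-prior variant, written as the posterior mean; `posterior_mean` is a hypothetical helper. Only outcomes that have actually been observed are counted, so with delayed responses the denominator can lag behind the number of subjects assigned to treatment $k$.

```python
def posterior_mean(successes, outcomes, alpha=1.0, beta=1.0):
    """Estimate (6) of P_k: (successes + alpha) / (outcomes + alpha + beta).

    alpha = beta = 1 is the uniform prior used in (6); other values give the
    Beta(alpha, beta) variant. Counts refer to observed outcomes only.
    """
    if not 0 <= successes <= outcomes:
        raise ValueError("need 0 <= successes <= outcomes")
    return (successes + alpha) / (outcomes + alpha + beta)
```

Before any outcome is observed the estimate is 1/2 under the uniform prior, so (5) initially assigns equal immigration rates to all treatments.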

Under this allocation scheme, the superior treatments (those with higher probabilities of success) tend to receive more balls each time an immigration ball is chosen. As a result, on average, the allocation scheme sends more subjects to the better treatments.

When the outcomes are not dichotomous, one can choose suitable adding rules $\{D_{m,k}\}$ to define a design. For example, if the outcome of a patient after treatment for cancer is classified as “clinically ineffective”, “gradual improvement with extended treatment”, or “fully recovered”, one can define $D_{m,k} = 1$ if the outcome is “fully recovered”, $D_{m,k} = \theta$ ($0 < \theta < 1$) if the outcome is “gradual improvement with extended treatment”, and $D_{m,k} = 0$ if the outcome is “clinically ineffective”.
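The three-category adding rule can be written as a lookup, and its mean gives the $p_k$ that enters (3) through $q_k = 1 - p_k$. In this sketch the helper names, the value $\theta = 0.5$, and the outcome probabilities are hypothetical, chosen only for illustration.

```python
def adding_rule(outcome, theta=0.5):
    """Three-category adding rule D_{m,k}; theta is the constant in (0, 1)."""
    if not 0 < theta < 1:
        raise ValueError("theta must lie in (0, 1)")
    values = {
        "fully recovered": 1.0,
        "gradual improvement with extended treatment": theta,
        "clinically ineffective": 0.0,
    }
    return values[outcome]


def expected_adding(outcome_probs, theta=0.5):
    """p_k = E[D_{m,k}] for one treatment, given its outcome probabilities."""
    return sum(prob * adding_rule(outcome, theta) for outcome, prob in outcome_probs.items())
```

For a treatment with outcome probabilities .5, .3 and .2 for the three categories (listed from best to worst), $p_k = 0.5 + 0.3\theta = 0.65$ when $\theta = 0.5$.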

4. Asymptotic properties

4.1. Notation and assumptions

In this section, two important asymptotic properties of the GDL rule, strong consistency and asymptotic normality, are discussed. Before examining these properties, we introduce some notation and assumptions.

Let $t_l$ be the entry time of the $l$th subject, where $\{t_l\}$ is an increasing sequence of random variables. Assume that $\{t_{l+1} - t_l\}$ is a sequence of independent and identically distributed random variables. The response time of the $l$th subject on treatment $k$ is denoted by $r_l(k)$. Suppose that $\{r_l(k); l \ge 1\}$ is a sequence of independent random variables, $k = 1, \ldots, K$. We assume that the drawing, removal, and addition of balls take no time. To state the asymptotic results, we need a few assumptions; the first two are also given by Zhang et al. (2007).

Assumption 4.1. Let $\chi_k(l, n) = I\{r_l(k) > t_{l+n} - t_l\}$ be the indicator function that takes the value 1 if the response of the $l$th subject to treatment $k$ occurs after at least another $n$ subjects are randomized, and 0 otherwise. Suppose that for some constants $C > 0$ and $\gamma > 2$,

$$
\rho_k(l, n) = P\{\chi_k(l, n) = 1\} \le C n^{-\gamma}, \quad l, n = 1, 2, \ldots, \; k = 1, \ldots, K.
$$

Assumption 4.2. $\{D_{m,k}; m \ge 1\}$, $k = 1, \ldots, K$, are $K$ sequences of i.i.d. random variables with $0 \le p_k = E[D_{m,k}] < 1$ and $E|D_{m,k}|^p < \infty$ for any $p > 0$, $k = 1, \ldots, K$. The sequences $\{D_{m,k}; m \ge 1, k = 1, \ldots, K\}$ and $\{r_l(k); l \ge 1, k = 1, \ldots, K\}$ are independent.

Assumption 4.1 is similar to Assumption 1 of Bai et al. (2002), who were the first to discuss the asymptotic properties of response adaptive designs with delayed responses. When Assumption 4.2 is satisfied, we let $\sigma_k^2 = \mathrm{Var}(D_{m,k})$ be the variance of the adding rules and $q_k = 1 - p_k$, $k = 1, \ldots, K$.
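As a sanity check on Assumption 4.1, consider an exponential model of the kind used in Section 5. Assume, for this illustration only, that the gaps $t_{l+1} - t_l$ are i.i.d. exponential with mean $\mu_0$ and that $r_l(k)$ is exponential with mean $\mu_k$, independent of the entry times. Since $t_{l+n} - t_l$ is a sum of $n$ independent gaps, conditioning on it gives a geometric tail:

```latex
\rho_k(l, n) = P\{r_l(k) > t_{l+n} - t_l\}
  = E\left[e^{-(t_{l+n} - t_l)/\mu_k}\right]
  = \prod_{i=l}^{l+n-1} E\left[e^{-(t_{i+1} - t_i)/\mu_k}\right]
  = \left(\frac{\mu_k}{\mu_0 + \mu_k}\right)^{n}.
```

Because this decays geometrically in $n$, it is bounded by $C n^{-\gamma}$ for every $\gamma > 2$ with a suitable $C$; with $\mu_0 = 1$ and $\mu_k = 5$, for example, $\rho_k(l, n) = (5/6)^n$.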

For the immigration mechanism we assume the following.

Assumption 4.3. There are positive constants $a_k$, $k = 1, \ldots, K$, such that

$$
a_{m,k} \to a_k \quad \text{a.s.}, \quad k = 1, \ldots, K.
$$

By Assumption 4.3, without loss of generality, we assume that there are positive constants $\underline{a}$ and $\overline{a}$ such that

$$
\underline{a} \le a_{m,k} \le \overline{a}, \quad k = 1, \ldots, K, \; m = 1, 2, \ldots. \tag{7}
$$

4.2. Strong consistency

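Theorem 4.1. Suppose that Assumptions 4.1–4.3 are satisfied. Then,

$$
\frac{N_{n,k}}{n} \to v_k \quad \text{a.s.},
$$

where the $v_k$ are defined in (3), $k = 1, \ldots, K$. Further, if

$$
a_{m,k} - a_k = O\left(\sqrt{\frac{\log\log m}{m}}\right) \quad \text{a.s.}, \quad k = 1, \ldots, K, \tag{8}
$$

then

$$
\frac{N_{n,k}}{n} - v_k = O\left(\sqrt{\frac{\log\log n}{n}}\right) \quad \text{a.s.}, \quad k = 1, \ldots, K.
$$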


Proof. Let $X_m = (X_{m,0}, X_{m,1}, \ldots, X_{m,K})$ be the result of the $m$th draw, where $X_{m,k} = 1$ if the selected ball is of type $k$ and $X_{m,k} = 0$ otherwise, $k = 0, \ldots, K$. Further, denote $N^*_m = (N^*_{m,0}, \ldots, N^*_{m,K}) = \sum_{j=1}^{m} X_j$, where $N^*_{m,k}$ is the number of draws of type $k$ balls in the first $m$ draws. Let $u_n = \max\{m : N^*_{m,1} + \cdots + N^*_{m,K} \le n\}$. Then, $u_n$ is the total number of draws when the $n$th subject is allocated to a treatment, and $N_{n,k} = N^*_{u_n,k}$, $k = 1, \ldots, K$.

Let $I_k(m, n)$ be the indicator function that takes the value 1 if the response $\xi_{m,k}$ to treatment $k$ of the subject assigned at the $m$th draw occurs after the $(m+n)$th draw and before the $(m+n+1)$th draw, $k = 1, \ldots, K$. Recall that when $\xi_{m,k}$ occurs, we add $D_{m,k}$ balls of type $k$ to the urn. So, for given $m$ and $n$, if $I_k(m, n) = 1$, we add $X_{m,k} D_{m,k}$ balls of type $k$ to the urn. Therefore,

$$
\begin{aligned}
Z_{m,k} - Z_{0,k} &= \sum_{j=1}^{m} a_{j,k} X_{j,0} - \sum_{j=1}^{m} X_{j,k} + \sum_{j=1}^{m} \sum_{i=1}^{j} I_k(i, j-i) X_{i,k} D_{i,k} \\
&= \sum_{i=1}^{m} a_{i,k} X_{i,0} + \sum_{i=1}^{m} X_{i,k} (D_{i,k} - 1) - R_{m,k},
\end{aligned}
\tag{9}
$$

where

$$
R_{m,k} = \sum_{i=1}^{m} \sum_{j=m+1}^{\infty} X_{i,k} D_{i,k} I_k(i, j-i).
$$

That is,

$$
\Delta Z_{m,k} = a_{m,k} X_{m,0} + X_{m,k} (D_{m,k} - 1) - \Delta R_{m,k}, \quad k = 1, \ldots, K, \tag{10}
$$

where $\Delta z_m = z_m - z_{m-1}$ denotes the differencing of a sequence $\{z_m\}$. From (9), it follows that

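$$
\begin{aligned}
Z_{m,k} - Z_{0,k} &= \sum_{i=1}^{m} a_{i,k} X_{i,0} - q_k N^*_{m,k} + \sum_{i=1}^{m} X_{i,k} (D_{i,k} - E[D_{i,k}]) - R_{m,k} \\
&= a_k N^*_{m,0} - q_k N^*_{m,k} + \sum_{i=1}^{m} (a_{i,k} - a_k) X_{i,0} + M_{m,k} - R_{m,k},
\end{aligned}
\tag{11}
$$

where $M_{m,k} = \sum_{i=1}^{m} X_{i,k} (D_{i,k} - E[D_{i,k}])$, $k = 1, \ldots, K$.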

The next two lemmas show that $R_{m,k}$ and $Z_{m,k}$ are negligible for large $m$. Their proofs are given in the Appendix.

Lemma 4.1. Suppose that Assumptions 4.1 and 4.2 and (7) are satisfied. Then, for any $\delta > 0$,

$$
R_{m,k} = o(m^{1/\gamma + \delta}) \quad \text{a.s.}, \quad k = 1, \ldots, K. \tag{12}
$$

Lemma 4.2. Under Assumptions 4.1 and 4.2 and (7), for each $k = 1, \ldots, K$ and any $\delta > 0$,

$$
Z_{m,k} = o(m^{1/\gamma + \delta} + m^{1/4 + \delta}) \quad \text{a.s.} \tag{13}
$$

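Now, let $s = a_1/q_1 + \cdots + a_K/q_K$. Then, $v_k = (a_k/q_k)/s$, $k = 1, \ldots, K$. Further, let $\mathcal{A}_m = \sigma(X_1, \ldots, X_m, X_{m+1}, \xi_1, \ldots, \xi_m)$, where $\xi_i = (\xi_{i,1}, \xi_{i,2}, \ldots, \xi_{i,K})$, and let $M_m = (M_{m,1}, \ldots, M_{m,K})$. Then, $\{M_m, \mathcal{A}_m; m \ge 1\}$ is a martingale with

$$
\sum_{i=1}^{m} E[(\Delta M_{i,k})^2 \mid \mathcal{A}_{i-1}] = \sum_{i=1}^{m} X_{i,k} \, \mathrm{Var}(D_{i,k}) = N^*_{m,k} \sigma_k^2, \tag{14}
$$

$$
\sum_{i=1}^{m} E[\Delta M_{i,k} \, \Delta M_{i,j} \mid \mathcal{A}_{i-1}] = 0, \quad j \neq k, \tag{15}
$$

and

$$
E[|\Delta M_{i,k}|^p \mid \mathcal{A}_{i-1}] \le 2^p E[|D_{1,k}|^p].
$$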

According to the law of the iterated logarithm for martingales, we have

$$
M_{m,k} = O(\sqrt{m \log\log m}) \quad \text{a.s.}, \quad k = 1, \ldots, K. \tag{16}
$$

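Combining (11)–(13), (16), and Assumption 4.3 yields that, for any $\delta > 0$,

$$
\begin{aligned}
q_k N^*_{m,k} - a_k N^*_{m,0} &= M_{m,k} + \sum_{i=1}^{m} (a_{i,k} - a_k) X_{i,0} + o(m^{(\gamma+1)/(3\gamma) + \delta}) \\
&= o(m) \quad \text{a.s.}, \quad k = 1, \ldots, K,
\end{aligned}
\tag{17}
$$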

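which implies that

$$
\frac{N^*_{m,k}}{N^*_{m,1} + \cdots + N^*_{m,K}} \to v_k \quad \text{a.s.}, \quad k = 1, \ldots, K.
$$

Note that $u_n = \max\{m : N^*_{m,1} + \cdots + N^*_{m,K} \le n\}$ and $N_{n,k} = N^*_{u_n,k}$. It follows that

$$
\frac{N_{n,k}}{n} = \frac{N^*_{u_n,k}}{N^*_{u_n,1} + \cdots + N^*_{u_n,K}} \to v_k \quad \text{a.s.}, \quad k = 1, \ldots, K.
$$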

Further, suppose that (8) is satisfied. By (16) and (17),

$$
q_k N^*_{m,k} - a_k N^*_{m,0} = O(\sqrt{m \log\log m}) \quad \text{a.s.}, \quad k = 1, \ldots, K,
$$

which, together with $N^*_{m,0} + N^*_{m,1} + \cdots + N^*_{m,K} = m$, yields

$$
N^*_{m,k} = m \, \frac{s}{s+1} \, v_k + O(\sqrt{m \log\log m}) \quad \text{a.s.}, \quad k = 1, \ldots, K. \tag{18}
$$

Hence,

$$
u_n = \max\{m : N^*_{m,1} + \cdots + N^*_{m,K} \le n\} = \frac{s+1}{s} \, n + O(\sqrt{n \log\log n}) \quad \text{a.s.} \tag{19}
$$

Combining (18) and (19), we have

$$
N_{n,k} = N^*_{u_n,k} = u_n \, \frac{s}{s+1} \, v_k + O(\sqrt{u_n \log\log u_n}) = n v_k + O(\sqrt{n \log\log n}) \quad \text{a.s.}, \quad k = 1, \ldots, K. \tag{20}
$$

The proof of Theorem 4.1 is now complete.

Remark 4.1. If $a_k(P_1, \ldots, P_K)$ is defined as in (5) and $a_{m,k} = a_k(\hat{P}_{m,1}, \ldots, \hat{P}_{m,K})$ with the $\hat{P}_{m,k}$ defined as in (6), $k = 1, \ldots, K$, then Assumption 4.3 and condition (8) are satisfied.

4.3. Asymptotic normality

Theorem 4.2. Suppose that Assumptions 4.1 and 4.2 are satisfied and that, for some $\delta > 0$,

$$
a_{m,k} - a_k = O(m^{-1/2 - \delta}) \quad \text{a.s.}, \quad k = 1, \ldots, K. \tag{21}
$$

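Then one can define a $K$-dimensional standard Wiener process $\{W(t) = (W_1(t), \ldots, W_K(t)); t \ge 0\}$ such that, for some $\varepsilon > 0$,

$$
N_{n,k} - n v_k = \frac{\sigma_k \sqrt{v_k}}{q_k} W_k(n) - v_k \sum_{j=1}^{K} \frac{\sigma_j \sqrt{v_j}}{q_j} W_j(n) + o(n^{1/2 - \varepsilon}) \quad \text{a.s.}, \quad k = 1, \ldots, K.
$$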

The proof of Theorem 4.2 is given in the Appendix. The following corollary is an immediate consequence of the properties of Wiener processes.


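Corollary 4.1. Denote $N_n = (N_{n,1}, \ldots, N_{n,K})$ and $v = (v_1, \ldots, v_K)$, where $v_k$, $k = 1, \ldots, K$, are defined in (3). Under the assumptions of Theorem 4.2,

$$
\frac{N_n}{n} \to v \quad \text{a.s.} \qquad \text{and} \qquad \sqrt{n}\left(\frac{N_n}{n} - v\right) \xrightarrow{D} N(0, \Sigma),
$$

where

$$
\Sigma = P^{\top} \operatorname{diag}(\sigma_1^2 v_1, \ldots, \sigma_K^2 v_K) \, P
$$

and $P = (a_1/q_1 + \cdots + a_K/q_K) \operatorname{diag}(1/a_1, \ldots, 1/a_K)(\operatorname{diag}(v) - v^{\top} v)$.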
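The covariance matrix of Corollary 4.1 can be assembled with NumPy. This is a sketch under the corollary's notation, with $v$ treated as a row vector so that $v^{\top} v$ is the outer product; `asymptotic_covariance` is a hypothetical name.

```python
import numpy as np


def asymptotic_covariance(a, p, sigma2):
    """Sigma = P^T diag(sigma_k^2 v_k) P from Corollary 4.1.

    a: limiting immigration rates a_k; p: p_k = E[D_{m,k}]; sigma2: Var(D_{m,k}).
    """
    a = np.asarray(a, dtype=float)
    p = np.asarray(p, dtype=float)
    sigma2 = np.asarray(sigma2, dtype=float)
    q = 1.0 - p
    s = np.sum(a / q)                 # s = a_1/q_1 + ... + a_K/q_K
    v = (a / q) / s                   # limiting proportions (3)
    P = s * np.diag(1.0 / a) @ (np.diag(v) - np.outer(v, v))
    return P.T @ np.diag(sigma2 * v) @ P
```

Each row of $P$ sums to zero, so $\Sigma$ annihilates the vector of ones, as it must because the proportions sum to one. For the two-arm DL rule ($a_1 = a_2$, $D_{m,k}$ the success indicator, so $p_k = P_k$ and $\sigma_k^2 = P_k Q_k$), the $(1,1)$ entry works out to $Q_1 Q_2 (P_1 + P_2)/(Q_1 + Q_2)^3$.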

5. Simulation study

According to Theorem 4.1, the sample allocation proportions converge to the target allocation proportions as the sample size grows. In this section, a simulation study is used to explore the performance of the allocation scheme for finite samples and to study the effect of delayed responses on the sample allocation proportions.

Two allocation targets, (1) and (4), are used in the simulation study, with $K = 3$ treatments. Different configurations of $P_1$, $P_2$, and $P_3$ are chosen. Simulation studies were also conducted for larger values of $K$, but the results are not reported here because they are similar to those for $K = 3$. The Bayesian estimator $\hat{P}_{m,k}$ in (6), $k = 1, 2, 3$, is employed to estimate $P_k$.

Exponential distributions are used to model both the delay times of treatment responses and the patient entry times. The mean delay times for treatments 1, 2, and 3 are $\mu_1$, $\mu_2$, and $\mu_3$, respectively, and the mean parameter for patient entry times is $\mu_0$. Three configurations of the mean parameters are considered. The first corresponds to the case with no delayed responses. The second, $(\mu_0, \mu_1, \mu_2, \mu_3) = (1, 1, 1, 1)$, represents similar delay times for the responses to all three treatments. The third, $(\mu_0, \mu_1, \mu_2, \mu_3) = (1, 5, 1, 1)$, represents larger disparities among the delay times for the responses to different treatments. As explained earlier, for simplicity we set $D_{m,k} = 0$ for the GDL rules.

The sample size and the number of replications in the simulation study are 100 and 100 000, respectively. The proportions of subjects allocated to treatments 1 and 2 ($N_{n,1}/n$ and $N_{n,2}/n$) are shown in Tables 1 and 2; $N_{n,3}/n$ is omitted because it equals $1 - N_{n,1}/n - N_{n,2}/n$.
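A single replication of this design can be sketched as follows. This is an illustration under stated assumptions, not the authors' simulation code: the urn is assumed to start with one ball of every type (the initial composition is not reported here), subjects enter with exponential gaps of mean $\mu_0$, responses arrive after exponential delays of mean $\mu_k$ (or immediately), $D_{m,k} = 0$, and $a_{m,k}$ follows (5) with the estimates (6). The function and parameter names are hypothetical.

```python
import heapq
import math
import random


def simulate_gdl(P, n, delay_means=None, entry_mean=1.0, c0=2.0, seed=0):
    """One replication of the GDL rule with D_{m,k} = 0 and target (4).

    P: true success probabilities P_1..P_K (used only to generate responses).
    delay_means: mean response delay per treatment, or None for immediate responses.
    Returns the allocation counts N_{n,1}, ..., N_{n,K}.
    """
    rng = random.Random(seed)
    K = len(P)
    urn = [1.0] * (K + 1)   # assumed Z_0: one ball of each type; index 0 = immigration
    successes = [0] * K     # observed successes per treatment
    observed = [0] * K      # observed outcomes per treatment
    counts = [0] * K
    pending = []            # min-heap of (response time, treatment, success)
    t = 0.0
    for _ in range(n):
        t += rng.expovariate(1.0 / entry_mean)          # next entry time
        # Record every response that has become available by this entry time.
        while pending and pending[0][0] <= t:
            _, arm, ok = heapq.heappop(pending)
            observed[arm] += 1
            successes[arm] += ok
            # D_{m,k} = 0, so no balls are added when a response arrives.
        while True:
            weights = [max(0.0, z) for z in urn]        # Z^+_m
            drawn = rng.choices(range(K + 1), weights=weights, k=1)[0]
            if drawn > 0:
                break
            # Immigration ball: put it back and add a_{m,k} balls of each type,
            # using (5) with the estimates (6).
            roots = [math.sqrt((successes[i] + 1) / (observed[i] + 2)) for i in range(K)]
            total = sum(roots)
            for i in range(K):
                urn[i + 1] += c0 * roots[i] / total
        arm = drawn - 1
        urn[drawn] -= 1.0   # treatment ball is not replaced (count may go negative)
        counts[arm] += 1
        ok = rng.random() < P[arm]
        delay = 0.0 if delay_means is None else rng.expovariate(1.0 / delay_means[arm])
        heapq.heappush(pending, (t + delay, arm, ok))
    return counts
```

Averaging `counts[k] / n` over many seeds gives quantities comparable in spirit to the GDL (2) entries of Table 2, although exact agreement should not be expected because the initial urn and other details here are assumptions.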

The doubly adaptive biased coin design (DBCD) is also included for comparison. Its allocation scheme follows that provided by Rosenberger and Hu (2004), and their suggested value of 2 for the parameter that controls the variability of the allocation proportions arising from the randomization procedure is adopted.

In Table 1, allocation target (1) is used. Although the DL rule was not designed for delayed responses, it is included for exploration using a simplistic approach: when a treatment ball is chosen, the decision of whether to return it is deferred until the response is observed.

In Table 2, allocation target (4) is used. Because the DL rule cannot achieve this target, only the proposed GDL rule and the DBCD are included. For the GDL rule, two choices of $C_0$ for the immigration rates $a_1$, $a_2$, and $a_3$ are considered.

We summarize the simulation results as follows:

(a) With reference to Table 1, all of the allocation schemes perform similarly when there is no delayed response. However, the GDL rule and the DBCD are superior when responses are delayed, especially when the treatment success probabilities are high (for example, $(P_1, P_2, P_3) = (.8, .7, .6)$). In such cases, the sample allocation proportions of the GDL rule and the DBCD are closer to the target proportions than those of the DL rule.

(b) The difference between the GDL rule and the DBCD is quite small. Note that the variances of the GDL rule and the DBCD are slightly larger than that of the DL rule, owing to the need to estimate $P_1$, $P_2$, and $P_3$ during the trial (for the GDL rule, each time an immigration ball is selected).

(c) In Table 2, the two different values of $C_0$, which correspond to adding roughly one treatment ball of each type when an immigration ball is selected, do not yield much difference in the allocation proportions. Other values of $C_0$ were examined and, as long as the number of balls added to the urn for each type remained less than 2, similar results were obtained; these are not reported. One function of the immigration ball is to avoid the possibility of extinction of any type of treatment ball. Another function is to refill the urn with treatment balls

Please cite this article as: Ruibo Sun et al., A generalized drop-the-loser rule for multi-treatment clinical trials, Journal of Statistical Planning

and Inference (2006), doi: 10.1016/j.jspi.2006.06.039

ARTICLE IN PRESS

R. Sun et al. / Journal of Statistical Planning and Inference

(

)

–

9

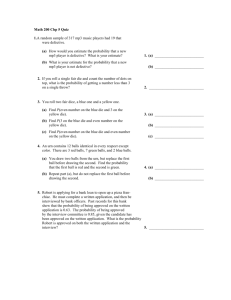

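Table 1

Simulated allocation proportions with allocation target $v_1$ and $v_2$ given in (1)

Immediate responses

| $P_1$ | $P_2$ | $P_3$ | $v_1$ | $v_2$ | DL $N_{n,1}/n$ | DL $N_{n,2}/n$ | GDL (1) $N_{n,1}/n$ | GDL (1) $N_{n,2}/n$ | DBCD $N_{n,1}/n$ | DBCD $N_{n,2}/n$ |
|---|---|---|---|---|---|---|---|---|---|---|
| .8 | .8 | .8 | .333 | .333 | .333 (.059) | .334 (.059) | .334 (.089) | .333 (.089) | .333 (.094) | .334 (.094) |
| .8 | .7 | .6 | .462 | .308 | .413 (.061) | .323 (.056) | .430 (.086) | .316 (.074) | .447 (.089) | .312 (.076) |
| .7 | .6 | .5 | .426 | .319 | .403 (.056) | .325 (.051) | .406 (.071) | .323 (.062) | .417 (.071) | .321 (.062) |
| .7 | .5 | .5 | .455 | .273 | .427 (.054) | .286 (.044) | .428 (.068) | .286 (.052) | .443 (.069) | .278 (.053) |
| .5 | .3 | .2 | .428 | .305 | .418 (.039) | .309 (.032) | .411 (.046) | .311 (.037) | .419 (.047) | .308 (.039) |

$(\lambda_0, \lambda_1, \lambda_2, \lambda_3) = (1,1,1,1)$

| $P_1$ | $P_2$ | $P_3$ | $v_1$ | $v_2$ | DL $N_{n,1}/n$ | DL $N_{n,2}/n$ | GDL (1) $N_{n,1}/n$ | GDL (1) $N_{n,2}/n$ | DBCD $N_{n,1}/n$ | DBCD $N_{n,2}/n$ |
|---|---|---|---|---|---|---|---|---|---|---|
| .8 | .8 | .8 | .333 | .333 | .333 (.056) | .334 (.056) | .333 (.088) | .333 (.088) | .334 (.094) | .333 (.094) |
| .8 | .7 | .6 | .462 | .308 | .410 (.058) | .324 (.054) | .428 (.086) | .317 (.074) | .447 (.089) | .312 (.076) |
| .7 | .6 | .5 | .426 | .319 | .401 (.054) | .326 (.049) | .405 (.070) | .324 (.062) | .416 (.071) | .321 (.062) |
| .7 | .5 | .5 | .455 | .273 | .424 (.053) | .288 (.043) | .428 (.068) | .286 (.052) | .442 (.069) | .279 (.053) |
| .5 | .3 | .2 | .428 | .305 | .416 (.039) | .309 (.032) | .410 (.046) | .311 (.037) | .419 (.047) | .308 (.039) |

$(\lambda_0, \lambda_1, \lambda_2, \lambda_3) = (1,5,1,1)$

| $P_1$ | $P_2$ | $P_3$ | $v_1$ | $v_2$ | DL $N_{n,1}/n$ | DL $N_{n,2}/n$ | GDL (1) $N_{n,1}/n$ | GDL (1) $N_{n,2}/n$ | DBCD $N_{n,1}/n$ | DBCD $N_{n,2}/n$ |
|---|---|---|---|---|---|---|---|---|---|---|
| .8 | .8 | .8 | .333 | .333 | .306 (.049) | .347 (.056) | .327 (.086) | .336 (.088) | .333 (.095) | .334 (.095) |
| .8 | .7 | .6 | .462 | .308 | .378 (.052) | .342 (.053) | .422 (.084) | .320 (.073) | .445 (.090) | .313 (.077) |
| .7 | .6 | .5 | .426 | .319 | .379 (.049) | .338 (.048) | .402 (.069) | .325 (.061) | .416 (.072) | .321 (.063) |
| .7 | .5 | .5 | .455 | .273 | .402 (.048) | .299 (.043) | .425 (.068) | .288 (.052) | .442 (.070) | .279 (.053) |
| .5 | .3 | .2 | .428 | .305 | .406 (.037) | .315 (.031) | .411 (.046) | .311 (.037) | .419 (.048) | .308 (.040) |

Note: The simulated standard deviations are given in parentheses.

GDL (1): $a_1 = 2v_1$, $a_2 = 2v_2$, $a_3 = 2v_3$.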
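The $v_1$ and $v_2$ columns of Table 1 can be recomputed from $P_1, P_2, P_3$ alone: they agree, up to rounding in the third decimal, with $v_k \propto 1/q_k$, $q_k = 1 - P_k$, the limiting allocation of Ivanova's DL rule. The sketch below assumes that target (1) has this form; we infer it from the tabulated values, since (1) itself lies outside this excerpt. The function name `dl_target` is ours.

```python
def dl_target(p):
    """Allocation proportions v_k proportional to 1/q_k, where q_k = 1 - P_k.

    Assumed form of target (1), inferred from the v1 and v2 columns of
    Table 1; it is also the limiting allocation of Ivanova's DL rule.
    """
    inv_q = [1.0 / (1.0 - pk) for pk in p]
    total = sum(inv_q)
    return [w / total for w in inv_q]


# Success probabilities (P1, P2, P3) of the five scenarios in Table 1.
SCENARIOS = [(0.8, 0.8, 0.8), (0.8, 0.7, 0.6), (0.7, 0.6, 0.5),
             (0.7, 0.5, 0.5), (0.5, 0.3, 0.2)]

for p in SCENARIOS:
    v = dl_target(p)
    print(p, f"v1 = {v[0]:.4f}", f"v2 = {v[1]:.4f}")
```

All entries match the table to three decimals except the last scenario, where the sketch gives $v_1 \approx 0.4275$ against the tabulated .428.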

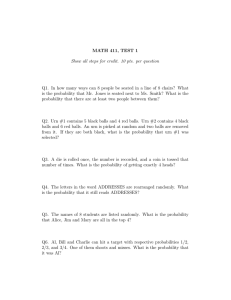

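Table 2

Simulated allocation proportions with allocation target $v_1$ and $v_2$ given in (4)

Immediate responses

| $P_1$ | $P_2$ | $P_3$ | $v_1$ | $v_2$ | GDL (2) $N_{n,1}/n$ | GDL (2) $N_{n,2}/n$ | GDL (3) $N_{n,1}/n$ | GDL (3) $N_{n,2}/n$ | DBCD $N_{n,1}/n$ | DBCD $N_{n,2}/n$ |
|---|---|---|---|---|---|---|---|---|---|---|
| .8 | .8 | .8 | .333 | .333 | .333 (.017) | .333 (.017) | .333 (.018) | .333 (.018) | .333 (.025) | .333 (.025) |
| .8 | .7 | .6 | .357 | .334 | .354 (.020) | .334 (.021) | .354 (.021) | .334 (.022) | .357 (.027) | .334 (.028) |
| .7 | .6 | .5 | .361 | .334 | .358 (.024) | .334 (.024) | .357 (.025) | .334 (.025) | .360 (.030) | .334 (.030) |
| .7 | .5 | .5 | .372 | .314 | .367 (.025) | .317 (.026) | .366 (.025) | .317 (.027) | .371 (.031) | .315 (.032) |
| .5 | .3 | .2 | .415 | .322 | .398 (.034) | .322 (.036) | .396 (.034) | .323 (.036) | .410 (.040) | .321 (.042) |

$(\lambda_0, \lambda_1, \lambda_2, \lambda_3) = (1,1,1,1)$

| $P_1$ | $P_2$ | $P_3$ | $v_1$ | $v_2$ | GDL (2) $N_{n,1}/n$ | GDL (2) $N_{n,2}/n$ | GDL (3) $N_{n,1}/n$ | GDL (3) $N_{n,2}/n$ | DBCD $N_{n,1}/n$ | DBCD $N_{n,2}/n$ |
|---|---|---|---|---|---|---|---|---|---|---|
| .8 | .8 | .8 | .333 | .333 | .333 (.017) | .333 (.017) | .333 (.018) | .333 (.018) | .333 (.025) | .333 (.025) |
| .8 | .7 | .6 | .357 | .334 | .354 (.020) | .334 (.021) | .354 (.021) | .334 (.022) | .357 (.027) | .334 (.028) |
| .7 | .6 | .5 | .361 | .334 | .357 (.024) | .334 (.024) | .356 (.024) | .334 (.025) | .360 (.030) | .334 (.031) |
| .7 | .5 | .5 | .372 | .314 | .367 (.025) | .317 (.026) | .365 (.025) | .317 (.027) | .371 (.031) | .315 (.032) |
| .5 | .3 | .2 | .415 | .322 | .397 (.034) | .323 (.036) | .396 (.034) | .323 (.036) | .409 (.041) | .321 (.042) |

$(\lambda_0, \lambda_1, \lambda_2, \lambda_3) = (1,5,1,1)$

| $P_1$ | $P_2$ | $P_3$ | $v_1$ | $v_2$ | GDL (2) $N_{n,1}/n$ | GDL (2) $N_{n,2}/n$ | GDL (3) $N_{n,1}/n$ | GDL (3) $N_{n,2}/n$ | DBCD $N_{n,1}/n$ | DBCD $N_{n,2}/n$ |
|---|---|---|---|---|---|---|---|---|---|---|
| .8 | .8 | .8 | .333 | .333 | .332 (.016) | .334 (.017) | .332 (.018) | .334 (.018) | .333 (.025) | .333 (.025) |
| .8 | .7 | .6 | .357 | .334 | .353 (.020) | .335 (.021) | .352 (.021) | .335 (.022) | .356 (.028) | .334 (.028) |
| .7 | .6 | .5 | .361 | .334 | .356 (.024) | .335 (.024) | .355 (.024) | .335 (.025) | .360 (.030) | .334 (.031) |
| .7 | .5 | .5 | .372 | .314 | .365 (.024) | .317 (.026) | .364 (.025) | .318 (.027) | .371 (.031) | .315 (.032) |
| .5 | .3 | .2 | .415 | .322 | .397 (.034) | .322 (.036) | .396 (.034) | .323 (.035) | .410 (.041) | .321 (.042) |

Note: The simulated standard deviations are given in parentheses.

GDL (2): $a_1 = 2v_1$, $a_2 = 2v_2$, $a_3 = 2v_3$.

GDL (3): $a_1 = 2\sqrt{P_1}$, $a_2 = 2\sqrt{P_2}$, $a_3 = 2\sqrt{P_3}$.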
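Similarly, the $v_1$ and $v_2$ columns of Table 2 agree to three decimals with $v_k \propto \sqrt{P_k}$, which we take (as an inference from the table, not from the text of (4)) to be the form of target (4). Under that reading, the GDL (3) coefficients $2\sqrt{P_k}$ are the GDL (2) coefficients $2v_k$ multiplied by the common factor $\sum_j \sqrt{P_j}$. A short check, with hypothetical helper names:

```python
import math


def sqrt_target(p):
    """Allocation proportions v_k proportional to sqrt(P_k).

    Assumed form of target (4), inferred from the v1 and v2 columns of Table 2.
    """
    w = [math.sqrt(pk) for pk in p]
    total = sum(w)
    return [x / total for x in w]


# Success probabilities (P1, P2, P3) of the five scenarios in Table 2.
SCENARIOS = [(0.8, 0.8, 0.8), (0.8, 0.7, 0.6), (0.7, 0.6, 0.5),
             (0.7, 0.5, 0.5), (0.5, 0.3, 0.2)]

for p in SCENARIOS:
    v = sqrt_target(p)
    a_gdl2 = [2 * vk for vk in v]               # GDL (2): a_k = 2 v_k
    a_gdl3 = [2 * math.sqrt(pk) for pk in p]    # GDL (3): a_k = 2 sqrt(P_k)
    ratio = a_gdl3[0] / a_gdl2[0]               # equals sum_j sqrt(P_j) for every k
    print(p, f"v1 = {v[0]:.3f}", f"v2 = {v[1]:.3f}", f"ratio = {ratio:.3f}")
```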


according to the most current estimates of the success probabilities. Hence, if too many treatment balls are added to the urn during the allocation process, the immigration ball will not be able to play its role, because its chance of being selected will remain relatively small.
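To see the immigration ball at work, the sketch below simulates Ivanova's original DL urn, the special case that the GDL rule generalizes, with immediate binary responses. It follows the rule as usually stated for Ivanova (2003): drawing the immigration ball (type 0) returns it and adds one ball of every treatment type; drawing a treatment ball assigns the subject to that treatment, and the ball is returned after a success and dropped after a failure. The initial urn, sample size and seed are our own choices, and the code does not implement the GDL updating in (7). For large $n$ the allocation proportions should be close to $v_k \propto 1/q_k$.

```python
import random


def simulate_dl(p, n, seed=0, immigration_balls=1, initial_balls=1):
    """Simulate Ivanova's drop-the-loser urn with immediate binary responses.

    p : success probabilities (P_1, ..., P_K).
    n : number of subjects to allocate.
    Returns (counts, immigration_draws), where counts[k] is the number of
    subjects assigned to treatment k + 1.
    """
    rng = random.Random(seed)
    K = len(p)
    # urn[0] counts immigration balls; urn[1], ..., urn[K] count treatment balls.
    urn = [immigration_balls] + [initial_balls] * K
    counts = [0] * K
    immigration_draws = 0
    while sum(counts) < n:
        # Draw one ball with probability proportional to the current composition.
        ball = rng.choices(range(K + 1), weights=urn)[0]
        if ball == 0:
            # Immigration ball: it is replaced, and one ball of each treatment type is added.
            immigration_draws += 1
            for k in range(1, K + 1):
                urn[k] += 1
        else:
            # Treatment ball: assign the subject; the ball is dropped after a failure.
            counts[ball - 1] += 1
            if rng.random() >= p[ball - 1]:
                urn[ball] -= 1
    return counts, immigration_draws


if __name__ == "__main__":
    counts, draws = simulate_dl((0.8, 0.7, 0.6), n=20000, seed=1)
    print([c / sum(counts) for c in counts], draws)
```

For $(P_1, P_2, P_3) = (.8, .7, .6)$ the proportions should land near $(0.462, 0.308, 0.231)$, the DL target in Table 1; the immigration ball is the only source of new treatment balls, so without it the urn would eventually lose all of them.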

6. Conclusion

In this paper, the GDL rule has been extended to multi-treatment clinical trials. The asymptotic properties established in Section 4, namely strong consistency and asymptotic normality, provide a theoretical basis for applying the GDL rule.

The GDL rule can be regarded as a modification of traditional urn models, which normally update the urn composition without using the entire history of patient responses. In the GDL rule, the estimated success probabilities of all treatments, updated at each allocation stage, together with the immigration balls, play an important role in driving the allocation proportions towards the desired targets.

Finally, as the simulation study indicates, the GDL rule works well with delayed responses: in Tables 1 and 2 its simulated allocation proportions under delayed responses are close to those under immediate responses. This is a useful feature, because in clinical studies patient responses often cannot be observed immediately.

Acknowledgements

Special thanks go to the anonymous referees for their constructive comments, which led to a much improved version of the paper.

Appendix

Proof of Lemma 4.1. Recall that $\mathbf Z_m = (Z_{m,0}, Z_{m,1}, \ldots, Z_{m,K})$ are the numbers of balls in the urn after $m$ draws, $\mathbf Z_m^+ = (Z_{m,0}^+, Z_{m,1}^+, \ldots, Z_{m,K}^+)$ are their non-negative parts and $|\mathbf Z_m^+| = Z_{m,0}^+ + Z_{m,1}^+ + \cdots + Z_{m,K}^+$. Because every immigration ball is replaced, $Z_{m,0}^+ = Z_{m,0} = Z_{0,0}$ for all $m$. For any given $\varepsilon > 0$ small enough, note that

$$
\begin{aligned}
P\{X_{i,0} = \cdots = X_{i+[m^\varepsilon],0} = 1\}
&= E\left[ I\{X_{i,0} = \cdots = X_{i+[m^\varepsilon]-1,0} = 1\}\, \frac{Z_{0,0}}{|\mathbf Z^+_{i+[m^\varepsilon]-1}|} \right] \\
&\le \frac{Z_{0,0}}{Z_{0,0} + Ka([m^\varepsilon]-1)}\, P\{X_{i,0} = \cdots = X_{i+[m^\varepsilon]-1,0} = 1\},
\end{aligned}
$$

because at each stage from stage $i$ to $i+[m^\varepsilon]-1$ at least $Ka$ balls are added to the urn by (7) and no ball is removed. It follows that

$$P\{X_{i,0} = \cdots = X_{i+[m^\varepsilon],0} = 1\} \le \prod_{j=1}^{[m^\varepsilon]} \frac{Z_{0,0}}{Z_{0,0}+Ka(j-1)} \le C\exp\{-m^\varepsilon\}.$$

Zhang et al. (2007) showed that

$$E[I_k(l,m)] \le C m^{-\gamma(1-\varepsilon)} + \sum_{i=l}^{l+m} P\{X_{i,0} = \cdots = X_{i+[m^\varepsilon],0} = 1\}.$$

It follows that

$$E[I_k(l,m)] \le C m^{-\gamma(1-\varepsilon)} + C m \exp\{-m^\varepsilon\} \le C m^{-\gamma(1-\varepsilon)}.$$

Hence

$$\sum_{j=m}^{\infty} E[I_k(i,j)] \le C m^{-(\gamma-1-\varepsilon)}$$

for any $\varepsilon > 0$ and all $m$, $i$ and $k = 1, \ldots, K$. With the above inequality, the proof is now the same as that of Lemma 5.1 of Zhang et al. (2007).
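The bound $\prod_{j=1}^{[m^\varepsilon]} Z_{0,0}/(Z_{0,0}+Ka(j-1)) \le C\exp\{-m^\varepsilon\}$ in the proof of Lemma 4.1 holds because the factors tend to zero, so the product decays faster than any geometric sequence, and $[m^\varepsilon] \ge m^\varepsilon - 1$. A numerical sketch in log space, for the illustrative values $Z_{0,0} = 1$, $K = 3$, $a = 1$ (our choice, not values from the paper):

```python
import math


def run_bound(L, z00, K, a):
    """prod_{j=1}^{L} z00 / (z00 + K*a*(j - 1)), computed in log space.

    This is the bound on the probability of L + 1 consecutive immigration
    draws used in the proof of Lemma 4.1.
    """
    log_prod = sum(math.log(z00 / (z00 + K * a * (j - 1))) for j in range(1, L + 1))
    return math.exp(log_prod)


# Illustrative values (not from the paper): Z_{0,0} = 1, K = 3, a = 1.
for L in (5, 10, 20, 40):
    b = run_bound(L, z00=1.0, K=3, a=1.0)
    # b * e^L decreases in L here, so b <= C * exp(-L) with C = e (the L = 1 value).
    print(L, b, b * math.exp(L))
```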

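Proof of Lemma 4.2. According to (7) and (10), we have

$$
\begin{aligned}
Z_{m,k} &= Z_{m-1,k} + \frac{a_{m,k} Z_{m-1,0} - q_k Z^+_{m-1,k}}{|\mathbf Z^+_{m-1}|} + U_{m,k} - R_{m,k} \\
&\le Z_{m-1,k} + \frac{a Z_{0,0} - q Z^+_{m-1,k}}{|\mathbf Z^+_{m-1}|} + U_{m,k} - R_{m,k},
\end{aligned}
$$

where $q = \min_k(q_k)$. Then,

$$
\begin{aligned}
|\mathbf Z_m| &\le |\mathbf Z_{m-1}| + \frac{K a Z_{0,0} - q|\mathbf Z^+_{m-1}|}{|\mathbf Z^+_{m-1}|} + U_m - R_m \\
&\le |\mathbf Z_{m-1}| + U_m - R_m - q/2 \quad \text{if } |\mathbf Z^+_{m-1}| \ge 2KaZ_{0,0}/q.
\end{aligned}
\tag{22}
$$

Here, $|\mathbf Z_m| = \sum_{k=1}^K Z_{m,k}$, $R_m = \sum_{k=1}^K R_{m,k}$, $U_m = \sum_{k=1}^K U_{m,k}$ and

$$U_{m,k} = a_{m,k}\big(X_{m,0} - E[X_{m,0} \mid \mathcal F_{m-1}]\big) - \big(X_{m,k}D_{m,k} - E[X_{m,k}D_{m,k} \mid \mathcal F_{m-1}]\big),$$

where $\mathcal F_m = \sigma(\mathbf X_1, \ldots, \mathbf X_m, \mathbf Z_1, \ldots, \mathbf Z_m, \boldsymbol\xi_1, \ldots, \boldsymbol\xi_m)$ is the history sigma field. Let $C_0 = 2KaZ_{0,0}/q$ and $S_m = \max\{1 \le j \le m : |\mathbf Z^+_j| < C_0\}$, where $\max(\emptyset) = 0$. Then, according to (22),

$$
\begin{aligned}
|\mathbf Z_m| &\le |\mathbf Z_{m-1}| + U_m - R_m + R_{m-1} - q/2 \\
&\le \cdots \le |\mathbf Z^+_{S_m}| + U_{S_m+1} + \cdots + U_m - R_m + R_{S_m+1} - (m - S_m)q/2 \\
&\le |\mathbf Z^+_0| \vee C_0 + U_m - U_{S_m} + \max_{i \le m} |R_{i,k}| - (m - S_m)q/2.
\end{aligned}
$$

Due to the law of the iterated logarithm for martingales,

$$|U_m - U_{S_m}| \le 2\max_{i \le m} |U_i| = O\big(\sqrt{m \log\log m}\big) \quad \text{a.s.}$$

If $m - S_m \ge \sqrt{m \log m}$, then for $m$ large enough

$$|\mathbf Z_m| \le O\big(\sqrt{m \log\log m}\big) - q\sqrt{m \log m}/2 < -(K+1),$$

which is impossible because each $Z_{m,k} > -1$. If $m - S_m < \sqrt{m \log m}$, then

$$|\mathbf Z_m| \le |\mathbf Z^+_0| \vee C_0 + 2\max_{i \le \sqrt{m\log m}} \big|U_{m-[\sqrt{m\log m}]+i} - U_{m-[\sqrt{m\log m}]}\big| + \max_{i \le m} |R_i|.$$

It is obvious that

$$E\Big[\max_{i \le \sqrt{m\log m}} \big|U_{m-[\sqrt{m\log m}]+i} - U_{m-[\sqrt{m\log m}]}\big|^p\Big] = O\big((m \log m)^{p/4}\big) \quad \forall p > 0,$$

which implies that

$$\sum_m P\Big(\max_{i \le \sqrt{m\log m}} \big|U_{m-[\sqrt{m\log m}]+i} - U_{m-[\sqrt{m\log m}]}\big| \ge m^{1/4+\varepsilon}\Big) \le C\sum_m \frac{(\log m)^{p/4}}{m^{p\varepsilon}} < \infty$$

if $p$ is chosen to be large enough, which implies that

$$\max_{i \le \sqrt{m\log m}} \big|U_{m-[\sqrt{m\log m}]+i} - U_{m-[\sqrt{m\log m}]}\big| = o\big(m^{1/4+\varepsilon}\big) \quad \text{a.s. } \forall \varepsilon > 0.$$

The proof of (13) is now complete due to (12).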
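The elementary step behind (22) is that the drift term is at most $-q/2$ once the urn is large enough. Summing the bound on $Z_{m,k}$ over $k = 1, \ldots, K$ gives the numerator $KaZ_{0,0} - q|\mathbf Z^+_{m-1}|$, and then

$$\frac{KaZ_{0,0} - q|\mathbf Z^+_{m-1}|}{|\mathbf Z^+_{m-1}|} = \frac{KaZ_{0,0}}{|\mathbf Z^+_{m-1}|} - q \le \frac{q}{2} - q = -\frac{q}{2} \quad \text{whenever } |\mathbf Z^+_{m-1}| \ge \frac{2KaZ_{0,0}}{q} = C_0,$$

which is why $S_m$ is defined through the threshold $C_0$.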

Proof of Remark 4.1. Let $N^{obs}_{m,k}$ be the number of observed outcomes on treatment $k$ at step $m$. Then, with probability one, $N^{obs}_{m,k} \to \infty$ implies $\hat P_{m,k} \to P_k$ (cf. Melfi and Page, 2000; Hu and Zhang, 2004). It is, however, obvious that the sequence $\{\hat P_{m,k}\}$ takes finitely many values if $N^{obs}_{m,k}$ is bounded. Hence, the condition given in (7) is satisfied. Then, by Lemmas 4.1, 4.2 and (17),

$$q_k N^*_{m,k} = \sum_{i=1}^m a_{i,k} X_{i,0} + o(m) \ge a N^*_{m,0} + o(m) \quad \text{a.s.}, \quad k = 1, 2, \ldots, K.$$

Noticing that $\sum_{j=0}^K N^*_{m,j} = m$, we conclude that

$$\frac{N^*_{m,k}}{m} \ge \frac{a}{q_k}\,\frac{N^*_{m,0}}{m} + o(1) \ge \frac{a}{q_k}\Big(1 + \sum_{j=1}^K \frac{a}{q_j}\Big)^{-1} + o(1) \quad \text{a.s.}$$

It follows that $N^*_{m,k} \to \infty$ a.s. and $N^*_{m,k} \ge c_0 m$ if $m$ is large enough, $k = 1, 2, \ldots, K$. Now, with the argument given in Lemma 4.1, the sum of the observed outcomes on treatment $k$ at step $m$ is

$$\sum_{j=1}^m \sum_{i=1}^j I_k(i, j-i) X_{i,k}\xi_{i,k} = \sum_{i=1}^m X_{i,k}\xi_{i,k} - \sum_{i=1}^m \sum_{j=m+1}^\infty I_k(i, j-i) X_{i,k}\xi_{i,k} = \sum_{i=1}^m X_{i,k}\xi_{i,k} + o\big(m^{1/\gamma+\varepsilon}\big) \quad \text{a.s.},$$

where $\xi_{m,k}$ ($= 0$ or $1$) is the outcome of a subject assigned to treatment $k$ at step $m$, and also

$$N^{obs}_{m,k} = \sum_{j=1}^m \sum_{i=1}^j I_k(i, j-i) X_{i,k} = N^*_{m,k} - \sum_{i=1}^m \sum_{j=m+1}^\infty I_k(i, j-i) X_{i,k} = N^*_{m,k} + o\big(m^{1/\gamma+\varepsilon}\big) \quad \text{a.s.}$$

It follows that

$$\hat P_{m,k} = \frac{\sum_{i=1}^m X_{i,k}\xi_{i,k} + o(m^{1/\gamma+\varepsilon})}{N^*_{m,k} + o(m^{1/\gamma+\varepsilon})} = \frac{\sum_{i=1}^m X_{i,k}\xi_{i,k}}{N^*_{m,k}}\big(1 + o(m^{1/\gamma+\varepsilon-1})\big) = P_k + O\bigg(\sqrt{\frac{\log\log N^*_{m,k}}{N^*_{m,k}}}\bigg) = P_k + O\bigg(\sqrt{\frac{\log\log m}{m}}\bigg) \quad \text{a.s.}$$

Hence we conclude that Assumption 4.3 and the condition given in (8) are satisfied.
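Remark 4.1 rests on the gap $N^*_{m,k} - N^{obs}_{m,k}$ between assigned and observed outcomes being negligible relative to $m$. The sketch below illustrates this in a deliberately simple, hypothetical setting that is not the GDL design: subject $i$ enters at time $i$, is assigned uniformly at random, and its binary outcome becomes available after an independent exponential delay with mean 1. As in the remark, $\hat P_{m,k}$ is formed from observed outcomes only. The function name and the delay model are our assumptions.

```python
import random


def observed_vs_assigned(p, m, seed=0, mean_delay=1.0):
    """Hypothetical delayed-response setting (not the GDL allocation).

    Subject i enters at time i, is assigned one of the K treatments uniformly
    at random, and its binary outcome becomes available at time i + Exp(mean).
    Returns (n_star, n_obs, p_hat) at time m: assigned counts N*_{m,k},
    observed counts N^obs_{m,k}, and success rates among observed outcomes.
    """
    rng = random.Random(seed)
    K = len(p)
    n_star, n_obs, succ_obs = [0] * K, [0] * K, [0] * K
    for i in range(1, m + 1):
        k = rng.randrange(K)
        outcome = 1 if rng.random() < p[k] else 0
        n_star[k] += 1
        # The outcome counts as observed only if its delay has elapsed by time m.
        if i + rng.expovariate(1.0 / mean_delay) <= m:
            n_obs[k] += 1
            succ_obs[k] += outcome
    p_hat = [s / n if n else None for s, n in zip(succ_obs, n_obs)]
    return n_star, n_obs, p_hat


n_star, n_obs, p_hat = observed_vs_assigned((0.8, 0.7, 0.6), m=30000, seed=2)
print(n_star, n_obs, p_hat)
```

With light-tailed delays such as these, typically only the last few subjects are still unobserved at time $m$, so $N^{obs}_{m,k}$ and $N^*_{m,k}$ differ by a handful of subjects.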

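Proof of Theorem 4.2. According to (17) and (21), for some $\delta > 0$,

$$q_k N^*_{m,k} - a_k N^*_{m,0} = M_{m,k} + o\big(m^{1/2-\delta}\big) \quad \text{a.s.}, \quad k = 1, \ldots, K.$$

Note that $N_{n,k} = N^*_{u_n,k}$, $k = 1, \ldots, K$. It follows that

$$N_{n,k} - \frac{a_k}{q_k} N^*_{u_n,0} = \frac{1}{q_k} M_{u_n,k} + o\big(n^{1/2-\delta}\big) \quad \text{a.s.}, \quad k = 1, \ldots, K.$$

Taking the summation over $k$ yields

$$n - s N^*_{u_n,0} = \sum_{k=1}^K \frac{1}{q_k} M_{u_n,k} + o\big(n^{1/2-\delta}\big) \quad \text{a.s.}$$

Then

$$N_{n,k} - n v_k = \frac{1}{q_k} M_{u_n,k} - v_k \sum_{j=1}^K \frac{1}{q_j} M_{u_n,j} + o\big(n^{1/2-\delta}\big) \quad \text{a.s.}, \quad k = 1, \ldots, K. \tag{23}$$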
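Equation (23) also identifies the limiting allocation in terms of the $a_k$ appearing in it. Since $\sum_k N_{n,k} = n$, the constant in the summation step must be $s = \sum_{k=1}^K a_k/q_k$, and substituting $N^*_{u_n,0}$ back gives $v_k = (a_k/q_k)/s$; conversely, in this notation, coefficients proportional to $q_k v_k$ lead to a prescribed $v$. A small sketch of both directions (function names are ours):

```python
def limiting_allocation(a, q):
    """v_k = (a_k / q_k) / s with s = sum_j a_j / q_j, as implied by (23)."""
    ratios = [ak / qk for ak, qk in zip(a, q)]
    s = sum(ratios)
    return [r / s for r in ratios]


def coefficients_for_target(v, q, scale=1.0):
    """Coefficients a_k = scale * q_k * v_k; every positive scale yields the same v."""
    return [scale * qk * vk for qk, vk in zip(q, v)]


q = [0.2, 0.3, 0.4]                          # failure probabilities q_k = 1 - P_k
print(limiting_allocation([1.0, 1.0, 1.0], q))   # equal a_k: v_k proportional to 1/q_k
a = coefficients_for_target([0.5, 0.3, 0.2], q, scale=2.0)
print(a, limiting_allocation(a, q))
```

With equal $a_k$ the map reduces to $v_k \propto 1/q_k$, the DL allocation.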


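Note that $\{\mathbf M_n, \mathcal A_n; n \ge 1\}$ is a martingale and $\{u_n\}$ is a non-decreasing sequence of stopping times. Hence $\{\mathbf M_{u_n}, \mathcal A_{u_n}; n \ge 1\}$ is also a martingale. According to (14), (15) and (20),

$$
\begin{aligned}
\sum_{i=1}^n E\big[(\Delta\mathbf M_{u_i})'\Delta\mathbf M_{u_i} \mid \mathcal A_{u_{i-1}}\big] &= \sum_{i=1}^{u_n} E\big[(\Delta\mathbf M_i)'\Delta\mathbf M_i \mid \mathcal A_{i-1}\big] \\
&= \mathrm{diag}\big(N^*_{u_n,1}\sigma_1^2, \ldots, N^*_{u_n,K}\sigma_K^2\big) = \mathrm{diag}\big(N_{n,1}\sigma_1^2, \ldots, N_{n,K}\sigma_K^2\big) \\
&= n\,\mathrm{diag}\big(v_1\sigma_1^2, \ldots, v_K\sigma_K^2\big) + O\big(\sqrt{n\log\log n}\big) \quad \text{a.s.}
\end{aligned}
$$

Hence, by a refinement of the theorem of Eberlein (1986) (cf. Zhang, 2004), we can define a $K$-dimensional standard Brownian motion $\mathbf W(t)$ such that

$$\mathbf M_{u_n} = \mathbf W(n)\,\mathrm{diag}\big(\sigma_1\sqrt{v_1}, \ldots, \sigma_K\sqrt{v_K}\big) + o\big(n^{1/2-\delta}\big) \quad \text{a.s. for some } \delta > 0. \tag{24}$$

Combining (23) and (24) completes the proof of Theorem 4.2.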
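Together, (23) and (24) determine the covariance of $n^{-1/2}(N_{n,k} - nv_k)_{k=1}^K$. Writing $Y_k = M_{u_n,k}/q_k$, (24) makes the $Y_k$ asymptotically independent with variances $n v_k\sigma_k^2/q_k^2$, and (23) reads $N_{n,k} - nv_k \approx Y_k - v_k\sum_j Y_j$. The covariance is therefore $(I - v\mathbf 1^\top)D(I - \mathbf 1 v^\top)$ with $D = \mathrm{diag}(v_k\sigma_k^2/q_k^2)$. The sketch computes this matrix; the $\sigma_k^2$ come from (20), outside this excerpt, so the numbers in the example call are placeholders.

```python
def asymptotic_covariance(v, q, sigma2):
    """Sigma such that (N_n - n v) / sqrt(n) is approximately N(0, Sigma), from (23)-(24).

    v      : allocation target (v_1, ..., v_K), summing to one.
    q      : failure probabilities q_k = 1 - P_k.
    sigma2 : the variances sigma_k^2 in (24); must be supplied by the user.
    Sigma = (I - v 1^T) D (I - 1 v^T), with D = diag(v_k sigma_k^2 / q_k^2).
    """
    K = len(v)
    d = [vk * s2 / qk ** 2 for vk, s2, qk in zip(v, sigma2, q)]
    total = sum(d)
    return [[(d[k] if k == l else 0.0)
             - v[k] * d[l] - v[l] * d[k] + v[k] * v[l] * total
             for l in range(K)]
            for k in range(K)]


# Placeholder inputs, chosen only to exercise the formula.
cov = asymptotic_covariance([0.5, 0.3, 0.2], [0.2, 0.3, 0.4], [1.0, 2.0, 0.5])
for row in cov:
    print([round(x, 4) for x in row])
```

Each row of the matrix sums to zero, reflecting the constraint $\sum_k N_{n,k} = n$.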

References

Bai, Z.D., Hu, F., Rosenberger, W.F., 2002. Asymptotic properties of adaptive designs for clinical trials with delayed response. Ann. Statist. 30, 122–139.

Durham, S.D., Flournoy, N., Li, W., 1998. A sequential design for maximizing the probability for a favorable response. Canadian J. Statist. 26, 479–495.

Eberlein, E., 1986. On strong invariance principles under dependence. Ann. Probab. 14, 260–270.

Hu, F., Rosenberger, W.F., 2003. Optimality, variability, power: evaluating response-adaptive randomization procedures for treatment comparisons. J. Amer. Statist. Assoc. 98, 671–678.

Hu, F., Zhang, L.-X., 2004. Asymptotic properties of doubly adaptive biased coin designs for multi-treatment clinical trials. Ann. Statist. 32, 268–301.

Ivanova, A.V., 2003. A play-the-winner-type urn model with reduced variability. Metrika 58, 1–13.

Ivanova, A.V., Flournoy, N., 2001. A birth and death urn for ternary outcomes: stochastic processes applied to urn models. In: Charalambides, C., Koutras, M.V., Balakrishnan, N. (Eds.), Probability and Statistical Models with Applications. Chapman and Hall/CRC, Boca Raton, pp. 583–600.

Ivanova, A.V., Rosenberger, W.F., Durham, S.D., Flournoy, N., 2000. A birth and death urn for randomized clinical trials: asymptotic methods. Sankhya B 62, 104–118.

Melfi, V.F., Page, C., 2000. Estimation after adaptive allocation. J. Statist. Plann. Inference 87, 353–363.

Rosenberger, W.F., Hu, F., 2004. Maximizing power and minimizing treatment failures. Clinical Trials 1, 141–147.

Rosenberger, W.F., Lachin, J.M., 2002. Randomization in Clinical Trials: Theory and Practice. Wiley, New York.

Rosenberger, W.F., Stallard, N., Ivanova, A.V., Harper, C.N., Ricks, M.L., 2001. Optimal adaptive designs for binary response trials. Biometrics 57, 909–913.

Wei, L.J., 1979. The generalized Polya’s urn design for sequential medical trials. Ann. Statist. 7, 291–296.

Wei, L.J., Durham, S.D., 1978. The randomized play-the-winner rule in medical trials. J. Amer. Statist. Assoc. 73, 840–843.

Zhang, L.-X., 2004. Strong approximations of martingale vectors and their applications in Markov-chain adaptive designs. Acta Math. Appl. Sinica, English Series 20 (2), 337–352.

Zhang, L.-X., Chan, W.S., Cheung, S.H., Hu, F., 2007. Generalized drop-the-loser urn for clinical trials with delayed responses. Statist. Sinica, in press.
