BU7527 - Mathematics of Contingent Claims Mike Peardon Michaelmas Term, 2015

BU7527 - Mathematics of Contingent Claims

Mike Peardon

School of Mathematics

Trinity College Dublin

Michaelmas Term, 2015

Mike Peardon (TCD)

BU7527

Michaelmas Term, 2015

Introduction to Monte Carlo

Basic Monte Carlo Estimation

If we generate a large ensemble of $N$ uniform variate random numbers

$$e_X = \{X_1, X_2, X_3, \dots, X_N\}$$

and then evaluate a function $f$ at each point to give the ensemble

$$e_F = \{F_1 = f(X_1),\; F_2 = f(X_2),\; F_3 = f(X_3),\; \dots,\; F_N = f(X_N)\}$$

then it is easy to show that

$$E[\bar{F}^{(N)}] = E[F] = \int_0^1 dx\, f(x)$$

This is Monte Carlo! We have a means of reliably computing (to arbitrary accuracy) any well-defined integral in a finite range.

Infinite ranges can often be computed by changes of variable.
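As an illustration of the change-of-variable remark, the sketch below maps an integral over $[0, \infty)$ onto $[0, 1)$ with the substitution $x = u/(1-u)$. The substitution, the test integrand $e^{-x}$ and the helper names `mc_mean` and `half_line_integrand` are choices made for this example only; they are not taken from the lecture.

```python
import math
import random


def mc_mean(f, n, rng):
    """Sample mean of f over n uniform variates in [0, 1)."""
    return sum(f(rng.random()) for _ in range(n)) / n


def half_line_integrand(g):
    """Map the integral of g over [0, inf) onto [0, 1) using x = u / (1 - u)."""
    def h(u):
        x = u / (1.0 - u)
        jacobian = 1.0 / (1.0 - u) ** 2  # dx/du for this substitution
        return g(x) * jacobian
    return h


rng = random.Random(2015)  # arbitrary seed, for reproducibility only
estimate = mc_mean(half_line_integrand(lambda x: math.exp(-x)), 20_000, rng)
print(estimate)  # the exact value of the integral of exp(-x) over [0, inf) is 1
```

The transformed integrand stays bounded here, which keeps the variance finite; other substitutions may suit other integrands better.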

Basic Monte Carlo Estimation (2)

The variance of our estimator is

$$\nu[\bar{F}^{(N)}] = \frac{1}{N}\,\nu[F] = \frac{1}{N}\left\{\int_0^1 dx\, f(x)^2 - \left(\int_0^1 dx\, f(x)\right)^2\right\}$$

For large $N$, the sample mean is normally distributed. Can make (reliable) probabilistic statements about range of true mean.

For estimator to be useful, we must estimate this uncertainty. Can be done by Monte Carlo too!

So we also need to compute the ensemble

$$e_{F'} = \{F'_1 = f^2(X_1),\; F'_2 = f^2(X_2),\; \dots,\; F'_N = f^2(X_N)\}$$

And then the uncertainty in our determination is

$$\sigma_F = \sqrt{\frac{E[F'] - E[F]^2}{N}}$$

Central limit theorem: probability $I$ lies in the range $[E[\bar{F}] - \sigma_F,\; E[\bar{F}] + \sigma_F]$ is 68%
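The estimator and its uncertainty can be accumulated in a single pass over the samples. The following is a minimal sketch, assuming Python's standard `random` generator and a test integrand $f(x) = x^2$ chosen only because its integral (1/3) is known; the function name `mc_estimate` is hypothetical.

```python
import math
import random


def mc_estimate(f, n, rng):
    """Return (sample mean, sigma_F) for the integral of f over [0, 1]."""
    total = 0.0     # accumulates F_i = f(X_i)
    total_sq = 0.0  # accumulates F'_i = f(X_i)^2
    for _ in range(n):
        fx = f(rng.random())
        total += fx
        total_sq += fx * fx
    mean = total / n
    mean_sq = total_sq / n
    variance = max(mean_sq - mean * mean, 0.0)  # guard against round-off
    return mean, math.sqrt(variance / n)


rng = random.Random(1234)  # arbitrary seed
mean, sigma = mc_estimate(lambda x: x * x, 100_000, rng)
print(f"{mean:.5f} +/- {sigma:.5f}")  # exact integral is 1/3
```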

Basic Monte Carlo Estimation (3)

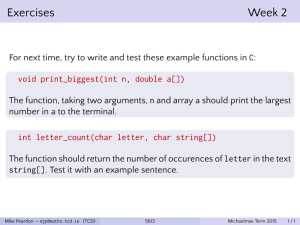

Simple example Monte Carlo calculation

$$I = \int_0^1 dx\, \frac{1-x}{\sqrt{x}}$$

The exact answer is 4/3

| $N$ | $E[\bar{F}^{(N)}]$ | $\sigma_F$ |
|---|---|---|
| 100 | 1.476353 | 0.200139 |
| 1,000 | 1.279416 | 0.063977 |
| 10,000 | 1.300104 | 0.022205 |
| 100,000 | 1.362455 | 0.018537 |
| 1,000,000 | 1.336083 | 0.003774 |
| 10,000,000 | 1.334738 | 0.001661 |
| 100,000,000 | 1.333639 | 0.000415 |
| 1,000,000,000 | 1.333364 | 0.000146 |

Error falls by a factor ≈ 10 each time $N$ is increased by a factor of 100

Basic Monte Carlo Estimation (4)

Simple example Monte Carlo calculation (2)

$$I = \int_0^1 dx\, \frac{1-x}{\sqrt{x}} = \frac{4}{3}$$

Repeat 10 times with $N = 1{,}000{,}000$ and different RNG seeds:

| Seed | $E[\bar{F}^{(N)}]$ | $\sigma_F$ | $I \in [E[\bar{F}] - \sigma_F,\, E[\bar{F}] + \sigma_F]$? |
|---|---|---|---|
| 1234 | 1.336083 | 0.003774 | Y |
| 2468 | 1.333846 | 0.003235 | Y |
| 3702 | 1.331265 | 0.003223 | Y |
| 4936 | 1.334344 | 0.003652 | Y |
| 6170 | 1.335615 | 0.004920 | Y |
| 7404 | 1.339609 | 0.004288 | N |
| 8638 | 1.329398 | 0.003275 | N |
| 9872 | 1.331647 | 0.003469 | Y |
| 11106 | 1.329143 | 0.003333 | N |
| 12340 | 1.336792 | 0.003724 | Y |

True answer is in the quoted range in 7 of the 10 trials.
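The repeated-seed experiment can be sketched as follows. This is an illustration only: it uses Python's built-in generator (so the numbers will not reproduce the table), a much smaller $N$ to keep the run short, and a hypothetical helper `mc_estimate`. Note also that $f(x)^2$ behaves like $1/x$ near $x = 0$, so the quoted $\sigma_F$ should itself be expected to fluctuate noticeably from run to run.

```python
import math
import random


def f(x):
    return (1.0 - x) / math.sqrt(x)


def mc_estimate(func, n, rng):
    """Return (sample mean, sigma_F) using n uniform variates in (0, 1]."""
    total = 0.0
    total_sq = 0.0
    for _ in range(n):
        # 1 - random() lies in (0, 1], which avoids dividing by zero at x = 0
        fx = func(1.0 - rng.random())
        total += fx
        total_sq += fx * fx
    mean = total / n
    variance = max(total_sq / n - mean * mean, 0.0)
    return mean, math.sqrt(variance / n)


EXACT = 4.0 / 3.0
N_SAMPLES = 20_000  # assumption: far fewer than the 1,000,000 used on the slide

results = []
for k in range(1, 11):
    seed = 1234 * k  # the seeds listed on the slide are multiples of 1234
    mean, sigma = mc_estimate(f, N_SAMPLES, random.Random(seed))
    inside = abs(mean - EXACT) <= sigma
    results.append((seed, mean, sigma, inside))
    print(f"{seed:6d}  {mean:.6f}  {sigma:.6f}  {'Y' if inside else 'N'}")

print(sum(r[3] for r in results), "of", len(results), "intervals contain 4/3")
```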

Variance reduction

Two different estimators can have different variances (e.g. $N = 100$ and $N = 1{,}000{,}000$ in the example above).

Lower variance means a smaller range for the true answer to lie in.

Different Monte Carlo estimators can be built for the same integral; choosing one with lower variance is called variance reduction.

Many different methods:

Antithetic variables

Stratified sampling

Control variates

Importance Sampling

Antithetic variables

Recall that if $X \in [0, 1]$ is uniform variate, then so is $\tilde{X} = 1 - X$

Two estimators with the same expectation: $F = f(X)$, $\tilde{F} = f(\tilde{X})$. These are antithetic stochastic variables.

$\nu[X + Y] = \nu[X] + \nu[Y] + 2\nu(X, Y)$, where $\nu(X, Y)$ is the covariance of $X$ and $Y$:

$$\nu(X, Y) = E[XY] - E[X]E[Y]$$

If $\nu(X, Y) < 0$, then the variance of the sum is reduced

These estimators are not independently distributed. To analyse the uncertainty, "fuse" them: $F^{(a)} = \frac{1}{2}(F + \tilde{F})$ and generate the ensemble of $F^{(a)}$ values.

Treat $e_{F^{(a)}} = \{F^{(a)}_1, F^{(a)}_2, \dots, F^{(a)}_N\}$ as iid.

In many examples, using $F^{(a)}$ leads to lower variance.

If the function is odd-symmetric about the mid-point of the integration range, $f(x) = -f(1 - x)$, the variance is zero (so is $I$!).

Antithetic variables (2)

Example: evaluate $I = \int_0^1 dx\, e^{0.1x} \sin 2\pi x$

The integrand is positive for $x \in [0, \frac{1}{2}]$, negative otherwise.

Antithetic variables should work well, since $f(x)$ and $f(1 - x)$ have opposite signs.

The correct answer is −0.01673423263 . . .

Using $N = 1{,}000{,}000$, a simple Monte Carlo estimator and the antithetic estimator give

| Estimator | $\bar{I}^{(10^6)}$ | $\sigma_I$ |
|---|---|---|
| Simple | -0.016046 | 0.000743 |
| Antithetic | -0.016716 | 0.000015 |

Both agree (within uncertainty) with true result.

Error is fifty times smaller for antithetic case.

Since the error falls like $1/\sqrt{N}$, this corresponds to a saving of a factor of about 2,500 in cost.
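A minimal sketch of the comparison on this slide, assuming Python's standard generator and a reduced sample size, so the numbers will differ from the table. The antithetic estimator uses half as many random numbers as the simple one, so both make the same number of function evaluations. The closed form used for `exact` comes from integrating by parts and is added here only as a check.

```python
import math
import random


def f(x):
    return math.exp(0.1 * x) * math.sin(2.0 * math.pi * x)


def mean_and_sigma(samples):
    """Sample mean and its standard error, treating the samples as iid."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / n
    return mean, math.sqrt(var / n)


n_evals = 20_000  # assumption: total function evaluations for each estimator
rng = random.Random(1234)  # arbitrary seed

simple = mean_and_sigma([f(rng.random()) for _ in range(n_evals)])

# Each antithetic sample F^(a) = (f(X) + f(1 - X)) / 2 costs two evaluations.
pairs = [rng.random() for _ in range(n_evals // 2)]
antithetic = mean_and_sigma([0.5 * (f(x) + f(1.0 - x)) for x in pairs])

# Closed form of the integral (added as a check, not from the slides).
exact = 2.0 * math.pi * (1.0 - math.exp(0.1)) / (0.01 + 4.0 * math.pi ** 2)

print("simple     %.6f +/- %.6f" % simple)
print("antithetic %.6f +/- %.6f" % antithetic)
print("exact      %.6f" % exact)
```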

Stratified Sampling

If $I = \int_0^1 dx\, f(x)$ then $I = \int_0^{1/2} dx\, f(x) + \int_{1/2}^1 dx\, f(x)$

What happens if we use $N/2$ samples to estimate the first half of the integral, $N/2$ samples to estimate the second half and add the two results?

Call the two sub-estimators $\bar{I}_A$ and $\bar{I}_B$, so

$$\bar{I}_A = \frac{1}{N/2} \sum_{i=1}^{N/2} f(X_i), \qquad X_i \in [0, \tfrac{1}{2}]$$

with the corresponding expression for $\bar{I}_B$. The definition implies

$$E[\bar{I}_A] = 2 \int_0^{1/2} dx\, f(x)$$

The factor of two comes from the normalisation of the probability density for a u.v. random number $\in [0, 1/2]$

Stratified Sampling (2)

Averaging these two sub-estimators gives an estimator for $I$;

$$\bar{I}_2 = \frac{1}{2}(\bar{I}_A + \bar{I}_B)$$

i.e. $E[\bar{I}_2] = I$.

The variance is given by

$$\nu[\bar{I}_2] = \frac{1}{4}\left(\nu[\bar{I}_A] + \nu[\bar{I}_B]\right)$$

$$\nu[\bar{I}_2] = \frac{1}{N}\left\{\int_0^1 dx\, f^2(x) - 2\left(\int_0^{1/2} dx\, f(x)\right)^2 - 2\left(\int_{1/2}^1 dx\, f(x)\right)^2\right\}$$

Now using the trick $(x + y)^2 + (x - y)^2 = 2(x^2 + y^2)$ gives

$$\nu[\bar{I}_2] = \nu[\bar{I}] - \frac{1}{N}\left(\int_0^{1/2} dx\, f(x) - \int_{1/2}^1 dx\, f(x)\right)^2$$

Stratified Sampling (3)

The variance is less than or equal to that of the simple MC estimator

The biggest improvements occur when different regions contribute opposing signs to the integral.

Example: evaluate $I = \int_0^1 dx\, e^{0.1x} \sin 2\pi x$

The correct answer is −0.01673423263 . . .

Using $N = 1{,}000{,}000$ function evaluations, the estimators give

| Estimator | $\bar{I}^{(10^6)}$ | $\sigma_I$ |
|---|---|---|
| Simple | -0.016046 | 0.000743 |
| Antithetic | -0.016716 | 0.000015 |
| Stratified | -0.016360 | 0.000324 |

Stratified sampler improves slightly over simple MC.
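The two-stratum estimator can be sketched as below, assuming Python's standard generator and a reduced sample size (so the output will not match the table). The helper `mean_and_var_of_mean` is a hypothetical name; the error estimate combines the two strata as $\frac{1}{4}(\nu[\bar{I}_A] + \nu[\bar{I}_B])$.

```python
import math
import random


def f(x):
    return math.exp(0.1 * x) * math.sin(2.0 * math.pi * x)


def mean_and_var_of_mean(samples):
    """Sample mean and the estimated variance of that mean."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / n
    return mean, var / n


n = 20_000  # assumption: total number of function evaluations
half = n // 2
rng = random.Random(1234)  # arbitrary seed

# Sub-estimators on [0, 1/2) and [1/2, 1), each using N/2 samples.
i_a, var_a = mean_and_var_of_mean([f(0.5 * rng.random()) for _ in range(half)])
i_b, var_b = mean_and_var_of_mean([f(0.5 + 0.5 * rng.random()) for _ in range(half)])

i_2 = 0.5 * (i_a + i_b)
sigma_2 = math.sqrt(0.25 * (var_a + var_b))

# Simple estimator with the same number of evaluations, for comparison.
i_simple, var_simple = mean_and_var_of_mean([f(rng.random()) for _ in range(n)])
sigma_simple = math.sqrt(var_simple)

print("simple     %.6f +/- %.6f" % (i_simple, sigma_simple))
print("stratified %.6f +/- %.6f" % (i_2, sigma_2))
```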

Control variates

In cases where the integrand is "close" to a function $\tilde{f}$ that can be integrated easily, a control variate is often useful.

Re-write the integral (trivially) as

$$I = \int_0^1 dx\, \left[f(x) - \tilde{f}(x)\right] + \int_0^1 dx\, \tilde{f}(x)$$

and now use Monte Carlo to estimate the first part only, while the second part is known by some other method.

Clearly if $f = \tilde{f}$, the resulting estimator has variance zero; this suggests matching $\tilde{f}$ as closely as possible to $f$.

Control variates (2)

Example: evaluate $I = \int_0^1 dx\, e^{0.1x} \sin 2\pi x$

The correct answer is −0.01673423263 . . .

The integral $\int_0^1 dx\, \sin 2\pi x$ is zero by symmetry, so we can use $\sin 2\pi x$ as the control variate. This tells us

$$I = \int_0^1 dx\, (e^{0.1x} - 1) \sin 2\pi x$$

Using $N = 1{,}000{,}000$ function evaluations, all four estimators give

| Estimator | $\bar{I}^{(10^6)}$ | $\sigma_I$ |
|---|---|---|
| Simple | -0.016046 | 0.000743 |
| Antithetic | -0.016716 | 0.000015 |
| Stratified | -0.016360 | 0.000324 |
| Control | -0.016706 | 0.000038 |

Using a control variate reduces the error by a factor of about 20 over the simple estimator.
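A minimal sketch of this control-variate estimator, assuming Python's standard generator and a reduced sample size. Because the integral of $\sin 2\pi x$ over $[0, 1]$ is zero, the known part contributes nothing and only the difference is sampled.

```python
import math
import random


def f(x):
    return math.exp(0.1 * x) * math.sin(2.0 * math.pi * x)


def f_tilde(x):
    """Control variate; its integral over [0, 1] is zero by symmetry."""
    return math.sin(2.0 * math.pi * x)


def mean_and_sigma(samples):
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / n
    return mean, math.sqrt(var / n)


KNOWN_INTEGRAL = 0.0  # integral of f_tilde over [0, 1]

rng = random.Random(1234)  # arbitrary seed
xs = [rng.random() for _ in range(20_000)]  # assumption: reduced sample size

simple = mean_and_sigma([f(x) for x in xs])
diff_mean, control_sigma = mean_and_sigma([f(x) - f_tilde(x) for x in xs])
control_mean = diff_mean + KNOWN_INTEGRAL

print("simple  %.6f +/- %.6f" % simple)
print("control %.6f +/- %.6f" % (control_mean, control_sigma))
```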

Control variates (3)

If $X$ is our original Monte Carlo estimator, and $Y$ is a correlated stochastic variable with a known expected value $E[Y]$, then a new estimator $\tilde{X}$ can be constructed using the control variate method:

$$\tilde{X} = X - \alpha(Y - E[Y])$$

$E[\tilde{X}] = E[X]$ for any $\alpha$. The variance becomes

$$\nu[\tilde{X}] = \nu[X] - 2\alpha\,\nu[X, Y] + \alpha^2\,\nu[Y]$$

where $\nu[X, Y]$ is the covariance of $X$ and $Y$

This expression is minimised when $\alpha$ takes the value

$$\alpha^* = \frac{\nu[X, Y]}{\nu[Y]}$$

For this value of $\alpha$,

$$\nu[\tilde{X}] = \nu[X] - \frac{\nu[X, Y]^2}{\nu[Y]}$$

To optimise, need $\nu[X, Y]$ and $\nu[Y]$. Estimate using Monte Carlo!
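The sketch below estimates $\alpha^*$ from the samples themselves, taking $X = e^{0.1U}\sin 2\pi U$ and $Y = \sin 2\pi U$ with $E[Y] = 0$ as in the earlier example. It is an illustration under assumptions: the same samples are used both to estimate $\alpha$ and to form the final average, which introduces a small bias that this sketch ignores, and the helper names are hypothetical.

```python
import math
import random


def covariance(a, b):
    """Sample covariance of two equal-length lists (variance when a is b)."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    return sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b)) / n


def mean_and_sigma(samples):
    n = len(samples)
    mean = sum(samples) / n
    return mean, math.sqrt(covariance(samples, samples) / n)


rng = random.Random(1234)  # arbitrary seed
us = [rng.random() for _ in range(20_000)]  # assumption: reduced sample size

x_vals = [math.exp(0.1 * u) * math.sin(2.0 * math.pi * u) for u in us]
y_vals = [math.sin(2.0 * math.pi * u) for u in us]
EXPECTED_Y = 0.0  # known exactly by symmetry

alpha = covariance(x_vals, y_vals) / covariance(y_vals, y_vals)
adjusted = [x - alpha * (y - EXPECTED_Y) for x, y in zip(x_vals, y_vals)]

plain_mean, plain_sigma = mean_and_sigma(x_vals)
opt_mean, opt_sigma = mean_and_sigma(adjusted)

print("alpha* estimate: %.4f" % alpha)
print("simple    %.6f +/- %.6f" % (plain_mean, plain_sigma))
print("optimised %.6f +/- %.6f" % (opt_mean, opt_sigma))
```

In this example the estimated $\alpha$ comes out slightly above 1, so the optimised estimator should do somewhat better than simply subtracting $\sin 2\pi x$.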

Importance Sampling

If a random variable $X$ is drawn from distribution $p_X$, and a function $f$ evaluated; $F = f(X)$, then the expected value of $F$ is

$$E[F] = \int f(x)\, p_X(x)\, dx$$

This suggests another means of performing integration;

$$I = \int g(x)\, dx = \int \frac{g(x)}{p_X(x)}\, p_X(x)\, dx$$

Evaluating $g/p_X$ and averaging gives an alternative estimator for $I$

The variance of the estimator is dependent on $p_X$.

Example - importance sampling

Example: evaluate $I(y) = \int_0^y dx\, e^{-x} \sin \pi x$

Compare importance sampling to flat sampling

Use probability density $p_X(x) = \dfrac{e^{-x}}{1 - e^{-y}}$, $x \in [0, y]$ for importance sampling so:

$$I(y) = (1 - e^{-y}) \int_0^y dx\, p_X(x) \sin \pi x$$

Flat sampling would use uniform variate random numbers, $u \in [0, y]$, which have density $p_U(u) = \frac{1}{y}$

$$I(y) = y \int_0^y dx\, p_U(x)\, e^{-x} \sin \pi x$$

Importance sampling; draw from $p_X$ and evaluate $F = \sin \pi X$. The average of $F$, multiplied by the normalisation $(1 - e^{-y})$, has expectation value $I(y)$
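A minimal sketch comparing the two samplers for one value of $y$. Drawing from $p_X$ by inverting its cumulative distribution, and the closed form in `exact_integral`, are additions for this illustration and are not stated on the slides; the sample size and $y = 10$ are arbitrary choices.

```python
import math
import random


def mean_and_sigma(samples):
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / n
    return mean, math.sqrt(var / n)


def exact_integral(y):
    """Closed form of the integral of exp(-x) sin(pi x) over [0, y]."""
    tail = math.exp(-y) * (math.sin(math.pi * y) + math.pi * math.cos(math.pi * y))
    return (math.pi - tail) / (1.0 + math.pi ** 2)


def importance_estimate(y, n, rng):
    norm = 1.0 - math.exp(-y)
    samples = []
    for _ in range(n):
        # Inverse-CDF draw from p_X(x) = exp(-x) / norm on [0, y)
        x = -math.log(1.0 - rng.random() * norm)
        samples.append(norm * math.sin(math.pi * x))
    return mean_and_sigma(samples)


def flat_estimate(y, n, rng):
    samples = []
    for _ in range(n):
        u = y * rng.random()  # uniform on [0, y), density 1/y
        samples.append(y * math.exp(-u) * math.sin(math.pi * u))
    return mean_and_sigma(samples)


rng = random.Random(1234)  # arbitrary seed
y = 10.0
is_mean, is_sigma = importance_estimate(y, 20_000, rng)
flat_mean, flat_sigma = flat_estimate(y, 20_000, rng)

print("importance %.5f +/- %.5f" % (is_mean, is_sigma))
print("flat       %.5f +/- %.5f" % (flat_mean, flat_sigma))
print("exact      %.5f" % exact_integral(y))
```

The advantage of importance sampling should grow with $y$, since flat sampling spends more and more samples where $e^{-x}$ is negligible.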

Example - importance sampling (2)

[Figure: $I(x)$ (vertical axis, 0 to 0.4) against $x$ (logarithmic horizontal axis, 0.1 to 1000), comparing uniform sampling, importance sampling and the exact result.]

Optimising importance sampling

For the estimation of a given integral, $I = \int f(x)\, dx$, what is the optimal importance sampling distribution?

The optimal choice would minimise the variance in $\tilde{I}$, the estimator for $I$. Re-write $I$ as

$$I = \int \frac{f(x)}{p(x)}\, p(x)\, dx$$

The variance in the estimator is then

$$\nu[\tilde{I}] = \int \left(\frac{f(x)}{p(x)}\right)^2 p(x)\, dx - I^2 = \int \frac{f^2(x)}{p(x)}\, dx - I^2$$

The minimum of this functional is found using variational calculus; find $p$ that minimises $\nu$ subject to the functional constraint

$$\int p(x)\, dx = 1$$

Optimising importance sampling (2)

The minimising functional is

$$\kappa[p] = \nu + \lambda\left(\int p(x)\, dx - 1\right)$$

with $\lambda$ an unknown Lagrange multiplier

Evaluate functional derivative using

$$\frac{\delta}{\delta a(x)} \int b(y)\, dy = \int \frac{\delta b}{\delta a}(y)\, \delta(x - y)\, dy = \frac{\delta b}{\delta a}(x)$$

$$\frac{\delta \kappa}{\delta p(x)} = -\frac{f^2(x)}{p^2(x)} + \lambda = 0$$

Which gives the optimal sampling density

$$p^2(x) = \frac{f^2(x)}{\lambda}$$

Optimising importance sampling (3)

If $\lambda > 0$, can write

$$p(x) = \frac{|f(x)|}{\tilde{\lambda}}$$

Now satisfying the normalisation constraint gives $\tilde{\lambda}$; and the optimal sampling density is

$$p(x) = \frac{|f(x)|}{\int |f(y)|\, dy}$$

Usually, it is impossible to draw from this sampling density. Finding an algorithm to do this is about as hard as computing $I$.

A simple rule: sample where $|f|$ is large most frequently.
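As a quick check of this result (a worked step added here, not part of the original slides), substituting the optimal density $p^*(x) = |f(x)| / \int |f(y)|\, dy$ back into the variance $\nu[\tilde{I}] = \int f^2(x)/p(x)\, dx - I^2$ gives:

```latex
\nu[\tilde{I}]\Big|_{p = p^*}
  = \int \frac{f^2(x)}{p^*(x)}\, dx - I^2
  = \int |f(x)|\, dx \int |f(y)|\, dy - I^2
  = \left( \int |f(x)|\, dx \right)^2 - I^2 .
```

If $f$ does not change sign, $\int |f| = |I|$ and the variance vanishes. This also shows why the optimal density is impractical: its normalisation is then the very integral being sought.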

Mostly used when solving problems of the form

$$\langle f \rangle = \frac{\int_V f(x)\, q(x)\, dx}{\int_V q(x)\, dx}$$

where $q(x) \ge 0,\ \forall\, x \in V$

Markov Chain Monte Carlo algorithms

The Metropolis-Hastings algorithm

An algorithm to construct a Markov chain for importance sampling Monte Carlo, given a desired fixed-point probability, $\pi(\chi)$

Useful property: normalisation of $\pi$ need not be known.

Algorithm has two key steps: a proposal and an accept/reject test.

Metropolis-Hastings algorithm

1. Store current state of the system, $\psi = \psi_k$
2. Reversibly generate a proposed new state, $\psi'$ from $\psi_k$
3. Compute $r = \min\left[1, \dfrac{\pi(\psi')}{\pi(\psi)}\right]$
4. Set

$$\psi_{k+1} = \begin{cases} \psi' & \text{with probability } r \\ \psi & \text{with probability } 1 - r \end{cases}$$
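The four steps above can be sketched for a one-dimensional state as follows. This is an illustration under assumptions: the proposal is a symmetric uniform random-walk step, the target is an unnormalised Gaussian chosen only because its moments are known, and the code works with $\log \pi$ rather than $\pi$ to avoid underflow. The function name `metropolis` and its parameters are hypothetical.

```python
import math
import random


def metropolis(log_pi, x0, n_steps, step_size, rng):
    """Random-walk Metropolis chain for the unnormalised density exp(log_pi)."""
    chain = []
    x = x0                    # step 1: current state
    log_p = log_pi(x)
    for _ in range(n_steps):
        # step 2: symmetric proposal, uniform in [x - step_size, x + step_size]
        x_new = x + step_size * (2.0 * rng.random() - 1.0)
        log_p_new = log_pi(x_new)
        # steps 3 and 4: accept with probability r = min(1, pi(x_new) / pi(x))
        if log_p_new >= log_p or rng.random() < math.exp(log_p_new - log_p):
            x, log_p = x_new, log_p_new
        chain.append(x)       # a rejected proposal repeats the current state
    return chain


rng = random.Random(1234)  # arbitrary seed
# Target proportional to exp(-x^2 / 2); the normalisation is never needed.
chain = metropolis(lambda x: -0.5 * x * x, 0.0, 50_000, 2.0, rng)

chain_mean = sum(chain) / len(chain)
chain_second_moment = sum(x * x for x in chain) / len(chain)
print(chain_mean, chain_second_moment)  # expect values near 0 and 1
```

Successive states of the chain are correlated, so a naive standard error computed as if the samples were iid would underestimate the true uncertainty.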

The Metropolis-Hastings algorithm (2)

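Provided the proposal step is constructed appropriately, the chain is irreducible, so the ergodic theorem gives a means of computing integrals by importance sampling Monte Carlo.

The algorithm obeys detailed balance for $\pi$.

Detailed balance:

Transition probability for both steps is

$$P(\chi' \leftarrow \chi) = P_p(\chi' \leftarrow \chi)\, \min\left[1, \frac{\pi(\chi')}{\pi(\chi)}\right]$$

So

$$\frac{P(\chi' \leftarrow \chi)}{P(\chi \leftarrow \chi')} = \frac{P_p(\chi' \leftarrow \chi)\, \min\left[1, \frac{\pi(\chi')}{\pi(\chi)}\right]}{P_p(\chi \leftarrow \chi')\, \min\left[1, \frac{\pi(\chi)}{\pi(\chi')}\right]} = \frac{\min\left[1, \frac{\pi(\chi')}{\pi(\chi)}\right]}{\min\left[1, \frac{\pi(\chi)}{\pi(\chi')}\right]}$$

when the proposal step is reversible, $P_p(\chi' \leftarrow \chi) = P_p(\chi \leftarrow \chi')$.

If $\pi(\chi') \ge \pi(\chi)$ then

$$\frac{P(\chi' \leftarrow \chi)}{P(\chi \leftarrow \chi')} = \frac{1}{\pi(\chi)/\pi(\chi')} = \frac{\pi(\chi')}{\pi(\chi)}$$

otherwise

$$\frac{P(\chi' \leftarrow \chi)}{P(\chi \leftarrow \chi')} = \frac{\pi(\chi')/\pi(\chi)}{1} = \frac{\pi(\chi')}{\pi(\chi)}$$

so in both cases, $P(\chi' \leftarrow \chi)\, \pi(\chi) = P(\chi \leftarrow \chi')\, \pi(\chi')$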
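Detailed balance can also be checked numerically on a small discrete state space. In the sketch below the weights are illustrative values, and the proposal (pick one of the other states uniformly) is one simple choice of a symmetric proposal; neither comes from the lecture.

```python
# Unnormalised target weights pi on four states (illustrative values).
weights = [1.0, 3.0, 2.0, 0.5]
n_states = len(weights)


def transition(j, i):
    """P(j <- i) for j != i: symmetric proposal followed by the accept test."""
    proposal = 1.0 / (n_states - 1)  # same probability in both directions
    return proposal * min(1.0, weights[j] / weights[i])


# Largest violation of P(j <- i) pi(i) = P(i <- j) pi(j) over all pairs.
max_violation = max(
    abs(transition(j, i) * weights[i] - transition(i, j) * weights[j])
    for i in range(n_states)
    for j in range(n_states)
    if i != j
)
print(max_violation)  # zero up to floating-point round-off
```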
