BU7527 - Mathematics of Contingent Claims Mike Peardon Michaelmas Term, 2015


BU7527 - Mathematics of Contingent Claims

Mike Peardon

School of Mathematics

Trinity College Dublin

Michaelmas Term, 2015

Mike Peardon (TCD)

BU7527

Michaelmas Term, 2015

1 / 33

Poisson processes


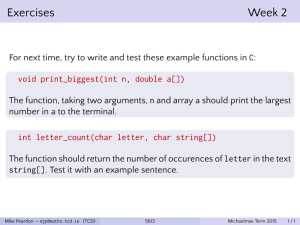

What is a Poisson process?

The Poisson process describes the behaviour of simple stochastic systems where events happen in a memoryless way, i.e.

The probability of an event occurring in a particular time interval is independent of the occurrence of events in the past or the future.

The probability of one event occurring in a small time interval $dt$ is defined to be $\lambda\,dt + O(dt^2)$, where $\lambda$ is a constant and $\lambda\,dt \ll 1$.

The probability of more than one event occurring in the interval $dt$ vanishes like $O(dt^n)$, $n \ge 2$, as $dt \to 0$.

The last two conditions imply that the probability there are no events in the small interval is $(1 - \lambda\,dt) + O(dt^2)$.


Probability of no events in a finite interval

Now let us compute $Q_0(t)$, the probability that no events occur in a finite interval of length $t$. Since the Poisson process is memoryless, this can be computed easily as a product of independent probabilities, so as $dt \to 0$,

$$Q_0(t + dt) = Q_0(t) \times (1 - \lambda\,dt)$$

and this leads to a simple differential equation for $Q_0$,

$$\frac{dQ_0}{dt} = -\lambda Q_0 \quad\Rightarrow\quad Q_0(t) = C e^{-\lambda t}$$

The constant $C$ is determined by noting that the probability there are no arrivals in a vanishingly small interval is 1, so $C = 1$ and

$$Q_0(t) = e^{-\lambda t}$$
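The limit behind this result can be checked numerically. The sketch below multiplies together the independent "no event" factors $(1 - \lambda\,dt)$ for a finite number of sub-intervals and compares the product with $e^{-\lambda t}$. The values of `lam` and `t` and the function name are illustrative assumptions, not taken from the lecture.

```python
import math

lam, t = 2.0, 1.5   # illustrative rate and interval length (assumed values)

def no_event_probability(n_steps):
    """Product of n_steps independent 'no event' factors (1 - lam*dt)."""
    dt = t / n_steps
    return (1.0 - lam * dt) ** n_steps

exact = math.exp(-lam * t)
for n_steps in (10, 100, 10_000, 1_000_000):
    approx = no_event_probability(n_steps)
    # The difference shrinks as dt = t / n_steps gets smaller.
    print(n_steps, approx, abs(approx - exact))
```

The difference from the exponential falls roughly in proportion to $dt$, which is what the neglected $O(dt^2)$ terms would suggest.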


Probability of one event in a finite interval

[Figure: the two routes to a single event in $[0, t + dt]$: $Q_0(t) \times \lambda\,dt$ and $Q_1(t) \times (1 - \lambda\,dt)$.]

There are two mutually exclusive ways for a single event to have occurred in the interval $[0, t + dt]$:

One event occurs in $[0, t]$ and no event occurs in $[t, t + dt]$.

No event occurs in $[0, t]$ and one event occurs in $[t, t + dt]$.

$$Q_1(t + dt) = Q_0(t) \times \lambda\,dt + Q_1(t) \times (1 - \lambda\,dt)$$

and this leads to another differential equation for $Q_1$,

$$\frac{dQ_1}{dt} + \lambda Q_1 = \lambda Q_0$$

which, given $Q_0$ and the condition $Q_1(0) = 0$, gives

$$Q_1(t) = \lambda t\, e^{-\lambda t}$$
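One way to reach this solution, offered as a sketch rather than as the route taken in the lecture, is to multiply the equation by the integrating factor $e^{\lambda t}$ and use $Q_0(t) = e^{-\lambda t}$:

$$
\begin{aligned}
\frac{d}{dt}\left(e^{\lambda t} Q_1\right) &= e^{\lambda t}\left(\frac{dQ_1}{dt} + \lambda Q_1\right) = \lambda e^{\lambda t} Q_0 = \lambda \\
\Rightarrow\quad e^{\lambda t} Q_1(t) &= \lambda t + C, \qquad Q_1(0) = 0 \;\Rightarrow\; C = 0 \\
\Rightarrow\quad Q_1(t) &= \lambda t\, e^{-\lambda t}
\end{aligned}
$$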


Probability of k events in a finite interval

The same reasoning gives a differential equation for $Q_k$ in terms of $Q_k$ and $Q_{k-1}$,

$$\frac{dQ_k}{dt} = \lambda (Q_{k-1} - Q_k)$$

and so $Q_k$ can be deduced for $k = 2, 3, 4, \dots$. A pattern quickly emerges, and it can be shown by induction that

$$Q_k(t) = \frac{(\lambda t)^k e^{-\lambda t}}{k!}$$

It follows that these probabilities are properly normalised, i.e. $\sum_{k=0}^{\infty} Q_k(t) = 1$, and also that

$$E(k) = \sum_{k=0}^{\infty} k\, Q_k(t) = \lambda t$$

i.e. the expected number of events in an interval of length $t$ is just $\lambda t$.
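The normalisation and the mean can be checked numerically by truncating the infinite sums. This is a minimal sketch; the function name `poisson_pmf`, the cutoff `K_MAX` and the values of `lam` and `t` are assumptions chosen for illustration.

```python
import math

def poisson_pmf(k, lam, t):
    """Q_k(t) = (lam*t)^k * exp(-lam*t) / k!"""
    return (lam * t) ** k * math.exp(-lam * t) / math.factorial(k)

lam, t = 2.0, 1.5   # illustrative values (assumed)
K_MAX = 100         # truncation point for the infinite sums (assumed)

total = sum(poisson_pmf(k, lam, t) for k in range(K_MAX))
mean = sum(k * poisson_pmf(k, lam, t) for k in range(K_MAX))

print("sum of Q_k :", total)              # should be very close to 1
print("E(k)       :", mean, "vs lam*t =", lam * t)
```

The terms beyond `K_MAX` are negligible here because $(\lambda t)^k / k!$ decays very quickly once $k \gg \lambda t$.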


Intervals between events (1)

[Figure: timeline of events 0, 1, 2, 3, 4, with $T_1, T_2, T_3, T_4$ the intervals between adjacent events.]

If we measure the times between adjacent events, the resulting sequence consists of a set of stochastic variables,

$$\{ T_1, T_2, T_3, T_4, \dots \}$$

Since the Poisson process is memoryless, they will all be drawn from the same underlying probability distribution. What is this distribution?

After event 0 occurs, consider the probability that event 1 does not occur in a subsequent time $t$. This means $T_1 > t$, and so

$$P(T_1 > t) = Q_0(t) = e^{-\lambda t}$$


Intervals between events (2)

As a result, $P(T_1 \le t) = 1 - e^{-\lambda t}$.

This is the cumulative probability distribution for $T_1$ (and consequently all $T$), and so the probability density of $T$ is given by

$$f_T(t) = \frac{d}{dt}\left(1 - e^{-\lambda t}\right) = \lambda e^{-\lambda t}$$

The inter-event times of a Poisson process are exponentially distributed.

The stochastic behaviour of the Poisson process forms a useful starting point in the understanding of Markov processes.
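The exponential distribution of the gaps can be seen in a small simulation built directly from the definition: in each short interval `dt` an event occurs with probability `lam * dt`, independently of everything else. This is a sketch under assumed values (`lam`, `dt`, the number of events and the random seed are illustrative), and the finite `dt` introduces a small discretisation bias.

```python
import math
import random

def simulate_inter_event_times(lam, dt, n_events, seed=0):
    """Step through small intervals dt; in each one an event occurs with
    probability lam*dt. Return the gaps between successive events."""
    rng = random.Random(seed)
    gaps, elapsed = [], 0.0
    while len(gaps) < n_events:
        elapsed += dt
        if rng.random() < lam * dt:
            gaps.append(elapsed)
            elapsed = 0.0
    return gaps

lam = 2.0                                   # illustrative rate (assumed)
gaps = simulate_inter_event_times(lam, dt=0.01, n_events=20_000)

mean_gap = sum(gaps) / len(gaps)
frac_longer = sum(g > 1.0 for g in gaps) / len(gaps)   # estimate of P(T > 1)

print("mean gap :", mean_gap, "vs 1/lam =", 1 / lam)
print("P(T > 1) :", frac_longer, "vs exp(-lam) =", math.exp(-lam))
```

The sample mean should sit near $1/\lambda$, the mean of the density $\lambda e^{-\lambda t}$, and the fraction of gaps longer than 1 should sit near $e^{-\lambda}$, up to statistical noise.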


The M/M/1 queue

A prototype Markov process


The M/M/1 queue

[Figure: clients waiting in a queue for a single server.]

Kendall notation: A/B/n.

A = arrival statistics (M = Markov): Poisson arrival statistics, rate $\lambda$.

B = service statistics: exponentially distributed service times, rate $\mu$.

n = number of servers.

Clients arrive at the queue, wait in turn for service, then leave the system.

Arrival events are a Poisson process, so the probability a new client arrives in a small time interval $[t, t + dt]$ is $\lambda\,dt$.

Service times (time spent at the head of the queue), $T$, are exponentially distributed, so $P(T) = \mu e^{-\mu T}$.

The state of the system can be completely described by the number of clients in the queue. Transitions are memoryless.


Steady-state probabilities (1)

After some time (and assuming $\lambda < \mu$), the system relaxes into a probabilistic steady state.

How can $p_k$, the probability there are $k$ clients in the queue in steady state, be computed?

Consider the probabilities the system makes a transition in a small interval $dt$. Transitions are memoryless.

[Figure: routes into state $n$ between $t$ and $t + dt$: a service from state $n+1$, an arrival from state $n-1$, or no change from state $n$.]

For $n > 0$:

$$p_n(t + dt) = p_{n+1}(t) \times \mu\,dt + p_{n-1}(t) \times \lambda\,dt + p_n(t) \times (1 - (\lambda + \mu)\,dt)$$

For $n = 0$:

$$p_0(t + dt) = p_1(t) \times \mu\,dt + p_0(t) \times (1 - \lambda\,dt)$$

Steady state: $p_k(t + dt) = p_k(t) \equiv p_k$

Steady-state probabilities (2)

The steady-state equations can be visualised as a “flow-balance” diagram.

[Figure: flow-balance diagram. States $0, 1, \dots, k, k+1, \dots$ are linked by arrivals (rate $\lambda$, one step up) and services (rate $\mu$, one step down), with a surface S drawn across the chain.]

In steady state, the net “flow of probability” into surface S must be zero, so

$$\lambda p_k = \mu p_{k+1} \qquad \forall\, k \ge 0$$

Steady-state probabilities (3)

Solving this recursion gives

$$p_k = \rho^k p_0, \qquad \rho = \lambda / \mu$$

Now normalising the probabilities such that

$$\sum_{k=0}^{\infty} p_k = 1$$

gives

$$p_k = \rho^k (1 - \rho)$$

A steady-state solution only exists if $\rho < 1$.

The utilisation of the server (probability it is in use) is

$$P(k \ne 0) = 1 - p_0 = \rho$$
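The geometric solution is easy to tabulate and check. The sketch below builds the first few hundred $p_k$, then confirms the flow-balance relation, the normalisation (up to a negligible truncated tail) and the utilisation. The function name `mm1_steady_state` and the rates used are illustrative assumptions.

```python
def mm1_steady_state(lam, mu, k_max):
    """Return [p_0, ..., p_k_max] with p_k = rho^k (1 - rho), rho = lam/mu."""
    rho = lam / mu
    if rho >= 1:
        raise ValueError("no steady state: need lam < mu")
    return [rho ** k * (1 - rho) for k in range(k_max + 1)]

lam, mu = 3.0, 4.0                       # illustrative rates, rho = 0.75
p = mm1_steady_state(lam, mu, k_max=200)

# Flow balance across every cut of the chain: lam * p_k = mu * p_{k+1}
balanced = all(abs(lam * p[k] - mu * p[k + 1]) < 1e-12 for k in range(200))
utilisation = 1 - p[0]                   # probability the server is busy

print("sum of p_k  :", sum(p))           # close to 1 (tail beyond k_max dropped)
print("utilisation :", utilisation, "vs rho =", lam / mu)
print("flow balance:", balanced)
```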


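The M/M/1/m queue

Kendall’s notation: The queue can hold at most $m$ clients waiting for service. The system has a finite set of states.

The flow-balance picture is unchanged, so

$$\lambda p_k = \mu p_{k+1}, \qquad 0 \le k < m$$

The normalisation is different, however:

$$\sum_{k=0}^{m} p_k = 1 \quad\Rightarrow\quad p_k = \rho^k\, \frac{1 - \rho}{1 - \rho^{m+1}}$$

Note: no constraint on $\lambda$ and $\mu$. Because the set of states is finite, a steady state exists even when $\rho \ge 1$ (at $\rho = 1$ the formula is read as its limit, $p_k = 1/(m+1)$).

Probability queue is full = probability a packet will be turned away = $p_m$.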
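For the finite queue the probabilities can be built straight from the recursion $p_{k+1} = \rho\, p_k$ and normalised by a finite sum, which avoids any special case at $\rho = 1$. This is a minimal sketch; `mm1m_steady_state` and the parameter values are illustrative assumptions.

```python
def mm1m_steady_state(lam, mu, m):
    """Return [p_0, ..., p_m] for a queue limited to m clients.
    Uses p_k proportional to rho^k, then normalises over k = 0..m,
    so it also works when rho >= 1."""
    rho = lam / mu
    weights = [rho ** k for k in range(m + 1)]
    norm = sum(weights)
    return [w / norm for w in weights]

lam, mu, m = 5.0, 4.0, 10                # illustrative: rho = 1.25 > 1
p = mm1m_steady_state(lam, mu, m)
p_full = p[-1]                           # probability an arrival is turned away

rho = lam / mu
closed_form = [rho ** k * (1 - rho) / (1 - rho ** (m + 1)) for k in range(m + 1)]

print("sum of p_k :", sum(p))
print("p_m        :", p_full, "vs closed form", closed_form[-1])
```

With arrivals faster than service, the full state is the most likely one, so a sizeable fraction of arrivals is turned away.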


Introduction to Markov processes


Markov chains

In 1906, Markov was interested in demonstrating that independence was not necessary to prove the (weak) law of large numbers.

He analysed the alternating patterns of vowels and consonants in Pushkin’s novel “Eugene Onegin”.

In a Markov process, a system makes stochastic transitions such that the probability of a transition occurring depends only on the start and end states. The system retains no memory of how it came to be in the current state. The resulting sequence of states of the system is called a Markov chain.


Markov Processes (1)

Markov processes describe stochastic transitions that are “memoryless”, i.e. the probability the system will be in a particular state at some small time in the future depends only on the current state of the system, not on the details of how it got to be in its current state.

The M/M/1 queue is a good example.

The steady-state properties of the M/M/1 queue could be computed by looking at all possible transitions the system could make in a small time interval.

The sequence of states generated by repeated applications of a Markov process is called a Markov chain.


Markov chains

A Markov chain

A system can be in any one of $n$ distinct states, denoted $\{\chi_1, \chi_2, \dots, \chi_n\}$. If $\psi(0), \psi(1), \psi(2), \dots$ is a sequence of these states observed at times $t = 0, 1, 2, \dots$ and generated by the system making random jumps between states, such that the conditional probabilities satisfy

$$P(\psi(t) = \chi_i \mid \psi(t-1) = \chi_j, \psi(t-2) = \chi_k, \dots, \psi(0) = \chi_z) = P(\psi(t) = \chi_i \mid \psi(t-1) = \chi_j)$$

then the sequence $\{\psi\}$ is called a Markov chain.

If $P(\psi(t) = \chi_i \mid \psi(t-1) = \chi_j)$ does not depend on $t$, then the sequence is called a homogeneous Markov chain. We will consider only homogeneous Markov chains.


Markov matrix

The conditional probabilities describe the probability of the system jumping from state $\chi_j$ to state $\chi_i$. There are $n \times n$ possible jumps and these probabilities can be packed into a matrix, with elements $M_{ij}$ being the probability of jumping from $j$ to $i$.

The Markov matrix

A matrix containing the $n \times n$ probabilities of the system making a random jump from $j \to i$ is called a Markov matrix

$$M_{ij} = P(\psi(t+1) = \chi_i \mid \psi(t) = \chi_j)$$

Since the entries are probabilities, and the system is always in a well-defined state, a couple of properties follow:

$$0 \le M_{ij} \le 1$$

$$\sum_{i=1}^{n} M_{ij} = 1$$


Markov Processes (4)

Dublin’s weather

An example: On a rainy day in Dublin, the probability tomorrow is rainy is 80%. Similarly, on a sunny day the probability tomorrow is sunny is 40%.

This suggests Dublin’s weather can be described by a (homogeneous) Markov process. Can we compute the probability any given day is sunny or rainy?

For this system, the Markov matrix is (columns: today's weather, rows: tomorrow's weather)

| Tomorrow \ Today | Sunny | Rainy |
|------------------|-------|-------|
| Sunny            | 0.4   | 0.2   |
| Rainy            | 0.6   | 0.8   |


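Dublin’s weather (2)

If today is sunny we can write the state as $\psi(0) = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$, and the state tomorrow is then $\psi(1) = \begin{pmatrix} 0.4 \\ 0.6 \end{pmatrix}$, and $\psi(2) = \begin{pmatrix} 0.28 \\ 0.72 \end{pmatrix}$, $\psi(3) = \begin{pmatrix} 0.256 \\ 0.744 \end{pmatrix}$, ...

If today is rainy we can write the state as $\psi(0) = \begin{pmatrix} 0 \\ 1 \end{pmatrix}$, and the state tomorrow is then $\psi(1) = \begin{pmatrix} 0.2 \\ 0.8 \end{pmatrix}$, and $\psi(2) = \begin{pmatrix} 0.24 \\ 0.76 \end{pmatrix}$, $\psi(3) = \begin{pmatrix} 0.248 \\ 0.752 \end{pmatrix}$, ...

The vector $\psi$ quickly converges to a fixed point, which must be $\pi$, the eigenvector of $M$ with eigenvalue 1, normalised such that $\sum_{i=1}^{2} \pi_i = 1$.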
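The sequences above can be reproduced by applying the matrix repeatedly to the starting vector. The following is a minimal sketch in plain Python; the helper names `step` and `evolve` are assumptions made for illustration.

```python
# Column 0: today sunny, column 1: today rainy.
M = [[0.4, 0.2],   # row 0: probability tomorrow is sunny
     [0.6, 0.8]]   # row 1: probability tomorrow is rainy

def step(M, psi):
    """One day of evolution: psi_i(t+1) = sum_j M_ij psi_j(t)."""
    return [sum(M[i][j] * psi[j] for j in range(len(psi)))
            for i in range(len(M))]

def evolve(M, psi, n_days):
    """Apply the Markov matrix n_days times."""
    for _ in range(n_days):
        psi = step(M, psi)
    return psi

for label, start in (("sunny", [1.0, 0.0]), ("rainy", [0.0, 1.0])):
    after_3 = [round(x, 3) for x in evolve(M, start, 3)]
    after_20 = [round(x, 6) for x in evolve(M, start, 20)]
    print(label, "-> day 3:", after_3, " day 20:", after_20)
```

Both starting points end up at the same vector after a few weeks, which illustrates that the fixed point does not depend on today's weather.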


Dublin’s weather (3)

Finding the probability of sun or rain a long time in the future is equivalent to solving

$$\begin{pmatrix} 0.4 & 0.2 \\ 0.6 & 0.8 \end{pmatrix} \begin{pmatrix} \pi_1 \\ \pi_2 \end{pmatrix} = \begin{pmatrix} \pi_1 \\ \pi_2 \end{pmatrix}$$

with the normalising condition for the probabilities:

$$\pi_1 + \pi_2 = 1$$

We find $\pi = \begin{pmatrix} 0.25 \\ 0.75 \end{pmatrix}$. This is the invariant probability distribution of the process; with no prior information these are the probabilities any given day is sunny (25%) or rainy (75%).
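The intermediate algebra is short. Writing out the first row of the eigenvector equation and then using the normalisation gives

$$
\begin{aligned}
0.4\,\pi_1 + 0.2\,\pi_2 = \pi_1 \quad&\Rightarrow\quad 0.2\,\pi_2 = 0.6\,\pi_1 \quad\Rightarrow\quad \pi_2 = 3\pi_1 \\
\pi_1 + \pi_2 = 1 \quad&\Rightarrow\quad 4\pi_1 = 1 \quad\Rightarrow\quad \pi_1 = \tfrac{1}{4}, \;\; \pi_2 = \tfrac{3}{4}
\end{aligned}
$$

The second row, $0.6\,\pi_1 + 0.8\,\pi_2 = \pi_2$, reduces to the same condition $\pi_2 = 3\pi_1$, so it adds no new information.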


Population migrations

In a year, the fraction of the population of three provinces A, B and C who move between provinces is given by (columns: province moved from, rows: province moved to)

| To \ From | A  | B  | C  |
|-----------|----|----|----|
| A         |    | 3% | 7% |
| B         | 1% |    | 7% |
| C         | 1% | 2% |    |

Show the stable populations of the three provinces are in the proportions 8 : 3 : 1.
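A numerical check of the claimed proportions is sketched below. It rests on an assumed reading of the table: each column is the province people leave, each row is the province they move to, the off-diagonal entries are A→B 1%, A→C 1%, B→A 3%, B→C 2%, C→A 7%, C→B 7%, and everyone else stays put. Under that reading the proportions 8 : 3 : 1 are unchanged after one year.

```python
# moves[i][j] = fraction of province j's population that moves to province i
# in one year (ASSUMED reading of the table; order of provinces is A, B, C).
moves = [[0.00, 0.03, 0.07],   # to A
         [0.01, 0.00, 0.07],   # to B
         [0.01, 0.02, 0.00]]   # to C

n = 3
# Build the Markov matrix: the diagonal holds the fraction who stay put,
# so that every column sums to 1.
M = [[moves[i][j] for j in range(n)] for i in range(n)]
for j in range(n):
    M[j][j] = 1.0 - sum(moves[i][j] for i in range(n))

populations = [8.0, 3.0, 1.0]             # claimed stable proportions
after_one_year = [sum(M[i][j] * populations[j] for j in range(n))
                  for i in range(n)]

print(after_one_year)                     # compare with [8, 3, 1]
```

This only verifies the stated answer; deriving it means solving $M\pi = \pi$ as in the weather example.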


Winning a Tennis game at Deuce

Two tennis players, Alice and Bob, have reached Deuce. The probability Alice wins a point is $p$ while the probability Bob wins is $q = 1 - p$. Write a Markov matrix describing the transitions this system can make.

Answer:

$$M = \begin{pmatrix} 1 & p & 0 & 0 & 0 \\ 0 & 0 & p & 0 & 0 \\ 0 & q & 0 & p & 0 \\ 0 & 0 & q & 0 & 0 \\ 0 & 0 & 0 & q & 1 \end{pmatrix}$$

with states given by $\chi = \{\text{A wins}, \text{Adv A}, \text{Deuce}, \text{Adv B}, \text{B wins}\}$


Winning a Tennis game at Deuce (2)

Remember: entry $M_{ij} = P(\psi(t+1) = \chi_i \mid \psi(t) = \chi_j)$

Some transitions are forbidden (like Adv A → Adv B).

Some states are “absorbing”: once in that state, the system never moves away.

With a bit of work, it is possible to see the long-time average after starting in state $\chi_3 \equiv$ Deuce is

$$\pi_3 = \begin{pmatrix} \dfrac{p^2}{1 - 2pq} \\ 0 \\ 0 \\ 0 \\ \dfrac{q^2}{1 - 2pq} \end{pmatrix}$$

1. The tennis game ends with probability 1.

2. Alice wins with probability $\dfrac{p^2}{1 - 2pq}$.
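The long-time result can be checked by playing many points with the Markov matrix, starting from Deuce. This is a sketch with an assumed value of `p`; the helper names `tennis_matrix` and `evolve` are illustrative.

```python
def tennis_matrix(p):
    """Markov matrix for a game at Deuce.
    States: 0 = A wins, 1 = Adv A, 2 = Deuce, 3 = Adv B, 4 = B wins.
    Column j holds the probabilities of moving out of state j."""
    q = 1.0 - p
    return [[1, p, 0, 0, 0],
            [0, 0, p, 0, 0],
            [0, q, 0, p, 0],
            [0, 0, q, 0, 0],
            [0, 0, 0, q, 1]]

def evolve(M, psi, n_points):
    """Apply the Markov matrix once per point played."""
    for _ in range(n_points):
        psi = [sum(M[i][j] * psi[j] for j in range(5)) for i in range(5)]
    return psi

p = 0.6                                   # illustrative value (assumed)
q = 1.0 - p
deuce = [0, 0, 1, 0, 0]
psi = evolve(tennis_matrix(p), deuce, 200)

print("P(A has won)     :", psi[0], "vs p^2/(1-2pq) =", p**2 / (1 - 2*p*q))
print("P(B has won)     :", psi[4], "vs q^2/(1-2pq) =", q**2 / (1 - 2*p*q))
print("P(still playing) :", psi[1] + psi[2] + psi[3])
```

The probability of still being in one of the three non-absorbing states shrinks by a factor $2pq$ every two points, which is why the game ends with probability 1.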

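The Markov Matrix (1)

A Markov matrix has two elementary properties:

1. Since all elements are probabilities,

$$0 \le M_{ij} \le 1$$

2. Since the system always ends in $\Omega$ (the set of all states),

$$\sum_{i=1}^{N} M_{ij} = 1$$

From these properties alone, the eigenvalues of $M$ must be in the unit disk, $|\lambda| \le 1$, since if $v$ is an eigenvector,

$$\sum_j M_{ij} v_j = \lambda v_i \;\implies\; \Big| \sum_j M_{ij} v_j \Big| = |\lambda| |v_i| \;\implies\; \sum_j M_{ij} |v_j| \ge |\lambda| |v_i|$$

where the last step uses the triangle inequality and $M_{ij} \ge 0$. Summing over $i$,

$$\sum_j |v_j| \Big( \sum_i M_{ij} \Big) \ge |\lambda| \sum_i |v_i| \;\implies\; 1 \ge |\lambda|$$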

The Markov Matrix (2)

Also, a Markov matrix must have at least one eigenvalue equal to unity. Considering the vector $v_i = 1, \forall i$, we see

$$\sum_i v_i M_{ij} = \sum_i M_{ij} = 1, \qquad \forall j$$

and thus $v$ is a left-eigenvector, with eigenvalue 1.

Similarly, for the right-eigenvectors,

$$\sum_j M_{ij} v_j = \lambda v_i \;\implies\; \sum_j v_j \sum_i M_{ij} = \lambda \sum_i v_i \;\implies\; \sum_j v_j = \lambda \sum_i v_i$$

and so either $\lambda = 1$ or, if $\lambda \ne 1$, then $\sum_i v_i = 0$.
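As a concrete instance, these statements can be checked on the $2 \times 2$ Dublin weather matrix from the earlier example. Its characteristic polynomial factorises as

$$
\det\begin{pmatrix} 0.4 - \lambda & 0.2 \\ 0.6 & 0.8 - \lambda \end{pmatrix} = \lambda^2 - 1.2\,\lambda + 0.2 = (\lambda - 1)(\lambda - 0.2)
$$

so both eigenvalues lie in the unit disk and one of them equals 1. The corresponding right-eigenvectors are

$$
\begin{pmatrix} 0.4 & 0.2 \\ 0.6 & 0.8 \end{pmatrix} \begin{pmatrix} 1/4 \\ 3/4 \end{pmatrix} = \begin{pmatrix} 1/4 \\ 3/4 \end{pmatrix}, \qquad
\begin{pmatrix} 0.4 & 0.2 \\ 0.6 & 0.8 \end{pmatrix} \begin{pmatrix} 1 \\ -1 \end{pmatrix} = 0.2 \begin{pmatrix} 1 \\ -1 \end{pmatrix}
$$

and the components of the $\lambda = 0.2$ eigenvector sum to zero, as required.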


Random walks


Random walks

An example of a Markov process with simple rules.

The state is defined by a random integer $X_t$ for each time-step $t$.

Allowed transitions are hops to neighbouring values of $X$, so

$$X_{t+1} = \begin{cases} X_t + 1 & \text{with probability } p \\ X_t - 1 & \text{with probability } q = 1 - p \end{cases}$$

The time-history can be analysed as a binomial experiment: if $X_0 = a$, then for $t + k$ even, and defining $l = \frac{t + k}{2}$,

$$P(X_t = a + k) = {}^{t}C_{l}\; p^l q^{t-l}$$

and

$$E[X_t] = a + t(2p - 1)$$
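The binomial formula can be turned into a short table of probabilities, from which the normalisation and the mean follow directly. This is a sketch with assumed values of `a`, `t` and `p`; `walk_distribution` is an illustrative name.

```python
from math import comb

def walk_distribution(a, t, p):
    """Return {position: probability} for X_t given X_0 = a."""
    q = 1.0 - p
    dist = {}
    for l in range(t + 1):        # l = number of +1 hops out of t
        k = 2 * l - t             # net displacement, so l = (t + k) / 2
        dist[a + k] = comb(t, l) * p ** l * q ** (t - l)
    return dist

a, t, p = 5, 10, 0.6              # illustrative values (assumed)
dist = walk_distribution(a, t, p)

total = sum(dist.values())
mean = sum(x * prob for x, prob in dist.items())

print("total probability:", total)
print("E[X_t]           :", mean, "vs a + t(2p - 1) =", a + t * (2 * p - 1))
```

Only positions with $t + k$ even appear as keys, since each hop changes the parity of $X$.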


Gambler’s ruin

Consider a random walk, starting with $X_0 = a$, but assume the gambler will stop once they are “rich” (i.e. when $X_t = c$). The gambler will be forced to stop if $X_t = 0$.

Define the hitting times

$$\tau_0 = \min(t \ge 0 : X_t = 0)$$

$$\tau_c = \min(t \ge 0 : X_t = c)$$

Now we would like to compute $P(\tau_c < \tau_0)$, the probability of getting rich before going broke. We find:

Gambler’s ruin

Define $\rho = \dfrac{1 - p}{p}$, then

$$P(\tau_c < \tau_0) = \begin{cases} \dfrac{a}{c} & p = \tfrac{1}{2} \\[2ex] \dfrac{1 - \rho^a}{1 - \rho^c} & \text{otherwise} \end{cases}$$
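One way to gain confidence in this result, which is not the derivation used in these slides, is a first-step argument: writing $h(a)$ for the probability of reaching $c$ before 0 from $a$, the next hop gives $h(a) = p\,h(a+1) + q\,h(a-1)$ with $h(0) = 0$ and $h(c) = 1$. The sketch below checks that the stated formula satisfies this recursion; the function names and parameter values are illustrative assumptions.

```python
def p_rich(a, c, p):
    """Probability of reaching c before 0, starting from X_0 = a."""
    if p == 0.5:
        return a / c
    rho = (1.0 - p) / p
    return (1.0 - rho ** a) / (1.0 - rho ** c)

def satisfies_recursion(c, p, tol=1e-12):
    """Check h(a) = p*h(a+1) + q*h(a-1) for 0 < a < c, h(0) = 0, h(c) = 1."""
    q = 1.0 - p
    h = [p_rich(a, c, p) for a in range(c + 1)]
    interior_ok = all(abs(h[a] - (p * h[a + 1] + q * h[a - 1])) < tol
                      for a in range(1, c))
    return interior_ok and h[0] == 0.0 and h[c] == 1.0

print("fair game, a=5, c=10   :", p_rich(5, 10, 0.5))
print("p = 0.45, a=5, c=10    :", p_rich(5, 10, 0.45))
print("recursion holds (0.5)  :", satisfies_recursion(10, 0.5))
print("recursion holds (0.45) :", satisfies_recursion(10, 0.45))
```

Even a small disadvantage per bet cuts the chance of doubling the stake well below one half.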

Martingales

A martingale is a Markov chain for which the expected value of the chain does not change in going from $t \to t + 1$, i.e. $E[X_{t+1} \mid X_t] = X_t$.

Examples of Martingales

1. A simple random walk with $p = 1/2$.

2. A game where we add 2 to $X_t$ with probability 1/3 and subtract 1 with probability 2/3.

3. A random walk $X_t$ with $p \ne 1/2$ is not a martingale, but $Z_t = \left( \dfrac{1 - p}{p} \right)^{X_t}$ is a martingale.

The expected value, $E[X_t]$, stays constant for all $t$.
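The second and third examples can be verified in one line each. For the game with steps $+2$ and $-1$, and for $Z_t$ with the shorthand $\rho = (1 - p)/p = q/p$ (a symbol introduced here for convenience),

$$
\begin{aligned}
E[X_{t+1} \mid X_t] &= X_t + 2 \cdot \tfrac{1}{3} - 1 \cdot \tfrac{2}{3} = X_t \\
E[Z_{t+1} \mid X_t] &= p\,\rho^{X_t + 1} + q\,\rho^{X_t - 1} = \rho^{X_t} \left( p \cdot \frac{q}{p} + q \cdot \frac{p}{q} \right) = \rho^{X_t} (q + p) = Z_t
\end{aligned}
$$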


Stopping times

For a stochastic process $X_t$, define $T$ as a stopping time if the event $T = t$ can be determined independently of the values of $X_{t+1}, X_{t+2}, \dots$

Example: stop the process when $X_T = y$ for some value $y$.

Stopping the process one iteration before (i.e. finding $T$ such that $X_{T+1} = y$) means $T$ is not a stopping time.

Optional stopping theorem

If $X_t$ is a martingale with $X_0 = a$, $T$ is a stopping time and either

$X_t$ is bounded up to $T$: we know $x_{\max}$ such that $|X_t| \le x_{\max}$ for all $t \le T$, or

$T$ is bounded: we know $t_{\max}$ such that $T \le t_{\max}$,

then $E[X_T] = a$ (on average the value of $X$ at $T$ is $a$).


Stopping times (2)

A random walk leaves some range

Consider a martingale $X_t$ with $X_0 = a$, two values $r > a > s$ and their stopping times $T_r$ and $T_s$. What is the probability we hit $r$ first? The optional stopping theorem gives us

$$P(T_r < T_s) = \frac{a - s}{r - s}$$

Gambler’s ruin

The gambler’s ruin result for $p = 1/2$ follows immediately from this: substituting $r = c$ and $s = 0$ gives $P(\text{rich}) = a/c$. The case where $p \ne 1/2$ can be solved using the $Z = (q/p)^X$ trick.
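Both results follow from the same one-line calculation, sketched here under the assumption that the walk moves in unit steps, so that at the stopping time $T = \min(T_r, T_s)$ it sits exactly on $r$ or on $s$. Writing $P \equiv P(T_r < T_s)$, the optional stopping theorem gives

$$
a = E[X_T] = r\,P + s\,(1 - P) \quad\Rightarrow\quad P = \frac{a - s}{r - s}
$$

For $p \ne 1/2$, apply the same argument to the martingale $Z_t = \rho^{X_t}$ with $\rho = q/p$, $r = c$ and $s = 0$:

$$
\rho^a = E[Z_T] = \rho^c\,P + \rho^0\,(1 - P) \quad\Rightarrow\quad P = \frac{1 - \rho^a}{1 - \rho^c}
$$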
