BU7527 - Mathematics of Contingent Claims Mike Peardon Michaelmas Term, 2015


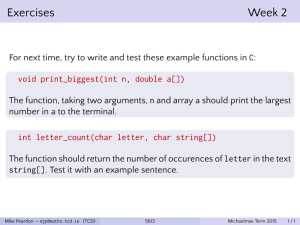

BU7527 - Mathematics of Contingent Claims

Mike Peardon

School of Mathematics

Trinity College Dublin

Michaelmas Term, 2015

Mike Peardon (TCD)

BU7527

Michaelmas Term, 2015

1 / 49

Probability


Sample space

Consider performing an experiment where the outcome is purely

randomly determined and where the experiment has a set of

possible outcomes.

Sample Space

A sample space, S associated with an experiment is a set such that:

1. each element of S denotes a possible outcome O of the experiment, and

2. performing the experiment leads to a result corresponding to one element of S in a unique way.

Example: flipping a coin - choose the sample space S = {H, T }

corresponding to coin landing heads or tails.

Not unique: choose the sample space S = {L} corresponding to

coin just landing. Not very useful!


Events

Events

An event, E can be defined for a sample space S if a question can be put

that has an unambiguous answer for all outcomes in S. E is the subset of

S for which the question is true.

Example 1: Two coin flips, with S = {HH, HT, TH, TT }. Define the

event E1T = {HT, TH }, which corresponds to one and only one tail

landing.

Example 2: Two coin flips, with S = {HH, HT, TH, TT }. Define the

event E≥1T = {HT, TH, TT }, which corresponds to at least one tail

landing.


Probability measure

We can now define a probability model, which consists of a sample space S, a collection of events (which are all subsets of S) and a probability measure.

Probability measure

The probability measure assigns to each event E a probability P(E), with

the following properties:

1. P(E) is a non-negative real number with 0 ≤ P(E) ≤ 1.

2. P(∅) = 0 (∅ is the empty set event).

3. P(S) = 1, and

4. P is additive, meaning that if E1, E2, . . . is a sequence of disjoint events then

P(E1 ∪ E2 ∪ . . . ) = P(E1) + P(E2) + . . .

Two events are disjoint if they have no common outcomes.

Probability measure (2)

Venn diagrams give a very useful way of visualising probability

models.

Example: E^c ⊂ S is the complement to event E, and is the set of all outcomes NOT in E (i.e. E^c = {x : x ∉ E}).

The probability of an event is visualised as the area of the region in the Venn diagram.

[Figure: Venn diagram of the sample space S showing the event E and its complement E^c.]

The intersection A ∩ B and union A ∪ B of two events can be depicted ...

Probability measure (3)

The intersection of two subsets A ⊂ S and B ⊂ S:

A ∩ B = {x : x ∈ A and x ∈ B}

[Figure: Venn diagram of S with the overlap of A and B shaded.]

The union of two subsets A ⊂ S and B ⊂ S:

A ∪ B = {x : x ∈ A or x ∈ B}

[Figure: Venn diagram of S with the whole of A and B shaded.]

Probability measure (4)

The Venn diagram approach makes it easy to remember:

P(E^c) = 1 − P(E)

P(A ∪ B) = P(A) + P(B) − P(A ∩ B)

Also define the conditional probability P(A|B), which is the probability event A occurs, given event B has occurred.

Since event B occurs with probability P(B) and both events A and B occur with probability P(A ∩ B), the conditional probability P(A|B) can be computed (for P(B) > 0) from

Conditional probability

$$P(A|B) = \frac{P(A \cap B)}{P(B)}$$

Conditional probability (1)

Conditional probability describes situations when partial

information about outcomes is given

Example: coin tossing

Three fair coins are flipped. What is the probability that the first coin

landed heads given exactly two coins landed heads?

S = {HHH, HHT, HTH, HTT, THH, THT, TTH, TTT }

A = {HHH, HHT, HTH, HTT } and B = {HHT, HTH, THH }

A ∩ B = {HHT, HTH }

Answer:

$$P(A|B) = \frac{P(A \cap B)}{P(B)} = \frac{2/8}{3/8} = \frac{2}{3}$$
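The three-coin calculation can be checked by listing the sample space and counting outcomes. This is only a sketch: the helper name `prob` and the use of exact fractions are choices made for this illustration, and it assumes all eight outcomes are equally likely.

```python
from fractions import Fraction
from itertools import product

# Sample space: all sequences of three fair coin flips, each equally likely.
S = ["".join(flips) for flips in product("HT", repeat=3)]

def prob(event):
    """Probability of an event (a set of outcomes) under equal likelihood."""
    return Fraction(len(event), len(S))

A = {o for o in S if o[0] == "H"}        # first coin landed heads
B = {o for o in S if o.count("H") == 2}  # exactly two coins landed heads

p_a_given_b = prob(A & B) / prob(B)
print(p_a_given_b)  # 2/3
```

Counting outcomes only works here because each element of S has the same probability; for a biased coin the outcomes would need individual weights.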

Conditional probability (2)

Bayes’ theorem

For two events A and B with P(A) > 0 and P(B) > 0 we have

$$P(A|B) = \frac{P(A)\,P(B|A)}{P(B)}$$

Since P(A|B) = P(A ∩ B)/P(B) from the conditional probability result, we see P(A ∩ B) = P(B)P(A|B).

Switching A and B also gives P(B ∩ A) = P(A)P(B|A)

. . . A ∩ B is the same as B ∩ A . . .

so we get P(A)P(B|A) = P(B)P(A|B) and Bayes’ theorem follows

[Portrait: Thomas Bayes (1702-1761)]

Partitions of state spaces

Suppose we can completely partition S into n disjoint events,

A1 , A2 , . . . An , so S = A1 ∪ A2 ∪ · · · ∪ An .

Now for any event E, we find

P(E) = P(E|A1)P(A1) + P(E|A2)P(A2) + . . . + P(E|An)P(An)

This result is seen by using the conditional probability theorem and additivity property of the probability measure: E is the disjoint union of the pieces E ∩ Ai, and P(E ∩ Ai) = P(E|Ai)P(Ai). It can be remembered with the Venn diagram:

[Figure: Venn diagram of S partitioned into A1, ..., A5, with the event E overlapping several of the parts.]

A sobering example

With the framework built up so far, we can make powerful (and

sometimes surprising) predictions...

Diagnostic accuracy

A new clinical test for swine flu has been devised that has a 95% chance

of finding the virus in an infected patient. Unfortunately, it has a 1%

chance of indicating the disease in a healthy patient (false positive). One

person per 1,000 in the population is infected with swine flu. What is the

probability that an individual patient diagnosed with swine flu by this

method actually has the disease?


Answer: about 8.7%
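The 8.7% figure follows from Bayes' theorem, with P(positive) obtained by partitioning the population into infected and healthy patients. A minimal sketch, where the function and parameter names are invented for this illustration:

```python
def posterior_infected(sensitivity, false_positive_rate, prevalence):
    """P(infected | positive test) via Bayes' theorem."""
    # Total probability of a positive result, summed over the partition
    # {infected, healthy} of the population.
    p_positive = (sensitivity * prevalence
                  + false_positive_rate * (1 - prevalence))
    return sensitivity * prevalence / p_positive

p = posterior_infected(sensitivity=0.95,
                       false_positive_rate=0.01,
                       prevalence=0.001)
print(f"{p:.3f}")  # 0.087
```

The result is small because healthy patients outnumber infected ones by 999 to 1, so even a 1% false positive rate produces far more false positives than true positives.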


The Monty Hall problem

When it comes to probability, intuition is often not

very helpful...

The Monty Hall problem

In a gameshow, a contestant is shown three doors and asked to select

one. Hidden behind one door is a prize and the contestant wins the prize

if it is behind their chosen door at the end of the game. The contestant

picks one of the three doors to start. The host then opens at random one

of the remaining two doors that does not contain the prize. Now the

contestant is asked if they want to change their mind and switch to the

other, unopened door. Should they? Does it make any difference?


P(Win)=2/3 when switching, P(Win) = 1/3 otherwise
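For anyone who doubts the 2/3 result, a simulation of the game as described above gives an empirical check. This is a sketch only: the door labels, seed and number of trials are arbitrary choices, and the estimated win rates fluctuate slightly around the exact values.

```python
import random

def play(switch, rng):
    """Play one game; return True if the contestant wins the prize."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    pick = rng.choice(doors)
    # Host opens, at random, a door that is neither the pick nor the prize.
    opened = rng.choice([d for d in doors if d != pick and d != prize])
    if switch:
        # Move to the one door that is neither picked nor opened.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == prize

def win_rate(switch, trials=100_000, seed=1):
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(trials)) / trials

print("switch:", win_rate(switch=True))
print("stay:  ", win_rate(switch=False))
```

Switching wins exactly when the first pick was wrong, which happens with probability 2/3; the simulation just makes that visible.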


The Monty Hall problem (2)

This misunderstanding about conditional probability can lead to

incorrect conclusions from experiments...

Observing rationalised decision making?

An experiment is performed where a monkey picks between two

coloured sweets. Suppose he picks black in preference to white. The

monkey is then offered white and red sweets and the experimenters

notice more often than not, the monkey continues to reject the white

sweets and chooses red. The experimental team concludes the monkey

has consciously rationalised his decision to reject white sweets and

reinforced his behaviour. Are they right in coming to this conclusion?

Answer: Not necessarily. Based on the first observation, there are three possible compatible rankings (B>W>R, B>R>W, R>B>W). In 2 of 3, red is preferred to white, so a priori that outcome is more likely anyhow.

Independent events

Independent events

Events A and B are said to be independent if

P(B ∩ A) = P(A) × P(B)

If P(A) > 0 and P(B) > 0, then independence implies both:

P(B|A) = P(B) and

P(A|B) = P(A).

These results can be seen using the conditional probability result.

Example: Two coins are flipped where the probability the first lands

on heads is 1/2 and similarly for the second. If these events are

independent we can now show that all outcomes in

S = {HH, HT, TH, TT } have probability 1/4.


Summary

Defining a probability model means choosing a good sample space

S, collection of events (which all correspond to subsets of S) and a

probability measure defined on all the events.

Events are called disjoint if they have no common outcomes.

Understanding and remembering probability calculations or results

is often made easier by visualising with Venn diagrams.

The conditional probability P(A|B) is the probability event A occurs given event B also occurred.

Bayes’ theorem relates P(A|B) to P(B|A).

Calculations are often made easier by partitioning state spaces - i.e. finding disjoint A1, A2, . . . An such that S = A1 ∪ A2 ∪ . . . ∪ An.

Events are called independent if P(A ∩ B) = P(A) × P(B).


Binomial experiments

A binomial experiment

Binomial experiments are defined by a sequence of probabilistic

trials where:

1. Each trial returns a true/false result

2. Different trials in the sequence are independent

3. The number of trials is fixed

4. The probability of a true/false result is constant

Usual question to ask - what is the probability the trial result is true

x times out of n, given the probability of each trial being true is p?


Examples of binomial experiments

Examples and counter-examples

These examples are binomial experiments:

1. Flip a coin 10 times, does the coin land heads?

2. Ask the next ten people you meet if they like pizza

3. Screen 1000 patients for a virus

... and these are not:

Flip a coin until it lands heads (not fixed number of trials)

Ask the next ten people you meet their age (not true/false)

Is it raining on the first Monday of each month? (not a constant probability)

Number of experiments with x true outcomes

Number of selections

There are

$$N_{x,n} \equiv {}^{n}C_{x} = \frac{n!}{x!\,(n-x)!}$$

ways of having x out of n selections.

Coin flip outcomes

Example: how many outcomes of five coin flips result in the coin

landing heads three times?

Answer:

$$N_{3,5} = \frac{5!}{3!\,2!} = 10$$

They are: {HHHTT, HHTHT, HHTTH, HTHHT, HTHTH, HTTHH, THHHT, THHTH, THTHH, TTHHH}
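The count of ten can be reproduced by choosing which of the five flips land heads. A short sketch using Python's standard library (`math.comb` needs Python 3.8 or later; the variable names are only illustrative):

```python
from itertools import combinations
from math import comb

n, x = 5, 3
# Choose which x of the n flips land heads; each choice gives one outcome.
outcomes = [
    "".join("H" if i in heads else "T" for i in range(n))
    for heads in combinations(range(n), x)
]
print(len(outcomes), comb(n, x))  # 10 10
print(outcomes[0], outcomes[-1])  # HHHTT TTHHH
```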

Probability of x out of n true trials

If the probability of each trial being true is p (and so the probability

of it being false is q = 1 − p) ...

and the selection trials are independent then...

Probability of x out of n true outcomes

$$P_{x,n} = {}^{n}C_{x}\, p^{x} q^{n-x} \equiv {}^{n}C_{x}\, p^{x} (1-p)^{n-x}$$

We can compute this probability since we can count the number of

cases where there are x true trials and each case has the same

probability
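As a quick numerical illustration of this formula, the sketch below evaluates it directly. The function name `binomial_pmf` and the test values are assumptions made for this example, not part of the course material.

```python
from math import comb

def binomial_pmf(x, n, p):
    """Probability of exactly x true trials out of n independent trials."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

# Three heads in five flips of a fair coin: 10 cases, each with probability 1/32.
print(binomial_pmf(3, 5, 0.5))  # 0.3125

# The probabilities over x = 0..n should sum to one.
total = sum(binomial_pmf(x, 10, 0.3) for x in range(11))
print(total)
```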


Infinite state spaces

The set of outcomes of a probabilistic experiment may be an

uncountably infinite set

Here, the distinction between outcomes and events is more

important: events can be assigned probabilities, outcomes can’t

Outcomes described by a continuous variable

1. If I throw a coin and measure how far away it lands, the state space is described by the set of real numbers, Ω = R

2. I could also simultaneously see if it lands heads or tails. This set of outcomes is still “uncountably infinite”. The state space is now Ω = {H, T} × R

It is impossible to define a useful probability that the coin lands exactly 1m away.

Events can be defined - for example, an event might be “the coin lands heads more than 1m away.”

Random numbers


Stochastic variables or random numbers

To be mathematically correct, stochastic variables (or random

numbers) are neither variables nor numbers! They are functions

taking an outcome and returning a number.

Depending on the nature of the state-space, they can be discrete or

continuous.

Random numbers

A random number X is a function that converts outcomes on a state

space Ω = {O1 , O2 , O3 . . . } to a number in {x1 , x2 , x3 , . . . } so X(Oi ) = xi

Example - heads you win ...

If I flip a coin and pay you €1 if it lands heads and you pay me €2 if it lands tails, then the money you get after playing this game is a random number: Ω = {H, T}, X(H) = 1, X(T) = −2

Expected value of a random number

Imagine we sample a random number lots of times and we know

the probability different values will occur. We can guess what the

average of all these samples will be:

P(O1 )x1 + P(O2 )x2 + P(O3 )x3 + . . .

Expected value

The expected value of a discrete random number which can take any of

N possible values is defined as

$$E[X] = \sum_{i=1}^{N} X(O_i)\, P(O_i) \equiv \sum_{i=1}^{N} x_i\, P(O_i)$$

It gives the average of n samples of the random number as n gets large

Expected value (2)

Back to our example:

Heads you win ...

Before, we had X(H ) = 1 and X(T ) = −2. If both are equally likely (fair

coin) then the expected value,

$$E[X] = P(H) \times X(H) + P(T) \times X(T) = \tfrac{1}{2} \times 1 + \tfrac{1}{2} \times (-2) = -\tfrac{1}{2}$$

So playing n times you should expect to lose €n/2. Not a good idea to play this game!

Expected value (3)

The expected value of a function f : R → R applied to our random

number can be defined easily too.

Expected value of a function

$$E[f(X)] = \sum_{i=1}^{N} f(x_i)\, P(O_i)$$

Taking the expected values of two different random numbers X and Y is linear, i.e. for constant numbers α, β we see

E[αX + βY] = αE[X] + βE[Y]

Variance and standard deviation

Variance

The variance of X is defined as

$$\sigma_X^2 = E[(X - \mu_X)^2] \equiv E[X^2] - E[X]^2$$

where µX = E[X] is the expected value of X.

Standard deviation

The standard deviation of X, σX is the square root of the variance. If X

has units, σX has the same units.

The variance and standard deviation are non-negative: σX ≥ 0

They measure the amount a random variable fluctuates from

sample-to-sample.


Variance (2)

Returning again to our game:

Heads you win ...

The variance of X can be computed. Recall that µX = −1/2. The variance is then

$$\sigma_X^2 = \tfrac{1}{2} \times \left(1 + \tfrac{1}{2}\right)^2 + \tfrac{1}{2} \times \left(-2 + \tfrac{1}{2}\right)^2 = \tfrac{1}{2} \times \tfrac{9}{4} + \tfrac{1}{2} \times \tfrac{9}{4} = \tfrac{9}{4}$$

and the standard deviation of X is 3/2.
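The expected value and variance of the coin game can be reproduced with exact fractions. In this sketch the dictionaries `payoff` and `prob` and the helper `expected` are names introduced for illustration; the second print checks the equivalent form E[X²] − E[X]².

```python
from fractions import Fraction

# Payoffs X(O) and probabilities P(O) for the fair-coin game.
payoff = {"H": Fraction(1), "T": Fraction(-2)}
prob = {"H": Fraction(1, 2), "T": Fraction(1, 2)}

def expected(f):
    """E[f(X)] as the probability-weighted sum over outcomes."""
    return sum(f(payoff[o]) * prob[o] for o in payoff)

mean = expected(lambda x: x)
variance = expected(lambda x: (x - mean) ** 2)
print(mean, variance)  # -1/2 9/4

# Same variance from E[X^2] - E[X]^2.
print(expected(lambda x: x**2) - mean**2)  # 9/4
```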

The expected number of successful trials

Consider the binomial experiment where n trials are performed with

probability of success p

Recall

$$P(x) = {}^{n}C_{x}\, p^{x} q^{n-x} \equiv \frac{n!}{x!\,(n-x)!}\, p^{x} q^{n-x}$$

So the expected value of x is

$$\mu_X = \sum_{x=1}^{n} x\,P(x) = \sum_{x=1}^{n} x\, \frac{n!}{x!\,(n-x)!}\, p^{x} q^{n-x} = np$$

A bit more work gives

$$\sigma_X^2 = npq$$
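The results np and npq can be checked numerically by summing over the binomial probabilities directly. The values n = 12 and p = 0.3 below are arbitrary illustrative choices.

```python
from math import comb

n, p = 12, 0.3  # illustrative values, not from the lecture
q = 1 - p

# P(x) for x = 0..n
pmf = [comb(n, x) * p**x * q ** (n - x) for x in range(n + 1)]

mean = sum(x * px for x, px in enumerate(pmf))
var = sum((x - mean) ** 2 * px for x, px in enumerate(pmf))

print(mean, n * p)      # both close to 3.6
print(var, n * p * q)   # both close to 2.52
```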

Poisson distribution

A limiting case for the binomial experiment can be considered by

taking n → ∞, while keeping µ = n × p fixed.

This models the number of times a random occurrence happens in an interval (radioactive decay, for example).

Now x, the number of times the event occurs, follows

The Poisson distribution

For integer x ≥ 0,

$$P(x) = \frac{\mu^{x} e^{-\mu}}{x!}$$

Check that $\sum_{x=0}^{\infty} P(x) = 1$, i.e. the probability is properly normalised.

Also find the expected value of X is just µ

Poisson distribution (2)

Example: chirping crickets

A field full of crickets are chirping at random, with on average 0.6 chirps per second. Assuming the chirps obey the Poisson distribution, what is the probability we hear at most 2 chirps in one second?

Answer: P(0) + P(1) + P(2).

$$P(0) = \frac{0.6^{0} e^{-0.6}}{0!} = e^{-0.6}$$

(NB remember 0! = 1)

$$P(1) = \frac{0.6^{1} e^{-0.6}}{1!} = 0.6\,e^{-0.6} \quad\text{and}\quad P(2) = \frac{0.6^{2} e^{-0.6}}{2!} = 0.18\,e^{-0.6}$$

$$P(0) + P(1) + P(2) = 1.78\,e^{-0.6} \approx 0.9769$$
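The cricket calculation is easy to reproduce. The sketch below assumes the Poisson form with x! in the denominator; `poisson_pmf` is a name chosen for this example.

```python
from math import exp, factorial

def poisson_pmf(x, mu):
    """P(x) = mu^x e^(-mu) / x! for integer x >= 0."""
    return mu**x * exp(-mu) / factorial(x)

mu = 0.6
p_at_most_2 = sum(poisson_pmf(x, mu) for x in range(3))
print(round(p_at_most_2, 4))  # 0.9769

# Normalisation check: terms beyond x = 50 are negligible for mu = 0.6.
normalisation = sum(poisson_pmf(x, mu) for x in range(50))
print(normalisation)
```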

Continuous random numbers (1)

For continuous random number X (one that can take any value in

some range [a, b]), the sample space is (uncountably) infinite.

Consider the event E which occurs when the random number

X < x.

NB: Big X ≡ random number, little x ≡ reference point for E

Cumulative distribution function

The cumulative distribution function (cdf), FX (x) of a continuous random

number X is the probability of the event E : X < x;

FX(x) = P(X < x)

Since it is a probability, 0 ≤ FX(x) ≤ 1

If X is in the range [a, b] then FX(a) = 0 and FX(b) = 1.

FX is monotonically increasing, which means that if q > p then FX(q) ≥ FX(p).

Continuous random numbers (2)

Since E occurs when X < x, then E^c occurs when X ≥ x and so P(X < x) + P(X ≥ x) = 1 and

P(X ≥ x) = 1 − FX(x)

Take two events, A which occurs when X < q and B when X ≥ p and assume q > p.

[Figure: number line with A covering X < q and B covering X ≥ p, overlapping between p and q.]

The event A ∪ B always occurs (so P(A ∪ B) = 1) and A ∩ B occurs

when p ≤ X < q

Since P(A ∪ B) = P(A) + P(B) − P(A ∩ B) we have

P(p ≤ X < q) = FX (q) − FX (p)


Continuous random numbers (3)

Example: exponential distribution

$$F_X(x) = \begin{cases} 1 - e^{-2x} & \text{when } x \ge 0 \\ 0 & \text{when } x < 0 \end{cases}$$

Describes random number X in range [0, ∞)

What is probability X < 1?

$$P(X < 1) = F_X(1) = 1 - e^{-2} = 0.864664\ldots$$

What is probability X ∈ [1/2, 1]?

$$P(\tfrac{1}{2} < X < 1) = F_X(1) - F_X(\tfrac{1}{2}) = 1 - e^{-2} - 1 + e^{-1} = 0.2325442\ldots$$

[Figure: plot of FX(x) against x for −0.5 ≤ x ≤ 2.]
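Both probabilities come straight from differences of the cdf, which the following sketch evaluates. The function names are illustrative, and the rate 2 is taken from the example's exponent.

```python
from math import exp

def exponential_cdf(x, lam=2.0):
    """F_X(x) = 1 - exp(-lam * x) for x >= 0, and 0 for x < 0."""
    return 1.0 - exp(-lam * x) if x >= 0 else 0.0

def prob_between(p, q, cdf=exponential_cdf):
    """P(p <= X < q) = F_X(q) - F_X(p)."""
    return cdf(q) - cdf(p)

p_below_1 = exponential_cdf(1.0)
p_half_to_1 = prob_between(0.5, 1.0)
print(p_below_1)    # about 0.8647
print(p_half_to_1)  # about 0.2325
```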

Probability density function

If p and q are brought closer together so q = p + dp then

$$P(p \le X < p + dp) = F_X(p + dp) - F_X(p) \approx F_X(p) + \frac{dF_X}{dp}\,dp - F_X(p) = \frac{dF_X}{dp}\,dp$$

Probability density function

The probability density function gives the probability a random number falls in an infinitesimally small interval, scaled by the size of the interval.

$$f_X(x) = \lim_{dx \to 0} \frac{P(x \le X < x + dx)}{dx}$$

For a random number X in the range [a, b],

$$F_X(x) = \int_a^x f_X(z)\,dz$$

Probability density function (2)

fX (the pdf) is not a probability. FX (the cdf) is.

While fX is still non-negative, it can be bigger than one.

For X in the range [a, b], FX(b) = 1 so

$$f_X \ge 0 \quad\text{and}\quad \int_a^b f_X(z)\,dz = 1$$

The uniform distribution

A random number U that is in the range [a, b] is uniformly

distributed if all values in that range are equally likely.

This implies the pdf is a constant, fU(u) = α. Normalising this means ensuring $\int_a^b f_U(u)\,du = 1$. For u in [a, b],

$$f_U(u) = \frac{1}{b-a} \quad\text{and}\quad F_U(u) = \frac{u-a}{b-a}$$

[Figure: two panels, the pdf and the cdf of uniform U[1/4, 3/2], each plotted against x for −0.5 ≤ x ≤ 2.]

The exponential distribution

For a positive parameter, λ > 0, a random number W that is in the range [0, ∞) is called exponentially distributed if the density function falls exponentially.

The pdf is proportional to e^{−λw}. Normalising again means ensuring $\int_0^\infty f_W(w)\,dw = 1$. So

$$f_W(w) = \begin{cases} \lambda e^{-\lambda w}, & w \ge 0 \\ 0, & w < 0 \end{cases} \quad\text{and}\quad F_W(w) = \begin{cases} 1 - e^{-\lambda w}, & w \ge 0 \\ 0, & w < 0 \end{cases}$$

[Figure: two panels, the pdf and the cdf of exponential(2), each plotted against x for −0.5 ≤ x ≤ 2.]

The normal distribution

The normal distribution N (µ, σ2 ) is parameterised by two numbers,

µ and σ.

pdf is the “bell curve”

The cdf doesn’t have a nice closed-form expression (but it is sufficiently important that the closely related error function gets its own name - erf(x)).

$$f_X(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

[Figure: two panels, the pdf and the cdf of N(0.75, 0.4), each plotted against x for −0.5 ≤ x ≤ 2.]

Continuous random numbers (4)

An expected value of a continuous random number can be defined,

in analogy to that of the discrete random number

Expected value

For a random number X taking a value in [a, b], the expected value is

defined as

$$E[X] = \int_a^b z\, f_X(z)\,dz$$

As with discrete random numbers, the easiest way to think of this is

the running average of n samples of X as n gets very large.

Can show E[αX + βY] = αE[X] + βE[Y]


Continuous random numbers (5)

The variance of a continuous random number can be defined in analogy to that of the discrete random number

Variance

The variance of a continuous random number X has the same definition:

$$\sigma_X^2 = E[X^2] - E[X]^2$$

Again, like discrete random numbers, the standard deviation is the square root of the variance. Both the variance and standard deviation are non-negative.

Example: E[U] and σU² of uniform U[a, b]

For U a uniform variate on [a, b], what is

1. E[U]?

2. σU²?

Using definitions,

$$E[U] = \frac{1}{b-a} \int_a^b z\,dz = \frac{b+a}{2}$$

The mean is (as might be guessed) the mid-point of [a, b]. Similarly, substituting to find E[U²] gives

$$\sigma_U^2 = \frac{(b-a)^2}{12}$$

which depends only on b − a, the width of the range
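The two uniform results can be checked by carrying out the integrals numerically. This sketch uses a simple midpoint rule written for the purpose; the range [0.25, 1.5] and the helper name `integrate` are illustrative choices.

```python
def integrate(f, a, b, steps=100_000):
    """Midpoint-rule approximation to the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (k + 0.5) * h) for k in range(steps))

a, b = 0.25, 1.5  # illustrative range

def pdf(z):
    """Uniform density on [a, b]."""
    return 1.0 / (b - a)

mean = integrate(lambda z: z * pdf(z), a, b)
second_moment = integrate(lambda z: z * z * pdf(z), a, b)
variance = second_moment - mean**2

print(mean, (a + b) / 2)
print(variance, (b - a) ** 2 / 12)
```

The same `integrate` helper could be pointed at the exponential density on a long finite range to approximate 1/λ and 1/λ², though the infinite upper limit then has to be truncated.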

Example (2): E[W] and σW² of exponential(λ)

For W an exponentially distributed number with parameter λ, what is

1. E[W]?

2. σW²?

Again using definitions,

$$E[W] = \int_0^\infty w \cdot \lambda e^{-\lambda w}\,dw = \frac{1}{\lambda}$$

From the definition of E[W²], we get

$$\sigma_W^2 = \frac{1}{\lambda^2}$$

so the expected value and standard deviation of exponentially distributed random numbers are given by λ⁻¹

Visualising a probability density function

Given some sample data, useful to plot a pdf. This can be done by

binning data and plotting a histogram.

Divide range [a, b] for possible values of X into N bins. Count $m_i$, the number of times X lies in

$$r_i = \left[a + \frac{i(b-a)}{N},\; a + \frac{(i+1)(b-a)}{N}\right)$$

Plot $m_i$ vs x

Care must be taken choosing bin-size; too big, structure will be lost,

too small, fluctuations will add features.
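The binning procedure can be sketched in a few lines. Everything named here is an assumption for illustration: the helper `histogram_counts`, the seed, and the rate 1.0 of the sampled exponential distribution (the slides do not state which rate was used for the plots).

```python
import random

def histogram_counts(samples, a, b, n_bins):
    """Count m_i, the number of samples in [a + i*w, a + (i+1)*w), w = (b-a)/n_bins."""
    width = (b - a) / n_bins
    counts = [0] * n_bins
    for x in samples:
        if a <= x < b:
            # min() guards against round-off pushing x into a bin past the end.
            i = min(int((x - a) / width), n_bins - 1)
            counts[i] += 1
    return counts

rng = random.Random(0)
samples = [rng.expovariate(1.0) for _ in range(10_000)]  # assumed rate 1.0

n_bins = 10
counts = histogram_counts(samples, 0.0, 10.0, n_bins)

# Dividing by (number of samples * bin width) turns counts into a density estimate.
width = 10.0 / n_bins
density = [m / (len(samples) * width) for m in counts]
print(counts[:3])
print(density[:3])
```

Rerunning with `n_bins` set to 100 or 1000 shows the trade-off described above: more bins resolve the shape better, but each bin holds fewer samples and so fluctuates more.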

Visualising the exponential distribution - 10,000 samples

[Figure: three histograms of pX(x) against x for 0 ≤ x ≤ 10, using 10 bins, 100 bins and 1000 bins in the range 0 to 10.]

Joint probability distributions

Sometimes in an experiment, we measure two or more (random)

numbers.

Now the sample space is more complicated, but it is still possible to

define events usefully.

The cumulative distribution function is defined as a probability:

FX,Y (x, y) = P(X ≤ x and Y ≤ y)

Probability that (X, Y) lies inside lower-left quadrant defined by X ≤ x and Y ≤ y

In this example, it would be approximated by the fraction of red dots to the total number of red and green dots.

[Figure: scatter plot of red and green dots in the plane, with the reference values x and y marked on the axes.]

Joint probability distributions (2)

Can write expressions for P(x0 ≤ X < x1 and y0 ≤ Y < y1 ) in terms

of FX,Y : Get

P(x0 ≤ X < x1 and y0 ≤ Y < y1 ) =

FX,Y (x1 , y1 ) − FX,Y (x0 , y1 ) − FX,Y (x1 , y0 ) + FX,Y (x0 , y0 )

A joint probability density can be defined too: it is the ratio of the

probability a point (X, Y) lands inside an infinitesimally small area

dxdy located at (x, y) to the area dxdy:

$$f_{X,Y}(x, y) = \lim_{dx \to 0,\, dy \to 0} \frac{P(X \in [x, x+dx] \text{ and } Y \in [y, y+dy])}{dx\,dy}$$

Joint probability distributions (3)

Independent random numbers

Two random numbers, X and Y can be said to be independent if for all x

and y,

FX,Y (x, y) = FX (x) × FY (y)

this is equivalent to

fX,Y (x, y) = fX (x) × fY (y)

As with independent events, if two random numbers are

independent, knowing something about one doesn’t allow us to

infer anything about the other
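For independent random numbers, the rectangle probability built from the joint cdf should factorise into a product of two one-dimensional interval probabilities. The sketch below checks this for a hypothetical pair, X exponential with rate 2 and Y exponential with rate 1; the distributions, rates and function names are assumptions made for the example.

```python
from math import exp

def cdf_exp(x, lam):
    """cdf of an exponential random number with parameter lam."""
    return 1.0 - exp(-lam * x) if x >= 0 else 0.0

def joint_cdf(x, y):
    """F_{X,Y}(x, y) for independent X ~ exponential(2), Y ~ exponential(1) (assumed)."""
    return cdf_exp(x, 2.0) * cdf_exp(y, 1.0)

def rect_prob(F, x0, x1, y0, y1):
    """P(x0 <= X < x1 and y0 <= Y < y1) from the joint cdf F."""
    return F(x1, y1) - F(x0, y1) - F(x1, y0) + F(x0, y0)

lhs = rect_prob(joint_cdf, 0.5, 1.0, 0.2, 0.7)
rhs = ((cdf_exp(1.0, 2.0) - cdf_exp(0.5, 2.0))
       * (cdf_exp(0.7, 1.0) - cdf_exp(0.2, 1.0)))
print(lhs, rhs)  # the two values agree up to round-off
```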


Summary (1)

Mathematically, a random number is a function taking an outcome

and returning a number

They can be discrete or continuous

Their expected value is the sum of all possible values assigned to

outcomes, weighted by the probability of each outcome.

The variance (and standard deviation) of a random number quantifies how much it fluctuates.

In a binomial experiment, the random number X that counts the number of successes out of n trials has probability $P(X = x) = {}^{n}C_{x}\, p^{x} (1-p)^{n-x}$, where p is the probability a single trial is successful.

Random occurrences can be modelled by the Poisson distribution. The probability there will be x occurrences if µ are expected is $P(X = x) = \frac{\mu^{x} e^{-\mu}}{x!}$

Summary (2)

Continuous random numbers can be described by a cumulative

distribution function (cdf). It gives the probability X will be smaller

than some reference value x.

The probability density function (pdf) is the ratio of the probability a

random number will fall in an infinitesimally small range to the size

of that range.

Given the pdf, the expected value and variance of a continuous

random number can be computed by integration.

If a random number is sampled many times, an approximation to its

pdf can be visualised by binning and plotting a histogram.

If more than one random number is measured, probabilities are

described by joint distributions.

Two random numbers are independent if their joint distribution is

separable.
