
IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 30, NO. 1, JANUARY 2011

Reducing Test Execution Cost of Integrated, Heterogeneous Systems Using Continuous Test Data

Sounil Biswas and R. D. (Shawn) Blanton, Fellow, IEEE

Abstract—Integrated, heterogeneous systems are comprehensively tested to verify that their performance specifications fall within acceptable ranges. However, explicitly testing every manufactured instance against all of its specifications can be expensive due to the complex requirements for test setup, stimulus application, and response measurement. To reduce manufacturing test cost, we have developed a methodology that uses binary decision forests and several test-specific enhancements to identify redundant tests of an integrated system. Feasibility is empirically demonstrated using test data from over 70 000 manufactured instances of an in-production microelectromechanical system (MEMS) accelerometer and over 4500 manufactured instances of an RF transceiver. Our analysis shows that the expensive cold-mechanical test of the accelerometer and nine of the 22 RF tests of the transceiver are likely redundant.

Index Terms—Binary decision forest, integrated system test, statistical learning, test compaction.

I. Introduction

Over the past decade, the increasing cost of testing integrated, heterogeneous systems¹ has become a problem of paramount importance in the electronics industry [1]. More specifically, the stringent quality requirements for integrated systems have led designers and test engineers to mandate large sets of tests for these systems, which, in turn, has resulted in significant test cost. However, many of these tests may be unnecessary since their outcomes are likely predictable from the results of other applied tests. At the same time, deriving even an approximate functional form of the pass-fail outcome of these redundant tests in terms of parameters such as transistor width, device capacitance, and threshold voltage, together with their distributions, is practically impossible. Moreover, this derivation has to be repeated every time the integrated system's design or manufacturing process is revised. These observations have led us to investigate an automated, data-driven methodology for identifying the redundant tests of an integrated system. This automated methodology is referred to as test compaction.²

Manuscript received February 7, 2010; revised June 8, 2010; accepted August 5, 2010. Date of current version December 17, 2010. This paper was recommended by Associate Editor A. Ivanov.

S. Biswas was with the Department of Electrical and Computer Engineering, Carnegie Mellon University, Pittsburgh, PA 15213 USA, during this work. He is now with Nvidia Corporation, Santa Clara, CA 95050 USA (e-mail: sbiswas@ece.cmu.edu).

R. D. (Shawn) Blanton is with the Department of Electrical and Computer Engineering, Carnegie Mellon University, Pittsburgh, PA 15213 USA (e-mail: blanton@ece.cmu.edu).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TCAD.2010.2066630

¹ An integrated, heterogeneous system, also referred to simply as an “integrated system,” is a batch-fabricated chip that operates either partially or completely with continuous signals.

This paper focuses on the application of statistical learning to identify redundant tests when continuous test measurements are available. Since statistical learning is used to derive the redundant tests, the approach is referred to as statistical test compaction. Our objective is to derive correlation functions for the pass-fail outcomes of (potentially) redundant tests based on the continuous measurements of non-redundant tests, which are referred to as kept tests. Binary decision forests (BDFs) are used in this paper to achieve statistical test compaction, but other methods are also applicable.

The remainder of this paper is organized as follows. Section II includes a brief background discussion along with a description of related prior work, while Section III outlines our statistical test compaction methodology. Section IV then describes our use of BDFs in statistical test compaction. Next, several test-specific enhancements to a BDF [?] are described in Section V. Validation of our statistical test compaction methodology on two in-production integrated systems is presented in Section VI. Finally, conclusions are drawn in Section VII.

² Note that the term test compaction in this paper does not refer to digital test compression (a technique that reduces test cost in digital systems by minimizing the number of test patterns to be applied without any information loss) but rather to a methodology that reduces test cost by eliminating whole tests from being applied.

© 2010 IEEE

0278-0070/$26.00 © 2011 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

BISWAS AND BLANTON: REDUCING TEST EXECUTION COST OF INTEGRATED, HETEROGENEOUS SYSTEMS USING CONTINUOUS TEST DATA

II. Background

This section introduces the necessary terminology and describes previous work.

A. Terminology

A redundant test tk in a set of specification tests T applied to a chip is a test whose pass-fail outcome can be reliably predicted using results from other tests in T. The subset of redundant tests in T is denoted as Tred. A test whose pass-fail outcome cannot be reliably predicted is called a kept test and therefore must be applied to all fabricated chips. The subset of kept tests is denoted as Tkept; therefore, Tred ∪ Tkept = T. In a test compaction methodology, the pass-fail prediction yk for a redundant test tk ∈ Tred is made by a model that is “learned” from kept-test data. This model, represented as a pass-fail correlation function Fk, is derived from historical test data, called the training data (Dtr). Fk is typically easier to derive than a regression function Gk, which predicts the measurement value vk of a redundant test tk.

The accuracy of Fk is estimated by calculating its yield loss (YL) and defect escape (DE) on a separate data set, called the validation data (Dval). Both the training and validation data sets are collected from fully tested chips, and the amount of data required depends on the confidence desired for the correlation function Fk. YL (DE) is the fraction of passing (failing) chips that are mispredicted as failing (passing).³ Note that some prior approaches gauge accuracy based on the average difference between the actual test measurement vk and the value predicted by Gk [2], [3]. However, even when this average difference is low, the pass-fail outcome can still be mispredicted, for example, for chips whose measurements lie close to a specification limit. Alternatively, other approaches use the number of detected faults (in other words, fault coverage) as a measure of accuracy [4], where faults are functional representations of defects that can lead to chip misbehavior. In this scenario, the limited universe of faults considered for redundancy analysis is unlikely to reflect the true accuracy of Fk. In contrast, since YL and DE measure the fraction of chips in the validation data that are mispredicted, and since validation data is likely a good depiction of future data, these metrics are likely to be better indicators of Fk accuracy. It is, however, important to note that excursions in the future data distribution can worsen the accuracy of Fk. The techniques described in [5] can be used to improve the accuracy of Fk when such excursions occur.
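The YL and DE definitions above can be made concrete with a short sketch. It assumes that pass-fail labels are available as Python booleans (True meaning the chip passes tk), both for the actual outcomes in Dval and for the predictions of Fk; the function and helper names are illustrative only. The usage lines also show, with hypothetical numbers, how a regression Gk with a small average error can still mispredict chips that lie near a specification limit.

```python
from typing import Sequence, Tuple


def yield_loss_and_defect_escape(actual_pass: Sequence[bool],
                                 predicted_pass: Sequence[bool]) -> Tuple[float, float]:
    """Return (YL, DE) of one correlation function over a validation set.

    YL: fraction of truly passing chips that are predicted to fail.
    DE: fraction of truly failing chips that are predicted to pass.
    """
    if len(actual_pass) != len(predicted_pass):
        raise ValueError("label sequences must have equal length")
    n_pass = sum(1 for a in actual_pass if a)
    n_fail = len(actual_pass) - n_pass
    lost = sum(1 for a, p in zip(actual_pass, predicted_pass) if a and not p)
    escaped = sum(1 for a, p in zip(actual_pass, predicted_pass) if not a and p)
    yl = lost / n_pass if n_pass else 0.0
    de = escaped / n_fail if n_fail else 0.0
    return yl, de


def passes_spec(v: float, upper_limit: float = 1.0) -> bool:
    # Hypothetical specification: a chip passes t_k when v_k <= upper_limit.
    return v <= upper_limit


# Hypothetical measurements v_k and regression outputs of G_k for five chips.
measured = [0.50, 0.70, 0.98, 1.01, 1.30]
regressed = [0.52, 0.69, 1.01, 0.98, 1.28]
mean_abs_error = sum(abs(a - b) for a, b in zip(measured, regressed)) / len(measured)

yl, de = yield_loss_and_defect_escape([passes_spec(v) for v in measured],
                                      [passes_spec(v) for v in regressed])
print(f"mean |error| = {mean_abs_error:.3f}, YL = {yl:.3f}, DE = {de:.3f}")
```

Here the mean absolute error of Gk is only about 0.02. Nevertheless, the two chips measured at 0.98 and 1.01 sit on opposite sides of the limit, and both are mispredicted, so one of three passing chips is lost (YL = 1/3) and one of two failing chips escapes (DE = 1/2).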

Depending on the data-collection procedure, test data can contain either continuous measurements or binary pass-fail outcomes. Pass-fail test data contains significantly less information about the tested chips than continuous test measurements. For example, a passing chip c1 and a failing chip c2 may both pass a non-redundant test ti. From the pass-fail outcome of ti alone, c1 and c2 are indistinguishable; only the continuous measurements from ti may allow us to distinguish between them. Since continuous and pass-fail test data differ considerably, separate methodologies should be developed for deriving redundant tests from these two types of data. In this paper, we focus on deriving redundant tests using continuous test data. Researchers, including ourselves, have also investigated the use of pass-fail test data for deriving redundant tests; one example methodology is described in our paper [6].
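The information lost in pass-fail data can be shown with a toy example. The specification window for ti, the measurement values, and the decision value theta below are hypothetical. The only point is that both chips map to the same pass-fail bit for ti, whereas a decision value placed between their continuous measurements separates them.

```python
from typing import Dict, Tuple

# Hypothetical specification window for the kept test t_i.
LOW_LIMIT, HIGH_LIMIT = 1.0, 2.0


def passes_ti(v: float) -> bool:
    return LOW_LIMIT <= v <= HIGH_LIMIT


# Hypothetical chips: name -> (measurement of t_i, passes the candidate test?)
chips: Dict[str, Tuple[float, bool]] = {"c1": (1.2, True), "c2": (1.8, False)}

# Pass-fail view of t_i: both chips pass t_i, so they look identical.
binary_view = {name: passes_ti(v) for name, (v, _) in chips.items()}

# Continuous view: a decision value between the two measurements separates them.
theta = 1.5
continuous_view = {name: v < theta for name, (v, _) in chips.items()}

print(binary_view)      # both chips map to True
print(continuous_view)  # c1 -> True, c2 -> False, matching their candidate-test outcomes
```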

B. Past Work

Over the past decade, a great deal of work has focused on developing test compaction for integrated, heterogeneous systems using continuous test measurements. A significant portion of this work has focused on the use of regression techniques. Some of these regression-based approaches use Monte Carlo simulations of the integrated system to derive a joint probability density function (JPDF) of its measurements [2], [4]. The resulting JPDF is used to derive the regression function Gk of a redundant test tk. An Fk is then derived by comparing the predicted output of Gk with the range of acceptable values of tk to predict yk. In [2], Gk is optimized to minimize the average difference between the predicted output of Gk and the actual value of vk, computed over the Tkept measurements in Dtr. Alternatively, [7] derives a Gk that predicts an out-of-spec value for vk when a fault exists in the chip. A similar approach is used in [8], where the authors instead divide the range of acceptable measurement values of Tkept into discrete hypercubes and derive a joint probability mass function (JPMF) of these hypercubes. A JPMF obtained from the training data is used to derive an Fk that maximizes the likelihood of predicting a passing (failing) outcome for chips in Dval that pass (fail) tk. Finally, in [3], an Fk that uses measurements from “alternate” tests is derived. An alternate test is a simplified input stimulus and response measurement that is significantly less expensive than a specification test.

³ The defect escape defined here is not the same as DPM (the number of defective chips per million units shipped) but rather is the fraction of failing chips that are mispredicted.

More recently, other researchers have recognized that go/no-go testing only requires modeling the pass-fail outcome of a test (i.e., yk) and have therefore used binary classification techniques instead. For example, the authors in [9] learn a neural-network-based Fk to identify each possible redundant RF test tk of an in-production cell-phone transceiver. Similarly, we have used support vector machines (SVMs) to derive an Fk for a potentially redundant test tk of an operational amplifier and a MEMS accelerometer [10]. We have also used a binary decision tree (BDT) to predict the pass-fail outcome of low-temperature mechanical tests of a MEMS accelerometer [11].

Here, we use a BDF-based binary classifier to derive the redundant tests of an integrated system. Using a BDF has several advantages. First, BDFs are data driven and make no assumptions about the functional form of Fk. As a result, they may represent Fk more accurately than learning techniques that rely on an assumed form of Fk. Second, constructing a BDF is a polynomial-time process with complexity $O(m^2 \times l^3 \times n)$ [12], where m is the number of tests in Tkept, l is the number of chips in Dtr, and n is the number of BDTs in the forest. Moreover, classifying a future chip using a BDF is a sub-linear time process of complexity $O(\log_2 l)$. Therefore, a BDF can be constructed in a reasonable amount of time, and the outcome of a future chip can be predicted relatively quickly. A test compaction methodology whose Fk predicts the pass-fail outcome of future chips in less time than an Fk derived by other methodologies achieves greater test-time reduction from removing the redundant tests and is therefore preferred. A shorter training time is also valuable because it not only enables learning an Fk when the training data set is very large but also allows faster relearning of Fk when its accuracy drops due to manufacturing process variations [5]. Finally, it is easier to understand how a BDF operates since it can be represented as a simple set of rules rather than a complex mathematical formula, potentially leading to an intuitive understanding of the prediction model. In fact, a BDF can be depicted as a look-up table, which makes its addition to a test program relatively straightforward.

In addition, using a BDF to derive an Fk is even more advantageous than using a single BDT, for two reasons. First, BDTs are especially prone to over-fitting [13]. Therefore, to learn a single BDT, the collected test data must be divided into three portions instead of just two. The first portion is used to learn the BDT model, while the second is used to improve the accuracy of the tree by “pruning”⁴ it [14]. The third portion is used to validate the accuracy of the pruned BDT. However, if a BDF uses a large number of trees, then the law of large numbers ensures that adding more trees does not cause the forest to over-fit. Therefore, no pruning of the learned model is required in a BDF. Second, the random derivation of training data for each BDT in a BDF is more likely to result in an Fk that models only the primary characteristics of the data and omits random data variations. Consequently, a BDF typically has higher prediction accuracy than a single BDT [15].

III. Methodology Overview

This section provides a brief overview of our methodology before its details are described. Fig. 1 illustrates our test compaction methodology. The test compaction process begins with collected test data comprising continuous measurements and the specification test set T. Next, a subset of specification tests Tc is selected for redundancy analysis. These tests are called candidate redundant tests. All tests that are not in Tc comprise the set of kept tests, Tkept. After Tc and Tkept are determined, the correlation function Fc for each candidate test tc ∈ Tc is derived using the continuous kept-test measurements in Dtr. The prediction accuracy of each Fc is then estimated by calculating its YL and DE on Dval. If the resulting YL and DE of Fc are below the acceptable levels for tc, then tc is deemed redundant and added to Tred. Otherwise, tc is placed in Tkept. This entire process is repeated until no more tests can be added to Tkept or removed from Tred.

The two key steps of this statistical test compaction methodology are the identification of Tc and the derivation of the correlation function Fc. Because our approach is greedy, an appropriate Tc must be chosen; otherwise, the inherent limitations of greedy algorithms can lead to a suboptimal solution. An efficient and accurate derivation of the correlation function Fc for each candidate test is also necessary to improve the compaction achieved for the specification test set under consideration. These two steps are described in more detail next.

Fig. 1. Flowchart of statistical test compaction.

A. Candidate Redundant Tests

As mentioned above, one of the most important tasks in statistical test compaction is identifying the set of candidate tests Tc. This choice is crucial for two reasons. First, a poor selection of candidate tests can lead to wasted analysis of tests that are not at all redundant. Second, and more importantly, an ill-chosen Tc may also result in a poor Tkept. Specifically, some of the tests whose measurement values are significantly correlated with the pass-fail outcomes of the candidate tests may not be included in Tkept. This may significantly limit the number of tests that are identified as redundant.

Different techniques exist for selecting Tc. For example, one approach relies on the experience of design and test engineers; that is, these experts use their know-how to select a Tc that they believe has the highest likelihood of being redundant. Alternatively, the more expensive tests can be selected as candidates since their elimination will result in significant test-cost savings. When no prior experience is available and no test is significantly more expensive than another, each test can be analyzed individually for redundancy. For this choice, the kept test set for each candidate test tc includes all of the remaining applied tests, that is, Tkept = T − {tc}. Therefore, in this scenario, the number of kept tests can become quite high. However, since BDFs do not suffer from the curse of dimensionality [13], a large set of kept tests does not pose any significant computational issues.

⁴ The recursive partitioning method of constructing a BDT continues until all training chips are correctly classified [14]. Consequently, the resulting Fk may include inaccuracies from variations in the training data, a phenomenon known as over-fitting. To remedy this shortcoming, a BDT is usually simplified by “pruning” it using a second data set, where one or more of its subtrees are replaced with terminal vertices to improve its prediction accuracy for the second data set.

B. Redundancy Analysis

After the candidate test set Tc is chosen, the next important task is to learn an Fc for each candidate test tc ∈ Tc. A different Fc is learned for each tc, which means that the redundancy of each tc is analyzed separately. Fc is first statistically learned from Dtr and then used to predict the pass-fail outcome yc of tc for each chip in Dval. Next, the individual misprediction errors (YL and DE) of each tc are used to determine which tests should be included in Tred and which must be placed in Tkept. More specifically, the candidate test with the highest misprediction error is added back to Tkept, and the whole analysis process is repeated with the updated Tc and Tkept. This iterative process continues until no more tests can be added to or removed from Tc and Tkept.
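The iterative redundancy analysis can be sketched as a greedy loop. This is a minimal sketch under stated assumptions: evaluate is a hypothetical callback that learns Fc on Dtr from the current kept tests and returns its (YL, DE) on Dval; the limits max_yl and max_de are user-chosen; and the "highest misprediction error" is taken here to be YL + DE among the candidates that violate a limit, a detail the text does not specify.

```python
from typing import AbstractSet, Callable, Set, Tuple

# Hypothetical callback: learn F_c for candidate t_c from the given kept tests
# on D_tr and return its (YL, DE) measured on D_val.
Evaluate = Callable[[str, AbstractSet[str]], Tuple[float, float]]


def greedy_compaction(candidates: Set[str], kept: Set[str], evaluate: Evaluate,
                      max_yl: float, max_de: float) -> Tuple[Set[str], Set[str]]:
    """Return (T_red, T_kept) after repeatedly moving the worst candidate to T_kept."""
    cand, kept = set(candidates), set(kept)
    while cand:
        errors = {tc: evaluate(tc, frozenset(kept)) for tc in sorted(cand)}
        violating = {tc: yl + de for tc, (yl, de) in errors.items()
                     if yl > max_yl or de > max_de}
        if not violating:
            return cand, kept          # every remaining candidate is deemed redundant
        worst = max(sorted(violating), key=lambda tc: violating[tc])
        cand.remove(worst)             # the candidate with the largest error ...
        kept.add(worst)                # ... is added back to the kept tests
    return cand, kept


def toy_evaluate(tc: str, kept_tests: AbstractSet[str]) -> Tuple[float, float]:
    # Hypothetical errors: B is predictable only once C is kept; C never is.
    if tc == "A":
        return 0.0, 0.0
    if tc == "B":
        return (0.0, 0.01) if "C" in kept_tests else (0.0, 0.2)
    return 0.1, 0.3


t_red, t_kept = greedy_compaction({"A", "B", "C"}, {"K"}, toy_evaluate, 0.05, 0.05)
print(sorted(t_red), sorted(t_kept))
```

With these toy errors, C is moved to the kept set in the first iteration, after which A and B are both within the limits and are reported as redundant. Re-evaluating every remaining candidate after each move matters because adding a test to Tkept can make other candidates predictable.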


In this paper, we choose to analyze the redundancy of a single candidate test at a time; that is, a separate Fc is derived to predict the pass-fail outcome of each candidate. In contrast, others [3] have derived correlation functions for the pass-fail outcome of subsets of tests, where the correlation function for a subset predicts a passing outcome when all tests in the subset pass and a failing outcome when any one test fails. Another work uses algorithms such as a genetic algorithm and maximum cover to derive a subset of tests that can be used to predict the pass-fail outcome of a chip [9]. Unlike these techniques, our methodology is greedy and, at times, may result in a suboptimal solution. However, the advantage of our methodology lies in the derivation of an individual Fk for each redundant test. These individual correlation functions are useful in many applications, e.g., failure diagnosis, test grading, and updating the redundant test set when test correlations fluctuate over the life of an integrated system.

IV. Test Compaction Using BDFs

Based on the observations outlined in Section II-B, we believe that using a BDF for test compaction is more advantageous than using other statistical learning techniques. Therefore, in this paper, we use BDFs to represent Fc. The remainder of this section provides details of the structure of decision forests, their derivation process, and their use in statistical test compaction.

A. Binary Decision Tree (BDT)

Since a BDF comprises many BDTs, the derivation process of a BDT is described first. The terminology used in this section is adopted from [16]. When continuous test data is available, the kept tests of an integrated system can be expressed as a hyperspace, where each axis corresponds to a kept test. Each chip used for training is a data point in the Tkept hyperspace, and all of the training data together form a distribution within the hyperspace. A BDT is a classifier that recursively partitions the hyperspace into hypercubes. In an un-pruned tree [16], each leaf hypercube of the BDT contains either only passing or only failing chips with respect to some candidate redundant test tc. The non-terminal vertices of the BDT represent partitions of the Tkept hyperspace. Each partition is a hyperplane in the Tkept hyperspace that separates the hyperspace into two hypercubes at a particular measurement value θir of a kept test ti. θir is called a decision value, and the corresponding tree vertex is called a decision vertex. The left child of the decision vertex is a hypercube that includes chips with ti measurements less than θir, while the right child is a hypercube that contains chips with ti measurements greater than or equal to θir.
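The decision-vertex convention above (left child for measurements below θir, right child otherwise) can be captured in a few lines. The Vertex class, its field names, and the example tree are hypothetical illustrations; only the left/right rule comes from the text.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Vertex:
    """A BDT vertex: a decision vertex (test, theta) or a terminal vertex (label)."""
    test: Optional[str] = None        # kept test t_i used by a decision vertex
    theta: float = 0.0                # decision value theta_ir
    left: Optional["Vertex"] = None   # chips with v_i <  theta
    right: Optional["Vertex"] = None  # chips with v_i >= theta
    label: Optional[bool] = None      # terminal vertices only: True = passes t_c


def classify(root: Vertex, chip: Dict[str, float]) -> bool:
    """Follow decision vertices from the root until a terminal vertex is reached."""
    node = root
    while node.label is None:
        child = node.left if chip[node.test] < node.theta else node.right
        if child is None:
            raise ValueError("decision vertex is missing a child")
        node = child
    return node.label


# Hypothetical tree in a T_kept = {t1, t2} hyperspace.
tree = Vertex(test="t1", theta=0.4,
              left=Vertex(label=False),
              right=Vertex(test="t2", theta=0.6,
                           left=Vertex(label=True),
                           right=Vertex(label=False)))
print(classify(tree, {"t1": 0.7, "t2": 0.3}))  # True: t1 >= 0.4, then t2 < 0.6
```

Classification visits one vertex per tree level, which is why prediction cost grows with tree depth rather than with the number of training chips.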

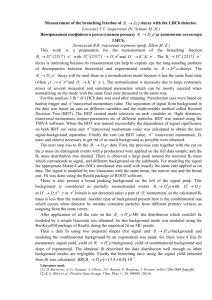

Fig. 2. Illustration of (a) partially separated Tkept = {t1, t2} hyperspace, where the dotted line denotes the last partition in the tree-derivation process, and (b) corresponding partially formed binary decision tree, where the shaded region represents the new vertices added to the tree due to the dotted-line partition.

Fig. 2(a) shows an example of a data distribution for a Tkept = {t1, t2} hyperspace. The circles in Fig. 2(a) represent chips that pass a candidate test tc, and the triangles represent chips that fail tc. The passing and failing chips are partially separated by the lines shown, where the dotted line represents the last partition selected. The resulting, partially formed BDT is shown in Fig. 2(b). The shaded region in Fig. 2(b) includes the portion of the decision tree that resulted from the last partition (dotted line) in Fig. 2(a). The left child of the partition is a terminal vertex since it represents a homogeneous hypercube that includes only failing chips. On the other hand, the right child is a non-homogeneous hypercube because it contains ten passing and two failing chips. Because of its non-homogeneity, the right child can be partitioned further.
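The text does not state how each partition is chosen. A common choice, assumed here rather than taken from the paper, is the CART-style rule of picking the kept test and decision value that minimize the weighted Gini impurity of the two children, with candidate decision values at midpoints between adjacent distinct measurements. Repeating this search on every non-homogeneous child until all leaves are homogeneous would yield an un-pruned BDT. The data below are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Chip = Dict[str, float]  # kept-test name -> measurement value


def gini(labels: List[bool]) -> float:
    """Gini impurity of a set of pass (True) / fail (False) labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def best_split(chips: List[Chip], labels: List[bool]) -> Optional[Tuple[str, float]]:
    """Return the (kept test, decision value) that most reduces weighted Gini impurity."""
    if not chips:
        return None
    best: Optional[Tuple[str, float]] = None
    best_score = gini(labels)          # a homogeneous node cannot be improved
    n = len(chips)
    for test in sorted(chips[0]):
        values = sorted({c[test] for c in chips})
        for lo, hi in zip(values, values[1:]):
            theta = (lo + hi) / 2.0    # midpoint between adjacent measurements
            left = [y for c, y in zip(chips, labels) if c[test] < theta]
            right = [y for c, y in zip(chips, labels) if c[test] >= theta]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (test, theta), score
    return best


# Hypothetical training chips with pass/fail labels for a candidate test t_c.
train = [{"t1": 0.1, "t2": 0.5}, {"t1": 0.2, "t2": 0.9},
         {"t1": 0.6, "t2": 0.4}, {"t1": 0.8, "t2": 0.3}]
labels = [False, False, True, True]
print(best_split(train, labels))  # splits t1 between 0.2 and 0.6
```

This exhaustive scan over every kept test and every candidate decision value is what makes tree construction polynomial in both m and l.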

B. BDF

BDFs were first introduced by Breiman in [15]. A BDF includes a collection of BDTs, where each BDT is derived from a modified version of the training data Dtr. Modified versions of Dtr can be obtained in many ways. For example, one approach samples chips from Dtr such that chips mispredicted by already-learned BDTs have higher probabilities of being selected. This procedure is known as boosting [17]. Alternatively, the modified Dtr can be derived by sampling chips with replacement from the original Dtr. In this technique, when a chip is selected during the sampling process, its test measurements are not removed from Dtr but instead are copied to the modified test data set. As a result, the probability of selecting any chip from Dtr remains constant throughout the sampling process. This sampling technique is known as bagging [18]. In [19], it is reported that the prediction accuracies of bagged and boosted decision forests are not only comparable but also better than those of many other techniques. Moreover, the author also pointed out that, since deriving a boosted BDF can take significantly more time than deriving a bagged BDF, bagging may be preferable. Therefore, in this paper, the modified training data sets for a BDF are derived through bagging.

Once the modified training data sets are obtained, a separate BDT is derived for each data set. The pass-fail prediction for a future chip cf is then obtained from the predictions of the individual BDTs. In this paper, we use a simple but popular technique for deriving the overall pass-fail prediction, namely, the threshold-voting scheme. In this technique, each decision tree Xj in the BDF is used to predict the pass-fail outcome of cf. If the number of trees that predict cf as passing is greater than a predefined integer, then cf is predicted to pass; otherwise, cf is predicted to fail. Note that other schemes can also be used to derive the BDF prediction [20]. We choose threshold voting since it is not only easy to interpret but also enables a simple conversion of the BDF into a look-up table. The latter property is especially important for translating a BDF into a commercial test program.
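Bagging and threshold voting can be sketched as follows. To keep the block short, a one-split rule on a single test t1 (learn_stump) stands in for a full BDT learner. This stand-in, the data, the seed, and the threshold value are all hypothetical. Each bootstrap sample draws l chips with replacement, so every chip keeps the same selection probability throughout sampling, as described above.

```python
import random
from typing import Callable, Dict, List

Chip = Dict[str, float]
Classifier = Callable[[Chip], bool]            # True = predicted to pass t_c
Learner = Callable[[List[Chip], List[bool]], Classifier]


def bagged_forest(chips: List[Chip], labels: List[bool], learn: Learner,
                  n_trees: int, seed: int = 0) -> List[Classifier]:
    """Learn one classifier per bootstrap sample of D_tr (sampling with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(chips)) for _ in range(len(chips))]
        forest.append(learn([chips[i] for i in idx], [labels[i] for i in idx]))
    return forest


def threshold_vote(forest: List[Classifier], chip: Chip, threshold: int) -> bool:
    """Predict pass when more than `threshold` trees predict pass."""
    return sum(1 for tree in forest if tree(chip)) > threshold


def learn_stump(chips: List[Chip], labels: List[bool]) -> Classifier:
    # Hypothetical stand-in for a BDT learner: assumes passing chips have larger t1.
    passing = [c["t1"] for c, y in zip(chips, labels) if y]
    failing = [c["t1"] for c, y in zip(chips, labels) if not y]
    if not passing or not failing:
        constant = bool(passing)               # single-class bootstrap sample
        return lambda chip: constant
    theta = (max(failing) + min(passing)) / 2.0
    return lambda chip: chip["t1"] >= theta


train = [{"t1": v} for v in (0.1, 0.2, 0.3, 0.7, 0.8, 0.9)]
labels = [False, False, False, True, True, True]
forest = bagged_forest(train, labels, learn_stump, n_trees=25)
print(threshold_vote(forest, {"t1": 0.95}, threshold=12))  # majority of 25 votes
```

Raising the threshold makes the forest more reluctant to predict a pass, which trades higher YL for lower DE; a threshold of 12 with 25 trees is simple majority voting.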

V. Test-Specific Enhancements

In the previous section, we discussed how a BDT partitions the Tkept hyperspace into homogeneous hypercubes. These regions are hypercubes because their boundaries are orthogonal to the axes of the kept tests. If the training data does not include a sufficient number of passing or failing chips, these hypercubes may contain “empty spaces,” that is, regions of the hypercubes where no training chip resides. We are particularly interested in the empty spaces of passing hypercubes since a future failing chip residing in these spaces will be incorrectly classified as passing. Consequently, these mispredicted failing chips will increase DE. In this section, three test-specific enhancements that minimize empty space in passing hypercubes are evaluated: hypercube collapsing, principal component analysis (PCA), and linear discriminant analysis (LDA).

A. Hypercube Collapsing

The aforementioned shortcoming of a BDT can occur either

in a high or low-yielding manufacturing process. We are

particularly interested in the DE from a learned Fc for a

candidate test tc when the test data is drawn from a highyielding process. In this case, even when a sufficiently large

sample of training data is available, the number of failing chips

in Dtr can be very low. Therefore, even though the passing subspaces5 may be adequately populated by the passing training

chips, the failing subspaces may not be. As a result, some

of the partitions that are necessary to completely separate

the passing and failing subspaces may not occur. In other

words, the BDT representation of Fc may erroneously include

some of the unpopulated regions of the failing subspaces in

5 Note that the terms “subspace” and “hypercube” are not equivalent. For

example, a passing subspace denotes a portion of a hyperspace that will

contain only passing chips over the entire product lifetime, while a passing

hypercube is the result of a BDT partitioning of the hyperspace that only

includes passing training chips.

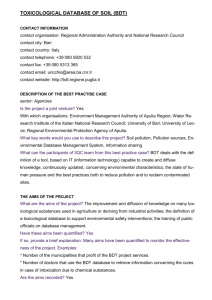

Fig. 3. Illustration of (a) possible misclassification of a future failing chip

(shown as a circumscribed triangle) due to the insufficient coverage of all

of the failing subspaces by the existing test data and (b) how the hypercube

collapsing method (shaded hypercubes) eliminates the misclassification error.

its passing hypercubes. As future failing chips begin to fill

these previously unpopulated regions, some of these chips may

reside in the passing hypercubes and be mispredicted. This

is illustrated in Fig. 3(a) using an example training data set

for a Tkept = {t1 , t2 } hyperspace. The circumscribed triangle

in Fig. 3(a) represents a future failing chip that resides in

a portion of the failing subspace that has been erroneously

included in a passing hypercube.

To guard against the aforementioned scenario, our hypercube collapsing method “collapses” the boundaries of each

passing hypercube to coincide with the passing-chip data that

reside within that hypercube. In other words, this method

assumes that all portions of passing hypercubes that do not

include any passing chips belong to failing hypercubes. As a

result, a future failing chip residing in these new failing hypercubes cannot contribute to the DE of the learned Fc . However,

a future passing chip in these hypercubes will increase YL.

Fig. 3(b) shows the result of collapsing the boundaries of the

passing hypercubes in Fig. 3(a). As shown in Fig. 3(b), the

previously misclassified failing chip (circumscribed triangle)

is now correctly identified after hypercube collapsing. Next,

we describe how the hypercube collapsing is implemented.

Fig. 4. Example of (a) training data distribution, (b) passing hypercube in the BDT partitioning of this training data that includes empty space that leads to the misprediction of a future failing chip (shown as the circumscribed triangle), and (c) elimination of this empty space using principal component analysis (PCA).

Collapsing a hypercube is accomplished by analyzing the paths within a BDT. Every path from the root vertex to a terminal vertex in a BDT includes several decision values that each correspond to a different partition of the Tkept hyperspace. These partitions define the boundaries of the hypercube that corresponds to the terminal vertex. Any kept test that does not affect the decisions along a path to a terminal vertex corresponding to a passing hypercube represents a missing boundary. These missing boundaries leave the hypercube unbounded along the corresponding dimensions and thus unnecessarily increase its size. Therefore, we derive additional partitions to represent these missing boundaries using the passing-chip data in the hypercube. Specifically, for each test ti ∈ Tkept, the chips in the passing hypercube are examined to determine which one is closest to the “empty space” along the ti dimension. The measurement value vi of this passing chip is then chosen as the decision value for the missing partition of the hypercube.
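One possible reading of the collapsing step is sketched below under stated assumptions. A terminal vertex's hypercube is given as per-test (lower, upper) bounds collected along its path, with infinite values marking missing boundaries. Each missing boundary is replaced by the extreme measurement of the passing training chips in that hypercube, that is, by the chip closest to the empty space on that side. For brevity, boundary points are treated as inclusive, ignoring the strict inequality at the original decision values. The function names and data are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Bounds = Dict[str, Tuple[float, float]]  # kept test -> (lower, upper)


def collapse(path_bounds: Bounds, passing_chips: List[Dict[str, float]],
             kept_tests: List[str]) -> Bounds:
    """Fill each missing boundary of a passing hypercube from its passing chips."""
    collapsed: Bounds = {}
    for t in kept_tests:
        lo, hi = path_bounds.get(t, (-math.inf, math.inf))
        values = [c[t] for c in passing_chips]
        if lo == -math.inf:
            lo = min(values)   # passing chip closest to the empty space below
        if hi == math.inf:
            hi = max(values)   # passing chip closest to the empty space above
        collapsed[t] = (lo, hi)
    return collapsed


def inside(chip: Dict[str, float], box: Bounds) -> bool:
    """True when the chip lies in the (closed) collapsed hypercube."""
    return all(lo <= chip[t] <= hi for t, (lo, hi) in box.items())


# Hypothetical passing leaf reached via the single decision t1 >= 0.5 (t2 is missing).
leaf_bounds: Bounds = {"t1": (0.5, math.inf)}
leaf_chips = [{"t1": 0.6, "t2": 0.2}, {"t1": 0.7, "t2": 0.3}, {"t1": 0.8, "t2": 0.4}]
box = collapse(leaf_bounds, leaf_chips, ["t1", "t2"])
print(box)                                  # t1 in [0.5, 0.8], t2 in [0.2, 0.4]
print(inside({"t1": 0.7, "t2": 0.9}, box))  # False: now predicted to fail
```

A future failing chip at (0.7, 0.9) would have been predicted to pass by the original leaf but falls outside the collapsed box. Conversely, a future passing chip at t1 = 0.95 would now be predicted to fail, which is the YL cost noted earlier.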

In [12], the authors state that the time complexity of deriving

a BDT is O(m2 ×l3 ), where m is the number of tests in Tkept

and l is the number of chips in the training data. In the worstcase scenario, where there is exactly one failing chip between

any two adjacent passing chips in the Tkept hyperspace and

vice versa (i.e., the passing and failing chips alternate in the

Tkept hyperspace), the resulting BDT contains exactly one leaf

node for each chip in the data. This leads to a total of l leaf

nodes in the BDT. Also, recall that each leaf node in a BDT

corresponds to a combination of decision values. In the worstcase scenario, each of these leaf nodes may correspond to none

or only one decision value per kept test as well. Therefore,

additional partitions are added to each leaf node of this BDT

by applying hypercube collapsing. The number of partitions

added to each leaf node is on the order of O(m). Consequently,

hypercube collapsing creates O(m×l) additional partitions.

In this case, the derivation of a BDT is still polynomial, but with a time complexity of $O(m^2 \times l^3 + m \times l)$. Moreover, the time complexity of classifying a future chip using a BDT with hypercube collapsing increases from $O(\log_2 l)$ to $O(m \times \log_2 l)$.
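The worst-case counting argument can be checked with a small Python sketch (assumed helper names, not from the paper). For a single kept test, an axis-parallel tree that fits the training data exactly needs one leaf per maximal run of identical pass/fail labels once the chips are sorted, so alternating chips force l leaves. The second helper bounds the collapsing overhead by assuming each leaf can miss at most a lower and an upper boundary for each of the m kept tests, which gives the $O(m \times l)$ term.

```python
import numpy as np


def min_leaves_1d(values, passed):
    """Fewest leaves an axis-parallel tree needs to fit 1-D data exactly.

    After sorting chips by their measurement, every change of pass/fail
    label needs a new partition, so the leaf count equals the number of
    label runs.
    """
    order = np.argsort(values, kind="stable")
    labels = np.asarray(passed)[order]
    return 1 + int(np.count_nonzero(labels[1:] != labels[:-1]))


def max_collapse_partitions(n_leaves, m):
    """Upper bound on partitions added by hypercube collapsing: at most one
    lower and one upper boundary per kept test in every leaf."""
    return 2 * m * n_leaves


if __name__ == "__main__":
    l = 10
    values = np.arange(l, dtype=float)
    alternating = np.arange(l) % 2 == 0          # pass, fail, pass, ...
    print(min_leaves_1d(values, alternating))    # one leaf per chip
    print(max_collapse_partitions(l, m=3))
```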

B. Principal Component Analysis

As opposed to simply using the Tkept measurements, we also

evaluate the use of PCA [21] to identify linear combinations

of these measurements that may result in a more accurate BDT

model. Recall that the partitions of a BDT are orthogonal to

the kept tests. Therefore, the hypercubes of a BDT derived

from training data whose principal axes are not parallel to the

kept tests may contain a significant amount of empty space.

As mentioned in Section V-A, this empty space may actually

belong to failing subspaces. Consequently, the resulting Fc

can lead to a relatively high DE. To address this problem, PCA is applied to the training data Dtr to derive linear combinations of the kept tests that are parallel to the principal axes of the training data. Therefore, when a Tkept hyperspace is partitioned using kept-test combinations derived from PCA, these partitions will be parallel to the principal axes of the training data. We postulate that using PCA will likely reduce the amount of empty space in the hypercubes and, in turn, also reduce the DE of the learned Fc.
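A minimal NumPy sketch of the PCA step follows. It is an illustration under stated assumptions, not the authors' code, and `fit_pca` and `to_pca_coords` are hypothetical names. The principal axes are taken as the right singular vectors of the mean-centered training data Dtr, and each kept-test vector is re-expressed as its coordinates along those axes; a BDT would then be learned on these coordinates instead of on the raw Tkept measurements.

```python
import numpy as np


def fit_pca(train):
    """Return the training mean and principal axes (rows, largest variance first)."""
    train = np.asarray(train, dtype=float)
    mean = train.mean(axis=0)
    # The right singular vectors of the centered data are the principal axes.
    _, _, axes = np.linalg.svd(train - mean, full_matrices=False)
    return mean, axes


def to_pca_coords(X, mean, axes):
    """Express kept-test measurements as linear combinations along the axes."""
    return (np.asarray(X, dtype=float) - mean) @ axes.T


if __name__ == "__main__":
    # Hypothetical training data whose principal axis is the diagonal t1 = t2.
    train = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
    mean, axes = fit_pca(train)
    print(to_pca_coords(train, mean, axes))  # second column is (numerically) zero
```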

Fig. 4(a) shows an example of a training data distribution in a Tkept = {t1, t2} hyperspace, where again circles represent passing chips and triangles represent failing chips. Fig. 4(b) illustrates a BDT partitioning of this Tkept hyperspace that has resulted in a significant amount of empty space in the passing hypercube. Due to this partitioning, a future failing chip (shown as a circumscribed triangle) that resides in this empty space will be erroneously classified as passing. Fig. 4(c) illustrates a partitioning of the same training data using two linear combinations of t1 and t2, namely, t1′ and t2′, that are derived using PCA. The resulting passing hypercubes include less empty space, which leads to the correct identification of the future failing chip, as shown in Fig. 4(c).
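As a rough numerical proxy for the difference between Fig. 4(b) and (c), the sketch below compares the volume of the tightest axis-aligned hypercube around a hypothetical cluster of passing chips, first in the original (t1, t2) coordinates and then in PCA coordinates. The data, the helper names, and the use of bounding-box volume as a stand-in for "empty space" are all assumptions made for illustration.

```python
import numpy as np


def box_volume(Z):
    """Volume of the tightest axis-aligned hypercube around the rows of Z."""
    Z = np.asarray(Z, dtype=float)
    return float(np.prod(Z.max(axis=0) - Z.min(axis=0)))


def pca_coords(X):
    """Coordinates of X along the principal axes of X itself."""
    centered = np.asarray(X, dtype=float) - np.mean(X, axis=0)
    _, _, axes = np.linalg.svd(centered, full_matrices=False)
    return centered @ axes.T


# Hypothetical passing chips spread along the diagonal t1 = t2,
# each offset by 0.05 perpendicular to that diagonal.
t = np.linspace(-1.0, 1.0, 11)
d = 0.05 / np.sqrt(2.0)
passing = np.vstack([np.column_stack([t + s * d, t - s * d]) for s in (-1.0, 1.0)])

orig = box_volume(passing)                 # hypercube aligned with t1, t2
rotated = box_volume(pca_coords(passing))  # hypercube aligned with PCA axes
print(f"original axes: {orig:.2f}, PCA axes: {rotated:.2f}")
```

For this elongated cluster the PCA-aligned box is roughly 15 times smaller, so far less of the passing region is empty space that a future failing chip could occupy.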

C. Linear Discriminant Analysis

Similar to PCA, LDA can also be used to derive linear

combinations of the kept-test measurements. However, instead

of deriving test combinations that are parallel to the principal

axes of the data set, LDA derives combinations that are parallel

to the boundaries that separate passing and failing chips. More

specifically, all of the passing chips are described by one

normal distribution while all of the failing chips are modeled

by another. In the end, LDA identifies test combinations that describe a transformed hyperspace where the Euclidean distance between the mean values of these two distributions is maximized for a given maximum allowable scatter (variance) in each distribution [18].
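The LDA step can be sketched with Fisher's two-class formulation, in which the discriminant direction is $w \propto S_w^{-1}(\mu_{\mathrm{fail}} - \mu_{\mathrm{pass}})$ and $S_w$ is the within-class scatter matrix. For two normal classes with a shared covariance matrix this is the standard textbook LDA direction; the sketch assumes that setting and is not the authors' implementation, and `fisher_direction` is a hypothetical name. It also assumes $S_w$ is nonsingular.

```python
import numpy as np


def fisher_direction(X_pass, X_fail):
    """Unit Fisher LDA direction w, proportional to S_w^-1 (mu_fail - mu_pass).

    Projecting onto w maximizes the separation of the two class means
    relative to the scatter within each class.
    """
    X_pass = np.asarray(X_pass, dtype=float)
    X_fail = np.asarray(X_fail, dtype=float)
    mu_p, mu_f = X_pass.mean(axis=0), X_fail.mean(axis=0)
    # Within-class scatter: sum of the two classes' scatter matrices.
    s_w = (X_pass - mu_p).T @ (X_pass - mu_p) + (X_fail - mu_f).T @ (X_fail - mu_f)
    w = np.linalg.solve(s_w, mu_f - mu_p)
    return w / np.linalg.norm(w)


if __name__ == "__main__":
    passing = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    failing = passing + 3.0                 # hypothetical failing cluster
    w = fisher_direction(passing, failing)
    print(w)
    print(passing @ w, failing @ w)         # a single threshold separates them
```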

Deriving a BDT based on the kept-test combinations from

LDA, as opposed to those from PCA, can often lead to a BDT

with fewer partitions. Fewer BDT partitions may result in a

more accurate Fc (Ockham’s Razor [22]). However, in doing

so, the amount of empty space in the passing hypercubes could

also increase and can lead to greater levels of misprediction

error for the resulting Fc .


Fig. 5. Example of (a) test data set, where the separation boundary between the passing and failing chips is not parallel to either of its principal axes,

(b) partitioning of the Tkept hyperspace that uses kept-test combinations derived from PCA, and (c) partitioning of the Tkept hyperspace that uses kept-test

combinations derived from LDA.

Fig. 5(a) shows an example data set, where the separation boundary between passing and failing chips is not parallel to either of its principal axes. Fig. 5(b) and (c) illustrate

the BDT partitioning of this data set using the kept-test

combinations derived from PCA and LDA, respectively. From

Fig. 5, we observe that fewer partitions are required when the

kept-test combinations are derived from LDA. At the same

time, the empty space in the passing hypercube also increases

when these LDA test combinations are used. However, it

may be possible to reduce this empty space using hypercube

collapsing.

VI. Experimental Validation

In this section, statistical test compaction is performed for

continuous test data from two production integrated systems.

Specifically, package test data from an automotive microelectromechanical systems (MEMS) accelerometer and a cell-phone radio-frequency (RF) transceiver are examined. The accelerometer test data include measurements from a stop-on-first-fail environment; that is, test measurements from each failing chip are only available up to the point at which the chip fails a test. In contrast, the transceiver test data include measurements from all tests; that is, there is no stopping on first fail. Finally, it is worth mentioning that the MEMS accelerometer is used in safety-critical automotive applications, meaning the resulting DE from test compaction must be driven as low as possible, ideally to zero.

A. MEMS Accelerometer

The first production chip analyzed for test compaction is an automotive MEMS accelerometer from Freescale Semiconductor. An accelerometer is an integrated transducer that converts acceleration into an electrical signal. The accelerometer contains a proof mass, an inertial component that moves due to acceleration forces. The micro-springs (µ-springs) in the accelerometer apply restoring forces when the proof mass is deflected. Therefore, when the device undergoes acceleration, an equilibrium is reached when the force on the proof mass from the µ-springs is equal in magnitude but opposite in direction to the acceleration force. In this shifted position, the gaps between the parallel plates of the capacitor in the accelerometer change. Voltages of equal and opposite

polarity are applied to the two fixed plates of the capacitor,

while the movable plate in between is used for sensing. When

the proof mass shifts, the movable plate develops a voltage

due to the capacitance change that is directly proportional

to the amount of the shift and, in turn, to the acceleration

experienced by the proof mass. Consequently, the acceleration

can be determined by measuring the resulting voltage.
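The sensing chain can be written in equation form under a textbook idealization that is our assumption, not a model given in the paper: a linear µ-spring constant k, proof mass m, nominal gap d0 between the movable plate and each fixed plate, ideal parallel-plate capacitors C1 and C2 of plate area A and permittivity ε, drive voltages ±Vs on the fixed plates, and no parasitic capacitance. The movable-plate voltage then follows from charge balance, C1(Vout − Vs) + C2(Vout + Vs) = 0, and is exactly proportional to the displacement x and hence to the acceleration a:

```latex
\begin{aligned}
k\,x &= m\,a \quad\Longrightarrow\quad x = \frac{m}{k}\,a,\\
C_1 &= \frac{\varepsilon A}{d_0 - x}, \qquad C_2 = \frac{\varepsilon A}{d_0 + x},\\
V_{\mathrm{out}} &= V_s\,\frac{C_1 - C_2}{C_1 + C_2} = V_s\,\frac{x}{d_0} = \frac{V_s\,m}{k\,d_0}\,a .
\end{aligned}
```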

Since this automotive accelerometer must function over a very wide temperature range, it is subjected to a set of electrical and mechanical tests at room temperature as well as at a reduced (cold) temperature of −40 °C and an elevated (hot) temperature of 80 °C. The cold and hot mechanical tests of the accelerometer are significantly more expensive than the electrical and room-temperature mechanical tests, since they require a more expensive test setup. Therefore, test cost can be reduced if the cold and hot mechanical tests can be eliminated. This section evaluates four test-compaction scenarios that focus on predicting the pass-fail outcomes of the following:

1) cold-mechanical test using the cold electrical-test measurements;

2) cold-mechanical test using the room-temperature

mechanical-test measurement;

3) hot-mechanical test using the hot electrical-test measurements;

4) hot-mechanical test using the room-temperature and

cold-mechanical test measurements.

Separate redundancy analyses are performed for each of

the aforementioned cases. The first step in the analysis is to

select the kept tests from the set of specification tests. Next,

the test data is filtered to only include chips that pass all of

the selected kept tests. For the accelerometer test data, this

process leads to over 68 000 chips for cases 1 and 2, and

over 67 000 chips for cases 3 and 4. After the test data is

chosen for analysis, 80% of this data is randomly selected for

training and the remaining 20% is used for validation. This

process is repeated 50 times to derive 50 estimates of YL and

DE for each case. These estimates are then used to calculate

the average and 95%-confidence interval of YL and DE. This

method of obtaining prediction accuracies for Fc is called

50-fold random cross validation [18]. The BDF in each cross-validation analysis has 100 BDTs.6 Moreover, a conservative voting scheme is used for pass-fail prediction of the BDF. Specifically, a future chip is predicted to fail if any one BDT in the forest predicts failure. In the end, the accuracy measured through 50-fold cross validation is used to determine which candidate tests, if any, are redundant.

TABLE I

Average and 95%-Confidence Intervals of YL and DE When the Cold and Hot Mechanical Tests of the MEMS Accelerometer Are Eliminated

| Case | Candidate test | Kept tests | YL average | YL 95%-confidence interval | DE average | DE 95%-confidence interval |
|---|---|---|---|---|---|---|
| 1 | Cold mechanical | Cold electrical | 0.0054% | 0%–0.0208% | 2.24% | 0%–6.04% |
| 2 | Cold mechanical | Nominal mechanical | 0.0062% | 0%–0.0418% | 10.07% | 4.77%–15.37% |
| 3 | Hot mechanical | Hot electrical | 0.51% | 0%–1.06% | 11.57% | 3.93%–19.21% |
| 4 | Hot mechanical | Cold + nominal mechanical | 1.16% | 0%–3.72% | 14.86% | 2.54%–27.18% |

The results of these analyses are listed in Table I. The acceptable levels for YL and DE with 95% confidence are each assumed to be 10%. With these relatively high thresholds, the results imply that only the cold mechanical test of the MEMS accelerometer is possibly redundant, with its pass-fail outcome correlated to the measurements resulting from the electrical tests applied at cold.

Use of the accelerometer in safety-critical automotive applications, however, means that the cost of DE is significantly higher than the cost of YL [24]. Consequently, to further reduce the DE for case 1, we explore the three enhancements discussed in Section V, namely, hypercube collapsing, PCA, and LDA. When applying PCA or LDA, all derived kept-test combinations are used.7 Table II lists the prediction accuracies from each enhancement using 50-fold cross validation. From Table II, we observe that the lowest DE (0.70% on average) is achieved when Fc is modeled using BDFs with hypercube collapsing. On the other hand, the YL for this Fc (0.26%) is higher than that obtained without any enhancement (0.0054%). However, since a lower DE is much preferred, BDFs with hypercube collapsing should be used for case 1.

TABLE II

Evaluation of the Test-Specific Enhancements of a BDF When the Cold Mechanical Test Pass-Fail Outcomes Are Predicted Using the Cold Electrical Test Measurements (Case 1)

| Enhancement applied | YL average | YL 95%-confidence interval | DE average | DE 95%-confidence interval |
|---|---|---|---|---|
| No enhancement | 0.0054% | 0%–0.0208% | 2.24% | 0%–6.04% |
| Hypercube collapsing | 0.26% | 0.10%–0.42% | 0.70% | 0%–2.98% |
| PCA | 0.53% | 0.49%–0.57% | 2.06% | 0%–8.60% |
| LDA | 1.06% | 0.72%–1.40% | 2.39% | 0%–6.53% |
| Hypercube collapsing + PCA | 0.41% | 0.13%–0.63% | 0.84% | 0%–4.68% |
| Hypercube collapsing + LDA | 0.86% | 0.46%–1.23% | 1.33% | 0%–5.24% |

Notice that the accuracy of a BDF with hypercube collapsing and PCA is poorer than that of a BDF with hypercube collapsing alone. This is probably because the separation boundaries between passing and failing chips are not parallel to the principal axes of the training data. Therefore, test combinations derived using PCA may lead to more BDT partitions and, in turn, a less accurate Fc (since Ockham's Razor [22] implies that fewer BDT partitions may lead to a more accurate BDT). Also, the accuracy of a BDF with hypercube collapsing and LDA is poorer than that of a BDF with hypercube collapsing alone. This may be the case if the passing and failing chips are not separable by linear boundaries. Therefore, LDA may be deriving kept-test combinations that are worse for separating the passing and failing chips using a BDF.

6 One hundred BDTs in a BDF have been shown to be a good choice for other applications [23]. Consequently, in this paper we also use a forest consisting of 100 BDTs.

7 Any combination of these four scenarios leads to similar results. For example, the YL and DE that result from predicting the outcome of the cold mechanical test using measurements from the room-temperature and cold-electrical and cold-mechanical tests do not change when only the cold electrical tests are used.
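The evaluation protocol used throughout this section (conservative voting over the forest, then 50 random 80/20 train/validation splits) can be sketched in Python as below. Several details are assumptions rather than statements from the paper: YL is taken as the fraction of truly passing validation chips predicted to fail and DE as the fraction of truly failing validation chips predicted to pass; the 95%-confidence interval is computed as the mean ± 1.96 sample standard deviations, clipped to [0, 1]; and the forest itself is abstracted as a hypothetical `fit_predict_trees` callable that returns one row of pass predictions per tree.

```python
import numpy as np


def conservative_vote(tree_preds):
    """BDF decision: a chip is predicted to pass only if every BDT predicts pass.

    tree_preds: (n_trees, n_chips) array-like of booleans, True = pass.
    """
    return np.all(np.asarray(tree_preds, dtype=bool), axis=0)


def yield_loss_and_escape(true_pass, pred_pass):
    """Assumed definitions: YL = fraction of truly passing chips predicted to
    fail; DE = fraction of truly failing chips predicted to pass."""
    true_pass = np.asarray(true_pass, dtype=bool)
    pred_pass = np.asarray(pred_pass, dtype=bool)
    good, bad = true_pass, ~true_pass
    yl = float(np.mean(~pred_pass[good])) if good.any() else 0.0
    de = float(np.mean(pred_pass[bad])) if bad.any() else 0.0
    return yl, de


def summarize(samples, z=1.96):
    """Average and an assumed normal-approximation interval clipped to [0, 1]."""
    s = np.asarray(samples, dtype=float)
    mean = float(s.mean())
    half = z * float(s.std(ddof=1)) if s.size > 1 else 0.0
    return mean, max(0.0, mean - half), min(1.0, mean + half)


def random_split_cv(X, true_pass, fit_predict_trees, n_repeats=50,
                    train_frac=0.8, seed=0):
    """Repeat a random 80/20 train/validation split n_repeats times.

    fit_predict_trees (hypothetical) trains a forest on the training split and
    returns per-tree pass predictions with shape (n_trees, n_validation_chips).
    """
    X = np.asarray(X, dtype=float)
    true_pass = np.asarray(true_pass, dtype=bool)
    n = len(true_pass)
    n_train = int(round(train_frac * n))
    rng = np.random.default_rng(seed)
    yls, des = [], []
    for _ in range(n_repeats):
        perm = rng.permutation(n)
        train, val = perm[:n_train], perm[n_train:]
        tree_preds = fit_predict_trees(X[train], true_pass[train], X[val])
        yl, de = yield_loss_and_escape(true_pass[val], conservative_vote(tree_preds))
        yls.append(yl)
        des.append(de)
    return summarize(yls), summarize(des)
```

Because a single failing vote rejects a chip, adding trees can only lower DE and raise YL, which matches the preference for low DE in safety-critical parts.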

Finally, before concluding the test-compaction analysis of

the accelerometer, our claim of superior accuracy using BDFs

with test-specific enhancements is validated by comparing

accuracy against other statistical-learning techniques. Specifically, the prediction accuracies of our methodology and four

other standard statistical-learning approaches are compared

using case 1. (See Table III for the results.) These learning

methods include discriminant analysis [18], support vector

machines (SVMs) [25], neural networks [18], and a single

BDT [16]. Test compaction based on discriminant analysis,

neural networks, and a single BDT are implemented as scripts

that use the standard realizations of these learning techniques

by the statistical analysis software JMP [26]. SVMlight [27]

is used to derive an SVM model. Specifically, a Gaussian

basis function with variance of 2.56 and a capacity of 20 is

used in the SVM model. In all four learning methods, 50-fold

cross-validation is used to derive averages and 95%-confidence

intervals. From Table III, we observe that our statistical test compaction approach is significantly more accurate in terms of DE. Its YL is higher than that of the SVM and the single BDT, but it remains small, at about 0.26% on average.


TABLE III

Comparison of YL and DE When the Cold Mechanical Test Outcome Is Modeled Using Various Statistical Learning Techniques

| Type of statistical learning | YL average | YL 95%-confidence interval | DE average | DE 95%-confidence interval |
|---|---|---|---|---|
| Discriminant analysis | 39.51% | 37.95%–41.07% | 45.24% | 34.06%–56.42% |
| SVM | 0.0054% | 0.0054%–0.0054% | 7.22% | 0%–26.16% |
| Neural networks | 0.38% | 0%–0.61% | 2.78% | 0%–8.32% |
| Single BDT | 0.0068% | 0%–0.0284% | 2.67% | 0%–6.58% |
| BDF with hypercube collapsing | 0.26% | 0.10%–0.42% | 0.70% | 0%–2.98% |

TABLE IV

Sensitivity Analysis of the Test-Specific Enhancements for a BDF When the Outcome of the First High-Frequency Test t1M of the Transceiver Mixer Is Predicted Using the Measurements from DC and Low-Frequency Tests

| Enhancement applied | YL average | YL 95%-confidence interval | DE average | DE 95%-confidence interval |
|---|---|---|---|---|
| No enhancement | 2.17% | 0.93%–3.41% | 0.38% | 0%–1.75% |
| Hypercube collapsing | 27.06% | 0%–100% | 0% | 0%–0% |
| PCA | 0.11% | 0%–0.30% | 7.64% | 0%–29.25% |
| LDA | 0.30% | 0%–0.63% | 4.42% | 0%–7.63% |
| Hypercube collapsing + PCA | 3.63% | 0.71%–6.55% | 0.31% | 0%–1.09% |
| Hypercube collapsing + LDA | 0.72% | 0.51%–0.93% | 0.30% | 0%–1.60% |

TABLE V

Comparison of YL and DE When the Outcomes of Three High-Frequency Tests of the Transceiver Mixer Are Modeled Using Various Statistical Learning Techniques

| Mixer test | Type of statistical learning | YL average | YL 95%-confidence interval | DE average | DE 95%-confidence interval |
|---|---|---|---|---|---|
| t1M | Discriminant analysis | 0% | 0%–0% | 7.44% | 2.56%–12.32% |
| t1M | SVM | 0.43% | 0.33%–0.53% | 1.31% | 0%–4.49% |
| t1M | Neural networks | 0.17% | 0%–0.55% | 2.02% | 0%–6.53% |
| t1M | Single BDT | 0.15% | 0%–0.47% | 1.55% | 0%–5.03% |
| t1M | BDF with hypercube collapsing + LDA | 0.72% | 0.51%–0.93% | 0.30% | 0%–1.60% |
| t16M | Discriminant analysis | 0% | 0%–0% | 7.00% | 2.74%–11.27% |
| t16M | SVM | 0.11% | 0%–0.25% | 1.02% | 0%–2.13% |
| t16M | Neural networks | 0.34% | 0%–1.35% | 7.85% | 0.55%–15.16% |
| t16M | Single BDT | 0.42% | 0.20%–0.63% | 4.46% | 2.16%–6.76% |
| t16M | BDF with hypercube collapsing + LDA | 1.94% | 1.42%–2.46% | 0.38% | 0%–1.79% |
| t20M | Discriminant analysis | 0.23% | 0%–0.55% | 7.44% | 1.24%–13.65% |
| t20M | SVM | 0.94% | 0.34%–1.45% | 0.62% | 0%–1.37% |
| t20M | Neural networks | 0.19% | 0%–0.53% | 8.27% | 0.90%–15.64% |
| t20M | Single BDT | 0.39% | 0.14%–0.64% | 4.70% | 2.31%–7.09% |
| t20M | BDF with hypercube collapsing + LDA | 0.88% | 0.57%–1.19% | 0.41% | 0%–1.15% |

Before concluding this section, it is also important to explain

why we chose not to compare our methodology to a single

BDT with test-specific enhancements. In Section II-B, we have

explained that the prediction accuracy of a BDF is improved

over a single BDT. In addition, we also observe that if a

test-specific enhancement improves the accuracy of a BDT,

it will improve the accuracy of each BDT within a BDF.

Therefore, using the same principle described in Section II-B,

we conclude that the accuracy of a BDF with a test-specific

enhancement will be equal to or better than that of a BDT

with the same test-specific enhancement.

B. RF Transceiver

The next data set analyzed is package-test data from a

production cell-phone radio-frequency (RF) transceiver. An

RF transceiver is a mixed-signal system that can transmit and

receive RF signals. In this system, the receiver (Rx) "down-converts"8 and digitizes the RF signal to output DC signals. On the other hand, the transmitter (Tx) "up-converts" its digital input to radio frequency for transmission. Rx and Tx are composed of components such as low-pass filters, low-noise amplifiers, and mixers, which operate in the low-frequency as well as the RF domain. Consequently, the transceiver has to be tested to verify that it functions correctly in both domains. The RF tests of the transceiver are more expensive than the low-frequency tests. Therefore, we target the elimination of these RF tests to achieve a higher test cost reduction.

8 Signal down-conversion is the process of shifting the center frequency of a signal from a high to a low value.

The data from the RF transceiver is collected from a full-fail test environment (i.e., an environment where all tests are

applied to each failing chip irrespective of which test the chip

fails). Consequently, the redundancy analysis of each candidate

test can be carried out using measurements from all other tests.

At the same time, since the mixers in the transceiver are RF

blocks that function at both low and high frequencies, their

high and low-frequency tests may be correlated. Consequently,

the 22 RF mixer tests of the transceiver are analyzed for

statistical test compaction using the measurements from its

72 DC and low-frequency, low-cost tests. In other words, the

22 mixer tests are used as Tc and the 72 DC and low-frequency

tests comprise Tkept. Test data from over 4600 chips are available for analysis; as before, these data are randomly divided 50 times into 80% training data and 20% validation data (50-fold cross validation). Then, the averages and 95%-confidence intervals

of YL and DE are calculated for each test to determine if any

of the RF mixer tests are redundant.

Similar to the MEMS accelerometer experiment, each BDF

is again chosen to include 100 trees. However, the test-specific

enhancements that should be used for the transceiver data can

only be determined by evaluating their effect on the prediction

accuracies of Fc for the high-frequency mixer tests. Each

enhancement is applied separately to the first high-frequency

test t1M of the mixer (“M” stands for mixer). Again, while

performing PCA or LDA, all derived kept-test combinations

are used in our analysis. The results are reported in Table IV. They indicate that even though hypercube collapsing leads to no DE from Fc for any mixer test, the average YL of Fc exceeds 27%. Moreover, PCA makes DE unacceptable (7.64% on average), and LDA reduces YL (0.30% versus 2.17% without any enhancement) but at the same time makes DE worse (4.42% versus 0.38%). However, applying hypercube collapsing along with PCA or LDA yields a much lower YL than hypercube collapsing alone and a much lower DE than PCA or LDA alone. In fact, hypercube collapsing along with LDA provides the best outcome. Similar analyses applied to the other 21 mixer tests also yield results that are consistent with those reported in Table IV. Therefore, we conclude that applying hypercube collapsing along with LDA to the BDF is most desirable, since DE is likely less critical for the transceiver than for the automotive accelerometer. As a result, the follow-on analyses use BDFs in combination with hypercube collapsing and LDA for redundancy analyses of the RF mixer tests.
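One way to make the selection reasoning above explicit is to pick, among the enhancements whose average YL stays under a cap, the one with the lowest average DE. This rule and the `pick_enhancement` helper are our assumptions; the paper argues the choice qualitatively. The averages below are copied from Table IV, and the 2.5% YL cap is hypothetical.

```python
def pick_enhancement(results, yl_cap):
    """Return the enhancement with the lowest average DE among those whose
    average YL does not exceed yl_cap (all values in percent), or None."""
    eligible = {name: (yl, de) for name, (yl, de) in results.items() if yl <= yl_cap}
    if not eligible:
        return None
    # Lowest DE first; ties broken by lower YL.
    return min(eligible, key=lambda name: (eligible[name][1], eligible[name][0]))


# Average (YL %, DE %) for t1M, copied from Table IV.
TABLE_IV = {
    "No enhancement": (2.17, 0.38),
    "Hypercube collapsing": (27.06, 0.0),
    "PCA": (0.11, 7.64),
    "LDA": (0.30, 4.42),
    "Hypercube collapsing + PCA": (3.63, 0.31),
    "Hypercube collapsing + LDA": (0.72, 0.30),
}

if __name__ == "__main__":
    print(pick_enhancement(TABLE_IV, yl_cap=2.5))
    print(pick_enhancement(TABLE_IV, yl_cap=100.0))
```

With the 2.5% cap the rule selects hypercube collapsing + LDA, matching the choice in the text; removing the cap selects hypercube collapsing alone, whose 27% YL is what rules it out in practice.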

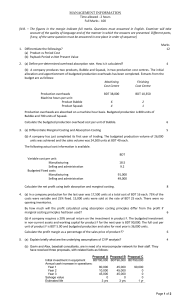

Fig. 6. Average and 95%-confidence intervals of (a) YL and (b) DE when each of the 22 high-frequency transceiver mixer tests is predicted using an Fc based on the DC and low-frequency tests.

Test compaction analysis with hypercube collapsing and LDA is used to determine whether any of the 22 RF mixer tests can be eliminated using measurements from the 72 low-frequency and DC tests. The results of these analyses are reported in Fig. 6. Specifically, Fig. 6(a) and (b) report YL and DE, respectively, when the corresponding RF test is deemed redundant. For each high-frequency mixer test, both plots include a straight line that represents the 95%-confidence interval of the prediction accuracy of its Fc. The solid square marker on each line denotes the average prediction accuracy of the respective Fc. From Fig. 6, we conclude that tests t1M, t9M, and t16M–t22M (nine tests in total) are redundant when the acceptable DE is ≤2.0% and the acceptable YL is ≤2.5%. Different conclusions would, of course, be drawn for other limits on YL and DE.
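The acceptance rule can be stated as a small Python check. The paper does not say whether the limits are compared with the average or with the upper end of the 95%-confidence interval; the sketch assumes the more conservative upper-end comparison, and `is_redundant` is a hypothetical name. The intervals are copied from Table I (10% limits) and from the BDF rows of Table V (2.5% YL and 2.0% DE limits).

```python
def is_redundant(yl_interval, de_interval, yl_max, de_max):
    """Deem a candidate test redundant if the upper ends of the 95%-confidence
    intervals of YL and DE (in percent) stay within the acceptable limits."""
    return yl_interval[1] <= yl_max and de_interval[1] <= de_max


# (YL interval, DE interval) in percent, from Table I.
TABLE_I = {
    1: ((0.0, 0.0208), (0.0, 6.04)),
    2: ((0.0, 0.0418), (4.77, 15.37)),
    3: ((0.0, 1.06), (3.93, 19.21)),
    4: ((0.0, 3.72), (2.54, 27.18)),
}

# BDF with hypercube collapsing + LDA, from Table V.
TABLE_V_BDF = {
    "t1M": ((0.51, 0.93), (0.0, 1.60)),
    "t16M": ((1.42, 2.46), (0.0, 1.79)),
    "t20M": ((0.57, 1.19), (0.0, 1.15)),
}

if __name__ == "__main__":
    print([c for c, (yl, de) in TABLE_I.items() if is_redundant(yl, de, 10.0, 10.0)])
    print([t for t, (yl, de) in TABLE_V_BDF.items() if is_redundant(yl, de, 2.5, 2.0)])
```

Applied to these rows, the rule reproduces the conclusions in the text: only case 1 of the accelerometer qualifies, and all three listed mixer tests qualify. Comparing the averages instead gives the same answers for these particular rows.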

Finally, we evaluate our statistical test compaction methodology against discriminant analysis, SVMs, neural networks,

and a single BDT. These approaches are implemented in

a fashion similar to the scenario involving the MEMS accelerometer. The prediction accuracy of Fc for three of the redundant tests, t1M, t16M, and t20M, using these learning techniques is listed in Table V. These results are also derived using 50-fold cross validation. Table V shows that our approach again achieves the lowest average DE for all three tests, while its YL is comparable to or somewhat higher than that of the other learning techniques.

VII. Conclusion

In this paper, we have described a BDF-based statistical test

compaction methodology for integrated, heterogeneous systems. Comparing our approach to other popular classification

techniques using two real production systems has shown that

the use of a forest of binary decision trees along with some


test-specific enhancements is likely more suited for identifying

redundant tests of an integrated system. Moreover, we

observed that hypercube collapsing is only suitable when the

pass-fail boundaries of the test data are parallel to the kept-test

axes. As a result, while hypercube collapsing alone is sufficient

for the MEMS accelerometer, LDA is also necessary for the

RF transceiver. Finally, a priori knowledge of which tests are

more expensive and also probably redundant enabled the elimination of the cold mechanical test of the accelerometer and

nine of the 22 high-frequency mixer tests of the RF transceiver.

These tests are of relatively higher cost, and therefore, their

elimination could achieve a significant amount of test cost

reduction. Consequently, a priori knowledge about which tests are more expensive and also likely redundant should always be used to initially identify potentially redundant tests for full analysis.

Before concluding this paper, we would also like to emphasize that the work here addresses the problem of deriving correlation functions for redundant tests given a set of continuous test measurement data. However, this test data was most likely collected over a relatively short period of time compared to the complete production life of the integrated system, and the correlation among tests typically fluctuates over the life of an integrated system. As a result, a correlation function derived using the methodology described in this paper (and, in fact, in other related work as well) will likely become inaccurate over time. To remedy this shortcoming, we have also developed a technique for updating the correlation function when necessary to maintain its prediction accuracy. Details about this technique can be found in [5].

References

[1] Semiconductor Industry Association, "Test and test equipment," in International Technology Road Map for Semiconductors, ch. 7, p. 36, 2008 [Online]. Available: http://www.itrs.net/Links/2007ITRS/2007_Chapters/2007_Test.pdf

[2] J. B. Brockman and S. W. Director, "Predictive subset testing: Optimizing IC parametric performance testing for quality, cost, and yield," IEEE Trans. Semiconduct. Manufact., vol. 2, no. 3, pp. 104–113, Aug. 1989.

[3] R. Voorakaranam and A. Chatterjee, "Test generation for accurate prediction of analog specifications," in Proc. IEEE VLSI Test Symp., May 2000, pp. 137–142.

[4] L. Milor and A. L. Sangiovanni-Vincentelli, "Optimal test set design for analog circuits," in Proc. Int. Conf. Comput.-Aided Des., Nov. 1990, pp. 294–297.

[5] S. Biswas and R. D. Blanton, "Maintaining the accuracy of test compaction through adaptive re-learning," in Proc. VLSI Test Symp., Apr. 2009, pp. 257–263.

[6] S. Biswas and R. D. Blanton, "Test compaction for mixed-signal circuits by using pass-fail test data," in Proc. IEEE VLSI Test Symp., Apr. 2008, pp. 299–308.

[7] L. Milor and A. L. Sangiovanni-Vincentelli, "Minimizing production test time to detect faults in analog circuits," IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst., vol. 13, no. 6, pp. 796–813, Jun. 1994.

[8] E. Yilmaz and S. Ozev, "Dynamic test scheduling for analog circuits for improved test quality," in Proc. Int. Conf. Comput.-Aided Des., Oct. 2008, pp. 227–233.

[9] H. G. D. Stratigopoulos, P. Drineas, M. Slamani, and Y. Makris, "Non-RF to RF test correlation using learning machines: A case study," in Proc. VLSI Test Symp., May 2007, pp. 9–14.

[10] S. Biswas, P. Li, R. D. Blanton, and L. Pileggi, "Specification test compaction for analog circuits and MEMS," in Proc. Des., Automat. Test Conf. Europe, Mar. 2005, pp. 164–169.

[11] S. Biswas and R. D. Blanton, "Statistical test compaction using binary decision trees," IEEE Des. Test Comput.: Process Variation Stochastic Des. Test, vol. 23, no. 6, pp. 452–462, Nov. 2006.

[12] J. K. Martin and D. S. Hirschberg, "The time complexity of decision tree induction," Dept. Inform. Comp. Sci., Univ. California, Irvine, Tech. Rep. 95-27, Aug. 1995.

[13] T. M. Mitchell, Machine Learning. Boston, MA: WCB/McGraw-Hill, 1997.

[14] J. R. Quinlan, C4.5 Programs for Machine Learning. San Mateo, CA: Morgan Kaufmann, 1993.

[15] L. Breiman, "Bagging predictors," J. Mach. Learn., vol. 24, no. 2, pp. 123–140, Aug. 1996.

[16] L. Rokach and O. Maimon, "Top-down induction of decision trees classifiers: A survey," IEEE Trans. Syst., Man, Cybern., Part C: Applicat. Rev., vol. 35, no. 4, pp. 476–487, Nov. 2005.

[17] R. Schapire, "Strength of weak learnability," J. Mach. Learn., vol. 5, no. 2, pp. 197–227, 1990.

[18] T. Hastie, R. Tibshirani, and J. H. Friedman, Elements of Statistical Learning: Data Mining. New York: Springer, 2001.

[19] J. R. Quinlan, "Bagging, boosting, and C4.5," in Proc. Nat. Conf. Artif. Intell., 1996, pp. 725–730.

[20] L. Todorovski and S. Dzeroski, "Combining multiple models with meta decision trees," in Proc. Eur. Conf. Principles Data Mining Knowledge Discovery, 2000, pp. 54–64.

[21] M. E. Wall, A. Rechsteiner, and L. M. Rocha, "Singular value decomposition and principal component analysis," in A Practical Approach to Microarray Data Analysis. Norwell, MA: Kluwer, 2003, pp. 91–109.

[22] P. Newall. (2005). "Ockham's razor," The Galilean Library Manuscripts [Online]. Available: http://www.galilean-library.org/or.html

[23] P. Latinne, O. Debeir, and C. Decaestecker, "Limiting the number of trees in random forests," in Proc. 2nd Int. Workshop Multiple Classifier Syst., vol. 2096, 2001, pp. 178–187.

[24] Automotive Electronics Council, Automotive AEC-Q100 Test Quality Reports [Online]. Available: http://www.aecouncil.com/AECDocuments.html

[25] V. N. Vapnik, Statistical Learning Theory. Danvers, MA: Wiley, 1998.

[26] JMP Statistical Discovery Software, ver. 7, SAS Softwares, Cary, NC, 2007.

[27] SVMlight, ver. 6.01, T. Joachims, Ithaca, NY, 2004 [Online]. Available: http://svmlight.joachims.org

Sounil Biswas received the B.Tech. degree in electrical engineering from the Indian Institute of Technology Kanpur, Kanpur, India, in 2002, and the

M.S. and Ph.D. degrees in electrical and computer

engineering from Carnegie Mellon University, Pittsburgh, PA, in 2004 and 2008, respectively.

He is currently with Nvidia Corporation, Santa

Clara, CA, where he focuses on test cost reduction,

yield improvement, and quality control through statistical data analyses. His current research interests

include statistical analysis of test data, systematic

and parametric yield learning, and adaptive test.

R. D. (Shawn) Blanton (S’93–M’95–SM’03–F’09)

received the B.S. degree in engineering from Calvin College, Grand Rapids, MI, in 1987, the M.S.

degree in electrical engineering from the University

of Arizona, Tucson, in 1989, and the Ph.D. degree

in computer science and engineering from the University of Michigan, Ann Arbor, in 1995.

He is currently a Professor with the Department

of Electrical and Computer Engineering, Carnegie

Mellon University, Pittsburgh, PA, where he is also

the Director of the Center for Silicon System Implementation, an organization consisting of 18 faculty members and over 80 graduate students focusing on the design and manufacture of silicon-based systems. His current research interests include the test and diagnosis of integrated, heterogeneous systems and design, manufacture, and test-information extraction from tester measurement data.