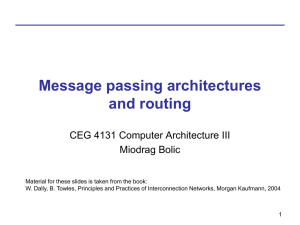

A Case for Bufferless Routing in On-Chip Networks

Thomas Moscibroda, Onur Mutlu
