Contents

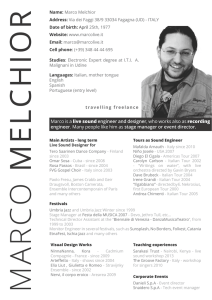

advertisement

Contents

Chapter 1. Preliminaries

1.1. Conditional Expectation

1.2. Sufficiency

1.3. Exponential Families.

1.4. Convex Loss Function

Chapter 2. Unbiasedness

2.1. UMVU estimators.

2.2. Non-parametric families

2.3. The Information Inequality

2.4. Multiparameter Case

Chapter 3. Equivariance

3.1. Equivariance for Location family

3.2. The General Equivariant Framework

3.3. Location-Scale Families

Chapter 4. Average-Risk Optimality

4.1. Bayes Estimation

4.2. Minimax Estimation

4.3. Minimaxity and Admissibility in Exponential families

4.4. Shrinkage Estimators

Chapter 5. Large Sample Theory

5.1. Convergence in Probability and Order in Probability

5.2. Convergence in Distribution

5.3. Asymptotic Comparisons (Pitman Efficiency)

5.4. Comparison of sample mean, median and trimmed mean

Chapter 6. Maximum Likelihood Estimation

6.1. Consistency

6.2. Asymptotic Normality of the MLE

6.3. Asymptotic Optimality of the MLE


CHAPTER 1

Preliminaries

1.1. Conditional Expectation

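Let $(\mathcal{X}, \mathcal{A}, P)$ be a probability space. If $X \in L^1(\mathcal{A}, P)$ and $\mathcal{G}$ is a sub-$\sigma$-field of $\mathcal{A}$, then $E(X \mid \mathcal{G})$ is a random variable such that

(i) $E(X \mid \mathcal{G}) \in \mathcal{G}$ (i.e. is $\mathcal{G}$-measurable)

(ii) $E(I_G X) = E(I_G E(X \mid \mathcal{G}))$ for all $G \in \mathcal{G}$.

(For $X \ge 0$, $\nu(G) = E(I_G X)$ is a measure on $\mathcal{G}$ and $P(G) = 0 \Rightarrow \nu(G) = 0$, so by the Radon-Nikodym theorem there exists a $\mathcal{G}$-measurable function $E(X \mid \mathcal{G})$ such that $\nu(G) = \int_G E(X \mid \mathcal{G})\,dP$, i.e. (ii) is satisfied. This shows the existence of $E(X^+ \mid \mathcal{G})$ and $E(X^- \mid \mathcal{G})$. Then we define $E(X \mid \mathcal{G}) = E(X^+ \mid \mathcal{G}) - E(X^- \mid \mathcal{G})$.)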

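Remark 1.1.1. (ii) generalizes to $E(YX) = E(Y E(X \mid \mathcal{G}))$ for all $\mathcal{G}$-measurable $Y$ such that $E|YX| < \infty$. The conditional probability of $A$ given $\mathcal{G}$ is defined for all $A \in \mathcal{A}$ as $P(A \mid \mathcal{G}) = E(I_A \mid \mathcal{G})$.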

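Remark 1.1.2. If $X \in L^2(\mathcal{A}, P)$, then $E(X \mid \mathcal{G})$ is the orthogonal projection in $L^2(\mathcal{A}, P)$ of $X$ onto the closed linear subspace $L^2(\mathcal{G}, P)$, since

(i) $E(X \mid \mathcal{G}) \in L^2(\mathcal{G}, P)$ and

(ii) $E(Y (X - E(X \mid \mathcal{G}))) = 0$ for all $Y \in L^2(\mathcal{G}, P)$.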

Conditioning on a Statistic

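Let $X$ be a r.v. defined on $(\mathcal{X}, \mathcal{A}, P)$ with $E|X| < \infty$ and let $T$ be a measurable function (not necessarily real-valued) from $(\mathcal{X}, \mathcal{A})$ into $(\mathcal{T}, \mathcal{F})$:

$$(\mathcal{X}, \mathcal{A}, P) \xrightarrow{\;T\;} (\mathcal{T}, \mathcal{F}, P^T).$$

Such a $T$ is called a statistic. The $\sigma$-field of subsets of $\mathcal{X}$ induced by $T$ is

$$\sigma(T) = \{T^{-1}S : S \in \mathcal{F}\} = T^{-1}\mathcal{F}.$$

Definition 1.1.3. $E(X \mid T) \equiv E(X \mid \sigma(T))$.

Recall that a real-valued function $f$ on $\mathcal{X}$ is $\sigma(T)$-measurable $\iff$ $f = g \circ T$ for some $\mathcal{F}$-measurable $g$ on $\mathcal{T}$, i.e. $f(x) = g(T(x))$:

$$\mathcal{X} \xrightarrow{\;T\;} \mathcal{T} \xrightarrow{\;g\;} \mathbb{R}.$$

This implies that $E(X \mid T)$ is expressible as $E(X \mid T) = h(T)$ for some $\mathcal{F}$-measurable function $h$ which is unique a.e. $P^T$:

$$\mathcal{X} \xrightarrow{\;T\;} \mathcal{T} \xrightarrow{\;h\;} \mathbb{R}.$$

Definition 1.1.4. $E(X \mid t) \equiv h(t)$.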

Example 1.1.5. Suppose $(X, T)$ has probability density $p(x, t)$ w.r.t. Lebesgue measure on $\mathbb{R}^2$ and $E|X| < \infty$. Then $E(X \mid \sigma(T)) = h(T)$ where

$$h(t) = E(X \mid T = t) = \frac{\int x\,p(x,t)\,dx}{\int p(x,t)\,dx}\; I_{\{p_T(t) > 0\}}(t) \quad \text{a.s. } P^T.$$

PROOF

(i) The right side is Borel measurable in $t$ (by Fubini).

(ii) $G \in \sigma(T) \Rightarrow G = T^{-1}F$ for some $F \in \mathcal{F} \Rightarrow I_G = I_F(T)$. It must be shown that $E(I_G X) = E(I_G h(T))$:

$$E(I_G X) = \int I_G X\,dP = \iint x\,I_F(t)\,p(x,t)\,dx\,dt = \int I_F(t)\,h(t)\,p_T(t)\,dt = E[I_F(T) h(T)] = E[I_G h(T)].$$

Properties of Conditional Expectation

If $T$ is a statistic, $X$ is the identity function on $\mathcal{X}$ and $f_n, f, g$ are integrable, then

(i) $E[a f(X) + b g(X) \mid T] = a E[f(X) \mid T] + b E[g(X) \mid T]$ a.s.

(ii) $a \le f(X) \le b$ a.s. $\Rightarrow a \le E[f(X) \mid T] \le b$ a.s.

(iii) $|f_n| \le g$, $f_n(x) \to f(x)$ a.s. $\Rightarrow E[f_n(X) \mid T] \to E[f(X) \mid T]$ a.s.

(iv) $E[E(f(X) \mid T)] = E f(X)$.

If $E|h(T) f(X)| < \infty$, then

(v) $E[h(T) f(X) \mid T] = h(T) E[f(X) \mid T]$ a.s.
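Properties (iv) and (v) can be checked exactly on a small finite sample space. The sketch below is only an illustration under assumed choices that are not in the notes: two fair dice, $T$ = sum of the dice, $f$ = product, and $h(t) = t^2$. It uses exact rational arithmetic, so the equalities hold without rounding.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical finite sample space (an assumption for illustration):
# two fair dice, each outcome with probability 1/36.
space = [(a, b) for a in range(1, 7) for b in range(1, 7)]
prob = {x: Fraction(1, 36) for x in space}

def T(x):          # the statistic: sum of the two dice
    return x[0] + x[1]

def f(x):          # an integrable function of the observation
    return x[0] * x[1]

def h(t):          # a function of the statistic
    return t * t

def expect(g):
    """E[g(X)] on the finite space."""
    return sum(prob[x] * g(x) for x in space)

def cond_expect(g):
    """Return the map t -> E[g(X) | T = t] as a dict."""
    num = defaultdict(Fraction)
    den = defaultdict(Fraction)
    for x in space:
        num[T(x)] += prob[x] * g(x)
        den[T(x)] += prob[x]
    return {t: num[t] / den[t] for t in den}

ce_f = cond_expect(f)

# (iv) tower property: E[E(f(X)|T)] = E f(X)
tower_lhs = expect(lambda x: ce_f[T(x)])
tower_rhs = expect(f)

# (v) pull-out property: E[h(T) f(X) | T] = h(T) E[f(X) | T]
ce_hf = cond_expect(lambda x: h(T(x)) * f(x))
pull_out_ok = all(ce_hf[t] == h(t) * ce_f[t] for t in ce_f)

print(tower_lhs, tower_rhs, pull_out_ok)
```

On a finite space the conditional expectation is just a weighted average over each level set of $T$, which is what `cond_expect` computes.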

1.2. Sufficiency

Set up

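$X$: random observable quantity (the identity function on $(\mathcal{X}, \mathcal{A}, P_\theta)$)

$\mathcal{X}$: sample space, the set of possible values of $X$

$\mathcal{A}$: $\sigma$-algebra of subsets of $\mathcal{X}$

$\mathcal{P} = \{P_\theta : \theta \in \Theta\}$ is a family of probability measures on $\mathcal{A}$ (distributions of $X$)

$T : \mathcal{X} \to \mathcal{T}$ is an $\mathcal{A}/\mathcal{F}$-measurable function and $T(X)$ is called a statistic.

$$\text{probability space } (\mathcal{X}, \mathcal{A}, P_\theta) \xrightarrow{\;X\;} \text{sample space } (\mathcal{X}, \mathcal{A}, P_\theta) \xrightarrow{\;T\;} (\mathcal{T}, \mathcal{F}, P_\theta^T)$$

We adopt this notation because sometimes we wish to talk about $T(X(\cdot))$, the random variable, and sometimes about $T(X(x)) = T(x)$, a particular element of $\mathcal{T}$. We shall also use the notation $P_\theta(A \mid T(x))$ for $P_\theta(A \mid T = T(x))$ and $P_\theta(A \mid T)$ for the random variable $P_\theta(A \mid T(\cdot))$ on $\mathcal{X}$.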

Definition 1.2.1. The statistic $T$ is sufficient for $\theta$ (or $\mathcal{P}$) iff the conditional distribution of $X$ given $T = t$ is independent of $\theta$ for all $t$, i.e. there exists an $\mathcal{F}$-measurable $P(A \mid T = \cdot)$ such that $P_\theta(A \mid T = t) = P(A \mid T = t)$ a.s. $P_\theta^T$ for all $A \in \mathcal{A}$ and all $\theta \in \Theta$.

Example 1.2.2.

$X = (X_1, \dots, X_n)$ iid with pdf $f_\theta(x)$ w.r.t. $dx$,

$P_\theta(dx_1, \dots, dx_n) = f_\theta(x_1) \cdots f_\theta(x_n)\,dx_1 \cdots dx_n$,

$T(X) = (X_{(1)}, \dots, X_{(n)})$ where $X_{(i)}$ is the $i$th order statistic.

The probability mass function of $X$ given $T = t$ is

$$p_{X \mid T = t}(x \mid t) = \frac{\delta_{t_1}(x_{(1)}) \cdots \delta_{t_n}(x_{(n)})}{n!},$$

i.e. it assigns point mass $1/n!$ to each $x$ such that $x_{(1)} = t_1, \dots, x_{(n)} = t_n$. This is independent of $\theta$, indicating that $T$ contains all the information about $\theta$ contained in the sample.

The Factorization Criterion

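Definition 1.2.3. A family of probability measures $\mathcal{P} = \{P_\theta : \theta \in \Theta\}$ is equivalent to a p.m. $\lambda$ if

$$\lambda(A) = 0 \iff P_\theta(A) = 0 \ \ \forall \theta \in \Theta.$$

We also say that $\mathcal{P}$ is dominated by a $\sigma$-finite measure $\mu$ on $(\mathcal{X}, \mathcal{A})$ if $P_\theta \ll \mu$ for all $\theta \in \Theta$.

It is clear that equivalence to $\lambda$ implies domination by $\lambda$.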

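Theorem 1.2.4. Let $\mathcal{P}$ be dominated by a p.m. $\lambda$ where

$$\lambda = \sum_{i=0}^{\infty} c_i P_{\theta_i} \qquad (c_i \ge 0,\ \textstyle\sum c_i = 1).$$

Then the statistic $T$ (with range $(\mathcal{T}, \mathcal{F})$) is sufficient for $\mathcal{P}$ $\iff$ there exists an $\mathcal{F}$-measurable function $g_\theta(\cdot)$ such that

$$dP_\theta(x) = g_\theta(T(x))\,d\lambda(x) \quad \forall \theta \in \Theta.$$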

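Proof. ($\Rightarrow$) Suppose $T$ is sufficient for $\mathcal{P}$. Then

$$P_\theta(A \mid T(x)) = P(A \mid T(x)) \quad \forall \theta.$$

Throughout this part of the proof $X$ will denote the indicator function of a subset of $\mathcal{X}$. The preceding equality then implies that

$$E_\theta(X \mid T) = E(X \mid T) \quad \forall X \in \mathcal{A},\ \forall \theta.$$

Hence for all $\theta \in \Theta$, $X \in \mathcal{A}$, $G \in \sigma(T)$, we have

$$E_\theta(I_G E(X \mid T)) = E_\theta(E_\theta(I_G X \mid T)) = E_\theta(I_G X).$$

Set $\theta = \theta_i$, multiply by $c_i$ and sum over $i = 0, 1, 2, \dots$ to get

$$E_\lambda(I_G E(X \mid T)) = E_\lambda(I_G X) \quad \forall X \in \mathcal{A},\ \forall G \in \sigma(T).$$

This implies that $E(X \mid T) = E_\lambda(X \mid T)$ for all $X \in \mathcal{A}$ and hence

$$E_\theta(X \mid T) = E(X \mid T) = E_\lambda(X \mid T) \quad \forall X \in \mathcal{A},\ \forall \theta.$$

Now define $g_\theta(T(\cdot))$ to be the Radon-Nikodym derivative of $P_\theta$ with respect to $\lambda$, with both regarded as measures on $\sigma(T)$. We know this exists since $\lambda$ dominates every $P_\theta$. We also know it is $\sigma(T)$-measurable, so it can be written in the form $g_\theta(T(\cdot))$, and we know that $E_\theta(X) = E_\lambda(g_\theta(T) X)$ for all $X \in \sigma(T)$. We need to establish however that this last relation holds for all $X \in \mathcal{A}$. We do this as follows. For $X \in \mathcal{A}$,

$$\begin{aligned}
E_\theta(X) &= E_\theta(E(X \mid T)) \\
&= E_\lambda(g_\theta(T) E(X \mid T)) \\
&= E_\lambda(E(g_\theta(T) X \mid T)) \\
&= E_\lambda(E_\lambda(g_\theta(T) X \mid T)) \\
&= E_\lambda(g_\theta(T) X).
\end{aligned}$$

This shows that $g_\theta(T(x)) = \frac{dP_\theta}{d\lambda}(x)$ when $P_\theta$ and $\lambda$ are regarded as measures on $\mathcal{A}$.

($\Leftarrow$) Suppose that for each $\theta$, $\frac{dP_\theta}{d\lambda}(x) = g_\theta(T(x))$ for some $g_\theta$. We shall then show that the conditional probability $P_\lambda(A \mid t)$ is a version of $P_\theta(A \mid t)$ for all $\theta$. For $A \in \mathcal{A}$ and $G \in \sigma(T)$,

$$\int_G I_A\,dP_\theta = \int_G P_\theta(A \mid T)\,dP_\theta = \int_G P_\theta(A \mid T)\,g_\theta(T)\,d\lambda$$

and

$$\int_G I_A\,dP_\theta = \int_G I_A\,g_\theta(T)\,d\lambda = \int_G E_\lambda[I_A g_\theta(T) \mid T]\,d\lambda = \int_G E_\lambda[I_A \mid T]\,g_\theta(T)\,d\lambda.$$

Therefore $P_\theta(A \mid T)\,g_\theta(T) = E_\lambda(I_A \mid T)\,g_\theta(T)$ a.s. $\lambda$ and hence a.s. $P_\theta$ for all $\theta$. Also $g_\theta(T) \ne 0$ a.s. $P_\theta$, since $dP_\theta = g_\theta(T)\,d\lambda$. Hence $P_\theta(A \mid T) = E_\lambda(I_A \mid T) = P_\lambda(A \mid T)$ a.s. $P_\theta$, and the right side is independent of $\theta$.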

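Theorem 1.2.5. (Theorem 2, Appendix, TSH¹) If $\mathcal{P} = \{P_\theta,\ \theta \in \Theta\}$ is dominated by a $\sigma$-finite measure $\mu$, then it is equivalent to $\lambda = \sum_{i=0}^{\infty} c_i P_{\theta_i}$ for some countable subcollection $P_{\theta_i} \in \mathcal{P}$, $i = 0, 1, 2, \dots$, with $c_i \ge 0$ and $\sum c_i = 1$.

Proof. $\mu$ is $\sigma$-finite $\Rightarrow$ there exist disjoint $A_1, A_2, \dots \in \mathcal{A}$ with $\bigcup A_i = \mathcal{X}$ such that $0 < \mu(A_i) < \infty$, $i = 1, 2, \dots$. Set

$$\mu^*(A) = \sum_{i=1}^{\infty} \frac{\mu(A \cap A_i)}{2^i \mu(A_i)}.$$

Then $\mu^*$ is a probability measure equivalent to $\mu$. Hence we can assume without loss of generality that the dominating measure $\mu$ is a probability measure. Let

$$f_\theta = \frac{dP_\theta}{d\mu}$$

and set

$$S_\theta = \{x : f_\theta(x) > 0\}.$$

Then

$$(1.2.1)\qquad P_\theta(A) = P_\theta(A \cap S_\theta) = 0 \ \text{ iff } \ \mu(A \cap S_\theta) = 0.$$

(Since $P_\theta \ll \mu$, and since $\mu(A \cap S_\theta) > 0$ together with $f_\theta > 0$ on $A \cap S_\theta$ implies $P_\theta(A \cap S_\theta) > 0$.) A set $A \in \mathcal{A}$ is a kernel if $A \subseteq S_\theta$ for some $\theta$; a finite or countable union of kernels is called a chain. Set

$$\alpha = \sup_{\text{chains } C} \mu(C).$$

Then $\alpha = \mu(C)$ for some chain $C = \bigcup_{n=1}^{\infty} A_n$, $A_n \subseteq S_{\theta_n}$ (since there exist chains $\{C_n\}$ such that $\mu(C_n) \uparrow \alpha$, and for this sequence $\mu(\bigcup C_n) = \alpha$).

It follows from the following Lemma that $\mathcal{P}$ is dominated by $\lambda(\cdot) = \sum_{n=1}^{\infty} 2^{-n} P_{\theta_n}(\cdot)$, since

$$\lambda(A) = 0 \Rightarrow P_{\theta_n}(A) = 0 \ \forall n \Rightarrow P_\theta(A) = 0 \ \forall \theta \quad \text{(by the Lemma)}.$$

It is obvious that

$$P_\theta(A) = 0 \ \forall \theta \Rightarrow \lambda(A) = 0.$$

Hence $\mathcal{P}$ is equivalent to $\lambda(\cdot) = \sum_{n=1}^{\infty} 2^{-n} P_{\theta_n}(\cdot)$.

Lemma 1.2.6. If $\{\theta_n\}$ is the sequence used in the construction of $C$, then $\{P_\theta,\ \theta \in \Theta\}$ is dominated by $\{P_{\theta_n},\ n = 1, 2, \dots\}$, i.e.

$$P_{\theta_n}(A) = 0 \ \forall n \Rightarrow P_\theta(A) = 0 \ \forall \theta.$$

¹ TSH stands for Testing Statistical Hypotheses, E.L. Lehmann, Springer Texts in Statistics, 1997.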

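Proof.

$$\begin{aligned}
P_{\theta_n}(A) = 0 \ \forall n &\Rightarrow \mu(A \cap S_{\theta_n}) = 0 \ \forall n \quad \text{(by (1.2.1))} \\
&\Rightarrow \mu(A \cap C) = 0 \quad \text{(since } C \subseteq \textstyle\bigcup_n S_{\theta_n}\text{)} \\
&\Rightarrow P_\theta(A \cap C) = 0 \ \forall \theta \quad \text{(since } P_\theta \ll \mu\text{)}.
\end{aligned}$$

If $P_\theta(A) > 0$ for some $\theta$ then, since $P_\theta(A) = P_\theta(A \cap C) + P_\theta(A \cap C^c)$,

$$P_\theta(A \cap C^c) = P_\theta(A \cap C^c \cap S_\theta) > 0$$

$\Rightarrow A \cap C^c \cap S_\theta$ is a kernel disjoint from $C$

$\Rightarrow C \cup (A \cap C^c \cap S_\theta)$ is a chain with $\mu$-measure $> \alpha$ (since $P_\theta(B) > 0 \Rightarrow \mu(B) > 0$),

contradicting the definition of $\alpha$. Hence $P_\theta(A) = 0$ for all $\theta$.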

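Theorem 1.2.7. (The Factorization Theorem) Let $\mu$ be a $\sigma$-finite measure which dominates $\mathcal{P} = \{P_\theta : \theta \in \Theta\}$ and let

$$p_\theta = \frac{dP_\theta}{d\mu}.$$

Then the statistic $T$ is sufficient for $\mathcal{P}$ if and only if there exists a non-negative $\mathcal{F}$-measurable function $g_\theta : \mathcal{T} \to \mathbb{R}$ and an $\mathcal{A}$-measurable function $h : \mathcal{X} \to \mathbb{R}$ such that

$$(1.2.2)\qquad p_\theta(x) = g_\theta(T(x))\,h(x) \quad \text{a.e. } \mu.$$

Proof. By Theorem 1.2.5, $\mathcal{P}$ is equivalent to

$$\lambda = \sum_i c_i P_{\theta_i}, \quad \text{where } c_i \ge 0,\ \sum_i c_i = 1.$$

If $T$ is sufficient for $\mathcal{P}$,

$$p_\theta(x) = \frac{dP_\theta}{d\mu}(x) = \frac{dP_\theta}{d\lambda}(x)\,\frac{d\lambda}{d\mu}(x) = g_\theta(T(x))\,h(x)$$

by Theorem 1.2.4.

On the other hand, if equation (1.2.2) holds,

$$(1.2.3)\qquad d\lambda(x) = \sum_i c_i\,dP_{\theta_i}(x) = \sum_i c_i\,p_{\theta_i}(x)\,d\mu(x) = \sum_i c_i\,g_{\theta_i}(T(x))\,h(x)\,d\mu(x) = K(T(x))\,h(x)\,d\mu(x).$$

Thus,

$$\begin{aligned}
dP_\theta(x) &= p_\theta(x)\,d\mu(x) && \text{by the definition of } p_\theta(x) \\
&= \frac{g_\theta(T(x))\,h(x)}{K(T(x))\,h(x)}\,d\lambda(x) && \text{by equations (1.2.2) and (1.2.3)} \\
&= \tilde{g}_\theta(T(x))\,d\lambda(x),
\end{aligned}$$

where $\tilde{g}_\theta(T(x)) := 0$ if $K(T(x)) = 0$.

Hence $T$ is sufficient for $\mathcal{P}$ by Theorem 1.2.4.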

Remark 1.2.8. If $f_\theta(x)$ is the density of $X$ with respect to Lebesgue measure, then $T$ is sufficient for $\mathcal{P}$ iff

$$f_\theta(x) = g_\theta(T(x))\,h(x)$$

where $h$ is independent of $\theta$.

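Example 1.2.9. Let $X_1, X_2, \dots, X_n$ be iid $N(\mu, \sigma^2)$, $\mu \in \mathbb{R}$, $\sigma > 0$, and write $X = (X_1, X_2, \dots, X_n)$. A $\sigma$-finite dominating measure on $\mathcal{B}^n$ is Lebesgue measure, with

$$p_{\mu,\sigma^2}(x) = \frac{1}{(\sigma\sqrt{2\pi})^n} \exp\left(-\frac{1}{2\sigma^2}\sum x_i^2 + \frac{\mu}{\sigma^2}\sum x_i - \frac{n\mu^2}{2\sigma^2}\right) = g_{\mu,\sigma^2}\left(\sum x_i, \sum x_i^2\right) \cdot 1.$$

Therefore $T(X) = (\sum X_i, \sum X_i^2)$ is sufficient for $\mathcal{P} = \{P_{\mu,\sigma^2}\}$.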
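The factorization in Example 1.2.9 can be checked numerically. The following sketch, with hypothetical data and parameter values chosen only for illustration, evaluates the normal log-likelihood once from the full sample and once from $(\sum x_i, \sum x_i^2)$ alone, and also compares two different samples that share the same value of $T$.

```python
import math

def loglik_full(x, mu, sigma2):
    """Log-likelihood of iid N(mu, sigma2) computed from the whole sample."""
    n = len(x)
    return (-0.5 * n * math.log(2 * math.pi * sigma2)
            - sum((xi - mu) ** 2 for xi in x) / (2 * sigma2))

def loglik_from_T(n, s1, s2, mu, sigma2):
    """Same log-likelihood computed only from s1 = sum x_i and s2 = sum x_i^2."""
    return (-0.5 * n * math.log(2 * math.pi * sigma2)
            - s2 / (2 * sigma2) + mu * s1 / sigma2 - n * mu ** 2 / (2 * sigma2))

# Two different samples (illustrative values) with the same sum and sum of squares.
a = 1.0 / math.sqrt(3.0)
x = [1.0, 2.0, 3.0]
y = [2.0 + a, 2.0 + a, 2.0 - 2.0 * a]

params = [(m, v) for m in (-1.0, 0.0, 2.0, 3.0) for v in (0.5, 1.0, 4.0)]

# Gap between the full-data and the T-only evaluations.
factor_gap = max(
    abs(loglik_full(x, m, v) - loglik_from_T(len(x), sum(x), sum(t * t for t in x), m, v))
    for m, v in params
)

# Gap between the likelihoods of x and y, which share the same T.
same_T_gap = max(abs(loglik_full(x, m, v) - loglik_full(y, m, v)) for m, v in params)

print(factor_gap, same_T_gap)
```

Both gaps are zero up to floating-point rounding, consistent with the likelihood depending on the data only through $T$ (here $h(x) = 1$).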

Remark 1.2.10. $T^*(X) = (\bar{X}, S^2)$ is also sufficient for $\mathcal{P} = \{P_{\mu,\sigma^2}\}$, since

$$g_{\mu,\sigma^2}\left(\sum x_i, \sum x_i^2\right) = g^*_{\mu,\sigma^2}(\bar{x}, S^2).$$

$T$ and $T^*$ are equivalent in the following sense.

Definition 1.2.11. Two statistics $T$ and $S$ are equivalent if they induce the same $\sigma$-algebra up to $\mathcal{P}$-null sets, i.e. if there exists a $\mathcal{P}$-null set $N$ and functions $f$ and $g$ such that

$$T(x) = f(S(x)) \ \text{ and } \ S(x) = g(T(x)) \quad \text{for all } x \in N^c.$$

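Example 1.2.12. Let $X_1, \dots, X_n$ be iid $U(0, \theta)$, $\theta > 0$, and $X = (X_1, \dots, X_n)$.

$$\begin{aligned}
p_\theta(x) &= \prod_{i=1}^{n} \frac{1}{\theta}\,I_{[0,\infty)}(x_i)\,I_{(-\infty,\theta]}(x_i) \\
&= \frac{1}{\theta^n}\,I_{[0,\infty)}(x_{(1)})\,I_{(-\infty,\theta]}(x_{(n)}) \\
&= g_\theta(x_{(n)})\,h(x)
\end{aligned}$$

$\Rightarrow T(X) = X_{(n)}$ is sufficient for $\theta$.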

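Example 1.2.13. $X_1, \dots, X_n$ iid $N(0, \sigma^2)$, $\Theta = \{\sigma^2 : \sigma^2 > 0\}$. Define

$$\begin{aligned}
T_1(X) &= (X_1, \dots, X_n) \\
T_2(X) &= (X_1^2, \dots, X_n^2) \\
T_3(X) &= (X_1^2 + \cdots + X_m^2,\ X_{m+1}^2 + \cdots + X_n^2) \\
T_4(X) &= X_1^2 + \cdots + X_n^2
\end{aligned}$$

$$p_{\sigma^2}(x) = \frac{1}{(\sigma\sqrt{2\pi})^n} \exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^{n} x_i^2\right).$$

Each $T_i(X)$ is sufficient. However $\sigma(T_4) \subset \sigma(T_3) \subset \sigma(T_2) \subset \sigma(T_1)$ (since functions of $T_4$ are functions of $T_3$, functions of $T_3$ are functions of $T_2$, and functions of $T_2$ are functions of $T_1$).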

Remark 1.2.14. If $T$ is sufficient for $\theta$ and $T = H(S)$ where $S$ is some statistic, then $S$ is also sufficient since

$$p_\theta(x) = g_\theta(T(x))\,h(x) = g_\theta(H(S(x)))\,h(x).$$

Since $\sigma(T) = S^{-1}H^{-1}\mathcal{B}_T \subseteq S^{-1}\mathcal{B}_S = \sigma(S)$, where

$$(\mathcal{X}, \mathcal{A}) \xrightarrow{\;S\;} (\mathcal{S}, \mathcal{B}_S) \xrightarrow{\;H\;} (\mathcal{T}, \mathcal{B}_T),$$

$T$ provides a greater reduction of the data than $S$, strictly greater unless $H$ is one to one, in which case $S$ and $T$ are equivalent.

Definition 1.2.15. $T$ is a minimal sufficient statistic if, for any sufficient statistic $S$, there exists a measurable function $H$ such that

$$T = H(S) \quad \text{a.s. } \mathcal{P}.$$

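Theorem 1.2.16. If $\mathcal{P}$ is dominated by a $\sigma$-finite measure $\mu$, then the statistic $U$ is sufficient iff for every fixed $\theta$ and $\theta_0$, the ratio of the densities $p_\theta$ and $p_{\theta_0}$ with respect to $\mu$, defined to be 1 when both densities are zero, satisfies

$$\frac{p_\theta(x)}{p_{\theta_0}(x)} = f_{\theta,\theta_0}(U(x)) \quad \text{a.s. } \mathcal{P}, \ \text{for some measurable } f_{\theta,\theta_0}.$$

Proof. HW problem.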

Theorem 1.2.17. Let $\mathcal{P}$ be a finite family with densities $\{p_0, p_1, \dots, p_k\}$, all having the same support (i.e. $S = \{x : p_i(x) > 0\}$ is independent of $i$). Then

$$T(x) = \left(\frac{p_1(x)}{p_0(x)}, \frac{p_2(x)}{p_0(x)}, \dots, \frac{p_k(x)}{p_0(x)}\right)$$

is minimal sufficient. (Also true for a countable collection of densities with no change in the proof.)

Proof. First, $T$ is sufficient by Theorem 1.2.16 since $\frac{p_i(x)}{p_j(x)}$ is a function of $T(x)$ for all $i$ and $j$ (need common support here). If $U$ is a sufficient statistic then by Theorem 1.2.16,

$\frac{p_i(x)}{p_0(x)}$ is a function of $U$ for each $i$

$\Rightarrow T$ is a function of $U$

$\Rightarrow T$ is minimal sufficient.

Remark 1.2.18. Theorem 1.2.17 extends to uncountable collections under further conditions.

Theorem 1.2.19. Let $\mathcal{P}$ be a family with common support and suppose $\mathcal{P}_0 \subset \mathcal{P}$. If $T$ is minimal sufficient for $\mathcal{P}_0$ and sufficient for $\mathcal{P}$, then $T$ is minimal sufficient for $\mathcal{P}$.

Proof.

$U$ is sufficient for $\mathcal{P} \Rightarrow U$ is sufficient for $\mathcal{P}_0$ by Definition 1.2.1.

$T$ is minimal sufficient for $\mathcal{P}_0 \Rightarrow T(x) = H(U(x))$ a.s. $\mathcal{P}_0$.

But since $\mathcal{P}$ has common support, $T(x) = H(U(x))$ a.s. $\mathcal{P}$.

Remark 1.2.20.

1. Minimal sufficient statistics for uncountable families $\mathcal{P}$ can often be obtained by combining the above theorems.

2. Minimal sufficient statistics exist under weak assumptions (but not always). In particular they exist if $(\mathcal{X}, \mathcal{A}) = (\mathbb{R}^n, \mathcal{B}^n)$ and $\mathcal{P}$ is dominated by a $\sigma$-finite measure.

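Example 1.2.21. $\mathcal{P}_0$: $(X_1, \dots, X_n)$ iid $N(\theta, 1)$, $\theta \in \{\theta_0, \theta_1\}$.

$\mathcal{P}$: $(X_1, \dots, X_n)$ iid $N(\theta, 1)$, $\theta \in \mathbb{R}$.

$$\begin{aligned}
\frac{p_{\theta_1}(x)}{p_{\theta_0}(x)} &= \exp\left\{-\frac{1}{2}\left[\sum (x_i - \theta_1)^2 - \sum (x_i - \theta_0)^2\right]\right\} \\
&= \exp\left\{-\frac{1}{2}\left[\sum 2 x_i (\theta_0 - \theta_1) + n\theta_1^2 - n\theta_0^2\right]\right\}.
\end{aligned}$$

This is a function of $\bar{x}$, hence $\bar{X}$ is minimal sufficient for $\mathcal{P}_0$ by Theorem 1.2.17. Since $\bar{X}$ is sufficient for $\mathcal{P}$ (by the factorization theorem), $\bar{X}$ is minimal sufficient for $\mathcal{P}$ by Theorem 1.2.19.

Example 1.2.22. $\mathcal{P}$: $(X_1, \dots, X_n)$ iid $U(0, \theta)$, $\theta > 0$.

Show that $X_{(n)}$ is minimal sufficient. (This is part of problem 1.6.16, for which you will need to use problem 1.6.11.)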

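Example 1.2.23. Logistic

$\mathcal{P}$: $(X_1, \dots, X_n)$ iid $L(\theta, 1)$, $\theta \in \mathbb{R}$.

$\mathcal{P}_0$: $(X_1, \dots, X_n)$ iid $L(\theta, 1)$, $\theta \in \{0, \theta_1, \dots, \theta_n\}$.

$$p_\theta(x) = \frac{\exp\left[-\sum (x_i - \theta)\right]}{\prod_{i=1}^{n} \{1 + \exp[-(x_i - \theta)]\}^2}$$

so $T = (T_1(X), \dots, T_n(X))$ is minimal sufficient for $\mathcal{P}_0$, where

$$T_i(x) = \frac{p_{\theta_i}(x)}{p_0(x)} = e^{n\theta_i} \prod_{j=1}^{n} \frac{(1 + e^{-x_j})^2}{(1 + e^{-(x_j - \theta_i)})^2}.$$

We will show that $T(X)$ is equivalent to $(X_{(1)}, \dots, X_{(n)})$, by showing that

$$T(x) = T(y) \iff x_{(1)} = y_{(1)}, \dots, x_{(n)} = y_{(n)}.$$

Proof. ($\Leftarrow$) Obvious from the expression for $T_i(x)$.

($\Rightarrow$) Suppose that $T_i(x) = T_i(y)$ for $i = 1, 2, \dots, n$, i.e.

$$\prod_{j=1}^{n} \frac{(1 + e^{-x_j})^2}{(1 + e^{-(x_j - \theta_i)})^2} = \prod_{j=1}^{n} \frac{(1 + e^{-y_j})^2}{(1 + e^{-(y_j - \theta_i)})^2}, \quad i = 1, \dots, n,$$

i.e.

$$\prod_{j=1}^{n} \frac{1 + u_j}{1 + u_j \omega} = \prod_{j=1}^{n} \frac{1 + v_j}{1 + v_j \omega}, \quad \omega = \omega_1, \dots, \omega_n,$$

where $u_j = e^{-x_j}$, $v_j = e^{-y_j}$ and $\omega_i = e^{\theta_i}$. After cross-multiplying, we have two polynomials in $\omega$ of degree $n$ which are equal for $n + 1$ distinct values, $1, \omega_1, \dots, \omega_n$, of $\omega$ and hence for all $\omega$.

$$\omega = 0 \Rightarrow \prod_{j=1}^{n} (1 + u_j) = \prod_{j=1}^{n} (1 + v_j)$$

$$\Rightarrow \prod_{j=1}^{n} (1 + u_j \omega) = \prod_{j=1}^{n} (1 + v_j \omega) \quad \forall \omega$$

$\Rightarrow$ the zero sets of both these polynomials are the same

$\Rightarrow x$ and $y$ have the same order statistics.

By Theorem 1.2.17, the order statistics are therefore minimal sufficient for $\mathcal{P}_0$. They are also sufficient for $\mathcal{P}$, so by Theorem 1.2.19, the order statistics are minimal sufficient for $\mathcal{P}$. There is not much reduction possible here! This is fairly typical of location families, the normal, uniform and exponential distributions providing happy exceptions.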

Ancillarity

Definition 1.2.24. A statistic $V$ is said to be ancillary for $\mathcal{P}$ if the distribution, $P_\theta^V$, of $V$ does not depend on $\theta$. It is called first order ancillary if $E_\theta V$ is independent of $\theta$.

Example 1.2.25. In Example 1.2.23, $X_{(2)} - X_{(1)}$ is ancillary since $Y_1 = X_1 - \theta, \dots, Y_n = X_n - \theta$ are iid $P_0$, and $X_{(2)} - X_{(1)} = Y_{(2)} - Y_{(1)}$.

Example 1.2.26.

$\mathcal{P}$: $(X_1, \dots, X_n)$ iid $N(\theta, 1)$, $\theta \in \mathbb{R}$.

$$S^2 = \sum (X_i - \bar{X})^2 \ \text{ is ancillary}$$

since

$$S^2 = \sum (Y_i - \bar{Y})^2 \ \text{ where } Y_i = X_i - \theta,\ i = 1, 2, \dots, n, \ \text{ are iid } N(0, 1).$$

Remark 1.2.27. Ancillary statistics by themselves contain no information about $\theta$; however, minimal sufficient statistics may contain ancillary components. For example, in 1.2.23, $T = (X_{(1)}, \dots, X_{(n)})$ is equivalent to $T^* = (X_{(1)}, X_{(2)} - X_{(1)}, \dots, X_{(n)} - X_{(1)})$, whose last $(n - 1)$ components are ancillary. You can't drop them as $X_{(1)}$ is not even sufficient.

Complete Statistic

A sufficient statistic should bring about the best reduction of the data if it contains as little ancillary material as possible. This suggests requiring that no non-constant function of $T$ be ancillary, or not even first order ancillary, i.e. that

$$E_\theta f(T) = c \ \text{ for all } \theta \in \Theta \Rightarrow f(T) = c \ \text{ a.s. } \mathcal{P}$$

or equivalently that

$$E_\theta f(T) = 0 \ \text{ for all } \theta \in \Theta \Rightarrow f(T) = 0 \ \text{ a.s. } \mathcal{P}.$$

Definition 1.2.28. A statistic $T$ is complete if

$$(1.2.4)\qquad E_\theta f(T) = 0 \ \text{ for all } \theta \in \Theta \Rightarrow f(T) = 0 \ \text{ a.s. } \mathcal{P}.$$

$T$ is said to be boundedly complete if equation (1.2.4) holds for all bounded measurable functions $f$.

Since complete sufficient statistics are intended to give a good reduction of the data, it is not unreasonable to expect them to be minimal. We shall prove a slightly weaker result.

Theorem 1.2.29. Let $U$ be a complete sufficient statistic. If there exists a minimal sufficient statistic, then $U$ is minimal sufficient.

Proof. Let $T$ be a minimal sufficient statistic and let $\psi$ be a bounded measurable function. We will show that

$$\psi(U) \in \sigma(T), \ \text{ i.e. } \ E(\psi(U) \mid T) = \psi(U) \ \text{ a.s.}$$

Now

$$E(\psi(U) \mid T) = g(U) \ \text{ for some measurable } g, \text{ since } T \text{ is minimal and } U \text{ is sufficient.}$$

Let $h(U) = E(\psi(U) \mid T) - \psi(U)$; then $E_\theta h(U) = 0$ for all $\theta$, so $h(U) = 0$ a.s. $\mathcal{P}$ since $U$ is complete. Hence $\psi(U) = E(\psi(U) \mid T) \in \sigma(T)$. Hence $U$-measurable indicator functions are $T$-measurable, i.e. $\sigma(U) \subseteq \sigma(T)$, i.e. $U$ is minimal sufficient.

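Remark 1.2.30.

1. If $\mathcal{P}$ is dominated by a $\sigma$-finite measure and $(\mathcal{X}, \mathcal{A}) = (\mathbb{R}^n, \mathcal{B}^n)$, the existence of a minimal sufficient statistic does not need to be assumed.

2. A minimal sufficient statistic is not necessarily complete. See the next example.

Example 1.2.31.

$$\mathcal{P} = \{N(\sigma, \sigma^2),\ \sigma > 0\}$$

$$p_\sigma(x) = \frac{1}{\sigma\sqrt{2\pi}}\,e^{-\frac{1}{2}\frac{(x - \sigma)^2}{\sigma^2}} = \frac{1}{\sigma\sqrt{2\pi}}\,e^{-\frac{1}{2}\left(\frac{x}{\sigma} - 1\right)^2}$$

The single observation $X$ is minimal sufficient but not complete since

$$E_\sigma[I_{(0,\infty)}(X) - \Phi(1)] = P_\sigma(X > 0) - \Phi(1) = 0 \quad \forall \sigma,$$

however $P_\sigma(I_{(0,\infty)}(X) - \Phi(1) = 0) = 0$ for all $\sigma$.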
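The identity $P_\sigma(X > 0) = \Phi(1)$ used in Example 1.2.31 follows by standardizing: $P_\sigma(X > 0) = P(Z > -1) = \Phi(1)$. A minimal numerical sketch, assuming an arbitrary illustrative grid of $\sigma$ values and computing $\Phi$ from the standard library error function:

```python
import math

def Phi(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def prob_positive(sigma):
    """P(X > 0) for X ~ N(sigma, sigma^2), by standardizing X."""
    return 1.0 - Phi((0.0 - sigma) / sigma)

# Illustrative values of sigma (an assumption, any positive values work).
sigmas = [0.1, 0.5, 1.0, 3.0, 10.0]

# E_sigma[ I(X > 0) - Phi(1) ] for each sigma; every entry should vanish.
expectations = [prob_positive(s) - Phi(1.0) for s in sigmas]

print(Phi(1.0), expectations)
```

The function $f(x) = I_{(0,\infty)}(x) - \Phi(1)$ has expectation zero for every $\sigma$ yet only takes the two non-zero values $1 - \Phi(1)$ and $-\Phi(1)$, which is exactly the failure of completeness.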

Theorem 1.2.32. (Basu's theorem) If $T$ is complete and sufficient for $\mathcal{P}$, then any ancillary statistic is independent of $T$.

Proof. If $S$ is ancillary, then $P_\theta(S \in B) = p_B$, independent of $\theta$.

Sufficiency of $T \Rightarrow P_\theta(S \in B \mid T) = h(T)$, independent of $\theta$

$\Rightarrow E_\theta(h(T) - p_B) = 0$ for all $\theta$

$\Rightarrow h(T) = p_B$ a.s. $\mathcal{P}$ by completeness

$\Rightarrow S$ is independent of $T$.

1.3. Exponential Families.

Definition 1.3.1. A family of probability measures $\{P_\theta : \theta \in \Theta\}$ is said to be an $s$-parameter exponential family if there exists a $\sigma$-finite measure $\mu$ such that

$$p_\theta(x) = \frac{dP_\theta}{d\mu}(x) = \exp\left(\sum_{i=1}^{s} \eta_i(\theta)\,T_i(x) - B(\theta)\right) h(x),$$

where $\eta_i$, $T_i$ and $B$ are real-valued.

Remark 1.3.2.

1. The $P_\theta$, $\theta \in \Theta$, are equivalent (since $\{x : p_\theta(x) > 0\}$ is independent of $\theta$).

2. The factorization theorem implies that $T = (T_1, \dots, T_s)$ is sufficient.

3. If we observe $X_1, \dots, X_n$, iid with marginal distributions $P_\theta$, then $\sum_{j=1}^{n} T(X_j)$ is sufficient for $\theta$.

Theorem 1.3.3. If $\{1, \eta_1, \dots, \eta_s\}$ is LI, then $T = (T_1, \dots, T_s)$ is minimal sufficient.

(Linear independence of $\{1, \eta_1, \dots, \eta_s\}$ means $c_1\eta_1(\theta) + \cdots + c_s\eta_s(\theta) + d = 0 \ \forall \theta \Rightarrow c_1 = \cdots = c_s = d = 0$. Equivalently we can say that $\{\eta_i\}$ is affinely independent, or AI, since the set of points $\{(\eta_1(\theta), \dots, \eta_s(\theta)),\ \theta \in \Theta\}$ then does not lie in any proper affine subspace of $\mathbb{R}^s$.)

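Proof. Fix $\theta_0 \in \Theta$ and consider

$$(1.3.1)\qquad \frac{dP_\theta}{dP_{\theta_0}}(x) = \frac{p_\theta(x)}{p_{\theta_0}(x)} = \exp\{B(\theta_0) - B(\theta)\}\,\exp\left\{\sum_{i=1}^{s} (\eta_i(\theta) - \eta_i(\theta_0))\,T_i(x)\right\}.$$

If $\{1, \eta_1, \dots, \eta_s\}$ is LI then so is $\{1, \eta_1 - \eta_1(\theta_0), \dots, \eta_s - \eta_s(\theta_0)\}$.

Set $S = \{(\eta_1(\theta) - \eta_1(\theta_0), \dots, \eta_s(\theta) - \eta_s(\theta_0)),\ \theta \in \Theta\} \subseteq \mathbb{R}^s$. Then $\mathrm{span}(S)$ is a linear subspace of $\mathbb{R}^s$.

If $\dim(\mathrm{span}(S)) < s$, then there exists a non-zero vector $v = (v_1, \dots, v_s)$ s.t.

$$v_1(\eta_1(\theta) - \eta_1(\theta_0)) + \cdots + v_s(\eta_s(\theta) - \eta_s(\theta_0)) = 0 \quad \forall \theta,$$

contradicting the linear independence of $\{1, \eta_i - \eta_i(\theta_0)\}$. Hence $\dim(\mathrm{span}(S)) = s$, i.e. there exist $\theta_1, \dots, \theta_s \in \Theta$ s.t.

$$(1.3.2)\qquad \{(\eta_1(\theta_i) - \eta_1(\theta_0), \dots, \eta_s(\theta_i) - \eta_s(\theta_0)),\ i = 1, \dots, s\} \ \text{ is LI.}$$

From (1.3.1),

$$\sum_{j=1}^{s} (\eta_j(\theta_i) - \eta_j(\theta_0))\,T_j(x) = \ln\frac{p_{\theta_i}(x)}{p_{\theta_0}(x)} + (B(\theta_i) - B(\theta_0)), \quad i = 1, \dots, s.$$

Since the matrix $[\eta_j(\theta_i) - \eta_j(\theta_0)]_{i,j=1}^{s}$ is non-singular, $T_j(x)$ can be expressed uniquely in terms of $\ln\frac{p_{\theta_i}(x)}{p_{\theta_0}(x)}$, $i = 1, \dots, s$.

But $\left(\frac{p_{\theta_i}(x)}{p_{\theta_0}(x)},\ i = 1, \dots, s\right)$ is minimal sufficient for $\mathcal{P}_0 = \{P_{\theta_j},\ j = 0, 1, \dots, s\}$ by Theorem 1.2.17. Hence $T$ is minimal sufficient by Theorem 1.2.19.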

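Example 1.3.4.

$$p_\theta(x) = \sqrt{\frac{\theta}{2\pi}}\,\exp\left\{-\frac{1}{2}\theta x^2 + \theta x - \frac{\theta}{2}\right\}.$$

$\eta_1(\theta) = -\frac{1}{2}\theta$, $\eta_2(\theta) = \theta$, and $T(x) = (x^2, x)$ is sufficient but not minimal, since rewriting the model as

$$p_\theta(x) = \sqrt{\frac{\theta}{2\pi}}\,\exp\left\{-\frac{1}{2}\theta (x - 1)^2\right\}$$

we see that $T(x) = (x - 1)^2$ is minimal sufficient.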

Remark 1.3.5. The exponential family can always be rewritten in such a way that the functions $\{T_i\}$ and $\{\eta_i\}$ are AI. If there exist constants $c_1, \dots, c_s, d$, not all zero, such that

$$c_1 T_1(x) + \cdots + c_s T_s(x) = d \quad \text{a.s. } \mathcal{P},$$

then one of the $T_i$'s can be expressed in terms of the others (or is constant). After reducing the number of functions $T_i$ as far as possible, the same can be done with their coefficients until the new functions $\{T_i\}$ and $\{\eta_i\}$ are AI.

Definition 1.3.6. (Order of the exponential family.) If the functions $\{T_i,\ i = 1, \dots, s\}$ on $\mathcal{X}$ and $\{\eta_i,\ i = 1, \dots, s\}$ on $\Theta$ are both AI, then $s$ is the order of the exponential family

$$p_\theta(x) = \frac{dP_\theta}{d\mu}(x) = \exp\left(\sum_{i=1}^{s} \eta_i(\theta)\,T_i(x) - B(\theta)\right) h(x).$$

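Proposition 1.3.7. The order is well-defined.

Proof. We shall show that

$$s + 1 = \dim(V)$$

where $V$ is the set of functions on $\mathcal{X}$ defined by $V = \mathrm{span}\{1, \ln\frac{dP_\theta}{dP_{\theta_0}}(\cdot),\ \theta \in \Theta\}$ (independent of the dominating measure and the choice of $\{\eta_i\}$, $\{T_i\}$).

$$\ln\frac{dP_\theta}{dP_{\theta_0}}(x) = \sum_{i=1}^{s} (\eta_i(\theta) - \eta_i(\theta_0))\,T_i(x) + B(\theta_0) - B(\theta)$$

so that

$$V \subseteq \mathrm{span}\{1, T_i(\cdot),\ i = 1, \dots, s\} \Rightarrow \dim(V) \le s + 1.$$

On the other hand, since $\{1, \eta_i,\ i = 1, \dots, s\}$ is LI, each $T_j(x)$ can be expressed as a linear combination of $1$ and $\ln\frac{dP_{\theta_i}}{dP_{\theta_0}}(x)$, $i = 1, \dots, s$, as in the proof of the previous theorem,

$$\Rightarrow \mathrm{span}\{1, T_i(\cdot),\ i = 1, \dots, s\} \subseteq V$$

$$\Rightarrow s + 1 \le \dim(V),$$

where the last step uses that $\{1, T_1, \dots, T_s\}$ is LI, so that its span has dimension $s + 1$.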

Definition 1.3.8. (Canonical Form) For any $s$-parameter exponential family (not necessarily of order $s$) we can view the vector $\eta(\theta) = (\eta_1(\theta), \dots, \eta_s(\theta))'$ as the parameter rather than $\theta$. Then the density with respect to $\mu$ can be rewritten as

$$p(x, \eta) = \exp\left[\sum_{i=1}^{s} \eta_i T_i(x) - A(\eta)\right] h(x), \quad \eta \in \eta(\Theta).$$

Since $p(\cdot, \eta)$ is a probability density with respect to $\mu$,

$$(1.3.3)\qquad e^{A(\eta)} = \int e^{\sum_{1}^{s} \eta_i T_i(x)}\,h(x)\,d\mu(x).$$

Definition 1.3.9. (The Natural Parameter Set) This is a possibly larger set than $\{\eta(\theta),\ \theta \in \Theta\}$. It is the set of all $s$-vectors $\eta$ for which, by suitable choice of $A(\eta)$, $p(\cdot, \eta)$ can be a probability density, i.e.

$$N = \left\{\eta = (\eta_1, \dots, \eta_s) \in \mathbb{R}^s : \int e^{\sum_{1}^{s} \eta_i T_i(x)}\,h(x)\,d\mu(x) < \infty\right\}.$$

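Theorem 1.3.10. $N$ is convex.

Proof. Suppose $\eta = (\eta_1, \dots, \eta_s)$ and $\zeta = (\zeta_1, \dots, \zeta_s) \in N$, and let $0 \le p \le 1$. Then, by convexity of the exponential function,

$$\int e^{p\sum_{1}^{s} \eta_i T_i(x) + (1 - p)\sum_{1}^{s} \zeta_i T_i(x)}\,h(x)\,d\mu(x) \le p\int e^{\sum \eta_i T_i(x)}\,h(x)\,d\mu(x) + (1 - p)\int e^{\sum \zeta_i T_i(x)}\,h(x)\,d\mu(x) < \infty.$$

Theorem 1.3.11. $T = (T_1, \dots, T_s)$ has density

$$p_\eta(t) = \exp(\eta \cdot t - A(\eta))$$

relative to $\nu = \tilde{\mu} \circ T^{-1}$, where $d\tilde{\mu}(x) = h(x)\,d\mu(x)$.

Proof. If $f : \mathcal{T} \to \mathbb{R}$ is a bounded measurable function,

$$E_\eta f(T) = \int f(T(x))\,e^{\eta \cdot T(x)}\,e^{-A(\eta)}\,d\tilde{\mu}(x) = \int f(t)\,e^{\eta \cdot t}\,e^{-A(\eta)}\,d(\tilde{\mu} \circ T^{-1})(t).$$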

Definition 1.3.12. The family of densities

$$p_\eta(t) = \exp(\eta \cdot t - A(\eta)), \quad \eta \in \eta(\Theta),$$

is called an $s$-dimensional or $s$-parameter standard exponential family. (Defined on $\mathbb{R}^s$, not $\mathcal{X}$.)

Theorem 1.3.13. Let $\{p_\theta(x)\}$ be the $s$-parameter exponential family,

$$p_\theta(x) = \exp\left(\sum_{i=1}^{s} \eta_i(\theta)\,T_i(x) - B(\theta)\right) h(x), \quad \theta \in \Theta,$$

and suppose

$$(1.3.4)\qquad \int \varphi(x)\,e^{\sum_{1}^{s} \eta_j T_j(x)}\,d\mu(x)$$

exists and is finite for some $\varphi$ and all $\eta_j = a_j + i b_j$ such that $a \in N$ (= natural parameter space). Then

(i) $\int \varphi(x)\,e^{\sum_{1}^{s} \eta_j T_j(x)}\,d\mu(x)$ is an analytic function of each $\eta_i$ on $\{\eta : \mathrm{Re}(\eta) \in \mathrm{int}(N)\}$, and

(ii) the derivatives of all orders with respect to the $\eta_i$'s of $\int \varphi(x)\,e^{\sum_{1}^{s} \eta_j T_j(x)}\,d\mu(x)$ can be computed by differentiating under the integral sign.

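Proof. Let $a^0 = (a_1^0, \dots, a_s^0)$ be in $\mathrm{int}(N)$ and let $\eta_1^0 = a_1^0 + i b_1^0$. Then

$$\varphi(x)\,e^{\sum_{2}^{s} \eta_j T_j(x)} = h_1(x) - h_2(x) + i(h_3(x) - h_4(x))$$

where $h_1$ and $h_2$ are the positive and negative parts of the real part and $h_3$ and $h_4$ are the positive and negative parts of the imaginary part. Then $\int \varphi(x)\,e^{\sum_{1}^{s} \eta_j T_j(x)}\,d\mu(x)$ can be expressed as

$$\int e^{\eta_1 T_1(x)}\,d\mu_1(x) - \int e^{\eta_1 T_1(x)}\,d\mu_2(x) + i\int e^{\eta_1 T_1(x)}\,d\mu_3(x) - i\int e^{\eta_1 T_1(x)}\,d\mu_4(x)$$

where $d\mu_i(x) = h_i(x)\,d\mu(x)$, $i = 1, \dots, 4$. Hence it suffices to prove (i) and (ii) for

$$\psi(\eta_1) = \int e^{\eta_1 T_1(x)}\,d\mu(x).$$

Since $a^0 \in \mathrm{int}(N)$, there exists $\delta > 0$ s.t. $\psi(\eta_1)$ exists and is finite for all $\eta_1$ with $|a_1 - a_1^0| < \delta$. Now consider the difference quotient

$$(*)\qquad \frac{\psi(\eta_1) - \psi(\eta_1^0)}{\eta_1 - \eta_1^0} = \int e^{\eta_1^0 T_1(x)}\,\frac{e^{(\eta_1 - \eta_1^0) T_1(x)} - 1}{\eta_1 - \eta_1^0}\,\mu(dx), \quad |\eta_1 - \eta_1^0| < \delta/2.$$

Observe that

$$|e^{zt} - 1| = \left|\sum_{j=1}^{\infty} \frac{(zt)^j}{j!}\right| \le \sum_{j=1}^{\infty} \frac{|zt|^j}{j!} = e^{|zt|} - 1 \le |zt|\,e^{|zt|}$$

$$\Rightarrow \left|\frac{e^{zt} - 1}{z}\right| \le |t|\,e^{|zt|}.$$

The integrand in (*) is therefore bounded in absolute value by $|T_1(x)|\,e^{a_1^0 T_1(x) + \frac{\delta}{2}|T_1(x)|}$, where $a_1^0 = \mathrm{Re}(\eta_1^0)$, and $\int |T_1(x)|\,e^{a_1^0 T_1(x) + \frac{\delta}{2}|T_1(x)|}\,\mu(dx) < \infty$ since

$$|T_1|\,e^{a_1^0 T_1 + \frac{\delta}{2}|T_1|} = \begin{cases} \underbrace{|T_1|\,e^{-\frac{\delta}{4} T_1}}_{\text{bounded}}\ \underbrace{e^{(a_1^0 + \frac{3\delta}{4}) T_1}}_{\text{integrable}} & \text{if } T_1 > 0, \\[2ex] \underbrace{|T_1|\,e^{\frac{\delta}{4} T_1}}_{\text{bounded}}\ \underbrace{e^{(a_1^0 - \frac{3\delta}{4}) T_1}}_{\text{integrable}} & \text{if } T_1 < 0 \end{cases}$$

(independent of $\eta_1$).

Letting $\eta_1 \to \eta_1^0$ in (*) and using the dominated convergence theorem therefore gives

$$(1.3.5)\qquad \psi'(\eta_1^0) = \int T_1(x)\,e^{\eta_1^0 T_1(x)}\,\mu(dx)$$

where the integral exists and is finite for every $\eta_1^0$ which is the first component of some $\eta^0$ for which $\mathrm{Re}(\eta^0) \in \mathrm{int}(N)$.

Applying to (1.3.5) the same argument which we applied to (1.3.4) $\Rightarrow$ existence of all derivatives $\Rightarrow$ (i) and (ii).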

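Theorem 1.3.14. For an exponential family of order $s$ in canonical form and $\eta \in \mathrm{int}(N)$, where $N$ is the natural parameter space,

(i) $E_\eta(T) = \left(\frac{\partial A}{\partial \eta_1}, \dots, \frac{\partial A}{\partial \eta_s}\right)$, and

(ii) $\mathrm{Cov}_\eta(T) = \left[\frac{\partial^2 A}{\partial \eta_i\,\partial \eta_j}\right]_{i,j=1}^{s}$.

Proof. From Theorem 1.3.11,

$$e^{A(\eta)} = \int e^{\eta \cdot t}\,\nu(dt) = \int e^{\eta \cdot T(x)}\,h(x)\,\mu(dx)$$

so

(i) $\frac{\partial A}{\partial \eta_i}\,e^{A(\eta)} = \int T_i(x)\,e^{\eta \cdot T(x)}\,h(x)\,\mu(dx)$, whence $E_\eta T_i = \frac{\partial A}{\partial \eta_i}$.

(ii) $\frac{\partial^2 A}{\partial \eta_i\,\partial \eta_j}\,e^{A(\eta)} + \frac{\partial A}{\partial \eta_i}\frac{\partial A}{\partial \eta_j}\,e^{A(\eta)} = \int T_i(x)\,T_j(x)\,e^{\eta \cdot T(x)}\,h(x)\,\mu(dx)$, i.e. $\frac{\partial^2 A}{\partial \eta_i\,\partial \eta_j} = E(T_i T_j) - E(T_i) E(T_j) = \mathrm{Cov}(T_i, T_j)$.

Higher order moments of $T_1, \dots, T_s$ are frequently required, e.g.

$$\alpha_{r_1 \dots r_s} = E(T_1^{r_1} \cdots T_s^{r_s})$$

$$\mu_{r_1 \dots r_s} = E[(T_1 - E(T_1))^{r_1} \cdots (T_s - E(T_s))^{r_s}]$$

etc. These can often be obtained readily from the MGF:

$$M_T(u_1, \dots, u_s) := E(e^{u_1 T_1 + \cdots + u_s T_s}).$$

If $M_T$ exists in some neighborhood of 0 ($\sum u_i^2 < \delta$), then all the moments $\alpha_{r_1 \dots r_s}$ exist and are the coefficients in the power series expansion

$$M_T(u_1, \dots, u_s) = \sum_{r_1, \dots, r_s = 0}^{\infty} \alpha_{r_1 \dots r_s}\,\frac{u_1^{r_1} \cdots u_s^{r_s}}{r_1! \cdots r_s!}.$$

The cumulant generating function, CGF, is sometimes more convenient for calculations, especially in connection with sums of independent random vectors. The CGF is defined as

$$K_T(u_1, \dots, u_s) := \log M_T(u_1, \dots, u_s).$$

If $M_T$ exists in a neighborhood of 0, then so does $K_T$ and

$$K_T(u_1, \dots, u_s) = \sum_{r_1, \dots, r_s = 0}^{\infty} K_{r_1 \dots r_s}\,\frac{u_1^{r_1} \cdots u_s^{r_s}}{r_1! \cdots r_s!}$$

where the coefficients $K_{r_1 \dots r_s}$ are called the cumulants of $T$.

The moments and cumulants can be found from each other by formal comparison of the two series.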

Theorem 1.3.15. If $X$ has the density

$$p_\eta(x) = \exp\left[\sum_{i=1}^{s} \eta_i T_i(x) - A(\eta)\right] h(x)$$

w.r.t. some $\sigma$-finite measure $\mu$, then for any $\eta \in \mathrm{int}(N)$ the MGF and CGF of $T$ exist in a neighborhood of 0 and

$$K_T(u) = A(\eta + u) - A(\eta)$$

$$M_T(u) = e^{A(\eta + u) - A(\eta)}$$

Proof. Problem 3.4.
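As a sanity check of Theorem 1.3.15, consider the Poisson family, which is not treated in the notes and is used here only as an assumed illustration. In canonical form it has $T(x) = x$, $\eta = \log\lambda$, $A(\eta) = e^\eta$ and $h(x) = 1/x!$ with respect to counting measure. The sketch below compares $e^{A(\eta + u) - A(\eta)}$ with the MGF obtained by summing the series directly.

```python
import math

def A(eta):
    """Log-partition function of the Poisson family in canonical form."""
    return math.exp(eta)

def mgf_by_summation(lam, u, terms=200):
    """E[exp(u T)] computed directly from the density exp(eta x - A(eta)) / x!."""
    eta = math.log(lam)
    return sum(
        math.exp(x * (eta + u) - A(eta) - math.lgamma(x + 1))
        for x in range(terms)
    )

# Illustrative parameter values (assumptions, not from the notes).
lam, u = 2.5, 0.3
eta = math.log(lam)

mgf_series = mgf_by_summation(lam, u)
mgf_formula = math.exp(A(eta + u) - A(eta))   # Theorem 1.3.15

print(mgf_series, mgf_formula)
```

Here $A(\eta + u) - A(\eta) = \lambda(e^u - 1)$, the familiar Poisson CGF, so the two numbers agree up to rounding and series truncation.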

Summary on Exponential Families. The family of probability measures $\{P_\theta\}$ with densities relative to some $\sigma$-finite measure $\mu$,

$$(1.3.6)\qquad p_\theta(x) = \frac{dP_\theta}{d\mu}(x) = \exp\left\{\sum_{1}^{s} \eta_i(\theta)\,T_i(x) - B(\theta)\right\} h(x), \quad \theta \in \Theta,$$

is an $s$-parameter exponential family.

By redefining the functions $T_i(\cdot)$ and $\eta_i(\cdot)$ if necessary, we can always arrange for both sets of functions to be affinely independent. The number of summands in the exponent is then the order of the exponential family.

If $\{1, \eta_1, \dots, \eta_s\}$ and $\{1, T_1, \dots, T_s\}$ are both L.I., then the family is said to be minimal and

$$s = \dim\left(\mathrm{span}\left\{1, \log\frac{dP_\theta}{dP_{\theta_0}}(\cdot),\ \theta \in \Theta\right\}\right) - 1 = \text{order of the exponential family.}$$

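Remark 1.3.16. Since (1.3.6) is by definition a probability density w.r.t. $\mu$ for each $\theta \in \Theta$, we have

$$\int \exp\left\{\sum \eta_i(\theta)\,T_i(x) - B(\theta)\right\} h(x)\,\mu(dx) = 1$$

$$\Rightarrow \exp B(\theta) = \int \exp\left\{\sum \eta_i(\theta)\,T_i(x)\right\} h(x)\,\mu(dx)$$

which shows that the dependence of $B$ on $\theta$ is through $\eta(\theta) = (\eta_1(\theta), \dots, \eta_s(\theta))$ only, i.e. $B(\theta) = A(\eta(\theta))$.

Remark 1.3.17. The previous remark implies that each member of the family (1.3.6) is a member of the family

$$(1.3.7)\qquad \pi_\eta(x) = \exp\left\{\sum_{1}^{s} \eta_i T_i(x) - A(\eta)\right\} h(x), \quad \eta = (\eta_1, \dots, \eta_s) \in \eta(\Theta)$$

(in fact $p_\theta(x) = \pi_{\eta(\theta)}(x)$).

The family of densities $\{\pi_\eta,\ \eta \in \eta(\Theta)\}$ defined by (1.3.7) is the canonical family associated with (1.3.6). It is the same family parameterized by the natural parameter, $\eta$ = vector of coefficients of $T_i(x)$, $i = 1, \dots, s$.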

Remark 1.3.18. Instead of restricting $\eta$ to the set $\eta(\Theta)$, it is natural to extend the family (1.3.7) to allow all $\eta \in \mathbb{R}^s$ for which we can choose a value of $A(\eta)$ to make (1.3.7) a probability density, i.e. for which

$$(1.3.8)\qquad \int \exp\left\{\sum \eta_i T_i(x)\right\} h(x)\,\mu(dx) < \infty.$$

$N = \{\eta \in \mathbb{R}^s : (1.3.8) \text{ holds}\}$ is the natural parameter space of the family (1.3.7).

Remark 1.3.19. $N \supseteq \eta(\Theta)$ since (1.3.7) is by definition a family of probability densities.

Definition 1.3.20. (Full rank family) As with the original parameterization, we can always redefine $\pi_\eta$ to ensure that $\{T_1, \dots, T_s\}$ is A.I. If $\eta(\Theta)$ contains an $s$-dimensional rectangle and $\{T_1(\cdot), \dots, T_s(\cdot)\}$ is A.I., then $T$ is minimal sufficient and we say the family (1.3.7) is of full rank. (A full rank family is clearly minimal.)

Remark 1.3.21. Since $N \supseteq \eta(\Theta)$, full rank $\Rightarrow \mathrm{int}(N) \ne \emptyset$, and this is important in view of the consequence of Theorem 1.3.13 that

$$e^{A(\eta)} = \int \exp\left(\sum_{i=1}^{s} \eta_i T_i(x)\right) h(x)\,\mu(dx)$$

is analytic in each $\eta_i$ on the set of $s$-dimensional complex vectors $\eta$ with $\mathrm{Re}(\eta) \in \mathrm{int}(N)$. (So derivatives of $e^{A(\eta)}$ w.r.t. $\eta_i$, $i = 1, \dots, s$, of all orders can be obtained by differentiation under the integral, yielding explicit expressions for the moments of $T$ for all values of the canonical parameter vector $\eta \in \mathrm{int}(N)$.)

Example 1.3.22. Multinomial X M (0 : : : s n) = (X0 : : : Xs ) where Xi =

number of outcomes of type i in n independent trials where i i = 0 : : : s is the probability

of an outcome of type i on any one trial.

= f : 0 0 s 0 0 + + s = 1g

(1) Probability density with respect to counting measure on Zs++1

Ys

X

n

!

x

x

0

s

p (x) = x ! x ! 0 s I0 n](xi)Ifng ( xi )

0

s

i=0

= expf

s

X

i=0

xi log igh(x)

2 :

This is an (s + 1)-parameter exponential family with Ti(x) = xi i() = log i : The

vectors () 2 , are not conned to a proper ane subspace of Rs , so T is minimal

sucient.

(2) fT0 : : : Tsg is not A.I. since T0 + + Ts = n. Setting T0 (x) = x0 = n ; x1 ; ; xn

gives

s

X

p (x) = h(x) expfn log 0 + xi log i g

0

i=1

Redening () = (log 10 log 0s ), we now have an s-parameter representation in

which fT1 : : : Tsg is A.I., P

since the vectors (x1 xs) x 2 X , are subject only to

the constraints xi 0 and si=1 xi n.

(3) Furthermore the new parameter vectors, () = (log 01 log s0 ), 2 , are not

conned to any proper ane subspace of R s , since for any x 2 R s 9 0 : : : s such

that () = x and so () = R s . Hence T (x) = (x1 : : : xs) is minimal sucient for

P and the order of the family is s.

(4) The canonical representation of the family (2) is

s

X

(x) = expf ixi ; A( )gh(x) 2 () = f(log 1 log s ): 2 g

0

0

1

1.3. EXPONENTIAL FAMILIES.

25

We know from remark 1.3.16 before that B () = A(()) for some function A().

Although it is not necessary, we can verify this directly in this example, since from

the representation (2) we have

B () = ;n log 0

and

0 = 1 ; 1 ; ; s ) 1 = 1 + 1 + + s

0

0

0

1 ( )

= 1 + e + + es ()

) B () = n log(1 + e1 () + + es () )

) A( ) = n log(1 + e1 + + es )

A( ) is of course also determined by

eA()

=

Z

expf

s

X

1

ixi gh(x)d(x)

.

(5) The natural parameter space in this case is N = R s , since we know that N ()

and () = R s by (3) above. Clearly N contains an s-dimensional rectangle and

fT1 : : : Tsg is A.I., hence f (x) 2 Ng is of full rank.

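(6) Moments of $T(X) = (X_1, \ldots, X_s)$. By Theorem 1.3.14, for all $\eta \in \mathbb{R}^s$,

\[ E_\eta T_i = \frac{\partial A}{\partial \eta_i} = \frac{n e^{\eta_i}}{1 + e^{\eta_1} + \cdots + e^{\eta_s}} = \frac{n\,\theta_i/\theta_0}{1 + \theta_1/\theta_0 + \cdots + \theta_s/\theta_0} = n\theta_i \]

and

\[ \operatorname{Cov}(T_i, T_j) = \frac{\partial^2 A}{\partial \eta_i\, \partial \eta_j} = \begin{cases} \dfrac{-n e^{\eta_i} e^{\eta_j}}{(1 + e^{\eta_1} + \cdots + e^{\eta_s})^2} = -n\theta_i\theta_j, & i \ne j, \\[2ex] \dfrac{n e^{\eta_i}}{1 + \cdots + e^{\eta_s}} - \dfrac{n e^{2\eta_i}}{(1 + \cdots + e^{\eta_s})^2} = n\theta_i(1 - \theta_i), & i = j. \end{cases} \]

(Moments exist $\forall \eta \in \operatorname{int}(N) = \mathbb{R}^s$.)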
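The moment formulas in (6) can be checked numerically. The sketch below is only an illustration: the function names, the step sizes and the particular values $n = 10$, $\theta = (0.2, 0.5, 0.3)$ are assumptions made for the example, and the derivatives of $A$ are approximated by finite differences rather than computed exactly.

```python
import math

def log_partition(eta, n):
    """A(eta) = n * log(1 + sum_i exp(eta_i)) from part (4)."""
    return n * math.log(1.0 + sum(math.exp(e) for e in eta))

def grad_A(eta, n, h=1e-5):
    """Central-difference approximation to the vector of dA/d eta_i."""
    grad = []
    for i in range(len(eta)):
        up = list(eta); up[i] += h
        dn = list(eta); dn[i] -= h
        grad.append((log_partition(up, n) - log_partition(dn, n)) / (2 * h))
    return grad

def hess_A(eta, n, h=1e-4):
    """Finite-difference approximation to the matrix of second derivatives of A."""
    s = len(eta)
    H = [[0.0] * s for _ in range(s)]
    for i in range(s):
        for j in range(s):
            def shifted(di, dj):
                e = list(eta)
                e[i] += di
                e[j] += dj
                return log_partition(e, n)
            H[i][j] = (shifted(h, h) - shifted(h, -h)
                       - shifted(-h, h) + shifted(-h, -h)) / (4 * h * h)
    return H

# Illustrative values (assumed): n trials, theta = (theta_0, theta_1, theta_2).
n = 10
theta = [0.2, 0.5, 0.3]
eta = [math.log(t / theta[0]) for t in theta[1:]]   # eta_i = log(theta_i / theta_0)

means = grad_A(eta, n)   # should be close to n * theta_i
cov = hess_A(eta, n)     # should be close to the multinomial covariance matrix
print(means)
print(cov)
```

The gradient should agree with $(n\theta_1, n\theta_2)$ and the Hessian with the entries $n\theta_i(1-\theta_i)$ and $-n\theta_i\theta_j$, up to finite-difference error.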


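Theorem 1.3.23. (Sufficient condition for completeness of $T$) If

\[ \pi_\eta(x) = \exp\Big(\sum_{i=1}^{s} \eta_i T_i(x) - A(\eta)\Big)\, h(x), \qquad \eta \in \eta(\Theta), \]

is a minimal canonical representation of the exponential family $\mathcal{P} = \{p_\theta : \theta \in \Theta\}$ and $\eta(\Theta)$ contains an open subset of $\mathbb{R}^s$, then $T = (T_1, \ldots, T_s)$ is complete for $\mathcal{P}$.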

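Proof. Suppose $E_\eta(f(T)) = 0$ $\forall \eta \in \eta(\Theta)$. Then

\[ E_\eta f^+(T) = E_\eta f^-(T) \quad \forall \eta \in \eta(\Theta). \tag{1.3.9} \]

Choose $\eta_0 \in \operatorname{int}(\eta(\Theta))$ and $r > 0$ such that

\[ N(\eta_0, r) := \{\eta : \|\eta - \eta_0\| < r\} \subset \eta(\Theta). \]

Now define the probability measures

\[ \lambda^+(A) = \frac{\int_A f^+ e^{\eta_0 \cdot t}\, \nu(dt)}{\int_J f^+ e^{\eta_0 \cdot t}\, \nu(dt)}, \qquad \lambda^-(A) = \frac{\int_A f^- e^{\eta_0 \cdot t}\, \nu(dt)}{\int_J f^- e^{\eta_0 \cdot t}\, \nu(dt)}, \qquad \nu = \tilde\mu T^{-1}, \quad d\tilde\mu(x) = h(x)\,\mu(dx), \]

where we have assumed that $\nu(\{t : f(t) \ne 0\}) > 0$, since otherwise $f = 0$ a.s. $P^T$ and we are done.

Observe now that

\[ \int e^{\delta \cdot t}\, \lambda^+(dt) = \int e^{\delta \cdot t}\, \lambda^-(dt) \quad \forall \delta \in \mathbb{R}^s \text{ with } \|\delta\| < r, \tag{1.3.10} \]

since, by (1.3.9),

\[ \text{L.S.} = \frac{\int_J f^+(t)\, e^{(\eta_0 + \delta)\cdot t}\, \nu(dt)}{\int_J f^+(t)\, e^{\eta_0 \cdot t}\, \nu(dt)} = \frac{\int_J f^-(t)\, e^{(\eta_0 + \delta)\cdot t}\, \nu(dt)}{\int_J f^-(t)\, e^{\eta_0 \cdot t}\, \nu(dt)} = \text{R.S.} \]

Now consider each side of (1.3.10) as a function of the complex argument $\delta = \delta_0 + i\tau$, $\tau \in \mathbb{R}^s$. Then $L(\delta) = R(\delta)$ $\forall \delta = \delta_0 + i\tau$ with $\|\delta_0\| < r$, since (by Theorem 1.3.13 (i)) both sides are analytic in each component of $\delta$ on the set where $\operatorname{Re}(\eta_0 + \delta) \in N$ and they are equal when $\delta$ is real. In particular,

\[ L(i\tau) = \int e^{i\tau \cdot t}\, \lambda^+(dt) = R(i\tau) = \int e^{i\tau \cdot t}\, \lambda^-(dt) \]

for all $\tau \in \mathbb{R}^s$. Hence $\lambda^+$ and $\lambda^-$ have the same characteristic function $\Rightarrow \lambda^+ = \lambda^- \Rightarrow f^+ = f^-$ a.s. $\nu$, contradicting $\nu(f \ne 0) > 0$. So $f = 0$ a.s. $\nu$.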

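Example 1.3.24. $X_1, \ldots, X_n$ iid $N(\sigma, \sigma^2)$.

\[ p_\sigma(x) = \frac{1}{(\sqrt{2\pi}\,\sigma)^n} \exp\Big\{-\frac{1}{2\sigma^2}\sum x_i^2 + \frac{1}{\sigma}\sum x_i - \frac{n}{2}\Big\} \]
\[ \eta_1(\sigma) = \frac{1}{2\sigma^2}, \quad T_1(x) = -\sum x_i^2 \]
\[ \eta_2(\sigma) = \frac{1}{\sigma}, \quad T_2(x) = \sum x_i \]

$\eta(\Theta)$ does not contain a 2-dimensional rectangle in $\mathbb{R}^2$. $T(x) = (\sum x_i^2, \sum x_i)$ is not complete since

\[ E_\sigma\Big(\sum X_i^2 - \frac{2}{n+1}\Big(\sum X_i\Big)^2\Big) = n(2\sigma^2) - \frac{2}{n+1}\big(n\sigma^2 + n^2\sigma^2\big) = 0 \]

but there exists no $\mathcal{P}$-null set $N$ such that $\sum x_i^2 - \frac{2}{n+1}(\sum x_i)^2 = 0$ on $N^c$.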

1.4. Convex Loss Function

Lemma 1.4.1. Let $\phi$ be a convex function on $(-\infty, \infty)$ which is bounded below and suppose that $\phi$ is not monotone. Then $\phi$ takes on its minimum value $c$, and $\phi^{-1}(c)$ is a closed interval and is a singleton when $\phi$ is strictly convex.

Proof. Since $\phi$ is convex and not monotone,

\[ \lim_{x \to \pm\infty} \phi(x) = \infty. \]

Since $\phi$ is continuous, $\phi$ attains its minimum value $c$. $\phi^{-1}(\{c\})$ is closed by continuity and an interval by convexity. The interval must have zero length if $\phi$ is strictly convex.

Theorem 1.4.2. Let $\rho$ be a convex function defined on $(-\infty, \infty)$ and $X$ a random variable such that $\phi(a) = E(\rho(X - a))$ is finite for some $a$. If $\rho$ is not monotone, $\phi(a)$ takes on its minimum value, and the set on which this value is attained is closed and is a singleton when $\rho$ is strictly convex.

Proof. By the lemma, we only need to show that $\phi$ is convex and not monotone. Because $\lim_{t \to \pm\infty} \rho(t) = \infty$ and $\lim_{a \to \pm\infty} |x - a| = \infty$,

\[ \lim_{a \to \pm\infty} \phi(a) = \infty \]

so that $\phi$ is not monotone. The convexity comes from

\[ \phi(pa + (1-p)b) = E\,\rho\big(p(X - a) + (1-p)(X - b)\big) \le E\big(p\,\rho(X - a) + (1-p)\,\rho(X - b)\big) = p\,\phi(a) + (1-p)\,\phi(b). \]
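To see the theorem at work, the following sketch minimizes $\phi(a) = E\rho(X - a)$ over a grid when $X$ is uniform on a small, made-up set of points. The data, the grid and the helper names are assumptions chosen for illustration. With $\rho(t) = t^2$ (strictly convex) the minimizer should be a single point, while with $\rho(t) = |t|$ (convex but not strictly so) the minimizers should fill a closed interval.

```python
def phi(a, xs, rho):
    """phi(a) = E rho(X - a) when X is uniform on the points xs."""
    return sum(rho(x - a) for x in xs) / len(xs)

def argmin_on_grid(xs, rho, lo, hi, steps=4000):
    """Return all grid points at which phi is (numerically) minimal."""
    grid = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    values = [phi(a, xs, rho) for a in grid]
    m = min(values)
    return [a for a, v in zip(grid, values) if v <= m + 1e-12]

xs = [0.0, 1.0, 2.0, 7.0]                                 # hypothetical sample points
sq_min = argmin_on_grid(xs, lambda t: t * t, 0.0, 8.0)    # squared-error loss
abs_min = argmin_on_grid(xs, abs, 0.0, 8.0)               # absolute-error loss

print(sq_min)                       # a single grid point, the mean of xs
print(min(abs_min), max(abs_min))   # endpoints of the interval of medians
```

For these four points the squared-error minimizer is the mean, and the absolute-error minimizers are the interval between the two middle observations.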


CHAPTER 2

Unbiasedness

2.1. UMVU estimators.

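Notation. $\mathcal{P} = \{P_\theta, \theta \in \Theta\}$ is a family of probability measures on $\mathcal{A}$ (distributions of $X$).

$T : \mathcal{X} \to \mathbb{R}$ is an $\mathcal{A}/\mathcal{B}$ measurable function and $T$ (or $T(X)$) is called a statistic.

$g : \Theta \to \mathbb{R}$ is a function on $\Theta$ whose value at $\theta$ is to be estimated.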

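\[ (\Omega, \mathcal{F}, P) \xrightarrow{\;X\;} (\mathcal{X}, \mathcal{A}, P_\theta) \xrightarrow{\;T\;} (\mathbb{R}, \mathcal{B}, P_\theta^T) \]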

Definition 2.1.1. A statistic $T$ (or $T(X)$) is called an unbiased estimator of $g(\theta)$ if

\[ E_\theta(T(X)) = g(\theta) \quad \text{for all } \theta \in \Theta. \]

Objectives of point estimation. In order to specify what we mean by a good estimator of $g(\theta)$, we need to specify what we mean when we say that $T(X)$ is close to $g(\theta)$. A fairly general way of defining this is to specify a loss function:

\[ L(\theta, d) = \text{cost of concluding that } g(\theta) = d \text{ when the parameter value is } \theta, \]
\[ L(\theta, d) \ge 0 \quad \text{and} \quad L(\theta, g(\theta)) = 0. \]

Since $T(X)$ is a random variable, we measure the performance of $T(X)$ for estimating $g(\theta)$ in terms of its expected (or long-term average) loss

\[ R(\theta, T) = E_\theta L(\theta, T(X)), \]

known as the risk function.

The choice of a loss function will depend on the problem and the purpose of the estimation. For many estimation problems, the conclusion is not particularly sensitive to the choice of loss function within a reasonable range of alternatives. Because of this, and especially because of its mathematical convenience, we often choose (and will do so in this chapter) the squared-error loss function

\[ L(\theta, d) = (g(\theta) - d)^2 \]

with corresponding risk function

\[ R(\theta, T) = E_\theta (T(X) - g(\theta))^2. \tag{2.1.1} \]

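Ideally we would like to choose $T$ to minimize (2.1.1) uniformly in $\theta$. Unfortunately this is impossible since the estimator $T_{\theta_0}$ defined by

\[ T_{\theta_0}(x) = g(\theta_0) \quad \forall x \in \mathcal{X} \tag{2.1.2} \]

(where $\theta_0$ is some fixed parameter value in $\Theta$) has the risk function

\[ R(\theta, T_{\theta_0}) = \begin{cases} 0 & \text{if } \theta = \theta_0, \\ (g(\theta) - g(\theta_0))^2 & \text{if } \theta \ne \theta_0. \end{cases} \]

An estimator which simultaneously minimized $R(\theta, T)$ for all $\theta \in \Theta$ would necessarily have $R(\theta, T) = 0$ $\forall \theta \in \Theta$, and this is impossible except in trivial cases.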

Why consider the class of unbiased estimators? There is nothing intrinsically good about unbiased estimators. The only criterion for goodness is that $R(\theta, T)$ should be small. The hope is that by restricting attention to a class of estimators which excludes (2.1.2), we may be able to minimize $R(\theta, T)$ uniformly in $\theta$ and that the resulting estimator will give small values of $R(\theta, T)$. This programme is frequently successful if we attempt to minimize $R(\theta, T)$ with $T$ restricted to the class of unbiased estimators of $g(\theta)$.

Definition 2.1.2. $g(\theta)$ is U-estimable if there exists an unbiased estimator of $g(\theta)$.

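Example 2.1.3. $X_1, \ldots, X_n$ iid Bernoulli($p$), $p \in (0, 1)$. $g(p) = p$ is U-estimable, since $E_p \bar{X}_n = p$ $\forall p \in (0, 1)$, while $h(p) = \frac{1}{p}$ is not U-estimable, since if

\[ \sum_x T(x)\, p^{\sum x_i} (1 - p)^{n - \sum x_i} = \frac{1}{p} \quad \forall p \in (0, 1), \]

then $\lim_{p \to 0} \text{RS} = \infty$ and $\lim_{p \to 0} \text{LS} = T(0)$. So $T(0) = \infty$, but this is not possible since then $E_p T(X) = \infty \ne \frac{1}{p}$ $\forall p \in (0, 1)$.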

Remark 2.1.4. $\frac{\sum_{i=1}^n X_i}{n} \xrightarrow{a.s.} p$ and $\frac{n}{\sum_{i=1}^n X_i} \xrightarrow{a.s.} p^{-1}$ $\forall p \in (0, 1)$. Hence $\frac{n}{\sum X_i}$ is a reasonable estimate of $p^{-1}$ even though it is not unbiased.

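Theorem 2.1.5. If $T_0$ is an unbiased estimator of $g(\theta)$ then the totality of unbiased estimators of $g(\theta)$ is given by

\[ \{T_0 - U : E_\theta U = 0 \text{ for all } \theta \in \Theta\}. \]

Proof. If $T$ is unbiased for $g(\theta)$, then $T = T_0 - (T_0 - T)$ where $E_\theta(T_0 - T) = 0$ $\forall \theta \in \Theta$. Conversely if $T = T_0 - U$ where $E_\theta U = 0$ $\forall \theta \in \Theta$, then $E_\theta T = E_\theta T_0 = g(\theta)$ $\forall \theta \in \Theta$.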

Remark 2.1.6. For squared error loss, $L(\theta, d) = (d - g(\theta))^2$, the risk $R(\theta, T)$ is

\[ R(\theta, T) = E_\theta\big((T(X) - g(\theta))^2\big) = \operatorname{Var}_\theta(T(X)) \quad \text{if } T \text{ is unbiased} \]
\[ = \operatorname{Var}_\theta(T_0(X) - U) = E_\theta\big[(T_0(X) - U)^2\big] - g(\theta)^2 \]

and hence the risk is minimized by minimizing $E_\theta[(T_0(X) - U)^2]$ with respect to $U$, i.e. by taking any fixed unbiased estimator of $g(\theta)$ and finding the unbiased estimator of zero which minimizes $E_\theta[(T_0(X) - U)^2]$. Then if $U$ does not depend on $\theta$ we shall have found a uniformly minimum risk estimator of $g(\theta)$, while if $U$ depends on $\theta$, there is no uniformly minimum risk estimator. Note that for unbiased estimators and squared error loss, the risk is the same as the variance of the estimator, so uniformly minimum risk unbiased is the same as uniformly minimum variance unbiased in this case.

Example 2.1.7. $P(X = -1) = p$, $P(X = k) = q^2 p^k$, $k = 0, 1, \ldots$, where $q = 1 - p$.

$T_0(X) = I_{\{-1\}}(X)$ is unbiased for $p$, $0 < p < 1$.

$T_1(X) = I_{\{0\}}(X)$ is unbiased for $q^2$.

$U$ is unbiased for 0

\[ \Leftrightarrow\; 0 = \sum_{k=-1}^{\infty} U(k) P(X = k) = pU(-1) + \sum_{k=0}^{\infty} U(k) q^2 p^k \quad \forall p \]
\[ \phantom{\Leftrightarrow\; 0} = U(0) + \sum_{k=1}^{\infty} \big(U(k) - 2U(k-1) + U(k-2)\big) p^k \]
\[ \Leftrightarrow\; U(k) = -kU(-1) = ka \text{ for some } a \]

(comparing coefficients of $p^k$, $k = 0, 1, 2, \ldots$).

So an unbiased estimator of $p$ with minimum risk (i.e. variance) is $T_0(X) - a_0 X$, where $a_0$ is the value of $a$ which minimizes

\[ E_p(T_0(X) - aX)^2 = \sum_k P_p(X = k)\,[T_0(k) - ak]^2. \]

Similarly an unbiased estimator of $q^2$ with minimum risk (i.e. variance) is $T_1(X) - a_1 X$, where $a_1$ is the value of $a$ which minimizes

\[ E_p(T_1(X) - aX)^2 = \sum_k P_p(X = k)\,[T_1(k) - ak]^2. \]

Some straightforward calculations give

\[ a_0 = \frac{-p}{p + q^2 \sum_{k=1}^{\infty} k^2 p^k} \quad \text{and} \quad a_1 = 0. \]

Since $a_1$ is independent of $p$, the estimator $T_1(X)$ of $q^2$ is minimum variance unbiased for all $p$, i.e. UMVU. However $a_0$ does depend on $p$ and so the estimator $T_0^*(X) = T_0(X) - a_0 X$ is only locally minimum variance unbiased at $p$. (We are using "estimator" in a generalized sense here since $T_0^*(X)$ depends on $p$. We shall continue to use this terminology.) An UMVU estimator of $p$ does not exist in this case.
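The two facts used in this example, that $U(X) = aX$ is unbiased for zero and that the minimizing coefficient $a_0$ changes with $p$, can be checked numerically. The sketch below truncates the infinite sums at an arbitrary cutoff (`kmax`, an assumption of the example), and the function names are hypothetical. The closed form $a_0 = -q/2$ mentioned in the comments follows from $\sum_{k \ge 1} k^2 p^k = p(1+p)/(1-p)^3$; it is not stated in the text and is included only as a cross-check.

```python
def pmf(k, p):
    """P(X = -1) = p and P(X = k) = q^2 p^k for k = 0, 1, ..."""
    q = 1.0 - p
    return p if k == -1 else q * q * p ** k

def expect(fn, p, kmax=2000):
    """E_p fn(X), with the infinite sum truncated at kmax (an approximation)."""
    return sum(fn(k) * pmf(k, p) for k in range(-1, kmax + 1))

def a0(p):
    """Minimizer of E_p (T0 - aX)^2 with T0 = 1{X = -1}: E(X T0) / E(X^2)."""
    num = expect(lambda k: k * (1.0 if k == -1 else 0.0), p)
    den = expect(lambda k: k * k, p)
    return num / den

def a1(p):
    """Minimizer of E_p (T1 - aX)^2 with T1 = 1{X = 0}: E(X T1) / E(X^2)."""
    num = expect(lambda k: k * (1.0 if k == 0 else 0.0), p)
    den = expect(lambda k: k * k, p)
    return num / den

for p in (0.3, 0.6):
    print(p, expect(lambda k: k, p), a0(p), a1(p))
    # E_p X is (numerically) 0, a0 is close to -(1 - p) / 2, a1 is 0
```

Because `a0(p)` differs between the two values of $p$ while `a1(p)` is zero for both, the output matches the conclusion that $T_1$ is UMVU for $q^2$ whereas $T_0 - a_0 X$ is only locally optimal.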

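Definition 2.1.8. Let $V(\theta) = \inf_T \operatorname{Var}_\theta(T)$ where the inf is over all unbiased estimators of $g(\theta)$. If an unbiased estimator $T$ of $g(\theta)$ satisfies

\[ \operatorname{Var}_\theta(T) = V(\theta) \quad \forall \theta \in \Theta, \]

it is called UMVU. If

\[ \operatorname{Var}_{\theta_0} T = V(\theta_0) \quad \text{for some } \theta_0 \in \Theta, \]

$T$ is called LMVU at $\theta_0$.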

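Remark 2.1.9. Let $H$ be the Hilbert space of functions on $\mathcal{X}$ which are square integrable with respect to $\mathcal{P}$ (i.e. with respect to every $P_\theta \in \mathcal{P}$), and let $\mathcal{U}$ be the set of all unbiased estimators of 0. If $T_0$ is an unbiased estimator of $g(\theta)$ in $H$, then a LMVU estimator in $H$ at $\theta_0$ is $T_0 - P_{\mathcal{U}}(T_0)$, where $P_{\mathcal{U}}$ denotes orthogonal projection on $\mathcal{U}$ in the inner product space $L^2(P_{\theta_0})$, i.e. $P_{\mathcal{U}}(T_0)$ is the unique element of $\mathcal{U}$ such that

\[ T_0 - P_{\mathcal{U}}(T_0) \perp \mathcal{U} \quad (\text{in } L^2(P_{\theta_0})). \]

$T_0 - P_{\mathcal{U}}(T_0)$ is LMVU since $P_{\mathcal{U}}(T_0) = \arg\min_{U \in \mathcal{U}} E_{\theta_0}(T_0 - U)^2$.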

Notation 2.1.10. We denote the set of all estimators $T$ with $E_\theta T^2 < \infty$ for all $\theta \in \Theta$ by $\Delta$, and the set of all unbiased estimators of 0 in $\Delta$ by $\mathcal{U}$.

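Theorem 2.1.11. An unbiased estimator $T \in \Delta$ of $g(\theta)$ is UMVU iff

\[ E_\theta(TU) = 0 \quad \text{for all } U \in \mathcal{U} \text{ and for all } \theta \in \Theta \]

(i.e. $\operatorname{Cov}_\theta(T, U) = 0$, since $E_\theta U = 0$ and $E_\theta T = g(\theta)$ for all $\theta \in \Theta$).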

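Proof. ($\Rightarrow$) Suppose $T$ is UMVU. For $U \in \mathcal{U}$, let $T' = T + \lambda U$ with $\lambda$ real. Then $T'$ is unbiased and, by definition of $T$,

\[ \operatorname{Var}_\theta(T') = \operatorname{Var}_\theta(T) + \lambda^2 \operatorname{Var}_\theta(U) + 2\lambda \operatorname{Cov}_\theta(T, U) \ge \operatorname{Var}_\theta(T); \]

therefore $\lambda^2 \operatorname{Var}_\theta(U) + 2\lambda \operatorname{Cov}_\theta(T, U) \ge 0$. Setting $\lambda = -\frac{\operatorname{Cov}_\theta(T, U)}{\operatorname{Var}_\theta(U)}$ gives a contradiction to this inequality unless $\operatorname{Cov}_\theta(T, U) = 0$. Hence $\operatorname{Cov}_\theta(T, U) = 0$.

($\Leftarrow$) If $E_\theta(TU) = 0$ $\forall U \in \mathcal{U}$ and $\forall \theta \in \Theta$, let $T'$ be any other unbiased estimator. If $\operatorname{Var}_\theta(T') = \infty$, then $\operatorname{Var}_\theta(T) < \operatorname{Var}_\theta(T')$, so suppose $\operatorname{Var}_\theta(T') < \infty$. Then $T' = T - U$ for some $U$ which is unbiased for 0 (by Theorem 2.1.5), and

\[ U = T - T' \;\Rightarrow\; E_\theta U^2 = E_\theta(T' - T)^2 \le 2E_\theta T'^2 + 2E_\theta T^2 < \infty \;\Rightarrow\; U \in \mathcal{U}. \]

Hence

\[ \operatorname{Var}_\theta(T') = \operatorname{Var}_\theta(T - U) = \operatorname{Var}_\theta(T) + \operatorname{Var}_\theta(U) - 2\operatorname{Cov}_\theta(T, U) \ge \operatorname{Var}_\theta(T) \quad \text{since } \operatorname{Cov}_\theta(T, U) = 0 \]
\[ \Rightarrow\; T \text{ is UMVU.} \]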


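Unbiasedness and sufficiency. Suppose now that $T \in \Delta$ is unbiased for $g(\theta)$ and $S$ is sufficient for $\mathcal{P} = \{P_\theta, \theta \in \Theta\}$. Consider

\[ T' = E(T \mid S) = E_\theta(T \mid S), \quad \text{independent of } \theta. \]

Then

(a) $E_\theta T' = E_\theta E(T \mid S) = E_\theta(T) = g(\theta)$ $\forall \theta$.

(b)
\[ \operatorname{Var}_\theta(T) = E_\theta\big(T - E(T \mid S) + E(T \mid S) - g(\theta)\big)^2 \]
\[ = E_\theta\big((T - E(T \mid S))^2\big) + \operatorname{Var}_\theta(T') + 2E_\theta\big[(T - E(T \mid S))(E(T \mid S) - g(\theta))\big] \]
\[ \ge \operatorname{Var}_\theta(T'). \]

On the second line the cross term vanishes because $T - E(T \mid S)$ is orthogonal to $\sigma(S)$. The inequality on the third line is strict unless $T = E(T \mid S)$ a.s. $P_\theta$.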

Theorem 2.1.12. If $S$ is a complete sufficient statistic for $\mathcal{P}$, then every U-estimable function $g(\theta)$ has one and only one unbiased estimator which is a function of $S$.

Proof.

\[ T \text{ unbiased} \;\Rightarrow\; E(T \mid S) \text{ is unbiased and a function of } S, \]
\[ T_1(S), T_2(S) \text{ unbiased} \;\Rightarrow\; E_\theta(T_1(S) - T_2(S)) = 0 \ \forall \theta \;\Rightarrow\; T_1(S) = T_2(S) \text{ a.s. } \mathcal{P} \text{ (completeness).} \]

Theorem 2.1.13. (Rao-Blackwell) Suppose $S$ is a complete sufficient statistic for $\mathcal{P}$. Then

(i) If $g(\theta)$ is U-estimable, there exists an unbiased estimator which uniformly minimizes the risk for any loss function $L(\theta, d)$ which is convex in $d$.

(ii) The UMVU in (i) is the unique unbiased estimator which is a function of $S$; it is the unique unbiased estimator with minimum risk provided the risk is finite and $L$ is strictly convex in $d$.

Proof. (i) $L(\theta, d)$ convex in $d$ means

\[ L(\theta, pd_1 + (1-p)d_2) \le pL(\theta, d_1) + (1-p)L(\theta, d_2), \quad 0 < p < 1. \]

Let $T$ be any unbiased estimator of $g(\theta)$ and let $T' = E(T \mid S)$, another unbiased estimator of $g(\theta)$. Then

\[ R(\theta, T') = E_\theta\big[L(\theta, E(T \mid S))\big] \le E_\theta\big[E(L(\theta, T) \mid S)\big] \quad \text{(by Jensen's inequality for conditional expectation)} \]
\[ = E_\theta L(\theta, T) = R(\theta, T) \quad \forall \theta. \]

If $T_2$ is any other unbiased estimator then

\[ T_2' = E(T_2 \mid S) = T' \text{ a.s. } \mathcal{P} \quad \text{by Theorem 2.1.12.} \]

Hence starting from any unbiased estimator and conditioning on the CSS $S$ gives a uniquely defined unbiased estimator which is UMVU and is the unique function of $S$ which is unbiased for $g(\theta)$.

(ii) The first statement was established at the end of the proof of (i). If $T$ is UMVU then so is $T' = E(T \mid S)$, as shown in (i). We will show that $T$ is necessarily the uniquely determined unbiased function of $S$, by showing that $T$ is a function of $S$ a.s. $\mathcal{P}$.

The proof is by contradiction. Suppose that "$T$ is a function of $S$ a.s. $\mathcal{P}$" is false. Then there exists $\theta$ and a set of positive $P_\theta$ measure where

\[ T' := E(T \mid S) \ne T. \]

But this implies that

\[ R(\theta, T') = E_\theta\big(L(\theta, E(T \mid S))\big) < E_\theta\big(E(L(\theta, T) \mid S)\big) = R(\theta, T) \]

(Jensen's inequality is strict unless $E(T \mid S) = T$ a.s. $P_\theta$), contradicting the UMVU property of $T$.

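Theorem 2.1.14. If $\mathcal{P}$ is an exponential family of full rank (i.e. $\{\eta_1, \ldots, \eta_s\}$ and $\{T_1, \ldots, T_s\}$ are A.I. and $\eta(\Theta)$ contains an open subset of $\mathbb{R}^s$) then the Rao-Blackwell theorem applies to any U-estimable $g(\theta)$ with $S = T$.

Proof. $T$ is complete sufficient for $\mathcal{P}$.

Some obvious U-estimable $g(\theta)$'s are

\[ E_\theta T_i(X) = \frac{\partial A}{\partial \eta_i}\Big|_{\eta = \eta(\theta)}, \qquad \theta \in \{\theta : \eta(\theta) \in \operatorname{int}(N)\} \]

[where $\pi_\eta(x) = e^{\sum \eta_i T_i(x) - A(\eta)} h(x)$ is the canonical representation of $p_\theta(x)$].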

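Two methods for finding UMVU's

Method 1. Search for a function $\delta(T)$, where $T$ is a CSS, such that

\[ E_\theta \delta(T) = g(\theta) \quad \forall \theta \in \Theta. \]

Example 2.1.15. $X_1, \ldots, X_n$ iid $N(\mu, \sigma^2)$, $\mu \in \mathbb{R}$, $\sigma^2 > 0$.

$T = (\bar{X}, S^2)$ is CSS.

$E_{\mu, \sigma^2} \bar{X} = \mu \Rightarrow \bar{X}$ is UMVU for $\mu$.

Method 2. Search for an unbiased $\delta(X)$ and a CSS $T$. Then

\[ S = E(\delta(X) \mid T) \text{ is UMVU.} \]

Example 2.1.16. $X_1, \ldots, X_n$ iid $U(0, \theta)$, $\theta > 0$.

$g(\theta) = \frac{\theta}{2}$

$\delta_1(X) = X_1$ is unbiased

$X_{(n)}$ is CSS

$\Rightarrow S = E(X_1 \mid X_{(n)})$ is UMVU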

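To compute $S$ we note that given $X_{(n)} = x$,

\[ X_1 = x \quad \text{w.p. } \tfrac{1}{n}, \qquad X_1 \sim U(0, x) \quad \text{w.p. } 1 - \tfrac{1}{n} \]
\[ \Rightarrow\; S(x) = \frac{1}{n}\, x + \Big(1 - \frac{1}{n}\Big)\frac{x}{2} = \frac{n+1}{n}\cdot\frac{x}{2}. \]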
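As a sanity check on the conditional mean just computed, the sketch below verifies the algebra for $S(x)$ exactly and then simulates the estimator $2S(X_{(n)}) = \frac{n+1}{n}X_{(n)}$ of $\theta$ alongside the moment estimator $2\bar{X}$. The values $n = 5$, $\theta = 2$, the number of replications and the seed are assumptions chosen for illustration.

```python
import random
from fractions import Fraction

def conditional_mean(x, n):
    """E(X_1 | X_(n) = x): X_1 equals x w.p. 1/n, else is uniform on (0, x)."""
    return Fraction(1, n) * x + (1 - Fraction(1, n)) * x / 2

def mean(v):
    return sum(v) / len(v)

def variance(v):
    m = mean(v)
    return sum((t - m) ** 2 for t in v) / len(v)

# Exact check of the algebra: S(x) = (n + 1) x / (2 n)
print(conditional_mean(Fraction(3), 5))   # 9/5

# Simulation with illustrative (assumed) settings
n, theta, reps = 5, 2.0, 20000
rng = random.Random(0)                     # fixed seed so the run is reproducible
umvu, moment = [], []
for _ in range(reps):
    xs = [rng.uniform(0.0, theta) for _ in range(n)]
    umvu.append((n + 1) / n * max(xs))     # 2 * S(X_(n))
    moment.append(2.0 * sum(xs) / n)       # 2 * sample mean, also unbiased for theta

print(mean(umvu), mean(moment))            # both should be near theta
print(variance(umvu), variance(moment))    # the first should be the smaller
```

Both estimators should average close to $\theta$, with the one based on $X_{(n)}$ showing the smaller simulated variance, as the UMVU property suggests.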

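\[ \Rightarrow\; S(X_{(n)}) = \frac{1}{2}\cdot\frac{n+1}{n}\, X_{(n)} \text{ is UMVU for } \frac{\theta}{2} \;\Rightarrow\; \frac{n+1}{n}\, X_{(n)} \text{ is UMVU for } \theta. \]

Remark 2.1.17.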

(a) Convexity of $L(\theta, \cdot)$ is crucial to the Rao-Blackwell theorem.

(b) Large-sample theory tends to support the use of convex $L(\theta, \cdot)$.

Heuristically, if $X_1, \ldots, X_n$ are iid, then as $n \to \infty$ the error in estimating $g(\theta)$ tends to 0 for any reasonable estimate (in some probabilistic sense). Thus only the behavior of $L(\theta, d)$ for $d$ close to $g(\theta)$ is relevant for large samples.

A Taylor expansion around $d = g(\theta)$ gives

\[ L(\theta, d) = a(\theta) + b(\theta)(d - g(\theta)) + c(\theta)(d - g(\theta))^2 + \text{Remainder}. \]

But

\[ L(\theta, g(\theta)) = 0 \Rightarrow a(\theta) = 0, \qquad L(\theta, d) \ge 0 \Rightarrow b(\theta) = 0. \]

Hence locally, $L(\theta, d) \sim c(\theta)(d - g(\theta))^2$, a convex weighted squared error loss function.


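Example 2.1.18. Observe $X_1, \ldots, X_m$, iid $N(\xi, \sigma^2)$, and $Y_1, \ldots, Y_n$, iid $N(\eta, \tau^2)$, independent of $X_1, \ldots, X_m$.

(i) For the 4-parameter family $\mathcal{P} = \{P_{\xi, \eta, \sigma^2, \tau^2}\}$, $(\bar{X}, \bar{Y}, S_X^2, S_Y^2)$ is a CSS since the exponential family is of full rank. Hence $\bar{X}$ and $S_X^2$ are UMVU for $\xi$ and $\sigma^2$ respectively, and $\bar{Y}$ and $S_Y^2$ are UMVU for $\eta$ and $\tau^2$.

(ii) For the 3-parameter family $\mathcal{P} = \{P_{\xi, \eta, \sigma^2, \sigma^2}\}$, $(\bar{X}, \bar{Y}, SS)$ is a CSS, where $SS := (m-1)S_X^2 + (n-1)S_Y^2$. Hence $\bar{X}$, $\bar{Y}$ and $\frac{SS}{m+n-2}$ are UMVU for $\xi$, $\eta$ and $\sigma^2$ respectively.

(iii) For the 3-parameter family with $\xi = \eta$, $\sigma^2 \ne \tau^2$ (which arises when estimating a mean from 2 sets of readings with different accuracies), $(\bar{X}, \bar{Y}, S_X^2, S_Y^2)$ is minimal sufficient but not complete, since $\bar{X} - \bar{Y} \ne 0$ a.s. $\mathcal{P}$, but $E(\bar{X} - \bar{Y}) = 0$ $\forall \xi, \sigma^2, \tau^2$.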

To deal with Case (iii) we shall first show the following: if $\frac{\sigma^2}{\tau^2} = r$ for some fixed $r$, i.e.

\[ \mathcal{P}_r = \{P_{\xi, r\tau^2, \tau^2}\}, \]

then $T = \big(\sum X_i + r\sum Y_j,\ \sum X_i^2 + r\sum Y_j^2\big)$ is CSS.

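Proof.

\[ p_{\xi, \tau^2}(x, y) = \frac{1}{(2\pi)^{\frac{m+n}{2}} (r\tau^2)^{\frac{m}{2}} (\tau^2)^{\frac{n}{2}}} \exp\Big\{-\frac{1}{2r\tau^2}\sum x_i^2 + \frac{\xi}{r\tau^2}\, m\bar{x} - \frac{m\xi^2}{2r\tau^2} - \frac{1}{2\tau^2}\sum y_i^2 + \frac{\xi}{\tau^2}\, n\bar{y} - \frac{n\xi^2}{2\tau^2}\Big\} \]
\[ = \exp\{-A(\xi, \tau^2)\} \exp\Big\{-\frac{1}{2r\tau^2}\Big(\sum x_i^2 + r\sum y_i^2\Big) + \frac{\xi}{r\tau^2}\Big(\sum x_i + r\sum y_i\Big)\Big\}. \]

Since $T$ is a CSS for $\mathcal{P}_r$ and since $T_1 = \frac{\sum X_i + r\sum Y_i}{m + rn}$ is unbiased for $\xi$, it is UMVU for $\xi$ in $\mathcal{P}_r$.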

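$T_1$ is also unbiased for $\xi$ in $\mathcal{P} = \{P_{\xi, \sigma^2, \tau^2}\}$

\[ \Rightarrow\; V(\xi_0, \sigma_0^2, \tau_0^2) \le \operatorname{Var}_{\xi_0, \sigma_0^2, \tau_0^2}(T_1) = \frac{\sigma_0^2 \tau_0^2}{m\tau_0^2 + n\sigma_0^2} \quad \text{where } \frac{\sigma_0^2}{\tau_0^2} = r. \]

($V$ is the smallest variance of all unbiased estimators of $\xi$ for $\mathcal{P}$, evaluated at $(\xi_0, \sigma_0^2, \tau_0^2)$.)

On the other hand, every $T$ which is unbiased for $\xi$ in $\mathcal{P}$ is also unbiased in $\mathcal{P}_r$. Hence if $T$ is unbiased for $\xi$ in $\mathcal{P}$, then

\[ \operatorname{Var}_{\xi_0, \sigma_0^2, \tau_0^2}(T) \ge \operatorname{Var}_{\xi_0, \sigma_0^2, \tau_0^2}\Big(\frac{\sum X_i + r\sum Y_i}{m + rn}\Big) \quad \text{where } r = \frac{\sigma_0^2}{\tau_0^2}, \]

and the inequality continues to hold with the left-hand side replaced by $V(\xi_0, \sigma_0^2, \tau_0^2)$.

So $V(\xi_0, \sigma_0^2, \tau_0^2) = \frac{\sigma_0^2 \tau_0^2}{m\tau_0^2 + n\sigma_0^2}$ and the LMVU estimator at $(\xi_0, \sigma_0^2, \tau_0^2)$ is

\[ \frac{\sum X_i + \frac{\sigma_0^2}{\tau_0^2}\sum Y_i}{m + \frac{\sigma_0^2}{\tau_0^2}\, n}. \]

Since this estimate depends on the ratio $r = \frac{\sigma_0^2}{\tau_0^2}$, an UMVU for $\xi$ does not exist in $\mathcal{P}$.

A natural estimate for $\xi$ is

\[ \hat\xi = \frac{\sum X_i + \frac{S_X^2}{S_Y^2}\sum Y_i}{m + \frac{S_X^2}{S_Y^2}\, n}. \]

(See Graybill and Deal, Biometrics, 1959, pp. 543-550 for its properties.)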
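The dependence of the locally best weight on the variance ratio can be made concrete with exact arithmetic. In the sketch below, `var_weighted` is a hypothetical helper that evaluates the variance of $(\sum X_i + r\sum Y_j)/(m + rn)$ for independent samples with variances $\sigma^2$ and $\tau^2$; the sample sizes and variances are made-up values.

```python
from fractions import Fraction

def var_weighted(r, m, n, sigma2, tau2):
    """Variance of (sum X_i + r * sum Y_j) / (m + r n) for independent samples."""
    r = Fraction(r)
    return (m * sigma2 + r * r * n * tau2) / (m + r * n) ** 2

# Illustrative (assumed) values
m, n = 4, 6
sigma2, tau2 = Fraction(9), Fraction(1)

best_r = sigma2 / tau2                               # the ratio r = sigma^2 / tau^2
bound = sigma2 * tau2 / (m * tau2 + n * sigma2)      # sigma^2 tau^2 / (m tau^2 + n sigma^2)

print(var_weighted(best_r, m, n, sigma2, tau2), bound)   # equal: 9/58 and 9/58
print(var_weighted(1, m, n, sigma2, tau2))               # equal weights do worse
print(var_weighted(20, m, n, sigma2, tau2))              # so does over-weighting Y
```

Changing `sigma2` or `tau2` moves `best_r`, which is the reason no single weighted mean can be uniformly best over the whole family.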


2.2. Non-parametric families

Consider $X = (X_1, \ldots, X_n)$ where $X_1, \ldots, X_n$ are iid $F$, where $F \in \mathcal{F}$, a family of distribution functions, and $P_F$ is the corresponding product measure on $(\mathbb{R}^n, \mathcal{B}^n)$. For example,

\[ \mathcal{F}_0 = \text{df's with density relative to Lebesgue measure} \]
\[ \mathcal{F}_1 = \text{df's with } \int |x|\, F(dx) < \infty \]
\[ \mathcal{F}_2 = \text{df's with } \int x^2\, F(dx) < \infty, \quad \text{etc.} \]

The estimand is $g : \mathcal{F} \to \mathbb{R}$. For example,

\[ g(F) = \int x\, F(dx) = \mu_F \]
\[ g(F) = \int x^2\, F(dx) \]
\[ g(F) = F(a) \]
\[ g(F) = F^{-1}(p) \]

Proposition 2.2.1. If $\mathcal{F}_0$ is defined as above, then $(X_{(1)}, \ldots, X_{(n)})$ is complete sufficient for $\mathcal{F}_0$ (i.e. for the corresponding family of probability measures $\mathcal{P}$).


Proof. We know that $T(X) = (X_{(1)}, \ldots, X_{(n)})$ is sufficient for $\mathcal{P}$. It remains to show (by problem 1.6.32, p.72) that $T$ is complete and sufficient for a family $\mathcal{P}_0 \subset \mathcal{P}$ such that each member of $\mathcal{P}_0$ has positive density on $\mathbb{R}^n$. Choose $\mathcal{P}_0$ to be the set of probability measures on $\mathcal{B}^n$ with densities relative to Lebesgue measure

\[ C(\theta_1, \ldots, \theta_n) \exp\Big\{\theta_1 \sum x_i + \theta_2 \sum_{i<j} x_i x_j + \cdots + \theta_n x_1 \cdots x_n - \sum x_i^{2n}\Big\}. \]

This is an exponential family whose natural parameter set $N$ contains an open set ($N = \mathbb{R}^n$). So $S(x) = \big(\sum x_i, \sum_{i<j} x_i x_j, \ldots, x_1 \cdots x_n\big)$ is complete. But $S$ is equivalent to $T$ (consider the $n$th degree polynomial whose zeroes are $x_{(1)}, \ldots, x_{(n)}$), so $T$ is complete for $\mathcal{F}_0$.

Measurable functions of the order statistics. If $T(x) := (x_{(1)}, \ldots, x_{(n)})$ then

\[ \delta(X_1, \ldots, X_n) \in \sigma(T) \;\Leftrightarrow\; \delta(X_1, \ldots, X_n) = \delta(X_{\pi_1}, \ldots, X_{\pi_n}) \]

for every permutation $(\pi_1, \ldots, \pi_n)$ of $(1, \ldots, n)$. Since $T$ is a CSS for $\mathcal{F}_0$, this enables us to identify UMVU estimators of estimands $g$ for which they exist.

Example 2.2.2. $g(F) = F(a)$. An obvious unbiased estimator of $F(a)$ is

\[ T_1(X) := \frac{1}{n}\sum_{i=1}^{n} I_{(-\infty, a]}(X_i) \]

and $T_1 \in \sigma(T)$, so $T_1$ is UMVU for $F(a)$.

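Example 2.2.3. $g(F) = \int x\, dF$, $F \in \mathcal{F}_0 \cap \mathcal{F}_2$. Let

\[ T_2(X) = \frac{1}{n}\sum_{i=1}^{n} X_i. \]

Then $T_2 \in \sigma(T)$ and, since $T$ is also complete for $\mathcal{F}_0 \cap \mathcal{F}_2$, $T_2$ is therefore UMVU for $\mu_F$.

Example 2.2.4. $g(F) = \sigma_F^2$, $F \in \mathcal{F}_0 \cap \mathcal{F}_4$. Let

\[ T_3(x) = S^2(x) = \frac{\sum (x_i - \bar{x})^2}{n - 1} = \frac{\sum \big(x_{(i)} - \frac{1}{n}\sum x_{(i)}\big)^2}{n - 1}. \]

$T_3 \in \sigma(T)$ and is unbiased for $\sigma_F^2$. Since $T$ is complete for $\mathcal{F}_0 \cap \mathcal{F}_4$, $T_3$ is UMVU for $\sigma_F^2$.

Remark 2.2.5. $T$ complete for $\mathcal{F}$ does not imply generally that $T$ is complete for $\mathcal{F}^* \subset \mathcal{F}$. In fact the reverse is true: completeness for $\mathcal{F}^*$ implies completeness for $\mathcal{F}$. However the same argument used in the proof of Proposition 2.2.1 shows that

$T$ is complete for $\mathcal{F}_0 \cap \mathcal{F}_2$ (used in Example 2.2.3) and

$T$ is complete for $\mathcal{F}_0 \cap \mathcal{F}_4$ (used in Example 2.2.4).

Example 2.2.6. $g(F) = \mu_F^2$, $F \in \mathcal{F}_0 \cap \mathcal{F}_4$.

\[ T_4(X) = \frac{1}{n}\sum X_{(i)}^2 - S^2(X) \text{ is UMVU for } g(F). \]

This result could also be obtained by observing that $X_1 X_2$ is unbiased for $\mu_F^2$, $F \in \mathcal{F}_0 \cap \mathcal{F}_4$; therefore $E(X_1 X_2 \mid X_{(1)}, \ldots, X_{(n)})$ is UMVU. But conditioned on $X_{(1)}, \ldots, X_{(n)}$,

\[ X_1 X_2 = X_{(i)} X_{(j)} \quad \text{w.p. } \frac{2}{n(n-1)} \text{ for each subset } \{i, j\} \text{ of } \{1, \ldots, n\} \]
\[ \Rightarrow\; E(X_1 X_2 \mid X_{(1)}, \ldots, X_{(n)}) = \frac{1}{n(n-1)}\sum_{i \ne j} X_{(i)} X_{(j)} \]
\[ = \frac{1}{n(n-1)}\Big(\Big(\sum X_i\Big)^2 - \sum X_i^2\Big) \]
\[ = \frac{1}{n}\sum X_i^2 - \frac{1}{n-1}\Big(\sum X_i^2 - \frac{1}{n}\Big(\sum X_i\Big)^2\Big) \]
\[ = T_4(X). \]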

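More generally suppose $g(F)$ is U-estimable in $\mathcal{F}_0$. Then

\[ \exists\, \delta(X_1, \ldots, X_m) \text{ such that } E_F\, \delta(X_1, \ldots, X_m) = g(F) \quad \forall F \in \mathcal{F}_0. \]

Suppose also that $\delta(X_1, \ldots, X_m)$ has finite second moment for $F \in \mathcal{F}_0 \cap \mathcal{F}_k$ for some positive integer $k$. We can assume $\delta$ is symmetric in $X_1, \ldots, X_m$, since if not we can redefine $\delta$ as

\[ \delta^*(X_1, \ldots, X_m) = \frac{1}{m!}\sum_{\text{permutations } \pi \text{ of } (1, \ldots, m)} \delta(X_{\pi_1}, \ldots, X_{\pi_m}), \]

which is unbiased and symmetric.

Now we define the U-statistic

\[ T = \binom{n}{m}^{-1} \sum_{1 \le i_1 < i_2 < \cdots < i_m \le n} \delta(X_{i_1}, \ldots, X_{i_m}). \]

This is symmetric in $X_1, \ldots, X_n$ and unbiased, and therefore UMVU for $g(F)$, $F \in \mathcal{F}_0 \cap \mathcal{F}_k$.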
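The U-statistic construction can be tried on a small data set. The sketch below uses a hypothetical helper `u_statistic` and made-up data, and uses exact rational arithmetic so that equalities can be checked exactly. With the kernel $\frac{1}{2}(x_1 - x_2)^2$ it should reproduce the sample variance $S^2$ of Example 2.2.4, and with the kernel $x_1 x_2$ it should reproduce $T_4$ of Example 2.2.6.

```python
from fractions import Fraction
from itertools import combinations
from math import comb

def u_statistic(xs, kernel, m):
    """Average of a symmetric kernel over all size-m subsets of the sample."""
    total = sum(kernel(*(xs[i] for i in idx))
                for idx in combinations(range(len(xs)), m))
    return Fraction(total) / comb(len(xs), m)

xs = [Fraction(v) for v in (1, 4, 6, 9)]      # hypothetical observations
n = len(xs)
xbar = sum(xs) / n
s2 = sum((x - xbar) ** 2 for x in xs) / (n - 1)   # sample variance S^2
t4 = sum(x * x for x in xs) / n - s2              # T_4 from Example 2.2.6

u_var = u_statistic(xs, lambda a, b: (a - b) ** 2 / 2, 2)
u_mu2 = u_statistic(xs, lambda a, b: a * b, 2)

print(u_var, s2)    # 34/3 34/3
print(u_mu2, t4)    # 133/6 133/6
```

Both kernels have $m = 2$ arguments, so each statistic is an average over the six pairs of observations.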

Questions

(1) Which $g(F)$ are U-estimable?

(2) If $g$ is U-estimable, what is the smallest value of $m$ for which there exists a U-statistic for $g$ of the form $T$? This number is called the degree (or order) of $g$.

Proposition 2.2.7. If $g$ is of degree 1, then for any $F_1, F_2 \in \mathcal{F}_0$, $g(\alpha F_1 + (1 - \alpha)F_2)$ is linear in $\alpha$.

Proof. If $g$ is of degree 1, there exists $\delta(X_1)$ such that

\[ \int \delta(x)\, F(dx) = g(F) \]
\[ \Rightarrow\; g(\alpha F_1 + (1 - \alpha)F_2) = \alpha \int \delta(x)\, F_1(dx) + (1 - \alpha) \int \delta(x)\, F_2(dx) = \alpha g(F_1) + (1 - \alpha) g(F_2). \]

Generalization. If $g$ is of degree $s$, then $g(\alpha F_1 + (1 - \alpha)F_2)$ is a polynomial in $\alpha$ of degree $\le s$ (since $dF(x_1, \ldots, x_s) = \alpha^s\, dF_1(x_1) \cdots dF_1(x_s)$ if $F_1$ is replaced by $\alpha F_1$).

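Example 2.2.8. $g(F) = \sigma_F^2$ is of degree 2 in $\mathcal{F}_0 \cap \mathcal{F}_2$.

Proof. Let $\delta(X_1, X_2) = \frac{1}{2}(X_1 - X_2)^2$. Then

\[ E_F\, \delta = E_F X_1^2 - E_F(X_1 X_2) = \sigma_F^2, \]

so $\deg(g) \le 2$. To show $\deg(g) \ne 1$, consider

\[ g(\alpha F_1 + (1 - \alpha)F_2) = \sigma^2_{\alpha F_1 + (1 - \alpha)F_2} = \alpha \int x^2\, dF_1(x) + (1 - \alpha) \int x^2\, dF_2(x) - \big[\alpha \mu_{F_1} + (1 - \alpha)\mu_{F_2}\big]^2, \]

and this is linear in $\alpha$ $\Leftrightarrow$ $\mu_{F_1} = \mu_{F_2}$. But this is not the case for every $F_1, F_2 \in \mathcal{F}_0 \cap \mathcal{F}_2$. Hence $\deg(g) = 2$.

Serfling (1980) is a good reference on U-statistics.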

Example 2.2.9. $g(F) = \sigma_F$ is not U-estimable in $\mathcal{F}_0$, since $g(\alpha F_1 + (1 - \alpha)F_2)$ is not a polynomial in $\alpha$.

2.3. The Information Inequality

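For any estimator $T \in \Delta$ of $g(\theta)$ and any function $\psi(X, \theta)$ such that $E_\theta|\psi(X, \theta)|^2 < \infty$, we have the inequality

\[ \operatorname{Var}_\theta(T) \ge \frac{|\operatorname{Cov}_\theta(T, \psi)|^2}{\operatorname{Var}_\theta(\psi(X, \theta))}. \tag{2.3.1} \]

However, this will not in general provide a useful lower bound for $\operatorname{Var}_\theta T$ since the RHS depends on $T$. It can be useful however when the RHS depends on $T$ in a simple way, in particular when it depends on $T$ only through $E_\theta T$.

Theorem 2.3.1. $\operatorname{Cov}_\theta(T, \psi)$ depends on $T$ only through $E_\theta T$ iff

\[ \operatorname{Cov}_\theta(U, \psi) = 0 \quad \text{for all } U \in \mathcal{U} \cap \Delta \text{ (unbiased square-integrable estimators of 0).} \]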


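Proof. ($\Leftarrow$) Suppose $\operatorname{Cov}_\theta(U, \psi) = 0$ for all $U \in \mathcal{U} \cap \Delta$ and that $T_1, T_2$ are two estimators with finite variance and

\[ E_\theta T_1 = E_\theta T_2 \quad \forall \theta \in \Theta. \]

Then $T_1 - T_2 \in \mathcal{U}$, so $\operatorname{Cov}_\theta(T_1, \psi) = \operatorname{Cov}_\theta(T_2, \psi)$.

($\Rightarrow$) If $\operatorname{Cov}_\theta(T, \psi)$ depends on $T$ only through $E_\theta T$ and if $U \in \mathcal{U}$, then

\[ \operatorname{Cov}_\theta(T + U, \psi) = \operatorname{Cov}_\theta(T, \psi) \;\Rightarrow\; \operatorname{Cov}_\theta(U, \psi) = 0. \]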

Hammersley-Chapman-Robbins Inequality

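Suppose $X$ has probability density $p(x, \theta)$, $\theta \in \Theta$, where $p(x, \theta) > 0$ $\forall x$ and $\theta$. Then

\[ \psi(x, \theta) = \frac{p(x, \theta + \delta)}{p(x, \theta)} - 1 \]

satisfies the conditions of the previous theorem, i.e. $\operatorname{Cov}_\theta(U, \psi) = 0$ $\forall U \in \mathcal{U} \cap \Delta$, since $E_\theta \psi(X, \theta) = 0$ and $E_\theta(U\psi) = \int U(x)\big(p(x, \theta + \delta) - p(x, \theta)\big)\, d\mu(x)$.

For any statistic $S \in \Delta$,

\[ \operatorname{Cov}_\theta(S, \psi) = E_\theta(S\psi) = \int S\,\big[p(x, \theta + \delta) - p(x, \theta)\big]\, \mu(dx) = E_{\theta + \delta} S - E_\theta S \]
\[ = \begin{cases} 0 & \text{if } S \in \mathcal{U}, \\ g(\theta + \delta) - g(\theta) & \text{if } S \text{ is unbiased for } g(\cdot). \end{cases} \]

Hence from (2.3.1), if $T \in \Delta$ is unbiased for $g(\theta)$,

\[ \operatorname{Var}_\theta(T) \ge \frac{(g(\theta + \delta) - g(\theta))^2}{E_\theta\Big[\Big(\frac{p(X, \theta + \delta)}{p(X, \theta)} - 1\Big)^2\Big]} \quad \forall \delta. \]

Hence we obtain the HCR bound

\[ \operatorname{Var}_\theta(T) \ge \sup_\delta \frac{(g(\theta + \delta) - g(\theta))^2}{\operatorname{Var}_\theta\Big(\frac{p(X, \theta + \delta)}{p(X, \theta)}\Big)} \]

if $T$ is unbiased for $g(\theta)$.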

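Letting $\delta \to 0$ in the HCR bound gives

\[ \operatorname{Var}_\theta T \ge \lim_{\delta \to 0} \frac{\Big(\frac{g(\theta + \delta) - g(\theta)}{\delta}\Big)^2}{E_\theta\Big(\frac{1}{\delta}\cdot\frac{p(X, \theta + \delta) - p(X, \theta)}{p(X, \theta)}\Big)^2} = \frac{g'(\theta)^2}{E_\theta\Big(\frac{\partial p}{\partial \theta}\big/ p\Big)^2} = \frac{g'(\theta)^2}{E_\theta\Big(\frac{\partial}{\partial \theta}\log p(X, \theta)\Big)^2} \]

provided $g$ is differentiable and we can differentiate under the expectation. These steps are legitimized under the conditions of the following theorem.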
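A concrete case may help to see how the HCR bound approaches its limiting form. The sketch below assumes, purely for illustration, $n$ iid $N(\theta, 1)$ observations with $g(\theta) = \theta$; the example is not taken from the text. For this model a standard calculation gives $\operatorname{Var}_\theta\big(p(X, \theta + \delta)/p(X, \theta)\big) = e^{n\delta^2} - 1$, so the quantity inside the supremum is $\delta^2 / (e^{n\delta^2} - 1)$.

```python
import math

def hcr_ratio(delta, n=1):
    """delta^2 / Var_theta(likelihood ratio) for n iid N(theta, 1), g(theta) = theta.

    Uses Var_theta(p(X, theta + delta) / p(X, theta)) = exp(n delta^2) - 1,
    which holds for this (assumed) normal location model.
    """
    return delta ** 2 / math.expm1(n * delta ** 2)

deltas = [2.0, 1.0, 0.5, 0.1, 0.01]
bounds = [hcr_ratio(d) for d in deltas]
limit = 1.0   # g'(theta)^2 / I(theta), with I(theta) = 1 for one N(theta, 1) draw

for d, b in zip(deltas, bounds):
    print(d, b)
# The ratios increase toward 1 as delta shrinks, so the supremum is approached
# in the limit delta -> 0 and coincides with the limiting bound in this model.
```

In less regular models the supremum may be attained at a nonzero $\delta$, which is one reason the HCR bound can be sharper than its limiting form.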

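Theorem 2.3.2. (Cramer-Rao Lower Bound, CRLB) Suppose that $p(x, \theta) > 0$ and $\frac{\partial \log p(x, \theta)}{\partial \theta}$ exists for all $x$ and $\theta$, and that for each $\theta$ there exists $\delta$ such that

\[ |\theta' - \theta| < \delta \;\Rightarrow\; P_{\theta'} \ll P_\theta \ \text{ and } \ \frac{1}{|\theta' - \theta|}\Big|\frac{p(x, \theta')}{p(x, \theta)} - 1\Big| \le G(x, \theta) \]

(where $G$ is independent of $\theta'$ and $E_\theta G(X, \theta)^2 < \infty$ for all $\theta$). Then for any unbiased estimator $T$ of $g(\theta)$,

\[ \operatorname{Var}_\theta T \ge \frac{g'(\theta)^2}{I(\theta)} \]

where

\[ I(\theta) = E_\theta\Big(\frac{\partial \log p(X, \theta)}{\partial \theta}\Big)^2 \quad \text{(definition of Fisher information)}, \]
\[ (g'(\theta))^2 = \limsup_{\theta' \to \theta}\Big(\frac{g(\theta') - g(\theta)}{\theta' - \theta}\Big)^2. \]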

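Proof. By the HCR bound,

\[ \operatorname{Var}_\theta(T) \int \Big(\frac{1}{|\theta' - \theta|}\Big(\frac{p(x, \theta')}{p(x, \theta)} - 1\Big)\Big)^2 p(x, \theta)\, \mu(dx) \ge \Big(\frac{g(\theta') - g(\theta)}{\theta' - \theta}\Big)^2. \]

Let $\{\theta_n\}$ be a sequence such that $\theta_n \to \theta$ and

\[ \Big(\frac{g(\theta_n) - g(\theta)}{\theta_n - \theta}\Big)^2 \to (g'(\theta))^2. \]

Then setting $\theta' = \theta_n$ in the above inequality and letting $n \to \infty$ gives (by DC)

\[ \operatorname{Var}_\theta(T)\; E_\theta\Big(\frac{\partial}{\partial \theta}\log p(X, \theta)\Big)^2 \ge g'(\theta)^2. \]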

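Corollary 2.3.3. If $X_1, \ldots, X_n$ are iid $P_\theta^1$ (the marginal distribution of $X_i$) and the corresponding marginal density $p_1(x, \theta)$ satisfies the conditions of the Cramer-Rao Lower Bound theorem, then for any unbiased estimator $T(X_1, \ldots, X_n)$ of $g(\theta)$,

\[ \operatorname{Var}_\theta(T) \ge \frac{g'(\theta)^2}{nI_1(\theta)} \quad \text{where } I_1(\theta) = E_\theta\Big(\frac{\partial \log p_1(X_1, \theta)}{\partial \theta}\Big)^2. \]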

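Proof. The sample space is $\mathcal{X}^n$ and

\[ \frac{dP_\theta}{d\mu^n}(x) = \prod_{i=1}^{n} p_1(x_i, \theta) \quad \text{where } \mu^n = \mu \times \cdots \times \mu. \]

The Fisher information for $P_\theta$ is

\[ \int \Big(\frac{\partial}{\partial \theta}\log \prod_{i=1}^{n} p_1(x_i, \theta)\Big)^2 p_1(x_1, \theta) \cdots p_1(x_n, \theta)\, \mu^n(dx) \]
\[ = \int \Big(\sum_i \frac{\partial \log p_1(x_i, \theta)}{\partial \theta}\Big)^2 p_1(x_1, \theta) \cdots p_1(x_n, \theta)\, \mu^n(dx) \]
\[ = \int \sum_i \Big(\frac{\partial \log p_1(x_i, \theta)}{\partial \theta}\Big)^2 p_1(x_1, \theta) \cdots p_1(x_n, \theta)\, \mu^n(dx) = nI_1(\theta), \]

since, for $i \ne j$,

\[ E_\theta\Big[\frac{\partial}{\partial \theta}\log p_1(X_i, \theta)\; \frac{\partial}{\partial \theta}\log p_1(X_j, \theta)\Big] = \Big(E_\theta \frac{\partial}{\partial \theta}\log p_1(X_i, \theta)\Big)^2 \quad \text{(by independence)} \]

and

\[ E_\theta\Big(\frac{\partial}{\partial \theta}\log p_1(X_i, \theta)\Big) = 0. \]

(We can differentiate under the integral sign by DC and the assumptions on $p_1(x_i, \theta)$.)

The statement of the Corollary now follows if we can show that the assumptions on $p_1(x_i, \theta)$ carry over to $p(x, \theta)$, i.e. that $|\theta' - \theta| < \delta \Rightarrow$

\[ \Big|\frac{p_1(x_1, \theta') \cdots p_1(x_n, \theta')}{p_1(x_1, \theta) \cdots p_1(x_n, \theta)} - 1\Big| \Big/ |\theta' - \theta| \le \tilde{G}(x, \theta) \]

where $E_\theta \tilde{G}(X, \theta)^2 < \infty$.

Now

\[ \frac{p_1(x_i, \theta')}{p_1(x_i, \theta)} \le 1 + |\theta' - \theta|\, G(x_i, \theta) \le 1 + \delta\, G(x_i, \theta) \]

and, by the triangle inequality,

\[ |a_1 \cdots a_n - 1| \le |a_1 \cdots a_n - a_2 \cdots a_n| + |a_2 \cdots a_n - a_3 \cdots a_n| + \cdots \]
\[ \le |a_1 - 1|\,|a_2 \cdots a_n| + |a_2 - 1|\,|a_3 \cdots a_n| + \cdots + |a_n - 1|. \]

Setting $a_i = \frac{p_1(x_i, \theta')}{p_1(x_i, \theta)}$ gives

\[ \Big|\frac{p_1(x_1, \theta') \cdots p_1(x_n, \theta')}{p_1(x_1, \theta) \cdots p_1(x_n, \theta)} - 1\Big| \le |\theta' - \theta|\, G(x_1, \theta) \prod_{i > 1}\big(1 + \delta G(x_i, \theta)\big) + |\theta' - \theta|\, G(x_2, \theta) \prod_{i > 2}\big(1 + \delta G(x_i, \theta)\big) + \cdots + |\theta' - \theta|\, G(x_n, \theta) \]
\[ := |\theta' - \theta|\, \tilde{G}(x, \theta) \]

and $E_\theta \tilde{G}(X, \theta)^2 < \infty$ since $X_1, \ldots, X_n$ are independent and $E_\theta G(X_i, \theta)^2 < \infty$. (No $G(X_i, \theta)$ is raised to a power greater than 2.)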

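Corollary 2.3.4. Suppose $p(x, \theta)$ satisfies the conditions of Theorem 2.3.2 and

\[ E_\theta T(X) = g(\theta) + b(\theta), \]

i.e., $T(X)$ has bias $b(\theta)$ for estimating $g(\theta)$. Then

\[ \operatorname{MSE}_\theta(T) = E_\theta(T(X) - g(\theta))^2 = b^2(\theta) + \operatorname{Var}_\theta(T) \ge b^2(\theta) + \frac{c(\theta)}{I(\theta)} \]

where

\[ c(\theta) = \limsup_{\theta' \to \theta}\Big(\frac{g(\theta') + b(\theta') - g(\theta) - b(\theta)}{\theta' - \theta}\Big)^2. \]

Proof. $T$ is unbiased for $g(\theta) + b(\theta)$, so

\[ E_\theta(T(X) - g(\theta) - b(\theta))^2 \ge \frac{c(\theta)}{I(\theta)}. \]

Hence $\operatorname{MSE} = E_\theta(T(X) - g(\theta))^2 = \operatorname{Var}_\theta T(X) + [E_\theta(T(X) - g(\theta))]^2 \ge b^2(\theta) + \frac{c(\theta)}{I(\theta)}$.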

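Behavior of $I(\theta)$ under reparameterization. Suppose $\xi = h(\theta)$ reparametrizes $\{P_\theta : \theta \in \Theta\}$ to $\{P^*_\xi : \xi \in h(\Theta)\}$. Then

\[ p^*(x, \xi) = p(x, h^{-1}(\xi)) \]

where $h^{-1}$ denotes the inverse mapping and, by definition,

\[ I^*(\xi) = E\Big[\Big(\frac{\partial}{\partial \xi}\log p^*(X, \xi)\Big)^2\Big] = E\Big[\Big(\frac{\partial \log p(X, \theta)}{\partial \theta}\Big|_{\theta = h^{-1}(\xi)} \frac{d}{d\xi} h^{-1}(\xi)\Big)^2\Big] \]
\[ = I(h^{-1}(\xi))\Big(\frac{dh^{-1}(\xi)}{d\xi}\Big)^2 = \frac{I(\theta)}{(h'(\theta))^2}\Big|_{\theta = h^{-1}(\xi)}. \tag{2.3.3} \]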

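An alternative expression for $I(\theta)$. Provided $\frac{\partial^2}{\partial \theta^2}\log p(x, \theta)$ exists for all $x$, $\theta$ and if

\[ \int \frac{\partial^2}{\partial \theta^2}\, p(x, \theta)\, d\mu(x) = \frac{\partial^2}{\partial \theta^2}\int p(x, \theta)\, d\mu(x) = 0, \tag{2.3.4} \]

then

\[ I(\theta) = -E_\theta\, \frac{\partial^2}{\partial \theta^2}\log p(X, \theta). \]

Proof.

\[ \frac{\partial^2}{\partial \theta^2}\log p(x, \theta) = \frac{\frac{\partial^2}{\partial \theta^2}\, p(x, \theta)}{p(x, \theta)} - \frac{\big(\frac{\partial p(x, \theta)}{\partial \theta}\big)^2}{p(x, \theta)^2} \]

and $E_\theta\Big[p(X, \theta)^{-1}\, \frac{\partial^2}{\partial \theta^2}\, p(X, \theta)\Big] = 0$ by (2.3.4).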

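Theorem 2.3.5. One-parameter exponential family. Suppose

\[ p(x, \theta) = \exp\big(T(x)\,\eta(\theta) - B(\theta)\big)\, h(x) \]

where $\theta = E_\theta T$ and $\eta(\theta) \in \operatorname{Int}(N)$. Then

\[ I(\theta) = \frac{1}{\operatorname{Var}_\theta T}. \]

Proof.

\[ \int e^{\eta T(x) - A(\eta)}\, h(x)\, \mu(dx) = 1 \]

and $A'(\eta) = E_\eta T$, $A''(\eta) = \operatorname{Var}_\eta T$. Hence $\theta = A'(\eta)$.

Now $\pi(x, \eta) = e^{\eta T(x) - A(\eta)}\, h(x)$. (This is the canonical representation of the density of the exponential family. See (1.3.7).) Hence $\frac{\partial \log \pi(x, \eta)}{\partial \eta} = T(x) - A'(\eta)$, so

\[ I^*(\eta) = E_\eta(T(X) - A'(\eta))^2 = \operatorname{Var}_\eta T. \]

Since $\theta = A'(\eta) = h^{-1}(\eta)$, (2.3.3) gives $I^*(\eta) = I(\theta(\eta))\,(A''(\eta))^2$. So

\[ I(\theta) = \frac{I^*(\eta(\theta))}{A''(\eta(\theta))^2} = \frac{\operatorname{Var}_\theta T}{(\operatorname{Var}_\theta T)^2} = \frac{1}{\operatorname{Var}_\theta T}. \]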

Remark 2.3.6. $T$ attains the CR lower bound in this case. A converse result also holds. Under some regularity conditions, attainment of the CR lower bound implies that $T$ is the natural sufficient statistic of some exponential family $\{P_\theta\}$.

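Example 2.3.7. Poisson family. Suppose that $X_1, \ldots, X_n$ are iid Poisson($\lambda$). Then

\[ p(x, \lambda) = e^{-n\lambda}\, \frac{\lambda^{\sum x_i}}{\prod_{i=1}^n x_i!} = e^{-n\lambda + \frac{\sum x_i}{n}\, n\log\lambda}\, \frac{1}{\prod_{i=1}^n x_i!} \]
\[ \pi(x, \eta) = e^{-n e^{\eta/n} + \eta\,\frac{\sum x_i}{n}}\, \frac{1}{\prod_{i=1}^n x_i!}, \qquad \eta = n\log\lambda, \quad \lambda = e^{\eta/n}. \]

$T(x) = \frac{\sum x_i}{n}$ is UMVU for $A'(\eta) = e^{\eta/n} = \lambda$, and $A''(\eta) = \frac{1}{n}\, e^{\eta/n} = \frac{\lambda}{n}$. Hence

\[ I^*(\eta) = \operatorname{Var} T = \frac{\lambda}{n}, \qquad I(\lambda) = \frac{1}{\operatorname{Var} T} = \frac{n}{\lambda}. \]

The information on $n\log\lambda$ increases with $\lambda$. The information on $\lambda$ decreases with $\lambda$.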
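The Poisson information can also be obtained by direct summation, which gives a quick numerical check that the sample mean attains the Cramer-Rao bound. In the sketch below the function names, the truncation point `xmax` and the values of $\lambda$ and $n$ are assumptions made for the illustration. The second function evaluates the alternative expression $-E\,\partial^2 \log p/\partial\lambda^2$ from (2.3.4).

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam), computed on the log scale."""
    return math.exp(-lam + x * math.log(lam) - math.lgamma(x + 1))

def fisher_info_one_obs(lam, xmax=200):
    """E (d/dlam log p(X, lam))^2 for one observation; the score is x/lam - 1."""
    return sum((x / lam - 1.0) ** 2 * poisson_pmf(x, lam) for x in range(xmax + 1))

def neg_expected_second_derivative(lam, xmax=200):
    """-E d^2/dlam^2 log p(X, lam); the second derivative is -x / lam^2."""
    return sum((x / lam ** 2) * poisson_pmf(x, lam) for x in range(xmax + 1))

lam, n = 2.5, 8                       # illustrative (assumed) values
info_n = n * fisher_info_one_obs(lam) # information in n iid observations
var_mean = lam / n                    # variance of the sample mean T

print(info_n, n / lam)                # both close to 3.2
print(1.0 / info_n, var_mean)         # the CR bound equals Var(T)
print(neg_expected_second_derivative(lam), 1.0 / lam)
```

The truncation at `xmax = 200` is harmless here because the Poisson tail beyond that point is negligible for the chosen $\lambda$.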

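Theorem 2.3.8. Alternative version of CRLB theorem. Suppose $\Theta$ is an open interval, $A = \{x : p(x, \theta) > 0\}$ is independent of $\theta$, $\frac{\partial p(x, \theta)}{\partial \theta}$ is finite for all $x \in A$ and for all $\theta \in \Theta$, $E_\theta\, \frac{\partial}{\partial \theta}\log p(X, \theta) = 0$, and $\frac{\partial}{\partial \theta}(E_\theta T) = \int T(x)\, \frac{\partial p(x, \theta)}{\partial \theta}\, \mu(dx)$. Then

\[ \operatorname{Var}_\theta(T(X)) \ge \frac{\big(\frac{\partial}{\partial \theta} E_\theta T\big)^2}{I(\theta)}. \]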


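Proof. Choose $\psi(x, \theta) = \frac{\partial}{\partial \theta}\log p(x, \theta)$ in (2.3.1). Then

\[ \operatorname{Cov}_\theta\Big(T, \frac{\partial}{\partial \theta}\log p(X, \theta)\Big) = E_\theta\Big[T\, \frac{\partial}{\partial \theta}\log p(X, \theta)\Big] = \int T(x)\, \frac{\partial p(x, \theta)}{\partial \theta}\, \mu(dx) = \frac{\partial}{\partial \theta}(E_\theta T) \]

and

\[ \operatorname{Var}_\theta\Big(\frac{\partial}{\partial \theta}\log p(X, \theta)\Big) = I(\theta). \]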

2.4. Multiparameter Case

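Theorem 2.4.1. For $T(X), \psi_1(X, \theta), \ldots, \psi_s(X, \theta)$ functions with finite second moments under $P_\theta$, we have the multiparameter analogue of (2.3.1),

\[ \operatorname{Var}_\theta(T) \ge \gamma^T C^{-1} \gamma \]

where $\gamma^T = (\gamma_1, \ldots, \gamma_s)$, $\gamma_i = \operatorname{Cov}_\theta(T, \psi_i)$ and $C = [\operatorname{Cov}_\theta(\psi_i, \psi_j)]_{i,j=1}^{s}$.

Proof. Let $\hat{Y}$ denote the minimum mean squared error linear predictor of $Y = T - E_\theta T$ in terms of

\[ \Psi = \begin{bmatrix} \psi_1 - E_\theta \psi_1 \\ \vdots \\ \psi_s - E_\theta \psi_s \end{bmatrix}. \]

Then $\hat{Y} = a^T \Psi$ where $E(Y - \hat{Y})(\psi_j - E\psi_j) = 0$, $j = 1, \ldots, s$, i.e.

\[ Ca = \gamma = \operatorname{Cov}_\theta(T, \Psi). \]

These equations have a solution and hence

\[ a = C^{-1}\gamma \]

where $C^{-1}$ is any generalized inverse of $C = E(\Psi\Psi^T)$. So $\hat{Y} = \gamma^T C^{-1} \Psi$. Also

\[ E(Y - \hat{Y})^2 = EY^2 - E\hat{Y}^2 \quad \text{since } \hat{Y} \perp Y - \hat{Y} \text{ in } L^2(P_\theta) \]
\[ = \operatorname{Var}_\theta T - E\big(\gamma^T C^{-1}\Psi\Psi^T C^{-1}\gamma\big) = \operatorname{Var}_\theta T - \gamma^T C^{-1}\gamma \]
\[ \Rightarrow\; E(Y - \hat{Y})^2 = \operatorname{Var}_\theta T - \gamma^T C^{-1}\gamma \ge 0 \;\Rightarrow\; \operatorname{Var}_\theta T \ge \gamma^T C^{-1}\gamma. \]

Notice that the right hand side is the same for any generalized inverse $C^{-1}$ of $C$.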


Generalization of the Information Inequality

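Assume that

\[ \begin{cases} \Theta \text{ is an open interval in } \mathbb{R}^s, \\ A = \{x : p(x, \theta) > 0\} \text{ is independent of } \theta, \\ \frac{\partial p(x, \theta)}{\partial \theta_i} \text{ is finite } \forall x \in A,\ \forall \theta \in \Theta,\ i = 1, \ldots, s, \\ E_\theta\, \frac{\partial}{\partial \theta_i}\log p(X, \theta) = 0, \quad i = 1, \ldots, s. \end{cases} \]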

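Definition 2.4.2. The information matrix.

\[ I(\theta) := \Big[E_\theta\Big(\frac{\partial}{\partial \theta_i}\log p(X, \theta)\; \frac{\partial}{\partial \theta_j}\log p(X, \theta)\Big)\Big]_{i,j=1}^{s} = \Big[\operatorname{Cov}_\theta\Big(\frac{\partial}{\partial \theta_i}\log p(X, \theta),\ \frac{\partial}{\partial \theta_j}\log p(X, \theta)\Big)\Big]_{i,j=1}^{s} \]

$I(\theta)$ is strictly positive definite if $\{\frac{\partial}{\partial \theta_i}\log p(X, \theta),\ i = 1, \ldots, s\}$ is linearly independent a.s. $P_\theta$.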

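Theorem 2.4.3. Under the previous assumptions, if $I(\theta)$ is strictly positive definite and if $T(X)$ satisfies

\[ E_\theta T(X)^2 < \infty \quad \forall \theta \]

and

\[ \frac{\partial}{\partial \theta_j} E_\theta T(X) = \int T(x)\, \frac{\partial}{\partial \theta_j}\, p(x, \theta)\, \mu(dx), \]

then

\[ \operatorname{Var}_\theta T(X) \ge \alpha^T I(\theta)^{-1} \alpha \quad \text{where } \alpha = \begin{bmatrix} \frac{\partial}{\partial \theta_1} E_\theta T(X) \\ \vdots \\ \frac{\partial}{\partial \theta_s} E_\theta T(X) \end{bmatrix}. \]

Proof. This is a direct application of Theorem 2.4.1 with the functions $\psi_i$ defined by

\[ \psi_i(x, \theta) = \frac{\partial}{\partial \theta_i}\log p(x, \theta). \]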


Reparameterization

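If $\theta_i = f_i(\xi_1, \ldots, \xi_s)$, $i = 1, \ldots, s$, then

\[ I^*(\xi) = \Big[E\Big(\frac{\partial \log p}{\partial \xi_i}(X, \xi)\; \frac{\partial \log p}{\partial \xi_j}(X, \xi)\Big)\Big]_{i,j=1}^{s} \]
\[ = \Big[\sum_m \sum_n E\Big(\frac{\partial \log p}{\partial \theta_m}(X, \theta)\; \frac{\partial \log p}{\partial \theta_n}(X, \theta)\Big) \frac{\partial \theta_m}{\partial \xi_i}\, \frac{\partial \theta_n}{\partial \xi_j}\Big]_{i,j=1}^{s} \]
\[ = J\, I(\theta)\, J^T \]

where $J = \Big[\frac{\partial \theta_j}{\partial \xi_i}\Big]_{i,j=1}^{s}$.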

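Corollary 2.4.4. If $I(\theta)$ is strictly positive definite, $T_1, \ldots, T_n$ are finite variance unbiased for $g_1(\theta), \ldots, g_n(\theta)$ respectively and each $T_i$ satisfies the conditions of Theorem 2.4.3, then

\[ \operatorname{Cov}_\theta T \ge \Big(\frac{\partial g}{\partial \theta}\Big) I^{-1}(\theta) \Big(\frac{\partial g}{\partial \theta}\Big)^T \tag{2.4.1} \]

where $A \ge B$ means $a^T(A - B)a \ge 0$ $\forall a \in \mathbb{R}^n$ and

\[ \frac{\partial g}{\partial \theta} := \begin{bmatrix} \frac{\partial g_1}{\partial \theta_1} & \cdots & \frac{\partial g_1}{\partial \theta_s} \\ \vdots & & \vdots \\ \frac{\partial g_n}{\partial \theta_1} & \cdots & \frac{\partial g_n}{\partial \theta_s} \end{bmatrix}. \]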

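Proof. Since $a^T(\operatorname{Cov} T)a = \operatorname{Var}(a^T T)$, (2.4.1) is equivalent to

\[ \operatorname{Var}_\theta(a^T T) \ge a^T \Big(\frac{\partial g}{\partial \theta}\Big) I^{-1}(\theta) \Big(\frac{\partial g}{\partial \theta}\Big)^T a \quad \forall a \in \mathbb{R}^n. \]

But the above inequality follows at once by applying Theorem 2.4.3 to the real-valued statistic $a^T T$, for which

\[ \alpha = \begin{bmatrix} \frac{\partial}{\partial \theta_1} E_\theta(a^T T) \\ \vdots \\ \frac{\partial}{\partial \theta_s} E_\theta(a^T T) \end{bmatrix} = \begin{bmatrix} \frac{\partial}{\partial \theta_1} E_\theta(T^T) \\ \vdots \\ \frac{\partial}{\partial \theta_s} E_\theta(T^T) \end{bmatrix} a = \Big(\frac{\partial g}{\partial \theta}\Big)^T a. \]

Corollary 2.4.5. If $T_1, \ldots, T_s$ are unbiased for $\theta_1, \ldots, \theta_s$, then

\[ \operatorname{Cov}_\theta(T) \ge I(\theta)^{-1}. \]

Proof. Apply Corollary 2.4.4 with $g_i(\theta) = \theta_i$, $i = 1, \ldots, s$ (where $\frac{\partial g}{\partial \theta} = I_{s \times s}$).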

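Remark 2.4.6. Suppose we wish to estimate $\theta_1$. If $\theta_2, \ldots, \theta_s$ are known then the CR lower bound is

\[ \Big[E\Big(\frac{\partial \log p}{\partial \theta_1}(X, \theta)\Big)^2\Big]^{-1}. \]

If $\theta_2, \ldots, \theta_s$ are not known, then the CR bound for estimating $\theta_1$ is the (1,1) component of $I^{-1}(\theta)$, denoted by $I^{-1}(\theta)_{11}$ (by Corollary 2.4.5). Naturally we expect

\[ I^{-1}(\theta)_{11} \ge \Big[E\Big(\frac{\partial \log p}{\partial \theta_1}(X, \theta)\Big)^2\Big]^{-1}. \]

By the general formula for the inverse of a partitioned matrix,

\[ A^{-1} = \begin{bmatrix} D & -DA_{12}A_{22}^{-1} \\ -A_{22}^{-1}A_{21}D & A_{22}^{-1} + A_{22}^{-1}A_{21}DA_{12}A_{22}^{-1} \end{bmatrix} \]

where $D = (A_{11} - A_{12}A_{22}^{-1}A_{21})^{-1}$ (see e.g. Lütkepohl, Introduction to Multiple Time Series Analysis), we find, writing $I(\theta) = \begin{bmatrix} a & b^T \\ b & A \end{bmatrix}$ with $a = I(\theta)_{11}$, that

\[ I^{-1}(\theta)_{11} = \frac{1}{a - b^T A^{-1} b} \ge \frac{1}{a}. \]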

Theorem 2.4.7. (Order-s exponential family). Suppose that

p (x ) = exp

X

s

1

!

i () Ti (x) ; B () h (x)

2

is an order-s exponential family parameterized by

= E T

and that () contains an open subset of R s . Then

I () = C ;1

where

C = Cov (T ) :

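Proof. By Theorem 1.3.14 of Chapter 1, we know that for $\eta \in \mathrm{int}(\mathcal{N})$,

\[
\frac{\partial^2 A}{\partial \eta_i \partial \eta_j} = \mathrm{cov}(T_i, T_j), \qquad \text{i.e.} \qquad \mathrm{cov}(T) = \frac{\partial^2 A}{\partial \eta^2} := \left[ \frac{\partial^2 A}{\partial \eta_i \partial \eta_j} \right]_{i,j=1}^{s}.
\]

We also know that if $\pi(x, \eta)$ is the canonical form of the density, then

\[
\frac{\partial \log \pi(x, \eta)}{\partial \eta_i}\, \frac{\partial \log \pi(x, \eta)}{\partial \eta_j} = \left( T_i - \frac{\partial A}{\partial \eta_i} \right) \left( T_j - \frac{\partial A}{\partial \eta_j} \right)
\]

\[
\Rightarrow\ I^*(\eta) = \mathrm{cov}(T) = \frac{\partial^2 A}{\partial \eta^2}.
\]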


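Moreover,

\[
\theta = E T = \frac{\partial A}{\partial \eta} = \begin{bmatrix} \partial A / \partial \eta_1 \\ \vdots \\ \partial A / \partial \eta_s \end{bmatrix},
\]

and hence by the reparameterization formula

\[
I^*(\eta) = \frac{\partial^2 A}{\partial \eta^2}\, I(\theta)\, \frac{\partial^2 A}{\partial \eta^2}.
\]

But the left hand side is $\dfrac{\partial^2 A}{\partial \eta^2}$ (a symmetric matrix) and hence

\[
I(\theta) = \left( \frac{\partial^2 A}{\partial \eta^2} \right)^{-1} = C^{-1}.
\]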
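A small discrete family gives a direct check of the identity $I(\theta) = C^{-1}$. The sketch below assumes, as an illustration not taken from the notes, a single draw $X$ from three categories with probabilities $(p_1, p_2, 1 - p_1 - p_2)$, so that $T = (1\{X = 1\}, 1\{X = 2\})$ and $\theta = E T = (p_1, p_2)$. The information matrix is computed from its definition by summing over the three outcomes and compared with the inverse of $\mathrm{Cov}(T)$.

```python
import numpy as np

p1, p2 = 0.2, 0.5            # assumed illustrative values
p3 = 1.0 - p1 - p2
probs = np.array([p1, p2, p3])

# Score vectors d log P(X = k) / d(p1, p2) for outcomes k = 1, 2, 3.
scores = np.array([[1.0 / p1, 0.0],
                   [0.0, 1.0 / p2],
                   [-1.0 / p3, -1.0 / p3]])

# I(theta) = sum over outcomes of P(X = k) * score_k score_k^T.
info = sum(pk * np.outer(sk, sk) for pk, sk in zip(probs, scores))

# C = Cov(T) for the indicator vector T = (1{X=1}, 1{X=2}).
mean_T = np.array([p1, p2])
C = np.diag(mean_T) - np.outer(mean_T, mean_T)

print(np.allclose(info, np.linalg.inv(C)))
```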

Examples of Information Matrices.

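Example 2.4.8. $X \sim N(\mu, \Sigma)$, $\mu \in \mathbb{R}^s$, $\Sigma$ fixed and non-singular. Then

\[
p(x, \mu) = \frac{1}{(\sqrt{2\pi})^s\, |\det \Sigma|^{1/2}} \exp\left( -\tfrac{1}{2} (x - \mu)^T \Sigma^{-1} (x - \mu) \right).
\]

Writing $\Sigma = [\sigma_{ij}]_{i,j=1}^{s}$ and $\Sigma^{-1} = [\sigma^{ij}]_{i,j=1}^{s}$, we have

\[
\frac{\partial \log p}{\partial \mu_i}(X) = \sum_{k=1}^{s} \sigma^{ik} (X_k - \mu_k), \qquad
\frac{\partial \log p}{\partial \mu_j}(X) = \sum_{m=1}^{s} \sigma^{jm} (X_m - \mu_m)
\]

\[
\Rightarrow\ E\left( \frac{\partial \log p}{\partial \mu_i}(X)\, \frac{\partial \log p}{\partial \mu_j}(X) \right)
= \sum_{k=1}^{s} \sum_{m=1}^{s} \sigma^{ik}\, E\big[ (X_k - \mu_k)(X_m - \mu_m) \big]\, \sigma^{jm}
= \sum_{k=1}^{s} \sum_{m=1}^{s} \sigma^{ik} \sigma_{km} \sigma^{mj},
\]

i.e.

\[
I(\mu) = \Sigma^{-1} \Sigma\, \Sigma^{-1} = \Sigma^{-1}.
\]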
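The result $I(\mu) = \Sigma^{-1}$ can be checked by simulation. The sketch below draws from a trivariate normal distribution and averages the outer products of the score vectors $\Sigma^{-1}(X - \mu)$. The particular $\mu$, $\Sigma$, seed and sample size are arbitrary assumptions chosen for illustration, and the agreement is only up to Monte Carlo error.

```python
import numpy as np

rng = np.random.default_rng(1)

mu = np.array([1.0, -2.0, 0.5])
Sigma = np.array([[2.0, 0.6, 0.0],
                  [0.6, 1.0, 0.3],
                  [0.0, 0.3, 1.5]])   # symmetric positive definite by construction
Sigma_inv = np.linalg.inv(Sigma)

# Draw a large sample and evaluate the score at each observation.
X = rng.multivariate_normal(mu, Sigma, size=200_000)
scores = (X - mu) @ Sigma_inv          # row n is Sigma^{-1} (x_n - mu), by symmetry

# Monte Carlo estimate of E[score score^T].
info_mc = scores.T @ scores / len(X)

max_err = np.max(np.abs(info_mc - Sigma_inv))
print(max_err)
```

The printed value is the largest entrywise discrepancy between the simulated information matrix and $\Sigma^{-1}$; it shrinks at the usual $N^{-1/2}$ rate as the sample size $N$ grows.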

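Example 2.4.9. The order-two exponential family $\{ N(\mu, \sigma^2),\ \mu \in \mathbb{R},\ \sigma > 0 \}$.

\[
p(x, \xi) = \frac{1}{\sqrt{2\pi}\, \sigma}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}
= \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2\sigma^2} x^2 + \frac{\mu}{\sigma^2} x - \frac{\mu^2}{2\sigma^2} - \log \sigma},
\]

where $\xi = (\mu, \sigma)^T$. By Theorem 2.4.7, if we let

\[
\theta = \begin{bmatrix} \theta_1 \\ \theta_2 \end{bmatrix} = \begin{bmatrix} \mu \\ \mu^2 + \sigma^2 \end{bmatrix} = E_\xi \begin{bmatrix} X \\ X^2 \end{bmatrix},
\]

then we can rewrite $p(x, \xi)$ as

\[
p(x, \theta) = \frac{1}{\sqrt{2\pi}} \exp\left( \eta_1(\theta) x + \eta_2(\theta) x^2 - B(\theta) \right)
\]

and since $\theta = E_\theta \begin{bmatrix} X \\ X^2 \end{bmatrix} = E_\theta T$ with $T = \begin{bmatrix} X \\ X^2 \end{bmatrix}$,

\[
I(\theta) = [\mathrm{Cov}(T)]^{-1},
\]

where

\[
\mathrm{Cov}(T) = \begin{bmatrix} \sigma^2 & 2\mu\sigma^2 \\ 2\mu\sigma^2 & 2\sigma^4 + 4\mu^2\sigma^2 \end{bmatrix}
\]

(check from MGF).

We can now find $I^*(\xi)$ from the reparameterization formula,

\[
I^*(\xi) = J I(\theta) J^T,
\]

where

\[
J = \begin{bmatrix}
\dfrac{\partial \theta_1}{\partial \xi_1} & \cdots & \dfrac{\partial \theta_s}{\partial \xi_1} \\
\vdots & & \vdots \\
\dfrac{\partial \theta_1}{\partial \xi_s} & \cdots & \dfrac{\partial \theta_s}{\partial \xi_s}
\end{bmatrix}
= \begin{bmatrix} 1 & 2\mu \\ 0 & 2\sigma \end{bmatrix}.
\]

\[
\Rightarrow\ I^*(\xi)^{-1} = (J^{-1})^T I^{-1}(\theta) J^{-1}, \qquad \text{where } J^{-1} = \begin{bmatrix} 1 & -\mu/\sigma \\ 0 & 1/(2\sigma) \end{bmatrix},
\]

\[
I^*(\xi)^{-1} = \begin{bmatrix} 1 & 0 \\ -\mu/\sigma & 1/(2\sigma) \end{bmatrix}
\begin{bmatrix} \sigma^2 & 2\mu\sigma^2 \\ 2\mu\sigma^2 & 2\sigma^4 + 4\mu^2\sigma^2 \end{bmatrix}
\begin{bmatrix} 1 & -\mu/\sigma \\ 0 & 1/(2\sigma) \end{bmatrix}
= \begin{bmatrix} \sigma^2 & 0 \\ 0 & \sigma^2/2 \end{bmatrix}
\]

\[
\Rightarrow\ I^*(\xi) = \begin{bmatrix} 1/\sigma^2 & 0 \\ 0 & 2/\sigma^2 \end{bmatrix}
\]

(could also be obtained directly from $p(x, \xi)$).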
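The matrix algebra in Example 2.4.9 can be reproduced numerically. The sketch below, with arbitrarily assumed values of $\mu$ and $\sigma$, first rebuilds $\mathrm{Cov}(T)$ from the raw normal moments (an alternative to the MGF check suggested in the example), then applies Theorem 2.4.7 and the reparameterization formula.

```python
import numpy as np

mu, sigma = 1.5, 0.7   # assumed illustrative values

# Raw moments E X^k of N(mu, sigma^2) for k = 1, ..., 4.
m1 = mu
m2 = mu**2 + sigma**2
m3 = mu**3 + 3 * mu * sigma**2
m4 = mu**4 + 6 * mu**2 * sigma**2 + 3 * sigma**4

# Cov(T) for T = (X, X^2), built from the moments.
C = np.array([[m2 - m1**2, m3 - m1 * m2],
              [m3 - m1 * m2, m4 - m2**2]])

# The closed form stated in the example.
C_stated = np.array([[sigma**2, 2 * mu * sigma**2],
                     [2 * mu * sigma**2, 2 * sigma**4 + 4 * mu**2 * sigma**2]])

# I(theta) = C^{-1} (Theorem 2.4.7), then I*(xi) = J I(theta) J^T.
info_theta = np.linalg.inv(C)
J = np.array([[1.0, 2 * mu],       # derivatives of (theta1, theta2) w.r.t. mu
              [0.0, 2 * sigma]])   # derivatives of (theta1, theta2) w.r.t. sigma
info_xi = J @ info_theta @ J.T

expected = np.diag([1 / sigma**2, 2 / sigma**2])
print(np.allclose(C, C_stated), np.allclose(info_xi, expected))
```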

Example 2.4.10.

Location-scale families.

Suppose that f is a probability density with respect to Lebesgue measure, that f (x) is strictly

positive for all x and that

\[
p(x, \mu, \sigma) = \frac{1}{\sigma} f\left( \frac{x - \mu}{\sigma} \right), \qquad \mu \in \mathbb{R},\ \sigma > 0.
\]


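Then

\[
\frac{\partial \log p}{\partial \mu}(x, \mu, \sigma) = -\frac{1}{\sigma}\, \frac{f'\left( \frac{x - \mu}{\sigma} \right)}{f\left( \frac{x - \mu}{\sigma} \right)}, \qquad
\frac{\partial \log p}{\partial \sigma}(x, \mu, \sigma) = -\frac{1}{\sigma} - \frac{x - \mu}{\sigma^2}\, \frac{f'\left( \frac{x - \mu}{\sigma} \right)}{f\left( \frac{x - \mu}{\sigma} \right)},
\]

\[
I_{11}(\mu, \sigma) = \frac{1}{\sigma^2} \int \left[ \frac{f'\left( \frac{x - \mu}{\sigma} \right)}{f\left( \frac{x - \mu}{\sigma} \right)} \right]^2 \frac{1}{\sigma} f\left( \frac{x - \mu}{\sigma} \right) dx
= \frac{1}{\sigma^2} \int \left[ \frac{f'(x)}{f(x)} \right]^2 f(x)\, dx,
\]

\[
I_{22}(\mu, \sigma) = \frac{1}{\sigma^2} \int \left[ 1 + \frac{x - \mu}{\sigma}\, \frac{f'\left( \frac{x - \mu}{\sigma} \right)}{f\left( \frac{x - \mu}{\sigma} \right)} \right]^2 \frac{1}{\sigma} f\left( \frac{x - \mu}{\sigma} \right) dx
= \frac{1}{\sigma^2} \int \left[ 1 + x\, \frac{f'(x)}{f(x)} \right]^2 f(x)\, dx,
\]

\[
I_{12}(\mu, \sigma) = \frac{1}{\sigma^2} \int \frac{f'\left( \frac{x - \mu}{\sigma} \right)}{f\left( \frac{x - \mu}{\sigma} \right)}\, \frac{1}{\sigma} f\left( \frac{x - \mu}{\sigma} \right) dx
+ \frac{1}{\sigma^2} \int \frac{x - \mu}{\sigma} \left[ \frac{f'\left( \frac{x - \mu}{\sigma} \right)}{f\left( \frac{x - \mu}{\sigma} \right)} \right]^2 \frac{1}{\sigma} f\left( \frac{x - \mu}{\sigma} \right) dx
= 0 + \frac{1}{\sigma^2} \int x \left[ \frac{f'(x)}{f(x)} \right]^2 f(x)\, dx.
\]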

Thus I is a diagonal matrix if f is symmetric about 0. Example 2.4.9 is a special case of

this.
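The three integrals of Example 2.4.10 can be approximated on a grid for any smooth, strictly positive $f$. The sketch below is a rough numerical illustration under assumptions not found in the notes: a plain Riemann sum on a finite grid, the standard normal density as the symmetric case, and the Gumbel density $f(x) = \exp(-x - e^{-x})$ as an asymmetric case. The function name and grids are hypothetical choices.

```python
import numpy as np

def location_scale_information(f, dlogf, grid, sigma):
    """Approximate the 2x2 information matrix of p(x) = f((x - mu)/sigma)/sigma.

    f     : standardized density, vectorized over numpy arrays
    dlogf : the ratio f'/f, vectorized over numpy arrays
    grid  : equally spaced points covering the effective support of f
    """
    dx = grid[1] - grid[0]
    w = f(grid) * dx                         # Riemann-sum weights f(x) dx
    r = dlogf(grid)                          # f'(x) / f(x)
    i11 = np.sum(r**2 * w)
    i22 = np.sum((1.0 + grid * r)**2 * w)
    i12 = np.sum(grid * r**2 * w)            # uses the integral of f' being zero
    return np.array([[i11, i12], [i12, i22]]) / sigma**2

sigma = 2.0

# Symmetric case: standard normal f, for which f'/f = -x.
info_normal = location_scale_information(
    lambda x: np.exp(-0.5 * x**2) / np.sqrt(2.0 * np.pi),
    lambda x: -x,
    np.linspace(-12.0, 12.0, 24001),
    sigma,
)

# Asymmetric case: Gumbel f, for which f'/f = exp(-x) - 1.
info_gumbel = location_scale_information(
    lambda x: np.exp(-x - np.exp(-x)),
    lambda x: np.exp(-x) - 1.0,
    np.linspace(-8.0, 40.0, 48001),
    sigma,
)

print(info_normal)
print(info_gumbel)
```

For the normal density the result agrees with Example 2.4.9, namely $\mathrm{diag}(1/\sigma^2, 2/\sigma^2)$, while for the asymmetric Gumbel density the off-diagonal entry is clearly nonzero.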