Combining geometry and combinatorics: A unified approach to sparse signal recovery


Anna C. Gilbert

University of Michigan

joint work with R. Berinde (MIT), P. Indyk (MIT),

H. Karloff (AT&T), M. Strauss (Univ. of Michigan)

Sparse signal recovery

measurements: length m = k log(n)

k-sparse signal: length n

Problem statement

Assume x has low complexity: x is k-sparse (with noise)

Construct matrix $A : \mathbb{R}^n \to \mathbb{R}^m$ with m as small as possible

Given $Ax$ for any signal $x \in \mathbb{R}^n$, we can quickly recover $\hat{x}$ with

$$\|x - \hat{x}\|_p \le C \min_{y\ k\text{-sparse}} \|x - y\|_q$$
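The benchmark on the right-hand side of the guarantee, the error of the best k-sparse approximation, can be computed directly: the minimizing y keeps the k largest-magnitude entries of x. The following is a minimal sketch; the function name `best_k_term_error` and the sample vector are illustrative assumptions, not part of the talk.

```python
def best_k_term_error(x, k, q=1):
    """Return min over k-sparse y of ||x - y||_q, for finite q >= 1.

    The optimal k-sparse y keeps the k largest-magnitude entries of x,
    so the error is the l_q norm of the remaining "tail".
    """
    tail = sorted((abs(v) for v in x), reverse=True)[k:]
    return sum(t ** q for t in tail) ** (1.0 / q)


# Illustrative signal: three large entries plus small "noise".
x = [5.0, -0.1, 0.0, 3.0, 0.2, -4.0]
err1 = best_k_term_error(x, k=3, q=1)  # l_1 norm of the tail {0.2, 0.1, 0.0}
err2 = best_k_term_error(x, k=3, q=2)  # l_2 norm of the same tail
```

A recovery algorithm with an $\ell_1 \le C \ell_1$ guarantee must return $\hat{x}$ with $\|x - \hat{x}\|_1 \le C \cdot$ `err1`; for an exactly k-sparse x the tail is empty and recovery is exact.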

Parameters

Number of measurements m

Recovery time

Approximation guarantee (norms, mixed)

One matrix vs. distribution over matrices

Explicit construction

Universal matrix (for any basis, after measuring)

Tolerance to measurement noise

Applications

Data stream algorithms

x_i = number of items with index i

can maintain Ax under increments to x and recover approximation to x

Efficient data sensing

digital/analog cameras

analog-to-digital converters

Error-correcting codes

code {y ∈ R^n | Ay = 0}

x = error vector, Ax = syndrome
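Because the measurement is linear, the data stream application amounts to adding one column of A to the sketch per increment. The following is a minimal sketch of that idea, assuming a sparse binary A stored column by column; `make_sparse_matrix`, `StreamingSketch`, and `direct_sketch` are hypothetical names introduced for illustration.

```python
import random


def make_sparse_matrix(m, n, d, seed=0):
    """Hypothetical helper: column j is the list of d rows that hold a 1."""
    rng = random.Random(seed)
    return [sorted(rng.sample(range(m), d)) for _ in range(n)]


class StreamingSketch:
    """Maintains the sketch Ax while x receives increments x_i += delta."""

    def __init__(self, cols, m):
        self.cols = cols
        self.sketch = [0] * m

    def update(self, i, delta=1):
        # A(x + delta * e_i) = Ax + delta * (column i of A): d additions.
        for r in self.cols[i]:
            self.sketch[r] += delta


def direct_sketch(cols, m, x):
    """Compute Ax from scratch, for comparison."""
    y = [0] * m
    for i, v in enumerate(x):
        for r in cols[i]:
            y[r] += v
    return y


m, n, d = 8, 50, 3
cols = make_sparse_matrix(m, n, d)
sk = StreamingSketch(cols, m)
x = [0] * n
rng = random.Random(1)
for _ in range(1000):          # a stream of 1000 items
    i = rng.randrange(n)
    x[i] += 1                  # x_i = number of items with index i
    sk.update(i)
```

The update cost equals the column sparsity d, which is why column sparsity and update time are listed as one parameter.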

Two approaches

Geometric

[Donoho ’04],[Candes-Tao ’04, ’06],[Candes-Romberg-Tao ’05],

[Rudelson-Vershynin ’06], [Cohen-Dahmen-DeVore ’06], and many others...

Dense recovery matrices (e.g., Gaussian, Fourier)

Geometric recovery methods ($\ell_1$ minimization, LP)

$\hat{x} = \arg\min \|z\|_1$ s.t. $\Phi z = \Phi x$

Uniform guarantee: one matrix A that works for all x

Combinatorial

[Gilbert-Guha-Indyk-Kotidis-Muthukrishnan-Strauss ’02],

[Charikar-Chen-Farach-Colton ’02], [Cormode-Muthukrishnan ’04],

[Gilbert-Strauss-Tropp-Vershynin ’06, ’07]

Sparse random matrices (typically)

Combinatorial recovery methods or weak, greedy algorithms

Per-instance guarantees, later uniform guarantees

Prior work: summary

Figure 1. Summary of the best prior results.

[Table: columns Paper, A/E, Sketch length, Encode time, Column sparsity/Update time, Decode time, Approx. error, Noise; rows [CCFC02, CM06], [CM04], [CRT06], [GSTV06], [GSTV07], [NV07], [GLR08] (k “large”), This paper]

Recent results: breaking news

Figure 2. Recent work.

[Table: columns Paper, A/E, Sketch length, Encode time, Update time, Decode time, Approx. error, Noise; rows [DM08], [NT08], [IR08]]

Theorem 1. Consider any m × n matrix Φ that is the adjacency matrix of a (k, ε)-unbalanced expander G = (A, B, E), |A| = n, |B| = m, with left degree d, such that 1/ε, d are smaller than n. Then the scaled matrix $\Phi/d^{1/p}$ satisfies the $\mathrm{RIP}_{p,k,\delta}$ property, for $1 \le p \le 1 + 1/\log n$ and $\delta = C\varepsilon$ for some absolute constant C > 1.

The fact that the unbalanced expanders yield matrices with RIP-p property is not an accident. In particular, we show in Section 2 that any binary matrix Φ in which each column has d ones …

Unify these techniques

Achieve “best of both worlds”

LP decoding using sparse matrices

combinatorial decoding (with augmented matrices)

Deterministic (explicit) constructions

What do combinatorial and geometric approaches share?

What makes them work?

Sparse matrices: Expander graphs

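[Figure: bipartite graph with a left set S and its neighbor set N(S)]

Adjacency matrix A of a d-regular (k, ε) expander graph

Graph G = (X, Y, E), |X| = n, |Y| = m

For any S ⊂ X, |S| ≤ k, the neighbor set satisfies

$$|N(S)| \ge (1 - \varepsilon)\, d\, |S|$$

Probabilistic construction:

$d = O(\log(n/k)/\varepsilon)$, $m = O(k \log(n/k)/\varepsilon^2)$

Deterministic construction:

$d = O\big(2^{O(\log^3(\log(n)/\varepsilon))}\big)$, $m = (k/\varepsilon)\, 2^{O(\log^3(\log(n)/\varepsilon))}$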
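For small instances the expansion condition can be checked by brute force over all left sets of size at most k. The following is a sketch under that assumption (it is exponential in k and only meant to make the definition concrete); `random_left_regular_graph` and `expansion_epsilon` are hypothetical names, and the parameters are arbitrary.

```python
import itertools
import random


def random_left_regular_graph(n, m, d, seed=0):
    """Each of the n left nodes picks d distinct right neighbors out of m."""
    rng = random.Random(seed)
    return [rng.sample(range(m), d) for _ in range(n)]


def expansion_epsilon(neighbors, d, k):
    """Smallest eps with |N(S)| >= (1 - eps) * d * |S| for all 1 <= |S| <= k."""
    worst_ratio = 1.0
    for size in range(1, k + 1):
        for S in itertools.combinations(range(len(neighbors)), size):
            reached = set()
            for i in S:
                reached.update(neighbors[i])
            worst_ratio = min(worst_ratio, len(reached) / (d * size))
    return 1.0 - worst_ratio


# Tiny hand-checkable graph: a path-like overlap pattern with d = 2.
tiny = [[0, 1], [1, 2], [2, 3]]
eps_tiny = expansion_epsilon(tiny, d=2, k=2)   # pairs sharing a node reach 3 of 4

# A random instance in the spirit of the probabilistic construction.
graph = random_left_regular_graph(n=12, m=8, d=3)
eps_random = expansion_epsilon(graph, d=3, k=2)
```

In the tiny graph, the sets {0, 1} and {1, 2} each reach 3 right nodes out of a possible 4, so the graph is a (2, 1/4) expander and no better.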

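Measurement matrix = adjacency matrix of a bipartite graph

$$A = \begin{pmatrix}
1 & 0 & 1 & 1 & 0 & 1 & 1 & 0 \\
0 & 1 & 0 & 1 & 1 & 1 & 0 & 1 \\
0 & 1 & 1 & 0 & 0 & 1 & 1 & 1 \\
1 & 0 & 0 & 1 & 1 & 0 & 0 & 1 \\
1 & 1 & 1 & 0 & 1 & 0 & 1 & 0
\end{pmatrix}$$

[Figure: larger example, sparsity pattern of a binary measurement matrix (row axis ticks 5 to 20, column axis ticks 50 to 250)]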

RIP(p)

A measurement matrix A satisfies the RIP(p, k, δ) property if for any k-sparse vector x,

$$(1 - \delta)\|x\|_p \le \|Ax\|_p \le (1 + \delta)\|x\|_p .$$

RIP(p) ⇐⇒ expander

Theorem

(k, ε) expansion implies

$$(1 - 2\varepsilon)\, d\, \|x\|_1 \le \|Ax\|_1 \le d\, \|x\|_1$$

for any k-sparse x. Get RIP(p) for $1 \le p \le 1 + 1/\log n$.

Theorem

RIP(1) + binary sparse matrix implies (k, ε) expander for

$$\varepsilon = \frac{1 - 1/(1 + \delta)}{2 - \sqrt{2}} .$$
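The first theorem can be sanity-checked numerically on a small instance: compute the exact expansion parameter ε by brute force, then test the two-sided $\ell_1$ bound on random k-sparse vectors. This is only an illustrative sketch; the helper names, the random graph, and the parameter choices are assumptions, and a numerical check is of course not a proof.

```python
import itertools
import random


def expansion_epsilon(neighbors, d, k):
    """Smallest eps with |N(S)| >= (1 - eps) * d * |S| for all 1 <= |S| <= k."""
    worst_ratio = 1.0
    for size in range(1, k + 1):
        for S in itertools.combinations(range(len(neighbors)), size):
            reached = set()
            for i in S:
                reached.update(neighbors[i])
            worst_ratio = min(worst_ratio, len(reached) / (d * size))
    return 1.0 - worst_ratio


def apply_matrix(neighbors, m, x):
    """Compute Ax, where column i of A has ones in rows neighbors[i]."""
    y = [0.0] * m
    for i, v in enumerate(x):
        if v != 0.0:
            for r in neighbors[i]:
                y[r] += v
    return y


rng = random.Random(0)
n, m, d, k = 12, 9, 3, 2
neighbors = [rng.sample(range(m), d) for _ in range(n)]
eps = expansion_epsilon(neighbors, d, k)

bounds_hold = True
for _ in range(2000):
    x = [0.0] * n
    for i in rng.sample(range(n), k):      # random k-sparse signed vector
        x[i] = rng.uniform(-1.0, 1.0)
    l1_x = sum(abs(v) for v in x)
    l1_Ax = sum(abs(v) for v in apply_matrix(neighbors, m, x))
    lower = (1 - 2 * eps) * d * l1_x
    upper = d * l1_x
    # Small tolerance for floating-point rounding.
    bounds_hold = bounds_hold and (lower - 1e-9 <= l1_Ax <= upper + 1e-9)
```

For graphs this small ε is typically large, so the lower bound is weak; the point is only that it is never violated.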

Expansion =⇒ LP decoding

Theorem

Φ adjacency matrix of (2k, ε) expander. Consider two vectors $x$, $x^*$ such that $\Phi x = \Phi x^*$ and $\|x^*\|_1 \le \|x\|_1$. Then

$$\|x - x^*\|_1 \le \frac{2}{1 - 2\alpha(\varepsilon)}\, \|x - x_k\|_1$$

where $x_k$ is the optimal k-term representation for x and $\alpha(\varepsilon) = (2\varepsilon)/(1 - 2\varepsilon)$.

Guarantees that Linear Program recovers good sparse approximation

Robust to noisy measurements too
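For reference, the $\ell_1$ minimization behind this guarantee can be written as a linear program in the standard way, with auxiliary variables $t_i$ bounding $|z_i|$. The variable names $z$ and $t$ are notation introduced here, not taken from the slides.

```latex
\begin{aligned}
\min_{z,\,t \in \mathbb{R}^n} \quad & \sum_{i=1}^{n} t_i \\
\text{subject to} \quad & \Phi z = \Phi x, \\
& -t_i \le z_i \le t_i, \qquad i = 1, \dots, n .
\end{aligned}
```

At an optimum $t_i = |z_i|$, so the objective equals $\|z\|_1$. Any minimizer $x^*$ satisfies $\Phi x^* = \Phi x$ and $\|x^*\|_1 \le \|x\|_1$, which are exactly the hypotheses of the theorem.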

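Augmented expander ⟹ Combinatorial decoding

Combinatorial decoding: bit-test

Locating a heavy hitter

Suppose the signal contains one “spike” and no noise

$\log_2 d$ bit tests will identify its location (here $d$ denotes the signal length), e.g., for $d = 8$:

$$B_1 s =
\begin{pmatrix}
0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 \\
0 & 0 & 1 & 1 & 0 & 0 & 1 & 1 \\
0 & 1 & 0 & 1 & 0 & 1 & 0 & 1
\end{pmatrix}
\begin{pmatrix} 0 \\ 0 \\ 1 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \end{pmatrix}
=
\begin{pmatrix} 0 \\ 1 \\ 0 \end{pmatrix}
\begin{matrix} \text{MSB} \\ \\ \text{LSB} \end{matrix}$$

bit-test matrix · signal = location in binary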
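The bit-test idea can be sketched in a few lines for the noiseless single-spike case. The function names are hypothetical, the signal length is assumed to be a power of two, and the spike value is read from the sum of the signal, which stands in for one extra all-ones measurement row (an assumption added here so that spikes of value other than 1 are handled).

```python
def bit_test_matrix(n):
    """Row b holds bit b (MSB first) of each column index 0..n-1.

    Assumes n is a power of two.
    """
    bits = n.bit_length() - 1
    return [[(j >> (bits - 1 - b)) & 1 for j in range(n)] for b in range(bits)]


def locate_spike(B, s):
    """Return the index of the single nonzero entry of s (no noise)."""
    total = sum(s)  # spike value; plays the role of an all-ones measurement
    tests = [sum(b * v for b, v in zip(row, s)) for row in B]
    index = 0
    for t in tests:
        # A bit test returns the spike value iff that bit of the location is 1.
        index = (index << 1) | (1 if t == total else 0)
    return index


B1 = bit_test_matrix(8)
s = [0, 0, 1, 0, 0, 0, 0, 0]      # spike at position 2, as in the slide example
location = locate_spike(B1, s)     # measurements are (0, 1, 0), i.e. binary 010
```

With more than one spike or with noise, a single bit-test block is no longer enough, which is where the expander comes in: it isolates spikes into separate measurements before the bit tests are applied.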

One Sketch for All (MMDS 2006)


Theorem

Ψ is a (k, 1/8)-expander. $\Phi = \Psi \otimes_r B_1$ with $m \log n$ rows. Then, for any k-sparse x, given Φx, we can recover x in time $O(m \log^2 n)$.

With additional hash matrix and polylog(n) more rows in structured matrices, can approximately recover all x in time $O(k^2 \log^{O(1)} n)$ with same error guarantees as LP decoding.

Expander central element in

[Indyk ’08], [Gilbert-Strauss-Tropp-Vershynin ’06, ’07]

RIP(1) ≠ RIP(2)

Any binary sparse matrix which satisfies RIP(2) must have $\Omega(k^2)$ rows [Chandar ’07]

Gaussian random matrix with $m = O(k \log(n/k))$ rows (scaled) satisfies RIP(2) but not RIP(1):

$x^T = (0 \cdots 0 \;\; 1 \;\; 0 \cdots 0)$

$y^T = (1/k \cdots 1/k \;\; 0 \cdots 0)$

$\|x\|_1 = \|y\|_1$ but $\|Gx\|_1 \approx \sqrt{k}\, \|Gy\|_1$
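The factor $\sqrt{k}$ can be seen from a short expectation calculation. It assumes G has i.i.d. standard Gaussian entries before scaling; any common scaling cancels in the ratio.

```latex
\begin{aligned}
(Gz)_i &\sim \mathcal{N}\big(0, \|z\|_2^2\big)
  \;\Longrightarrow\;
  \mathbb{E}\,\|Gz\|_1 = m \sqrt{2/\pi}\; \|z\|_2 , \\
\|x\|_2 &= 1, \qquad
\|y\|_2 = \sqrt{k \cdot (1/k)^2} = 1/\sqrt{k} , \\
\frac{\mathbb{E}\,\|Gx\|_1}{\mathbb{E}\,\|Gy\|_1}
  &= \frac{\|x\|_2}{\|y\|_2} = \sqrt{k} .
\end{aligned}
```

So a Gaussian matrix tracks the $\ell_2$ norm of its input: two k-sparse vectors with the same $\ell_1$ norm are mapped to vectors whose $\ell_1$ norms differ by a factor of about $\sqrt{k}$, which rules out RIP(1) with a small δ.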

Expansion =⇒ RIP(1)

Theorem

(k, ε) expansion implies

$$(1 - 2\varepsilon)\, d\, \|x\|_1 \le \|Ax\|_1 \le d\, \|x\|_1$$

for any k-sparse x.

Proof.

Take any k-sparse x. Let S be the support of x.

Upper bound: $\|Ax\|_1 \le d\|x\|_1$ for any x

Lower bound:

most right neighbors unique

if all neighbors unique, would have $\|Ax\|_1 = d\|x\|_1$

can make argument robust

Generalization to RIP(p) similar but upper bound not trivial.
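One way to make the lower-bound argument robust is the usual collision-counting argument, sketched here. The ordering of the coordinates and the collision set $C$ are notation introduced for this sketch.

```latex
\begin{aligned}
&\text{Order the support so that } |x_1| \ge |x_2| \ge \cdots \text{ and scan the edges } (i,j),\ i \in S, \text{ in this order.} \\
&\text{Call an edge a collision if its right endpoint } j \text{ was already reached; let } C \text{ be the set of collisions.} \\[4pt]
&\#\{(i',j) \in C : i' \le i\} \;=\; d\,i - |N(\{1,\dots,i\})| \;\le\; \varepsilon\, d\, i
  \quad \text{(expansion of the prefix } \{1,\dots,i\}\text{)} \\
&\Longrightarrow\; \sum_{(i,j) \in C} |x_i| \;\le\; \varepsilon\, d\, \|x\|_1
  \quad \text{(magnitudes are non-increasing)} \\[4pt]
&\|Ax\|_1 \;\ge\; \sum_{(i,j) \notin C} |x_i| \;-\; \sum_{(i,j) \in C} |x_i|
  \;=\; d\,\|x\|_1 - 2 \sum_{(i,j) \in C} |x_i|
  \;\ge\; (1 - 2\varepsilon)\, d\, \|x\|_1 .
\end{aligned}
```

The first inequality in the last line applies the triangle inequality at each right node: the first edge reaching it contributes its full magnitude and every later (collision) edge can cancel at most its own magnitude.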

RIP(1) =⇒ LP decoding

$\ell_1$ uncertainty principle

Lemma

Let y satisfy Ay = 0. Let S be the set of k largest coordinates of y. Then

$$\|y_S\|_1 \le \alpha(\varepsilon)\, \|y\|_1 .$$

LP guarantee

Theorem

Consider any two vectors u, v such that for y = u − v we have Ay = 0, $\|v\|_1 \le \|u\|_1$. Let S be the set of k largest entries of u. Then

$$\|y\|_1 \le \frac{2}{1 - 2\alpha(\varepsilon)}\, \|u_{S^c}\|_1 .$$

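$\ell_1$ uncertainty principle

Proof.

(Sketch): Let $S_0 = S, S_1, \dots$ be coordinate sets of size k in decreasing order of magnitudes, and let $A'$ = A restricted to N(S).

[Figure: y partitioned into S, S_1, S_2, …; the rows of A indexed by N(S)]

On the one hand

$$\|A' y_S\|_1 = \|A y_S\|_1 \ge (1 - 2\varepsilon)\, d\, \|y_S\|_1 .$$

On the other

$$\begin{aligned}
0 = \|A' y\|_1
&\ge \|A' y_S\|_1 - \sum_{l \ge 1} \sum_{(i,j) \in E[S_l : N(S)]} |y_i| \\
&\ge (1 - 2\varepsilon)\, d\, \|y_S\|_1 - \sum_{l \ge 1} \big|E[S_l : N(S)]\big| \cdot \tfrac{1}{k} \|y_{S_{l-1}}\|_1 \\
&\ge (1 - 2\varepsilon)\, d\, \|y_S\|_1 - 2\varepsilon d k \sum_{l \ge 1} \tfrac{1}{k} \|y_{S_{l-1}}\|_1 \\
&\ge (1 - 2\varepsilon)\, d\, \|y_S\|_1 - 2\varepsilon d\, \|y\|_1 .
\end{aligned}$$

Rearranging gives $\|y_S\|_1 \le \frac{2\varepsilon}{1 - 2\varepsilon} \|y\|_1 = \alpha(\varepsilon)\, \|y\|_1$.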

Combinatorial decoding

[Figure: bit-test measurements casting good votes and bad votes for candidate indices]

Retain {index, val} if have > d/2 votes for index

d/2 + d/2 + d/2 = 3d/2 violates expander ⟹ each set of d/2 incorrect votes gives at most 2 incorrect indices

Decrease incorrect indices by factor 2 each iteration

Empirical results

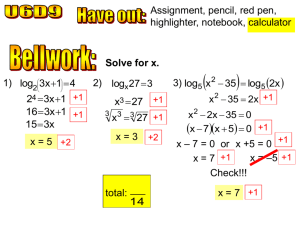

[Figure: two panels, “Probability of exact recovery, signed signals” and “Probability of exact recovery, positive signals”; axes δ and ρ, each ranging from 0 to 1]

Performance comparable to dense LP decoding

Image reconstruction (TV/LP wavelets), running times, error bounds available in [Berinde, Indyk ’08]

Summary: Structural Results

Geometric: RIP(2), Linear Programming

⇐⇒

Combinatorial: RIP(1), Weak Greedy

More specifically,

Expander, sparse binary RIP(1) matrix

Explicit constructions: $m = k\, 2^{(\log \log n)^{O(1)}}$

LP decoding (fast update time, sparse)

+ 2nd hasher (for noise only), bit tester: Combinatorial decoding (fast update time, fast recovery time, sparse)