Lecture 10: Music Analysis

advertisement

EE E6820: Speech & Audio Processing & Recognition

Lecture 10:

Music Analysis

Michael Mandel <mim@ee.columbia.edu>

Columbia University Dept. of Electrical Engineering

http://www.ee.columbia.edu/∼dpwe/e6820

April 17, 2008

1

Music transcription

2

Score alignment and musical structure

3

Music information retrieval

4

Music browsing and recommendation

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

1 / 40

Outline

1

Music transcription

2

Score alignment and musical structure

3

Music information retrieval

4

Music browsing and recommendation

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

2 / 40

Music Transcription

Basic idea: recover the score

Frequency

4000

3000

2000

1000

0

0

0.5

1

1.5

2

2.5

3

3.5

4

4.5

5

Time

Is it possible? Why is it hard?

I music students do it

. . . but they are highly trained; know the rules

Motivations

I

I

I

for study: what was played?

highly compressed representation (e.g. MIDI)

the ultimate restoration system. . .

Not trivial to turn a “piano roll” into a score

I

I

meter determination, rhythmic quantization

key finding, pitch spelling

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

3 / 40

Transcription framework

Recover discrete events to explain signal

Note events

? Observations

{tk, pk, ik}

I

synthesis

X[k,n]

analysis-by-synthesis?

Exhaustive search?

would be possible given exact note waveforms

. . . or just a 2-dimensional ‘note’ template?

I

note

template

2-D

convolution

I

but superposition is not linear in |STFT| space

Inference depends on all detected notes

I

I

is this evidence ‘available’ or ‘used’ ?

full solution is exponentially complex

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

4 / 40

Problems for transcription

Music is practically worst case!

I

note events are often synchronized

I

notes have harmonic relations (2:3 etc.)

→ defeats common onset

→ collision/interference between harmonics

I

variety of instruments, techniques, . . .

Listeners are very sensitive to certain errors

. . . and impervious to others

Apply further constraints

I

I

like our ‘music student’

maybe even the whole score!

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

5 / 40

Types of transcription

Full polyphonic transcriptions is hard, but maybe unnecessary

Melody transcription

I

I

the melody is produced to stand out

useful for query-by-humming, summarization, score following

Chord transcription

I

I

consider the signal holistically

useful for finding structure

Drum transcription

I

I

very different from other instruments

can’t use harmonic models

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

6 / 40

Spectrogram Modeling

Sinusoid model

as with synthesis, but signal is more

complex

Break tracks

I

need to detect new ‘onset’ at single

frequencies

0.06

0.04

0.02

0

0

0.5

1

1.5

3000

freq / Hz

I

2500

2000

1500

1000

500

0

0

1

2

3

4

time / s

time / s

Group by onset & common harmonicity

find sets of tracks that start around the

same time

+ stable harmonic pattern

I

Pass on to constraint-based filtering. . .

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

7 / 40

Searching for multiple pitches (Klapuri, 2005)

At each frame:

I

I

I

estimate dominant f0 by checking for harmonics

cancel it from spectrum

repeat until no f0 is prominent

Subtract

& iterate

audio

frame

level / dB

Harmonics enhancement

Predominant f0 estimation

f0 spectral smoothing

60

40

dB

dB

20

10

0

-20

0

20

30

0

1000

2000

3000

frq / Hz

20

10

10

0

0

0

-10

0

1000

2000

3000

4000

frq / Hz

0

0

100

200

300

1000

2000

3000

frq/Hz

f0 / Hz

Stop when no more prominent f0s

Can use pitch predictions as features for further processing

e.g. HMM

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

8 / 40

Probabilistic Pitch Estimates (Goto, 2001)

Generative probabilistic model of spectrum as weighted

combination of tone models at different fundamental

frequencies:

!

Z X

p(x(f )) =

w (F , m)p(x(f ) | F , m) dF

m

‘Knowledge’ in terms of tone

models + prior distributions for f0 :

p(x,1 | F,2, µ(t)(F,2))

c(t)(1|F,2)

(t)

c (2|F,2)

Tone Model (m=1)

p(x | F,1, µ(t)(F,1))

c(t)(4|F,2)

c(t)(2|F,1)

fundamental

frequency F+1200

Music analysis

c(t)(3|F,2)

c(t)(1|F,1)

F

EM (RT) results:

Michael Mandel (E6820 SAPR)

Tone Model (m=2)

p(x | F,2, µ(t)(F,2))

c(t)(3|F,1)

c(t)(4|F,1)

F+1902

F+2400

x [cent]

x [cent]

April 17, 2008

9 / 40

Generative Model Fitting (Cemgil et al., 2006)

Multi-level graphical model

I

I

I

I

rj,t : piano roll note-on indicators

sj,t : oscillator state for each harmonic

yj,t : time-domain realization of each note separately

yt : combined time-domain waveform (actual observation)

Incorporates knowledge of high-level musical structure

and low-level acoustics

Inference exact in some cases, approximate in others

I

special case of the generally intractable switching Kalman filter

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

10 / 40

Transcription as Pattern Recognition (Poliner and Ellis)

Existing methods use prior knowledge

about the structure of pitched notes

i.e. we know they have regular harmonics

What if we didn’t know that,

but just had examples and features?

I

the classic pattern recognition problem

Could use music signal as evidence for pitch class in a

black-box classifier:

Audio

I

Trained

classifier

p("C0"|Audio)

p("C#0"|Audio)

p("D0"|Audio)

p("D#0"|Audio)

p("E0"|Audio)

p("F0"|Audio)

nb: more than one class at once!

But where can we get labeled training data?

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

11 / 40

Ground Truth Data

Pattern classifiers need training data

i.e. need {signal, note-label} sets

i.e. MIDI transcripts of real music. . . already exists?

Make sound out of midi

I

I

“play” midi on a software synthesizer

record a player piano playing the midi

Make midi from monophonic tracks in a multi-track recording

I

for melody, just need a capella tracks

Distort recordings to create more data

I

I

resample/detune any of the audio and repeat

add in reverb or noise

Use a classifier to train a better classifier

I

I

alignment in the classification domain

run SVM & HMM to label, use to retrain SVM

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

12 / 40

Polyphonic Piano Transcription (Poliner and Ellis, 2007)

Training data from player piano

freq / pitch

Bach 847 Disklavier

A6

20

A5

10

A4

0

A3

A2

-10

A1

-20

0

1

2

3

4

5

6

7

8

level / dB

9

time / sec

Independent classifiers for each note

I

plus a little HMM smoothing

Nice results

. . . when test data resembles training

Algorithm

SVM

Klapuri & Ryynänen

Marolt

Michael Mandel (E6820 SAPR)

Errs

False Pos

False Neg

d0

43.3%

66.6%

84.6%

27.9%

28.1%

36.5%

15.4%

38.5%

48.1%

3.44

2.71

2.35

Music analysis

April 17, 2008

13 / 40

Outline

1

Music transcription

2

Score alignment and musical structure

3

Music information retrieval

4

Music browsing and recommendation

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

14 / 40

Midi-audio alignment

Pattern classifiers need training data

i.e. need {signal, note-label} sets

i.e. MIDI transcripts of real music. . . already exists?

Idea: force-align MIDI and original

I

I

can estimate time-warp relationships

recover accurate note events in real music!

Also useful by itself

I

I

comparing performances of the same piece

score following, etc.

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

15 / 40

Which features to align with?

Transcription

classifier

posteriors

Playback piano

Recorded Audio

chroma

STFT

Feature Analysis

Warped MIDI

tr

Reference MIDI

t (t )

w r

DTW

MIDI score

MIDI

to WAV

Warping

Function

tw

Synthesized Audio

chroma

^

t (t )

r w

STFT

Audio features: STFT, chromagram, classification posteriors

Midi features: STFT of synthesized audio, derived

chromagram, midi score

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

16 / 40

Alignment example

Dynamic time warp can overcome timing variations

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

17 / 40

Segmentation and structure

Find contiguous regions that are internally similar and

different from neighbors

e.g. “self-similarity” matrix (Foote, 1997)

time / 183ms frames

DYWMB - self similarity

1800

1600

1400

1200

1000

800

600

400

200

0

0

500

1000

1500

time / 183ms frames

100

200

300

400

500

2D convolution of checkerboard down diagonal

= compare fixed windows at every point

I

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

18 / 40

BIC segmentation

Use evidence from whole segment, not just local window

Do ‘significance test’ on every possible division of every

possible context

BIC :

log

L(X1 ; M1 )L(X2 ; M2 )

λ

≷ log(N)#(M)

L(X ; M0 )

2

Eventually, a boundary is found:

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

19 / 40

HMM segmentation

Recall, HMM Viterbi path is joint classification and

segmentation

e.g. for singing/accompaniment segmentation

But: HMM states need to be defined in advance

I

I

define a ‘generic set’ ? (MPEG7)

learn them from the piece to be segmented? (Logan and Chu,

2000; Peeters et al., 2002)

Result is ‘anonymous’ state sequence characteristic of

particular piece

U2-The_Joshua_Tree-01-Where_The_Streets_Have_No_Name 33677

17 17 17 17 17 17 17 17 17 17 17 17 17 17 17 17 17 17 17 17 17 17 17

17 17 17 17 17 17 17 17 17 17 17 17 17 17 1 7 17 17 17 17 17 17 17 17

17 17 17 17 17 17 17 17 17 17 11 11 11 11 11 11 11 11 11 11 11 11 11

11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11

11 1 1 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11 11

11 11 11 11 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15

15 15 15 15 15 15 15 15 15 15 15 1 5 15 15 15 15 15 15 15 15 15 15 15

15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15

15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 3 3 3 3 3 3 3 15

15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15 15

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

20 / 40

Finding Repeats

Music frequently repeats main phrases

Repeats give off-diagonal ridges in Similarity matrix (Bartsch

and Wakefield, 2001)

time / 183ms frames

DYWMB - self similarity

1800

1600

1400

1200

1000

800

600

400

200

0

0

500

1000

1500

time / 183ms frames

Or: clustering at phrase-level . . .

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

21 / 40

Music summarization

What does it mean to ‘summarize’ ?

I

I

I

compact representation of larger entity

maximize ‘information content’

sufficient to recognize known item

So summarizing music?

short version e.g. < 10% duration (< 20s for pop)

sufficient to identify style, artist

e.g. chorus or ‘hook’ ?

I

I

Why?

I

I

I

browsing existing collection

discovery among unknown works

commerce. . .

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

22 / 40

Outline

1

Music transcription

2

Score alignment and musical structure

3

Music information retrieval

4

Music browsing and recommendation

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

23 / 40

Music Information Retrieval

Text-based searching concepts for music?

I

I

I

I

“Google of music”

finding a specific item

finding something vague

finding something new

Significant commercial interest

Basic idea: Project music into a space where

neighbors are “similar”

(Competition from human labeling)

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

24 / 40

Music IR: Queries & Evaluation

What is the form of the query?

Query by humming

I

I

considerable attention, recent demonstrations

need/user base?

Query by noisy example

I

I

I

“Name that tune” in a noisy bar

Shazam Ltd.: commercial deployment

database access is the hard part?

Query by multiple examples

I

“Find me more stuff like this”

Text queries? (Whitman and Smaragdis, 2002)

Evaluation problems

I

I

requires large, shareable music corpus!

requires a well-defined task

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

25 / 40

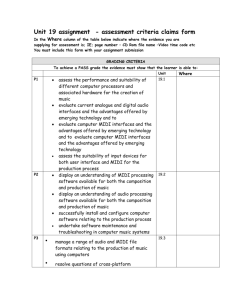

Genre Classification (Tzanetakis et al., 2001)

Classifying music into genres would get you some way towards

finding “more like this”

Genre labels are problematic, but they exist

Real-time visualization of “GenreGram”:

I

I

I

9 spectral and 8 rhythm features every 200ms

15 genres trained on 50 examples each

single Gaussian model → ∼ 60% correct

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

26 / 40

Artist Classification (Berenzweig et al., 2002)

Artist label as available stand-in for genre

Train MLP to classify frames among 21 artists

Using only “voice” segments:

I

Song-level accuracy improves 56.7% → 64.9%

Track 117 - Aimee Mann (dynvox=Aimee, unseg=Aimee)

true voice

Michael Penn

The Roots

The Moles

Eric Matthews

Arto Lindsay

Oval

Jason Falkner

Built to Spill

Beck

XTC

Wilco

Aimee Mann

The Flaming Lips

Mouse on Mars

Dj Shadow

Richard Davies

Cornelius

Mercury Rev

Belle & Sebastian

Sugarplastic

Boards of Canada

0

50

100

150

200

time / sec

Track 4 - Arto Lindsay (dynvox=Arto, unseg=Oval)

true voice

Michael Penn

The Roots

The Moles

Eric Matthews

Arto Lindsay

Oval

Jason Falkner

Built to Spill

Beck

XTC

Wilco

Aimee Mann

The Flaming Lips

Mouse on Mars

Dj Shadow

Richard Davies

Cornelius

Mercury Rev

Belle & Sebastian

Sugarplastic

Boards of Canada

0

10

Michael Mandel (E6820 SAPR)

20

30

40

Music analysis

50

60

70

80

time / sec

April 17, 2008

27 / 40

Textual descriptions

Classifiers only know about the sound of the music

not its context

So collect training data just about the sound

I

I

Have humans label short, unidentified clips

Reward for being relevant and thorough

→ MajorMiner Music game

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

28 / 40

Tag classification

Use tags to train classifiers

I

‘autotaggers’

Treat each tag separately, easy

to evaluate performance

No ‘true’ negatives

I

approximate as absence of

one tag in the presence of

others

rap

hip hop

saxophone

house

techno

r&b

jazz

ambient

beat

electronica

electronic

dance

punk

fast

rock

country

strings

synth

distortion

guitar

female

drum machine

pop

soft

piano

slow

british

male

solo

80s

instrumental

keyboard

vocal

bass

voice

drums

0.4

Michael Mandel (E6820 SAPR)

Music analysis

0.5

0.6

0.7

0.8

0.9

Classification accuracy

April 17, 2008

1

29 / 40

Outline

1

Music transcription

2

Score alignment and musical structure

3

Music information retrieval

4

Music browsing and recommendation

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

30 / 40

Music browsing

Most interesting problem in music IR is finding new music

I

is there anything on myspace that I would like?

Need a feature space where music/artists are arranged

according to perceived similarity

Particularly interested in little-known bands

I

I

little or no ‘community data’ (e.g. collaborative filtering)

audio-based measures are critical

Also need models of personal preference

I

I

where in the space is stuff I like

relative sensitivity to different dimensions

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

31 / 40

Unsupervised Clustering (Rauber et al., 2002)

Map music into an auditory-based space

Build ‘clusters’ of nearby → similar music

I

“Self-Organizing Maps” (Kohonen)

Look at the results:

ga-iwantit

ga-iwantit

verve-bittersweetga-moneymilk

ga-moneymilk

verve-bittersweet

party

party

backforgood

backforgood

bigworld

bigworld

nma-poison

nma-poison

whenyousay br-anesthesia

br-anesthesia

whenyousay

youlearn

youlearn

br-punkrock

br-punkrock

limp-lesson

limp-lesson

limp-stalemate

limp-stalemate

addict

addict

ga-lie

ga-lie

ga-innocent

ga-innocent

pinkpanther

pinkpanther

ga-time

ga-time

vm-classicalgas

vm-classicalgas

vm-toccata

vm-toccata

d3-kryptonite

d3-kryptonite

fbs-praise

ga-anneclaire

ga-anneclaire

fbs-praise

limp-rearranged korn-freak

limp-rearranged

korn-freak limp-nobodyloves

ga-heaven

limp-nobodyloves ga-heaven

limp-show

limp-show

limp-99

pr-neverenough

pr-neverenoughlimp-wandering

limp-wandering

limp-99

pr-deadcell rem-endoftheworld

pr-deadcell

pr-binge

pr-binge

wildwildwestrem-endoftheworld

wildwildwest

“Islands of music”

I

quantitative evaluation?

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

32 / 40

Artist Similarity

Artist classes as a basis for overall similarity:

Less corrupt than ‘record store genres’ ?

paula_abdul

abba

roxette

toni_braxton

westlife

aaron_carter

erasure

lara_fabian

jessica_simpson

mariah_carey

new_order

janet_jackson

a_ha

whitney_houston

eiffel_65

celine_dionpet_shop_boys

christina_aguilera

aqua

lauryn_hill

duran

y_spears

sade soft_cell

all_saints

backstreet_boys

madonna prince

spice_girlsbelinda_carlisle

ania_twain

nelly_furtado

jamiroquai

annie_lennox

cultur

ace_of_base

seal savage_garden

matthew_sweet

faith_hill

tha_mumba

But: what is similarity

between artists?

I

pattern recognition

systems give a number. . .

Need subjective ground truth:

Collected via web site

I

www.musicseer.com

Results: 1800 users, 22,500 judgments

collected over 6 months

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

33 / 40

Anchor space

A classifier trained for one artist (or genre) will respond

partially to a similar artist

A new artist will evoke a particular pattern of responses over a

set of classifiers

We can treat these classifier outputs as a new feature space in

which to estimate similarity

n-dimensional

vector in "Anchor

Space"

Anchor

Anchor

p(a1|x)

Audio

Input

(Class i)

AnchorAnchor

p(a2n-dimensional

|x)

vector in "Anchor

Space"

Anchor

Audio

Input

(Class j)

GMM

Modeling

Similarity

Computation

p(a1|x)p(an|x)

p(a2|x)

Anchor

Conversion to Anchorspace

GMM

Modeling

KL-d, EMD, etc.

p(an|x)

Conversion to Anchorspace

“Anchor space” reflects subjective qualities?

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

34 / 40

Playola interface ( http://www.playola.org )

Browser finds closest matches to single tracks

or entire artists in anchor space

Direct manipulation of anchor space axes

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

35 / 40

Tag-based search ( http://majorminer.com/search )

Two ways to search MajorMiner tag data

Use human labels directly

I

I

(almost) guaranteed to be relevant

small dataset

Use autotaggers trained on human labels

I

I

can only train classifiers for labels with enough clips

once trained, can label unlimited amounts of music

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

36 / 40

Music recommendation

Similarity is only part of recommendation

I need familiar items to build trust

. . . in the unfamiliar items (serendipity)

Can recommend based on different amounts of history

I

I

none: particular query, like search

lots: incorporate every song you’ve ever listened to

Can recommend from different music collections

I

I

personal music collection: “what am I in the mood for?”

online databases: subscription services, retail

Appropriate music is a subset of good music?

Transparency builds trust in recommendations

See (Lamere and Celma, 2007)

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

37 / 40

Evaluation

Are recommendations good or bad?

Subjective evaluation is the ground truth

. . . but subjects don’t know the bands being recommended

I can take a long time to decide if a recommendation is good

Measure match to similarity judgments

e.g. musicseer data

Evaluate on “canned” queries, use-cases

I

I

concrete answers: precision, recall, area under ROC curve

applicable to long-term recommendations?

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

38 / 40

Summary

Music transcription

I

hard, but some progress

Score alignment and musical structure

I

making good training data takes work, has other benefits

Music IR

I

alternative paradigms, lots of interest

Music recommendation

I

potential for biggest impact, difficult to evaluate

Parting thought

Data-driven machine learning techniques are valuable in each case

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

39 / 40

References

A. P. Klapuri. A perceptually motivated multiple-f0 estimation method. In IEEE Workshop on Applications of

Signal Processing to Audio and Acoustics, pages 291–294, 2005.

M. Goto. A predominant-f¡sub¿0¡/sub¿ estimation method for cd recordings: Map estimation using em algorithm

for adaptive tone models. In IEEE Intl. Conf. on Acoustics, Speech, and Signal Processing, volume 5, pages

3365–3368 vol.5, 2001.

A. T. Cemgil, H. J. Kappen, and D. Barber. A generative model for music transcription. IEEE Transactions on

Audio, Speech, and Language Processing, 14(2):679–694, 2006.

G. E. Poliner and D. P. W. Ellis. A discriminative model for polyphonic piano transcription. EURASIP J. Appl.

Signal Process., pages 154–154, January 2007.

J. Foote. A similarity measure for automatic audio classification. In Proc. AAAI Spring Symposium on Intelligent

Integration and Use of Text, Image, Video, and Audio Corpora, March 1997.

B. Logan and S. Chu. Music summarization using key phrases. In IEEE Intl. Conf. on Acoustics, Speech, and

Signal Processing, volume 2, pages 749–752, 2000.

G. Peeters, A. La Burthe, and X. Rodet. Toward automatic music audio summary generation from signal analysis.

In Proc. Intl. Symp. Music Information Retrieval, October 2002.

M. A. Bartsch and G. H. Wakefield. To catch a chorus: Using chroma-based representations for audio

thumbnailing. In IEEE Workshop on Applications of Signal Processing to Audio and Acoustics, October 2001.

B. Whitman and P. Smaragdis. Combining musical and cultural features for intelligent style detection. In Proc.

Intl. Symp. Music Information Retrieval, October 2002.

A. Rauber, E. Pampalk, and D. Merkl. Using psychoacoustic models and self-organizing maps to create a

hierarchical structuring of music by musical styles. In Proc. Intl. Symp. Music Information Retrieval, October

2002.

G. Tzanetakis, G. Essl, and P. Cook. Automatic musical genre classification of audio signals. In Proc. Intl. Symp.

Music Information Retrieval, October 2001.

A. Berenzweig, D. Ellis, and S. Lawrence. Using voice segments to improve artist classification of music. In Proc.

AES-22 Intl. Conf. on Virt., Synth., and Ent. Audio., June 2002.

P. Lamere and O. Celma. Music recommendation tutorial. In Proc. Intl. Symp. Music Information Retrieval,

September 2007.

Michael Mandel (E6820 SAPR)

Music analysis

April 17, 2008

40 / 40