
DEBORAH

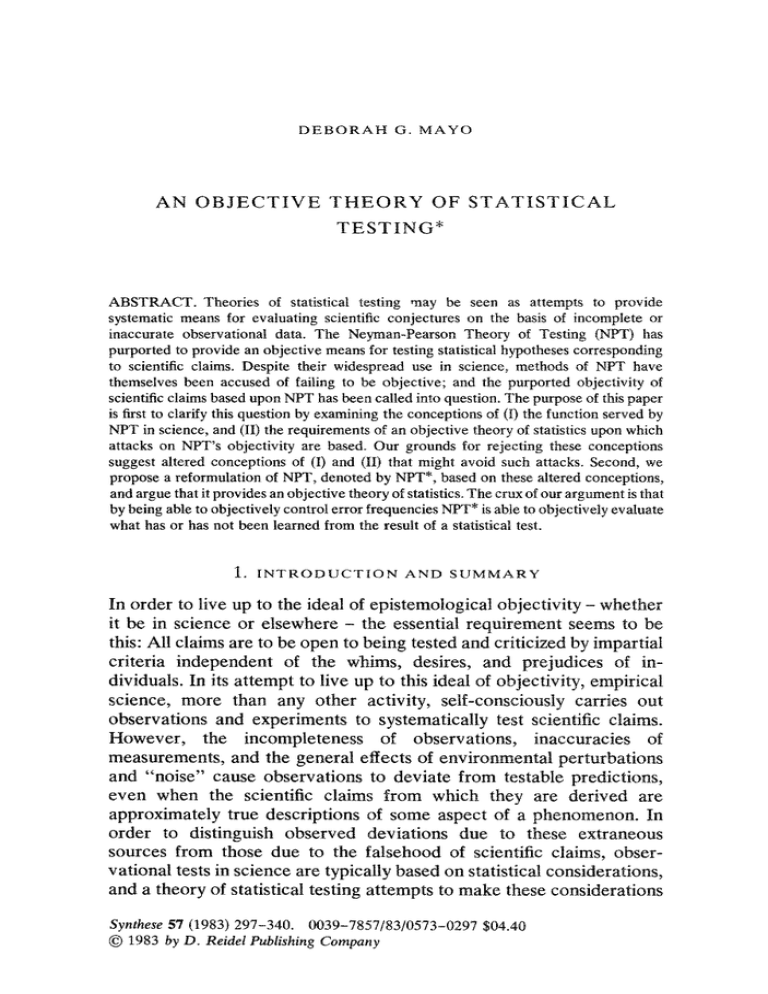

AN O B J E C T I V E

G. M A Y O

T H E O R Y OF S T A T I S T I C A L

TESTING*

ABSTRACT. Theories of statistical testing may be seen as attempts to provide

systematic means for evaluating scientific conjectures on the basis of incomplete or

inaccurate observational data. The Neyman-Pearson Theory of Testing (NPT) has

purported to provide an objective means for testing statistical hypotheses corresponding

to scientific claims. Despite their widespread use in science, methods of NPT have

themselves been accused of failing to be objective; and the purported objectivity of

scientific claims based upon NPT has been called into question. The purpose of this paper

is first to clarify this question by examining the conceptions of (I) the function served by

NPT in science, and (II) the requirements of an objective theory of statistics upon which

attacks on NPT's objectivity are based. Our grounds for rejecting these conceptions

suggest altered conceptions of (I) and (II) that might avoid such attacks. Second, we

propose a reformulation of NPT, denoted by NPT*, based on these altered conceptions,

and argue that it provides an objective theory of statistics. The crux of our argument is that

by being able to objectively control error frequencies NPT* is able to objectively evaluate

what has or has not been learned from the result of a statistical test.

1. INTRODUCTION AND SUMMARY

In order to live up to the ideal of epistemological objectivity - whether

it be in science or elsewhere - the essential requirement seems to be

this: All claims are to be open to being tested and criticized by impartial

criteria independent of the whims, desires, and prejudices of individuals. In its attempt to live up to this ideal of objectivity, empirical

science, more than any other activity, self-consciously carries out

observations and experiments to systematically test scientific claims.

However, the incompleteness of observations, inaccuracies of

measurements, and the general effects of environmental perturbations

and "noise" cause observations to deviate from testable predictions,

even when the scientific claims from which they are derived are

approximately true descriptions of some aspect of a phenomenon. In

order to distinguish observed deviations due to these extraneous

sources from those due to the falsehood of scientific claims, observational tests in science are typically based on statistical considerations,

and a theory of statistical testing attempts to make these considerations

Synthese 57 (1983) 297-340. 0039-7857/83/0573-0297 $04.40

© 1983 by D. Reidel Publishing Company


precise. The procedures of the Neyman-Pearson Theory of Testing

(which we abbreviate as NPT) have been widely used in science in the

last fifty years to carry out such statistical tests in a purportedly

objective manner. However, the procedures of NPT have themselves

been accused of failing to be objective; for they appear permeated with

the very subjective, personal, arbitrary factors that the ideal of objectivity demands one exclude. As such, the purported objectivity of

scientific claims based upon NPT has been called into question; and it is

this question around which our discussion revolves. That is, we want to

ask the following: Can NPT provide objective scientific claims? Or: Is

NPT an objective theory of statistical testing?

Despite the serious ramifications of negative answers to this question,

the increasingly common arguments upon which such negative answers

are based have gone largely unanswered by followers of NPT. We

attempt to rectify this situation in this paper by clarifying the question

of the objectivity of NPT, setting out a reconstruction of NPT, and

defending its scientific objectivity.

We begin by giving a general framework for relating statistical tests

to scientific inquiries, and for spelling out the formal components of

NPT and comparing them to those of subjective Bayesian tests. The

question of the objectivity of NPT then emerges as a "metastatistical"

question concerning the relationships between the formal statistical

inquiry and the empirical scientific one. One's answer to this question is

seen to depend on one's conception of (I) the function served by NPT in

science, and (II) the requirements of an objective theory of statistics.

Firstly, the most serious attacks on the objectivity of NPT by philosophers and statisticians are seen to be based on the view that NPT

functions to provide rules for using data to decide how to act with

respect to a scientific claim. Secondly, they are seen to view only that

which is given by purely formal logical principles as truly objective.

Since these two conceptions are found in the typical formulations of

NPT, such attacks are not without some justification. Nevertheless, we

reject these conceptions, and our grounds for doing so are used to

suggest the sort of altered conceptions of (I) and (II) that avoid

such attacks. To defend NPT against such objections we propose a

reformulation, denoted by NPT*, which is based on these altered

conceptions.

According to NPT*, statistical tests function in science as a means of

learning about variable phenomena on the basis of limited data. This


function is accomplished by detecting discrepancies between the (approximately) correct models of a phenomenon and hypothesized ones.

As such, we hold that objective learning from a statistical test result can

be accomplished if one can critically evaluate what it does and does not

detect or indicate about the scientific phenomenon. Moreover, by

clearly distinguishing the formal statistical result from its (metastatistical) critical evaluation, we can deny that any arbitrariness in specifying

the test necessarily prevents this evaluation from being objective. Most

importantly, we suggest how NPT* is able to objectively interpret the

scientific import of its statistical test results by "subtracting out" the

influences of arbitrary factors. NPT* permits these influences to be

understood (and hence "subtracted out" or controlled) by making use

of frequency relations between test results and underlying discrepancies

(i.e., by making use of error frequencies).

Focusing on a common type of statistical test, we introduce two

functions that allow this appeal to frequency relations to be made

explicit. We show how, by using these functions, NPT* allows for an

objective assessment of what has been learned from a specific test

result. What is more, the ability to make objective (i.e., testable)

assertions about these probabilistic relations (i.e., error probabilities or

rates) is what fundamentally distinguishes NPT from other statistical

inference theories. So, if we are correct, it is this feature that enables

NPT* to provide (and prevents other approaches from providing) an objective

theory of statistical testing. While our focus is limited to the problem of

providing a scientifically objective theory of statistical testing, this

problem (and hopefully our suggested strategy for its resolution) is

relevant for the more general problems that have increasingly led

philosophers to the pessimistic conclusion that science is simply a

matter of subjective, psychological preference (or "conversion")

governed by little more than the whims, desires, and chauvinistic

fantasies of individuals.

2. A THEORY OF STATISTICAL TESTING

Theories of statistical testing may be seen as attempts to provide

systematic means for dealing with a very common problem in scientific

inquiries. The problem is how to generate and analyze observational

data to test a scientific claim or hypothesis when it is known that such


data need not precisely agree with the claim, even if the claim correctly

describes the phenomenon of interest. One reason for these deviations

is that the hypothetical claim of interest may involve theoretical

concepts that do not exactly match up with things that can be directly

observed. Another is that observational data are necessarily finite and

discrete, while scientific hypotheses may refer to an infinite number of

cases and involve continuous quantities, such as weight and temperature. As such, the accuracy, precision and reliability of such data is

limited by numerous distortions and "noise" introduced by various

intermediary processes of observation and measurement. A third

reason is that the scientific hypothesis may itself concern probabilistic

phenomena, as in genetics and quantum mechanics. However, whether

it is due to the incompleteness and inaccuracies of observation and

measurement, or to the genuine probabilistic nature of the scientific

hypothesis, the deviations, errors, and fluctuations found in observational data often follow very similar patterns; and statistical models

provide standard paradigms for representing such variable patterns. By

modelling the scientific inquiry statistically, it is possible to formally

model (A) the scientific claims $\mathcal{C}$ in terms of statistical hypotheses about a population; (B) the observational analysis of the statistical hypotheses in terms of experimental testing rules; and (C) the actual observation $\mathcal{O}$

in terms of a statistical sample from the population. It will be helpful to

spell out the formal components of these three models or structures of a

statistically modelled inquiry.

2.1. Models of Statistical Testing

(A) Statistical Models of Hypotheses: The scientific claim is 'translated'

into a claim about a certain population of objects, where this population

is known to vary with respect to a quantity of interest, represented by

X. For example, the population may be all houseflies in existence and

the quantity X, their winglength. The variability of X in the population

is modelled by viewing X as a random variable which takes on different

values with given probabilities; that is, X is viewed as having a

probability distribution P. The distribution P is governed by one or

more properties of the population called parameters; and hypotheses

about the values of such parameters are statistical hypotheses. An

example of a parameter is the average wing length of houseflies, and for


simplicity we will focus on cases where the statistical hypotheses of

interest concern a single such parameter, represented by $\theta$, where $\theta$ is the average (i.e., the mean) of a quantity X. The set of possible values of $\theta$ to be considered, called the parameter space, $\Omega$, is specified and each statistical hypothesis is associated with some subset of $\Omega$. The class of these statistical hypotheses can be represented by means of a probability model $M(\theta)$ which describes X as belonging to the class of probability distributions $P(X \mid \theta)$ where $\theta$ ranges over $\Omega$. That is,

$$M(\theta) = [P(X \mid \theta),\ \theta \in \Omega].$$

(B) Statistical Models of Experimental Tests: The actual observational

inquiry is modelled as observing n independent random variables

$X_1, X_2, \ldots, X_n$, where each $X_i$ is distributed according to $M(\theta)$, the population distribution. Each experimental outcome can be represented as an n-tuple of values of these random variables, i.e., $x_1, x_2, \ldots, x_n$, which may be abbreviated as $\langle x_n \rangle$. The set of possible experimental outcomes, the sample space $\mathcal{X}$, consists of the possible n-tuples of values. On the basis of the population distribution $M(\theta)$ it is possible to derive, by means of probability theory, the probability of the possible experimental outcomes, i.e., $P(\langle x_n \rangle \mid \theta)$, where $\langle x_n \rangle \in \mathcal{X}$, $\theta \in \Omega$. We also include in an experimental testing model the specification of a testing rule T that maps outcomes in $\mathcal{X}$ to various claims about the models of hypotheses $M(\theta)$; i.e., $T: \mathcal{X} \to M(\theta)$. That is, an experimental testing model $ET(\mathcal{X}) = [\mathcal{X}, P(\langle x_n \rangle \mid \theta), T]$.

(C) Statistical Models of Data (from the experiment): An empirical

observation $\mathcal{O}$ is modelled as a particular element of $\mathcal{X}$; further modelling of $\langle x_n \rangle$ corresponds to various sample properties of interest, such as the sample average. Such a data model may be represented by $[\langle x_n \rangle, s(\langle x_n \rangle)]$, where in the simplest case $s(\langle x_n \rangle) = \langle x_n \rangle$.

We may consider the indicated components of these three models to

be the basic formal elements for setting out theories of testing; many

additional elements, built upon these, are required for different testing

theories. Figure 2.1 sketches the approximate relationships between the

scientific inquiry and the three models of a statistical testing inquiry.

The nature of the (metastatistical) relationships represented by rays

(1)-(3), and their relevance to our problem, will become clarified

throughout our discussion.


[Fig. 2.1. Diagram relating the Scientific Inquiry (or problem), "How to generate and analyze empirical observation $\mathcal{O}$ to evaluate claim $\mathcal{C}$", to the Statistical Inquiry: Testing Hypotheses, by three rays: (1) to the Model of Hypotheses $M(\theta)$, (2) to the Model of Experimental Test $ET(\mathcal{X})$, and (3) to the Model of Data $s(\langle x_n \rangle)$.]

2.2. Subjective Bayesian Tests vs. 'Objective' Neyman-Pearson Tests

Using the models of data, experimental tests, and hypotheses, different

theories of statistical testing can be compared in terms of the different

testing rules each recommends for mapping the observed statistical

data to various assertions about statistical hypotheses in $M(\theta)$. The subjective Bayesian theory, for example, begins by specifying not only a complete set of statistical hypotheses $H_0, \ldots, H_k$ about values of $\theta$ in $M(\theta)$, but also a prior probability assignment $P(H_i)$ to each hypothesis $H_i$, where probability is construed as a measure of one's degree of belief in the hypotheses. A subjective Bayesian test consists of mapping these prior probabilities, together with the sample data s, into a final probability assignment in a hypothesis of interest $H_i$, called a posterior probability, by way of Bayes' Theorem. The theorem states:

$$P(H_i \mid s) = \frac{P(s \mid H_i)\,P(H_i)}{\sum_{j=0}^{k} P(s \mid H_j)\,P(H_j)} \qquad (i = 0, \ldots, k)$$

The experimental test may use the measure of posterior probability $P(H_i \mid s)$ to reject $H_i$ if the value is too small or to calculate a more

elaborate test incorporating losses.
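
The mapping from prior probabilities and sample data to posterior probabilities can be sketched in a few lines of Python. This is only an illustration of Bayes' Theorem as stated above: the function name `posterior` and all of the numbers are hypothetical and are not drawn from the paper.

```python
def posterior(priors, likelihoods):
    """Return P(H_i | s) for each hypothesis H_i via Bayes' Theorem.

    priors[i]      plays the role of P(H_i)
    likelihoods[i] plays the role of P(s | H_i)
    """
    if len(priors) != len(likelihoods):
        raise ValueError("need exactly one likelihood per hypothesis")
    joint = [p * l for p, l in zip(priors, likelihoods)]
    total = sum(joint)  # denominator: sum over j of P(s | H_j) P(H_j)
    if total == 0:
        raise ValueError("the data have zero probability under every hypothesis")
    return [j / total for j in joint]


# Hypothetical priors and likelihoods for three hypotheses H_0, H_1, H_2.
likelihoods = [0.10, 0.40, 0.70]
post = posterior([0.5, 0.3, 0.2], likelihoods)

# A hypothesis assigned prior probability zero keeps posterior probability
# zero, whatever the data say.
zero_prior_post = posterior([0.0, 0.6, 0.4], likelihoods)

print(post)
print(zero_prior_post)
```

The second call shows how strongly the result depends on the prior assignment: with a prior of zero, no likelihood can raise the posterior above zero.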


In other words, the subjective Bayesian model translates the scientific

problem into one requiring a means for quantifying one's subjective

opinion in various hypotheses about $\theta$, and using observed data to

coherently change one's degree of belief in them. One can then decide

to accept or reject hypotheses according to how strongly one believes

in them and according to various personal losses one associates with

incorrectly doing so. In contrast, the leaders of the so-called 'objective'

tradition in statistics (i.e., Fisher, Neyman, and Pearson) deny that a

scientist would want to multiply his initial subjective degrees of belief in

hypotheses (assuming he had them) with the 'objective' probabilities

provided by the experimental data to reach a final evaluation of the

hypotheses. For the result of doing so is that one's final evaluation is

greatly colored by the initial subjective degree of belief; and such initial

degrees of belief may simply reflect one's ignorance or prejudice rather

than what is true. (In an extreme case where one's prior probability is

zero, one can never get a nonzero posterior probability regardless of the

amount of data gathered.) Hence, the idea of testing a scientific claim

by ascertaining so and so's degree of belief in it runs counter to our idea

of scientific objectivity. While the subjective Bayesian test may be

appropriate for making personal decisions, when one is only interested

in being informed of what one believes to be the case, it does not seem

appropriate for impartially testing whether beliefs accord with what

really is the case.

In contrast, the Neyman-Pearson Theory of Testing, abbreviated

NPT, sought to provide an objective means for testing. As Neyman

(1971, p. 277) put it, "I find it desirable to use procedures, the validity

of which does not depend upon the uncertain properties of the

population." And since the hypotheses under test are uncertain, NPT

rejects the idea of basing the result of the test on one's prior conceptions about them. Rather, the only piece of information to be formally

mapped by the testing rules of NPT is the sample data. Since our focus

will be on NPT, it will be helpful to spell out its components in some

detail.

(A) NPT begins by specifying, in the hypotheses model $M(\theta)$, the region of the parameter space $\Omega$ to be associated with the null hypothesis H, i.e., $\Omega_H$ and the region to be associated with an alternative hypothesis J, i.e., $\Omega_J$. The two possible results of a test of H are: reject H (and accept J)

or accept H (and reject J).


(B) The experimental testing model $ET(\mathcal{X})$ specifies, before the sample is observed, which of the outcomes in $\mathcal{X}$ should be taken to reject H. This set of outcomes forms the rejection or critical region, CR, and it is specified so that, regardless of the true value of $\theta$, the test has appropriately small probabilities of leading to erroneous conclusions. The two errors that might arise are rejecting H when it is true, the type I error; and accepting H when it is false, i.e., when J is true, the type II error. The probabilities of making type I and type II errors, called error probabilities, are denoted by $\alpha$ and $\beta$ respectively. NPT is able to control the error probabilities by specifying (i) a test statistic S, which is a function of $\mathcal{X}$, and whose distribution is derivable from a given hypothesized value for $\theta$ and (ii) a testing rule T indicating which of the possible values of S fall in region CR. Since, from (i), it is possible to calculate the probability that $S \in \mathrm{CR}$ under various hypothesized values of $\theta$, it is possible to specify a testing rule T so that $P(S \in \mathrm{CR} \mid \theta \in \Omega_H)$ is no greater than $\alpha$ and $1 - P(S \in \mathrm{CR} \mid \theta \in \Omega_J)$ is no greater than $\beta$. Identifying the events {T rejects H} and {$S \in \mathrm{CR}$}, it follows that

$$P(T \text{ rejects } H \mid H \text{ is true}) = P(S \in \mathrm{CR} \mid \theta \in \Omega_H) \le \alpha$$

$$P(T \text{ accepts } H \mid J \text{ is true}) = 1 - P(S \in \mathrm{CR} \mid \theta \in \Omega_J) \le \beta.$$

Since these two error probabilities cannot be simultaneously minimized, NPT instructs one to first select $\alpha$, called the size or the significance level of the test, and then choose the test with an appropriately small $\beta$. However, if alternative J consists of a set of points, i.e., if it is composite, the value of $\beta$ will vary with different values in $\Omega_J$. The "best" NPT test of a given size $\alpha$ (if it exists) is the one which at the same time minimizes the value of $\beta$ (i.e., the probability of type II errors) for all possible values of $\theta$ under the alternative J.
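
The claim that the two error probabilities cannot be simultaneously minimized can be made concrete with a small Python sketch. It assumes, purely for illustration, a one-sided test of a normal mean with known standard deviation that rejects H when the sample mean is large; the function name `type_ii_error` and the values of `theta0`, `theta_alt`, `sigma`, and `n` are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution N(0, 1)


def type_ii_error(alpha, theta0, theta_alt, sigma, n):
    """beta at theta_alt for the size-alpha test rejecting H on large sample means."""
    se = sigma / n ** 0.5  # standard deviation of the sample mean
    cutoff = theta0 + STD_NORMAL.inv_cdf(1 - alpha) * se
    # H is accepted whenever the sample mean falls below the cutoff.
    return NormalDist(theta_alt, se).cdf(cutoff)


# Hypothetical setting: H: theta = 0, one point of J: theta = 0.5.
alphas = [0.10, 0.05, 0.01]
betas = [type_ii_error(a, theta0=0.0, theta_alt=0.5, sigma=1.0, n=25) for a in alphas]

for a, b in zip(alphas, betas):
    print(f"alpha = {a:.2f}  beta = {b:.3f}")
```

With the sample size held fixed, each reduction in $\alpha$ moves the cutoff further from the hypothesized mean and so raises $\beta$ at the chosen alternative, which is why one error probability is fixed first and the other is then made as small as possible.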

Error probabilities are deemed objective in that they refer, not to

subjective degrees of belief, but to frequencies of experimental outcomes in sequences of (similar or very different) applications of a given

experimental test. Since within the context of NPT parameter $\theta$ is

viewed as fixed, hypotheses about it are viewed as either true or false.

Thus, it makes no sense to assign them any probabilities other than 0 or

1; that is, a hypothesis is true either 100% of the time or 0% of the time,

according to whether it is true or false. But the task of statistical tests

arises in precisely those cases where the truth or falsity of a hypothesis is

unknown. The sense in which NPT nevertheless accomplishes this task


'objectively' is that it controls the error probabilities of tests regardless

of what the true, but unknown, value of $\theta$ is.
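
The frequency reading of error probabilities can be illustrated by simulating repeated applications of one fixed test. The sketch below is an assumption-laden illustration, not part of NPT itself: it uses a hypothetical one-sided test of a normal mean with known standard deviation, and the function name `rejection_frequency` and all parameter values are invented for the example.

```python
import random
from statistics import NormalDist, fmean


def rejection_frequency(theta_true, theta0, sigma, n, alpha, trials, seed=0):
    """Relative frequency with which the test rejects H over repeated samples."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    se = sigma / n ** 0.5
    cutoff = theta0 + NormalDist().inv_cdf(1 - alpha) * se
    rejections = 0
    for _ in range(trials):
        sample_mean = fmean(rng.gauss(theta_true, sigma) for _ in range(n))
        if sample_mean >= cutoff:
            rejections += 1
    return rejections / trials


# Hypothetical test of H: theta = 0 against J: theta > 0, size 0.05.
settings = dict(theta0=0.0, sigma=1.0, n=20, alpha=0.05, trials=5000)
freq_under_h = rejection_frequency(theta_true=0.0, **settings)  # erroneous rejections
freq_under_j = rejection_frequency(theta_true=0.5, **settings)  # correct rejections

print(f"rejection frequency when H is true: {freq_under_h:.3f}")
print(f"rejection frequency when theta = 0.5: {freq_under_j:.3f}")
```

When H is true the observed rejection frequency should settle near the chosen size, and it is a statement of this kind, about the behavior of the rule over a series of applications, that the error probabilities of NPT express.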

The concern with objective error probabilities is what fundamentally

distinguishes NPT from other theories of statistical testing. The

Bayesian, for example, is concerned only with the particular experimental outcome and the particular application of its testing rule (i.e.,

Bayes' Theorem); while error probabilities concern properties of T in a

series of applications. As such, the Bayesian is prevented from ensuring

that his tests are appropriately reliable. In order to clarify the relationship between the choice of $\alpha$ and the specification of the critical region

of NPT, we will consider a simple, but very widespread, class of

statistical tests.

2.3. An Illustration: A NPT "Best" One Sided Test $T^+$

Suppose the model $M(\theta)$ is the class of normal distributions $N(\theta, \sigma)$ with mean $\theta$ and standard deviation $\sigma$, where $\sigma$ is assumed to be known, and $\theta$ may take any positive value. It is supposed that some quantity X varies according to this distribution, and it is desired to test whether the value of $\theta$ equals some value $\theta_0$ or some greater value. Such a test involves setting out in $M(\theta)$ the following null and alternative hypotheses:

2.3(a): Null Hypothesis H: $\theta = \theta_0$ in $N(\theta, \sigma)$

Alternative Hypothesis J: $\theta > \theta_0$.

The null hypothesis is simple, in that it specifies a single value of $\theta$, while the alternative is composite, as it consists of the set of $\theta$ values exceeding $\theta_0$. The experimental test statistic S is the average of the n random variables $X_1, X_2, \ldots, X_n$, where each $X_i$ is $N(\theta, \sigma)$; i.e.,

2.3(b): Test Statistic $S = \frac{1}{n} \sum_{i=1}^{n} X_i = \bar{X}$.

The test begins by imagining that H is true, and that $X_i$ is $N(\theta_0, \sigma)$. But if H is true, it follows that statistic $\bar{X}$ is also normally distributed with mean $\theta_0$; but $\sigma_{\bar{X}}$, the standard deviation, is $\sigma/n^{1/2}$. That is,

2.3(c): Experimental Distribution of $\bar{X}$ (under H: $\theta = \theta_0$): $N(\theta_0, \sigma/n^{1/2})$.


Since our test is interested in rejecting H just in case $\theta$ exceeds $\theta_0$, i.e., since our test is one sided (in the positive direction from $\theta_0$), it seems plausible to reject H on the basis of sample averages ($\bar{X}$ values) that sufficiently exceed $\theta_0$; and this is precisely what NPT recommends. That is, our test rule, which we may represent by $T^+$, maps $\bar{X}$ into the critical region (i.e., $T^+$ rejects H) just in case $\bar{X}$ is "significantly far" (in the positive direction) from hypothesized average $\theta_0$, where distance is measured in standard deviation units (i.e., in $\sigma_{\bar{X}}$'s). That is,

2.3(d): Test Rule $T^+$: Reject H: $\theta = \theta_0$ iff $\bar{X} \ge \theta_0 + d_\alpha \sigma_{\bar{X}}$

for some specified value of $d_\alpha$ where $\alpha$ is the size (significance level) of the test. The question of what the test is to count "significantly far" becomes a question of how to specify the value of $d_\alpha$; the answer is provided once $\alpha$ is specified.

For, if the test is to erroneously reject H no more than $\alpha$(100%) of the time (i.e., if its size is $\alpha$), it follows from 2.3(d) that we must have

2.3(e): $P(\bar{X} \ge \theta_0 + d_\alpha \sigma_{\bar{X}} \mid H) \le \alpha$.

To ensure 2.3(e), $d_\alpha$ must equal that number of standard deviation units in excess of $\theta_0$ exceeded by $\bar{X}$ no more than $\alpha$(100%) of the time, when H is true. And by virtue of the fact that we know the experimental distribution of $\bar{X}$ under H (which in this case is $N(\theta_0, \sigma/n^{1/2})$), we can derive, for a given $\alpha$, the corresponding value for $d_\alpha$. Conventional values for $\alpha$ are 0.01, 0.02, and 0.05, where these correspond to $d_{0.01} = 2.3$, $d_{0.02} = 2$, $d_{0.05} = 1.6$, respectively. For the distribution of $\bar{X}$ (under H) tells us that $\bar{X}$ exceeds its mean $\theta_0$ by as much as $2.3\sigma_{\bar{X}}$ only 1% of the time; by as much as $2\sigma_{\bar{X}}$ only 2% of the time, and by as much as $1.6\sigma_{\bar{X}}$ only 5% of the time. In contrast, $\bar{X}$ exceeds $\theta_0$ by as much as $0.25\sigma_{\bar{X}}$ as often as 40% of the time; so if H were rejected whenever $\bar{X}$ exceeded $\theta_0$ by $0.25\sigma_{\bar{X}}$ one would erroneously do so as much as 40% of the time; i.e., $d_{0.4} = 0.25$. The relationship between $\alpha$ and distances of $\bar{X}$ from $\theta_0$ is best seen by observing areas under the normal curve given by the distribution of $\bar{X}$ (under H) (see Figure 2.3).
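
The correspondence between a chosen size and the number of standard deviation units can be checked numerically. The following Python sketch assumes only that the sample mean is normally distributed under H; the helper name `d_alpha` is ours, and it relies on the standard library's `statistics.NormalDist`.

```python
from statistics import NormalDist


def d_alpha(alpha):
    """Standard deviation units exceeded by the sample mean with probability alpha under H."""
    return NormalDist().inv_cdf(1 - alpha)


# Sizes discussed in the text.
table = {alpha: d_alpha(alpha) for alpha in (0.01, 0.02, 0.05, 0.4)}

for alpha, d in table.items():
    print(f"alpha = {alpha:<4}  d_alpha = {d:.3f}")
```

Carried to three decimal places, the computed distances are slightly larger than the values 2.3, 2, and 1.6 quoted in the text, which are rounded.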

It will be helpful to have a standard example of an application of test

$T^+$ to which to refer our discussion.

[Fig. 2.3. Normal curve giving the distribution of $\bar{X}$ under H, with the area to the right of $\bar{X}$ marked at distances $d_\alpha \sigma_{\bar{X}}$ from $\theta_0$: $d_{0.98} = -2$, $d_{0.5} = 0$, $d_{0.4} = 0.25$, $d_{0.16} = 1$, $d_{0.05} = 1.6$, $d_{0.02} = 2$, $d_{0.01} = 2.3$, $d_{0.001} = 3$.]

Example $ET^+$: A good example of such an application may be provided by adapting an actual inquiry in biology. We are interested in testing whether or not treating the larval food of houseflies with a chemical (DDT) increases their size, as measured by their winglength

X. Earlier studies show that the average winglength $\theta$ in the population of normal houseflies whose food is not so treated is 45.5 mm and the question is whether DDT increases $\theta$. That is, the inquiry concerns the following scientific claim (or some version of it):

2.3(f): Scientific Claim $\mathcal{C}^+$: DDT increases the average winglength of houseflies (i.e., in DDT treated houseflies, $\theta$ is (importantly) greater than 45.5).

The problem is that a sample from this population, whose food has been treated with DDT, may be observed to have an average winglength $\bar{x}$

in excess of the normal average 45.5 even though the chemical does not

possess the positive effect intended. As such, the need for statistical

considerations arises.

(A) Statistical Hypotheses: It is supposed that the variability of X, the

winglength of houseflies in a given population, can be modelled by a

normal distribution with known standard deviation $\sigma$ equal to 4, and unknown mean $\theta$, i.e., X is $N(\theta, 4)$. Hence, if the chemical does not increase winglengths as intended, the distribution of X among flies treated with it would not differ from what scientists have found in the typical housefly population; that is, $\theta$ would be 45.5 in $N(\theta, 4)$. On the other hand if $\mathcal{C}^+$ is true, and DDT does have its intended effect, $\theta$ will exceed the usual 45.5. These correspond to the following statistical hypotheses:

2.3(a'): Null Hypothesis H: $\theta = 45.5$ in $N(\theta, 4)$

Alternative Hypothesis J: $\theta > 45.5$ in $N(\theta, 4)$.

(B) Experimental Test: ($ET^+$-1) Since we are within the context of the one-sided test above, each of the general components there applies to the present example. In particular, (b') experimental statistic $S = \bar{X}$, and (c') its distribution under H is $N(45.5, 4/n^{1/2})$; and the "best" testing rule, according to NPT, is (d') $T^+$. Suppose, in addition, the following test specifications are made:

(i) Number of observations in sample, n = 100

(ii) Size of test $\alpha = 0.02$.

It follows from (i) that $\sigma_{\bar{X}} = 0.4$ and from (ii) that $d_\alpha = d_{0.02} = 2$. So $T^+$-1 is

2.3(d'): $T^+$-1: Reject H iff $\bar{X} \ge 45.5 + d_\alpha \sigma_{\bar{X}} = 46.3$.


(C) Sample Data: The average winglength of the 100 observed flies

(treated with DDT), $\bar{x}$, is 46.5, a value in excess of the hypothesized average 45.5 by $2.5\sigma_{\bar{X}}$. Since this value exceeds 46.3, $T^+$-1 would deem such an $\bar{x}$ "significantly far" from H to reject it. Hence $T^+$-1

rejects H.
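
The arithmetic of the housefly test can be retraced in a few lines of Python. The numbers are those given in the example; the variable names are ours, and the distance of 2 standard deviation units is the rounded value used in the text for a size of 0.02.

```python
# Values taken from the housefly example; variable names are illustrative.
theta_0 = 45.5   # hypothesized mean winglength under H
sigma = 4.0      # known population standard deviation
n = 100          # number of observations in the sample
d_alpha = 2.0    # rounded distance used in the text for alpha = 0.02
x_bar = 46.5     # observed sample average

se = sigma / n ** 0.5               # standard deviation of the sample mean
cutoff = theta_0 + d_alpha * se     # smallest sample average that rejects H
distance = (x_bar - theta_0) / se   # observed excess over theta_0 in units of se
reject_h = x_bar >= cutoff

print(f"se = {se:.1f}, cutoff = {cutoff:.1f}, distance = {distance:.1f}, reject H: {reject_h}")
# prints: se = 0.4, cutoff = 46.3, distance = 2.5, reject H: True
```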

In addition to ensuring the maximum frequency of erroneously

rejecting H is 0.02, this test ensures that the frequency of erroneously

accepting H will be minimized for all possible 0 values under the

alternative J, (i.e., for all $\theta > \theta_0$). Such a test is a "best" NPT test of size 0.02, in the context of $ET^+$.
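
How the frequency of erroneously accepting H depends on the true value of $\theta$ under J can be sketched as follows. The code takes the cutoff 46.3 and $\sigma_{\bar{X}} = 0.4$ from the example; the function name `beta` and the particular alternative values are chosen only for illustration, and the sketch does not itself establish that the test is "best".

```python
from statistics import NormalDist

THETA_0 = 45.5   # hypothesized mean under H
SE = 0.4         # standard deviation of the sample mean (sigma = 4, n = 100)
CUTOFF = 46.3    # T+-1 rejects H iff the sample mean is at least this large


def beta(theta):
    """P(T+-1 accepts H | theta): the sample mean falls below the cutoff."""
    return NormalDist(theta, SE).cdf(CUTOFF)


# Illustrative alternatives at increasing distances from theta_0.
thetas = [45.9, 46.3, 46.7, 47.1]
betas = [beta(t) for t in thetas]

for t, b in zip(thetas, betas):
    print(f"theta = {t:.1f}  beta = {b:.3f}  power = {1 - b:.3f}")
```

The probability of a type II error shrinks as the true mean moves further above 45.5, and it equals one half when the true mean coincides with the cutoff itself.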

3. THE OBJECTIVITY OF NPT UNDER ATTACK

While NPT may provide tests that are good, or even "best" according

to the criteria of low error probabilities, the question that concerns us is

whether such tests are also good from the point of view of the aims of

scientific objectivity. Answering this question takes us into the problem

of how to relate an empirical scientific inquiry to the statistical models

of NPT. But this problem is outside the domain of mathematical

statistics itself, and may be seen as a problem belonging to metastatistics. For, its resolution requires stepping outside the statistical formalism and making statistics itself an object of study.

We can organize the problem of the objectivity of NPT around three

major metastatistical tasks in linking a scientific inquiry with each of the

three statistical models of NPT:

(1) relating the statistical hypotheses in $M(\theta)$ and the results of testing them to scientific claims;

(2) specifying the components of experimental test $ET(\mathcal{X})$; and,

(3) ascertaining whether the assumptions of a model for the data of the experimental test are met by the empirical observations (i.e., relating $\langle x_n \rangle$ to $\mathcal{O}$).

These three concerns correspond to the three rays diagrammed in

Figure 2.1 numbered (1)-(3), and what is asked in questioning the

objectivity of NPT is whether NPT can accomplish the above tasks

(1)-(3) objectively. As will be seen, these three tasks are intimately

related, and hence, the problems of objectively accomplishing them are

not really separate. However, the most serious attacks on the objectivity of NPT focus on the interrelated problems of (1) relating


statistical conclusions to scientific claims and (2) specifying statistical

tests in an objective manner; as such, our primary aim will be to clarify

and resolve these problems. In Section 5, we will briefly discuss the

problem of (3) validating model assumptions objectively and how it

would be handled within the present approach.

3.1. NPT as an 'Objective' Theory of Inductive Behavior

Although once the various formal experimental specifications are

made, NPT provides an objective means for testing a hypothesis, it is

not clear that these specifications are themselves objective. For these

specifications involve considerations that go beyond the formalism of

statistical testing. As Neyman and Pearson (1933, p. 146) note:

From the point of view of mathematical theory all that we can do is to show how the risk of the errors may be controlled and minimized. The use of these statistical tools in any given case, in determining just how the balance should be struck, must be left to the investigator.

Acknowledging that the tasks of (1) interpreting and (2) specifying

tests go beyond the objective formalism of NPT, Neyman and Pearson

consider a situation where there would be some clear basis for accomplishing them. Noting that the sorts of informal considerations NPT

requires are similar to those involved in certain decision theoretic

contexts, they are led to suggest the behavioristic interpretation of

NPT. Although the behavioristic construal of NPT was largely advocated by Neyman and was not wholly embraced by Pearson (see

Pearson 1955), NPT has generally been viewed as purporting to offer

an objective theory of inductive behavior.

In an attempt to develop testing as an objective theory of behavior,

tests are formulated as mechanical rules or "recipes" for reaching one

of two possible decisions: accept hypothesis H or reject H, where

"accept H " and "reject H " are interpreted as deciding to "act as if H

were true" and to "act as if H were false", respectively. Neyman and

Pearson's (1933, p. 142) own description of "rules of behavior" is a

clear statement of such a conception:

Here, for example, would be such a 'rule of behavior': to decide whether a hypothesis H, of a given type, be rejected or not, calculate a specified character, x, of the observed facts; if $x > x_0$ reject H; if $x \le x_0$, accept H. Such a rule tells us nothing as to whether in a particular case H is true when $x \le x_0$ or false when $x > x_0$. But it may often be proved that if we behave according to such a rule ... we shall reject H when it is true not more, say, than once in a hundred times, and in addition we may have evidence that we shall reject H sufficiently often when it is false.

On this view, the rationale for using such a rule of behavior is the desire

for a rule with appropriately small probabilities α, β for leading to

erroneous decisions in a given long run sequence of applications of the

rule. Although the actual values of α, β are considered beyond the

NPT formalism, it is suggested that the scientist first specify α as the

maximum frequency with which he feels he can afford to erroneously

reject H. He then selects the test that at the same time minimizes the

value of β, i.e., of erroneously accepting H.

This rationale corresponds to the NPT view of the purpose of tests;

namely, "as a result of the tests a decision must be reached determining which of two alternative courses is to be followed" (Neyman and

Pearson, 1936, p. 204). For example, rejecting H in our housefly

experiment may be associated with a decision to publish results

claiming that DDT increases winglengths of flies, a decision to ban the

use of DDT, a decision to do further research, and so on; and for each

decision there are certain losses and costs associated with acting on it

when in fact H is true. By considering such consequences the scientist

is, presumably, able to specify the risks he can "afford". However, such

considerations of consequences are deemed subjective by NPT's founders, (e.g., "this subjective element lies outside of the theory of statistics" (Neyman, 1950, p. 263)); and the desire to keep NPT objective

leads to the intentional omission of any official incorporation of pragmatic consequences within NPT itself. Ironically, this attempt to secure

NPT's objectivity is precisely what leads both to attacks on its objectivity and to strengthening the position of subjective theories of testing.

3.2 The Objectivity of the Behavioristic Interpretation of NPT Under Attack

The most serious problems concerning the objectivity of NPT center

around its ability to objectively accomplish the tasks of (1) relating

results of NPT to scientific claims and (2) specifying the components of

experimental tests. The major criticism is this: Since the scientific

import of a statistical test conclusion is dependent upon the

specifications of the test, the objectivity of test conclusions is jeopardized if the test specifications fail to be objective. But NPT leaves the

problem of test specifications up to the individual researcher who is to

specify them according to his personal considerations of the risks (of

erroneous decisions) he finds satisfactory. But if the aim of a scientist is

to be seen as objectively finding out what is the case as opposed to

finding out how it is most prudent for him to act, then he does not seem

to be in the position of objectively making pragmatic judgments about

how often he can afford to be wrong in some long run of cases. And if

he is left to make these pragmatic judgments subjectively, it is possible

for him to influence the test in such a way that it is overwhelmingly

likely to produce the result he happens to favor. In short, it appears that

the 'objectivity' of NPT is somewhat of a sham; its results seem to be

permeated with precisely those subjective, personal factors that are

antithetical to the aim of scientific objectivity.

Among the first to criticize the objectivity of NPT on these grounds

was R. A. Fisher. While it was Fisher's ideas that formed the basis of

NPT,² by couching them in a behavioristic framework he felt Neyman

and Pearson had given up the ideal of objectivity in science. In his

grand polemic style, Fisher (1955, p. 70) declared that followers of the

behavioristic approach are like

3.2(a)

Russians (who) are made familiar with the ideal that research

in pure science can and should be geared to technological

performance, in the comprehensive organized effort of a

five-year plan for the nation.

Not to suggest a lack of parity between Russia and the U.S., he

continues:

In the U.S. also the great importance of organized technology has I think made it easy to confuse the process

appropriate for drawing correct conclusions, with those

aimed rather at, let us say, speeding production, or saving

money.

One finds analogous attacks on the objectivity of NPT voiced by a

great many contemporary statisticians and philosophers. They typically

begin by denying the objectivity of NPT specifications:

3.2(b)

In no case can the appropriate significance level be determined in an objective manner. (Rubin, 1971, p. 373)

The basis of this denial is that fixing such levels requires judgments - judgments which, it is claimed (to use a phrase of I. J. Good), NPT

tends to "sweep under the carpet" (i.e., SUTC). Good (1976, p. 143)

claims

3.2(c)

Now the hidebound objectivist tends to hide that fact; he will

not volunteer the information that he uses judgment at

all . . . .

At least the Bayesian theorist, it is argued, admits the need for

pragmatic judgments and explicitly incorporates them into his testing

theory. Rosenkrantz (1977, pp. 205-206) sums it up well:

3.2(d)

In Bayesian decision theory, an optimal experiment can be

determined, taking due account of sampling costs. But no

guidelines exist for the analogous problem of choosing a

triple (α, β, n) in Neyman-Pearson theory . . . . These judgments are left to the individual experimenter, and they

depend in turn on his personal utilities . . . . But if we are

'interested in what the data have to tell us', why should one

experimenter's personal values and evaluations enter in at

all? How, in short, can we provide for objective scientific

reporting within this framework? (emphasis added)

Implicit in these criticisms are various assumptions of what would be

required for objective scientific reporting. A clear example of this is

found in Fetzer's attack on the objectivity of NPT. Fetzer (1981, p. 244)

argues that while the subjective judgments required to specify NPT

"are not 'defects'" in decision-making contexts, in general, nevertheless,

3.2(e)

to the extent to which principles of inference are intended to

secure the desideratum of 'epistemic objectivity' by supplying standards whose systematic application ... would warrant assigning all and only the same measures of evidential

support to all and only the same hypotheses, they [NPT]

appear inadequate.

On this view - a view which underlies the most serious attacks on the

objectivity of NPT - a theory of statistics should provide means for

measuring the degree of evidential strength (support, probability,

belief, etc.) that data affords hypotheses. Correspondingly, a theory of

statistics is thought to be objective only if it (to use Fetzer's words)

"would warrant assigning all and only the same measures of evidential

support to all and only the same hypotheses" (given the same data).

On this view, NPT, which only seeks to ensure the scientist that he will

make erroneous decisions no more than a small percent of the time in a

series of test applications, fails to be objective. For NPT cannot tell him

whether a particular conclusion is one of the erroneous ones or not; nor

can it provide him with a measure of how probable or of how well

supported a particular conclusion is. For example, if H is rejected with a

test with size 0.02, it is not correct to assign alternative J 98%

probability, support, or confidence - although such misinterpretations

are common. The only thing 0.02 tells him about a specific rejection of

H is that it was the result of a general testing procedure which

erroneously rejects H only 2% of the time. Similarly, the NPT rationale

may permit null hypothesis H to be accepted without guaranteeing that

H is highly supported or highly probable; it may simply mean a given

test was unable to reject it. As Edwards (1971, p. 18) puts it:

3.2(f)

Repeated non-rejection of the null hypothesis is too easily

interpreted as indicating its acceptance, so that on the basis

of no prior information coupled with little observational

data, the null hypothesis is accepted . . . . Far from being an

exercise in scientific objectivity, such a procedure is open to

the gravest misgivings. What used to be called prejudice is

now called null hypothesis . . . .

Edwards is referring to the fact that if one has a prejudice in favor of

null hypothesis H one can specify a test so that it has little chance of

rejecting H. Consider our housefly winglength example. In ET⁺-1

the test size α was set at 0.02, but if a researcher were even more

concerned to avoid erroneously rejecting H he might set α to an even

smaller value. (Possibly the researcher manufactures DDT and rejecting H would lead to banning the chemical.) Consider ET⁺-2, where

α is now set at 0.001. The corresponding test T⁺-2 would not reject H

unless X̄ exceeded θ₀ (45.5) by at least 3 standard deviation units, as

compared to only 2 standard deviation units when α was set at 0.02. But

with the size set at 0.001, the observed average of 46.5 in the sample

would not be taken to reject H as it was in ET⁺-1; rather H would be

accepted. (The test T⁺-2 is: reject H iff X̄ ≥ 46.7.) In the extreme

case, one can ensure that H is never rejected by setting α = 0!
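
The contrast between the two specifications can be made concrete with a short sketch. It is illustrative only. The hypothesized mean 45.5, the observed average 46.5, and the cutoffs of 2 and 3 standard deviation units come from the passage above; the standard deviation of the sample mean, 0.4, is an assumption inferred from the stated cutoff 46.7 = 45.5 + 3(0.4). The function names are hypothetical.

```python
# Illustrative sketch: same data, two test specifications, two verdicts.
THETA_0 = 45.5        # hypothesized mean winglength under H (from the text)
SIGMA_XBAR = 0.4      # ASSUMPTION: inferred from 46.7 = 45.5 + 3 * sigma_xbar
OBSERVED_MEAN = 46.5  # observed sample average (from the text)


def cutoff(sd_units):
    """Smallest sample average that the test classifies as significant."""
    return THETA_0 + sd_units * SIGMA_XBAR


def verdict(observed_mean, sd_units):
    """Apply the rule: reject H iff the observed average reaches the cutoff."""
    return "reject H" if observed_mean >= cutoff(sd_units) else "accept H"


# Cutoffs in standard deviation units, as stated for sizes 0.02 and 0.001.
specifications = {"size about 0.02": 2, "size about 0.001": 3}

for label, sd_units in specifications.items():
    print(label, round(cutoff(sd_units), 1), verdict(OBSERVED_MEAN, sd_units))
```

Under these assumptions the cutoffs are 46.3 and 46.7, so the observed average of 46.5 rejects H under the first specification and fails to reject it under the second.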

The fact that the same data leads to different conclusions depending

on the specification of a is entirely appropriate when such specifications

are intended to reflect the researcher's assessment of the consequences

of erroneous conclusions. For, as Neyman and Pearson (1936, p. 204)

assert, "clearly established consequences which appear satisfactory to

one individual may not be so regarded by another." But if "same data,

same test result" is taken as a requirement for an objective theory of

testing, this feature of NPT will render it entirely inappropriate for

objective scientific reporting. As Kyburg (1971, pp. 82-83) put it:

3.2(g)

To talk about accepting or rejecting hypotheses, for example is prima facie to talk epistemologically; and yet in

statistical literature to accept the hypothesis that the

parameter 0 is less than 0* is often merely a fancy and

roundabout way of saying that Mr Doe should offer no more

than $36.52 for a certain bag of bolts . . . .

When it comes to general scientific hypotheses (e.g., that

f(x) represents the distribution of weights in certain species

of fish...) then the purely pragmatic, decision theoretic

approach has nothing to offer us.

If Kyburg is right, and NPT "has nothing to offer us" when testing

hypotheses about the distribution of fish weights, it will not do much

better when testing hypotheses about the distribution of housefly

winglengths! And since our aim is to show that NPT does provide

objective means for testing such hypotheses, we will have to be able to

respond to the above criticisms. By making some brief remarks on these

criticisms our grasp of the problem may be enriched and the present

approach elucidated.

3.3 Some Presuppositions About NPT and Objectivity: Remarks on the Attacks

How one answers the question of whether NPT lives up to the aim

of scientific objectivity depends upon how one answers two additional

questions: (I) What functions are served by NPT?; and (II) What is

required for a theory of statistical testing to satisfy the aim of scientific

objectivity? If the attacks on the objectivity of NPT turn out to be

based upon faulty answers to either (I) or (II), we need not accept their

conclusion denying NPT's objectivity.

Generally, the attacks on NPT are based on the view that NPT

functions to provide objective rules of behavior. But there are many

interpretations one can place on a mathematical theory, and the

behavioristic one is not the only interpretation of which NPT admits.

Moreover, it seems to reflect neither the actual nor the intended uses of

NPT in science. In an attempt "to dispel the picture of the Russian

technological bogey" seen in Fisher's attack (3.2(a)), Pearson (1955, p.

204) notes that the main ideas of NPT were formulated a good deal

before they became couched in decision-theoretic terms. Pearson

insists that both he and Neyman "shared Professor Fisher's view that in

scientific enquiry, a statistical test is 'a means of learning'." Nevertheless, within NPT they failed to include an indication of how to use tests

as learning tools - deeming such extrastatistical considerations subjective. As such, it appears that they shared the view of objectivity held

by those who attack NPT as hopelessly subjective.

Underlying such flat out denials of the possibility of objectively

specifying a as Rubin's (3.2(b)) is the supposition that only what is given

by formal logical principles alone is truly objective. But why should a

testing theory seek to be objective in this sense? It was such a

conception of objectivity that led positivist philosophers to seek a

logic of inductive inference that could be set out in a formal way (most

notably, Carnap and his followers). But their results - while logical

masterpieces - have tended to be purely formal measures having

questionable bearing on the actual problems of statistical inference in

science. The same mentality, of course, has fostered the uncritical use

of NPT, leading to misuses and criticisms.

Similarly, we can question Good's suggestion (3.2(c)) that a truly

objective theory must not include any judgments whatsoever. Attacking the objectivity of NPT because judgments are required in applying

its tests is to use a straw man argument; that judgments are made in

using NPT is what allows tests to avoid being sterile formal exercises.

A ban on judgments renders all human enterprises subjective. Still, the

typical Bayesian criticism of NPT follows the line of reasoning seen in

Good and Rosenkrantz (3.2(d)) above. They reason that since NPT

requires extrastatistical judgments and hence is not truly objective, it

should make its judgments explicit (and stop sweeping them under the

carpet) - which (for them) is to say, it should incorporate degrees of

belief, support, etc. in the form of prior probabilities. However, the fact

that both NPT and the methods of Bayesian tests require extrastatistical judgments does not mean that the judgments required are equally

arbitrary or equally subjective in both - a point to be taken up later. Nor

does it seem that the type of judgments needed in applying NPT are

amenable to quantification in terms of prior probabilities of hypotheses.

But their arguments are of value to us; they make it clear that our

defense of the objectivity of NPT must abandon the "no extrastatistical

judgments" view of objectivity. At the same time we need to show that

it is possible to avoid the sort of criticisms to which the need for such

judgments is thought to give rise.

Edwards (3.2(f)), for example, attacks NPT on the grounds that the

extrastatistical judgments needed to specify its tests may be made in

such a way that the hypothesis one happens to favor is overwhelmingly

likely to be accepted. What could run counter to the ideal of scientific

objectivity more! - or so this frequently mounted attack supposes. But

this attack, we maintain, is based on a misconception of the function of

NPT in science; one which, unfortunately, has been encouraged by the

way in which NPT is formulated. The misconception is that NPT

functions to directly test scientific claims from which the statistical

hypotheses are derived.³ As a result it is thought that since a test with

appropriate error probabilities warrants the resulting statistical conclusion (e.g., reject H), it automatically warrants the corresponding

scientific one (e.g., φ⁺). This leaves no room between the statistical and

the scientific conclusions whereby one can critically interpret the

former's bearing on the latter (i.e., ray (1) in Figure 2.1 is collapsed). As

we will see, by clearly distinguishing the two such a criticism is possible;

and the result of such a criticism is that Edwards' attack can be seen to

point to a possible misinterpretation of the import of a statistical test,

rather than to its lack of objectivity (see Section 4.4).

From Fetzer's (3.2(e)) argument we learn that our defense of NPT

must abandon the supposition that objectivity requires NPT to satisfy

the principle of "same data, same assignment of evidential support to

hypotheses". That NPT fails to satisfy this principle is not surprising

since its only quantitative measures are error probabilities of test

procedures, and these are not intended to provide measures of evidential support.⁴ Moreover, as we saw, NPT does allow one to have "Same

(sample) data, different error probabilities." So, it is also not surprising

that if error probabilities are misconstrued as evidential support

measures - something which is not uncommon - the failure of NPT to

satisfy the principle of "same data, same evidential support" will follow.

Our task in the next section will be to show that without misconstruing

error probabilities it is possible to use them to satisfy an altered

conception of scientific objectivity.

4. DEFENSE OF THE OBJECTIVITY OF (A REFORMULATED) NPT: NPT*

If we accept the conception of (I) the function of NPT in science, and

(II) the nature of scientific objectivity that the above attacks presuppose, then, admittedly, we must conclude that NPT fails to be objective.

But since the correctness of these conceptions is open to the questions

we have raised, we need not accept this conclusion. Of course, rejecting

this conclusion does not constitute a positive argument showing that

NPT is objective. However, our grounds for doing so point the way to

the sort of altered conceptions of (I) and (II) that would permit such a

positive defense to be provided; and it is to this task that we now turn.

Like Pearson (3.3), we view the function of statistical tests in a

scientific inquiry as providing "a means of learning" from empirical

data. However, unlike the existing formulation of NPT, we seek to

explicitly incorporate its learning function within the model of statistical testing in science itself. That is, our model of testing will include

some of the metastatistical elements linking the statistical to the

scientific inquiry. These elements are represented by ray (1) in Figure

2.1. To contrast our reformulation of the function of NPT with the

behavioristic model, we may refer to it as the learning model, or just

NPT*. Having altered the function of tests we could substitute existing

test conclusions, i.e., accept or reject H, by assertions expressing what

has or has not been learned. However, since our aim is to show that

existing statistical tests can provide a means for objective scientific

learning, it seems preferable to introduce our reformulation in terms of

a metastatistical interpretation of existing test results.

While our learning (re)interpretation of NPT (yielding NPT*) goes

beyond what is found in the formal apparatus of NPT, we argue that it is

entirely within its realm of legitimate considerations. It depends every

step of the way on what is fundamental to NPT, namely, being able to

use the distribution of test statistic S to objectively control error

probabilities. Moreover, we will argue, it is the objective control of

error probabilities that enables NPT* to provide objective scientific

knowledge.

4.1 NPT as an Objective Means for Learning by Detecting

Discrepancies: NPT*

Rather than view statistical tests in science as either a means of

deciding how to behave or a means of assigning measures of evidential

support, we view them as a means of learning about variable phenomena

on the basis of limited empirical data. This function is accomplished by

providing tools for detecting certain discrepancies between the (approximately) correct models of a phenomenon and the hypothesized

ones; that is, between the pattern of observations that would be

observed (if a given experiment were repeated) and the pattern

hypothesized by the model being tested. In ET⁺, for example, we are

interested in learning if the actual value of θ is positively discrepant

from the hypothesized value, θ₀. In the case of our housefly inquiry, this

amounts to learning if DDT-fed flies would give rise to observations

describable by N(45.5, 4), or by N(θ, 4) where θ exceeds 45.5.

Our experiment, however, allows us to observe, not the actual value

of this discrepancy, but only the difference between the sample statistic

S (e.g., X̄) and a hypothesized population parameter θ (e.g., 45.5). A

statistical test provides a standard measure for classifying such observed differences as "significant" or not. Ideally, a test would classify an

observed difference significant, in the statistical sense, just in case one

had actually detected a discrepancy of scientific importance. However,

we know from the distribution of S that it is possible for an observed s

to differ from a hypothesis θ₀, even by great amounts, when no

discrepancy between θ and θ₀ exists. Similarly, a small or even a zero

observed difference is possible when large underlying discrepancies

exist. But, by a suitable choice of S, the size of observed differences can

be made to vary, in a known manner, with the size of underlying

discrepancies. In this way a test can be specified so that it will very

infrequently classify an observed difference as significant (and hence

reject H) when no discrepancy of scientific importance is detected, and

very infrequently fail to do so (and so accept H) when θ is importantly

discrepant from θ₀. As such, the rationale for small error probabilities

reflects the desire to detect all and only those discrepancies about which

one wishes to learn in a given scientific inquiry - as opposed to the

desire to infrequently make erroneous decisions. That is, it reflects

epistemological rather than decision-theoretic values.
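
The way in which observed differences vary "in a known manner" with underlying discrepancies can be sketched numerically. The following is a minimal illustration, not part of NPT's formal apparatus: it assumes the sample mean is normally distributed with standard deviation 0.4 (a value inferred from the housefly cutoffs, not stated outright) and computes how often a test with a cutoff of 2 or 3 standard deviation units would classify a result as significant for various true values of θ. The function name is hypothetical.

```python
from statistics import NormalDist

THETA_0 = 45.5    # hypothesized value of theta under H
SIGMA_XBAR = 0.4  # ASSUMPTION: standard deviation of the sample mean


def prob_significant(theta, sd_units):
    """Probability that the sample mean reaches the cutoff when the true mean is theta."""
    cut = THETA_0 + sd_units * SIGMA_XBAR
    return 1.0 - NormalDist(mu=theta, sigma=SIGMA_XBAR).cdf(cut)


# Rejection frequency as the underlying discrepancy theta - theta_0 grows.
for theta in (45.5, 46.0, 46.5, 47.0, 47.5):
    discrepancy = theta - THETA_0
    print(discrepancy,
          round(prob_significant(theta, 2), 3),
          round(prob_significant(theta, 3), 3))
```

When no discrepancy exists (θ = θ₀) the 2-unit test rejects with probability about 0.023, which the text rounds to 0.02, and the rejection probability rises toward 1 as the discrepancy grows. It is this known relation, rather than any single verdict, that the learning interpretation puts to use.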

This suggests the possibility of objectively (2) specifying tests by

appealing to considerations of the type of discrepancies about which it

would be important to learn in a given scientific inquiry. Giere (1976)

attempts to do this by suggesting objective grounds upon which

"professional judgments" about scientifically important discrepancies

may be based. With respect to such judgments he remarks:

It is unfortunate that even the principal advocates of objectivist methods, e.g., Neyman,

have passed off such judgments as merely 'subjective'.... They are the kinds of

judgments on which most experienced investigators should agree. More precisely,

professional judgments should be approximately invariant under interchange of investigators. (p. 79)

Giere finds the source of such intersubjective judgments in scientific

considerations of the uses to which a statistical result is to be put. For

example, an accepted statistical hypothesis "is regarded as something

that must be explained by any proposed general theory of the relevant

kind of system . . . . "

However, critics (e.g., Rosenkrantz (1977, p. 211) and Fetzer (1981,

p. 242)) may still deny that Giere's appeal to "professional judgments"

about discrepancies renders test specifications any more objective than

the appeal to pragmatic decision-theoretic values. In addition, the conclusion of a statistical test (accept or reject H) - even if it arose from a

procedure which satisfied the (pre-trial) professional judgments of

investigators - may still be accused of failing to express the objective

import of the particular result that happened to be realized. While we

deem the appeal to discrepancies of scientific importance, exemplified

in Giere's strategy, to be of great value in resolving the problem of the

objectivity of NPT, we attempt to use it for this end in a manner that

avoids such criticisms.

As Giere notes, there do often seem to be good scientific grounds for

specifying a test according to the discrepancies deemed scientifically

important. However, we want to argue, even if objective grounds for

(2) specifying tests are lacking, it need not preclude the possibility of

objectively accomplishing the task of (1), relating the result of a

statistical test to the scientific claim. For, regardless of how a test has

been specified, the distribution of test statistic S allows one to determine the probabilistic relations between test results and underlying

discrepancies. Moreover, we maintain, by making use of such probabilistic relations (in the form of error probabilities) it is possible to

objectively understand the discrepancies that have or have not been

detected on the basis of a given test result; and in this way NPT*

accomplishes its learning function objectively. Since error probabilities

do not provide measures of evidential support, our view of objective

scientific learning must differ from the widely held supposition that

objective statistical theories must provide such measures. Hence, before

going on to the details of our reformulation of NPT, it will help to

reconsider just what an objective theory of statistical testing seems to

require.

4.2 An Objective Theory of Statistical Testing Reconsidered

If the function of a statistical test is to learn about variable phenomena

by using statistically modelled data to detect discrepancies between

statistically modelled conjectures about it, then an objective theory of

statistical testing must be able to carry out this learning function

objectively. But what is required for objective learning in science? We

take the view that objectivity requires assertions to be checkable,

testable, or open to criticism by means of fair standards or controls.

Correspondingly, objective learning from the result of a statistical test

requires being able to critically evaluate what it does and does not

indicate about the scientific phenomenon of interest (i.e., what it does

or does not detect). In NPT, a statistical result is an assertion to either

reject or accept a statistical hypothesis H, according to whether or not

the test classifies the observed data as significantly far from what would

be expected under H. Hence, objective learning from the statistical

result is a matter of being able to critically evaluate what such a

statistically classified observation does or does not indicate about the

variability of the scientific phenomenon. So, while the given statistical

result is influenced by the choice of classification scheme provided by a

given test specification, this need not preclude objective learning on the

basis of an observation so classified. For, as Scheffler (1967, p. 38)

notes, "having adopted a given category system, our hypotheses as to

the actual distribution of items within the several categories are not

prejudged." The reason is that the result of a statistical test "about the

distribution of items within the several categories" is only partly

determined by the specifications of the categories (significant and

insignificant); it is also determined by the underlying scientific

phenomenon. And what enables objective learning to take place is the

possibility of devising ingenious means for "subtracting out" the

influence of test specifications in order to detect the underlying

phenomenon.

It is instructive to note the parallels between the problems of

objective learning from statistical experiments and that of objective

learning from observation in general. The problem in objectively

interpreting observations is that observations are always relative to the

particular instrument or observation scheme employed. But we often

are aware not only of the fact that observation schemes influence what

we observe, but also of how they influence observations and of how

much "noise" they are likely to produce. In this way their influence may

be subtracted out to some extent. Hence, objective learning from

observation is not a matter of getting free of arbitrary choices of

observation scheme, but a matter of critically evaluating the extent of

their influence in order to get at the underlying phenomenon. And the

function of NPT on our view (i.e., NPT*) may be seen as providing

a systematic means for accomplishing such learning. To clarify this

function we may again revert to an analogy between tests and observational instruments.

In particular, a statistical test functions in a manner analogous to the

way an instrument such as an ultrasound probe allows one to learn about

the condition of a patient's arteries indirectly. And the manner in which

test specifications determine the classification of statistical data is

analogous to the way the sensitivity of the ultrasound probe determines

whether an image is classified as diseased or not. However, a doctor's

ability to learn about a patient's condition is not precluded by the fact

that different probes classify images differently. Given an image

deemed diseased by a certain ultrasound probe, doctors are able to

learn about a patient's condition by considering how frequently such a

diseased image results from observing arteries diseased to various

extents. In an analogous fashion, learning from the result of a given test

is accomplished by making use of probabilistic relations between such

results and underlying values of a parameter 0, i.e., via error probabilities.

Having roughly described our view of objective learning from

statistical tests, it will help to imagine an instrument, which, while

purely invented, enables a more precise description of how NPT* learns

from an experimental test such as ET⁺: This test may be visualized as

an instrument for categorizing observed sample averages according to

the width of the mesh of a netting on which they are "caught". Since the

example on which our discussion focuses concerns the average winglength of houseflies, it may be instructive to regard ET⁺ as using a

type of flynet to catch flies larger than those arising from a population of

flies correctly described by H: θ = θ₀. Suppose that a netting of size α

catches an observed average winglength x̄ just in case x̄ ≥ θ₀ + d_α σ_x̄.

Then a test with size α categorizes a sample as "significant" just in case

it is caught on a size α net. Suppose further that it is known that

α(100%) of the possible samples (in X) from a fly population where

θ = θ₀ would land on a netting of size α. Then specifying a test to have a

small size α (i.e., a small probability of a type I error) is tantamount to

rejecting H: θ = θ₀ just in case a given sample of flies is caught on a net

on which only a small percentage of samples from fly population H

would be caught.
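
The flynet picture lends itself to a small simulation. The sketch below is a hedged illustration under stated assumptions: sample averages are drawn directly from a normal distribution with standard deviation 0.4 (inferred from the housefly cutoffs rather than given in this section), the net edge is θ₀ + d_α σ_x̄ with d_α = 2, and the function name, trial count, and seed are hypothetical choices made only so that the run is reproducible.

```python
import random

THETA_0 = 45.5    # mean winglength of the population described by H
SIGMA_XBAR = 0.4  # ASSUMPTION: standard deviation of the sample average


def fraction_caught(theta, d_alpha, trials=50_000, seed=0):
    """Simulate sample averages from a population with mean theta and
    return the fraction landing on the net, i.e. at or above
    theta_0 + d_alpha * sigma_xbar."""
    rng = random.Random(seed)  # fixed seed so repeated runs agree
    net_edge = THETA_0 + d_alpha * SIGMA_XBAR
    caught = sum(rng.gauss(theta, SIGMA_XBAR) >= net_edge for _ in range(trials))
    return caught / trials


# Samples from the population described by H are rarely caught...
print(fraction_caught(THETA_0, d_alpha=2))
# ...while samples from a population with larger flies are caught often.
print(fraction_caught(47.0, d_alpha=2))
```

The first fraction should come out close to the size of the test (roughly 0.02), and the second should be far larger. This is all that "being caught on a size α net" is meant to convey: a known frequency of capture for each underlying population.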

For T⁺ tests an x̄ value may be "caught" on the net deemed

significant by one test and not by another because the widths of their

significant nets differ. But while "significantly large" is a notion relative

to the 'arbitrarily' chosen width of the significant net, the notions

"significant according to test T" (or, "caught by test T") may be

understood by anyone who understands the probabilistic relation between being caught by a given test and various underlying populations.

And since NPT provides such a probabilistic relation (by means of the

distribution of the experimental test statistic) it is possible to understand

what has or has not been learned about 0 by means of a given test result.

In contrast, a Bayesian views considerations about results that have not

been observed as irrelevant for reasoning from the particular result that

happened to be observed. As Lindley (1976, p. 362) remarks:

In Bayesian statistics ..., once the data is to hand, the distribution of X [or $\bar{X}$], the random

variable that generated the data, is irrelevant.

Hence, one is unable to relate the result of a Bayesian test to other

possible experimental tests - at least not by referring to the distribution of

the test statistic (e.g., $\bar{X}$). So if our view of statistical testing in science is

correct, it follows that the result of a Bayesian test cannot provide a

general means for objectively assessing a scientific claim (e.g., q~+). For

such assessments implicitly refer to other possible experiments (whether

actual or hypothetical) in which the present result could be repeated or

checked. But according to Lindley (p. 359) "the Bayesian theory is about

coherence, not about right or wrong," so the fact that it allows consistent

incorrectness to go unchecked may not be deemed problematic for a

subjective Bayesian.

It follows, then, that what fundamentally distinguishes the subjective

Bayesian theory from NPT* is not the inclusion or exclusion of

extrastatistical judgments, for they both require these. Rather, what

fundamentally distinguishes them is that NPT*, unlike Bayesian tests,

allows for objective (criticizable) scientific assertions; that is, for

objectively accomplishing the task of (1) relating statistical results to

scientific claims. Having clearly distinguished the statistical conclusion

from the subsequent scientific one, we can deny that any arbitrariness

on the level of formal statistics necessarily injects arbitrariness into

assertions based on statistical results. Moreover, we have suggested the

basic type of move whereby we claim NPT* is able to avoid arbitrariness in evaluating the scientific import of its tests. In the next two

sections, we attempt to show explicitly how this may be carried out by

objectively interpreting two statistical conclusions from $ET^+$.

Before doing so, however, two things should be noted. First, it is not

our view that the result of a single statistical inquiry suffices to find out

all that one wants to learn in typical scientific inquiries; rather,

numerous statistical tests are usually required - some of which may only

be interested in learning how to go on to learn more. As we will be able

to consider only a single statistical inquiry, our concern will be limited

to objectively evaluating what may or may not be learned from it

alone. 5 Secondly, it is not our intention to legislate what information a

given scientific inquiry requires, in the sense of telling a scientist the

magnitude of discrepancy he should deem important. On the contrary,

our aim is to show how an objective interpretation of statistical results

(from $ET^+$) is possible without having to precisely specify the magnitude of the discrepancy that is of interest for the purposes of the

scientific inquiry. This interpretation will consist of indicating the

extent to which a given statistical result (from $ET^+$) allows one to learn

about various $\theta$ values that are positively discrepant from $\theta_0$.

4.3 An Objective Interpretation of Rejecting a Hypothesis (with $T^+$)

In order to objectively interpret the result of a test we said one must be

able to critically evaluate what it does and does not indicate, and such a

critical evaluation is possible if one is able to distinguish between

correct interpretations and incorrect ones (i.e., misinterpretations).

Hence, the focus of our metastatistical evaluation of the result of a

statistical test will be various ways in which its scientific import may be

misconstrued. A rejection of a statistical hypothesis H is misconstrued

or misinterpreted if it is erroneously taken to indicate that a discrepancy

of scientific importance has been detected. But which discrepancies are

of "scientific importance"? In $ET^+$, for example, is any positive

discrepancy, no matter how small, important? Although our null

hypothesis H states that $\theta$ is precisely 45.5 mm, in fact, even if a

population is that of normal untreated flies the assertion that $\theta = 45.5$ is

not precisely correct to any number of decimal places. Since winglength

measurements and ~+ are in terms of 0.1 mm, no distinction is made

between, say, 45.51 and 45.5. So, at the very least, the imprecision of

the scientific claim prevents any and all discrepancies from being of

scientific importance. However, we would also be mistaken in construing an observed difference as indicative of an importantly greater 0 if in

fact it was due to the various sources of genetic and environmental

error that result in the normal, expected, variability of winglengths, all

of which may be lumped under experimental error.

The task of distinguishing differences due to experimental error or

"accidental effects" is accomplished by NPT by making use of knowledge as to how one may fail to do so. In particular, since a positive

difference between $\bar{X}$ and its mean $\theta$ of less than 1 or 2 standard

deviation units arises fairly frequently from experimental error, such

small differences may often erroneously be confused with effects due to

the treatment of interest (e.g., DDT). By making $\alpha$ small (e.g., 0.02),

only observed differences as large as $d_\alpha$ (e.g., 2) $\sigma_{\bar{x}}$'s are taken to reject

H, i.e., to deny the difference is due to experimental error alone. But

even a small $\alpha$ does not protect one from misconstruing a rejection of

H. For even if the test result rarely arises (i.e., no more than $\alpha$(100%)

of the time) from experimental error alone, it may still be a poor

indicator of a $\theta$ value importantly greater than 45.5; that is, it may be a

misleading signal of ~ . The reason for this is that, regardless of how

small $\alpha$ is, a test can be specified so that it will almost always give rise to

an $\bar{x}$ that exceeds $\theta_0$ (45.5) by the required $d_\alpha \sigma_{\bar{x}}$'s, even if the underlying

$\theta$ exceeds $\theta_0$ by as little as one likes. Such a sensitive, or powerful, test

results by selecting an appropriately large sample size n. In this way $\sigma_{\bar{x}}$,

and correspondingly, $\theta_0 + d_\alpha \sigma_{\bar{x}}$, can be made so small that even the

smallest flywings are caught on the $\alpha$-significant netting. That NPT

thereby allows frequent misconstruals of the scientific import of a

rejection of H is often taken to show its inadequacy for science. But

such misconstruals occur only if a rejection of H is automatically taken

to indicate q~+.
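
The effect of sample size described above can be illustrated numerically. The sketch below assumes a Normal sample average with known standard deviation and computes how often a size-0.02 test rejects when the true mean exceeds 45.5 by only 0.1. The value `sigma = 4.0`, the discrepancy 0.1, and the sample sizes are illustrative assumptions, not figures from the text; `rejection_frequency` is a hypothetical helper name.

```python
from statistics import NormalDist


def rejection_frequency(theta, theta0, sigma, n, alpha):
    """Frequency with which the one-sided test rejects H: theta = theta0
    when the true mean is theta (the power of the test at theta)."""
    sigma_xbar = sigma / n ** 0.5                    # shrinks as n grows
    cutoff = theta0 + NormalDist().inv_cdf(1 - alpha) * sigma_xbar
    return 1 - NormalDist(mu=theta, sigma=sigma_xbar).cdf(cutoff)


# Assumed illustrative values: sigma, the 0.1 discrepancy, and the
# sample sizes are NOT from the text.
theta0, sigma, alpha = 45.5, 4.0, 0.02
slightly_larger = theta0 + 0.1

for n in (100, 10_000, 1_000_000):
    freq = rejection_frequency(slightly_larger, theta0, sigma, n, alpha)
    print(f"n = {n:>9}: rejection frequency = {freq:.4f}")
```

With the size held fixed, the rejection frequency for this small discrepancy climbs toward 1 as n increases, so a rejection from the very large test says little about whether the discrepancy is important.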

How, on the metastatistical level, is such a misconstrual to be

avoided? To answer this, consider how one interprets a failing test score

on an (academic or physical) exam. If it is known that "such a score"

frequently arises when only an unimportant deficiency is present, one

would deny that a large important deficiency was indicated by the test

score. Reverting to our ultrasound probe analogy, suppose a given

image is classified as diseased by the probe, but that the doctors know

that "such a diseased image" frequently arises (in using this probe)

when no real disease has been detected (perhaps it is due to a slight

shadow). Then, the doctors would deny that the image was a good

indicator of the existence of a seriously diseased artery. (Remember, we

are not concerned here with what action the doctors should take, but

only with what they have learned.) Similarly, a rejection of H with a

given $\alpha$ is not a good indicator of a scientifically important value of $\theta$, if

"such a rejection" (i.e., such a statistically significant result) frequently

results from the given test when the amount by which $\theta$ exceeds $\theta_0$ is

scientifically unimportant. By "such a score" or "such a rejection", we

mean one deemed as or even more significant than the one given by the

test. Since the ability to criticize an interpretation of a rejection

involves appealing to the frequency relation between such a result and

underlying values of $\theta$, it will be helpful to introduce a function that

makes this relationship precise.

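Suppose $H: \theta = \theta_0$ is rejected by a test $T^+$ (in favor of alternative

$J: \theta > \theta_0$) on the basis of an observed average $\bar{x}$. Using the distribution

of $\bar{X}$ for $\theta \in \Omega$ define

4.3(a): $\hat{\alpha}(\bar{x}, \theta) = \hat{\alpha}(\theta) = P(\bar{X} \geq \bar{x} \mid \theta)$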
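
As a rough illustration of definition 4.3(a), the function it defines can be evaluated directly when the sample average is Normal with known standard deviation. In the sketch below, `sigma = 4.0`, `n = 100`, and the observed average 46.5 are illustrative assumptions rather than values from the text, and `alpha_hat` is a hypothetical name for the defined function.

```python
from statistics import NormalDist


def alpha_hat(xbar_obs, theta, sigma, n):
    """Area to the right of the observed average under the Normal curve
    with mean theta and standard deviation sigma / sqrt(n)."""
    sigma_xbar = sigma / n ** 0.5
    return 1 - NormalDist(mu=theta, sigma=sigma_xbar).cdf(xbar_obs)


# Assumed illustrative values: sigma, n, and xbar_obs are NOT from the text.
theta0, sigma, n = 45.5, 4.0, 100
xbar_obs = 46.5

# At theta = theta0 this is the observed significance level; at larger
# theta it is how often "such a rejection" would arise from that theta.
for theta in (45.5, 45.7, 46.0, 46.5):
    print(f"theta = {theta}: alpha_hat = {alpha_hat(xbar_obs, theta, sigma, n):.4f}")
```

Reading the values across increasing means shows how frequently a result at least as large as the observed one would arise from each underlying population, which is the frequency relation the interpretation of a rejection appeals to.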

That is, $\hat{\alpha}(\theta)$ is the area to the right of observed $\bar{x}$, under the Normal

curve with mean $\theta$ (with known $\sigma$). In the special case where $\theta = \theta_0$, $\hat{\alpha}$

(sometimes called the observed significance level) equals the frequency

of erroneously rejecting H with "such an $\bar{x}$" (i.e., the frequency of

"such a Type I error"). Then, test $T^+$ rejects H with $\bar{x}$ just in case

$\hat{\alpha}(\theta_0) \leq$ the (preset) size of the test. 6 To assert alternative J, that $\theta > \theta_0$,

is to say that the sort of observations to which the population being

observed (e.g., DDT-treated flies) gives rise are not those that typically

arise from a population with $\theta$ as small as $\theta_0$. That is, it is denied that the

observations are describable as having arisen from a statistical distribution $M(\theta_0)$. Then, a $T^+$ rejection of $H: \theta = \theta_0$ is a good indicator

that $\theta > \theta_0$ to the extent that such a rejection truly is not typical when $\theta$

is as small as $\theta_0$ (i.e., to the extent that $\bar{x}$ goes beyond the bounds of

typical experimental error from $\theta_0$). And this is just to say that $\hat{\alpha}(\theta_0)$ is

small. Hence, rejecting H with a $T^+$ test with small size $\alpha$ indicates that

$J: \theta > \theta_0$. It follows that if any and all positive discrepancies from $\theta_0$ are

deemed scientifically important, then a small size $\alpha$ ensures that