Graphs

[Figure: flight network on the airports SFO, LAX, ORD, DFW, with edges labeled by mileage]

Outline and Reading

Graphs (§12.1)

Definition

Applications

Terminology

Properties

ADT

Data structures for graphs (§12.2)

Edge list structure

Adjacency list structure

Adjacency matrix structure


Graph

A graph is a pair (V, E), where

V is a set of nodes, called vertices

E is a collection of pairs of vertices, called edges

Vertices and edges are positions and store elements

Example:

A vertex represents an airport and stores the three-letter airport code

An edge represents a flight route between two airports and stores the

mileage of the route

[Figure: flight route graph on the airports SFO, HNL, LAX, ORD, DFW, PVD, LGA, MIA, with each edge labeled by its mileage]

Edge Types

Directed edge
  ordered pair of vertices (u,v)
  first vertex u is the origin
  second vertex v is the destination
  e.g., a flight

Undirected edge
  unordered pair of vertices (u,v)
  e.g., a flight route

Directed graph
  all the edges are directed
  e.g., flight network

Undirected graph
  all the edges are undirected
  e.g., route network

[Figures: a directed edge labeled "flight AA 1206" joining ORD and PVD; an undirected edge labeled "849 miles" joining ORD and PVD]

Applications

Electronic circuits
  Printed circuit board
  Integrated circuit
Transportation networks
  Highway network
  Flight network
Computer networks
  Local area network
  Internet
  Web
Databases
  Entity-relationship diagram

[Figure: example network with nodes cslab1a, cslab1b, math.brown.edu, cs.brown.edu, brown.edu, qwest.net, att.net, cox.net, John, Paul, David]

Terminology

End vertices (or endpoints) of

an edge

U and V are the endpoints of a

Edges incident on a vertex

a, d, and b are incident on V

Adjacent vertices

U and V are adjacent

Degree of a vertex

X has degree 5

Parallel edges

h and i are parallel edges

Self-loop

j is a self-loop

[Figure: example graph with vertices U, V, W, X, Y, Z and edges a through j]

Terminology (cont.)

Path

sequence of alternating

vertices and edges

begins with a vertex

ends with a vertex

each edge is preceded and

followed by its endpoints

Simple path

path such that all its vertices

and edges are distinct

Examples

P1=(V,b,X,h,Z) is a simple path

P2=(U,c,W,e,X,g,Y,f,W,d,V) is a

path that is not simple

[Figure: the example graph with paths P1 and P2 highlighted]

Terminology (cont.)

Cycle

circular sequence of alternating

vertices and edges

each edge is preceded and

followed by its endpoints

Simple cycle

cycle such that all its vertices

and edges are distinct

Examples

C1=(V,b,X,g,Y,f,W,c,U,a,↵) is a

simple cycle

C2=(U,c,W,e,X,g,Y,f,W,d,V,a,↵)

is a cycle that is not simple

[Figure: the example graph with cycles C1 and C2 highlighted]

Properties

Notation
  n: number of vertices
  m: number of edges
  deg(v): degree of vertex v

Property 1
  Σv deg(v) = 2m
  Proof: each edge is counted twice

Property 2
  In an undirected graph with no self-loops and no multiple edges, m ≤ n (n − 1)/2
  Proof: each vertex has degree at most (n − 1)

What is the bound for a directed graph?

Example
  n = 4
  m = 6
  deg(v) = 3
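Property 1 can be checked numerically. The following is a minimal sketch, assuming vertices are numbered 0 to n − 1 and edges are given as pairs; the helper name `degree_sum` is hypothetical. It uses the complete graph on 4 vertices, which matches the slide's example (n = 4, m = 6, every degree equal to 3).

```python
from itertools import combinations


def degree_sum(n, edges):
    """Return the sum of all vertex degrees of an undirected graph on vertices 0..n-1."""
    deg = [0] * n
    for u, v in edges:
        # Each edge contributes one unit of degree to each of its endpoints.
        deg[u] += 1
        deg[v] += 1
    return sum(deg)


# Complete graph on 4 vertices: every pair of distinct vertices is joined by an edge.
n = 4
edges = list(combinations(range(n), 2))
m = len(edges)

total = degree_sum(n, edges)
print(n, m, total)  # the degree sum equals 2m
```

The same graph also attains the bound of Property 2, since a simple undirected graph cannot have more than n (n − 1)/2 edges.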

Main Methods of the Graph ADT

Vertices and edges
  are positions
  store elements
Accessor methods
  aVertex()
  incidentEdges(v)
  endVertices(e)
  isDirected(e)
  origin(e)
  destination(e)
  opposite(v, e)
  areAdjacent(v, w)
Update methods
  insertVertex(o)
  insertEdge(v, w, o)
  insertDirectedEdge(v, w, o)
  removeVertex(v)
  removeEdge(e)
Generic methods
  numVertices()
  numEdges()
  vertices()
  edges()

Edge List Structure

Vertex object
  element
  reference to position in vertex sequence
Edge object
  element
  origin vertex object
  destination vertex object
  reference to position in edge sequence
Vertex sequence
  sequence of vertex objects
Edge sequence
  sequence of edge objects

[Figure: edge list structure for a graph with vertices u, v, w, z and edges a, b, c, d]

Adjacency List Structure

Edge list structure
Incidence sequence for each vertex
  sequence of references to edge objects of incident edges
Augmented edge objects
  references to associated positions in incidence sequences of end vertices

[Figure: adjacency list structure for a graph with vertices u, v, w and edges a, b]
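The adjacency list structure can be sketched in a few lines. This is an illustrative sketch only, not the textbook's implementation: the class name `AdjacencyListGraph` and the snake_case method names are hypothetical stand-ins for the ADT methods, edges are plain tuples, and self-loops are assumed absent.

```python
from collections import defaultdict


class AdjacencyListGraph:
    """Undirected graph: an edge sequence plus one incidence list per vertex."""

    def __init__(self):
        self._incident = defaultdict(list)  # vertex -> list of incident edges
        self._edges = []                    # edge sequence

    def insert_vertex(self, v):
        self._incident[v]  # touching the key creates an empty incidence list

    def insert_edge(self, u, v, element):
        edge = (u, v, element)
        self._edges.append(edge)
        self._incident[u].append(edge)
        self._incident[v].append(edge)
        return edge

    def incident_edges(self, v):
        return list(self._incident[v])

    def opposite(self, v, edge):
        u, w, _ = edge
        return w if v == u else u

    def are_adjacent(self, v, w):
        # Scan the shorter incidence list: O(min(deg(v), deg(w))) time.
        a, b = (v, w) if len(self._incident[v]) <= len(self._incident[w]) else (w, v)
        return any(self.opposite(a, e) == b for e in self._incident[a])

    def num_vertices(self):
        return len(self._incident)

    def num_edges(self):
        return len(self._edges)


g = AdjacencyListGraph()
e = g.insert_edge("PVD", "ORD", 849)
g.insert_edge("ORD", "DFW", 802)
print(g.are_adjacent("PVD", "ORD"), g.opposite("PVD", e))
```

Removal is omitted here; supporting it in O(deg(v)) time is what the augmented edge objects (positions in the incidence sequences) are for.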

Adjacency Matrix Structure

Edge list structure
Augmented vertex objects
  Integer key (index) associated with vertex
2D-array adjacency array
  Reference to edge object for adjacent vertices
  Null for nonadjacent vertices
The “old fashioned” version just has 0 for no edge and 1 for edge

[Figure: adjacency matrix for a graph with vertices u, v, w (indexed 0 to 2) and edges a, b; entries for nonadjacent pairs are ∅]

Asymptotic Performance

n vertices, m edges; no parallel edges; no self-loops; bounds are “big-Oh”

                      Edge List   Adjacency List        Adjacency Matrix
Space                 n + m       n + m                 n²
incidentEdges(v)      m           deg(v)                n
areAdjacent(v, w)     m           min(deg(v), deg(w))   1
insertVertex(o)       1           1                     n²
insertEdge(v, w, o)   1           1                     1
removeVertex(v)       m           deg(v)                n²
removeEdge(e)         1           1                     1

Depth-First Search

[Figure: example graph with vertices A, B, C, D, E]

Outline and Reading

Definitions (§12.1)

Subgraph

Connectivity

Spanning trees and forests

Depth-first search (§12.3.1)

Algorithm

Example

Properties

Analysis

Applications of DFS

Path finding

Cycle finding


Subgraphs

A subgraph S of a graph G is a graph such that
  The vertices of S are a subset of the vertices of G
  The edges of S are a subset of the edges of G
A spanning subgraph of G is a subgraph that contains all the vertices of G

[Figures: a subgraph; a spanning subgraph]

Connectivity

A graph is connected if there is a path between every pair of vertices
A connected component of a graph G is a maximal connected subgraph of G

[Figures: a connected graph; a non-connected graph with two connected components]

Trees and Forests

A (free) tree is an undirected graph T such that
  T is connected
  T has no cycles
  This definition of tree is different from the one of a rooted tree
A forest is an undirected graph without cycles
The connected components of a forest are trees

[Figures: a tree; a forest]

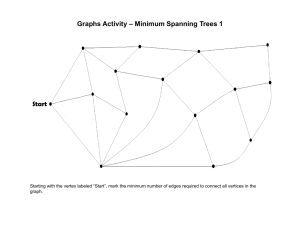

Spanning Trees and Forests

A spanning tree of a connected graph is a spanning subgraph that is a tree
A spanning tree is not unique unless the graph is a tree
Spanning trees have applications to the design of communication networks
A spanning forest of a graph is a spanning subgraph that is a forest

[Figures: a graph; a spanning tree of the graph]

Depth-First Search

Depth-first search (DFS) is a general technique for traversing a graph
A DFS traversal of a graph G
  Visits all the vertices and edges of G
  Determines whether G is connected
  Computes the connected components of G
  Computes a spanning forest of G
DFS on a graph with n vertices and m edges takes O(n + m) time
DFS can be further extended to solve other graph problems
  Find and report a path between two given vertices
  Find a cycle in the graph
Depth-first search is to graphs what Euler tour is to binary trees

DFS Algorithm

The algorithm uses a mechanism for setting and getting “labels” of vertices and edges

Algorithm DFS(G)
  Input graph G
  Output labeling of the edges of G as discovery edges and back edges
  for all u ∈ G.vertices()
    setLabel(u, UNEXPLORED)
  for all e ∈ G.edges()
    setLabel(e, UNEXPLORED)
  for all v ∈ G.vertices()
    if getLabel(v) = UNEXPLORED
      DFS(G, v)

Algorithm DFS(G, v)
  Input graph G and a start vertex v of G
  Output labeling of the edges of G in the connected component of v as discovery edges and back edges
  setLabel(v, VISITED)
  for all e ∈ G.incidentEdges(v)
    if getLabel(e) = UNEXPLORED
      w ← opposite(v,e)
      if getLabel(w) = UNEXPLORED
        setLabel(e, DISCOVERY)
        DFS(G, w)
      else
        setLabel(e, BACK)
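The two DFS routines above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the graph is simple and undirected, it is given as a dictionary mapping each vertex to a list of neighbors, and an edge is represented by the frozenset of its two endpoints. The function name `dfs_label_edges` is hypothetical.

```python
def dfs_label_edges(adj):
    """Label every edge of a simple undirected graph as DISCOVERY or BACK.

    adj maps each vertex to the list of its neighbors.
    """
    visited = set()
    labels = {}  # frozenset({u, v}) -> "DISCOVERY" or "BACK"

    def visit(v):
        visited.add(v)                    # setLabel(v, VISITED)
        for w in adj[v]:
            e = frozenset((v, w))
            if e not in labels:           # edge is still UNEXPLORED
                if w not in visited:
                    labels[e] = "DISCOVERY"
                    visit(w)
                else:
                    labels[e] = "BACK"

    for v in adj:                         # restart in every connected component
        if v not in visited:
            visit(v)
    return labels


adj = {
    "A": ["B", "C"],
    "B": ["A", "C"],
    "C": ["A", "B"],
    "D": ["E"],
    "E": ["D"],
}
labels = dfs_label_edges(adj)
discovery = [e for e, label in labels.items() if label == "DISCOVERY"]
print(len(discovery))
```

The recursion mirrors the pseudocode directly; for very deep graphs Python's recursion limit would call for an explicit stack instead.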

Example

[Figure sequence: DFS on a graph with vertices A, B, C, D, E. Legend: unexplored vertex, visited vertex, unexplored edge, discovery edge, back edge]

DFS and Maze Traversal

The DFS algorithm is similar to a classic strategy for exploring a maze
  We mark each intersection, corner and dead end (vertex) visited
  We mark each corridor (edge) traversed
  We keep track of the path back to the entrance (start vertex) by means of a rope (recursion stack)

Properties of DFS

Property 1
  DFS(G, v) visits all the vertices and edges in the connected component of v
Property 2
  The discovery edges labeled by DFS(G, v) form a spanning tree of the connected component of v

[Figure: DFS traversal of the example graph with vertices A, B, C, D, E]

Analysis of DFS

Setting/getting a vertex/edge label takes O(1) time

Each vertex is labeled twice

once as UNEXPLORED

once as VISITED

Each edge is labeled twice

once as UNEXPLORED

once as DISCOVERY or BACK

Method incidentEdges is called once for each vertex

DFS runs in O(n + m) time provided the graph is represented by the adjacency list structure
  Recall that Σv deg(v) = 2m

Path Finding

We can specialize the DFS algorithm to find a path between two given vertices u and z
We call DFS(G, u) with u as the start vertex
We use a stack S to keep track of the path between the start vertex and the current vertex
As soon as destination vertex z is encountered, we return the path as the contents of the stack

Algorithm pathDFS(G, v, z)
  setLabel(v, VISITED)
  S.push(v)
  if v = z
    return S.elements()
  for all e ∈ G.incidentEdges(v)
    if getLabel(e) = UNEXPLORED
      w ← opposite(v,e)
      if getLabel(w) = UNEXPLORED
        setLabel(e, DISCOVERY)
        S.push(e)
        pathDFS(G, w, z)
        S.pop(e)
      else
        setLabel(e, BACK)
  S.pop(v)
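A simplified Python sketch of path finding by DFS follows. It departs from the pseudocode in two labeled ways: the stack holds vertices only (no edges), and the recursive call returns a flag so that the search stops as soon as z is reached. The adjacency-dictionary input and the name `path_dfs` are assumptions made for illustration.

```python
def path_dfs(adj, start, goal):
    """Return a list of vertices forming a path from start to goal, or None."""
    visited = set()
    path = []  # plays the role of the stack S, holding vertices only

    def visit(v):
        visited.add(v)
        path.append(v)
        if v == goal:
            return True
        for w in adj[v]:
            if w not in visited and visit(w):
                return True   # goal found deeper down: keep the stack as is
        path.pop()            # dead end: v is not on the path to the goal
        return False

    return list(path) if visit(start) else None


adj = {
    "U": ["V", "W"],
    "V": ["U", "X"],
    "W": ["U", "X"],
    "X": ["V", "W", "Z"],
    "Z": ["X"],
    "Q": [],
}
route = path_dfs(adj, "U", "Z")
print(route)
```

The path returned is some path, not necessarily one with the fewest edges; breadth-first search is the tool for that.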

Cycle Finding

We can specialize the DFS algorithm to find a simple cycle
We use a stack S to keep track of the path between the start vertex and the current vertex
As soon as a back edge (v, w) is encountered, we return the cycle as the portion of the stack from the top to vertex w

Algorithm cycleDFS(G, v, z)
  setLabel(v, VISITED)
  S.push(v)
  for all e ∈ G.incidentEdges(v)
    if getLabel(e) = UNEXPLORED
      w ← opposite(v,e)
      S.push(e)
      if getLabel(w) = UNEXPLORED
        setLabel(e, DISCOVERY)
        cycleDFS(G, w, z)
        S.pop(e)
      else
        T ← new empty stack
        repeat
          o ← S.pop()
          T.push(o)
        until o = w
        return T.elements()
  S.pop(v)
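Cycle finding can be sketched the same way. This is an illustrative sketch rather than a transcription of cycleDFS: it assumes a simple undirected graph given as an adjacency dictionary, keeps only vertices on the stack, and recognizes the tree edge back to the parent by comparing vertices (which is why parallel edges are excluded). The name `find_cycle` is hypothetical.

```python
def find_cycle(adj):
    """Return the vertices of a simple cycle, or None if the graph is a forest."""
    visited = set()
    path = []  # vertices on the current DFS path (the stack S)

    def visit(v, parent):
        visited.add(v)
        path.append(v)
        for w in adj[v]:
            if w == parent:
                continue  # the discovery edge we arrived by, not a back edge
            if w in visited:
                # Back edge (v, w): w is an ancestor of v, so it is on the stack.
                return path[path.index(w):]
            found = visit(w, v)
            if found:
                return found
        path.pop()
        return None

    for s in adj:
        if s not in visited:
            cycle = visit(s, None)
            if cycle:
                return cycle
    return None


with_cycle = {"A": ["B"], "B": ["A", "C", "D"], "C": ["B", "D"], "D": ["B", "C"]}
forest = {"A": ["B"], "B": ["A"], "C": []}
print(find_cycle(with_cycle), find_cycle(forest))
```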

Breadth-First Search

[Figure: example graph with vertices A to F arranged in BFS levels L0, L1, L2]

Outline and Reading

Breadth-first search (§12.3.2)

Algorithm

Example

Properties

Analysis

Applications

DFS vs. BFS

Comparison of applications

Comparison of edge labels


Breadth-First Search

Breadth-first search (BFS) is a general technique for traversing a graph
A BFS traversal of a graph G
  Visits all the vertices and edges of G
  Determines whether G is connected
  Computes the connected components of G
  Computes a spanning forest of G
BFS on a graph with n vertices and m edges takes O(n + m) time
BFS can be further extended to solve other graph problems
  Find and report a path with the minimum number of edges between two given vertices
  Find a simple cycle, if there is one

BFS Algorithm

The algorithm uses a mechanism for setting and getting “labels” of vertices and edges

Algorithm BFS(G)
  Input graph G
  Output labeling of the edges and partition of the vertices of G
  for all u ∈ G.vertices()
    setLabel(u, UNEXPLORED)
  for all e ∈ G.edges()
    setLabel(e, UNEXPLORED)
  for all v ∈ G.vertices()
    if getLabel(v) = UNEXPLORED
      BFS(G, v)

Algorithm BFS(G, s)
  L0 ← new empty sequence
  L0.insertLast(s)
  setLabel(s, VISITED)
  i ← 0
  while ¬Li.isEmpty()
    Li+1 ← new empty sequence
    for all v ∈ Li.elements()
      for all e ∈ G.incidentEdges(v)
        if getLabel(e) = UNEXPLORED
          w ← opposite(v,e)
          if getLabel(w) = UNEXPLORED
            setLabel(e, DISCOVERY)
            setLabel(w, VISITED)
            Li+1.insertLast(w)
          else
            setLabel(e, CROSS)
    i ← i + 1
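The level-by-level structure of BFS(G, s) can be sketched as below. This minimal sketch computes only the partition of the vertices into levels L0, L1, … and omits the edge labels; the adjacency-dictionary input and the name `bfs_levels` are assumptions, and the sample graph is made up for illustration.

```python
def bfs_levels(adj, s):
    """Return the BFS levels [L0, L1, ...] of the connected component of s."""
    levels = [[s]]
    visited = {s}
    while levels[-1]:                  # while the current level is not empty
        next_level = []
        for v in levels[-1]:
            for w in adj[v]:
                if w not in visited:   # (v, w) would be a discovery edge
                    visited.add(w)
                    next_level.append(w)
        levels.append(next_level)
    levels.pop()                       # drop the trailing empty level
    return levels


adj = {
    "A": ["B", "C", "D"],
    "B": ["A", "C", "E"],
    "C": ["A", "B", "D", "E", "F"],
    "D": ["A", "C", "F"],
    "E": ["B", "C"],
    "F": ["C", "D"],
}
levels = bfs_levels(adj, "A")
print(levels)
```

A vertex in level i is exactly i edges away from s, which is what makes BFS suitable for minimum-edge paths.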

Example

[Figure sequence: BFS on a graph with vertices A to F and levels L0, L1, L2. Legend: unexplored vertex, visited vertex, unexplored edge, discovery edge, cross edge]

Properties

Notation
  Gs: connected component of s
Property 1
  BFS(G, s) visits all the vertices and edges of Gs
Property 2
  The discovery edges labeled by BFS(G, s) form a spanning tree Ts of Gs
Property 3
  For each vertex v in Li
    The path of Ts from s to v has i edges
    Every path from s to v in Gs has at least i edges

[Figures: the example graph on vertices A to F and its BFS tree with levels L0, L1, L2]

Analysis

Setting/getting a vertex/edge label takes O(1) time

Each vertex is labeled twice

once as UNEXPLORED

once as VISITED

Each edge is labeled twice

once as UNEXPLORED

once as DISCOVERY or CROSS

Each vertex is inserted once into a sequence Li

Method incidentEdges() is called once for each vertex

BFS runs in O(n + m) time provided the graph is represented by the adjacency list structure
  Recall that Σv deg(v) = 2m

Applications

Using the template method pattern, we can

specialize the BFS traversal of a graph G to

solve the following problems in O(n + m) time

Compute the connected components of G

Compute a spanning forest of G

Find a simple cycle in G, or report that G is a

forest

Given two vertices of G, find a path in G between

them with the minimum number of edges, or

report that no such path exists


DFS vs. BFS

Applications                                           DFS   BFS
Spanning forest, connected components, paths, cycles   √     √
Shortest paths                                               √
Biconnected components                                 √

[Figures: DFS and BFS traversals of the example graph on vertices A to F, with BFS levels L0, L1, L2]

DFS vs. BFS (cont.)

Back edge (v,w)
  w is an ancestor of v in the tree of discovery edges
Cross edge (v,w)
  w is in the same level as v or in the next level in the tree of discovery edges

[Figures: DFS and BFS traversals of the example graph on vertices A to F, with BFS levels L0, L1, L2]

Directed Graphs

[Figure: flight digraph on the airports BOS, ORD, JFK, SFO, DFW, LAX, MIA]

Outline and Reading (§12.4)

Reachability (§12.4.1)

Directed DFS

Strong connectivity

Transitive closure (§12.4.2)

The Floyd-Warshall Algorithm

Directed Acyclic Graphs (DAG’s) (§12.4.3)

Topological Sorting


Digraphs

A digraph is a graph whose edges are all directed
  Short for “directed graph”
Applications
  one-way streets
  flights
  task scheduling

[Figure: example digraph with vertices A, B, C, D, E]

Digraph Properties

A graph G=(V,E) such that
  Each edge goes in one direction:
    Edge (a,b) goes from a to b, but not b to a.
If G is simple, m ≤ n*(n-1).
If we keep in-edges and out-edges in separate adjacency lists, we can perform listing of in-edges and out-edges in time proportional to their size.

[Figure: example digraph with vertices A, B, C, D, E]

Digraph Application

Scheduling: edge (a,b) means task a must be completed before b can be started

[Figure: course prerequisite digraph on ics21, ics22, ics23, ics51, ics52, ics53, ics121, ics131, ics141, ics151, ics161, ics171, ending in “The good life”]

Directed DFS

We can specialize the traversal algorithms (DFS and BFS) to digraphs by traversing edges only along their direction
A directed DFS starting at a vertex s determines the vertices reachable from s

[Figure: directed DFS on the example digraph with vertices A, B, C, D, E]

Reachability

DFS tree rooted at v: vertices reachable from v via directed paths

[Figures: example digraphs on vertices A to F illustrating reachability]

Strong Connectivity

Each vertex can reach all other vertices

[Figure: strongly connected digraph on vertices a to g]

Strong Connectivity Algorithm

Pick a vertex v in G.
Perform a DFS from v in G.
  If there’s a w not visited, print “no”.
Let G’ be G with edges reversed.
Perform a DFS from v in G’.
  If there’s a w not visited, print “no”.
  Else, print “yes”.
Running time: O(n+m).

[Figures: digraph G on vertices a to g and its reversal G’]
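The two-traversal test above can be sketched as follows. This sketch assumes the digraph is a dictionary mapping each vertex to its list of out-neighbors, and it uses an iterative depth-first traversal with an explicit stack in place of recursive DFS. The names `reachable` and `is_strongly_connected` are hypothetical.

```python
def reachable(adj, s):
    """Return the set of vertices reachable from s along directed edges."""
    seen = {s}
    stack = [s]
    while stack:
        v = stack.pop()
        for w in adj.get(v, ()):
            if w not in seen:
                seen.add(w)
                stack.append(w)
    return seen


def is_strongly_connected(adj):
    """Check strong connectivity with one traversal of G and one of G reversed."""
    vertices = set(adj) | {w for ws in adj.values() for w in ws}
    if not vertices:
        return True
    v = next(iter(vertices))
    if reachable(adj, v) != vertices:      # some w is not reachable from v
        return False
    reverse = {u: [] for u in vertices}    # G' = G with every edge reversed
    for u, ws in adj.items():
        for w in ws:
            reverse[w].append(u)
    return reachable(reverse, v) == vertices  # every w can reach v in G


cycle = {"a": ["b"], "b": ["c"], "c": ["a"]}
chain = {"a": ["b"], "b": ["c"], "c": []}
print(is_strongly_connected(cycle), is_strongly_connected(chain))
```

The test is sufficient because if v reaches every vertex and every vertex reaches v, any u reaches any w by going through v.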

Strongly Connected Components

Maximal subgraphs such that each vertex can reach all other vertices in the subgraph
Can also be done in O(n+m) time using DFS

[Figure: digraph on vertices a to g with strongly connected components {a,c,g} and {f,d,e,b}]

Transitive Closure

Given a digraph G, the

transitive closure of G is the

digraph G* such that

G* has the same vertices

as G

if G has a directed path

from u to v (u ≠ v), G*

has a directed edge from

u to v

The transitive closure

provides reachability

information about a digraph

[Figures: a digraph G on vertices A, B, C, D, E and its transitive closure G*]

Computing the Transitive Closure

We can perform DFS starting at each vertex
  O(n(n+m))
If there's a way to get from A to B and from B to C, then there's a way to get from A to C.
Alternatively ... Use dynamic programming: The Floyd-Warshall Algorithm

Floyd-Warshall Transitive Closure

Idea #1: Number the vertices 1, 2, …, n.
Idea #2: Consider paths that use only vertices numbered 1, 2, …, k, as intermediate vertices:

[Figure: a path from i to j that uses only vertices numbered 1,…,k (add this edge if it’s not already in) is composed of a path from i to k and a path from k to j, each using only vertices numbered 1,…,k-1]

Floyd-Warshall’s Algorithm

Floyd-Warshall’s algorithm numbers the vertices of G as v1 , …, vn and computes a series of digraphs G0, …, Gn
  G0=G
  Gk has a directed edge (vi, vj) if G has a directed path from vi to vj with intermediate vertices in the set {v1 , …, vk}
We have that Gn = G*
In phase k, digraph Gk is computed from Gk − 1
Running time: O(n³), assuming areAdjacent is O(1) (e.g., adjacency matrix)

Algorithm FloydWarshall(G)
  Input digraph G
  Output transitive closure G* of G
  i ← 1
  for all v ∈ G.vertices()
    denote v as vi
    i ← i + 1
  G0 ← G
  for k ← 1 to n do
    Gk ← Gk − 1
    for i ← 1 to n (i ≠ k) do
      for j ← 1 to n (j ≠ i, k) do
        if Gk − 1.areAdjacent(vi, vk) ∧ Gk − 1.areAdjacent(vk, vj)
          if ¬Gk.areAdjacent(vi, vj)
            Gk.insertDirectedEdge(vi, vj , k)
  return Gn
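A compact sketch of the same dynamic program on a boolean adjacency matrix is given below. It is an assumption-laden simplification: vertices are numbered 0 to n − 1, a single matrix is updated in place instead of building G0, …, Gn, and the i ≠ j restriction is dropped, so a diagonal entry becomes true when a vertex lies on a directed cycle. The name `transitive_closure` is hypothetical.

```python
def transitive_closure(n, edges):
    """Return reach, where reach[i][j] is True iff there is a directed path i -> j.

    Vertices are 0..n-1; edges is an iterable of (u, v) pairs.
    """
    reach = [[False] * n for _ in range(n)]
    for u, v in edges:
        reach[u][v] = True
    for k in range(n):              # phase k: allow k as an intermediate vertex
        for i in range(n):
            if reach[i][k]:
                for j in range(n):
                    if reach[k][j]:
                        reach[i][j] = True
    return reach


closure = transitive_closure(3, [(0, 1), (1, 2)])
print(closure[0][2], closure[2][0])
```

Three nested loops over n vertices give the O(n³) running time, with each adjacency test costing O(1) on the matrix.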

Floyd-Warshall Example

[Figure sequence: Floyd-Warshall on the flight digraph with airports BOS, ORD, JFK, SFO, DFW, LAX, MIA, numbered v1 to v7. Slides: initial digraph, Iterations 1 to 6, Conclusion]

DAGs and Topological Ordering

A directed acyclic graph (DAG) is a digraph that has no directed cycles
A topological ordering of a digraph is a numbering v1 , …, vn of the vertices such that for every edge (vi , vj), we have i < j
Example: in a task scheduling digraph, a topological ordering is a task sequence that satisfies the precedence constraints
Theorem
  A digraph admits a topological ordering if and only if it is a DAG

[Figures: a DAG G on vertices A, B, C, D, E; a topological ordering v1, …, v5 of G]

Topological Sorting

Number vertices so that (u,v) in E implies u < v

[Figure: “A typical day” task DAG numbered 1 to 11, with tasks: wake up, study computer sci., eat, nap, play, write c.s. program, more c.s., work out, go out w/ friends, sleep, dream about graphs]

Algorithm for Topological Sorting

Method TopologicalSort(G)
  H ← G // Temporary copy of G
  n ← G.numVertices()
  while H is not empty do
    Let v be a vertex with no outgoing edges
    Label v ← n
    n ← n - 1
    Remove v from H

Running time: O(n + m). Why?
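One way to reach the O(n + m) bound for the method above is sketched here. Instead of physically copying and shrinking H, the sketch keeps an out-degree counter per vertex and a queue of current sinks; this is an implementation choice assumed for illustration, not something the slide prescribes. The input format (dictionary of successor lists with every vertex as a key) and the name `topological_numbers` are also assumptions.

```python
from collections import deque


def topological_numbers(adj):
    """Number the vertices of a DAG so that every edge goes from low to high.

    adj maps every vertex to the list of its successors.
    """
    out_degree = {v: len(ws) for v, ws in adj.items()}
    preds = {v: [] for v in adj}
    for v, ws in adj.items():
        for w in ws:
            preds[w].append(v)

    sinks = deque(v for v, d in out_degree.items() if d == 0)
    number = {}
    n = len(adj)
    while sinks:
        v = sinks.popleft()      # a vertex with no outgoing edges left
        number[v] = n
        n -= 1
        for u in preds[v]:       # "remove v": each predecessor loses an out-edge
            out_degree[u] -= 1
            if out_degree[u] == 0:
                sinks.append(u)
    if len(number) != len(adj):
        raise ValueError("graph has a directed cycle")
    return number


day = {"wake up": ["eat", "study"], "eat": ["sleep"], "study": ["sleep"], "sleep": []}
number = topological_numbers(day)
print(number["wake up"], number["sleep"])
```

Each vertex enters the queue once and each edge is examined once when its head is removed, which accounts for the n + m total.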

Topological Sorting Algorithm using DFS

Algorithm topologicalDFS(G)
  Input dag G
  Output topological ordering of G
  n ← G.numVertices()
  for all u ∈ G.vertices()
    setLabel(u, UNEXPLORED)
  for all e ∈ G.edges()
    setLabel(e, UNEXPLORED)
  for all v ∈ G.vertices()
    if getLabel(v) = UNEXPLORED
      topologicalDFS(G, v)

O(n+m) time

Algorithm topologicalDFS(G, v)
  Input graph G and a start vertex v of G
  Output labeling of the vertices of G in the connected component of v
  setLabel(v, VISITED)
  for all e ∈ G.incidentEdges(v)
    if getLabel(e) = UNEXPLORED
      w ← opposite(v,e)
      if getLabel(w) = UNEXPLORED
        setLabel(e, DISCOVERY)
        topologicalDFS(G, w)
      else
        {e is a forward or cross edge}
  Label v with topological number n
  n ← n - 1
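The DFS-based numbering can be sketched in Python as below, assuming the input is a DAG given as a dictionary of successor lists. Edge labels are omitted because they do not affect the numbering; the name `topological_dfs` is hypothetical.

```python
def topological_dfs(adj):
    """Return a topological numbering of a DAG using depth-first search.

    adj maps every vertex to the list of its successors.
    """
    number = {}
    visited = set()
    counter = len(adj)

    def visit(v):
        nonlocal counter
        visited.add(v)
        for w in adj[v]:
            if w not in visited:
                visit(w)
        # All vertices reachable from v are already numbered, with larger numbers.
        number[v] = counter
        counter -= 1

    for v in adj:
        if v not in visited:
            visit(v)
    return number


dag = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": [], "E": ["C"]}
number = topological_dfs(dag)
print(number)
```

A vertex receives its number only when its recursive call finishes, so numbers decrease in order of completion and every edge points from a smaller number to a larger one.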

Topological Sorting Example

[Figure sequence: DFS-based topological sorting of a nine-vertex DAG; the numbers 9, 8, …, 1 are assigned one per slide as each recursive call finishes]

Shortest Paths

[Figure: weighted example graph on vertices A to F with distance labels]

Outline and Reading

Weighted graphs (§12.1)

Shortest path problem

Shortest path properties

Dijkstra’s algorithm (§12.6.1)

Algorithm

Edge relaxation

The Bellman-Ford algorithm

Shortest paths in DAGs

All-pairs shortest paths


Weighted Graphs

In a weighted graph, each edge has an associated numerical

value, called the weight of the edge

Edge weights may represent distances, costs, etc.

Example:

In a flight route graph, the weight of an edge represents the

distance in miles between the endpoint airports

[Figure: weighted flight route graph on the airports SFO, ORD, LAX, DFW, PVD, LGA, HNL, MIA, with edge weights in miles]

Shortest Path Problem

Given a weighted graph and two vertices u and v, we want to

find a path of minimum total weight between u and v.

Length of a path is the sum of the weights of its edges.

Example:

Shortest path between Providence and Honolulu

Applications

Internet packet routing

Flight reservations

Driving directions

[Figure: weighted flight route graph on the airports SFO, ORD, LAX, DFW, PVD, LGA, HNL, MIA, with the shortest path between PVD and HNL highlighted]

Shortest Path Properties

Property 1:

A subpath of a shortest path is itself a shortest path

Property 2:

There is a tree of shortest paths from a start vertex to all the other

vertices

Example:

Tree of shortest paths from Providence

[Figure: weighted flight route graph on the airports SFO, ORD, LAX, DFW, PVD, LGA, HNL, MIA, with the tree of shortest paths from PVD highlighted]

Dijkstra’s Algorithm

The distance of a vertex v from a vertex s is the length of a shortest path between s and v
Dijkstra’s algorithm computes the distances of all the vertices from a given start vertex s
Assumptions:
  the graph is connected
  the edges are undirected
  the edge weights are nonnegative
We grow a “cloud” of vertices, beginning with s and eventually covering all the vertices
We store with each vertex v a label d(v) representing the distance of v from s in the subgraph consisting of the cloud and its adjacent vertices
At each step
  We add to the cloud the vertex u outside the cloud with the smallest distance label, d(u)
  We update the labels of the vertices adjacent to u (edge relaxation)

Edge Relaxation

Consider an edge e = (u,z) such that
  u is the vertex most recently added to the cloud
  z is not in the cloud
The relaxation of edge e updates distance d(z) as follows:
  d(z) ← min{d(z),d(u) + weight(e)}

[Figure: relaxing edge e = (u,z) of weight 10 with d(u) = 50 changes d(z) from 75 to 60]

Example

[Figure sequence: Dijkstra’s algorithm on the weighted example graph with vertices A to F, starting from A; distance labels are updated as the cloud grows]

Dijkstra’s Algorithm

A priority queue stores the vertices outside the cloud
  Key: distance
  Element: vertex
Locator-based methods
  insert(k,e) returns a locator
  replaceKey(l,k) changes the key of an item
We store two labels with each vertex:
  distance (d(v) label)
  locator in priority queue

Algorithm DijkstraDistances(G, s)
  Q ← new heap-based priority queue
  for all v ∈ G.vertices()
    if v = s
      setDistance(v, 0)
    else
      setDistance(v, ∞)
    l ← Q.insert(getDistance(v), v)
    setLocator(v,l)
  while ¬Q.isEmpty()
    u ← Q.removeMin()
    for all e ∈ G.incidentEdges(u)
      { relax edge e }
      z ← G.opposite(u,e)
      r ← getDistance(u) + weight(e)
      if r < getDistance(z)
        setDistance(z,r)
        Q.replaceKey(getLocator(z),r)
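A Python sketch of DijkstraDistances follows. Python's `heapq` module has no locators or `replaceKey`, so this sketch swaps in a different technique: it pushes a fresh entry whenever a distance improves and skips stale entries when they are popped. The adjacency-dictionary format with `(neighbor, weight)` pairs and the name `dijkstra_distances` are assumptions; the sample graph is made up.

```python
import heapq


def dijkstra_distances(adj, s):
    """Return shortest-path distances from s; edge weights must be nonnegative.

    adj maps each vertex to a list of (neighbor, weight) pairs.
    """
    dist = {v: float("inf") for v in adj}
    dist[s] = 0
    heap = [(0, s)]
    in_cloud = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in in_cloud:
            continue                      # stale entry: u was finalized earlier
        in_cloud.add(u)
        for z, weight in adj[u]:
            r = d + weight                # relax edge (u, z)
            if r < dist[z]:
                dist[z] = r
                heapq.heappush(heap, (r, z))
    return dist


adj = {
    "A": [("B", 4), ("C", 1)],
    "B": [("A", 4), ("C", 2), ("D", 5)],
    "C": [("A", 1), ("B", 2)],
    "D": [("B", 5)],
}
dist = dijkstra_distances(adj, "A")
print(dist)
```

With lazy deletion the heap can hold up to m entries, so the bound is O((n + m) log m), which is still O((n + m) log n) for a simple graph.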

Analysis

Graph operations
  Method incidentEdges is called once for each vertex
Label operations
  We set/get the distance and locator labels of vertex z O(deg(z)) times
  Setting/getting a label takes O(1) time
Priority queue operations
  Each vertex is inserted once into and removed once from the priority queue, where each insertion or removal takes O(log n) time
  The key of a vertex w in the priority queue is modified at most deg(w) times, where each key change takes O(log n) time
Dijkstra’s algorithm runs in O((n + m) log n) time provided the graph is represented by the adjacency list structure
  Recall that Σv deg(v) = 2m
The running time can also be expressed as O(m log n) since the graph is connected

Extension

We can extend Dijkstra’s algorithm to return a tree of shortest paths from the start vertex to all other vertices
We store with each vertex a third label:
  parent edge in the shortest path tree
In the edge relaxation step, we update the parent label

Algorithm DijkstraShortestPathsTree(G, s)
  …
  for all v ∈ G.vertices()
    …
    setParent(v, ∅)
    …
  for all e ∈ G.incidentEdges(u)
    { relax edge e }
    z ← G.opposite(u,e)
    r ← getDistance(u) + weight(e)
    if r < getDistance(z)
      setDistance(z,r)
      setParent(z,e)
      Q.replaceKey(getLocator(z),r)

Why Dijkstra’s Algorithm Works

Dijkstra’s algorithm is based on the greedy method. It adds vertices by increasing distance.
  Suppose it didn’t find all shortest distances. Let F be the first wrong vertex the algorithm processed.
  When the previous node, D, on the true shortest path was considered, its distance was correct.
  But the edge (D,F) was relaxed at that time!
  Thus, so long as d(F)>d(D), F’s distance cannot be wrong. That is, there is no wrong vertex.

[Figure: the weighted example graph on vertices A to F with final distance labels]

Why It Doesn’t Work for Negative-Weight Edges

Dijkstra’s algorithm is based on the greedy method. It adds vertices by increasing distance.
  If a node with a negative incident edge were to be added late to the cloud, it could mess up distances for vertices already in the cloud.

[Figure: example graph on vertices A to F containing an edge of weight -8. C’s true distance is 1, but it is already in the cloud with d(C)=5!]

Bellman-Ford Algorithm

Works even with negative-weight edges
Must assume directed edges (for otherwise we would have negative-weight cycles)
Iteration i finds all shortest paths that use i edges.
Running time: O(nm).
Can be extended to detect a negative-weight cycle if it exists
  How?

Algorithm BellmanFord(G, s)
  for all v ∈ G.vertices()
    if v = s
      setDistance(v, 0)
    else
      setDistance(v, ∞)
  for i ← 1 to n-1 do
    for each e ∈ G.edges()
      { relax edge e }
      u ← G.origin(e)
      z ← G.opposite(u,e)
      r ← getDistance(u) + weight(e)
      if r < getDistance(z)
        setDistance(z,r)
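A Python sketch of BellmanFord follows, together with one common way of answering the “How?” above: after the n − 1 rounds, make one more pass over the edges, and if any edge can still be relaxed, a negative-weight cycle is reachable from s. The extra pass is a standard extension rather than something spelled out on the slide. The edge-list input format and the name `bellman_ford` are assumptions.

```python
def bellman_ford(vertices, edges, s):
    """Return distances from s in a digraph that may have negative edge weights.

    edges is a list of (origin, destination, weight) triples.
    Raises ValueError if a negative-weight cycle is reachable from s.
    """
    dist = {v: float("inf") for v in vertices}
    dist[s] = 0
    for _ in range(len(vertices) - 1):
        for u, z, weight in edges:
            r = dist[u] + weight          # relax edge (u, z)
            if r < dist[z]:
                dist[z] = r
    # Extra pass: any further improvement means distances are unbounded below.
    for u, z, weight in edges:
        if dist[u] + weight < dist[z]:
            raise ValueError("negative-weight cycle reachable from s")
    return dist


vertices = ["s", "a", "b"]
edges = [("s", "a", 4), ("s", "b", 5), ("b", "a", -2)]
dist = bellman_ford(vertices, edges, "s")
print(dist)
```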

Bellman-Ford Example

Nodes are labeled with their d(v) values

[Figure sequence: four stages of Bellman-Ford on a weighted digraph with negative edge weights; labels change from ∞ to their final distances]

DAG-based Algorithm

Works even with negative-weight edges
Uses topological order
Uses simple data structures
Is much faster than Dijkstra’s algorithm
Running time: O(n+m).

Algorithm DagDistances(G, s)
  for all v ∈ G.vertices()
    if v = s
      setDistance(v, 0)
    else
      setDistance(v, ∞)
  Perform a topological sort of the vertices
  for u ← 1 to n do {in topological order}
    for each e ∈ G.outEdges(u)
      { relax edge e }
      z ← G.opposite(u,e)
      r ← getDistance(u) + weight(e)
      if r < getDistance(z)
        setDistance(z,r)
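DagDistances can be sketched in Python as below. The sketch assumes the DAG is a dictionary mapping each vertex to a list of `(successor, weight)` pairs and obtains the topological order from a depth-first search; the name `dag_distances` and the sample graph are made up for illustration.

```python
def dag_distances(adj, s):
    """Return shortest-path distances from s in a weighted DAG.

    adj maps every vertex to a list of (successor, weight) pairs;
    negative weights are allowed.
    """
    order = []
    visited = set()

    def visit(v):
        visited.add(v)
        for w, _ in adj[v]:
            if w not in visited:
                visit(w)
        order.append(v)        # v finishes after everything it can reach

    for v in adj:
        if v not in visited:
            visit(v)
    order.reverse()            # reverse finishing order is a topological order

    dist = {v: float("inf") for v in adj}
    dist[s] = 0
    for u in order:
        for z, weight in adj[u]:
            r = dist[u] + weight   # relax out-edge (u, z)
            if r < dist[z]:
                dist[z] = r
    return dist


dag = {
    "s": [("a", 2), ("b", 6)],
    "a": [("b", -5), ("c", 4)],
    "b": [("c", 1)],
    "c": [],
}
dist = dag_distances(dag, "s")
print(dist)
```

Because every vertex is processed after all of its predecessors, each edge needs to be relaxed only once, which is where the O(n + m) bound comes from.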

DAG Example

Nodes are labeled with their d(v) values

[Figure sequence: DagDistances on a weighted DAG with vertices numbered 1 to 6 in topological order and negative edge weights; the last panel covers two steps]

All-Pairs Shortest Paths

Find the distance between every pair of vertices in a weighted directed graph G.
We can make n calls to Dijkstra’s algorithm (if no negative edges), which takes O(nm log n) time.
Likewise, n calls to Bellman-Ford would take O(n²m) time.
We can achieve O(n³) time using dynamic programming (similar to the Floyd-Warshall algorithm).

Algorithm AllPair(G) {assumes vertices 1,…,n}
  for all vertex pairs (i,j)
    if i = j
      D0[i,i] ← 0
    else if (i,j) is an edge in G
      D0[i,j] ← weight of edge (i,j)
    else
      D0[i,j] ← +∞
  for k ← 1 to n do
    for i ← 1 to n do
      for j ← 1 to n do
        Dk[i,j] ← min{Dk-1[i,j], Dk-1[i,k]+Dk-1[k,j]}
  return Dn

[Figure: a path from i to j using only vertices numbered 1,…,k (compute weight of this edge) is composed of a path from i to k and a path from k to j, each using only vertices numbered 1,…,k-1]
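The AllPair recurrence can be sketched in Python as follows. The sketch numbers vertices 0 to n − 1 and updates a single matrix in place instead of keeping D0, …, Dn, a common space-saving simplification that gives the same result when there are no negative-weight cycles. The name `all_pairs` and the input format are assumptions.

```python
def all_pairs(n, weighted_edges):
    """Return the matrix D with D[i][j] = shortest-path distance from i to j.

    Vertices are 0..n-1; weighted_edges is an iterable of (i, j, weight).
    Assumes the digraph has no negative-weight cycles.
    """
    inf = float("inf")
    D = [[0 if i == j else inf for j in range(n)] for i in range(n)]
    for i, j, weight in weighted_edges:
        D[i][j] = min(D[i][j], weight)
    for k in range(n):                     # allow k as an intermediate vertex
        for i in range(n):
            for j in range(n):
                through_k = D[i][k] + D[k][j]
                if through_k < D[i][j]:
                    D[i][j] = through_k
    return D


D = all_pairs(3, [(0, 1, 3), (1, 2, -1), (0, 2, 5)])
print(D[0][2], D[2][0])
```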

Minimum Spanning Trees

[Figure: weighted flight route graph on the airports BOS, PVD, ORD, BWI, DFW, LAX, JFK, SFO, MIA, with edge weights in miles]

Outline and Reading

Minimum Spanning Trees (§12.7)

Definitions

A crucial fact

The Prim-Jarnik Algorithm (§12.7.2)

Kruskal's Algorithm (§12.7.1)

Borůvka's Algorithm

Minimum Spanning Tree

Spanning subgraph
  Subgraph of a graph G containing all the vertices of G
Spanning tree
  Spanning subgraph that is itself a (free) tree
Minimum spanning tree (MST)
  Spanning tree of a weighted graph with minimum total edge weight
Applications
  Communications networks
  Transportation networks

[Figure: weighted graph on the airports ORD, DEN, PIT, STL, DCA, DFW, ATL with edge weights 1 to 10]

Cycle Property

Cycle Property:
  Let T be a minimum spanning tree of a weighted graph G
  Let e be an edge of G that is not in T and let C be the cycle formed by e with T
  For every edge f of C, weight(f) ≤ weight(e)
Proof:
  By contradiction
  If weight(f) > weight(e) we can get a spanning tree of smaller weight by replacing f with e

[Figures: weighted graph with cycle C formed by e with T; replacing f with e yields a better spanning tree]

Partition Property

Partition Property:
  Consider a partition of the vertices of G into subsets U and V
  Let e be an edge of minimum weight across the partition
  There is a minimum spanning tree of G containing edge e
Proof:
  Let T be an MST of G
  If T does not contain e, consider the cycle C formed by e with T and let f be an edge of C across the partition
  By the cycle property, weight(f) ≤ weight(e)
  Since e has minimum weight across the partition, weight(e) ≤ weight(f)
  Thus, weight(f) = weight(e)
  We obtain another MST by replacing f with e

[Figures: weighted graph with vertex partition U, V and edges e and f across it; replacing f with e yields another MST]

Prim-Jarnik’s Algorithm

Similar to Dijkstra’s algorithm (for a connected graph)

We pick an arbitrary vertex s and we grow the MST as a

cloud of vertices, starting from s

We store with each vertex v a label d(v) = the smallest

weight of an edge connecting v to a vertex in the cloud

At each step:

We add to the cloud the

vertex u outside the cloud

with the smallest distance

label

We update the labels of the

vertices adjacent to u


Prim-Jarnik’s Algorithm (cont.)

A priority queue stores the vertices outside the cloud

Key: distance

Element: vertex

Locator-based methods

insert(k,e) returns a locator

replaceKey(l,k) changes the key of an item

We store three labels with each vertex:

Distance

Parent edge in MST

Locator in priority queue

Algorithm PrimJarnikMST(G)
  Q ← new heap-based priority queue
  s ← a vertex of G
  for all v ∈ G.vertices()
    if v = s
      setDistance(v, 0)
    else
      setDistance(v, ∞)
    setParent(v, ∅)
    l ← Q.insert(getDistance(v), v)
    setLocator(v,l)
  while ¬Q.isEmpty()
    u ← Q.removeMin()
    for all e ∈ G.incidentEdges(u)
      z ← G.opposite(u,e)
      r ← weight(e)
      {update z only while it is still outside the cloud, i.e., still in Q}
      if z is in Q and r < getDistance(z)
        setDistance(z,r)
        setParent(z,e)
        Q.replaceKey(getLocator(z),r)
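The pseudocode above can be sketched in Python as follows. This is a minimal illustration, not the slides' own implementation: Python's `heapq` module has no locator or `replaceKey` operation, so the sketch swaps in lazy deletion (it pushes a new entry whenever a label improves and skips stale entries when they are popped). The function name `prim_jarnik_mst`, the dict-of-dicts graph representation, and the example graph are assumptions made for illustration.

```python
import heapq
from math import inf


def prim_jarnik_mst(graph, start):
    """Return (total_weight, parent) for a connected, undirected weighted graph.

    graph: dict mapping each vertex to a dict {neighbor: edge_weight}
           (hypothetical representation chosen for this sketch).
    parent[v] is the cloud vertex through which v joined the cloud.
    """
    distance = {v: inf for v in graph}   # the label d(v)
    parent = {v: None for v in graph}    # stands in for the parent-edge label
    distance[start] = 0
    in_cloud = set()
    heap = [(0, start)]                  # entries are (key, vertex)
    total = 0
    while heap:
        d, u = heapq.heappop(heap)
        if u in in_cloud:
            continue                     # stale entry left by lazy deletion
        in_cloud.add(u)
        total += d
        for z, r in graph[u].items():
            # Only vertices still outside the cloud may have their label lowered.
            if z not in in_cloud and r < distance[z]:
                distance[z] = r
                parent[z] = u
                heapq.heappush(heap, (r, z))  # replaces replaceKey
    return total, parent


example = {
    "A": {"B": 2, "C": 3},
    "B": {"A": 2, "C": 1, "D": 4},
    "C": {"A": 3, "B": 1, "D": 5},
    "D": {"B": 4, "C": 5},
}
total, parent = prim_jarnik_mst(example, "A")
print(total)   # 7
print(parent)  # {'A': None, 'B': 'A', 'C': 'B', 'D': 'B'}
```

With lazy deletion the heap can hold up to one entry per edge rather than one per vertex, but each operation still costs O(log n) since log m = O(log n). Vertices must be mutually comparable (here, strings) because ties on the key fall back to comparing the vertex.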

Example

[Figure: step-by-step run of Prim-Jarnik’s algorithm on a weighted graph with vertices A through F; vertex labels show the current distance d(v)]

Analysis

Graph operations

Method incidentEdges is called once for each vertex

Label operations

We set/get the distance, parent and locator labels of vertex z O(deg(z)) times

Setting/getting a label takes O(1) time

Priority queue operations

Each vertex is inserted once into and removed once from the priority queue, where each insertion or removal takes O(log n) time

The key of a vertex w in the priority queue is modified at most deg(w) times, where each key change takes O(log n) time

Prim-Jarnik’s algorithm runs in O((n + m) log n) time provided the graph is represented by the adjacency list structure

Recall that Σ_v deg(v) = 2m

The running time is O(m log n) since the graph is connected
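The bound above follows from summing the priority queue costs listed on this slide. The following is a sketch of that arithmetic, with n the number of vertices and m the number of edges; the name T(n, m) for the total running time is introduced here only for illustration.

```latex
\begin{align*}
T(n, m) &= \underbrace{n \cdot O(\log n)}_{\text{insertions}}
         + \underbrace{n \cdot O(\log n)}_{\text{removals}}
         + \underbrace{\sum_{w} \deg(w) \cdot O(\log n)}_{\text{key changes}} \\
        &= O(n \log n) + O(2m \log n) \\
        &= O((n + m) \log n).
\end{align*}
```

A connected graph has m ≥ n − 1, so n is O(m) and the bound simplifies to O(m log n).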


Kruskal’s Algorithm

A priority queue stores the edges outside the cloud

Key: weight

Element: edge

At the end of the algorithm

We are left with one cloud that encompasses the MST

A tree T which is our MST

Algorithm KruskalMST(G)
  for each vertex v in G do
    define a Cloud(v) ← {v}
  let Q be a priority queue.
  Insert all edges into Q using their weights as the key
  T ← ∅
  while T has fewer than n-1 edges do
    edge e ← Q.removeMin()
    Let u, v be the endpoints of e
    if Cloud(v) ≠ Cloud(u) then
      Add edge e to T
      Merge Cloud(v) and Cloud(u)
  return T

Kruskal Example

[Figure: step-by-step run of Kruskal’s algorithm on a flight network with vertices BOS, PVD, JFK, BWI, ORD, MIA, DFW, SFO, and LAX; the edge weights are 144, 184, 187, 337, 621, 740, 802, 849, 867, 946, 1090, 1121, 1235, 1258, 1391, 1464, 1846, 2342, and 2704]

Data Structure for Kruskal’s Algorithm

The algorithm maintains a forest of trees

An edge is accepted if it connects distinct trees

We need a data structure that maintains a partition, i.e., a collection of disjoint sets, with the operations:

-find(u): return the set storing u

-union(u,v): replace the sets storing u and v with their union

Representation of a Partition

Each set is stored in a sequence

Each element has a reference back to the set

Operation find(u) takes O(1) time, and returns the set of which u is a member

In operation union(u,v), we move the elements of the smaller set to the sequence of the larger set and update their references

The time for operation union(u,v) is O(min(nu,nv)), where nu and nv are the sizes of the sets storing u and v

Whenever an element is moved, it goes into a set of at least double the size, hence each element is moved at most log n times
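The sequence-based representation described above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: each set is a Python list, the back-reference is a dict from element to its list, and the class name `Partition` and attribute `_set_of` are hypothetical names chosen here.

```python
class Partition:
    """Sequence-based partition: each set is a list, each element refers to its list."""

    def __init__(self, elements):
        # Initially every element forms its own singleton set.
        self._set_of = {x: [x] for x in elements}

    def find(self, u):
        """Return the set (list) storing u in O(1) time."""
        return self._set_of[u]

    def union(self, u, v):
        """Merge the sets storing u and v by moving the smaller into the larger."""
        a, b = self._set_of[u], self._set_of[v]
        if a is b:
            return a                    # already in the same set
        if len(a) < len(b):
            a, b = b, a                 # make a the larger set
        for x in b:
            a.append(x)                 # move the element
            self._set_of[x] = a         # update its back-reference
        return a


p = Partition("abcde")
p.union("a", "b")
p.union("c", "d")
p.union("a", "c")
print(p.find("d") is p.find("b"))  # True
print(sorted(p.find("a")))         # ['a', 'b', 'c', 'd']
print(p.find("e"))                 # ['e']
```

Sets are compared by identity (`is`) rather than by contents, which is what keeps the same-set test O(1).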

Partition-Based Implementation

A partition-based version of Kruskal’s Algorithm performs cloud merges as unions and tests as finds.

Algorithm Kruskal(G):
  Input: A weighted graph G.
  Output: An MST T for G.
  Let P be a partition of the vertices of G, where each vertex forms a separate set.
  Let Q be a priority queue storing the edges of G, sorted by their weights
  Let T be an initially-empty tree
  while Q is not empty do
    (u,v) ← Q.removeMinElement()
    if P.find(u) != P.find(v) then
      Add (u,v) to T
      P.union(u,v)
  return T

Running time: O((n+m) log n)
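As a rough illustration of the algorithm above, here is a minimal Python sketch. It assumes edges are given as `(weight, u, v)` tuples, uses `heapq` as the priority queue, and inlines a small list-based partition (smaller set moved into the larger) so the block is self-contained. The function name `kruskal_mst` and the example graph are hypothetical.

```python
import heapq


def kruskal_mst(vertices, edges):
    """Return the MST edges of a connected graph as a list of (weight, u, v).

    vertices: iterable of hashable vertices.
    edges: iterable of (weight, u, v) tuples (assumed input format).
    """
    set_of = {v: [v] for v in vertices}   # partition P: vertex -> its set
    heap = list(edges)                     # priority queue Q keyed by weight
    heapq.heapify(heap)
    tree = []
    while heap:
        w, u, v = heapq.heappop(heap)      # removeMinElement
        a, b = set_of[u], set_of[v]        # find(u), find(v)
        if a is not b:
            tree.append((w, u, v))
            if len(a) < len(b):            # union: move the smaller set
                a, b = b, a
            for x in b:
                a.append(x)
                set_of[x] = a
    return tree


edges = [(2, "A", "B"), (3, "A", "C"), (1, "B", "C"), (4, "B", "D"), (5, "C", "D")]
tree = kruskal_mst("ABCD", edges)
print(tree)                          # [(1, 'B', 'C'), (2, 'A', 'B'), (4, 'B', 'D')]
print(sum(w for w, _, _ in tree))    # 7
```

The loop could also stop as soon as the tree has n-1 edges; the sketch keeps the "while Q is not empty" condition to mirror the pseudocode.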

Borůvka’s Algorithm

Like Kruskal’s Algorithm, Borůvka’s algorithm grows many “clouds” at once.

Algorithm BoruvkaMST(G)
  T ← V {just the vertices of G}
  while T has fewer than n-1 edges do
    for each connected component C in T do
      Let edge e be the smallest-weight edge from C to another component in T.
      if e is not already in T then
        Add edge e to T
  return T

Each iteration of the while-loop at least halves the number of connected components in T.

The running time is O(m log n).
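One way to sketch the algorithm above in Python is shown below. It is a simplified illustration, not a tuned implementation: components are tracked by relabeling a dict, which costs O(n) per merge and so does not achieve the O(m log n) bound. Ties between equal weights are broken by comparing whole `(weight, u, v)` tuples, an assumption added here so that the edges chosen in one round cannot form a cycle. The function name `boruvka_mst` and the example graph are hypothetical.

```python
def boruvka_mst(vertices, edges):
    """Return the MST edges of a connected graph as a list of (weight, u, v).

    edges: list of (weight, u, v) tuples (assumed input format).
    """
    component = {v: v for v in vertices}   # vertex -> component label
    tree = []
    num_components = len(component)
    while num_components > 1:
        # For each component, find its cheapest edge to another component.
        cheapest = {}
        for edge in edges:
            _, u, v = edge
            cu, cv = component[u], component[v]
            if cu == cv:
                continue                   # edge lies inside one component
            for c in (cu, cv):
                if c not in cheapest or edge < cheapest[c]:
                    cheapest[c] = edge
        if not cheapest:
            break                          # graph is not connected
        # Add the selected edges, skipping duplicates chosen by both endpoints.
        for edge in sorted(set(cheapest.values())):
            _, u, v = edge
            cu, cv = component[u], component[v]
            if cu == cv:
                continue                   # safety check against cycles
            tree.append(edge)
            for x in component:            # relabel cv's vertices as cu
                if component[x] == cv:
                    component[x] = cu
            num_components -= 1
    return tree


edges = [(2, "A", "B"), (3, "A", "C"), (1, "B", "C"), (4, "B", "D"), (5, "C", "D")]
tree = boruvka_mst("ABCD", edges)
print(sorted(tree))                  # [(1, 'B', 'C'), (2, 'A', 'B'), (4, 'B', 'D')]
print(sum(w for w, _, _ in tree))    # 7
```

On this small graph a single round suffices, since every component's cheapest edge is already an MST edge.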

Borůvka Example

[Figure: step-by-step run of Borůvka’s algorithm on a flight network with vertices BOS, PVD, JFK, BWI, ORD, MIA, DFW, SFO, and LAX, with edge weights ranging from 144 to 2704]

Traveling Salesperson Problem

A tour of a graph is a spanning cycle (i.e., a cycle that goes through all the vertices)

A traveling salesperson tour of a weighted graph is a tour that is simple (i.e., no repeated vertices or edges) and has minimum weight

No polynomial-time algorithms are known for computing traveling salesperson tours

The traveling salesperson problem (TSP) is a major open problem in computer science

Find a polynomial-time algorithm computing a traveling salesperson tour or prove that none exists

[Figure: weighted graph on vertices A through F. Caption: Example of traveling salesperson tour (with weight 17)]
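To make the definition concrete, the following Python sketch finds a traveling salesperson tour by brute force: it tries every ordering of the vertices and keeps the cheapest spanning cycle. It takes time proportional to (n-1)!, so it only illustrates the problem and is not a practical algorithm. The function name `brute_force_tsp`, the `frozenset`-keyed weight table, and the four-vertex example are assumptions made for illustration; the sketch assumes at least three vertices.

```python
from itertools import permutations


def brute_force_tsp(weights):
    """Return (best_weight, tour), or (None, None) if no spanning cycle exists.

    weights: dict mapping frozenset({u, v}) to the weight of edge (u, v)
             (hypothetical representation of an undirected weighted graph).
    """
    vertices = sorted({v for edge in weights for v in edge})
    first, rest = vertices[0], vertices[1:]
    best_weight, best_tour = None, None
    for order in permutations(rest):
        tour = (first,) + order + (first,)   # fix the start to skip rotations
        total = 0
        for u, v in zip(tour, tour[1:]):
            w = weights.get(frozenset((u, v)))
            if w is None:
                break                         # missing edge: not a cycle in G
            total += w
        else:
            if best_weight is None or total < best_weight:
                best_weight, best_tour = total, tour
    return best_weight, best_tour


weights = {
    frozenset("AB"): 1, frozenset("BC"): 2, frozenset("CD"): 3,
    frozenset("DA"): 4, frozenset("AC"): 10, frozenset("BD"): 10,
}
best_weight, best_tour = brute_force_tsp(weights)
print(best_weight)  # 10
print(best_tour)    # ('A', 'B', 'C', 'D', 'A')
```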