Lecture 9. The multiple Classical Linear Regression model

Lecture 9. The multiple Classical Linear

Regression model

Often we want to estimate the relation between a dependent variable Y and say K independent variables X

1

, K , X

K

.

Even with K explanatory variables, the linear relation is not exact:

Y

= β

1

X

1

+ β

2

X

2

+ L + β

K

X

K

+ u with u the random error term that captures the effect of the omitted variables.

Note if the relation has an intercept, then

X

1

≡

1 and

β

1

is the intercept of the relation. i

If we have n observations Y i

=

1 , K , n , they satisfy

, X i 1

, K , X iK

,

Y i for i

= β

1

X i 1

+ β

2

X i 2

=

1 , K , n .

+ L + β

K

X iK

+ u i

Why do we want to include more than 1 explanatory variable?

1. Because we are interested on the relation between all K

−

1 variables and

Y

2. Because we want to estimate the direct effect of one variable and include the other variables for that purpose

To see 2. consider the relation ( K

=

3 )

Y

= β

1

X

1

+ β

2

X

2

+ β

3

X

3

+ u

Even if we are only interested in the effect of

X

2

on Y we cannot omit X

3

, if X

2

and X

3

are related. For instance, if

X

3

= γ

1

X

1

+ γ

2

X

2

+ v then after substitution we find

Y

=

(

β

1

+ β

3

γ

1

) X

1

+

(

β

2

+ β

3

γ

2

) X

2

+ u

+ β

3 v

In the relation between Y and X

1

, X

2

, the coefficient of X

2

is the sum of the direct effect

β

2

and the indirect effect

β

3

γ

2

of X

2

on Y .

If we include coefficient of

X

X

2

3

in the relation, the

is

β

2

, i.e. the direct effect of

X

2

on Y .

The assumptions are the same as before

Assumption 1: variables with u

E i

(

, u i i

=

)

1

=

, K ,

0 n are random

Assumption 2: X ik

, i

=

1 , K , n , k

=

1 , K , K are deterministic, i.e. non-random, constants.

Assumption 3 (Homoskedasticity) i

All u i

' s

=

1 , K ,

have the same variance, i.e. for n

Var ( u i

)

=

E ( u i

2

)

= σ 2

Assumption 4 (No serial correlation)

The random errors u i

and u correlated for all i

≠ j

=

1 , K , j

are not n

Cov ( u i

, u j

)

=

E ( u i u j

)

=

0

The inexact linear relation for i

=

1 , K , n

Y i

= β

1

X i 1

+ β

2

X i 2

+ L + β

K

X iK

+ u i with assumptions 1-4 is the multiple Classical

Linear Regression (CLR) model (remember with an intercept X i 1

=

1 , i

=

1 , K , n )

As in the simple CLR model the estimators of the regression parameters

β

1

, K ,

β

K

are found by minimizing the sum of squared residuals

S (

β

1

, K ,

β

K

)

= i n

∑

=

1

( Y i

− β

1

X i 1

− β

2

X i 2

− L −

The

β ˆ

1

, K ,

β ˆ

K that minimize the sum of

β

K squared residuals are Ordinary Least

Squares (OLS) estimators of

β

1

, K ,

β

K

.

X iK

)

2

The OLS residuals are e i

=

Y i

− β ˆ

1

X i 1

− β ˆ

2

X i 2

−

L

− β ˆ

K

X iK

The (unbiased) estimator of

σ

Note n

2

is s

2

−

K

= n

1

−

K i n

∑

=

1 e i

2

= no. of observations - no. of regression coefficients (including intercept)

The OLS residuals have the same properties as in the regression model with 1 regressor: for k

=

1 , K , K

(1) i n

∑

=

1

X ik e i

=

0

In words: The sample covariance of the OLS residuals and all regressors is 0.

For k

=

1 we have X i 1

=

1 and hence i n

∑

=

1 e i

=

0 .

Note: this holds if the regression model has an intercept.

Goodness of fit

Define the fitted value as before

Y

ˆ i

= β ˆ

1

X i 1

+

L

+ β ˆ

K

X iK

By definition

Y i

=

Y

ˆ i

+ e i

Because of (1) the sample covariance of the fitted values and the OLS residuals is 0. If the model has an intercept, then n

∑ i

=

1

( Y i

−

Y )

2 = i n

∑

=

1

( Y

ˆ i

−

Y )

2 + i n

∑

=

1 e i

2

Total Explained Unexplained

Variation Variation Variation

Total Sum Regression Error

of Squares Sum of Sum of

(TSS) Squares Squares

(RSS) (ESS)

The R

2

or Coefficient of Determination is defined as

R

2 =

RSS

=

1

−

ESS

TSS TSS

The R

2 increases if a regressor is added to the model. Why? Hint: Consider sum of squared residuals.

Adjusted (for degrees of freedom) R

2 decreases if added variable does not ‘explain’ much, i.e. if ESS does not decrease much,

R

2 =

1

−

ESS

TSS

/( n

/( n

−

−

K

1 )

)

If ESS with K

K

+

1

+

1

, ESS

K

are the ESS for the model

(one added) and K regressors, respectively, then ESS

K

+

1

<

ESS

K

, and the R

2 increases if X

K

+

1

is added, but R

2 decreases if

ESS

K

+

1

−

ESS

K

ESS

K > −

K n

−

+

1

K i.e. if the relative decrease is not big enough.

For instance for n

=

100 , K

=

10 , the relative decrease has to be at least 12.2% to lead to an improvement in R

2

. This cutoff value looks arbitrary (and it is!)

In Section 4.3 many more criteria that balance decrease in ESS and degrees of freedom: Forget about them.

Application: Demand for bus travel (Section

4.6 of Ramanathan)

Variables

BUSTRAVL = Demand for bus travel

(1000 of passenger hours)

FARE = Bus fare in $

GASPRICE = Price of gallon of gasoline

($)

INCOME = Average per capita income in

$

DENSITY = Population density

(persons/sq. mile)

LANDAREA = Area of city (sq. miles)

Data for 40 US cities in 1988

Question: Does demand for bus decrease if average income increases? Important for examining effect economic growth on bus system.

Date: 10/01/01 Time: 11:09

Sample: 1 40

BUSTRAVL DENSITY

Mean

Median

Maximum

Minimum

Std. Dev.

Skewness

Kurtosis

Jarque-Bera

Probability

Observations

1933.175

1589.550

13103.00

18.10000

2431.757

3.066575

13.22568

236.9669

0.000000

40

6789.100

5127.000

24288.00

1551.000

4559.464

1.701548

6.742500

42.64562

0.000000

40

FARE

0.882500

0.800000

1.500000

0.500000

0.279319

0.691675

2.732890

3.308340

0.191251

40

GASPRICE

0.914250

0.910000

1.030000

0.790000

0.051285

0.189827

3.379224

0.479913

0.786662

40

INCOME LANDAREA

16760.30

17116.00

21886.00

12349.00

2098.046

0.255876

3.491300

0.838778

0.657448

40

153.9775

99.55000

556.4000

18.90000

129.8950

1.284290

3.986898

12.61929

0.001819

40

Date: 10/01/01 Time: 11:09

Sample: 1 40

POP

Mean

Median

Maximum

Minimum

Std. Dev.

Skewness

Kurtosis

919.2600

555.8000

7323.300

167.0000

1243.917

3.870887

19.22195

Jarque-Bera

Probability

Observations

538.4778

0.000000

40

BUSTRAVL vs. INCOME

15000

10000

5000

0

12000 14000 16000 18000 20000 22000

INCOME

Dependent Variable: BUSTRAVL

Method: Least Squares

Date: 10/01/01 Time: 11:05

Sample: 1 40

Included observations: 40

Variable Coefficient Std. Error t-Statistic

C

INCOME

-2509.015

0.265042

Prob.

3091.188

-0.811667

0.4220

0.183042

1.447986

0.1558

R-squared

Adjusted R-squared

S.E. of regression

Sum squared resid

Log likelihood

Durbin-Watson stat

0.052290 Mean dependent var

0.027350 S.D. dependent var

2398.272 Akaike info criterion

2.19E+08 Schwarz criterion

-367.0318 F-statistic

1.832871 Prob(F-statistic)

1933.175

2431.757

18.45159

18.53604

2.096662

0.155822

Dependent Variable: BUSTRAVL

Method: Least Squares

Date: 10/01/01 Time: 11:07

Sample: 1 40

Included observations: 40

Variable Coefficient Std. Error t-Statistic

C

INCOME

FARE

GASPRICE

POP

DENSITY

LANDAREA

2744.680

-0.194744

-238.6544

522.1132

1.711442

0.116415

-1.155230

Prob.

2641.672

1.038994

0.3064

0.064887

-3.001294

0.0051

451.7281

-0.528314

0.6008

2658.228

0.196414

0.8455

0.231364

7.397176

0.0000

0.059570

1.954253

0.0592

1.802638

-0.640855

0.5260

R-squared

Adjusted R-squared

S.E. of regression

Sum squared resid

Log likelihood

Durbin-Watson stat

0.921026 Mean dependent var

0.906667 S.D. dependent var

742.9113 Akaike info criterion

18213267 Schwarz criterion

-317.3332 F-statistic

2.082671 Prob(F-statistic)

1933.175

2431.757

16.21666

16.51221

64.14338

0.000000

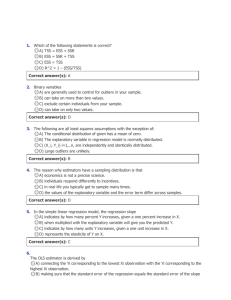

Reported are

•

Descriptive statistics

•

Scatterplot BUSTRVL and INCOME

•

OLS estimates in simple linear regression model

•

OLS estimates in multiple linear regression model

Note in simple model INCOME has positive effect (be it that coefficient is not significantly different from 0)

If we include the other variables the effect is negative: 1$ more average income per head gives about 195 fewer passenger hours.

Note that the fit improves dramatically from

R

2 =

.

05 to R

2 =

.

92 .

The sampling distribution of

β ˆ

1

, K ,

β ˆ

K

Under assumptions 1-4 the sampling distribution of

β ˆ

1

, K ,

β ˆ

K has mean

β

1

, K ,

β

K and a sampling variance that is proportional to

σ 2

. The square root of the estimated

(estimate

σ 2

by s

2

) variance of the individual regression coefficients are called the standard errors of the regression coefficients.

Regression programs always report both

β ˆ k and std (

β ˆ k

) , the standard error of

β ˆ k

.

The OLS estimator is also the Best Linear

Unbiased Estimator (BLUE) in the multiple

CLR model.

If we make the additional assumption

Assumption 5. The random error terms u i

, i

=

1 , K , n are random variables with a normal distribution.

We can derive the sampling distribution of

β ˆ

1

, K ,

β ˆ

K and s

2

.

The sampling distribution of

β ˆ

1

, multivariate normal with mean

K

β

1

,

,

β ˆ

K

K

,

β

K

.

It can be shown that for all k

=

1 , K , K

T k

=

β ˆ k

− std (

β

β ˆ k k

) has a t-distribution with n

−

K freedom.

degrees of

As in the simple CLR model we can use this to find a confidence interval for

β k

. This is done in the same way.

Hypothesis testing in the multiple CLR model

We consider two cases

1. Hypotheses involving 1 coefficient

2. Hypotheses involving 2 or more coefficients

1. Hypotheses involving 1 coefficient

For regression coefficient

β k

the hypothesis is

H

0

:

β k

= β k 0 with alternative hypothesis

H

1

:

β k

≠ or

β k 0

two-sided alternative

H

1

:

β k

> β k 0

one-sided alternative

In these hypotheses

β k 0

is a hypothesized value, e.g.

β k 0

=

0 if the null hypothesis is no effect of X k

on Y .

The test is based on the statistic

T k

=

β ˆ k

− std

β

(

β ˆ k k

)

0

If H

0

is not true, then T k

is likely either large negative or large positive. For two-sided alternative, H

1

:

β k

≠ β k 0

, we reject if T k

> c and for a one-sided alternative, H

1

:

β k

> β if T k

> d . k 0

,

For a test with a 5% confidence level, the cutoff values c , d are chosen such that

Pr(| T k

|

> c )

=

.

05 , i.e. Pr( T k

)

(two-sided)

> c )

=

.

025 or

Pr( T k

> d )

=

.

05

(one-sided) where T k

has a t-distribution with n

−

K d.f.

In example, n

=

40 , K

=

7 , c

≈

2 .

035 , d

≈

1 .

695

Note that the coefficients of INCOME, POP are significantly different from 0 at the 5% level. The coefficient of DENSITY is significantly different from 0 at the 10% level or if we test with a one-sided alternative.

2. Hypothesis involving 2 or more coefficient

As example consider the question whether the model with only INCOME as regressor is adequate.

In the more general model with INCOME,

FARE, GASPRICE, POP, DENSITY,

LANDAREA this corresponds to the hypothesis that the coefficients of FARE,

GASPRICE, POP, DENSITY, LANDAREA,

β

3

,

β

4

,

β

5

,

β

6

,

β

7

are all 0, i.e.

H

0

:

β

3

=

0 ,

β

4

=

0 ,

β

5

=

0 ,

β

6

=

0 ,

β

7

=

0 with alternative hypothesis

H

1

: One of these coefficien ts is not 0

How do we test this?

Idea: If H

0

is true then the model with only

INCOME should fit as well as the model with all regressors.

Measure of fit is sum of squared OLS residuals i n

∑

=

1 e i

2 .

Denote sum of squared residuals if H

0

is true by ESS

0

. This is ESS if only INCOME is included. The ESS if H

1

is true is denoted by

ESS

1

.

Note: ESS

0

≥

ESS

1

. Why?

We consider

F

=

ESS

0

−

ESS

1

5

ESS

1

40

−

5

Numerator: Difference of restricted ESS

( ESS

0

) and unrestricted ESS ( ESS

1

) divided by the number of restrictions.

Denominator: Estimator of

σ 2 in the model with all variables.

We reject if F is large, i.e. F

> c .

If H

0

is true then F has an F-distribution with degrees of freedom 5 (numerator) and 35

(denominator).

From Table in back cover Ramanathan, we find that e.g.

Pr( F

> c )

=

.

05 if c

≈

2 .

49 .

In example:

ESS

0

=

218564926 .

27

ESS

1

=

18213267.5

9

ESS

0

−

ESS

1

=

200351658 .

68

F

=

40070331 .

736

551917 .

20

=

72 .

60

Conclusion: We reject H

0

at the 5% level

(and at the 1% level; check this) and the model with only INCOME is not adequate.

In general, we consider

F

=

ESS

0

−

ESS

1 m

ESS

1 n

−

K with m the number of restrictions and we reject H

0

if F is (too) large. This is the F test or Wald test (Wald test is really F test if n is large)

Special case: Test of overall significance

H

0

:

β

2

= L = β

K

=

0 i.e. all regression coefficients except intercept are 0. Hence, m

=

K

−

1

In example, the F statistic for this hypothesis is 64.14 with under H

0

, m

=

6 and n

−

K

=

33 d.f. Hence, we reject H

0

and we conclude that the regressors are needed to explain

BUSTRAVL.