Understanding Data

There are three basic concepts necessary to understand data:

advertisement

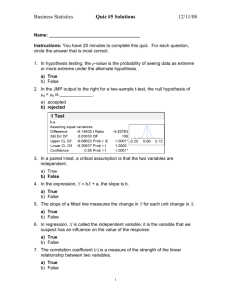

I. Measures of Central Tendency
II. Measures of Dispersion
III. Measures of Association

I. Measures of Central Tendency

A measure of central tendency defines a value about which the data cluster.

A. Mean (arithmetic average)
The sum of the observations divided by the number of observations.

B. Median
The middle value. Rank the data from lowest to highest and pick out the one in the middle (half the observations lie above it, half below).

C. Mode
The most frequently occurring value. Count the occurrences of each value in the range of your data; the value with the greatest number of occurrences is the mode. If two or more values tie for the greatest number of occurrences, the data are multi-modal.

NOTES
The mean, median and mode need not be the same. For symmetric distributions the three are close; for a perfectly symmetric, single-peaked distribution they are equal. The mean is influenced by outlying observations, while the median is not. If some values are far removed from the rest of the observations, the median may be a better measure of central tendency than the mean.

E.g., yearling sales data:

| Mean Yearling Sale Price ($) | Median Yearling Sale Price ($) |
|---|---|
| 27,295 | 9,162 |

What does this difference mean?

Stud fees of weanlings cataloged in Keeneland’s November Sale (grouped data):

| Stud fee interval ($) | Number in interval (fi) | Midpoint (Xi) | fiXi |
|---|---|---|---|
| 100,000-200,000 | 8 | 150,000 | 1,200,000 |
| 50,000-100,000 | 39 | 75,000 | 2,925,000 |
| 20,000-50,000 | 228 | 35,000 | 7,980,000 |
| 0-20,000 | 750 | 10,000 | 7,500,000 |
| Total | Σfi = 1,025 | | ΣfiXi = 19,605,000 |

Mean = ΣfiXi / Σfi = 19,605,000 / 1,025 ≈ 19,126.8

II. Measures of Dispersion

How much difference (variation) exists in the data. The greater the variation in the data, the smarter you need to be in interpreting it.

A. Range
The difference between the maximum and minimum values. It is a useful index relative to the mean, but it does not account for how the observations are distributed between the minimum and maximum values.

B. Variance
The sum of squared differences from the mean, divided by N - 1. It measures the variation in the data, but because it is in squared units it is not directly comparable to the mean.

$$s^2 = \frac{\sum_{i=1}^{N} (X_i - \bar{X})^2}{N - 1}$$

C. Standard Deviation
The square root of the variance. It is in the same units as the data, so it is directly comparable to the mean.

$$s = \sqrt{s^2} = \sqrt{\frac{\sum_{i=1}^{N} (X_i - \bar{X})^2}{N - 1}}$$

Rule of thumb: "If the SD is greater than the mean, you're dealing with a lot of variation in the data."

D. Standard Error of the Mean
The standard deviation of the sampling distribution of the mean, estimated as s / √N. If the s.e.m. is small, we expect the sample mean to be closer to the true mean of the population.

III. Measures of Association

A. Scatter plots
A graph illustrating the relationship between two variables.

B. Covariance
A measure of how two variables vary relative to each other.
- Indicates a negative or positive relationship.
- Has no relative (i.e., comparative) merit: its size depends on the units of the two variables, so covariances cannot be compared across pairs of variables.
- Gives no indication of statistical significance.

C. Correlation coefficient
Measures the STRENGTH of the (linear) association between two variables.
- Indicates a negative or positive relationship through its sign.
- Ranges between -1 and +1: 0 = no association; -1 or +1 = perfect association.
- A measure of statistical significance can be calculated.

Note: statistical significance is important because it provides a measure of confidence in the analysis and recommendations. Statistical significance is affected by the variance in the data: the greater the variance, the harder it is to achieve target levels of significance.

Rules of thumb for levels of statistical significance:
- 0.10 (10%) is the minimum for significance
- 0.05 (5%) is the desired level of significance
- 0.01 (1%) is a strong level of significance

D. Regression coefficient
Measures the association between one variable and a group of variables.

Properties of a Normal Distribution

The normal distribution is necessary to understand:
- hypothesis testing
- level of confidence
- level of significance
- sample vs. population characteristics

The normal distribution approximates many aspects of society and nature. It is often described as the "bell-shaped" curve, and it is symmetric: each half is a mirror image of the other. What does this tell you about the mean, median and mode?
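The measures in Sections I to III can be computed with Python's standard `statistics` module. This is a minimal sketch: the `prices` list is made up for illustration (it is not data from these notes), while the grouped-mean inputs are the Keeneland stud-fee counts and midpoints from the table above. `covariance` and `correlation` are hypothetical helper names, written out by hand so the N - 1 formulas stay visible.

```python
import math
import statistics

# Made-up sale prices in $ (illustration only, not data from these notes).
prices = [5_000, 8_000, 9_000, 9_000, 12_000, 15_000, 40_000, 250_000]

# I. Central tendency
mean_price = statistics.mean(prices)       # pulled upward by the 250,000 outlier
median_price = statistics.median(prices)   # average of the two middle values when N is even
modes = statistics.multimode(prices)       # returns every tied value, so multi-modal data show up

# Grouped mean for the Keeneland stud-fee table: sum(fi * Xi) / sum(fi)
counts = [8, 39, 228, 750]                      # fi
midpoints = [150_000, 75_000, 35_000, 10_000]   # Xi
grouped_mean = sum(f * x for f, x in zip(counts, midpoints)) / sum(counts)

# II. Dispersion
price_range = max(prices) - min(prices)
variance = statistics.variance(prices)      # squared deviations from the mean / (N - 1)
std_dev = statistics.stdev(prices)          # square root of the variance
sem = std_dev / math.sqrt(len(prices))      # standard error of the mean


# III. Association (hypothetical helpers; sample versions with an N - 1 denominator)
def covariance(xs, ys):
    """Sample covariance: the sign shows direction, the size depends on the units."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def correlation(xs, ys):
    """Pearson correlation: covariance rescaled to lie between -1 and +1."""
    return covariance(xs, ys) / (statistics.stdev(xs) * statistics.stdev(ys))


print(f"mean={mean_price:,.0f} median={median_price:,.0f} mode={modes}")
print(f"grouped mean stud fee={grouped_mean:,.1f}")
print(f"range={price_range:,} sd={std_dev:,.0f} sem={sem:,.0f}")
print(correlation([1, 2, 3, 4], [2, 4, 6, 8]), correlation([1, 2, 3, 4], [8, 6, 4, 2]))
```

The made-up prices mimic the yearling-sales pattern: one very expensive horse pushes the mean (43,500) far above the median (10,500), and the two perfectly linear pairs give correlations of about +1 and -1.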
Confidence Interval

A confidence interval is an interval estimate: a range of values within which the "true value" lies, with an associated level of confidence.

Confidence interval calculation: mean ± (confidence factor × standard error of the mean)

| Confidence level | Confidence factor |
|---|---|
| 68% | 1.0 |
| 90% | 1.64 |
| 95% | 1.96 |

Confidence intervals differ from the calculation that describes the dispersion of the observations (i.e., the data) around the mean of a normal distribution. Assuming a normal distribution:
- the range of values that includes about 68% of the observations is mean ± 1.0 × standard deviation
- the range of values that includes about 95% of the observations is mean ± 2.0 × standard deviation

Example Graph

The standard deviation is a statistic that tells you how tightly the various examples are clustered around the mean in a set of data. When the examples are tightly bunched together and the bell-shaped curve is steep, the standard deviation is small. When the examples are spread apart and the bell curve is relatively flat, you have a relatively large standard deviation.

Computing a standard deviation by hand is tedious, but let me show you graphically what a standard deviation represents. One standard deviation away from the mean in either direction on the horizontal axis (the red area on the above graph) accounts for around 68 percent of the people in this group. Two standard deviations away from the mean (the red and green areas) account for roughly 95 percent of the people. And three standard deviations (the red, green and blue areas) account for about 99.7 percent of the people.

If this curve were flatter and more spread out, the standard deviation would have to be larger to account for that same 68 percent or so of the people. That's why the standard deviation tells you how spread out the examples in a set are from the mean.
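A minimal sketch of the confidence-interval calculation and the 68/95/99.7 coverage figures, assuming normally distributed data and using `statistics.NormalDist` from Python's standard library (3.8+). `confidence_factor`, `confidence_interval` and `share_within` are hypothetical helper names, and the mean of 100 with a standard error of 5 in the usage lines are made-up numbers.

```python
from statistics import NormalDist

# Hypothetical helpers; all of them assume a normal distribution.
STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def confidence_factor(level):
    """Two-sided z value, e.g. about 1.645 for 90% and 1.96 for 95%."""
    return STANDARD_NORMAL.inv_cdf(0.5 + level / 2)


def confidence_interval(mean, sem, level=0.95):
    """Interval estimate: mean +/- (confidence factor * standard error of the mean)."""
    z = confidence_factor(level)
    return mean - z * sem, mean + z * sem


def share_within(k):
    """Share of a normal population lying within k standard deviations of the mean."""
    return STANDARD_NORMAL.cdf(k) - STANDARD_NORMAL.cdf(-k)


low, high = confidence_interval(mean=100.0, sem=5.0, level=0.95)  # made-up inputs
print(f"95% CI: {low:.1f} to {high:.1f}")
for k in (1, 2, 3):
    print(f"within {k} SD: {share_within(k):.1%}")
```

This prints an interval of about 90.2 to 109.8 and coverage of about 68.3%, 95.4% and 99.7%. The exact factor for a 68% interval is about 0.994, which the notes round to 1.0.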
ANOVA

Analysis of Variance (ANOVA) is in some ways a misleading name for a collection of statistical tests that compare means. Its basic purpose is to compare a mean to a value, or means across groups, for example:
- Is the mean sale price > $75,000?
- Is the mean sale price of fillies < that of colts?

One method would be to calculate the means and compare the values. The problem is that this does not account for the variation in sale price within the groups. ANOVA tests whether the variability between the group means is large relative to the variability within the groups.

Assumptions needed for ANOVA:
- Each group is independent (one group doesn't dictate the outcome of the other group).
- The data in each group come from a normal distribution.
- The groups have equal variances.

One-way ANOVA: only one variable is used to classify the data into groups, e.g. the variable "open": Open = 1, Bred = 0.

Regression Analysis

Regression is a method of analysis that applies when research deals with a dependent variable (the variable of interest) and one or more independent variables (factors affecting the dependent variable). The purposes of regression are to quantify how the dependent variable is related to the independent variables, and to predict the dependent variable using knowledge or estimates of the independent variables.

Regression analysis is a measure of association between a dependent variable and one or more independent variables.
- Dependent variable = endogenous variable = the value estimated by the regression model.
- Independent variable = exogenous variable = a value determined outside the system that influences the system by affecting the value of the endogenous variable.

Regression analysis uses data on the dependent and independent variables to estimate an equation. In regression, the dependent variable is a linear function of the independent variables.
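The one-way ANOVA comparison described above (for example, sale prices of open versus bred mares) can be sketched as a ratio of between-group to within-group variance. This is a minimal, hand-written illustration using only Python's standard library; `one_way_anova` is a hypothetical helper and the two price lists are made-up numbers, not data from these notes. It returns the F statistic and its degrees of freedom; turning F into a p-value needs an F table or a library routine such as `scipy.stats.f_oneway`.

```python
import statistics


def one_way_anova(*groups):
    """Hypothetical helper: return (F, df_between, df_within) for a one-way ANOVA."""
    all_obs = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_obs)
    k, n = len(groups), len(all_obs)

    # Between-group variation: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group variation: spread of the observations around their own group mean.
    ss_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up sale prices in $1,000s, split by the notes' "open" variable (1 = open, 0 = bred).
open_mares = [10, 12, 14]
bred_mares = [20, 22, 24]
f_stat, df_b, df_w = one_way_anova(open_mares, bred_mares)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

Here the group means (12 and 22) are far apart relative to the spread inside each group, so F is large (37.5); two identical groups would give F = 0.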
$$Y = \text{Intercept} + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_n X_n + \varepsilon$$

where
- Y = dependent variable
- Intercept = the intercept term of the line (a constant), calculated by the computer
- βi = estimated regression coefficient (calculated by the computer)
- Xi = independent variable (the variable and its values are specified by the modeler using the data)
- i = index 1, 2, ..., n
- n = number of independent variables in the model
- ε = error term

Interpreting Regression Results

1. βi, or Slope Coefficients
βi measures the change in the dependent variable for a given (one-unit) change in an independent variable, holding the other independent variables constant. Note: the change in the independent variable should be within the range of the data.

The sign (+/-) on the β coefficient indicates a positive or negative relationship between the independent and dependent variables. It is important that this sign corresponds to "theoretical" rules; if it doesn't, there are problems with the results.

Standard Error of β: a measure of dispersion of the estimated coefficient, which is in turn used in calculating the t statistic.

The t value is the calculated t statistic used to test whether a coefficient is different from zero. It is calculated by dividing an estimated slope coefficient by its standard error, t = βi / SEi, and is used to test the null hypothesis H0: βi = 0 against Ha: βi > 0 or Ha: βi < 0 (one-sided), or Ha: βi ≠ 0 (two-sided; this is the test behind the Sig. column in SPSS output). It tells you whether your results are statistically significant.

Standardized regression coefficient, labeled Beta in the SPSS output: a dimensionless coefficient that lets you determine the relative importance of the independent variables in your model. The greater the absolute value of Beta, the greater the effect that variable has on the dependent variable.

R² measures the proportion of the variation in Y (the dependent variable) that is explained by the regression equation. R² is often informally used as a goodness-of-fit statistic and to compare the validity of regression results under alternative specifications of the independent variables in the model.
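To make βi, its standard error, the t statistic and R² concrete, here is a minimal sketch for the special case of a single independent variable (n = 1), where ordinary least squares has closed-form formulas. It uses only Python's standard library; `simple_regression` is a hypothetical helper and the five (X, Y) pairs are made up. With several independent variables the same quantities come from the matrix form of least squares, which statistical software such as SPSS computes for you.

```python
import math
import statistics


def simple_regression(xs, ys):
    """Hypothetical helper: OLS fit of Y = Intercept + b1*X + e with one predictor."""
    n = len(xs)
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))

    b1 = sxy / sxx                     # slope: change in Y per one-unit change in X
    intercept = y_bar - b1 * x_bar
    residuals = [y - (intercept + b1 * x) for x, y in zip(xs, ys)]

    sse = sum(e ** 2 for e in residuals)        # unexplained variation
    sst = sum((y - y_bar) ** 2 for y in ys)     # total variation in Y
    se_b1 = math.sqrt(sse / (n - 2) / sxx)      # standard error of the slope
    return {
        "intercept": intercept,
        "b1": b1,
        "se_b1": se_b1,
        "t": b1 / se_b1,                        # tests H0: b1 = 0
        "r_squared": 1 - sse / sst,             # share of the variation in Y explained
    }


fit = simple_regression([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])  # made-up data
for name, value in fit.items():
    print(f"{name}: {value:.4f}")
```

For this toy data the slope is 0.6 with a standard error of about 0.283, so t is about 2.12, and R² is 0.6.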
R² is a proportion, so it varies from 0 to 100 percent, or from 0 to 1, depending on how it's reported. Adding independent variables will never lower R² and may increase it.

The adjusted R² takes into account the number of independent variables in the model, so it can fall when a variable that adds little explanatory power is added.

The R² of time-series data is usually higher than that of cross-section data.

The F statistic printed out can be used to test the significance of the R² statistic, i.e., whether the model as a whole is statistically significant. The F statistic tests the joint null hypothesis β1 = β2 = ... = βn = 0.

Durbin-Watson tests for correlation in the error terms (autocorrelation between successive errors, typically in time-series data). If the error terms are correlated, you can improve the regression by taking this information into account.

Multicollinearity is correlation between independent variables. The problem with correlated variables is that they provide similar information, which makes it hard to separate the effects of the individual variables.

Elasticity

A common analysis procedure for economic and business studies is to calculate and examine elasticities, which are readily available from regression model results. An elasticity measures the percentage change in the dependent variable associated with a 1 percent change in an independent variable. Elasticity at the mean is calculated as:

$$E_i = \beta_i \times \frac{\bar{X}_i}{\bar{Y}}$$

where X̄i is the average of Xi and Ȳ is the average of Y.

There are important economic implications if the elasticity is:
- Elastic, |Ei| > 1.0: the dependent variable is sensitive to changes in Xi
- Inelastic, |Ei| < 1.0: the dependent variable is relatively insensitive to changes in Xi

Dummy Variables

Sometimes called indicator variables. Many variables of interest in business, economics, and the social sciences are not quantitative (continuous) but qualitative (discrete). Qualitative variables can be modeled by regression, but they must be represented as dummy variables. Dummy variables are defined to take on a value of 0 or 1.
Examples: race, sex, income class, location.

It is no problem to have dummy variables as independent variables, but "advanced" statistical methods are required for models with a dummy variable as the dependent variable.

A qualitative variable with c categories is represented by c - 1 dummy variables, each taking on the values 0 and 1. For example, for the four regions North, South, East and West:

| Category | X1 | X2 | X3 |
|---|---|---|---|
| East | 1 | 0 | 0 |
| West | 0 | 1 | 0 |
| North | 0 | 0 | 1 |
| South | 0 | 0 | 0 |

In this case, if you used 4 dummy variables, the procedure used to solve the regression problem would fail: the four dummies always sum to 1, so together they exactly duplicate the intercept (perfect multicollinearity).

The coefficients on the dummy variables measure the differential impact between the indicated category (receiving a value of 1) and the category whose dummy has been dropped from the regression, in this case South.

If the result of our regression analyzing differences by region were:

Y = 250,000 + 50,000 X1 + 80,000 X2 - 6,000 X3

you would make the following interpretation of the regional differences:
- South: Y = 250,000
- East: Y = 250,000 + 50,000(1) = 300,000
- West: Y = 250,000 + 80,000(1) = 330,000
- North: Y = 250,000 - 6,000(1) = 244,000

Regression

Descriptive Statistics

| Variable | Description | Mean | Std. Deviation | N |
|---|---|---|---|---|
| SALE_PRC | Sale price in $ | 74349.099 | … | 1664 |
| STUD_FEE | Stud fee of in utero foal in $ | 17466.65 | … | 1664 |
| AGE | Age in years | 8.4730 | … | 1664 |
| BTYPPROD | Black type producer = 1 | .1526 | … | 1664 |
| GDSTPROD | Graded stakes producer = 1 | .07151 | … | 1664 |
| USRACEE | Race earnings in US $ | 65741.88 | … | 1664 |
| HIP_NUMB | Hip number of mare | 1807.0144 | … | 1664 |

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .697(a) | .486 | .484 | 105497.158 |

a. Predictors: (Constant), HIP_NUMB, AGE, USRACEE, GDSTPROD, STUD_FEE, BTYPPROD

ANOVA(b)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 1.74E+13 | 6 | 2.902E+12 | 260.769 | .000(a) |
| Residual | 1.84E+13 | 1657 | 1.113E+10 | | |
| Total | 3.59E+13 | 1663 | | | |

a. Predictors: (Constant), HIP_NUMB, AGE, USRACEE, GDSTPROD, STUD_FEE, BTYPPROD
b. Dependent Variable: SALE_PRC

Coefficients(a)

| Model 1 | B | Std. Error | Beta | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | 74963.604 | 8541.390 | | 8.777 | .000 |
| STUD_FEE | 3.174 | .118 | .547 | 26.879 | .000 |
| AGE | -6125.122 | 778.121 | -.177 | -7.872 | .000 |
| BTYPPROD | 18015.341 | 10646.326 | .044 | 1.692 | .091 |
| GDSTPROD | 39840.094 | 13501.066 | .070 | 2.951 | .003 |
| USRACEE | .217 | .021 | .195 | 10.571 | .000 |
| HIP_NUMB | -13.312 | 2.816 | -.099 | -4.727 | .000 |

B and Std. Error are the unstandardized coefficients; Beta is the standardized coefficient.
a. Dependent Variable: SALE_PRC
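Several relationships described in these notes can be checked against the SPSS output above. This minimal sketch copies the reported numbers into Python and recomputes t = B / Std. Error, F = Mean Square (Regression) / Mean Square (Residual), the adjusted R², and the stud-fee elasticity at the means. It also encodes the regional dummy-variable equation from the Dummy Variables example, with South as the omitted category. The helper names are illustrative only. Small differences from the printed t values (e.g. for STUD_FEE and USRACEE) are expected because B and its standard error are shown rounded.

```python
# Numbers copied from the SPSS output above (N = 1664 mares, K = 6 predictors).
N, K = 1664, 6
R_SQUARE = 0.486
MS_REGRESSION, MS_RESIDUAL = 2.902e12, 1.113e10

# name: (unstandardized B, Std. Error)
COEFFICIENTS = {
    "(Constant)": (74963.604, 8541.390),
    "STUD_FEE": (3.174, 0.118),
    "AGE": (-6125.122, 778.121),
    "BTYPPROD": (18015.341, 10646.326),
    "GDSTPROD": (39840.094, 13501.066),
    "USRACEE": (0.217, 0.021),
    "HIP_NUMB": (-13.312, 2.816),
}
MEANS = {"SALE_PRC": 74349.099, "STUD_FEE": 17466.65, "AGE": 8.4730, "USRACEE": 65741.88}

# t statistic for each coefficient: B divided by its standard error.
t_stats = {name: b / se for name, (b, se) in COEFFICIENTS.items()}

# F statistic for the joint test that all slope coefficients are zero.
f_stat = MS_REGRESSION / MS_RESIDUAL

# Adjusted R-squared penalizes R-squared for the number of predictors K.
adj_r_squared = 1 - (1 - R_SQUARE) * (N - 1) / (N - K - 1)


def elasticity_at_mean(name):
    """Illustrative helper: E_i = B_i * mean(X_i) / mean(Y), at the sample means."""
    b, _ = COEFFICIENTS[name]
    return b * MEANS[name] / MEANS["SALE_PRC"]


# Regional dummy example: X1 = East, X2 = West, X3 = North; South is the omitted base.
DUMMY_ORDER = ["East", "West", "North"]


def region_dummies(region):
    """Illustrative helper: c - 1 = 3 dummies for 4 regions; South is all zeros."""
    return [1 if region == r else 0 for r in DUMMY_ORDER]


def predict_region(region):
    x1, x2, x3 = region_dummies(region)
    return 250_000 + 50_000 * x1 + 80_000 * x2 - 6_000 * x3


print({name: round(t, 3) for name, t in t_stats.items()})
print(f"F = {f_stat:.1f}, adjusted R^2 = {adj_r_squared:.3f}")
print(f"stud-fee elasticity at the means = {elasticity_at_mean('STUD_FEE'):.3f}")
print({r: predict_region(r) for r in ["South", "East", "West", "North"]})
```

The stud-fee elasticity comes out at about 0.75, which is inelastic: at the means, a 1 percent higher stud fee is associated with roughly a 0.75 percent higher sale price.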