Econometrics I Fall 2010 Seyed Mahdi Barakchian

advertisement

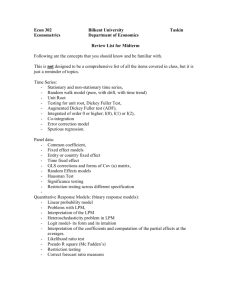

Sharif University of Technology
Graduate School of Management and Economics

Textbook: Wooldridge, J., Introductory Econometrics: A Modern Approach, South‐Western College Pub (W., hereafter)

Syllabus

1. Introduction

2. Probability Theory: A Review (W. Appendix B)

3. Statistical Theory: A Review (W. Appendix C)

4. The Simple Regression Model (W. Chapter 2)
4.1. Definition of the Simple Regression Model
4.2. Deriving the Ordinary Least Squares Estimates
4.3. Mechanics of OLS
4.3.1. Fitted Values and Residuals
4.3.2. Algebraic Properties of OLS Statistics
4.3.3. Goodness‐of‐Fit
4.4. Units of Measurement and Functional Form
4.4.1. The Effects of Changing Units of Measurement on OLS Statistics
4.4.2. Incorporating Nonlinearities in Simple Regression
4.4.3. The Meaning of “Linear” Regression
4.5. Expected Values and Variances of the OLS Estimators
4.5.1. Unbiasedness of OLS
4.5.2. Variances of the OLS Estimators
4.5.3. Estimating the Error Variance
4.6. Regression Through the Origin

5. Multiple Regression Analysis: Estimation (W. Chapter 3)
5.1. Motivation for Multiple Regression
5.1.1. The Model with Two Independent Variables
5.1.2. The Model with k Independent Variables
5.2. Mechanics and Interpretation of Ordinary Least Squares
5.2.1. Obtaining the OLS Estimates
5.2.2. Interpreting the OLS Regression Equation
5.2.3. On the Meaning of “Holding Other Factors Fixed” in Multiple Regression
5.2.4. Changing More than One Independent Variable Simultaneously
5.2.5. OLS Fitted Values and Residuals
5.2.6. A “Partialling Out” Interpretation of Multiple Regression
5.2.7. Comparison of Simple and Multiple Regression Estimates
5.2.8. Goodness‐of‐Fit
5.2.9. Regression Through the Origin
5.3. The Expected Value of the OLS Estimators
5.3.1. Including Irrelevant Variables in a Regression Model
5.3.2. Omitted Variable Bias: The Simple Case
5.3.3. Omitted Variable Bias: More General Cases
5.4. The Variance of the OLS Estimators
5.4.1. The Components of the OLS Variances: Multicollinearity
5.4.2. Variances in Misspecified Models
5.4.3. Estimating σ²: Standard Errors of the OLS Estimators
5.5. Efficiency of OLS: The Gauss‐Markov Theorem

6. Multiple Regression Analysis: Inference (W. Chapter 4)
6.1. Sampling Distributions of the OLS Estimators
6.2. Testing Hypotheses About a Single Population Parameter: The t Test
6.2.1. Testing Against One‐Sided Alternatives
6.2.2. Two‐Sided Alternatives
6.2.3. Testing Other Hypotheses About β_j
6.2.4. Computing p‐values for t Tests
6.2.5. Economic, or Practical, versus Statistical Significance
6.3. Confidence Intervals
6.4. Testing Hypotheses About a Single Linear Combination of the Parameters
6.5. Testing Multiple Linear Restrictions: The F Test
6.5.1. Testing Exclusion Restrictions
6.5.2. Relationship Between F and t Statistics
6.5.3. The R‐Squared Form of the F Statistic
6.5.4. Computing p‐values for F Tests
6.5.5. The F Statistic for Overall Significance of a Regression
6.5.6. Testing General Linear Restrictions
6.6. Reporting Regression Results

7. Multiple Regression Analysis: OLS Asymptotics (W. Chapter 5)
7.1. Consistency
7.1.1. Deriving the Inconsistency in OLS
7.2. Asymptotic Normality and Large Sample Inference
7.2.1. Other Large Sample Tests: The Lagrange Multiplier Statistic
7.3. Asymptotic Efficiency of OLS

8. Multiple Regression Analysis: Further Issues (W. Chapter 6)
8.1. Effects of Data Scaling on OLS Statistics
8.1.1. Beta Coefficients
8.2. More on Functional Form
8.2.1. More on Using Logarithmic Functional Forms
8.2.2. Models with Quadratics
8.2.3. Models with Interaction Terms
8.3. More on Goodness‐of‐Fit and Selection of Regressors
8.3.1. Adjusted R‐Squared
8.3.2. Using Adjusted R‐Squared to Choose Between Nonnested Models
8.3.3. Controlling for Too Many Factors in Regression Analysis
8.3.4. Adding Regressors to Reduce the Error Variance
8.4. Prediction and Residual Analysis
8.4.1. Confidence Intervals for Predictions
8.4.2. Residual Analysis
8.4.3. Predicting y When log(y) Is the Dependent Variable

9. Multiple Regression Analysis with Qualitative Information: Binary (or Dummy) Variables (W. Chapter 7)
9.1. Describing Qualitative Information
9.2. A Single Dummy Independent Variable
9.2.1. Interpreting Coefficients on Dummy Explanatory Variables When the Dependent Variable Is log(y)
9.3. Using Dummy Variables for Multiple Categories
9.3.1. Incorporating Ordinal Information by Using Dummy Variables
9.4. Interactions Involving Dummy Variables
9.4.1. Interactions Among Dummy Variables
9.4.2. Allowing for Different Slopes
9.4.3. Testing for Differences in Regression Functions Across Groups
9.5. A Binary Dependent Variable: A Linear Probability Model

10. Heteroskedasticity (W. Chapter 8)
10.1. Consequences of Heteroskedasticity for OLS
10.2. Heteroskedasticity‐Robust Inference After OLS Estimation
10.2.1. Computing Heteroskedasticity‐Robust LM Tests
10.3. Testing for Heteroskedasticity
10.3.1. The Breusch‐Pagan Test for Heteroskedasticity
10.3.2. The White Test for Heteroskedasticity
10.4. Weighted Least Squares
10.4.1. The Heteroskedasticity Is Known up to a Multiplicative Constant
10.4.2. The Heteroskedasticity Function Must Be Estimated: Feasible GLS
10.5. The Linear Probability Model Revisited

11. More on Specification and Data Problems (W. Chapter 9)
11.1. Functional Form Misspecification
11.1.1. RESET as a General Test for Functional Form Misspecification
11.1.2. Tests Against Nonnested Alternatives
11.2. Using Proxy Variables for Unobserved Explanatory Variables
11.2.1. Using Lagged Dependent Variables as Proxy Variables
11.3. Properties of OLS under Measurement Error
11.3.1. Measurement Error in the Dependent Variable
11.3.2. Measurement Error in an Explanatory Variable
11.4. Missing Data, Nonrandom Samples, and Outlying Observations
11.4.1. Missing Data
11.4.2. Nonrandom Samples
11.4.3. Outlying Observations

12. Instrumental Variables Estimation and Two Stage Least Squares (W. Chapter 15)
12.1. Omitted Variables in a Simple Regression Model
12.1.1. Statistical Inference with the IV Estimator
12.1.2. Properties of IV with a Poor Instrumental Variable
12.1.3. Computing R‐Squared After IV Estimation
12.2. IV Estimation of the Multiple Regression Model
12.3. Two Stage Least Squares
12.3.1. A Single Endogenous Explanatory Variable
12.3.2. Multicollinearity and 2SLS
12.3.3. Multiple Endogenous Explanatory Variables
12.3.4. Testing Multiple Hypotheses After 2SLS Estimation
12.4. IV Solutions to Errors‐in‐Variables Problems
12.5. Testing for Endogeneity and Testing for Overidentifying Restrictions
12.5.1. Testing for Endogeneity
12.5.2. Testing Overidentification Restrictions
12.6. 2SLS with Heteroskedasticity
12.7. Applying 2SLS to Time Series Equations

13. Basic Regression Analysis with Time Series Data (W. Chapter 10)
13.1. The Nature of Time Series Data
13.2. Examples of Time Series Regression Models
13.2.1. Static Models
13.2.2. Finite Distributed Lag Models
13.3. Finite Sample Properties of OLS under Classical Assumptions
13.3.1. Unbiasedness of OLS
13.3.2. The Variances of the OLS Estimators and the Gauss‐Markov Theorem
13.3.3. Inference Under the Classical Linear Model Assumptions
13.4. Functional Forms, Dummy Variables, and Index Numbers
13.5. Trends and Seasonality
13.5.1. Characterizing Trending Time Series
13.5.2. Using Trending Variables in Regression Analysis
13.5.3. A Detrending Interpretation of Regressions with a Time Trend
13.5.4. Computing R‐Squared When the Dependent Variable Is Trending
13.5.5. Seasonality

14. Serial Correlation and Heteroskedasticity in Time Series Regressions (W. Chapter 12)
14.1. Properties of OLS with Serially Correlated Errors
14.1.1. Unbiasedness and Consistency
14.1.2. Efficiency and Inference
14.1.3. Serial Correlation in the Presence of Lagged Dependent Variables
14.2. Testing for Serial Correlation
14.2.1. A t Test for AR(1) Serial Correlation with Strictly Exogenous Regressors
14.2.2. The Durbin‐Watson Test Under Classical Assumptions
14.2.3. Testing for AR(1) Serial Correlation without Strictly Exogenous Regressors
14.2.4. Testing for Higher Order Serial Correlation
14.3. Correcting for Serial Correlation with Strictly Exogenous Regressors
14.3.1. Obtaining the Best Linear Unbiased Estimator in the AR(1) Model
14.3.2. Feasible GLS Estimation with AR(1) Errors
14.3.3. Comparing OLS and FGLS
14.3.4. Correcting for Higher Order Serial Correlation
14.4. Differencing and Serial Correlation
14.5. Serial Correlation‐Robust Inference after OLS
14.6. Heteroskedasticity in Time Series Regressions
14.6.1. Heteroskedasticity‐Robust Statistics
14.6.2. Testing for Heteroskedasticity
14.6.3. Autoregressive Conditional Heteroskedasticity
14.6.4. Heteroskedasticity and Serial Correlation in Regression Models