Day 3 Slides - Grinnell College


Shonda Kuiper

Grinnell College

Statistical techniques taught in introductory statistics courses typically have one response variable and one explanatory variable.

The response variable measures the outcome of a study.

Explanatory variables explain changes in the response variable.

Explanatory Variable → Response Variable

Each variable can be classified as either categorical or quantitative.

Categorical data place individuals into one of several groups (such as red/blue/white, male/female, or yes/no).

Quantitative data consists of numerical values for which most arithmetic operations make sense.

Response Variable (rows) by Explanatory Variable (columns):

Response \ Explanatory | Categorical                          | Quantitative
Categorical            | Chi-Square test, Two proportion test | Logistic Regression
Quantitative           | Two-sample t-test, ANOVA             | Regression

Statistical models have the following form:

observed value = mean response + random error

Generic group: $\hat{\mu}_1 = \bar{Y}_1 = (70+82+90+78)/4 = 80$

Brand name group: $\hat{\mu}_2 = \bar{Y}_2 = (75+85+95+85)/4 = 85$

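$$Y_{ij} = \bar{Y}_i + \varepsilon_{ij}, \qquad i = 1, 2; \quad j = 1, 2, 3, 4$$

$$
\begin{aligned}
Y_{11} &= 70 = 80 + (-10) & \qquad Y_{21} &= 75 = 85 + (-10) \\
Y_{12} &= 82 = 80 + 2 & \qquad Y_{22} &= 85 = 85 + 0 \\
Y_{13} &= 90 = 80 + 10 & \qquad Y_{23} &= 95 = 85 + 10 \\
Y_{14} &= 78 = 80 + (-2) & \qquad Y_{24} &= 85 = 85 + 0
\end{aligned}
$$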
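The decomposition above (observed value = group mean + error) can be reproduced in a few lines. This is a minimal Python sketch using only the battery data from these slides; the function name `decompose` is a hypothetical helper, not part of any library.

```python
# Battery lifetimes from the slides: group 1 = generic, group 2 = brand name
generic = [70, 82, 90, 78]
brand = [75, 85, 95, 85]


def decompose(group):
    """Split each observation into its group mean plus an error term."""
    mean = sum(group) / len(group)
    errors = [y - mean for y in group]
    return mean, errors


for label, group in [("generic", generic), ("brand name", brand)]:
    mean, errors = decompose(group)
    for y, e in zip(group, errors):
        # Each line reads: observed value = mean response + error
        print(f"{label}: {y} = {mean:g} + ({e:g})")
```

Within each group the errors sum to zero, a property of using the sample mean as the estimate of the group mean.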

[Figure: group means $\bar{Y}_1 = 80$ (estimating $\mu_1$) and $\bar{Y}_2 = 85$ (estimating $\mu_2$).]

Null Hypothesis: the two groups of batteries last the same amount of time

𝐻0 : μ1 = μ2

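Using the sample mean, the estimated error for the third generic battery is $\hat{\varepsilon}_{1,3} = 90 - \bar{Y}_1 = 90 - 80 = 10$. The true random error, $\varepsilon_{1,3} = 90 - \mu_1$, is unknown (= ?) because the population mean $\mu_1$ is unknown.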

The theoretical model used in the two-sample t-test is designed to account for these two group means (µ1 and µ2) and random error.

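observed value = mean response + random error

$$Y_{ij} = \bar{Y}_i + \varepsilon_{ij} \quad \text{(estimated from the sample)}$$

$$Y_{ij} = \mu_i + \varepsilon_{ij} \quad \text{(theoretical model)}$$

where i = 1, 2 and j = 1, 2, 3, 4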

Null Hypothesis: 𝐻0 : μ1 = μ2

Alternative Hypothesis: 𝐻1 : μ2 ≠ μ1
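The test statistic for these hypotheses can be computed directly from the battery data. This Python sketch assumes the pooled (equal-variance) version of the two-sample t-test; `pooled_t` is a hypothetical helper. It stops at the t statistic: a p-value would come from a t distribution with n1 + n2 - 2 degrees of freedom (for example via `scipy.stats.t`).

```python
import math

generic = [70, 82, 90, 78]
brand = [75, 85, 95, 85]


def pooled_t(y1, y2):
    """Pooled two-sample t statistic for H0: mu1 = mu2, with its degrees of freedom."""
    n1, n2 = len(y1), len(y2)
    m1, m2 = sum(y1) / n1, sum(y2) / n2
    ss1 = sum((y - m1) ** 2 for y in y1)  # squared errors around group 1 mean
    ss2 = sum((y - m2) ** 2 for y in y2)  # squared errors around group 2 mean
    df = n1 + n2 - 2
    sp2 = (ss1 + ss2) / df                # pooled variance estimate
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m2 - m1) / se, df


t, df = pooled_t(generic, brand)
print(f"t = {t:.4f} on {df} df")
```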

ANOVA: Instead of using two group means, we break the mean response into a grand mean, μ, and two group effects, α1 and α2.

$\hat{\mu} = \bar{Y} = (70 + 82 + 90 + 78 + 75 + 85 + 95 + 85)/8 = 82.5$

$\hat{\alpha}_1 = \hat{\mu}_1 - \hat{\mu} = 80 - 82.5 = -2.5$

$\hat{\alpha}_2 = \hat{\mu}_2 - \hat{\mu} = 85 - 82.5 = 2.5$

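$$Y_{i,j} = \{\bar{Y} + \alpha_i\} + \varepsilon_{i,j}, \qquad i = 1, 2; \quad j = 1, 2, 3, 4$$

$$
\begin{aligned}
70 &= 82.5 + (-2.5) + (-10) & \qquad 75 &= 82.5 + 2.5 + (-10) \\
82 &= 82.5 + (-2.5) + 2 & \qquad 85 &= 82.5 + 2.5 + 0 \\
90 &= 82.5 + (-2.5) + 10 & \qquad 95 &= 82.5 + 2.5 + 10 \\
78 &= 82.5 + (-2.5) + (-2) & \qquad 85 &= 82.5 + 2.5 + 0
\end{aligned}
$$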

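[Figure: the grand mean $\bar{Y} = 82.5$ with group means $\bar{Y}_1 = 80$ and $\bar{Y}_2 = 85$; the group effects are $\alpha_1 = \mu_1 - \mu = -2.5$ and $\alpha_2 = 2.5$.]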

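observed value = mean response + random error

$$Y_{i,j} = \mu_i + \varepsilon_{i,j} = \{\mu + \alpha_i\} + \varepsilon_{i,j}, \qquad i = 1, 2; \quad j = 1, 2, 3, 4$$

$$H_0: \mu_1 = \mu_2 \iff H_0: \mu + \alpha_1 = \mu + \alpha_2 \iff H_0: \alpha_1 = \alpha_2$$

Null Hypothesis: $H_0: \alpha_1 = \alpha_2$

Alternative Hypothesis: $H_1: \alpha_1 \neq \alpha_2$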
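A Python sketch of the ANOVA decomposition for the battery data: it estimates μ and the group effects, then forms the F statistic from the between-group and within-group sums of squares. Variable names are illustrative. With two groups, F equals the square of the pooled two-sample t statistic, here 25/34 ≈ 0.735.

```python
generic = [70, 82, 90, 78]
brand = [75, 85, 95, 85]
groups = [generic, brand]

all_obs = [y for g in groups for y in g]
grand_mean = sum(all_obs) / len(all_obs)                  # estimate of mu
effects = [sum(g) / len(g) - grand_mean for g in groups]  # estimates of alpha_i

# Between-group SS: how far the group means sit from the grand mean
ss_between = sum(len(g) * a ** 2 for g, a in zip(groups, effects))
# Within-group SS: errors around each fitted value mu + alpha_i
ss_within = sum((y - (grand_mean + a)) ** 2
                for g, a in zip(groups, effects) for y in g)

df_between = len(groups) - 1            # 1
df_within = len(all_obs) - len(groups)  # 6
F = (ss_between / df_between) / (ss_within / df_within)
print(f"mu = {grand_mean}, alphas = {effects}, F = {F:.4f}")
```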

Regression: Instead of using two group means, we create a model for a straight line (using β0 and β1).

observed value = mean response + random error

$$Y_{ij} = \mu_i + \varepsilon_{ij}, \qquad i = 1, 2; \quad j = 1, 2, 3, 4$$

$$Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i, \qquad i = 1, 2, \ldots, 8, \text{ where } X_i \text{ is either 0 or 1}$$

When $X_i = 0$: $\beta_0 + \beta_1 \cdot 0 = \beta_0 = \mu_1$

When $X_i = 1$: $\beta_0 + \beta_1 \cdot 1 = \beta_0 + \beta_1 = \mu_2$

$$H_0: \mu_2 - \mu_1 = 0 \iff H_0: (\beta_0 + \beta_1) - \beta_0 = 0 \iff H_0: \beta_1 = 0$$

$\hat{Y}_i = 80 + 5X_i$


$\hat{\beta}_0 = \hat{\mu}_1 = 80$

$\hat{\beta}_1 = \hat{\mu}_2 - \hat{\mu}_1 = 85 - 80 = 5$

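$$Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i, \qquad i = 1, 2, \ldots, 8$$

$$
\begin{aligned}
70 &= 80 + 0 + (-10) & \qquad 75 &= 80 + 5 + (-10) \\
82 &= 80 + 0 + 2 & \qquad 85 &= 80 + 5 + 0 \\
90 &= 80 + 0 + 10 & \qquad 95 &= 80 + 5 + 10 \\
78 &= 80 + 0 + (-2) & \qquad 85 &= 80 + 5 + 0
\end{aligned}
$$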


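The equation for the line is often written as:

$$\hat{Y}_i = \hat{\beta}_0 + \hat{\beta}_1 X_i, \qquad i = 1, 2, \ldots, 8$$

giving fitted values $\hat{Y}_i = 80 + 0 = 80$ for the four generic batteries ($X_i = 0$) and $\hat{Y}_i = 80 + 5 = 85$ for the four brand name batteries ($X_i = 1$).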
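Fitting this line by least squares, with the indicator X coded 0 for generic and 1 for brand name, recovers the estimates 80 and 5. A minimal Python sketch using the closed-form simple-regression formulas (variable names are illustrative):

```python
generic = [70, 82, 90, 78]
brand = [75, 85, 95, 85]
y = generic + brand
x = [0] * len(generic) + [1] * len(brand)  # X_i = 0 for generic, 1 for brand name

n = len(y)
xbar, ybar = sum(x) / n, sum(y) / n
sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
sxx = sum((xi - xbar) ** 2 for xi in x)
b1 = sxy / sxx           # slope: difference between the two group means
b0 = ybar - b1 * xbar    # intercept: mean of the X = 0 group
fitted = [b0 + b1 * xi for xi in x]
print(b0, b1, fitted)
```

Because X takes only the values 0 and 1, the fitted line simply passes through the two group means.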

When there are only two groups (and we make the same assumptions), all three models are algebraically equivalent: each tests whether the two group means differ.

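Two-sample t-test: $Y_{ij} = \mu_i + \varepsilon_{ij}$, where i = 1, 2 and j = 1, 2, 3, 4; $H_0: \mu_1 = \mu_2$

ANOVA: $Y_{i,j} = \{\mu + \alpha_i\} + \varepsilon_{i,j}$, where i = 1, 2 and j = 1, 2, 3, 4; $H_0: \alpha_1 = \alpha_2$

Regression: $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$, where i = 1, 2, …, 8; $H_0: \beta_1 = 0$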
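The equivalence can be checked numerically. This Python sketch (variable names are illustrative; it assumes the pooled, equal-variance t-test) computes the t statistic for β1 in the regression, the pooled two-sample t statistic, and the ANOVA F statistic on the battery data. The two t statistics agree, and F equals their square.

```python
import math

generic = [70, 82, 90, 78]
brand = [75, 85, 95, 85]
n1, n2 = len(generic), len(brand)
y = generic + brand
x = [0] * n1 + [1] * n2  # X_i = 0 generic, 1 brand name
n = n1 + n2

# Regression: t statistic for H0: beta1 = 0
xbar, ybar = sum(x) / n, sum(y) / n
sxx = sum((xi - xbar) ** 2 for xi in x)
b1 = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
b0 = ybar - b1 * xbar
sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
mse = sse / (n - 2)
t_regression = b1 / math.sqrt(mse / sxx)

# Two-sample t-test (pooled variance): t statistic for H0: mu1 = mu2
m1, m2 = sum(generic) / n1, sum(brand) / n2
ss_pooled = sum((v - m1) ** 2 for v in generic) + sum((v - m2) ** 2 for v in brand)
sp2 = ss_pooled / (n1 + n2 - 2)
t_two_sample = (m2 - m1) / math.sqrt(sp2 * (1 / n1 + 1 / n2))

# One-way ANOVA: F statistic for H0: alpha1 = alpha2 (1 numerator df)
ss_between = n1 * (m1 - ybar) ** 2 + n2 * (m2 - ybar) ** 2
F = (ss_between / 1) / (ss_pooled / (n - 2))

print(t_regression, t_two_sample, F, t_two_sample ** 2)
```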


• Multiple regression analysis can be used to serve different goals. The goals will influence the type of analysis that is conducted. The most common goals of multiple regression are to:

• Describe: A model may be developed to describe the relationship between multiple explanatory variables and the response variable.

• Predict: A regression model may be used to generalize to observations outside the sample.

• Confirm: Theories are often developed about which variables or combination of variables should be included in a model. Hypothesis tests can be used to evaluate the relationship between the explanatory variables and the response.

• Build a multiple regression model to predict the retail price of cars

• Price = 35738 - 0.22 Mileage

R-Sq: 4.1%

• Slope coefficient (b1): t = -2.95 (p-value = 0.004)

Questions:

What happens to Price as Mileage increases?


Since b1 = -0.22 is small, can we conclude it is unimportant?


Does mileage help you predict price? What does the p-value tell you?


Does mileage help you predict price? What does the R-Sq value tell you?


Are there outliers or influential observations?
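Whether b1 = -0.22 is "small" depends on the units of Mileage: the slope is the change in predicted Price (dollars) per mile. A short Python sketch of the fitted line from the slides makes the scale concrete; the helper name `predicted_price` is hypothetical. This speaks only to the size of the effect; the p-value and R-Sq answer different questions.

```python
def predicted_price(mileage):
    """Fitted line from the slides: Price = 35738 - 0.22 * Mileage."""
    return 35738 - 0.22 * mileage


for miles in (0, 10_000, 50_000):
    print(f"{miles:>6} miles -> predicted price ${predicted_price(miles):,.0f}")

# The slope is per mile, so each additional 10,000 miles lowers the prediction by about $2,200
drop = predicted_price(10_000) - predicted_price(20_000)
print(f"drop per 10,000 additional miles: ${drop:,.0f}")
```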


What happens when all the points fall on the regression line?

What happens when the regression line does not help us estimate Y?
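These two questions are usually answered with the standard definition R2 = 1 - SSE/SST (the formula itself is not reproduced in this text). In this Python sketch, with made-up data and hypothetical helper names, points lying exactly on a line give R2 = 1, and a pattern that a straight line cannot capture gives R2 = 0.

```python
def r_squared_from_fit(y, fitted):
    """R^2 = 1 - SSE/SST: share of the variation of y around its mean captured by the fit."""
    ybar = sum(y) / len(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    sst = sum((yi - ybar) ** 2 for yi in y)
    return 1 - sse / sst


def fit_line(x, y):
    """Least-squares intercept and slope for a simple linear regression."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxy = sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
    sxx = sum((a - xbar) ** 2 for a in x)
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1


x = [1, 2, 3, 4, 5]
on_line = [3, 5, 7, 9, 11]  # every point lies on y = 1 + 2x
no_help = [1, 4, 5, 4, 1]   # curved pattern: the best straight line is flat

for label, y in [("on the line", on_line), ("line does not help", no_help)]:
    b0, b1 = fit_line(x, y)
    fitted = [b0 + b1 * xi for xi in x]
    print(label, b0, b1, r_squared_from_fit(y, fitted))
```

In the second case the fitted line is just the horizontal line at the mean of Y, so SSE equals SST.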


• R2adj (adjusted R2) includes a penalty when more terms are included in the model.

• n is the sample size and p is the number of coefficients, including the constant term (β0, β1, β2, β3, …, βp-1).

• When many terms are in the model: p is larger, so (n - 1)/(n - p) is larger, so R2adj is smaller (for a given R2).
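The adjusted R2 formula is not reproduced in this text; the standard definition, which contains the factor (n - 1)/(n - p) mentioned above, is R2adj = 1 - [(n - 1)/(n - p)](1 - R2). A Python sketch with hypothetical values R2 = 0.5 and n = 11, showing the penalty grow with p:

```python
def adjusted_r2(r2, n, p):
    """Adjusted R^2 = 1 - (n - 1)/(n - p) * (1 - R^2).

    n is the sample size; p is the number of coefficients, including beta0.
    """
    return 1 - (n - 1) / (n - p) * (1 - r2)


# Hypothetical values: hold R^2 = 0.5 and n = 11 fixed while p grows
for p in (2, 4, 6):
    print(p, round((11 - 1) / (11 - p), 3), round(adjusted_r2(0.5, 11, p), 4))
```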



• Build a multiple regression model to predict the retail price of cars

[Figure: Scatterplot of Price vs Mileage, R2 = 2%.]

[Figure: Scatterplot of Price vs Mileage, with the candidate explanatory variables listed: Mileage, Cylinder, Liter, Leather, Cruise, Doors, Sound.]


Price = 6759 + 6289Cruise + 3792Cyl - 1543Doors + 3349Leather - 787Liter - 0.17Mileage - 1994Sound

R2 = 44.6%

Step Forward Regression (Forward Selection):

Which single explanatory variable best predicts Price?

Price = 13921.9 + 9862.3Cruise

R2 = 18.56%


Price = -17.06 + 4054.2Cyl

R2 = 32.39%


Price = 24764.6 – 0.17Mileage

R2 = 2.04%


Price = 6185.8.6 + 4990.4Liter

R2 = 31.15%


Price = 23130.1 – 2631.4Sound

R2 = 1.55%

Price = 18828.8 + 3473.46Leather

R2 = 2.47%

Price = 27033.6 -1613.2Doors

R2 = 1.93%

Step Forward Regression:

Which combination of two terms best predicts Price? (Values in parentheses are adjusted R2.)

Price = - 17.06 + 4054.2Cyl

R2 = 32.39%

Price = -1046.4 + 3392.6Cyl + 6000.4Cruise

R2 = 38.4% (38.2%)


Price = 3145.8 + 4027.6Cyl – 0.152Mileage

R2 = 34% (33.8%)


Price = 1372.4 + 2976.4Cyl + 1412.2Liter

R2 = 32.6% (32.4%)

Step Forward Regression:

Which combination of terms best predicts Price?

Price = -17.06 + 4054.2Cyl

R2 = 32.39%

Price = -1046.4 + 3393Cyl + 6000.4Cruise

R2 = 38.4% (38.2%)

Price = -2978.4 + 3276Cyl + 6362Cruise + 3139Leather

R2 = 40.4% (40.2%)

Price = 412.6 + 3233Cyl + 6492Cruise + 3162Leather - 0.17Mileage

R2 = 42.3% (42%)

Price = 5530.3 + 3258Cyl + 6320Cruise + 2979Leather - 0.17Mileage - 1402Doors

R2 = 43.7% (43.3%)

Price = 7323.2 + 3200Cyl + 6206Cruise + 3327Leather - 0.17Mileage - 1463Doors - 2024Sound

R2 = 44.6% (44.15%)

Price = 6759 + 3792Cyl + 6289Cruise + 3349Leather - 787Liter - 0.17Mileage - 1543Doors - 1994Sound

R2 = 44.6% (44.14%)
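The forward steps above can be sketched as a greedy loop: at each step add the candidate that gives the largest R2, and stop when adjusted R2 no longer increases. That stopping rule is an assumption for illustration (statistical packages often use a p-value threshold instead); reading the values in parentheses as adjusted R2, it would stop the sequence above before Liter, since adjusted R2 falls from 44.15% to 44.14%. The data are made up and all helper names are hypothetical; the sketch uses plain Python so it has no dependencies.

```python
# Hypothetical toy data (not the car data): y depends on x1 and x2; x3 is noise
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [0, 1, 0, 1, 0, 1, 0, 1]
x3 = [3, 1, 4, 1, 5, 9, 2, 6]
noise = [0.1, -0.2, 0.0, 0.1, -0.1, 0.2, -0.1, 0.0]
y = [2 * a + b + e for a, b, e in zip(x1, x2, noise)]
data = {"x1": x1, "x2": x2, "x3": x3}


def r_squared(columns, y):
    """R^2 of an OLS fit of y on an intercept plus the given columns.

    Solves the normal equations (X'X)b = X'y by Gauss-Jordan elimination
    with partial pivoting; assumes the columns are not collinear.
    """
    n = len(y)
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(rows[0])
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(k)]
    for c in range(k):
        pivot = max(range(c, k), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        for r in range(k):
            if r != c:
                factor = aug[r][c] / aug[c][c]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[c])]
    coef = [aug[i][k] / aug[i][i] for i in range(k)]
    ybar = sum(y) / n
    sse = sum((yi - sum(b * v for b, v in zip(coef, r))) ** 2 for r, yi in zip(rows, y))
    sst = sum((yi - ybar) ** 2 for yi in y)
    return 1 - sse / sst


def adjusted_r2(r2, n, p):
    """p counts all coefficients, including the intercept."""
    return 1 - (n - 1) / (n - p) * (1 - r2)


def forward_selection(data, y):
    """Add the term giving the largest R^2 at each step; stop once adjusted R^2 stops rising."""
    n = len(y)
    selected, remaining, best_adj = [], list(data), float("-inf")
    while remaining:
        scores = {v: r_squared([data[u] for u in selected + [v]], y) for v in remaining}
        best = max(scores, key=scores.get)
        adj = adjusted_r2(scores[best], n, len(selected) + 2)  # +1 new term, +1 intercept
        if adj <= best_adj:
            break
        selected.append(best)
        remaining.remove(best)
        best_adj = adj
    return selected


print(forward_selection(data, y))
```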


Step Backward Regression (Backward Elimination):

Price = 6759 + 3792Cyl + 6289Cruise + 3349Leather - 787Liter - 0.17Mileage - 1543Doors - 1994Sound

R2 = 44.6% (44.14%)

Price = 7323.2 + 3200Cyl + 6206Cruise + 3327Leather - 0.17Mileage - 1463Doors - 2024Sound

R2 = 44.6% (44.15%)
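Backward elimination runs the same idea in reverse: start with every candidate and remove terms one at a time. In this sketch the rule is to drop the term whose removal gives the highest adjusted R2, as long as adjusted R2 does not decrease; under that assumed rule (software often uses p-values instead), dropping Liter above, from 44.14% to 44.15% when the parentheses are read as adjusted R2, would be accepted. The data are made up and all helper names are hypothetical.

```python
# Hypothetical toy data (not the car data): y depends on x1 and x2; x3 is noise
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [0, 1, 0, 1, 0, 1, 0, 1]
x3 = [3, 1, 4, 1, 5, 9, 2, 6]
noise = [0.1, -0.2, 0.0, 0.1, -0.1, 0.2, -0.1, 0.0]
y = [2 * a + b + e for a, b, e in zip(x1, x2, noise)]
data = {"x1": x1, "x2": x2, "x3": x3}


def r_squared(columns, y):
    """R^2 of an OLS fit of y on an intercept plus the given columns.

    Solves the normal equations (X'X)b = X'y by Gauss-Jordan elimination
    with partial pivoting; assumes the columns are not collinear.
    """
    n = len(y)
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(rows[0])
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(k)]
    for c in range(k):
        pivot = max(range(c, k), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        for r in range(k):
            if r != c:
                factor = aug[r][c] / aug[c][c]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[c])]
    coef = [aug[i][k] / aug[i][i] for i in range(k)]
    ybar = sum(y) / n
    sse = sum((yi - sum(b * v for b, v in zip(coef, r))) ** 2 for r, yi in zip(rows, y))
    sst = sum((yi - ybar) ** 2 for yi in y)
    return 1 - sse / sst


def adjusted_r2(r2, n, p):
    """p counts all coefficients, including the intercept."""
    return 1 - (n - 1) / (n - p) * (1 - r2)


def backward_elimination(data, y):
    """Drop terms one at a time while doing so does not lower adjusted R^2."""
    n = len(y)
    kept = list(data)
    current = adjusted_r2(r_squared([data[v] for v in kept], y), n, len(kept) + 1)
    while len(kept) > 1:
        trials = {}
        for v in kept:
            rest = [u for u in kept if u != v]
            trials[v] = adjusted_r2(r_squared([data[u] for u in rest], y), n, len(rest) + 1)
        drop = max(trials, key=trials.get)
        if trials[drop] < current:
            break  # every removal would lower adjusted R^2
        kept.remove(drop)
        current = trials[drop]
    return kept


print(backward_elimination(data, y))
```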

Bidirectional stepwise procedures

Other criteria, such as the Akaike information criterion (AIC), the Bayesian information criterion (BIC), and Mallows' Cp, are often used to find the best model.
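For least-squares models, these criteria are commonly computed from the error sum of squares. The Python sketch below uses the usual Gaussian-likelihood forms, AIC = n ln(SSE/n) + 2p and BIC = n ln(SSE/n) + p ln(n), up to additive constants that differ between software packages (so only differences between models fitted to the same data are meaningful), and Mallows' Cp = SSE_p/MSE_full - (n - 2p). The numbers are hypothetical. Lower AIC or BIC is preferred; for Cp, small values close to p are preferred.

```python
import math


def aic(sse, n, p):
    """AIC for a least-squares fit with p coefficients, up to an additive constant."""
    return n * math.log(sse / n) + 2 * p


def bic(sse, n, p):
    """BIC: same fit term as AIC, but each coefficient costs ln(n) instead of 2."""
    return n * math.log(sse / n) + p * math.log(n)


def mallows_cp(sse_p, mse_full, n, p):
    """Mallows' Cp for a submodel with p coefficients; mse_full comes from the largest model."""
    return sse_p / mse_full - (n - 2 * p)


# Hypothetical numbers: n = 50 observations, full model with 8 coefficients
n, p_full, sse_full = 50, 8, 400.0
mse_full = sse_full / (n - p_full)
sse_sub, p_sub = 430.0, 5  # a smaller candidate model

for label, sse, p in [("full", sse_full, p_full), ("subset", sse_sub, p_sub)]:
    print(label, round(aic(sse, n, p), 2), round(bic(sse, n, p), 2),
          round(mallows_cp(sse, mse_full, n, p), 2))
```

By construction, the full model always has Cp equal to its own number of coefficients.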

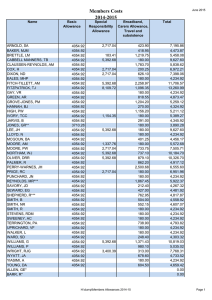

Best Subsets Regression: Here we see that Liter is the second-best single predictor of Price.
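Best subsets fits every combination of the candidate terms and reports the best few models of each size, which is how such output can show the second-best single predictor. A brute-force Python sketch on made-up data, keeping the top two subsets per size (helper names are hypothetical). It fits 2^k - 1 models for k candidates, so it is only practical for a modest number of terms.

```python
from itertools import combinations

# Hypothetical toy data (not the car data): y depends on x1 and x2; x3 is noise
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [0, 1, 0, 1, 0, 1, 0, 1]
x3 = [3, 1, 4, 1, 5, 9, 2, 6]
noise = [0.1, -0.2, 0.0, 0.1, -0.1, 0.2, -0.1, 0.0]
y = [2 * a + b + e for a, b, e in zip(x1, x2, noise)]
data = {"x1": x1, "x2": x2, "x3": x3}


def r_squared(columns, y):
    """R^2 of an OLS fit of y on an intercept plus the given columns.

    Solves the normal equations (X'X)b = X'y by Gauss-Jordan elimination
    with partial pivoting; assumes the columns are not collinear.
    """
    n = len(y)
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(rows[0])
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(k)]
    for c in range(k):
        pivot = max(range(c, k), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        for r in range(k):
            if r != c:
                factor = aug[r][c] / aug[c][c]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[c])]
    coef = [aug[i][k] / aug[i][i] for i in range(k)]
    ybar = sum(y) / n
    sse = sum((yi - sum(b * v for b, v in zip(coef, r))) ** 2 for r, yi in zip(rows, y))
    sst = sum((yi - ybar) ** 2 for yi in y)
    return 1 - sse / sst


def best_subsets(data, y, keep=2):
    """For each model size k, return the `keep` subsets of k terms with the highest R^2."""
    names = list(data)
    table = {}
    for k in range(1, len(names) + 1):
        scored = sorted(((r_squared([data[v] for v in combo], y), combo)
                         for combo in combinations(names, k)), reverse=True)
        table[k] = [(combo, round(r2, 4)) for r2, combo in scored[:keep]]
    return table


for k, models in best_subsets(data, y).items():
    print(k, models)
```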

Important Cautions:

• Stepwise regression techniques can often ignore very important explanatory variables. Best subsets is often preferable.

• Both best subsets and stepwise regression methods only consider linear relationships between the response and explanatory variables.

• Residual plots are still essential for checking whether the model is appropriate.

• Transformations, interactions, and quadratic terms can often improve the model.

• Whenever these iterative variable selection techniques are used, the p-values for the significance of individual coefficients are not reliable.