
Part VII

Advanced Architectures

Mar. 2007

Computer Architecture, Advanced Architectures

Slide 1

About This Presentation

This presentation is intended to support the use of the textbook

Computer Architecture: From Microprocessors to Supercomputers,

Oxford University Press, 2005, ISBN 0-19-515455-X. It is updated

regularly by the author as part of his teaching of the upper-division

course ECE 154, Introduction to Computer Architecture, at the

University of California, Santa Barbara. Instructors can use these

slides freely in classroom teaching and for other educational

purposes. Any other use is strictly prohibited. © Behrooz Parhami

Edition: First

Released: July 2003

Revised: July 2004, July 2005, Mar. 2007

VII Advanced Architectures

Performance enhancement beyond what we have seen:

• What else can we do at the instruction execution level?

• Data parallelism: vector and array processing

• Control parallelism: parallel and distributed processing

Topics in This Part

Chapter 25 Road to Higher Performance

Chapter 26 Vector and Array Processing

Chapter 27 Shared-Memory Multiprocessing

Chapter 28 Distributed Multicomputing


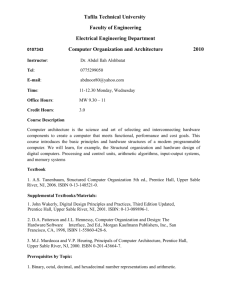

25 Road to Higher Performance

Review past, current, and future architectural trends:

• General-purpose and special-purpose acceleration

• Introduction to data and control parallelism

Topics in This Chapter

25.1 Past and Current Performance Trends

25.2 Performance-Driven ISA Extensions

25.3 Instruction-Level Parallelism

25.4 Speculation and Value Prediction

25.5 Special-Purpose Hardware Accelerators

25.6 Vector, Array, and Parallel Processing


25.1 Past and Current Performance Trends

Intel 4004: The first µP (1971), 0.06 MIPS (4-bit processor)

Intel Pentium 4, circa 2005: 10,000 MIPS (32-bit processor)

Intel processors between the two, by word width:

8-bit: 8008, 8080, 8085

16-bit: 8086, 8088, 80186, 80188, 80286

32-bit: 80386, 80486, Pentium, MMX, Pentium Pro, II, Pentium III, M, Celeron

Architectural Innovations for Improved Performance

| Architectural method | Improvement factor |
|---|---|
| 1. Pipelining (and superpipelining) | 3-8 √ |
| 2. Cache memory, 2-3 levels | 2-5 √ |
| 3. RISC and related ideas | 2-3 √ |
| 4. Multiple instruction issue (superscalar) | 2-3 √ |
| 5. ISA extensions (e.g., for multimedia) | 1-3 √ |
| 6. Multithreading (super-, hyper-) | 2-5 ? |
| 7. Speculation and value prediction | 2-3 ? |
| 8. Hardware acceleration | 2-10 ? |
| 9. Vector and array processing | 2-10 ? |
| 10. Parallel/distributed computing | 2-1000s ? |

Side labels on the slide: established methods vs. newer methods; previously discussed vs. covered in Part VII.

Available computing power ca. 2000:

GFLOPS on desktop

TFLOPS in supercomputer center

PFLOPS on drawing board

Computer performance grew by a factor of about 10000 between 1980 and 2000:

100 due to faster technology

100 due to better architecture

Peak Performance of Supercomputers

[Figure: peak performance (GFLOPS to PFLOPS, log scale) versus year, 1980 to 2010, growing by about × 10 every 5 years. Machines plotted: Cray X-MP, Cray 2, TMC CM-2, TMC CM-5, Cray T3D, ASCI Red, ASCI White Pacific, Earth Simulator.]

Dongarra, J., “Trends in High Performance Computing,” Computer J., Vol. 47, No. 4, pp. 399-403, 2004. [Dong04]

Energy Consumption is Getting out of Hand

[Figure: performance (kIPS to TIPS, log scale) versus calendar year, 1980 to 2010, with curves for absolute processor performance, GP processor performance per watt, and DSP performance per watt.]

Figure 25.1 Trend in energy consumption for each MIPS of computational power in general-purpose processors and DSPs.

25.2 Performance-Driven ISA Extensions

Adding instructions that do more work per cycle

Shift-add: replace two instructions with one (e.g., multiply by 5)

Multiply-add: replace two instructions with one (x := c + a × b)

Multiply-accumulate: reduce round-off error (s := s + a × b)

Conditional copy: to avoid some branches (e.g., in if-then-else)

Subword parallelism (for multimedia applications)

Intel MMX: multimedia extension

64-bit registers can hold multiple integer operands

Intel SSE: Streaming SIMD extension

128-bit registers can hold several floating-point operands

Table 25.1 Intel MMX ISA extension

| Class | Instruction | Vector | Op type | Function or results |
|---|---|---|---|---|
| Copy | Register copy | | 32 bits | Integer register ↔ MMX register |
| | Parallel pack | 4, 2 | Saturate | Convert to narrower elements |
| | Parallel unpack low | 8, 4, 2 | | Merge lower halves of 2 vectors |
| | Parallel unpack high | 8, 4, 2 | | Merge upper halves of 2 vectors |
| Arithmetic | Parallel add | 8, 4, 2 | Wrap/Saturate# | Add; inhibit carry at boundaries |
| | Parallel subtract | 8, 4, 2 | Wrap/Saturate# | Subtract with carry inhibition |
| | Parallel multiply low | 4 | | Multiply, keep the 4 low halves |
| | Parallel multiply high | 4 | | Multiply, keep the 4 high halves |
| | Parallel multiply-add | 4 | | Multiply, add adjacent products* |
| | Parallel compare equal | 8, 4, 2 | | All 1s where equal, else all 0s |
| | Parallel compare greater | 8, 4, 2 | | All 1s where greater, else all 0s |
| Shift | Parallel left shift logical | 4, 2, 1 | | Shift left, respect boundaries |
| | Parallel right shift logical | 4, 2, 1 | | Shift right, respect boundaries |
| | Parallel right shift arith | 4, 2 | | Arith shift within each (half)word |
| Logic | Parallel AND | 1 | Bitwise | dest ← (src1) AND (src2) |
| | Parallel ANDNOT | 1 | Bitwise | dest ← (src1) ANDNOT (src2) |
| | Parallel OR | 1 | Bitwise | dest ← (src1) OR (src2) |
| | Parallel XOR | 1 | Bitwise | dest ← (src1) XOR (src2) |
| Memory access | Parallel load MMX reg | | 32 or 64 bits | Address given in integer register |
| | Parallel store MMX reg | | 32 or 64 bits | Address given in integer register |
| Control | Empty FP tag bits | | | Required for compatibility$ |
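The "add; inhibit carry at boundaries" entry can be illustrated in software. The following is a minimal sketch, assuming four unsigned 16-bit elements packed into one 64-bit word; the helper names (`pack`, `unpack`, `parallel_add_wrap`, `parallel_add_saturate`) are hypothetical and only imitate the behavior of the hardware instruction.

```python
LANES = 4                      # assumed: four elements per 64-bit word
WIDTH = 16                     # assumed: 16 bits per element
MASK = (1 << WIDTH) - 1

def pack(values):
    """Pack a list of small integers into one word, element 0 in the low bits."""
    word = 0
    for i, v in enumerate(values):
        word |= (v & MASK) << (i * WIDTH)
    return word

def unpack(word):
    """Split a packed word back into its elements."""
    return [(word >> (i * WIDTH)) & MASK for i in range(LANES)]

HIGH = pack([0x8000] * LANES)            # top bit of every element
LOW = ~HIGH & ((1 << (LANES * WIDTH)) - 1)

def parallel_add_wrap(a, b):
    """Wraparound add of all elements at once, with no carry across boundaries.

    The low 15 bits of each element are added normally (the sum cannot spill
    into the next element); the top bits are then combined with XOR.
    """
    return ((a & LOW) + (b & LOW)) ^ ((a ^ b) & HIGH)

def parallel_add_saturate(a, b):
    """Unsigned saturating add, written element by element for clarity."""
    return pack([min(x + y, MASK) for x, y in zip(unpack(a), unpack(b))])

a = pack([65535, 1, 2, 3])
b = pack([1, 1, 1, 1])
print(unpack(parallel_add_wrap(a, b)))
print(unpack(parallel_add_saturate(a, b)))
```

In wraparound mode the first element overflows to 0 without disturbing its neighbor; in saturating mode it stays at the largest representable value.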

MMX Multiplication and Multiply-Add

[Figure: (a) Parallel multiply low: corresponding elements of two four-element vectors are multiplied and the low half of each product is kept. (b) Parallel multiply-add: the four products are formed and adjacent products are added in pairs, giving two sums.]

Figure 25.2 Parallel multiplication and multiply-add in MMX.

MMX Parallel Comparisons

[Figure: (a) Parallel compare equal on four 16-bit elements: (14, 3, 58, 66) compared with (79, 1, 58, 65) gives (0, 0, 65 535, 0), where 65 535 is all 1s. (b) Parallel compare greater on eight 8-bit elements: (5, 3, 12, 32, 5, 6, 12, 9) compared with (3, 12, 22, 17, 5, 12, 90, 8) gives 255 (all 1s) in each position where the first element is greater and 0 elsewhere.]

Figure 25.3 Parallel comparisons in MMX.
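The rule "all 1s where the condition holds, else all 0s" can be sketched in a few lines. This is an illustrative model only, assuming non-negative element values; `parallel_compare` is a hypothetical helper, not an MMX intrinsic.

```python
import operator

def parallel_compare(a, b, width, op):
    """Return a mask vector: all 1s (2**width - 1) where op(x, y) holds, else 0."""
    ones = (1 << width) - 1
    return [ones if op(x, y) else 0 for x, y in zip(a, b)]

# Four 16-bit elements, compared for equality.
eq_mask = parallel_compare([14, 3, 58, 66], [79, 1, 58, 65], 16, operator.eq)

# Eight 8-bit elements, compared for "greater than".
gt_mask = parallel_compare([5, 3, 12, 32, 5, 6, 12, 9],
                           [3, 12, 22, 17, 5, 12, 90, 8], 8, operator.gt)
print(eq_mask)
print(gt_mask)
```

Such masks are typically combined with the parallel AND, ANDNOT, and OR instructions to select between two vectors without branching.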

25.3 Instruction-Level Parallelism

[Figure: (a) fraction of cycles (0% to 30%) versus issuable instructions per cycle (0 to 8); (b) speedup attained (0 to 3) versus instruction issue width (2 to 8).]

Figure 25.4 Available instruction-level parallelism and the speedup due to multiple instruction issue in superscalar processors [John91].

Instruction-Level Parallelism

Figure 25.5 A computation with inherent instruction-level parallelism.

VLIW and EPIC Architectures

VLIW: Very long instruction word architecture

EPIC: Explicitly parallel instruction computing

[Figure: memory connected to general registers (128), floating-point registers (128), and predicates (64), which feed two groups of execution units.]

Figure 25.6 Hardware organization for IA-64. General and floating-point registers are 64 bits wide. Predicates are single-bit registers.

25.4 Speculation and Value Prediction

[Figure: two code-motion sketches. (a) Control speculation: a load is replaced by a "spec load" issued earlier in the instruction stream and a "check load" at the original position. (b) Data speculation: a load that follows a store is replaced by a "spec load" placed before the store and a "check load" after it.]

Figure 25.7 Examples of software speculation in IA-64.

Value Prediction

[Figure: the inputs go to both a memo table and a mult/div unit; a mux selects between the memo table output (on a hit) and the mult/div output (on a miss); a control unit takes "inputs ready", "miss", and "done" signals and produces "output ready".]

Figure 25.8 Value prediction for multiplication or division via a memo table.
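The memo-table idea can be sketched in software. The class below is a rough model under stated assumptions: a small direct-mapped table indexed by a hash of the operands, with Python's `/` standing in for the slow divider. The name `MemoTable` and the table size are hypothetical.

```python
class MemoTable:
    """Direct-mapped table of recent (operands, result) pairs for division."""

    def __init__(self, size=16):
        self.size = size          # assumed number of table entries
        self.entries = {}         # index -> ((a, b), result)
        self.hits = 0
        self.misses = 0

    def divide(self, a, b):
        index = hash((a, b)) % self.size
        entry = self.entries.get(index)
        if entry is not None and entry[0] == (a, b):
            self.hits += 1        # hit: the stored result is selected by the mux
            return entry[1]
        self.misses += 1          # miss: wait for the divider, then record the result
        result = a / b
        self.entries[index] = ((a, b), result)
        return result

table = MemoTable()
first = table.divide(10, 4)       # computed by the "divider"
second = table.divide(10, 4)      # served from the table
print(first, second, table.hits, table.misses)
```

The benefit depends on how often operand pairs repeat; a table that rarely hits only adds hardware and lookup energy.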

25.5 Special-Purpose Hardware Accelerators

[Figure: a CPU with data and program memory and a configuration memory, next to an FPGA-like unit on which accelerators can be formed via loading of configuration registers; the unit holds Accel. 1, Accel. 2, Accel. 3, and unused resources.]

Figure 25.9 General structure of a processor with configurable hardware accelerators.

Graphic Processors, Network Processors, etc.

[Figure: an input buffer feeds an array of 16 processing elements (PE 0 to PE 15) with four column memories, followed by an output buffer; a feedback path returns data from the output side to the input side.]

Figure 25.10 Simplified block diagram of Toaster2, Cisco Systems’ network processor.

25.6 Vector, Array, and Parallel Processing

Flynn’s categories (single or multiple instruction streams, single or multiple data streams):

SISD: uniprocessors

SIMD: array or vector processors

MISD: rarely used

MIMD: multiprocessors or multicomputers

Johnson’s expansion of MIMD (global or distributed memory, shared variables or message passing):

GMSV: shared-memory multiprocessors

GMMP: rarely used

DMSV: distributed shared memory

DMMP: distributed-memory multicomputers

Figure 25.11 The Flynn-Johnson classification of computer systems.

SIMD Architectures

Data parallelism: executing one operation on multiple data streams

Concurrency in time – vector processing

Concurrency in space – array processing

Example to provide context

Multiplying a coefficient vector by a data vector (e.g., in filtering)

y[i] := c[i] × x[i], 0 ≤ i < n

Sources of performance improvement in vector processing

(details in the first half of Chapter 26)

One instruction is fetched and decoded for the entire operation

The multiplications are known to be independent (no checking)

Pipelining/concurrency in memory access as well as in arithmetic

Array processing is similar (details in the second half of Chapter 26)


MISD Architecture Example

[Figure: a single data stream (data in, data out) passing through units controlled by instruction streams 1-5.]

Figure 25.12 Multiple instruction streams operating on a single data stream (MISD).

MIMD Architectures

Control parallelism: executing several instruction streams in parallel

GMSV: Shared global memory – symmetric multiprocessors

DMSV: Shared distributed memory – asymmetric multiprocessors

DMMP: Message passing – multicomputers

[Figure, left: processors 0 to p – 1 connected by a processor-to-processor network and, through a processor-to-memory network, to memory modules 0 to m – 1, with parallel I/O.]

Figure 27.1 Centralized shared memory.

[Figure, right: computing nodes 0 to p – 1, each with memory, processor, and router, attached to an interconnection network, with parallel input/output.]

Figure 28.1 Distributed memory.

Amdahl’s Law Revisited

f = sequential fraction

p = speedup of the rest with p processors

$$ s = \frac{1}{f + (1 - f)/p} \le \min(p, 1/f) $$

[Figure: speedup s (0 to 50) versus enhancement factor p (0 to 50), with curves for f = 0, 0.01, 0.02, 0.05, and 0.1.]

Figure 4.4 Amdahl’s law: speedup achieved if a fraction f of a task is unaffected and the remaining 1 – f part runs p times as fast.
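Amdahl's formula is easy to evaluate for the values of f plotted in Figure 4.4. The sketch below uses a hypothetical function name, `amdahl_speedup`, and simply tabulates the speedup at p = 50 together with the bound min(p, 1/f).

```python
def amdahl_speedup(f, p):
    """Speedup when a fraction f is unaffected and the rest runs p times as fast."""
    return 1.0 / (f + (1.0 - f) / p)

p = 50
for f in (0.0, 0.01, 0.02, 0.05, 0.1):
    s = amdahl_speedup(f, p)
    bound = p if f == 0 else min(p, 1.0 / f)
    print(f"f = {f:<5} speedup = {s:6.2f}  bound = {bound:6.2f}")
```

Even a 10% sequential fraction keeps the speedup below 10 no matter how many processors are used.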

26 Vector and Array Processing

Single instruction stream operating on multiple data streams

• Data parallelism in time = vector processing

• Data parallelism in space = array processing

Topics in This Chapter

26.1 Operations on Vectors

26.2 Vector Processor Implementation

26.3 Vector Processor Performance

26.4 Shared-Control Systems

26.5 Array Processor Implementation

26.6 Array Processor Performance


26.1 Operations on Vectors

Sequential processor:

for i = 0 to 63 do
  P[i] := W[i] × D[i]
endfor

Vector processor:

load W
load D
P := W × D
store P

Unparallelizable:

for i = 0 to 63 do
  X[i+1] := X[i] + Z[i]
  Y[i+1] := X[i+1] + Y[i]
endfor
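The difference between the two loops is the dependence between iterations. The sketch below, with hypothetical function names, mirrors both loops: the element-wise product has no dependence between iterations, whereas each step of the second loop needs values produced by the previous step.

```python
def vector_multiply(W, D):
    """P[i] = W[i] * D[i]; every element is independent of the others."""
    return [w * d for w, d in zip(W, D)]

def coupled_recurrence(x0, y0, Z):
    """X[i+1] = X[i] + Z[i]; Y[i+1] = X[i+1] + Y[i], computed strictly in order."""
    X, Y = [x0], [y0]
    for i in range(len(Z)):
        X.append(X[i] + Z[i])        # needs X[i] from the previous iteration
        Y.append(X[i + 1] + Y[i])    # needs the X[i+1] just computed and the previous Y[i]
    return X, Y

print(vector_multiply([1, 2, 3], [4, 5, 6]))
print(coupled_recurrence(0, 0, [1, 1, 1]))
```

In the first function the iterations could run in any order or all at once; in the second, iteration i cannot start before iteration i – 1 has finished.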

26.2 Vector Processor Implementation

[Figure: a vector register file connected to load units A and B and a store unit (to and from the memory unit), and to function unit pipelines 1, 2, and 3 through forwarding muxes; operands can also arrive from scalar registers.]

Figure 26.1 Simplified generic structure of a vector processor.

Conflict-Free Memory Access

[Figure: two layouts of a 64 × 64 matrix across memory banks 0 to 63. (a) Conventional row-major order: row i holds elements i,0 through i,63 in banks 0 through 63, so all of column 0 falls in bank 0. (b) Skewed row-major order: row i is rotated right by i positions, so element i,j is stored in bank (i + j) mod 64 and the elements of any column fall in 64 different banks.]

Figure 26.2 Skewed storage of the elements of a 64 × 64 matrix for conflict-free memory access in a 64-way interleaved memory. Elements of column 0 are highlighted in both diagrams.
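The effect of skewing can be checked with a short sketch. It assumes, as the figure suggests, that element (i, j) is placed in bank j under conventional row-major order and in bank (i + j) mod 64 under skewed order; the function names are hypothetical.

```python
N = 64  # matrix dimension and number of interleaved memory banks

def conventional_bank(i, j):
    """Row-major order: address 64*i + j maps to bank j."""
    return (N * i + j) % N

def skewed_bank(i, j):
    """Skewed order: row i is rotated right by i positions."""
    return (i + j) % N

column0_conventional = {conventional_bank(i, 0) for i in range(N)}
column0_skewed = {skewed_bank(i, 0) for i in range(N)}
row5_skewed = {skewed_bank(5, j) for j in range(N)}

print(len(column0_conventional), len(column0_skewed), len(row5_skewed))
```

With conventional storage all 64 elements of a column compete for one bank; with skewed storage both rows and columns spread over all 64 banks.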

Overlapped Memory Access and Computation

[Figure: vector registers 0 to 5 between the memory unit and a pipelined adder; Load X and Load Y fill one pair of registers and Store Z empties a third while the adder works on the other set.]

Figure 26.3 Vector processing via segmented load/store of vectors in registers in a double-buffering scheme. Solid (dashed) lines show data flow in the current (next) segment.

26.3 Vector Processor Performance

[Figure: timing diagrams, without chaining and with pipeline chaining, showing the multiplication start-up and addition start-up periods along a time axis.]

Figure 26.4 Total latency of the vector computation S := X × Y + Z, without and with pipeline chaining.

Performance as a Function of Vector Length

[Figure: clock cycles per vector element (0 to 5) versus vector length (0 to 400).]

Figure 26.5 The per-element execution time in a vector processor as a function of the vector length.

26.4 Shared-Control Systems

[Figure: (a) Shared-control array processor, SIMD: one control unit driving many processing units. (b) Multiple shared controls, MSIMD: several control units, each driving a group of processing units. (c) Separate controls, MIMD: one control unit per processing unit.]

Figure 26.6 From completely shared control to totally separate controls.

Example Array Processor

[Figure: a control unit broadcasting control signals to a processor array, with switches at the array boundary and parallel I/O.]

Figure 26.7 Array processor with 2D torus interprocessor communication network.

26.5 Array Processor Implementation

[Figure: a processing element with register file, data memory, ALU, and a communication buffer connected to its N, E, W, and S neighbors; control signals CommunEn and CommunDir select communication, and a PE state flip-flop feeds the array state register.]

Figure 26.8 Handling of interprocessor communication via a mechanism similar to data forwarding.

Configuration Switches

[Figure: the array processor of Figure 26.7 (control unit, control broadcast, processor array, switches, parallel I/O) and three switch settings: (a) torus operation, (b) clockwise I/O, (c) counterclockwise I/O, each with In and Out ports.]

Figure 26.9 I/O switch states in the array processor of Figure 26.7.

26.6 Array Processor Performance

Array processors perform well for the same class of problems that

are suitable for vector processors

For embarrassingly (pleasantly) parallel problems, array processors

can be faster and more energy-efficient than vector processors

A criticism of array processing:

For conditional computations, a significant part of the array remains

idle while the “then” part is performed; subsequently, idle and busy

processors reverse roles during the “else” part

However:

Considering array processors inefficient due to idle processors

is like criticizing mass transportation because many seats are

unoccupied most of the time

It’s the total cost of computation that counts, not hardware utilization!


27 Shared-Memory Multiprocessing

Multiple processors sharing a memory unit seems naïve

• Didn’t we conclude that memory is the bottleneck?

• How then does it make sense to share the memory?

Topics in This Chapter

27.1 Centralized Shared Memory

27.2 Multiple Caches and Cache Coherence

27.3 Implementing Symmetric Multiprocessors

27.4 Distributed Shared Memory

27.5 Directories to Guide Data Access

27.6 Implementing Asymmetric Multiprocessors


Parallel Processing as a Topic of Study

An important area of study that allows us to overcome fundamental speed limits

Our treatment of the topic is quite brief (Chapters 26-27)

Graduate course ECE 254B: Adv. Computer Architecture – Parallel Processing

27.1 Centralized Shared Memory

[Figure: processors 0 to p – 1 connected by a processor-to-processor network and, through a processor-to-memory network, to memory modules 0 to m – 1, with parallel I/O.]

Figure 27.1 Structure of a multiprocessor with centralized shared memory.

Processor-to-Memory Interconnection Network

[Figure: (a) Butterfly network connecting processors 0 to 15 to memories 0 to 15 through switch rows 0 to 7. (b) Beneš network connecting processors 0 to 10 to memories 0 to 7.]

Figure 27.2 Butterfly and the related Beneš network as examples of processor-to-memory interconnection network in a multiprocessor.

Processor-to-Memory Interconnection Network

[Figure: processors 0 to 7 connected to memory banks 0 to 255 through 1 × 8, 4 × 4, and 8 × 8 switches organized into sections and subsections. Banks are grouped by their number mod 4: 0, 4, 8, . . . , 252; 1, 5, 9, . . . , 253; 2, 6, 10, . . . , 254; and 3, 7, 11, . . . , 255.]

Figure 27.3 Interconnection of eight processors to 256 memory banks in Cray Y-MP, a supercomputer with multiple vector processors.

Shared-Memory Programming: Broadcasting

Copy B[0] into all B[i] so that multiple processors can read its value without memory access conflicts

for k = 0 to ⌈log₂ p⌉ – 1 processor j, 0 ≤ j < p, do
  B[j + 2^k] := B[j]
endfor

[Figure: recursive doubling on array B with elements 0 to 11; the number of valid copies doubles in each step.]
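The broadcasting loop can be simulated sequentially. This is a sketch under two assumptions not spelled out on the slide: all processors read before any of them writes within a step, and writes beyond the end of the array are skipped. The function name is hypothetical.

```python
def broadcast_recursive_doubling(B):
    """Copy B[0] into every element in ceil(log2 p) synchronous steps."""
    p = len(B)
    B = list(B)
    steps = (p - 1).bit_length()          # equals ceil(log2 p) for p >= 1
    for k in range(steps):
        old = list(B)                     # snapshot: every processor reads first
        for j in range(p):                # "processor j" acts on its own element
            if j + 2 ** k < p:            # assumed bounds check
                B[j + 2 ** k] = old[j]
    return B

result = broadcast_recursive_doubling([7] + [0] * 11)
print(result)
```

For 12 elements the value reaches positions 1, then 2-3, then 4-7, then 8-11, so four steps suffice instead of eleven sequential copies.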

Shared-Memory Programming: Summation

Sum reduction of vector X

processor j, 0 ≤ j < p, do Z[j] := X[j]
s := 1
while s < p processor j, 0 ≤ j < p – s, do
  Z[j + s] := Z[j] + Z[j + s]
  s := 2 × s
endwhile

[Figure: recursive doubling on 10 elements, where i:j denotes the sum X[i] + . . . + X[j]. Element j starts as j:j; after the steps with s = 1, 2, 4, and 8 it holds 0:j, so the last element holds the full sum S = 0:9.]
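The summation scheme can also be simulated sequentially. The sketch below assumes synchronous steps (all reads before any write) and accumulates in Z, as the figure's i:j entries indicate; the function name is hypothetical.

```python
from itertools import accumulate

def recursive_doubling_sum(X):
    """Return Z where Z[j] = X[0] + ... + X[j]; Z[-1] is the full sum."""
    p = len(X)
    Z = list(X)
    s = 1
    while s < p:
        old = list(Z)                     # snapshot for the synchronous step
        for j in range(p - s):            # "processor j", 0 <= j < p - s
            Z[j + s] = old[j] + old[j + s]
        s *= 2
    return Z

X = list(range(10))
Z = recursive_doubling_sum(X)
print(Z, Z[-1])
```

As a by-product, the same steps produce all prefix sums, which matches the columns of the figure.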

27.2 Multiple Caches and Cache Coherence

[Figure: processors 0 to p – 1, each with a private cache, connected by a processor-to-processor network and, through a processor-to-memory network, to memory modules 0 to m – 1, with parallel I/O.]

Private processor caches reduce memory access traffic through the interconnection network but lead to challenging consistency problems.

Status of Data Copies

[Figure: processors 0 to p – 1 with private caches and memory modules 0 to m – 1, holding copies of data blocks w, x, y, and z. The copies illustrate four statuses: multiple consistent, single consistent, single inconsistent, and invalid.]

Figure 27.4 Various types of cached data blocks in a parallel processor with centralized main memory and private processor caches.

A Snoopy Cache Coherence Protocol

[Figure: four processors (P), each with a cache (C), attached to a bus shared with memory.]

States of a cache line: Invalid, Shared (read-only), Exclusive (writable)

Invalid to Shared: CPU read miss: signal read miss on bus

Invalid to Exclusive: CPU write miss: signal write miss on bus

Shared to Shared: CPU read hit

Shared to Exclusive: CPU write hit: signal write miss on bus

Shared to Invalid: Bus write miss

Exclusive to Exclusive: CPU read or write hit

Exclusive to Shared: Bus read miss: write back cache line

Exclusive to Invalid: Bus write miss: write back cache line

Figure 27.5 Finite-state control mechanism for a bus-based snoopy cache coherence protocol with write-back caches.
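The state diagram of Figure 27.5 can be written as a transition table. The sketch below is one reading of the figure's labels, with hypothetical event names; any (state, event) pair not listed is assumed to leave the state unchanged.

```python
# (state, event) -> (next state, bus action or None)
TRANSITIONS = {
    ("Invalid", "cpu_read_miss"): ("Shared", "signal read miss on bus"),
    ("Invalid", "cpu_write_miss"): ("Exclusive", "signal write miss on bus"),
    ("Shared", "cpu_read_hit"): ("Shared", None),
    ("Shared", "cpu_write_hit"): ("Exclusive", "signal write miss on bus"),
    ("Shared", "bus_write_miss"): ("Invalid", None),
    ("Exclusive", "cpu_read_hit"): ("Exclusive", None),
    ("Exclusive", "cpu_write_hit"): ("Exclusive", None),
    ("Exclusive", "bus_read_miss"): ("Shared", "write back cache line"),
    ("Exclusive", "bus_write_miss"): ("Invalid", "write back cache line"),
}

def step(state, event):
    """Return (next_state, action) for one cache line in one cache."""
    return TRANSITIONS.get((state, event), (state, None))

state = "Invalid"
trace = []
for event in ("cpu_read_miss", "cpu_write_hit", "bus_read_miss", "bus_write_miss"):
    state, action = step(state, event)
    trace.append((event, state, action))
print(trace)
```

The trace follows one line as it is read, written locally, read by another processor, and finally written by another processor.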

27.3 Implementing Symmetric Multiprocessors

[Figure: computing nodes (typically, 1-4 CPUs and caches per node), interleaved memory, and I/O modules attached to a very wide, high-bandwidth bus; bus adapters connect the I/O modules to standard interfaces.]

Figure 27.6 Structure of a generic bus-based symmetric multiprocessor.

Bus Bandwidth Limits Performance

Example 27.1

Consider a shared-memory multiprocessor built around a single bus with a data bandwidth of x GB/s. Instructions and data words are 4 B wide, and each instruction requires access to an average of 1.4 memory words (including the instruction itself). The combined hit rate for caches is 98%. Compute an upper bound on the multiprocessor performance in GIPS. Address lines are separate and do not affect the bus data bandwidth.

Solution

Executing an instruction implies a bus transfer of 1.4 × 0.02 × 4 = 0.112 B. Thus, an absolute upper bound on performance is x/0.112 = 8.93x GIPS. Assuming a bus width of 32 B, no bus cycle or data going to waste, and a bus clock rate of y GHz, the performance bound becomes 286y GIPS. This bound is highly optimistic. Buses operate in the range 0.1 to 1 GHz. Thus, a performance level approaching 1 TIPS (perhaps even ¼ TIPS) is beyond reach with this type of architecture.
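The arithmetic of Example 27.1 can be reproduced directly. The function and parameter names below are hypothetical; the default values are those given in the example.

```python
def bus_bytes_per_instruction(words_per_instr=1.4, miss_rate=0.02, word_bytes=4):
    """Average bus traffic per instruction: only cache misses reach the bus."""
    return words_per_instr * miss_rate * word_bytes

def gips_upper_bound(bus_gb_per_s):
    """Upper bound on instructions per second (in GIPS) for a given bus bandwidth."""
    return bus_gb_per_s / bus_bytes_per_instruction()

traffic = bus_bytes_per_instruction()
bound_per_gb = gips_upper_bound(1.0)           # x = 1 GB/s
bound_wide_bus = gips_upper_bound(32 * 1.0)    # 32 B wide bus at y = 1 GHz
print(f"{traffic:.3f} B/instruction, {bound_per_gb:.2f} GIPS, {bound_wide_bus:.0f} GIPS")
```

At the upper end of the stated bus clock range (1 GHz), the bound is about 286 GIPS, which is the "¼ TIPS" figure mentioned in the solution.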

Implementing Snoopy Caches

[Figure: a cache with a cache data array and two copies of the tags and state: main tags and state store for the processor side, and duplicate tags and state store for the snoop side. Processor-side cache control receives Addr and Cmd from the CPU; snoop-side cache control compares (=?) tags against Addr and Cmd observed on the system bus through buffers.]

Figure 27.7 Main structure for a snoop-based cache coherence algorithm.

27.4 Distributed Shared Memory

[Figure: processors with memory, numbered 0 to p – 1, each attached through a router to an interconnection network, with parallel input/output. The memories hold x: 0, y: 1, and z: 0 in different nodes. One processor executes "y := -1; z := 1" while another executes "while z = 0 do x := x + y endwhile".]

Figure 27.8 Structure of a distributed shared-memory multiprocessor.

27.5 Directories to Guide Data Access

[Figure: nodes 0 to p – 1, each consisting of a processor and cache, a directory, a memory, and a communication and memory interface, attached to an interconnection network, with parallel input/output.]

Figure 27.9 Distributed shared-memory multiprocessor with a cache, directory, and memory module associated with each processor.

Directory-Based Cache Coherence

States of a directory entry: Uncached, Shared (read-only), Exclusive (writable)

Uncached to Shared: Read miss: return value, set sharing set to {c}

Uncached to Exclusive: Write miss: return value, set sharing set to {c}

Shared to Shared: Read miss: return value, include c in sharing set

Shared to Exclusive: Write miss: invalidate all cached copies, set sharing set to {c}, return value

Exclusive to Shared: Read miss: fetch data from owner, return value, include c in sharing set

Exclusive to Exclusive: Write miss: fetch data from owner, request invalidation, return value, set sharing set to {c}

Exclusive to Uncached: Data write-back: set sharing set to { }

Figure 27.10 States and transitions for a directory entry in a directory-based cache coherence protocol (c is the requesting cache).
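The directory entry of Figure 27.10 can be modeled as a small class. This is a sketch of one reading of the figure, with hypothetical method names; it tracks only the state and the sharing set and does not check that a write-back arrives in the Exclusive state.

```python
class DirectoryEntry:
    """State and sharing set for one memory block; c is the requesting cache."""

    def __init__(self):
        self.state = "Uncached"
        self.sharers = set()

    def read_miss(self, c):
        actions = []
        if self.state == "Exclusive":
            actions.append("fetch data from owner")
        actions.append("return value")
        if self.state == "Uncached":
            self.sharers = {c}
        else:
            self.sharers.add(c)           # include c in sharing set
        self.state = "Shared"
        return actions

    def write_miss(self, c):
        actions = []
        if self.state == "Shared":
            actions.append("invalidate all cached copies")
        elif self.state == "Exclusive":
            actions += ["fetch data from owner", "request invalidation"]
        actions.append("return value")
        self.sharers = {c}
        self.state = "Exclusive"
        return actions

    def write_back(self):
        self.sharers = set()
        self.state = "Uncached"

entry = DirectoryEntry()
entry.read_miss(0)
entry.read_miss(1)
write_actions = entry.write_miss(2)
print(entry.state, entry.sharers, write_actions)
```

Unlike the snoopy protocol, no broadcast is needed: the directory knows exactly which caches hold a copy and contacts only those.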

27.6 Implementing Asymmetric Multiprocessors

[Figure: computing nodes 0 to 3 (typically, 1-4 CPUs and associated memory), each attached by a link to a ring network; the nodes also connect to I/O controllers.]

Figure 27.11 Structure of a ring-based distributed-memory multiprocessor.

[Figure: nodes 0 to 3, each with processors and caches and memories, linked by the Scalable Coherent Interface (SCI), with connections to the interconnection network.]

Figure 27.11 Structure of a ring-based distributed-memory multiprocessor.

28 Distributed Multicomputing

Computer architects’ dream: connect computers like toy blocks

• Building multicomputers from loosely connected nodes

• Internode communication is done via message passing

Topics in This Chapter

28.1 Communication by Message Passing

28.2 Interconnection Networks

28.3 Message Composition and Routing

28.4 Building and Using Multicomputers

28.5 Network-Based Distributed Computing

28.6 Grid Computing and Beyond


28.1 Communication by Message Passing

[Figure: computing nodes 0 to p – 1, each with memory and processor, attached through routers to an interconnection network, with parallel input/output.]

Figure 28.1 Structure of a distributed multicomputer.

Router Design

[Figure: input channels and an injection channel, each with a link controller (LC) and an input queue (Q), feed a switch controlled by the routing and arbitration unit; the switch drives output queues (Q) and link controllers (LC) on the output channels and the ejection channel. Legend: LC = link controller, Q = message queue.]

Figure 28.2 The structure of a generic router.

Building Networks from Switches

[Figure: the four states of a 2 × 2 switch: straight through, crossed connection, lower broadcast, and upper broadcast.]

Figure 28.3 Example 2 × 2 switch with point-to-point and broadcast connection capabilities.

[Figure: repeat of Figure 27.2, butterfly and Beneš networks.]

Interprocess Communication via Messages

[Figure: timelines of process A and process B. Process B executes "receive x" and is suspended; process A later executes "send x"; after the communication latency, process B is awakened.]

Figure 28.4 Use of send and receive message-passing primitives to synchronize two processes.

28.2 Interconnection Networks

[Figure: (a) Direct network: each node has its own router. (b) Indirect network: nodes attach to a separate network of routers.]

Figure 28.5 Examples of direct and indirect interconnection networks.

Direct Interconnection Networks

[Figure: (a) 2D torus, (b) 4D hypercube, (c) chordal ring, (d) ring of rings.]

Figure 28.6 A sampling of common direct interconnection networks. Only routers are shown; a computing node is implicit for each router.

Indirect Interconnection Networks

[Figure: (a) Hierarchical buses, with level-1, level-2, and level-3 buses. (b) Omega network.]

Figure 28.7 Two commonly used indirect interconnection networks.

28.3 Message Composition and Routing

[Figure: a message is divided into packets (first packet through last packet, with padding in the last); a transmitted packet consists of a header, the data or payload, and a trailer; a packet is in turn divided into flow control digits (flits).]

Figure 28.8 Messages and their parts for message passing.

Wormhole Switching

[Figure: (a) Two worms en route to their respective destinations: worm 1 (source 1 to destination 1) is moving; worm 2 (source 2 to destination 2) is blocked. (b) Deadlock due to circular waiting of four blocked worms; each worm is blocked at the point of attempted right turn.]

Figure 28.9 Concepts of wormhole switching.

28.4 Building and Using Multicomputers

[Figure: (a) Static task graph with tasks A through H, inputs at the top and outputs at the bottom, and task running times of 1 to 3 time units. (b) Schedules of the tasks on 1, 2, and 3 computers along a time axis from 0 to 15.]

Figure 28.10 A task system and schedules on 1, 2, and 3 computers.

Building Multicomputers from Commodity Nodes

[Figure: (a) Current racks of modules: each module holds CPU(s), memory, and disks, with expansion slots. (b) Futuristic toy-block construction: each module holds CPU, memory, and disks and has wireless connection surfaces.]

Figure 28.11 Growing clusters using modular nodes.

28.5 Network-Based Distributed Computing

[Figure: PCs, each with a NIC (a fast network interface with large memory) on its system or I/O bus, connected to a network built of high-speed wormhole switches.]

Figure 28.12 Network of workstations.

28.6 Grid Computing and Beyond

Computational grid is analogous to the power grid

Decouples the “production” and “consumption” of computational power

Homes don’t have an electricity generator; why should they have a computer?

Advantages of computational grid:

Near continuous availability of computational and related resources

Resource requirements based on sum of averages, rather than sum of peaks

Paying for services based on actual usage rather than peak demand

Distributed data storage for higher reliability, availability, and security

Universal access to specialized and one-of-a-kind computing resources

Still to be worked out: How to charge for computation usage
