Lecture #8

Action Research

Data Manipulation and Crosstabs

INFO 515

Glenn Booker

1

Parametric vs. Nonparametric

Statistical tests fall into two broad categories: parametric and nonparametric

Parametric methods

Require data at higher levels of measurement (interval and/or ratio scales)

Are statistically more powerful than nonparametric methods (more likely to detect a real effect when their assumptions hold)

But often require more assumptions about the data, such as a normal distribution or equal variances

2

Parametric vs. Nonparametric

Nonparametric methods

Use nominal or ordinal scale data

Still allow us to test for a relationship, and its strength and direction (direction only if ordinal)

Often have easier prerequisites for being used (e.g. no assumptions about the distribution)

Ratio or interval scale data may be recoded to become nominal or ordinal data, and hence be used with nonparametric tests

3

Significance and Association

Significance and association are both useful for inferring population values from samples (inferential statistics)

Significance establishes whether chance can be ruled out as the most likely explanation of differences

Association shows the nature, strength, and/or direction of the relationship between two (or among three or more) variables

Need to show significance before association is meaningful

4

Common Tests of Significance

We’ve been introduced to three common tests of significance:

z test (large samples of ratio or interval data)

t test (small samples of ratio or interval data)

F test (ANOVA)

Shortly we’ll explore a fourth one

Pearson’s chi-square, χ² (used for nominal or ordinal scale data)

{χ is the Greek letter chi, pronounced ‘kye’, rhymes with ‘rye’}

5

Common Measures of Association

Association measures often range in value from -1 to +1 (but not always!)

Absence of association between variables generally means a result of 0

Examples

Pearson’s r (for interval or ratio scale data)

Yule’s Q (ordinal data in a 2x2 table)

Gamma (ordinal data in a table larger than 2x2)

{A “2x2” table has 2 rows and 2 columns of data.}

6

Common Measures of Association

Notice these are all for nominal scale data

Phi (φ, ‘fee’) (nominal data in a 2x2 table)

Contingency Coefficient (nominal data in a table larger than 2x2)

Cramer’s V (nominal data in a table larger than 2x2)

Lambda (λ) – nominal data

Eta (η) – nominal data

7

Significance and Association

Tests of significance and measures of association are often used together

But you can have statistical significance without having a strong association (e.g. with a very large sample, even a weak relationship can be significant)

8

Significance and Association Examples

Ratio data: You might use F to determine if there is a significant relationship, then use ‘r’ from a regression to measure its strength

Ordinal data: You might run a chi-square test to determine statistical significance in the frequencies of two variables, and then run a Yule’s Q to show the relationship between the variables

9

Crosstabs

Brief digression to introduce crosstabs before discussing nonparametric methods

A crosstab is a table, often used to display data sorted by two nominal or ordinal variables at once, to study the relationship between variables that each have a small number of possible answers

Generally contains basic descriptive statistics, such as frequency counts and percentages

10
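To make the idea of a crosstab concrete, here is a minimal Python sketch (not part of the SPSS workflow) that counts cases into (row, column) cells with `collections.Counter` and adds the row and column totals. The respondent records are hypothetical, made up for illustration, and are not taken from the GSS91 data set.

```python
from collections import Counter

# Hypothetical records, one (row category, column category) pair per respondent.
# Illustrative only; these are NOT GSS91 values.
records = [
    ("NEW ENGLAND", "CATHOLIC"),
    ("NEW ENGLAND", "PROTESTANT"),
    ("PACIFIC", "NONE"),
    ("PACIFIC", "PROTESTANT"),
    ("PACIFIC", "PROTESTANT"),
]

def crosstab(pairs):
    """Count cases per (row, column) cell and compute the marginal totals."""
    cells = Counter(pairs)                 # cells never seen read back as 0
    rows = sorted({r for r, _ in pairs})
    cols = sorted({c for _, c in pairs})
    row_totals = {r: sum(cells[(r, c)] for c in cols) for r in rows}
    col_totals = {c: sum(cells[(r, c)] for r in rows) for c in cols}
    return cells, row_totals, col_totals

cells, row_totals, col_totals = crosstab(records)
print("columns:", sorted(col_totals))
for region in sorted(row_totals):
    counts = [cells[(region, c)] for c in sorted(col_totals)]
    print(region, counts, "row total:", row_totals[region])
```

Each printed row is one line of a Count crosstab; the column totals play the role of the bottom Total row.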

Crosstabs

Used to check the distribution of data, and as a foundation for more complex tests

Look for gaps or sparse data (cells with few or no cases, which contribute little to the data set)

Rule of thumb: put the independent variable in the columns and the dependent variable in the rows

11

Percentages

Can show both column and row percentages in crosstabs, rather than just frequency counts (or show both counts and percentages)

Make sure percentages add to 100%!

Raw frequency counts of variables don’t always provide an accurate picture

Unequal numbers of subjects in groups (N) might make the numbers appear skewed

12

Crosstabs Example

Open data set “GSS91 political.sav”

Use Analyze / Descriptive Statistics / Crosstabs...

Set the Row(s) as “region”, and the Column(s) as “relig”

Note that by default an SPSS crosstab shows only frequency Counts, with row and column totals

13

Crosstabs Example

REGION OF INTERVIEW * RS RELIGIOUS PREFERENCE Crosstabulation (Count; columns are RS RELIGIOUS PREFERENCE)

| REGION OF INTERVIEW | PROTESTANT | CATHOLIC | JEWISH | NONE | OTHER | Total |
|---|---|---|---|---|---|---|
| NEW ENGLAND | 34 | 49 | 0 | 6 | 1 | 90 |
| MIDDLE ATLANTIC | 88 | 86 | 8 | 13 | 6 | 201 |
| E. NOR. CENTRAL | 156 | 77 | 4 | 17 | 1 | 255 |
| W. NOR. CENTRAL | 92 | 24 | 0 | 3 | 3 | 122 |
| SOUTH ATLANTIC | 217 | 53 | 6 | 14 | 7 | 297 |
| E. SOU. CENTRAL | 104 | 4 | 0 | 3 | 1 | 112 |
| W. SOU. CENTRAL | 92 | 24 | 1 | 7 | 4 | 128 |
| MOUNTAIN | 57 | 15 | 1 | 5 | 1 | 79 |
| PACIFIC | 115 | 49 | 12 | 33 | 5 | 214 |
| Total | 955 | 381 | 32 | 101 | 29 | 1498 |

14

Crosstabs Example

Repeat the same example with percentages selected under the “Cells…” button to get detailed data in each cell:

Percent within that region (Row)

Percent within that religious preference (Column)

Percent of total data set (divide by Total N)

Gets a bit messy to show this much!

15
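The three kinds of cell percentage differ only in the denominator: the row total, the column total, or the grand total N. A small Python sketch using the counts from the slide 14 crosstab, rounding to one decimal place the way the SPSS output displays it (the function name `cell_percentages` is just illustrative):

```python
# Counts from the REGION OF INTERVIEW * RS RELIGIOUS PREFERENCE crosstab (slide 14).
# Column order: PROTESTANT, CATHOLIC, JEWISH, NONE, OTHER
counts = {
    "NEW ENGLAND":     [34, 49, 0, 6, 1],
    "MIDDLE ATLANTIC": [88, 86, 8, 13, 6],
    "E. NOR. CENTRAL": [156, 77, 4, 17, 1],
    "W. NOR. CENTRAL": [92, 24, 0, 3, 3],
    "SOUTH ATLANTIC":  [217, 53, 6, 14, 7],
    "E. SOU. CENTRAL": [104, 4, 0, 3, 1],
    "W. SOU. CENTRAL": [92, 24, 1, 7, 4],
    "MOUNTAIN":        [57, 15, 1, 5, 1],
    "PACIFIC":         [115, 49, 12, 33, 5],
}

row_totals = {region: sum(row) for region, row in counts.items()}
col_totals = [sum(col) for col in zip(*counts.values())]
n = sum(col_totals)  # grand total N

def cell_percentages(region, j):
    """Return (% within region, % within religious preference, % of total)
    for column j of the given region, rounded to one decimal place."""
    f = counts[region][j]
    return (
        round(100 * f / row_totals[region], 1),  # divide by the row total
        round(100 * f / col_totals[j], 1),       # divide by the column total
        round(100 * f / n, 1),                   # divide by total N
    )

print(n, col_totals)                        # 1498 [955, 381, 32, 101, 29]
print(cell_percentages("NEW ENGLAND", 0))   # (37.8, 3.6, 2.3)
```

Because each row percentage divides by that row’s own total, the row percentages for any region add up to 100%, which is the check suggested on slide 12.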

Crosstabs Example

REGION OF INTERVIEW * RS RELIGIOUS PREFERENCE Crosstabulation (columns are RS RELIGIOUS PREFERENCE)

| REGION OF INTERVIEW | | PROTESTANT | CATHOLIC | JEWISH | NONE | OTHER | Total |
|---|---|---|---|---|---|---|---|
| NEW ENGLAND | Count | 34 | 49 | 0 | 6 | 1 | 90 |
| | % within REGION OF INTERVIEW | 37.8% | 54.4% | .0% | 6.7% | 1.1% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 3.6% | 12.9% | .0% | 5.9% | 3.4% | 6.0% |
| | % of Total | 2.3% | 3.3% | .0% | .4% | .1% | 6.0% |
| MIDDLE ATLANTIC | Count | 88 | 86 | 8 | 13 | 6 | 201 |
| | % within REGION OF INTERVIEW | 43.8% | 42.8% | 4.0% | 6.5% | 3.0% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 9.2% | 22.6% | 25.0% | 12.9% | 20.7% | 13.4% |
| | % of Total | 5.9% | 5.7% | .5% | .9% | .4% | 13.4% |
| E. NOR. CENTRAL | Count | 156 | 77 | 4 | 17 | 1 | 255 |
| | % within REGION OF INTERVIEW | 61.2% | 30.2% | 1.6% | 6.7% | .4% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 16.3% | 20.2% | 12.5% | 16.8% | 3.4% | 17.0% |
| | % of Total | 10.4% | 5.1% | .3% | 1.1% | .1% | 17.0% |
| W. NOR. CENTRAL | Count | 92 | 24 | 0 | 3 | 3 | 122 |
| | % within REGION OF INTERVIEW | 75.4% | 19.7% | .0% | 2.5% | 2.5% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 9.6% | 6.3% | .0% | 3.0% | 10.3% | 8.1% |
| | % of Total | 6.1% | 1.6% | .0% | .2% | .2% | 8.1% |
| SOUTH ATLANTIC | Count | 217 | 53 | 6 | 14 | 7 | 297 |
| | % within REGION OF INTERVIEW | 73.1% | 17.8% | 2.0% | 4.7% | 2.4% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 22.7% | 13.9% | 18.8% | 13.9% | 24.1% | 19.8% |
| | % of Total | 14.5% | 3.5% | .4% | .9% | .5% | 19.8% |
| E. SOU. CENTRAL | Count | 104 | 4 | 0 | 3 | 1 | 112 |
| | % within REGION OF INTERVIEW | 92.9% | 3.6% | .0% | 2.7% | .9% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 10.9% | 1.0% | .0% | 3.0% | 3.4% | 7.5% |
| | % of Total | 6.9% | .3% | .0% | .2% | .1% | 7.5% |
| W. SOU. CENTRAL | Count | 92 | 24 | 1 | 7 | 4 | 128 |
| | % within REGION OF INTERVIEW | 71.9% | 18.8% | .8% | 5.5% | 3.1% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 9.6% | 6.3% | 3.1% | 6.9% | 13.8% | 8.5% |
| | % of Total | 6.1% | 1.6% | .1% | .5% | .3% | 8.5% |
| MOUNTAIN | Count | 57 | 15 | 1 | 5 | 1 | 79 |
| | % within REGION OF INTERVIEW | 72.2% | 19.0% | 1.3% | 6.3% | 1.3% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 6.0% | 3.9% | 3.1% | 5.0% | 3.4% | 5.3% |
| | % of Total | 3.8% | 1.0% | .1% | .3% | .1% | 5.3% |
| PACIFIC | Count | 115 | 49 | 12 | 33 | 5 | 214 |
| | % within REGION OF INTERVIEW | 53.7% | 22.9% | 5.6% | 15.4% | 2.3% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 12.0% | 12.9% | 37.5% | 32.7% | 17.2% | 14.3% |
| | % of Total | 7.7% | 3.3% | .8% | 2.2% | .3% | 14.3% |
| Total | Count | 955 | 381 | 32 | 101 | 29 | 1498 |
| | % within REGION OF INTERVIEW | 63.8% | 25.4% | 2.1% | 6.7% | 1.9% | 100.0% |
| | % within RS RELIGIOUS PREFERENCE | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% |
| | % of Total | 63.8% | 25.4% | 2.1% | 6.7% | 1.9% | 100.0% |

16

Recoding

An interval or ratio scaled variable, like age or salary, may have too many distinct values to use in a crosstab

Recoding lets you combine values into a single new variable, also called collapsing the codes

Also helpful for creating histogram variables (e.g. ranges of age or income)

17

Recoding Example

Use Transform / Recode / Into Different Variables…

Move “age” from the variable list into the Numeric Variable box

Define the new Output Variable to have Name “agegroup” and Label “Age Group”

Click the “Change” button to use “agegroup”

Click on the “Old and New Values” button

18

Recoding Example

For the Old Value, enter a Range of 18 to 30

Assign this to a New Value of 1

Click on “Add”

Repeat to define ages 31-50 as agegroup New Value 2, 51-75 as 3, and 76-200 as 4

Click “Continue”, then “OK”, and the new variable now exists as defined

19
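The same recoding can be expressed in a few lines of Python. This is a sketch for illustration, not how SPSS implements it: it assumes integer ages and inclusive ranges matching the 18-30 / 31-50 / 51-75 / 76-200 ranges above, and returns `None` for ages outside every range, standing in for a missing value. The function name `recode_age` and the sample ages are hypothetical.

```python
def recode_age(age):
    """Collapse age (years) into the agegroup codes from the SPSS example.

    Ranges are inclusive: 18-30 -> 1, 31-50 -> 2, 51-75 -> 3, 76-200 -> 4.
    Ages outside every range return None (treated here as missing).
    """
    ranges = [(18, 30, 1), (31, 50, 2), (51, 75, 3), (76, 200, 4)]
    for low, high, code in ranges:
        if low <= age <= high:
            return code
    return None

ages = [18, 29, 30, 31, 50, 51, 76, 89]   # hypothetical respondents' ages
print([recode_age(age) for age in ages])  # [1, 1, 1, 2, 2, 3, 4, 4]
```

Writing the ranges as a list of (low, high, code) tuples mirrors the Old and New Values dialog, where each “Add” contributes one rule.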

Recoding Example

20

Recoding Example

Now generate a crosstab with “agegroup” as the columns, and “region” as the rows

REGION OF INTERVIEW * Age Group Crosstabulation (Count; columns are Age Group)

| REGION OF INTERVIEW | 1.00 | 2.00 | 3.00 | 4.00 | Total |
|---|---|---|---|---|---|
| NEW ENGLAND | 25 | 40 | 17 | 8 | 90 |
| MIDDLE ATLANTIC | 36 | 89 | 66 | 12 | 203 |
| E. NOR. CENTRAL | 56 | 115 | 71 | 13 | 255 |
| W. NOR. CENTRAL | 29 | 41 | 37 | 15 | 122 |
| SOUTH ATLANTIC | 66 | 115 | 95 | 21 | 297 |
| E. SOU. CENTRAL | 15 | 57 | 30 | 10 | 112 |
| W. SOU. CENTRAL | 38 | 55 | 27 | 8 | 128 |
| MOUNTAIN | 22 | 24 | 24 | 9 | 79 |
| PACIFIC | 48 | 106 | 48 | 12 | 214 |
| Total | 335 | 642 | 415 | 108 | 1500 |

21

Second Recoding Example

Prof. Yonker surveyed a previous INFO 515 class for their height (in inches) and desired salaries ($/yr)

Rather than analyze ratio data with few frequencies larger than one, she recoded:

Heights into Dwarves for people below average height, and Giants for those above

Desired salaries into Cheap and Expensive, again below and above average

22
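A below/above-average split like this one can be sketched in Python as below. The heights are hypothetical, and the slide does not say how a value exactly equal to the average was handled, so the sketch assumes it goes to the upper group; `split_at_mean` is an illustrative name.

```python
from statistics import mean

def split_at_mean(values, below_label, above_label):
    """Recode each value as below or above the group average.
    Assumption: a value exactly equal to the average goes to the upper group."""
    avg = mean(values)
    return [below_label if v < avg else above_label for v in values]

heights = [62, 64, 66, 68, 70, 72, 75]   # hypothetical heights in inches
print(split_at_mean(heights, "Dwarves", "Giants"))
# ['Dwarves', 'Dwarves', 'Dwarves', 'Dwarves', 'Giants', 'Giants', 'Giants']
```

The same function would recode desired salaries into "Cheap" and "Expensive".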

Second Recoding Example

The resulting crosstab was like this:

New Salary * New Height Crosstabulation

| New Salary | | New Height: Dwarves | New Height: Giants | Total |
|---|---|---|---|---|
| Cheap | Count | 9 | 7 | 16 |
| | % within New Height | 69.2% | 53.8% | 61.5% |
| Expensive | Count | 4 | 6 | 10 |
| | % within New Height | 30.8% | 46.2% | 38.5% |
| Total | Count | 13 | 13 | 26 |
| | % within New Height | 100.0% | 100.0% | 100.0% |

23

Pearson Chi Square Test

The Chi Square test measures how much observed (actual) frequencies (fo) differ from “expected” frequencies (fe)

Is a nonparametric test, a.k.a. the Goodness of Fit statistic

Does not require assumptions about the shape of the population distribution

Does not require that variables be measured on an interval or ratio scale

24

Chi Square Concept

Chi Square test is like the ANOVA test

ANOVA tested whether there was a difference among several means, i.e. whether the means differ from each other in some way

Chi square tests whether the frequency distribution is different from a random one: is there a significant difference among frequencies?

Allows us to test for a relationship (but not the strength or direction, if there is one)

25

Chi Square Null Hypothesis

Null hypothesis is that the variables are independent of each other (there is no relationship between them), so the cell frequencies differ from the expected frequencies only by chance

Each case is independent of every other case; that is, the value of the variable for one individual does not influence the value for another individual

Chi Square works better for small sample sizes (fewer than several hundred cases)

WARNING: Almost any really large table will have a significant chi square

26

Assumptions for Chi Square

A random sample is the “expected” basis for comparison

No zero values are allowed for the observed frequency, fo

Each case can fall into only one cell

And no expected frequencies, fe, less than one

At least 80% of expected frequencies, fe, should be greater than or equal to five (≥5)

27

Expected Frequency

The expected frequency for a cell is based on the fraction of cases that would fall into it randomly, given the same row and column total proportions as the actual data set

fe = (row total) * (column total) / N

So if 90 people live in New England, and 335 are in Age Group 1, from a total sample of 1500, then we would expect

fe = 90*335/1500 = 20.1 people in that cell

See slide 21

28
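The expected-frequency formula is simple enough to check by hand, but here it is as a tiny Python function (the name `expected_frequency` is illustrative), reproducing the New England / Age Group 1 example and, for contrast, the naive average-per-cell value that slide 29 warns against:

```python
def expected_frequency(row_total, col_total, n):
    """Expected count for one cell: (row total) * (column total) / N."""
    return row_total * col_total / n

# New England row total = 90, Age Group 1 column total = 335, N = 1500
fe = expected_frequency(90, 335, 1500)
print(round(fe, 1))       # 20.1

# NOT the expected frequency: the plain average per cell, N / (rows * columns)
naive = 1500 / (9 * 4)
print(round(naive, 2))    # 41.67
```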

Expected Frequency

So the general formula for the expected frequency of a given cell is:

fe = (actual row total)*(actual column total)/N

Notice that this is NOT the average frequency per cell, which would be

N / [(# of rows)*(# of columns)]

29
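Applying fe = (actual row total)*(actual column total)/N to every cell of the slide 21 crosstab also lets you check the expected-frequency rules of thumb from slide 27 (no fe below one, at least 80% at five or more). This Python sketch reproduces the minimum expected count of 5.69 that SPSS reports; the function names are illustrative.

```python
# Observed counts: REGION OF INTERVIEW rows * Age Group 1-4 columns (slide 21)
observed = [
    [25, 40, 17, 8],    # NEW ENGLAND
    [36, 89, 66, 12],   # MIDDLE ATLANTIC
    [56, 115, 71, 13],  # E. NOR. CENTRAL
    [29, 41, 37, 15],   # W. NOR. CENTRAL
    [66, 115, 95, 21],  # SOUTH ATLANTIC
    [15, 57, 30, 10],   # E. SOU. CENTRAL
    [38, 55, 27, 8],    # W. SOU. CENTRAL
    [22, 24, 24, 9],    # MOUNTAIN
    [48, 106, 48, 12],  # PACIFIC
]

def expected_table(obs):
    """fe = (actual row total) * (actual column total) / N for every cell."""
    row_totals = [sum(row) for row in obs]
    col_totals = [sum(col) for col in zip(*obs)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]

def check_expected_counts(fe):
    """Rules of thumb: no fe below 1, and at least 80% of fe values >= 5."""
    cells = [x for row in fe for x in row]
    share_at_least_5 = sum(x >= 5 for x in cells) / len(cells)
    ok = min(cells) >= 1 and share_at_least_5 >= 0.8
    return ok, min(cells), share_at_least_5

fe = expected_table(observed)
ok, smallest, share = check_expected_counts(fe)
print(round(fe[0][0], 1))              # 20.1 (New England, Age Group 1)
print(ok, round(smallest, 2), share)   # True 5.69 1.0
```

The smallest expected count belongs to Mountain / Age Group 4 (79*108/1500 = 5.688).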

Calculating Chi Square

The Chi square value for each cell is the observed frequency minus the expected one, squared, divided by the expected frequency

Chi square per cell = (fo - fe)²/fe

Sum this for all cells in the crosstab

For the cell on slide 28, the actual frequency was 25, so Chi square for that cell is (25 - 20.1)²/20.1 = 1.195

Note: Chi square is never negative

30
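Summing (fo - fe)²/fe over all 36 cells of the slide 21 table reproduces the Pearson Chi-Square that SPSS reports. A plain-Python sketch (no statistics library assumed; `pearson_chi_square` is an illustrative name):

```python
# Observed counts: REGION OF INTERVIEW rows * Age Group 1-4 columns (slide 21)
observed = [
    [25, 40, 17, 8], [36, 89, 66, 12], [56, 115, 71, 13],
    [29, 41, 37, 15], [66, 115, 95, 21], [15, 57, 30, 10],
    [38, 55, 27, 8], [22, 24, 24, 9], [48, 106, 48, 12],
]

def pearson_chi_square(obs):
    """Sum (fo - fe)^2 / fe over every cell, with fe = row total * column total / N."""
    row_totals = [sum(row) for row in obs]
    col_totals = [sum(col) for col in zip(*obs)]
    n = sum(row_totals)
    total = 0.0
    for i, row in enumerate(obs):
        for j, fo in enumerate(row):
            fe = row_totals[i] * col_totals[j] / n
            total += (fo - fe) ** 2 / fe
    return total

print(round((25 - 20.1) ** 2 / 20.1, 3))       # 1.195 (the single cell above)
print(round(pearson_chi_square(observed), 2))  # 43.26 (SPSS reports 43.260)
```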

Calculating Chi Square

Page 36/37 of the Action Research handout has an example of chi square calculation, where

fo is the observed (actual) frequency

fe is the expected frequency

E.g. fe for the first cell is 20*30/60 = 10.0

Chi square for each cell is (fo - fe)²/fe

Sum chi square for all cells in the table

No comments about fe fi fo fum! Is that clear?!?!

31

Interpreting Chi Square

When the total Chi square is larger than the critical value, reject the null hypothesis

See Action Research handout page 42/43 for critical Chi square (χ²) values

Look up the critical value using the ‘df’ value, which is based on the number of rows and columns in the crosstab:

df = (#rows - 1)(#columns - 1)

For the example on slide 21, df = (9-1)(4-1) = 8*3 = 24

32
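The critical-value approach reduces to computing df and comparing against a table entry. In this Python sketch the lookup dictionary `CRITICAL_05` holds only the 0.050-level critical values that appear in this lecture (df = 8 and 24), plus df = 1 (3.841) from a standard chi-square table; it is not a full table, and the function names are illustrative.

```python
# 0.050-level critical values: df = 8 and 24 are used in this lecture,
# df = 1 is a standard table value. NOT a complete chi-square table.
CRITICAL_05 = {1: 3.841, 8: 15.507, 24: 36.415}

def crosstab_df(n_rows, n_cols):
    """df = (#rows - 1)(#columns - 1)."""
    return (n_rows - 1) * (n_cols - 1)

def reject_null(chi_square, df, critical=CRITICAL_05):
    """Reject H0 when the total chi square exceeds the critical value for this df."""
    return chi_square > critical[df]

df = crosstab_df(9, 4)
print(df)                       # 24
print(reject_null(43.260, df))  # True
```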

Interpreting Chi Square

Or you can be lazy and use the old standby:

if the significance is less than 0.050, reject the null hypothesis

if the significance is less than 0.050, reject the null hypothesis

if the significance is less than 0.050, reject the null hypothesis

if the significance is less than 0.050, reject the null hypothesis

33
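The significance SPSS reports is the upper-tail probability of the chi-square distribution at the observed value. For an even df there is a closed form, e^(-x/2) times the sum over k = 0 to df/2 - 1 of (x/2)^k / k!, so a standard-library-only Python sketch can reproduce the .009 for the region by age group table. For odd df you would need the incomplete gamma function instead (for example `scipy.stats.chi2.sf`); the function name below is illustrative.

```python
import math

def chi_square_sf_even_df(x, df):
    """Upper-tail probability P(chi-square >= x), valid only for EVEN df.

    Uses the closed form exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)**k / k!.
    """
    if df <= 0 or df % 2:
        raise ValueError("this shortcut only works for positive even df")
    half = x / 2
    term, total = 1.0, 1.0          # the k = 0 term
    for k in range(1, df // 2):
        term *= half / k            # builds (x/2)**k / k! incrementally
        total += term
    return math.exp(-half) * total

p = chi_square_sf_even_df(43.260, 24)
print(round(p, 3))   # 0.009
print(p < 0.050)     # True -> reject the null hypothesis
```

The same function gives about 0.050 for 36.415 at df = 24 and for 15.507 at df = 8, which is why those numbers are the 0.050-level critical values used in this lecture.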

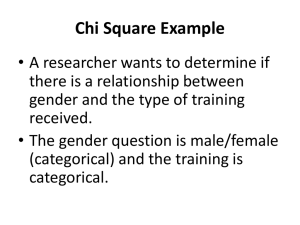

Chi Square Example

Open data set “GSS91 political.sav”

Use Analyze / Descriptive Statistics / Crosstabs...

Set the Row(s) as “region”, and the Column(s) as “agegroup”

Click on “Statistics…” and select the “Chi-square” test

Notice we’re still using the Crosstabs command!

34

Chi Square Example

Chi-Square Tests

| | Value | df | Asymp. Sig. (2-sided) |
|---|---|---|---|
| Pearson Chi-Square | 43.260ᵃ | 24 | .009 |
| Likelihood Ratio | 43.557 | 24 | .009 |
| Linear-by-Linear Association | 1.062 | 1 | .303 |
| N of Valid Cases | 1500 | | |

a. 0 cells (.0%) have expected count less than 5. The minimum expected count is 5.69.

35

Chi Square Example

Note that we correctly predicted the ‘df’ value of 24

SPSS is ready to warn you if too many cells have an expected count below five, or any cell has an expected count below one

The significance (.009) is below 0.050, indicating we reject the null hypothesis

The total Chi square for all cells is 43.260

36

Chi Square Example

The critical Chi square value can be looked up on page 42/43 of Yonker

For df = 24 and significance level 0.050, we get a critical Chi square of 36.415

Since the actual Chi square (43.260) is greater than the critical value (36.415), reject the null hypothesis

With large sample sizes, Chi square tends to show significance even when the relationship is trivially weak (hence the earlier warning)

37

Chi Square Example

What are the other tests? They don’t apply here...

The Likelihood Ratio test is specifically for loglinear models

The Linear-by-Linear Association test is a function of Pearson’s ‘r’, so it only applies to interval or ratio scale variables

Notice that SPSS doesn’t realize those tests don’t apply, and blindly presents results for them…

38

One-variable Chi square Test

To check only one variable’s distribution, there is another way to run Chi square

Null hypothesis is that the variable is evenly distributed across all of its categories

Hence all expected frequencies are equal for each category, unless you specify otherwise

Expected range can also be specified

39

Other Chi square Example

Use Analyze / Nonparametric Tests / Chi-square…

NOT using the Crosstabs command here

Add “region” to the Test Variable List

Now df is the number of categories in the variable, minus one

df = (# categories) - 1

Significance is interpreted the same way

40
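For the one-variable test every expected frequency is N divided by the number of categories. This Python sketch uses the region totals from the slide 21 crosstab (N = 1500) and reproduces the SPSS output that follows: a chi square of 290.352 with df = 8 and a minimum expected frequency of 166.7. The optional `expected` argument is an assumption of this sketch, standing in for specifying your own expected values.

```python
def goodness_of_fit(observed, expected=None):
    """One-variable chi square. By default H0 says every category is equally
    likely, so each expected frequency is N / (number of categories)."""
    n = sum(observed)
    k = len(observed)
    if expected is None:
        expected = [n / k] * k
    chi2 = sum((fo - fe) ** 2 / fe for fo, fe in zip(observed, expected))
    return chi2, k - 1, min(expected)

# Cases per region (row totals of the slide 21 crosstab, N = 1500)
region_counts = [90, 203, 255, 122, 297, 112, 128, 79, 214]
chi2, df, min_fe = goodness_of_fit(region_counts)
print(round(chi2, 3), df, round(min_fe, 1))   # 290.352 8 166.7
```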

Other Chi square Example

Test Statistics

| | REGION OF INTERVIEW |
|---|---|
| Chi-Squareᵃ | 290.352 |
| df | 8 |
| Asymp. Sig. | .000 |

a. 0 cells (.0%) have expected frequencies less than 5. The minimum expected cell frequency is 166.7.

41

Other Chi square Example

So in this case, the “region” variable has nine categories, for a df of 9-1 = 8

The critical Chi square for df = 8 is 15.507, so the actual value of 290.352 shows these data are not evenly distributed across regions

A significance below 0.050 still, in keeping with our fine long-established tradition, rejects the null hypothesis

42

Whodunit?

The chi-square value by itself doesn’t tell us which of the cells are major contributors to the statistical significance

We compute the standardized residual to address that issue

This hints at which cells contribute a lot to the total chi square

43

Residuals

The Residual is the Observed value minus the Expected value for some data point

Residual = fo - fe

If this variable is evenly distributed, the Residuals should have a normal distribution

Plots of residuals are sometimes used to check data normality (i.e. how normal is this data’s distribution?)

44

Standardized Residual

The Standardized Residual is the Residual divided by an estimate of its standard deviation; for a crosstab cell that estimate is the square root of the expected frequency, giving (fo - fe)/sqrt(fe)

When the absolute value of the Standardized Residual for a cell is greater than 2, you may conclude that it is a major contributor to the overall chi-square value

Analogous to the original t test, looking for |t| > 2

45

Standardized Residual

Extreme values of the Standardized Residual (e.g. minimum, maximum) can also help identify extreme data points

The meaning of residual is the same for regression analysis, BTW, where residuals are an optional output

46

Standardized Residual Example

In the crosstab region-agegroup example, click “Cells…” and select Standardized Residuals

In this case, the worst cell is the combination of the W. Nor. Central region and Age Group 4, which produced a standardized residual of 2.1

47

Standardized Residual Example

REGION OF INTERVIEW * Age Group Crosstabulation (columns are Age Group)

| REGION OF INTERVIEW | | 1.00 | 2.00 | 3.00 | 4.00 | Total |
|---|---|---|---|---|---|---|
| NEW ENGLAND | Count | 25 | 40 | 17 | 8 | 90 |
| | Std. Residual | 1.1 | .2 | -1.6 | .6 | |
| MIDDLE ATLANTIC | Count | 36 | 89 | 66 | 12 | 203 |
| | Std. Residual | -1.4 | .2 | 1.3 | -.7 | |
| E. NOR. CENTRAL | Count | 56 | 115 | 71 | 13 | 255 |
| | Std. Residual | -.1 | .6 | .1 | -1.3 | |
| W. NOR. CENTRAL | Count | 29 | 41 | 37 | 15 | 122 |
| | Std. Residual | .3 | -1.6 | .6 | 2.1 | |
| SOUTH ATLANTIC | Count | 66 | 115 | 95 | 21 | 297 |
| | Std. Residual | .0 | -1.1 | 1.4 | -.1 | |
| E. SOU. CENTRAL | Count | 15 | 57 | 30 | 10 | 112 |
| | Std. Residual | -2.0 | 1.3 | -.2 | .7 | |
| W. SOU. CENTRAL | Count | 38 | 55 | 27 | 8 | 128 |
| | Std. Residual | 1.8 | .0 | -1.4 | -.4 | |
| MOUNTAIN | Count | 22 | 24 | 24 | 9 | 79 |
| | Std. Residual | 1.0 | -1.7 | .5 | 1.4 | |
| PACIFIC | Count | 48 | 106 | 48 | 12 | 214 |
| | Std. Residual | .0 | 1.5 | -1.5 | -.9 | |
| Total | Count | 335 | 642 | 415 | 108 | 1500 |

48
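The Std. Residual values in the table above match (fo - fe)/sqrt(fe) for each cell. A Python sketch that recomputes them from the counts and flags cells whose absolute value exceeds 2 (names are illustrative):

```python
import math

regions = [
    "NEW ENGLAND", "MIDDLE ATLANTIC", "E. NOR. CENTRAL", "W. NOR. CENTRAL",
    "SOUTH ATLANTIC", "E. SOU. CENTRAL", "W. SOU. CENTRAL", "MOUNTAIN", "PACIFIC",
]
# Observed counts, columns = Age Group 1-4
observed = [
    [25, 40, 17, 8], [36, 89, 66, 12], [56, 115, 71, 13],
    [29, 41, 37, 15], [66, 115, 95, 21], [15, 57, 30, 10],
    [38, 55, 27, 8], [22, 24, 24, 9], [48, 106, 48, 12],
]

def standardized_residuals(obs):
    """(fo - fe) / sqrt(fe) for each cell, with fe = row total * column total / N."""
    row_totals = [sum(row) for row in obs]
    col_totals = [sum(col) for col in zip(*obs)]
    n = sum(row_totals)
    result = []
    for i, row in enumerate(obs):
        out = []
        for j, fo in enumerate(row):
            fe = row_totals[i] * col_totals[j] / n
            out.append((fo - fe) / math.sqrt(fe))
        result.append(out)
    return result

res = standardized_residuals(observed)
# Cells with |standardized residual| > 2 are major contributors to chi square
flagged = [
    (regions[i], j + 1, round(r, 1))
    for i, row in enumerate(res)
    for j, r in enumerate(row)
    if abs(r) > 2
]
print(flagged)   # [('W. NOR. CENTRAL', 4, 2.1), ('E. SOU. CENTRAL', 1, -2.0)]
```

Besides the W. Nor. Central / Age Group 4 cell (about 2.097), the E. Sou. Central / Age Group 1 cell comes out at about -2.002, just past the cutoff, which SPSS displays as -2.0. Squaring these residuals and adding them up gives back the total chi square of 43.260.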

Crosstab Statistics for 2x2 Table

2x2 tables appear so often that many tests have been developed specifically for them:

Equality of proportions

McNemar Chi-square

Yates Correction

Fisher Exact Test

49

Crosstab Statistics for 2x2 Table

Equality of proportions tests check whether the proportion for one variable is the same across two different values of another variable

e.g. Do homeowners vote as often as renters?

McNemar Chi-square tests for frequencies in a 2x2 table where samples are dependent (such as pre-test and post-test results)

50

Crosstab Statistics for 2x2 Table

Yates Correction for Continuity: chi-square is refined for small observed frequencies

Chi square per cell = (|fo - fe| - 0.5)²/fe

Corrections are too conservative; don’t use!

Fisher Exact Test: assumes row/column frequencies remain fixed, and computes the probability of all possible tables with those totals; gives a significance value like Chi square

51
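For a 2x2 table both refinements are easy to sketch in Python. Using the New Salary * New Height table from slide 23 (9, 7 / 4, 6), the code below computes the ordinary Pearson chi square, the Yates-corrected value (shown for comparison only, given the advice above), and a two-sided Fisher exact p-value that enumerates every table with the same row and column totals. Counting tables "no more likely than the observed one" as the two-sided region is one common convention, assumed here; the function names are illustrative.

```python
from math import comb

def chi_square_2x2(a, b, c, d, yates=False):
    """Pearson chi square for the 2x2 table [[a, b], [c, d]].
    With yates=True each cell uses (|fo - fe| - 0.5)^2 / fe instead.
    (Simplification: no guard for |fo - fe| < 0.5, which does not occur here.)"""
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    total = 0.0
    for (i, j), fo in zip([(0, 0), (0, 1), (1, 0), (1, 1)], (a, b, c, d)):
        fe = rows[i] * cols[j] / n
        diff = abs(fo - fe) - 0.5 if yates else fo - fe
        total += diff ** 2 / fe
    return total

def fisher_exact_two_sided(a, b, c, d):
    """Fisher exact test: with row and column totals fixed, enumerate every
    possible table and add the probabilities of those no more likely than
    the observed one (hypergeometric probabilities)."""
    r1, r2, c1 = a + b, c + d, a + c
    n = r1 + r2

    def prob(x):
        # Probability that the top-left cell equals x, given the fixed totals
        return comb(r1, x) * comb(r2, c1 - x) / comb(n, c1)

    p_obs = prob(a)
    lo, hi = max(0, c1 - r2), min(r1, c1)
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-9))

# New Salary (Cheap, Expensive) * New Height (Dwarves, Giants) from slide 23
a, b, c, d = 9, 7, 4, 6
print(round(chi_square_2x2(a, b, c, d), 4))              # 0.65
print(round(chi_square_2x2(a, b, c, d, yates=True), 4))  # 0.1625
print(round(fisher_exact_two_sided(a, b, c, d), 3))      # 0.688
```

Note how much the Yates correction shrinks the chi square for this small table (0.65 to about 0.16), which illustrates why the slide calls it too conservative.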

Nominal Measures of Association

Are used to test whether each measure is zero (the null hypothesis), each on its own scale:

Phi

Cramer’s V

Contingency Coefficient

All three are zero iff Chi square is zero

“iff” is mathspeak for ‘if and only if’

52

Nominal Measures of Association

The usual Significance criterion is used for all three

If significance < 0.050, reject the null hypothesis; hence the association is significant

Notice that direction is meaningless for nominal variables, so only the strength of an association can be determined

53

Phi

For a 2x2 table, Phi and Cramer’s V are equal to the magnitude of Pearson’s r (computed on the two variables coded 0/1)

For tables larger than 2x2, Phi (φ) can be > 1, making it an unusual measure of association

Phi = sqrt[ (Chi square) / N ]

Phi = 0 means no association

Phi near or over 1 means strong association

54
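The 2x2 claim can be checked numerically. This Python sketch takes the slide 23 New Salary * New Height table, computes Phi as sqrt(chi square / N), and compares it with Pearson’s r computed on the same 26 people coded 0/1 (Expensive = 1, Giants = 1, a coding chosen here for illustration). The chi square uses the standard 2x2 shortcut N(ad - bc)² / (product of the row and column totals).

```python
import math

# New Salary * New Height (slide 23): a, b = Cheap (Dwarves, Giants); c, d = Expensive
a, b, c, d = 9, 7, 4, 6
n = a + b + c + d

# Pearson chi square via the 2x2 shortcut n(ad - bc)^2 / (row and column totals)
chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
phi = math.sqrt(chi2 / n)   # Phi = sqrt(chi square / N)

def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# One entry per person: salary 0 = Cheap, 1 = Expensive; height 0 = Dwarves, 1 = Giants
salary = [0] * (a + b) + [1] * (c + d)
height = [0] * a + [1] * b + [0] * c + [1] * d
r = pearson_r(salary, height)

print(round(chi2, 3), round(phi, 4), round(r, 4))   # 0.65 0.1581 0.1581
```

With the opposite 0/1 coding for one variable, r would flip sign but keep the same magnitude, which is why Phi matches the magnitude of r.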

Cramer’s V

Cramer’s V ≤ 1

V = sqrt[ Chi Square / (N*(k - 1)) ]

where k is the smaller of the number of columns or rows

Is a better measure than the Contingency Coefficient for tables larger than 2x2

55
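Phi and Cramer’s V for the 9x4 region by age group table, using the SPSS chi square of 43.260 and N = 1500, in a short Python sketch (function names are illustrative). For a 2x2 table k = 2, so V reduces to Phi.

```python
import math

def phi(chi_square, n):
    """Phi = sqrt(chi square / N)."""
    return math.sqrt(chi_square / n)

def cramers_v(chi_square, n, n_rows, n_cols):
    """V = sqrt(chi square / (N * (k - 1))), k = smaller of #rows and #columns."""
    k = min(n_rows, n_cols)
    return math.sqrt(chi_square / (n * (k - 1)))

# Region (9 rows) * Age Group (4 columns): chi square 43.260, N = 1500
print(round(phi(43.260, 1500), 3))              # 0.17
print(round(cramers_v(43.260, 1500, 9, 4), 3))  # 0.098
```

Dividing by k - 1 = 3 is what keeps V at or below 1 for this larger table.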

Contingency Coefficient

a.k.a. C or Pearson’s C or Pearson’s Contingency Coefficient

Most widely used measure based on chi-square

Requires only nominal data

C has a value of 0 when there is no association

56

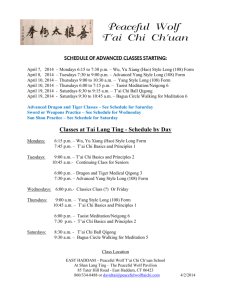

Contingency Coefficient

The max possible value of C is the square root of (the number of columns minus 1, divided by the number of columns)

Cmax = sqrt( (#columns - 1) / #columns )

C = sqrt[ Chi Square / (Chi Square + N) ]

[Figure: Maximum Contingency Coefficient, Cmax, plotted against Number of Columns]

57
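Both formulas on this slide in a short Python sketch, applied to the region by age group table (chi square 43.260, N = 1500, 4 columns); the function names are illustrative. Evaluating Cmax for a few column counts, from about 0.71 at 2 columns upward, traces the curve in the figure.

```python
import math

def contingency_coefficient(chi_square, n):
    """C = sqrt(chi square / (chi square + N))."""
    return math.sqrt(chi_square / (chi_square + n))

def c_max(n_columns):
    """Largest possible C, as given on the slide: sqrt((#columns - 1) / #columns)."""
    return math.sqrt((n_columns - 1) / n_columns)

# Region * Age Group: chi square 43.260, N = 1500, 4 columns
print(round(contingency_coefficient(43.260, 1500), 3))   # 0.167
print([round(c_max(k), 3) for k in (2, 4, 14)])          # [0.707, 0.866, 0.964]
```

Because Cmax is below 1 and depends on the table size, a C of 0.167 is easier to judge next to its ceiling of 0.866 for a 4-column table.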