Linear Regression/Correlation

advertisement

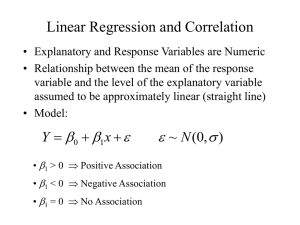

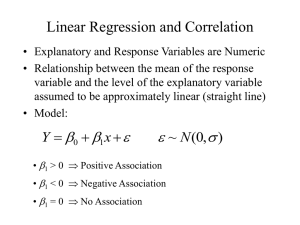

Linear Regression/Correlation • Quantitative Explanatory and Response Variables • Goal: Test whether the level of the response variable is associated with (depends on) the level of the explanatory variable • Goal: Measure the strength of the association between the two variables • Goal: Use the level of the explanatory to predict the level of the response variable Linear Relationships • Notation: – Y: Response (dependent, outcome) variable – X: Explanatory (independent, predictor) variable • Linear Function (Straight-Line Relation): Y = a + b X (Plot Y on vertical axis, X horizontal) – Slope (b): The amount Y changes when X increases by 1 b > 0 Line slopes upward (Positive Relation) b = 0 Line is flat (No linear Relation) b < 0 Line slopes downward (Negative Relation) – Y-intercept (a): Y level when X=0 Example: Service Pricing • Internet History Resources (New South Wales Family History Document Service) • Membership fee: $20A • 20¢ ($0.20A) per image viewed • Y = Total cost of service • X = Number of images viewed a = Cost when no images viewed b = Incremental Cost per image viewed • Y = a + b X = 20+0.20X Example: Service Pricing Total Cost vs Images Viewed www.ihr.com.au 60 cost = 20.00 + 0.20 * im ages R-Square = 1.00 50 cost 40 30 20 0 50 100 images 150 200 Linear Regression Probabilistic Models • In practice, the relationship between Y and X is not “perfect”. Other sources of variation exist. We decompose Y into 2 components: – Systematic Relationship with X: a + b X – Random Error: e • Random respones can be written as the sum of the systematic (also thought of as the mean) and random components: Y = a + b X + e • The (conditional on X) mean response is: E(Y) = a + b X Least Squares Estimation • Problem: a, b are unknown parameters, and must be estimated and tested based on sample data. • Procedure: – – – – ^ Sample n individuals, observing X and Y on each one Plot the pairs Y (vertical axis) versus X (horizontal) Choose the line that “best fits” the data. Criteria: Choose line that minimizes sum of squared vertical distances from observed data points to line. Least Squares Prediction Equation: Y = a bX ( X X )(Y Y ) b= (X X ) 2 a = Y bX Example - Pharmacodynamics of LSD • Response (Y) - Math score (mean among 5 volunteers) • Predictor (X) - LSD tissue concentration (mean of 5 volunteers) • Raw Data and scatterplot of Score vs LSD concentration: 80 70 60 LSD Conc (x) 1.17 2.97 3.26 4.69 5.83 6.00 6.41 50 40 SCORE Score (y) 78.93 58.20 67.47 37.47 45.65 32.92 29.97 30 20 1 2 LSD_CONC Source: Wagner, et al (1968) 3 4 5 6 7 Example - Pharmacodynamics of LSD Score (y) 78.93 58.20 67.47 37.47 45.65 32.92 29.97 350.61 LSD Conc (x) 1.17 2.97 3.26 4.69 5.83 6.00 6.41 30.33 x-xbar -3.163 -1.363 -1.073 0.357 1.497 1.667 2.077 -0.001 y-ybar 28.843 8.113 17.383 -12.617 -4.437 -17.167 -20.117 0.001 Sxx 10.004569 1.857769 1.151329 0.127449 2.241009 2.778889 4.313929 22.474943 Sxy -91.230409 -11.058019 -18.651959 -4.504269 -6.642189 -28.617389 -41.783009 -202.487243 Syy 831.918649 65.820769 302.168689 159.188689 19.686969 294.705889 404.693689 2078.183343 (Column totals given in bottom row of table) 350.61 30.33 Y= = 50.087 X= = 4.333 7 7 202.4872 b= = 9.01 a = Y b X = 50.09 (9.01)( 4.33) = 89.10 22.4749 ^ Y = 89.10 9.01X SPSS Output and Plot of Equation a c i d a i i c c B e i M t E g 1 ( 4 8 6 0 L 9 3 7 4 2 a D Math Score vs LSD Concentration (SPSS) 80.00 Linear Regression 70.00 60.00 score 50.00 40.00 30.00 1.00 2.00 score = 89.12 + -9.01 * lsd_conc R-Square4.00 = 0.88 5.00 3.00 6.00 lsd_conc Example - Retail Sales • U.S. SMSA’s • Y = Per Capita Retail Sales • X = Females per 100 Males Per Capita Retail Sales vs Females per 100 Males Linear Regression 40.00 i a c pcsale s 30.00 d a f f i i c c S B e i E M t g 1 ( 1 3 6 0 20.00 F 3 8 1 9 0 + pcsales =-9.85 0.16 * f100m R-Square 0.08 = 10.00 0.00 50.00 75.00 100.00 f100m 125.00 a D ^ Y = 9.851 0.163 X Residuals • Residuals (aka Errors): Difference between observed values and predicted values: e = Y Y^ • Error sum of squares: ^ SSE = (Y Y ) 2 • Estimate of (conditional) standard deviation of Y: ^ ^ = SSE = n2 2 ( Y Y ) n2 Linear Regression Model • • • • Data: Y = a b X + e Mean: E(Y) = a b X Conditional Standard Deviation: Error terms (e) are assumed to be independent and normally distributed Parameter Estimator b ( X X )(Y Y ) b= (X X ) a a bX a = Y bX a bX 2 ^ ^ = 2 ( Y Y ) n2 Example - Pharmacodynamics of LSD ^ Y = 89.10 9.01X Y X 78.93 58.20 67.47 37.47 45.65 32.92 29.97 1.17 2.97 3.26 4.69 5.83 6.00 6.41 Yhat e=Y-Yhat 78.5583 0.3717 62.3403 -4.1403 59.7274 7.7426 46.8431 -9.3731 36.5717 9.0783 35.04 -2.12 31.3459 -1.3759 e^2 0.138161 17.14208 59.94785 87.855 82.41553 4.4944 1.893101 253.8861 253.8861 SSE = 253.8861 = = 7.13 72 ^ Correlation Coefficient • Slope of the regression describes the direction of association (if any) between the explanatory (X) and response (Y). Problems: – The magnitude of the slope depends on the units of the variables – The slope is unbounded, doesn’t measure strength of association – Some situations arise where interest is in association between variables, but no clear definition of X and Y • Population Correlation Coefficient: r • Sample Correlation Coefficient: r Correlation Coefficient • Pearson Correlation: Measure of strength of linear association: – Does not delineate between explanatory and response variables – Is invariant to linear transformations of Y and X – Is bounded between -1 and 1 (higher values in absolute value imply stronger relation) – Same sign (positive/negative) as slope r= ( X X )(Y Y ) ( X X ) (Y Y ) 2 2 sX = sY b Example - Pharmacodynamics of LSD • Using formulas for standard deviation from beginning of course: sX = 1.935 and sY = 18.611 • From previous calculations: b = -9.01 1.935 r = (9.01) = 0.94 18.611 This represents a strong negative association between math scores and LSD tissue concentration Coefficient of Determination • Measure of the variation in Y that is “explained” by X – Step 1: Ignoring X, measure the total variation in Y (around its mean): 2 TSS = (Y Y ) – Step 2: Fit regression relating Y to X and measure the unexplained variation in Y (around its predicted ^ values): SSE = (Y Y ) 2 – Step 3: Take the difference (variation in Y “explained” by X), and divide by total: TSS SSE 2 r = TSS Example - Pharmacodynamics of LSD TSS = (Y Y ) 2 = 2078.183 ^ SSE = (Y Y ) 2 =253.89 r2 = 2078.183 253.89 = 0.88 = (0.94) 2 2078.183 TSS 80.00 80.00 Mean Linear Regression 70.00 70.00 60.00 score score SSE 60.00 50.00 50.00 Mean = 50.09 40.00 40.00 30.00 1.00 30.00 1.00 2.00 3.00 4.00 lsd_conc 5.00 6.00 score = 89.12 + -9.01 * lsd_conc 2.00 R-Square 3.00 = 0.88 4.00 5.00 6.00 lsd_conc Inference Concerning the Slope (b) • Parameter: Slope in the population model (b) • Estimator: Least squares estimate: b ^ ^ ^ • Estimated standard error: b = (X X ) 2 = sX n 1 • Methods of making inference regarding population: – Hypothesis tests (2-sided or 1-sided) – Confidence Intervals Significance Test for b • 2-Sided Test – H0: b = 0 – HA: b 0 T .S . : tobs = b ^ b P val : 2 P(t | tobs |) • 1-sided Test – H0: b = 0 – HA+: b > 0 or – HA-: b < 0 T .S . : tobs = b ^ b P val : P(t tobs ) P val : P(t tobs ) (1-a)100% Confidence Interval for b ^ ^ b ta / 2,n 2 b b ta / 2,n 2 (X X ) 2 • Conclude positive association if entire interval above 0 • Conclude negative association if entire interval below 0 • Cannot conclude an association if interval contains 0 • Conclusion based on interval is same as 2-sided hypothesis test Example - Pharmacodynamics of LSD ^ 2 ( X X ) = 22.475 n = 7 b = 9.01 = 50.72 = 7.12 7.12 b = = 1.50 22.475 ^ • Testing H0: b = 0 vs HA: b 0 T .S . : tobs 9.01 = = 6.01 1.50 P = 2 P(t | 6.01 |) 0 • 95% Confidence Interval for b : 9.01 2.571(1.50) 9.01 3.86 (12.87,5.15) t.025,5 Analysis of Variance in Regression • Goal: Partition the total variation in y into variation “explained” by x and random variation ^ ^ ( yi y ) = ( yi y i ) ( y i y ) 2 ^ ^ ( y y) = ( y y ) ( y y) 2 i i i 2 i • These three sums of squares and degrees of freedom are: •Total (TSS) dfTotal = n-1 • Error (SSE) dfError = n-2 • Model (SSR) dfModel = 1 Analysis of Variance in Regression Source of Variation Model Error Total Sum of Squares SSR SSE TSS Degrees of Freedom 1 n-2 n-1 Mean Square MSR = SSR/1 MSE = SSE/(n-2) F F = MSR/MSE • Analysis of Variance - F-test • H0: b = 0 HA: b 0 MSR T .S . : Fobs = MSE P val : P( F Fobs ) F represents the F-distribution with 1 numerator and n-2 denominator degrees of freedom Example - Pharmacodynamics of LSD • Total Sum of squares: TSS = ( yi y) 2 = 2078.183 dfTotal = 7 1 = 6 • Error Sum of squares: ^ SSE = ( yi y i ) 2 = 253.890 df Error = 7 2 = 5 • Model Sum of Squares: ^ SSR = ( y i y) 2 = 2078.183 253.890 = 1824.293 df Model = 1 Example - Pharmacodynamics of LSD Source of Variation Model Error Total Sum of Squares 1824.293 253.890 2078.183 Degrees of Freedom 1 5 6 Mean Square 1824.293 50.778 •Analysis of Variance - F-test • H0: b = 0 HA: b 0 MSR T .S . : Fobs = = 35.93 MSE P val : P( F 35.93) = .002 (See next slide) F 35.93 Example - SPSS Output b O m d F S M i a g f a 1 R 2 1 2 8 2 R 1 5 6 T 3 6 a P b D Significance Test for Pearson Correlation • Test identical (mathematically) to t-test for b, but more appropriate when no clear explanatory and response variable • H0: r = 0 Ha: r 0 (Can do 1-sided test) r • Test Statistic: t = obs • P-value: 2P(t|tobs|) (1 r 2 ) /( n 2) Model Assumptions & Problems • Linearity: Many relations are not perfectly linear, but can be well approximated by straight line over a range of X values • Extrapolation: While we can check validity of straight line relation within observed X levels, we cannot assume relationship continues outside this range • Influential Observations: Some data points (particularly ones with extreme X levels) can exert a large influence on the predicted equation.