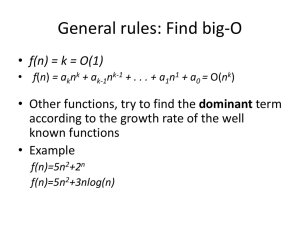

Growth in Equations spring 2011

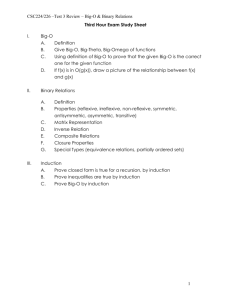

Discrete Structures CISC 2315

Growth of Functions

Introduction

• Once an algorithm is given for a problem and determined to be correct, it is important to determine how much in the way of resources, such as time or space, the algorithm will require.
  – We focus mainly on time in this course.
  – Such an analysis will often allow us to improve our algorithms.

Running Time Analysis

[Figure: the same N data items are given to Algorithm 1, which returns in time $T_1(N)$, and to Algorithm 2, which returns in time $T_2(N)$.]

Analysis of Running Time

[Figure: running time $T(N)$ plotted against the number of input items $N$ for Algorithm 1 and Algorithm 2, with the point $n_0$ marked on the $N$ axis. Which algorithm is better?]

Comparing Algorithms

• We need a theoretical framework upon which we can compare algorithms.
• The idea is to establish a relative order among different algorithms, in terms of their relative rates of growth. The rates of growth are expressed as functions, which are generally in terms of the number/size of inputs $N$.
• We also want to ignore details, e.g., the rate of growth is $N^2$ rather than $3N^2 - 5N + 2$.

NOTE: The text uses the variable $x$. We use $N$ here.

Big-O Notation

• Definition: $T(N) = O(f(N))$ if there are positive constants $c$ and $n_0$ such that $T(N) \le c\,f(N)$ when $N \ge n_0$.

NOTE: We use $|T(N)| \le c\,|f(N)|$ if the functions could be negative. In this class, we will assume they are positive unless stated otherwise.

• This says that function $T(N)$ grows at a rate no faster than $f(N)$; thus $c\,f(N)$ is an upper bound on $T(N)$.
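The definition asks for two witnesses, $c$ and $n_0$. As a minimal sketch (not a proof, since it only tests finitely many values of $N$), the Python below searches for the first $N$ at which a proposed pair of witnesses fails. It uses the function $3N^2 - 5N + 2$ mentioned above; the helper name `first_violation` and the cutoff `n_max` are assumptions made for this illustration.

```python
from typing import Callable, Optional


def first_violation(
    T: Callable[[int], int],
    f: Callable[[int], int],
    c: int,
    n0: int,
    n_max: int,
) -> Optional[int]:
    """Return the smallest N in [n0, n_max] with T(N) > c * f(N), or None.

    A result of None is only evidence that (c, n0) are valid witnesses;
    a proof still needs an inequality that holds for every N >= n0.
    """
    for n in range(n0, n_max + 1):
        if T(n) > c * f(n):
            return n
    return None


def T(n: int) -> int:
    # The detailed running time from the "Comparing Algorithms" slide.
    return 3 * n * n - 5 * n + 2


def f(n: int) -> int:
    # The simplified rate of growth.
    return n * n


print(first_violation(T, f, c=3, n0=1, n_max=10_000))  # None
print(first_violation(T, f, c=2, n0=1, n_max=10_000))  # 5
```

The second call shows only that $c = 2$ with $n_0 = 1$ is a bad choice of witnesses, not that the function fails to be $O(N^2)$; the first call is consistent with $3N^2 - 5N + 2 \le 3N^2$ for $N \ge 1$.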
[Figure: big-O upper bound. Running time $T(N)$ and the bound $c\,f(N)$ plotted against the number of input items $N$, with $n_0$ marked on the $N$ axis.]

Big-O Example

• Prove that $5N^3 + 2N^2 = O(N^3)$.
  – Since $5N^3 + 2N^2 \le 5N^3 + 2N^3 = 7N^3$ (for $N \ge 1$),
  – then $5N^3 + 2N^2 = O(N^3)$ with $c = 7$ and $n_0 = 1$.
• We could also prove that $5N^3 + 2N^2 = O(N^4)$, but the first upper bound is tighter (lower).
• Note that $5N^3 + 2N^2 \ne O(N^2)$. Why? How do you show something is not O(something)?

Another Big-O Example

• Prove that $N^2 + 2N + 1 = O(N^2)$.
  – Since $N^2 + 2N + 1 \le N^2 + 2N^2 + N^2 = 4N^2$ (for $N \ge 1$),
  – then $N^2 + 2N + 1 = O(N^2)$ with $c = 4$ and $n_0 = 1$.
• We could also prove that $N^2 + 2N + 1 = O(N^3)$, but the first bound is tighter.
• Note that $N^2 + 2N + 1 \ne O(N)$. Why?

Why Big-O?

• It gets very complicated comparing the time complexity of algorithms when you have all the details. Big-O gets rid of the details and focuses on the most important part of the comparison.
• Note that big-O gives an upper bound, so we use it here for worst-case analysis.

Same-Order Functions

• Let $f(N) = N^2 + 2N + 1$.
• Let $g(N) = N^2$.
• $g(N) = O(f(N))$ because $N^2 < N^2 + 2N + 1$ for $N \ge 1$.
• $f(N) = O(g(N))$ because:
  – $N^2 + 2N + 1 \le N^2 + 2N^2 + N^2 = 4N^2$ for $N \ge 1$, which is $O(N^2)$ with $c = 4$ and $n_0 = 1$.
• In this case we say that $f(N)$ and $g(N)$ are of the same order.

Simplifying the Big-O

• Theorem 1 (in text):
  – Let $f(N) = a_n N^n + a_{n-1} N^{n-1} + \dots + a_1 N + a_0$.
  – Then $f(N)$ is $O(N^n)$.

Another Big-O Example

• Recall that $N! = N \cdot (N-1) \cdots 3 \cdot 2 \cdot 1$ when $N$ is a positive integer, and $0! = 1$.
• Then $N! = N \cdot (N-1) \cdots 3 \cdot 2 \cdot 1 < N \cdot N \cdots N = N^N$ for $N \ge 2$.
• Therefore, $N! = O(N^N)$. But this is not all we know…
• Taking log of both sides, $\log N! < \log N^N = N \log N$. Therefore $\log N!$ is $O(N \log N)$ ($c = 1$, $n_0 = 2$).

Recall that we assume logs are base 2.

Another Big-O Example

• In Section 3.2 it will be shown that $N < 2^N$.
• Taking the log of both sides, $\log N < N$.
• Therefore $\log N = O(N)$ ($c = 1$ and $n_0 = 1$).

Complexity terminology

| Big-O | Terminology |
|---|---|
| $O(1)$ | Constant complexity |
| $O(\log N)$ | Logarithmic complexity |
| $O(N)$ | Linear complexity |
| $O(N \log N)$ | N log N complexity |
| $O(N^b)$ | Polynomial complexity |
| $O(b^N)$, $b > 1$ | Exponential complexity |
| $O(N!)$ | Factorial complexity |

Growth of Combinations of Functions

• Sum Rule: Suppose $f_1(N)$ is $O(g_1(N))$ and $f_2(N)$ is $O(g_2(N))$. Then $(f_1 + f_2)(N)$ is $O(\max(g_1(N), g_2(N)))$.
• Product Rule: Suppose that $f_1(N)$ is $O(g_1(N))$ and $f_2(N)$ is $O(g_2(N))$. Then $(f_1 f_2)(N)$ is $O(g_1(N)\,g_2(N))$.

Growth of Combinations of Functions

[Figure: Subprocedure 1, $O(g_1(N))$, followed by Subprocedure 2, $O(g_2(N))$.]

• What is the big-O time complexity of running the two subprocedures sequentially?

Theorem 2: If $f_1(N)$ is $O(g_1(N))$ and $f_2(N)$ is $O(g_2(N))$, then $(f_1 + f_2)(N) = O(\max(g_1(N), g_2(N)))$.

What about M not equal to N?

Growth of Combinations of Functions

[Figure: Subprocedure 1, $O(g_1(N))$, followed by Subprocedure 2, $O(g_2(M))$.]

• What is the big-O time complexity of running the two subprocedures sequentially?

Theorem 2: If $f_1(N)$ is $O(g_1(N))$ and $f_2(M)$ is $O(g_2(M))$, then $f_1(N) + f_2(M) = O(g_1(N) + g_2(M))$.

Growth of Combinations of Functions

[Figure: a $2N \times N$ array filled with 1s by the nested loops "for i = 1 to 2N do" ($O(g_1(N))$) and "for j = 1 to N do" ($O(g_2(N))$), whose body is the assignment $a_{ij} := 1$. N steps × 2N steps = $2N^2$ steps.]

• What is the big-O time complexity for nested loops?

Theorem 3: If $f_1(N)$ is $O(g_1(N))$ and $f_2(N)$ is $O(g_2(N))$, then $(f_1 \cdot f_2)(N) = O(g_1(N) \cdot g_2(N))$.

Growth of Combinations of Functions: Example 1

• Give a big-O estimate for $f(N) = 3N \log(N!) + (N^2 + 3) \log N$, where $N$ is a positive integer.
• Solution:
  – First estimate $3N \log(N!)$. Since we know $\log(N!)$ is $O(N \log N)$, and $3N$ is $O(N)$, then from the Product Rule we conclude $3N \log(N!) = O(N^2 \log N)$.
  – Next estimate $(N^2 + 3) \log N$. Since $N^2 + 3 < 2N^2$ when $N > 2$, $N^2 + 3$ is $O(N^2)$. From the Product Rule, we have $(N^2 + 3) \log N = O(N^2 \log N)$.
  – Using the Sum Rule to combine these estimates, $f(N) = 3N \log(N!) + (N^2 + 3) \log N$ is $O(N^2 \log N)$.

Growth of Combinations of Functions: Example 2

• Give a big-O estimate for $f(N) = (N + 1) \log(N^2 + 1) + 3N^2$.
• Solution:
  – First estimate $(N + 1) \log(N^2 + 1)$. We know $N + 1$ is $O(N)$. Also, $N^2 + 1 < 2N^2$ when $N > 1$. Therefore:
    • $\log(N^2 + 1) < \log(2N^2) = \log 2 + \log N^2 = \log 2 + 2 \log N < 3 \log N$, if $N > 2$. We conclude $\log(N^2 + 1) = O(\log N)$.
  – From the Product Rule, we have $(N + 1) \log(N^2 + 1) = O(N \log N)$. Also, we know $3N^2 = O(N^2)$.
  – Using the Sum Rule to combine these estimates, $f(N) = O(\max(N \log N, N^2))$. Since $N \log N < N^2$ for $N > 1$, $f(N) = O(N^2)$.

Big-Omega Notation

• Definition: $T(N) = \Omega(f(N))$ if there are positive constants $c$ and $n_0$ such that $T(N) \ge c\,f(N)$ when $N \ge n_0$.
• This says that function $T(N)$ grows at a rate no slower than $f(N)$; thus $c\,f(N)$ is a lower bound on $T(N)$.

[Figure: big-Omega lower bound. Running time $T(N)$ and the bound $c\,f(N)$ plotted against the number of input items $N$, with $n_0$ marked on the $N$ axis.]

Big-Omega Example

• Prove that $2N + 3N^2 = \Omega(N^2)$.
  – Since $2N + 3N^2 \ge 1 \cdot N^2$ (for $N \ge 1$),
  – then $2N + 3N^2 = \Omega(N^2)$ with $c = 1$ and $n_0 = 1$.
• We could also prove that $2N + 3N^2 = \Omega(N)$, but the first lower bound is tighter (higher).
• Note that $2N + 3N^2 \ne \Omega(N^3)$.

Another Big-Omega Example

• Prove that $\sum_{i=1}^{N} i = \Omega(N^2)$.
  – Since $\sum_{i=1}^{N} i = N(N+1)/2 \ge N^2/2$ (for $N \ge 1$),
  – then $\sum_{i=1}^{N} i = \Omega(N^2)$ with $c = 1/2$ and $n_0 = 1$.
• We could also prove that $\sum_{i=1}^{N} i = \Omega(N)$, but the first bound is tighter.
• Note that $\sum_{i=1}^{N} i \ne \Omega(N^3)$.

Big-Theta Notation

• Definition: $T(N) = \Theta(f(N))$ if and only if $T(N) = O(f(N))$ and $T(N) = \Omega(f(N))$. We say that $T(N)$ is of order $f(N)$.
  – This says that function $T(N)$ grows at the same rate as $f(N)$.
• Put another way: $T(N) = \Theta(f(N))$ if there are positive constants $c$, $d$, and $n_0$ such that $c\,f(N) \le T(N) \le d\,f(N)$ when $N \ge n_0$.

Big-Theta Example

• Show that $3N^2 + 8N \log N$ is $\Theta(N^2)$.
  – $0 < 8N \log N < 8N^2$, and so $3N^2 + 8N \log N < 11N^2$ for $N > 1$.
  – Therefore $3N^2 + 8N \log N$ is $O(N^2)$.
  – Clearly $3N^2 + 8N \log N$ is $\Omega(N^2)$, since $3N^2 + 8N \log N \ge 3N^2$ for $N \ge 1$.
  – We conclude that $3N^2 + 8N \log N$ is $\Theta(N^2)$.

A Hierarchy of Growth Rates

$c < \log N < \log^2 N < \log^k N < N < N \log N < N^2 < N^3 < 2^N < 3^N < N! < N^N$

If $f(N)$ is $O(x)$ for $x$ one of the above, then it is $O(y)$ for any $y > x$ in the above ordering. But the higher bounds are not tight. We prefer tighter bounds.
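The ordering in the hierarchy only has to hold once $N$ is large enough. As a rough numerical spot check (not a proof), the sketch below evaluates twelve functions, read here as $c$, $\log N$, $\log^2 N$, $\log^k N$, $N$, $N \log N$, $N^2$, $N^3$, $2^N$, $3^N$, $N!$, $N^N$, and tests whether the values are strictly increasing at a given $N$. The choices $c = 1$ and $k = 3$, and the function names, are assumptions made for this illustration.

```python
import math
from typing import List, Tuple, Union

Number = Union[int, float]


def hierarchy_values(n: int, c: int = 1, k: int = 3) -> List[Tuple[str, Number]]:
    """Evaluate each function of the hierarchy at N = n (logs are base 2)."""
    log_n = math.log2(n)
    return [
        ("c", c),
        ("log N", log_n),
        ("log^2 N", log_n ** 2),
        ("log^k N", log_n ** k),
        ("N", n),
        ("N log N", n * log_n),
        ("N^2", n ** 2),
        ("N^3", n ** 3),
        ("2^N", 2 ** n),             # exact Python integers from here on
        ("3^N", 3 ** n),
        ("N!", math.factorial(n)),
        ("N^N", n ** n),
    ]


def is_increasing(n: int, c: int = 1, k: int = 3) -> bool:
    """True if the hierarchy values at N = n are strictly increasing."""
    values = [value for _, value in hierarchy_values(n, c, k)]
    return all(a < b for a, b in zip(values, values[1:]))


print(is_increasing(8))     # False: log^3 8 = 27 is larger than N = 8
print(is_increasing(1024))  # True
```

The failure at $N = 8$ is a reminder that the hierarchy describes behavior for $N \ge n_0$, where $n_0$ depends on the functions being compared.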
Note that if the hierarchy states that $x < y$, then for any expression $z > 0$, $z \cdot x < z \cdot y$.

Complexity of Algorithms vs Problems

• We have been talking about polynomial, linear, logarithmic, or exponential time complexity of algorithms.
• But we can also talk about the time complexity of problems. Example decision problems:
  – Let $a$, $b$, and $c$ be positive integers. Is there a positive integer $x < c$ such that $x^2 \equiv a \pmod{b}$?
  – Does there exist a truth assignment for all variables that can satisfy a given logical expression?

Complexity of Problems

• A problem that can be solved by a deterministic algorithm with polynomial (or better) worst-case time complexity is called tractable. Problems that are not tractable are intractable.
• P is the set of all problems solvable in polynomial time (tractable problems).
• NP is the set of all problems whose proposed solutions can be checked in polynomial time. Every problem in P is also in NP; for many problems in NP, no deterministic polynomial-time algorithm is known.
• Open question: is P = NP?

Complexity of Problems (cont’d)

• Unsolvable problem: A problem that cannot be solved by any algorithm. Example:
  – Halting Problem: given a program and an input, will the program halt on that input? If we try running the program on the input, and it keeps running, how do we know if it will ever stop?
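To make the difference between solving and checking concrete, the sketch below returns to the first decision problem above ($x^2 \equiv a \pmod{b}$ with $0 < x < c$). Checking one proposed $x$ takes a single multiplication and remainder, while the naive search tries up to $c - 1$ candidates, which is exponential in the number of digits of $c$. The function names are hypothetical, and the brute-force loop is only the most obvious method; the sketch makes no claim about whether a faster algorithm exists.

```python
from typing import Optional


def is_valid_witness(x: int, a: int, b: int, c: int) -> bool:
    """Check a proposed solution: 0 < x < c and x^2 = a (mod b)."""
    return 0 < x < c and (x * x - a) % b == 0


def find_witness(a: int, b: int, c: int) -> Optional[int]:
    """Naive search: try every candidate x = 1, 2, ..., c - 1 in turn.

    The loop runs up to c - 1 times, so its cost grows with the value
    of c rather than with the number of digits needed to write c down.
    """
    for x in range(1, c):
        if is_valid_witness(x, a, b, c):
            return x
    return None


print(find_witness(4, 7, 10))  # 2, since 2 * 2 = 4
print(find_witness(3, 7, 7))   # None: no x below 7 squares to 3 mod 7
```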