Architectural Issues

Psycholinguistics II

Session #1

Last Semester

• Abstraction

– Categories in speech perception

– Neural network models and symbolic representations

– Lexical access

– Language acquisition and learnability

This Semester

• Architecture

– Task-specific segregation of mechanisms

– Information-specific segregation of mechanisms

Jackendoff (2002), Foundations of Language

Sound Interface

Meaning Interface

Surface structure

Semantics

Syntax

Phonology

Deep structure

Jackendoff (2002)

• Standard architecture

– “begins with phrase structure composition and lexical insertion,

and branches outward to phonology and semantics”

– “quite at odds with the logical directionality of processing, where

speech perception has to get from sounds to meaning, and speech

production has to get from meanings to sounds”

Sound Interface

Meaning Interface

Surface structure

Deep structure

Jackendoff (2002)

• Parallel architecture

– “logically non-directional: one can start with any piece of structure in any component and pass along logical pathways provided by the constraints to construct a coherent larger structure around it […] Because the grammar is logically non-directional, it is not inherently biased toward either perception or production – unlike the syntactocentric architecture, which is inherently biased against both.”

– “possible to describe the logic of processing in terms isomorphic to the rule types in the parallel grammar”

[Diagram: Phonology, Syntax, Semantics]

• Why might we want this…?

Chomsky (1965)

• “Linguistic theory is concerned primarily with an ideal speaker-listener

[…] who knows its language perfectly, and is unaffected by such

grammatically irrelevant conditions as memory limitations,

distractions, shifts of attention, and interest, and errors (random or

characteristic) in applying his knowledge of language in actual

performance. […]

We thus make a fundamental distinction between competence (the

speaker-hearer’s knowledge of his language) and performance (the

actual use of language in concrete situations). Only under the

idealization set forth in the preceding paragraph is performance a

direct reflection of competence.” (pp. 3-4)

• “When we say that a sentence has a certain derivation with respect to a

particular generative grammar, we say nothing about how the speaker

or hearer might proceed, in some practical or efficient way, to

construct such a derivation. These questions belong to the theory of

language use - the theory of performance.” (p. 9)

Chomsky & Lasnik (1993)

• “It has sometimes been argued that linguistic theory must meet the

empirical condition that it account for the ease and rapidity of parsing.

But parsing does not, in fact, have these properties. […] In general, it

is not the case that language is readily usable or ‘designed for use.’” (p.

18)

Competence

• Metaphor

• Real system

– Derivations

– Constraints

– Feeding relations among syntax, semantics, phonology

The Standard View

Standard View

arithmetic

324 + 697 = ? (specialized algorithm)

217 x 32 = ? (specialized algorithm)

? something deeper

Standard View

language

speaking (specialized algorithm)

understanding (specialized algorithm)

– well-adapted to real-time operation, but maybe inaccurate

grammatical knowledge, competence: recursive characterization of well-formed expressions

– precise, but ill-adapted to real-time operation

Origins of Standard View

Townsend & Bever (2001, ch. 2)

• “Linguists made a firm point of insisting that, at most, a

grammar was a model of competence - that is, what the

speaker knows. This was contrasted with effects of

performance, actual systems of language behaviors such as

speaking and understanding. Part of the motive for this

distinction was the observation that sentences can be

intuitively ‘grammatical’ while being difficult to

understand, and conversely.”

Townsend & Bever (2001, ch. 2)

• “…Despite this distinction the syntactic model had great

appeal as a model of the processes we carry out when we

talk and listen. It was tempting to postulate that the theory

of what we know is a theory of what we do, thus answering

two questions simultaneously.

1. What do we know when we know a language?

2. What do we do when we use what we know?

Townsend & Bever (2001, ch. 2)

• “…It was assumed that this knowledge is linked to

behavior in such a way that every syntactic operation

corresponds to a psychological process. The hypothesis

linking language behavior and knowledge was that they are

identical.”

Note:

(i) No indication of how psychological processes realized

(ii) No commitment on what syntactic operations count

(iii) No claim that syntactic processes are the only processes

in language comprehension.

Miller & Chomsky (1963)

• ‘The psychological plausibility of a transformational model of the

language user would be strengthened, of course, if it could be shown

that our performance on tasks requiring an appreciation of the structure

of transformed sentences is some function of the nature, number and

complexity of the grammatical transformations involved.’ (Miller &

Chomsky 1963: p. 481)

Miller (1962)

1. Mary hit Mark.               K(ernel)

2. Mary did not hit Mark.       N

3. Mark was hit by Mary.        P

4. Did Mary hit Mark?           Q

5. Mark was not hit by Mary.    NP

6. Didn’t Mary hit Mark?        NQ

7. Was Mark hit by Mary?        PQ

8. Wasn’t Mark hit by Mary?     PNQ

Miller (1962)

Transformational

Cube
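The cube can be read as a small data structure: each corner is a sentence type, and each edge adds or removes one transformation (N, P or Q). The following is a minimal sketch under that reading; the helper name `cube_distance` and the choice to count distance as the number of differing transformations are assumptions made for illustration, not something stated on the slide.

```python
# Each sentence type is the set of optional transformations applied to the kernel.
# N = negative, P = passive, Q = question (labels taken from the Miller 1962 slide).
SENTENCE_TYPES = {
    "K": frozenset(),
    "N": frozenset("N"),
    "P": frozenset("P"),
    "Q": frozenset("Q"),
    "NP": frozenset("NP"),
    "NQ": frozenset("NQ"),
    "PQ": frozenset("PQ"),
    "PNQ": frozenset("PNQ"),
}


def cube_distance(a, b):
    """Number of cube edges separating two sentence types (hypothetical helper)."""
    # The symmetric difference holds the transformations present in one type only.
    return len(SENTENCE_TYPES[a] ^ SENTENCE_TYPES[b])


print(cube_distance("K", "P"))    # one edge: add the passive
print(cube_distance("N", "P"))    # two edges: remove N, add P
print(cube_distance("K", "PNQ"))  # opposite corners of the cube
```

On this reading, a DTC-style prediction is that the time to convert one sentence type into another grows with `cube_distance`.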

Townsend & Bever (2001, ch. 2)

• “The initial results were breathtaking. The amount of time it takes to

produce a sentence, given another variant of it, is a function of the

distance between them on the sentence cube. (Miller & McKean

1964).”

“…It is hard to convey how exciting these developments were. It

appeared that there was to be a continuing direct connection between

linguistic and psychological research. […] The golden age had

arrived.”

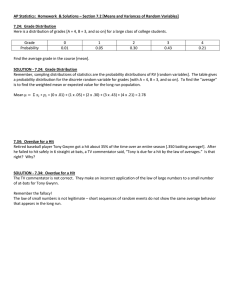

Derivational Theory of Complexity

• Miller & McKean (1964): Matching sentences with the same meaning or ‘kernel’

• Joe warned the old woman. / The old woman was warned by Joe. (K, P): 1.65s

• Joe warned the old woman. / Joe didn’t warn the old woman. (K, N): 1.40s

• Joe warned the old woman. / The old woman wasn’t warned by Joe. (K, PN): 3.12s
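The appeal of these numbers is their near-additivity. A short check, assuming the three times on the slide pair with the three sentence pairs in the order shown (passive, negative, passive-negative); the variable names are illustrative only.

```python
# Matching times read off the slide, in seconds.
observed = {"P": 1.65, "N": 1.40, "PN": 3.12}

# Additive prediction: a passive-negative costs one passive plus one negative.
predicted_pn = observed["P"] + observed["N"]
residual = observed["PN"] - predicted_pn

print(f"predicted {predicted_pn:.2f}s, observed {observed['PN']:.2f}s, "
      f"residual {residual:.2f}s")
```

The small residual is the kind of result that made a one-transformation-one-processing-step linking hypothesis look attractive.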

McMahon (1963)

a. i. seven precedes thirteen                K (true)
   ii. thirteen precedes seven               K (false)

b. i. thirteen is preceded by seven          P (true)
   ii. seven is preceded by thirteen         P (false)

c. i. thirteen does not precede seven        N (true)
   ii. seven does not precede thirteen       N (false)

d. i. seven is not preceded by thirteen      PN (true)
   ii. thirteen is not preceded by seven     PN (false)

Easy Transformations

• Passive

– The first shot the tired soldier the mosquito bit fired missed.

– The first shot fired by the tired soldier bitten by the mosquito missed.

• Heavy NP Shift

– I gave a complete set of the annotated works of H.H. Munro to Felix.

– I gave to Felix a complete set of the annotated works of H.H. Munro.

• Full Passives

– Fido was kissed (by Tom).

• Adjectives

– The {red house/house which is red} is on fire.

Failure of DTC?

• Any DTC-like prediction is contingent on a particular

theory of grammar, which may be wrong

• It’s not surprising that transformations are not the only

contributor to perceptual complexity

– memory demands, may increase or decrease

– ambiguity, where grammar does not help

– difficulty of access

Townsend & Bever (2001, ch. 2)

• “Alas, it soon became clear that either the linking

hypothesis was wrong, or the grammar was wrong, or

both.”

Townsend & Bever (2001, ch. 2)

• “The moral of this experience is clear. Cognitive science

made progress by separating the question of what people

understand and say from how they understand and say it.

The straightforward attempt to use the grammatical model

directly as a processing model failed. The question of what

humans know about language is not only distinct from how

children learn it, it is distinct from how adults use it.”

Received Wisdom (ca. 1974)

• Surface structures - YES

• Deep structures - MAYBE

• Transformations - NO

• Real-time operations - ROUGH-AND-READY

e.g. NP V (NP) --> subj. verb object
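A rough-and-ready strategy of this kind can be sketched as a template match over category labels, with no tree building at all. This is only an illustration: the function name, the input format, and the assumption that the words arrive already tagged are all hypothetical.

```python
def canonical_sentence_strategy(tagged):
    """Map an NP V (NP) sequence to grammatical roles without building a tree.

    `tagged` is a list of (phrase, category) pairs; the tagging is assumed
    to be given and is not part of the heuristic itself.
    """
    categories = [cat for _, cat in tagged]
    if categories == ["NP", "V", "NP"]:
        roles = ["subject", "verb", "object"]
    elif categories == ["NP", "V"]:
        roles = ["subject", "verb"]
    else:
        return None  # the template does not match, so the heuristic is silent
    return {role: phrase for role, (phrase, _) in zip(roles, tagged)}


print(canonical_sentence_strategy([("Mary", "NP"), ("hit", "V"), ("Mark", "NP")]))
```

The template is fast because it ignores everything except surface order, which is also why it can go wrong whenever surface order and grammatical roles come apart.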

Implication

• If the standard view is correct, then there is little hope of a

testable, unified model

• Very difficult to make precise predictions about the nature

& timing of linguistic computations

• But there were good arguments for the standard,

fractionated architecture…

Motivations for Separation

1. Real-time grammar

2. Accuracy of parsing

3. Parser-specific mechanisms

4. Grammaticality ≠ Parsability

5. Parsing ≠ Production

6. ‘Derivational Theory of Complexity’

7. Competence/Performance Confusion

(see Fodor, Bever & Garrett, 1974; Levelt, 1974; Fillenbaum, 1971)

Grammars, 1960s Style

A Simple Derivation

S (starting axiom)

1. S → NP VP

2. VP → V NP

3. NP → D N

4. N → Bill

5. V → hit

6. D → the

7. N → ball

Resulting tree: [S [NP Bill] [VP [V hit] [NP [D the] [N ball]]]]
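The derivation can be replayed as a sequence of rewrites on a list of symbols, starting from the axiom S. This is a minimal sketch, not a general generator: the helper names are hypothetical, each step states which occurrence of the symbol it rewrites, and the subject is introduced by NP → Bill to match the tree on the slide (the rule list itself writes step 4 as a rewrite of N).

```python
def rewrite(form, lhs, rhs, which="first"):
    """Replace one occurrence of the nonterminal `lhs` in `form` with `rhs`."""
    positions = [i for i, sym in enumerate(form) if sym == lhs]
    if not positions:
        raise ValueError(f"{lhs} does not occur in {form}")
    i = positions[0] if which == "first" else positions[-1]
    return form[:i] + rhs + form[i + 1:]


# Steps in slide order: (symbol rewritten, replacement, occurrence rewritten).
STEPS = [
    ("S", ["NP", "VP"], "first"),
    ("VP", ["V", "NP"], "first"),
    ("NP", ["D", "N"], "last"),    # expands the object NP
    ("NP", ["Bill"], "first"),     # assumption: NP -> Bill, as in the drawn tree
    ("V", ["hit"], "first"),
    ("D", ["the"], "first"),
    ("N", ["ball"], "first"),
]


def derive(steps, start="S"):
    """Return every sentential form, from the axiom to the terminal string."""
    form = [start]
    history = [form]
    for lhs, rhs, which in steps:
        form = rewrite(form, lhs, rhs, which)
        history.append(form)
    return history


for form in derive(STEPS):
    print(" ".join(form))
```

Each printed line corresponds to one build of the slide, ending in the terminal string.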

Reverse the derivation...

A Simple Derivation (reversed)

Same rules 1-7, now applied from the words upward as each word arrives:

Bill

[NP Bill]

[NP Bill] hit

[NP Bill] [V hit]

[NP Bill] [V hit] the

[NP Bill] [V hit] [D the]

[NP Bill] [V hit] [D the] ball

[NP Bill] [V hit] [D the] [N ball]

[NP Bill] [V hit] [NP [D the] [N ball]]

[NP Bill] [VP [V hit] [NP [D the] [N ball]]]

[S [NP Bill] [VP [V hit] [NP [D the] [N ball]]]]
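Running the derivation in reverse amounts to a bottom-up procedure: take in a word, then replace any right-hand side found at the edge of the material built so far with its left-hand side. The sketch below uses a greedy shift-reduce loop as a stand-in for that procedure; the function name is hypothetical, and NP → Bill is again an assumption made to match the tree drawn on the slide.

```python
# Rules as (left-hand side, right-hand side) pairs.
RULES = [
    ("S", ("NP", "VP")),
    ("VP", ("V", "NP")),
    ("NP", ("D", "N")),
    ("NP", ("Bill",)),   # assumption: matches the drawn tree
    ("V", ("hit",)),
    ("D", ("the",)),
    ("N", ("ball",)),
]


def shift_reduce(words):
    """Greedy bottom-up recognition; returns the final stack and every step."""
    stack, trace = [], []
    for word in words:
        stack.append(word)                      # shift the next word
        trace.append(list(stack))
        reduced = True
        while reduced:                          # reduce for as long as a rule fits
            reduced = False
            for lhs, rhs in RULES:
                if tuple(stack[-len(rhs):]) == rhs:
                    stack[-len(rhs):] = [lhs]
                    trace.append(list(stack))
                    reduced = True
                    break
    return stack, trace


stack, trace = shift_reduce("Bill hit the ball".split())
for step in trace:
    print(" ".join(step))
```

Greedy reduction happens to succeed here because the toy grammar leaves no choices open. With a larger grammar the same loop would have to decide between competing reductions, and nothing in the rules themselves says how.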

Transformations

wh-movement

X   wh-NP   Y
1     2     3   -->   2  1  0  3

Transformations

VP-ellipsis

X   VP1   Y   VP2   Z
1    2    3    4    5   -->   1  2  3  0  5

condition: VP1 = VP2
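Both rules have the same shape: factor the string into numbered pieces, then emit the pieces in a new order, with 0 marking a position left empty. A minimal sketch of that bookkeeping follows; the helper names, the `_` placeholder for a null, and the example strings are all assumptions made for illustration, and the wh-example ignores auxiliary inversion.

```python
def apply_structural_change(factors, change):
    """Emit factors in the order given by `change`; 0 stands for a null ('_')."""
    return [factors[k - 1] if k else "_" for k in change]


def wh_movement(factors):
    """X - wh-NP - Y  -->  2 1 0 3"""
    return apply_structural_change(factors, [2, 1, 0, 3])


def vp_ellipsis(factors):
    """X - VP1 - Y - VP2 - Z  -->  1 2 3 0 5, only if VP1 = VP2."""
    _, vp1, _, vp2, _ = factors
    if vp1 != vp2:
        return None  # the identity condition is not met, so the rule cannot apply
    return apply_structural_change(factors, [1, 2, 3, 0, 5])


print(" ".join(wh_movement(["Mary saw", "who", "yesterday"])))
print(" ".join(vp_ellipsis(["John", "read the book", "and Mary did",
                            "read the book", "too"])))
```

In both outputs the `_` has no pronounced counterpart, so a listener working from the output alone gets no overt signal of where the rule applied.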

Difficulties

• How to build structure incrementally in right-branching structures

• How to recognize output of transformations that

create nulls

Summary

• Running the grammar ‘backwards’ is not so

straightforward - problems of indeterminacy and

incrementality

• Disappointment in empirical tests of Derivational

Theory of Complexity

• Unable to account for processing of local

ambiguities

Accuracy of Real-time Systems

• Common view

– Grammaticality judgments are slow

– Real-time analysis is rough-and-ready

• Narrowing gap between real-time systems and grammar presupposes grammatical accuracy of real-time systems

– Constraints on movement

– Binding principles

– Argument structure

– etc.

Parser-Specific Mechanisms

• How is structural uncertainty handled in parsing?

– Ambiguity

The teacher sent…

The boy knew the answer…

– Indeterminacy

What do you think that John…

Parser-Specific Mechanisms

• Classic Argument: existence of ambiguity-resolution mechanisms

implies existence of separate parsing device

• Two Ways to question this

– ambiguity-resolution principles = economy principles

– there are no ambiguity resolution principles per se, only grammatical constraints (and other lexical, semantic stuff, …), e.g. Aoshima et al., 2003 on Active Filler Strategy

• Challenges

– how generally can structural ambiguity effects be reduced to independent

principles?

– where does the as soon as possible requirement of parsing come from?

Grammaticality ≠ Parsability

• “It is straightforward enough to show that sentence parsing and

grammaticality judgments are different. There are sentences which are

easy to parse but ungrammatical (e.g. that-trace effects), and there are

sentences which are extremely difficult to parse, but which may be

judged grammatical given appropriate time for reflection (e.g. multiply

center embedded sentences). This classic argument shows that parsing

and grammar are not identical, but it tells us very little about just how

much they have in common.”

(Phillips, 1995)

Grammaticality ≠ Parsability

• Ungrammatical yet Comprehensible

– *The boys likes cookies

– *The man donated the museum a painting

– *Who do you think that left?

• Solution: accommodation, ‘mal-rules’

• The examples don’t point to an independent system

– violations are noticed immediately

– violations are ‘diagnosed’ rather well

Grammaticality ≠ Parsability

• Grammatical yet Incomprehensible

– # The man the woman the dog bit chased watched a movie.

– # The horse raced past the barn fell.

Grammaticality ≠ Parsability

• Most ERP syntax literature focuses on speed of ungrammaticality

detection - many violations detected very quickly

• But some judgments are slow

– Why did you think that John fixed the drain?

– * Why did you remember that John fixed the drain?

– Who did everyone meet?

– Who met everyone?

Grammaticality ≠ Parsability

• Syntactic Illusions:

‘More people have been to Russia than I have.’

(Montalbetti, 1984)

• Challenges

– Provide an explicit account of grammaticality judgment

– Make timing predictions that map onto electrophysiology

– Explain ‘syntactic illusions’

– Flesh out the account of ‘slow judgments’

Parsing ≠ Production

• Classic Argument: parsing & production look quite different

– different building blocks (cf. clause-sized templates in production)

– differentially affected in language disorders, double dissociations (e.g., classical

conduction aphasia)

– different kinds of breakdown in normal population (e.g., garden paths, slips of the

tongue)

– different tasks!

• But…

– similar arguments hold for lexicon

– evidence for incrementality in production (e.g., Ferreira, 1996)

– single system can encounter different ‘bottlenecks’ in different tasks

– how is imitation (etc.) possible?

– challenge: show that this is feasible

Competence & Performance

• Different kinds of formal systems: ‘Competence systems’

and ‘Performance systems’

• The difference between what a system can generate given

unbounded resources, and what it can generate given

bounded resources

• The difference between a cognitive system and its behavior
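The second reading, the same system with and without a resource bound, can be made concrete with a toy recognizer for center-embedded strings of the form NP … NP V … V. This is only a sketch under loud assumptions: the function name, the category labels, and the bound of 2 are arbitrary illustrations, not claims about human memory.

```python
def accepts(categories, max_pending=None):
    """Recognize NP^n V^n; `max_pending` caps how many NPs may await a verb."""
    pending = 0
    seen_verb = False
    for cat in categories:
        if cat == "NP":
            if seen_verb:
                return False      # outside the toy pattern
            pending += 1
            if max_pending is not None and pending > max_pending:
                return False      # resource limit exceeded, not a rule violation
        elif cat == "V":
            seen_verb = True
            if pending == 0:
                return False      # a verb with no NP left to pair with
            pending -= 1
        else:
            return False
    return seen_verb and pending == 0


# "The cat the dog the rat chased saw fled" as a category sequence.
triple = ["NP", "NP", "NP", "V", "V", "V"]
print(accepts(triple))                  # unbounded resources
print(accepts(triple, max_pending=2))   # same rules, bounded resources
```

The rules are identical in both calls; only the bound differs, which is one way to cash out the idea that a sentence can be well-formed yet unusable.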

Competence & Performance

• Mechanism vs. behavior

• Declarative vs. procedural specification of system

• Separation of system with or without resource limitations

• Time-dependent vs. time-independent specification

Competence & Performance

(1) It’s impossible to deny the distinction between cognitive states and

actions, the distinction between knowledge and its deployment.

(2) How to distinguish ungrammatical-but-comprehensible examples (e.g.

John speaks fluently English) from hard-to-parse examples.

(3) How to distinguish garden-path sentences (e.g. The horse raced past

the barn fell) from ungrammatical sentences.

(4) How to distinguish complexity overload sentences (e.g. The cat the

dog the rat chased saw fled) from ungrammatical sentences.

Architectural Issues

• Task-specific segregation of mechanisms

– Parser

– Producer

– Grammar

• Information-specific segregation of mechanisms

– Phonology

– Lexicon

– Syntax

– Semantics

– World knowledge

Cross-talk among levels?

‘Modularity of Mind’

Does Jackendoff’s argument work?

• Is real-time computation grammatically accurate?

• Is transformational grammar incompatible with

incremental structure building?

• What does it take to solve the incrementality problem?

• Are parsing and production mirror images of one another?

Speed

Measures of Speed

• Measures of speed of processing

– Speech shadowing

– Eye-tracking

– Speed-Accuracy Tradeoff (SAT)

– Event-Related Potentials (ERPs)

Marslen-Wilson 1975

Speech shadowing involves on-line repetition of a speaker…

Shadowing latency: 250-1000ms

The new peace terms have been announced… They call for the unconditional

surrender of … (normal)

universe of … (semantic)

already of … (syntactic)

Eye-movements

(Sereno & Rayner, 2003)

Head-mounted Eye-Tracking

Sussman & Sedivy (2003)

(Sussman & Sedivy, 2003)

Speed-Accuracy Tradeoff (SAT)

McElree & Griffith (1995)

- grammatical

- subcategorization

- thematic

- syntactic

Event-Related Potentials (ERPs)

John

is

laughing.

s1

s2

s3

Event-Related Potentials

• Event-Related Potentials (ERPs) are derived from the

electroencephalogram (EEG) by averaging signals that are

time-locked to a specific event.

• Scalp voltages provide millisecond accuracy and promise detailed timing information about syntactic computation, plus information about amplitude and scalp topography
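The averaging step can be sketched in a few lines: cut a fixed window around each event onset, then average the windows sample by sample so that activity not time-locked to the event tends to cancel. The sketch below runs on synthetic data; the function name, the window sizes, the noise level, and the size and latency of the simulated deflection are all made-up values chosen for illustration.

```python
import random


def average_epochs(eeg, event_samples, pre, post):
    """Average fixed-length EEG segments time-locked to each event onset."""
    epochs = [eeg[t - pre:t + post] for t in event_samples
              if t - pre >= 0 and t + post <= len(eeg)]
    if not epochs:
        raise ValueError("no complete epochs")
    n = len(epochs)
    # zip(*epochs) groups the samples that share the same latency across epochs.
    return [sum(samples) / n for samples in zip(*epochs)]


# Synthetic single-channel recording: noise plus a deflection 40 samples
# after every event (hypothetical numbers, for illustration only).
rng = random.Random(0)
n_events, spacing, latency = 200, 100, 40
eeg = [rng.gauss(0.0, 1.0) for _ in range(n_events * spacing + spacing)]
events = [spacing * (i + 1) for i in range(n_events)]
for t in events:
    eeg[t + latency] += 2.0

erp = average_epochs(eeg, events, pre=10, post=90)
peak = max(range(len(erp)), key=erp.__getitem__)
print("peak at sample", peak - 10, "relative to event onset")
```

In any single epoch the deflection is comparable in size to the noise; it stands out only after averaging, which is why ERP effects are reported over many trials.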

ERP Sentence Processing

N400

• Developing understanding of

N400 is informative

I drink my coffee with cream and sugar

I drink my coffee with cream and socks

• Response to ‘violations’

Kutas & Hillyard (1980)

Morpho-Syntactic violations

Every Monday he mows the lawn.

Every Monday he *mow the lawn.

The plane brought us to paradise.

The plane brought *we to paradise.

(Coulson et al., 1998)

(Slide from Kaan, 2001)

Left Anterior Negativity (LAN)

P600

he mows

he *mow

(Slide from Kaan, 2001)

Neville et al., 1991

The scientist criticized a proof of the theorem.

The scientist criticized Max’s of proof the theorem.

500ms/word

500ms/word

Hahne & Friederici, 1999

Das Baby wurde gefüttert

The baby was fed

Das Baby wurde im gefüttert

The baby was in-the fed

Hahne & Friederici, 1999

ELAN

How Fast?

• Various types of evidence for processes above the word

level within 200-400 msec (conservatively) of the start of a

word.

• How are we able to compute so quickly?

• What is it that is computed so quickly?

– Rough-and-ready structural analysis?

– Richer syntactic analysis?