GEO Assessment Process - Central Arizona College

CAC General Education Outcome Assessment Process Guidelines

(02/03/12)

General Education Outcomes Assessment

Process Guide

Full Implementation 2012

Questions? Please contact:

Mary Menzel-Germanson, Director of Curriculum and Student Learning Assessment (520) 494-5215

Myna Frestedt, Curriculum and Student Learning Support Analyst (520) 494-5913

Special Thanks to Contributors:

Sylvia Gibson, Director of Accreditation and Quality Initiatives, General Education Outcomes Subcommittee chairs and members, the Course Assessment Teams and CAT Leaders, Curriculum Committee members 2009-2011, Valerie Jensen, Institutional GEO Rubric Task Force, GEO Map piloters, the Academic Deans and the Curriculum and Student Learning Assessment staff

This document is intended as a guidance resource to support the implementation of Central Arizona College’s General Education Outcomes Assessment Process. Although each step in this document must be completed, the level of detail depends on the circumstances of individual departments, projects, and programs.

Access the most recent version of this Guide on the CAC Curriculum and Student Learning Assessment website: http://www.centralaz.edu/Home/About_Central/Curriculum_Development/General_Education_Outcomes.htm

This Guide was modeled upon ASU’s Assessment Guide written by Wanda Baker and used with her permission, per IUPUI Assessment Conference 2011.

If you have questions or would like assistance, please contact the Curriculum and Student Learning Assessment Office staff: mary.menzel@centralaz.edu, myna.frestedt@centralaz.edu, or jennie.voyce@centralaz.edu.

Table of Contents

DESCRIPTION
COMMONLY ASKED QUESTIONS
PURPOSE

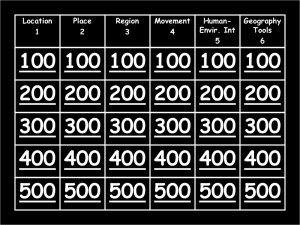

PROCESS FLOWCHART
ROLES AND RESPONSIBILITIES
ASSESSMENT CYCLE
GLOSSARY OF ASSESSMENT TERMINOLOGY
ASSESSMENT PROCEDURES
    A. Mission, Goals and Outcomes
    B. Measures
    C. Performance Criteria
    D. Sampling
    E. Assessment Process Steps
COLLECT DATA
INTERPRET RESULTS
ACT ON RESULTS
REPORT
    Data Repository
General Education Outcome Assessment Step-By-Step for Course Assessment Teams
    A. Establish GEO Course Assessment Team
    B. Review CAC GEO Assessment Standardized Processes and Reports: Blackboard Evidence of Student Success Site
        B1. Review existing assessments
        B2. GEO Map the course
        B3. Stakeholder Analysis
    C. Standardize assessment timeline/cycles
    D. Gather artifacts
    E. Collect Data
    F. Evaluation Team Training
    G. Submit raw scores to Institutional Planning and Research Office
    H. Institutional Planning and Research Create Data Report
    I. Benchmarking
    J. Report process, analysis and results
    K. Assess, refine, streamline processes
Appendix A: Sample Forms with Instructions

DESCRIPTION:

This document establishes procedures, roles, and responsibilities for Central Arizona College’s General Education Outcomes (GEO) Assessment Process. CAC conducted GEO Assessment Pilots in multiple courses from 2008 to 2011. Full implementation of GEO Course/Program Assessment begins Fall 2012.

COMMONLY ASKED QUESTIONS:

What is General Education Outcomes Assessment?

General Education Outcome (GEO) Assessment is a continuous, ongoing process which occurs within the normal academic cycle and results in meaningful data. This evidence of student learning is used to measure the extent to which academic programs achieve academic objectives, including the knowledge/skills of program graduates, and to identify changes that will help the program or college better achieve those objectives. Each process cycle provides information about the degree of student success from the previous cycle and informs curricular, pedagogical, training, budget, quality improvement initiatives and other decisions.

Assessment answers: “What do graduates know and what can they do?” and “What programmatic changes are necessary to improve the knowledge and skills of program graduates?”

Academic program assessment is a faculty-driven structured and iterative process where:

Faculty identify the specific knowledge and skills program graduates should possess;

Faculty identify specific methods for measuring the knowledge and skills;

Faculty teams interpret the results of those measures of student knowledge and skills;

Divisions use those results to make curricular, pedagogical, support services, and institutional changes to improve student learning; and

Faculty continue the process while monitoring the effectiveness of quality changes implemented and identify additional changes.

Why assess?

Continuous improvement of our programs is an important priority for scholars and educators who want to do everything possible to prepare our graduates to perform in society, in the workplace, or in their receiving college/university. Assessment planning and reporting enable faculty to report the specific learning outcomes they desire for their graduates and to collect solid evidence of how well those outcomes have been achieved.

Assessment of student learning and providing evidence of student success is required to maintain institutional accreditation as well as specialized program accreditation. The Higher Learning Commission of the North Central Association of Colleges and Schools, CAC’s regional accrediting body, emphasizes assessment including continuous, quality assessment processes such as Program Review and GEO Assessment.

We assess to determine the extent to which program graduates possess the intended knowledge and skills of the program as stated in the program measurable student learning outcomes (PMSLOs) in the ACRES approved curriculum. We use that information as evidence of student learning and to implement improvements designed to further support student learning.

Who must participate?

All academic programs are expected to participate in GEO assessment as CAC creates a continuous cycle of assessment. The U.S. Department of Education expects that institutions demonstrate evidence of student learning outcomes for certificates that may be eligible for Title IV financial aid and which are often marketed as preparation for specific areas of employment. In addition, our constituents expect that our degree completers are capable of demonstrating achievement of CAC’s GEOs.

Why do we need to provide evidence of student learning beyond course grades?

Course grades are based on overall satisfaction of course requirements rather than performance on a specific program-level outcome. Student course grades often contain a variety of criteria, including attendance, participation, and extra credit. Course grades alone do not provide specific information about the concepts mastered by students or those concepts that proved challenging—important information for faculty to consider if they want to improve student learning over time. In addition, course completion is not an appropriate measure of student learning. An assessment evaluation focuses only on the student artifact/product which is judged on its own merit by a team of faculty experts using the agreed upon GEO Rubric rather than the individual instructor’s course assignment/examination grading rubric.

How is GEO Assessment different from the End of Term Course Evaluation, CSSE, and other surveys currently in place at CAC?

The purpose of GEO Assessment is to measure student learning based on student work. It is not intended to evaluate instructors, to gather student perceptions of the educational climate, to determine why students persist, complete, or withdraw, or to identify graduates’ strengths and challenges based on their first employer’s perception.

Where can I find CAC GEO Assessment results?

The Blackboard Evidence of Student Success site serves as a resource depository for CAC GEO Assessment documents, including GEO Executive and Subcommittee reports, minutes, templates, rubrics, glossary of terms, and assessment plans by term.

How does the AQIP Learning Principle support GEO Assessment?

AQIP states that a “learning-centered environment allows an institution dedicated to quality to develop everyone’s potential talents by centering attention on learning – for students, for faculty and staff, and for the institution itself. By always seeking more effective ways to enhance student achievement through careful design and evaluation of programs, courses, and learning environments,” both CAC and CAC employees “demonstrate an enthusiastic commitment to organizational and personal learning as the route to continuous improvement. Seeing itself as a set of systems that can always improve through measurement, assessment of results, and feedback,” CAC “designs practical means for gauging students’ and institutional progress toward clearly identified objectives. Conscious of costs and waste – whether human or fiscal — leadership champions careful design and rigorous evaluation to prevent problems before they occur, and enables the institution to continuously strengthen its programs, pedagogy, personnel, and processes.” Other AQIP Principles of Collaboration, Agility, People, Foresight, Information,

Focus, and Involvement also support Student Learning Assessment (AQIP: http://www.ncahlc.org/AQIP-Categories/aqip-categories.html, retrieved 11/11/2011).

PURPOSE:

The intent of GEO Assessment is to evaluate student competencies in six essential areas based on collected evidence of student learning. The assessment process is course based, so assessment of student performance on General Education Outcomes (GEOs) typically occurs in general education or capstone courses.

FLOWCHART OF THE PROCESS:

Plan Assessment → Collect Data → Interpret Results → Act on Results → Report

Each process step should be completed in this sequence to maximize effectiveness and efficiency.

Plan

Collect

Interpret

Act on Results:

    Use the data to make improvements in student learning

    Document changes

    Gather evidence to determine if the change resulted in improved student learning

Report

ROLES AND RESPONSIBILITIES:

All CAC staff are involved in GEO assessment related to guidance, prioritization, resource apportionment, monitoring, and performance assessment.

1. President

The President’s assessment activities include:

Encourage and support wide-spread instructor/staff participation in the GEO assessment process

Review, evaluate and approve viable recommendations resulting from the GEO assessment process

Routinely communicate importance of GEO assessment to CAC, support ongoing staff participation and training at all levels

2. Vice Presidents and Associate Vice Presidents

Each vice president serves as a member of the administrative team, including representation on the Executive Council. They cooperate in addressing issues that relate to both their unit and the overall Mission of the College. Assessment activities of each vice president include:

Implement, manage, and facilitate the coordination of the GEO assessment process within their respective unit

Encourage unit-wide instructor/staff participation in all aspects of the assessment process

Use the GEO assessment process for continuous improvement

Address alignment of this process with other departmental and management processes

Hold Deans and Directors accountable for duties listed in this document

Routinely communicate with President on assessment gains and initiatives to address challenges

3. Deans and Direct VP Reports:

Participate and support execution of GEO assessment process

Serve as resources to GEO Assessment Subcommittee or support and encourage division-wide participation in all aspects of GEO Assessment

Use the standardized GEO assessment process for continuous improvement

Address alignment of this process with other departmental and management processes

Hold Division chairs, Directors and Program Owners accountable for duties listed in this document

Routinely communicate with Vice Presidents to ensure goal achievement, accountability and visibility to constituents are maintained

4. Faculty/Instructors

While faculty members’ primary responsibilities lie with quality teaching and learning, faculty/instructors’ duties key to this process include:

Participate on CAC GEO Subcommittees to plan, implement research and analyze student learning

Participate in departmental activities related to developing, implementing and evaluating plans, reports and supporting CAC assessment processes

Routinely communicate with Division chair, Directors, and program owners to ensure goal achievement, accountability, and visibility to constituents are maintained

5. Managerial/Technical Staff

While Managerial/Technical staff serve CAC in a variety of specialized responsibility areas, their assessment activities include:

Participate on CAC committees and planning systems

Participate in departmental activities related to developing, implementing, and evaluating plans and supporting CAC assessment processes

Routinely communicate with Department/Unit/Division chair, Directors, and program owners to ensure goal achievement, accountability, and visibility to constituents are maintained

6. Division Level Assessment Mentor

Serve as the Assessment Expert and point person for the Division

Serve on Assessment Mentor Subcommittee and participate in ongoing training

Help coordinate Assessment Evaluation Team training/meetings: norming training, scoring session, analysis of results, and identification of improvement strategies

Ensure Evaluation Team follows standardized process steps; communicate with Division chair about assessment details, instrument, method, sample, and alignment of MSLOs to GEOs

Help Instructional Teams create or review GEO Course Map, identify appropriate assessment instrument (already in use), and complete planning and implementation process steps

Help Evaluation Teams in score norming training

Help coordinate and refine GEO Assessment Executive Report and addendum charts

May participate in review of internal and external reports and in presentations on results

Recommend Process improvements

7. Course Assessment Leader (CAL)

Serve as the communication point person for the Team; report status to Division Level Assessment Mentor, GEO Subcommittee Chair and SLA Director on a monthly basis

Coordinate the Course Assessment Team (CAT) and the Evaluation Subteam (typically two evaluators and the CAL) to conduct score norming training, the scoring session, analysis of results, and identification of improvement strategies

Coordinate collection of student artifacts; submit to IPR all GEO Raw Data Submission forms and the Cover Sheet

Coordinate creation of GEO Assessment Executive Report and other reports

May participate in creation or review of internal and external reports and in presentations on results; recommend process improvements

8. Program Faculty/Instructors

Faculty in the program that owns the course typically serve on the three-person Evaluation Team and all division faculty may serve on the data analysis team

If fewer than three full-time faculty are assigned to that department, content experts from other areas are invited to join the Evaluation Team

Members of Evaluation Teams may change over time; however, at least one experienced/trained faculty member directs the process and is designated the Course Assessment Leader. This individual will be in direct contact with the GEO Subcommittee Chair and the Director of Student Learning Assessment

All faculty/instructors are responsible for reviewing Data Reports and providing input into the selection of the student learning improvement strategy

9. Institutional Planning and Research (IPR)

Conduct initial statistical analysis of Evaluation Team’s GEO Raw Data Submission and return analysis via Data Report to Course Assessment Leader

Provide statistical analysis guidance to GEO Assessment evaluation teams

Review GEO Assessment Reports for accuracy and provide feedback
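
The initial statistical analysis that IPR performs on a Raw Data Submission can be pictured with a minimal sketch. It assumes scores are whole numbers on the Institutional GEO Rubric scale of 0 (Insufficient) to 3 (Accomplished) and computes the descriptive statistics this guide lists under Analysis (mean, median, mode, percentages). The function name `summarize_scores` and the shape of the returned dictionary are hypothetical illustrations, not IPR's actual Data Report format.

```python
from collections import Counter
from statistics import mean, median, multimode

# Rubric scale described in this guide: 0 = Insufficient ... 3 = Accomplished.
RUBRIC_LEVELS = (0, 1, 2, 3)


def summarize_scores(scores):
    """Summarize rubric scores for one GEO Standard (hypothetical helper).

    Returns the count, mean, median, mode(s), and the percentage of
    artifacts scored at each rubric level.
    """
    if not scores:
        raise ValueError("no scores submitted")
    if any(score not in RUBRIC_LEVELS for score in scores):
        raise ValueError("scores must be whole numbers from 0 to 3")

    counts = Counter(scores)
    total = len(scores)
    return {
        "n": total,
        "mean": mean(scores),
        "median": median(scores),
        "modes": multimode(scores),  # a list, since scores can tie
        "percent_by_level": {
            level: 100 * counts[level] / total for level in RUBRIC_LEVELS
        },
    }


if __name__ == "__main__":
    # Made-up scores for illustration only; not CAC data.
    print(summarize_scores([0, 1, 2, 2, 3, 3, 3, 1]))
```

Reporting the percentage at each rubric level alongside the mean keeps the distribution visible, which a single average can hide.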

Current Assessment Process Deadlines Fall 2011-Spring 2012

ASSESSMENT SCHEDULE

Fall Course Assessment

August-September:

Student Learning Evaluation Teams established and Course Assessment Leader elected

Review/update GEO Course/Program Maps; Submit new map via ACRES if needed

Review curriculum and syllabi to select student product/exam questions or create assessment instrument

Communicate and collaborate with other Evaluation Teams: identify student product which may fulfil multiple assessment purposes

Determine student sample (minimum of 30 students or 10% of total enrolment in course)

Determine assessment parameters: e.g. in-class, take-home, online; 10-minute quiz, one-hour exam with 2 embedded GEO questions, three weeks to complete a research paper, etc.

Determine timeline based on type of assessment: e.g. pre- and post-tests are typically implemented the first and last week of the course

Course Assessment Leader submits raw scores to IPR using GEO Raw Data Submission form for Pre-Test; One form per course

October-December:

Conduct assessment: midterm through final exam week

Collect sample with student CAC ID number (black-out student name)

Norm and score

Course Assessment Leader submits raw scores to IPR using GEO Raw Data Submission form; One form per course; DUE: December 9, 2011 or January 5, 2012

Adjunct Faculty Fall 2011 Contracts End: December 10

Grades DUE: December 12, 2011

Spring Semester 2012 all 27 GEO Standards:

January-February:

For Spring 2012 Course Assessment, begin Spring 2012 Assessment Cycle (refer to steps from August 2011 – January 2012)

Full-Time Faculty on Campus/Site Begin: January 3, 2012

C&SLA offers GEO Training for Adjunct Faculty (SPC and SMC) on January 3 and 5

Course Assessment Leader submits raw scores to IPR using GEO Raw Data Submission form; One form per course; DUE: January 5

IPR returns Data Report to Course Assessment Leader; DUE: January 6-9 or within 48 hours of receipt, excluding weekends and holidays

Adjunct Faculty Spring 2012 Contracts Begin: January 9

Course Assessment Leader creates and submits Fall 2011 GEO Course Assessment Executive Report to GEO Subcommittee Chair; DUE: January 9

GEO Subcommittee Chair reviews, refines, and submits Fall 2011 GEO Course Assessment Executive Report to Assessment Director; DUE: January 9

Director of SLA writes HLC Assessment Academy Six-Month Progress Report, proofs and reformats GEO Course Assessment Executive Reports and sends to Director of Accreditation and Quality Initiatives (A&QI) for review; DUE: January 11

Administrative Feedback to Director of Student Learning Assessment; DUE: January 12

Director of SLA submits to Director of A&QI; DUE: January 12

Director of A&QI submits report to CAC President; DUE: January 13

HLC Assessment Academy Six Month Progress Report to HLC; DUE: January 15

All College Day presentations on Beyond Assessment 101: January 20

Summer and Fall Course Assessment Lottery; DUE: February 2

Summer and Fall 2012 Course Assessment List announced to District; DUE: February 3

Begin planning Summer 2012 GEO Assessment of all 27 GEO Standards

IPR offers Data Report Analysis Training Session

Training prior to CAC Governing Board meeting at SMC: February 21

GEO Subcommittees, GEO Executive, and Course Assessment Teams continue to analyze IPR Data Report, GEO Reports and qualitative data

Each GEO Subcommittee determines student learning improvement strategies at monthly meetings

March:

Training prior to CAC Governing Board meeting at SPC: March 20

Fall 2011 Final GEO Course Assessment Report submitted by GEO Chair to Director of Student Learning Assessment; DUE: March 19, 2012

Review Overall Assessment Process and identify improvements

Begin planning Fall 2012 GEO Assessment

April-May:

GEO Map AGEC courses; DUE: April 1, 2012

HLC Impact Report; DUE: April 2, 2012

Training prior to CAC Governing Board meeting at AVC: April 17

Act on Student Learning Improvement Goals identified

Benchmark results (internal and external)

Check progress on implementation of Improvement Strategies for next academic year

Adjunct Faculty Spring 2012 Contracts End: May 5

Full-Time Faculty Annual Contracts End: May 5, 2012 (AVC F-T Faculty: May 4, 2012)

Spring 2012 Assessment: Course Assessment Leader submits GEO Raw Data Submission to IPR; DUE: on or before May 5

IPR returns Data Report to CAL; DUE: May 8

Course Assessment Leader collaborates with GEO Chair to write and finalize GEO Course Assessment Executive Report; DUE: May 9

Course Assessment Leader submits Spring 2012 GEO Course Assessment Executive Report to Director of Student Learning Assessment; DUE: May 10

GEO Subcommittee Chairs create and submit 2011-2012 Annual GEO Assessment Progress Report to Director of Student Learning Assessment; DUE: May 11

Grades DUE: May 7, 2012

Celebrate Accomplishments and Next Steps identified

Summer Session 2012 all 27 GEO Standards:

Finalize Annual Reports and craft Presentation

CAC HLC Assessment Academy Presentation in Chicago: DUE: June 6, 2012

GEO Improvement Plan for Upcoming Year (Strategic Plan)

Strategies for Action (Key Initiatives to Help Students Learn)

Annual Reports: July

Faculty Summer 2012 Contracts End: August 4

Grades DUE: August 6

Summer 2012 Assessment: Course Assessment Leader submits GEO Raw Data Submission to IPR 5 days after Final Exam; DUE: August 8

IPR returns Data Report to CAL by August 10

Course Assessment Leader collaborates with GEO Chair to write and finalize GEO Course Assessment Executive Report; DUE: August 15

Course Assessment Leader submits Spring 2012 GEO Course Assessment Executive Report to Director of Student Learning Assessment; DUE: August 17

GEO Subcommittee Chairs create and submit Summer GEO Assessment Progress Report to Director of Student Learning Assessment; DUE: August 30

Adjunct Faculty Summer 2012 Contracts End: August 4

Celebrate Accomplishments and Next Steps identified

ASSESSMENT CYCLE

Plan Assessment → Collect Data → Interpret Results → Act on Results → Report

Fall Semester: Assess

Spring Semester: Observations and Recommendations – Improvement Plan

Summer Semester: Plan for Fall semester implementation of selected improvement and ongoing assessment of student learning results

Across the Curriculum, Full Implementation of GEO Assessment Fall 2012

Year One of Three-Year Assessment Cycle: 2012-13

Individual & Social Responsibility

Cultural & Artistic Heritage:

August-September:

Student Learning Evaluation Teams established and Course Assessment Leader elected

Review/update GEO Course/Program Maps; Submit new map via ACRES if needed

Review curriculum and syllabi to select student product/exam questions or create assessment instrument

Communicate and collaborate with other Evaluation Teams: identify student product which may fulfil multiple assessment purposes

Determine student sample (minimum of 30 students or 10% of total enrolment in course)

Determine assessment parameters: e.g. in-class, take-home, online; 10-minute quiz, one-hour exam with 2 embedded GEO questions, three weeks to complete a research paper, etc.

Determine timeline based on type of assessment: e.g. pre- and post-tests are typically implemented the first and last week of the course

Course Assessment Leader submits raw scores to IPR using GEO Raw Data Submission form for Pre-Test; One form per course

October-December:

Conduct assessment: midterm through final exam week

Collect sample with student CAC Identification number (black-out student name)

Norm and score

Course Assessment Leader submits raw scores to IPR using GEO Raw Data Submission form; One form per course; DUE: Friday of Finals Week, December 14, 2012 or Thursday of first week back, January 3, 2013

January-February:

Institutional Planning and Research (IPR) returns Data Report to Course Assessment Leader; DUE: within 48 hours of receipt, excluding weekends

Course Assessment Leader creates and submits Fall 2011 GEO Course Assessment Executive Report to GEO Subcommittee Chair; DUE: January 9, 2013

GEO Subcommittee Chair reviews, collaboratively refines, and submits Fall 2011 GEO Course Assessment Executive Report to Assessment Director; DUE: January 11

Director of Student Learning Assessment writes CAC Assessment Six-Month Progress Report, attaches GEO Course Assessment Executive Reports and updates CAC Benchmark matrix and sends to Director of Accreditation and Quality Initiatives for review; DUE: January 18

Administrative Feedback to Director of Student Learning Assessment; DUE: January 22

CAC Assessment Report to CAC President; DUE: January 25

IPR offers Data Report Analysis Training Session

GEO Subcommittees, GEO Executive, and Course Assessment Teams continue to analyze IPR Data Report and GEO Reports

C&SLA offers GEO Training for Adjunct Faculty (SPC and SMC) in January 2013

Each GEO Subcommittee determines student learning improvement strategies at monthly meetings

Fall 2013 Course Assessment List announced based on February Lottery; begin forming Assessment Team and planning assessment

March:

Review Overall Assessment Process and identify improvements

Act on Student Learning Improvement Goals identified

April-May:

Check progress on implementation of Improvement Strategies for next academic year

Benchmark results (internal and external)

Fall 2012 Assessment: Course Assessment Leader writes, collaborates with GEO Chair and submits Final Course Assessment Executive Report to GEO Subcommittee Chair; DUE: May 1, 2013

GEO Subcommittee Chairs create and submit 2012-2013 Annual GEO Assessment Progress Report to Director of Student Learning Assessment; DUE: May 5

Director of SLA submits Annual Assessment Report to Director of A&QI; DUE: May 15

Director of A&QI submits report to CAC President; DUE: May 20, 2013

Celebrate Accomplishments and Next Steps identified

June: Review Results in Annual Reports

GEO Improvement Plan for Upcoming Year

Strategies for Action

Reports

Year Two 2013-14:

Communication

Informational and Technological Literacy

Use deadlines for previous year as guideline.

Year Three 2014-15:

Mathematical and Scientific Inquiry and Analysis

Critical Thinking and Analytical Reasoning

Use deadlines for previous year as guideline.

Fall Semester: Monitor implementation of improvements to student learning; Train faculty; submit curriculum changes

Spring Semester: Assess to determine student learning gains based on improvements (Follow August-January assessment process steps)

GLOSSARY OF ASSESSMENT TERMINOLOGY:

ACRES: Academic Curriculum Review and Evaluation System

AM: Division Level Assessment Mentors

AQIP: Academic Quality Improvement Program

Analysis: Interpretation of data or evidence of student learning, including quantitative statistics (mean, median, mode, percentages), trend analysis, correlations, qualitative factors directly influencing the results and determining improvement initiatives (CQIs) based on the analysed data.

Artifact: Student sample of demonstrated learning, such as a research paper, videotaped presentation, project, examination, computer program, or rubric-scored demonstration.

Assessment: The ongoing process tied to CAC mission of designing, implementing, collecting, analyzing and reporting results of student learning based on student artifacts. Data results inform decisions to improve student learning and teaching.

Course Assessment Leader (CAL): Instructor on the GEO Course Assessment Team (CAT) who:

1. Convenes meetings and facilitates planning, implementation and collection of artifacts

2. Convenes Evaluation Team of three to analyze student artifacts

3. Submits data results to IPR on GEO Assessment Raw Data Submission Form

4. Shares data documents, facilitates further analysis of results and writes reports

5. Reports GEO assessment plan to Subcommittee chair and SLA director

C&SLA: Curriculum and Student Learning Assessment office

Full Implementation: Participation by all faculty instructing the course or participation by all CAC staff on a given initiative.

General Education Outcome (GEO): Desired level of knowledge, skills, and abilities of all CAC graduates to ensure long-term success. CAC’s six GEOs are embedded across the general education curriculum and capstone courses.

HLC: Higher Learning Commission

Improvement Plan: Based on GEO analyzed results, teams identify improvements designed to significantly improve student learning.

IPR: Institutional Planning and Research office

Instrument: GEO assessment tool designed to gauge level of student learning.

Division Level Assessment Mentor: Faculty/staff trained in GEO Assessment process steps who serve as a Division expert.

MSLOs: Measurable Student Learning Outcomes within each ACRES curriculum proposal identify specific knowledge, skills, and abilities students will possess upon successful completion of the course or program.

Norming: Using the rubric, evaluators score artifacts, then meet to review their scores and share rationale. Norming before actual scoring supports and aligns scoring among evaluators.

Outcome: Outcomes within ACRES are framed within Bloom’s Cognitive Taxonomy, which identifies increasing levels of content mastery.

PMSLOs: Program Measurable Student Learning Outcomes consist of three to five overarching student learning outcomes expected of program graduates.

Pilot: A scholarly, process-oriented assessment of specific GEO Standards in a target course.

Raw Data: Results generated by a GEO Assessment evaluator; typically teams of three analyse one GEO Standard and complete a Raw Data Submission form. See Appendix for GEO Assessment Forms.

Rubric: Standardized scoring tool describing the set of criteria that defines a range of student learning from Insufficient (0) to Accomplished (3). See CAC Institutional GEO Rubric.

Sample: Student population selected to represent all CAC students enrolled in a specific course/program. Sample size must be a minimum of 30 students. For courses with multiple sections, sample 15% of all students enrolled. Random samples are preferred.

Scoring: A team of at least 3 evaluators makes judgments on student artifacts using the rubric and submits scores as data to IPR.

SLA: Student Learning Assessment

SLOA: Student Learning Outcomes Assessment

Strategies: Pedagogical techniques for supporting student learning or student learning assessment.

ASSESSMENT PROCEDURES:


Plan Assessment:

Each step of the assessment planning stage provides the foundation for the next step

The assessment planning stage is the most complex and time-consuming stage of the assessment process, but good planning is a necessary foundation. Think of the assessment plan as the design and data collection plan for a small study that you will conduct over the course of an academic year. Your investment of time at the beginning to design a high-quality assessment plan will ensure that you collect meaningful data that will yield useful information about student learning.


In the spring, the program faculty are expected to develop an assessment plan for the following year.

Faculty identify the best point(s) to assess student competency after providing students opportunities to reinforce learning and apply their knowledge, skills, and abilities. Refer to the GEO Course Map for the target course and the GEO Program Map to determine where concepts/content are introduced, reinforced, and practiced. The Program/Sequencing Map may further inform the best point in a student’s degree/certificate pathway when competency could be measured.

The planning draft should be submitted to the Division Chair for approval, then presented to the GEO Subcommittee for review and feedback. Once the GEO Subcommittee approves the plan, it is presented to the GEO Executive Subcommittee for review and approval, then to the Director of Student Learning Assessment for final approval and implementation.

The Student Learning Assessment Team (C&SLA Office staff) is available to assist you with assessment planning and curriculum mapping.

A blank assessment plan template can be found at the back of this Guide or online at the C&SLA webpage.

Assessment Planning Steps

[Graphic: Mission > Strategic Goals > Outcomes > Measures > Performance Criteria (Standards) > Sampling > Assessment Plan]

The graphic above shows the six steps to follow when creating an assessment plan. It is important to follow the steps in the correct order.

A. Mission: Consider the institutional, department, and program mission statements to connect the dots and see the relationships. Accreditors evaluate how well an institution’s mission is achieved through academic programs and other co-curricular initiatives. These statements describe who we are and what we are about. Assessment outcomes must be directly related to the departmental mission.

Please refer to the CAC mission statements.

B. Goals: What do you want your students to be when they grow up? What hopes and aspirations do program faculty have for program graduates three to five years after graduation? Some examples are:

Transfer, further academic study

Employed in field of study

Professional licensure/certification

Contribution to scholarship (research, publication, teaching)

Use program goals to help frame your expectations for your students. Identify several possible long-range goals for students in your course/program. Refer to the GEO Course Maps.

C. Outcomes: Review the course Measurable Student Learning Outcomes (MSLOs) for your program and determine the overarching program-level SLOs. What kinds of knowledge, skills, or abilities will students need to possess and demonstrate competency in when they graduate in order to achieve that goal? That body of knowledge or skill set is your Program Measurable Student Learning Outcomes (PMSLOs). PMSLOs may be expressed in three (3) to five (5) all-encompassing SLOs. Faculty should ask: “What do students need to get in this course/program to do ‘that’ out there?” Creating MSLOs and PMSLOs becomes easier with practice. At CAC, many curriculum proposers and Curriculum Mentors are familiar with this process step. The time you invest now will save time later, and will ensure that you are able to collect high-quality assessment data. If you need help, please contact the C&SLA office. We can help you simplify things.

At CAC we use Bloom’s Taxonomy of Learning, Cognitive Domain, to frame the level of thinking skills required to achieve an MSLO. Bloom’s hierarchical system orders thinking skills from lower to higher, with the higher levels including all of the cognitive skills from the lower levels, and informs all learning activities. See CAC Institutional GEO Rubric on page 34, approved fall 2011.

See the AAC&U’s Valid Assessment of Learning in Undergraduate Education (VALUE) rubrics at http://www.aacu.org/value/ and download them for free from http://www.aacu.org/value/rubrics/index.cfm

The following rubrics are available at the program level: Intellectual and Practical Skills: Critical Thinking, Written Communication, Oral Communication, Reading, Quantitative Literacy, and Information Literacy; Personal and Social Responsibility: Civic Knowledge, Intercultural Knowledge, and Ethical Reasoning; and Integrative and Applied Learning.

The learning outcomes you develop provide a foundation for all your assessment work.

D. Measures: Use the CAC Institutional GEO Rubric, then customize the rubric to best fit the Course and Program MSLOs that align with the CAC GEOs.

Identify two measures for each outcome. The first measure must be a direct measure and the second can be direct or indirect.

A direct measure is one in which students demonstrate their learning through some kind of performance, such as:

Classroom exams/quizzes (pre- and post-tests, several questions on a final exam)

Classroom/homework assignments

Course projects

Online discussion threads

Licensure/Certification exams

Course presentations

Artistic creations or performances

Practical clinical assignments

Presentations/publications

Capstone project/paper/portfolio

Research papers and other student samples where the students themselves actually demonstrate their knowledge or skill.


An indirect measure is one that provides information from which we can draw inferences about student learning. We rely on information reported either by the students themselves or by some third party about the level of student knowledge/skill, such as:

Student surveys and focus groups

Practicum supervisor/evaluator

End-of-Term Course evaluations

Employer surveys/interviews

Exit surveys and interviews

Job placement data

ASSIST, transfer student data

Admission to the university to pursue baccalaureate degree

Alumni surveys and interviews

Be careful: avoid creating additional tests or other assessment activities simply to satisfy your assessment data collection needs. It should be possible to identify exam questions or other measures of student learning that already occur as part of your existing instruction and testing activities. In some cases, a course’s MSLOs may align with multiple GEO Outcomes and Standards. In this case, one instrument may be used for assessment and faculty teams of experts in each area may review the student artifact to determine level of competency in each of those GEO Standards.

For your assessment plan, you only need to list the specific measure (e.g., the final exam in FOR202).

E. Performance Criteria: For each measure, a performance criterion sets the level of student performance needed to conclude that the program outcome has been achieved: a “level two” on CAC’s Rubric. Not all students in a program will perform perfectly on every measure, so program faculty must identify a threshold above which they will be satisfied that, on the whole, students who graduate from the program possess the knowledge/skill specified in the outcome.

Performance criteria must be identified prior to the collection and analysis of assessment data. When setting performance criteria, it can be tempting to set unreasonably high “nothing but mastery” standards or unreasonably low “guaranteed to show success” standards. Both of these practices can be self-defeating. Over time, it is far more beneficial to a program and its students to set reasonable expectations and work toward achieving them. Think of a reasonable standard, state it clearly on your rubric, and ensure your students are taught the skill well, have many opportunities to practice and refine the skill with helpful feedback from the faculty, and then set them up for success on the assessment.

Course grades and course completion are not appropriate for use in performance criteria. Capstone courses are excellent points to measure student learning.
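As an illustration of step E, the sketch below checks a performance criterion against a set of rubric scores. It assumes the criterion is stated as "at least a given share of sampled students score at level 2 or above"; the 70% target and the score list are hypothetical values chosen for the example, not CAC requirements.

```python
COMPETENT_LEVEL = 2  # "level two" on the rubric, as described above


def share_at_or_above(scores, level=COMPETENT_LEVEL):
    """Return the fraction of rubric scores at or above the given level."""
    if not scores:
        raise ValueError("no scores to evaluate")
    if any(s not in (0, 1, 2, 3) for s in scores):
        raise ValueError("rubric scores must be whole numbers from 0 to 3")
    return sum(1 for s in scores if s >= level) / len(scores)


def criterion_met(scores, target_share):
    """True when the share of competent scores reaches the target set in advance."""
    return share_at_or_above(scores) >= target_share


# Hypothetical scores for ten sampled artifacts.
example_scores = [3, 2, 2, 1, 2, 0, 3, 2, 1, 2]
print(share_at_or_above(example_scores))    # 7 of 10 artifacts are at level 2 or 3
print(criterion_met(example_scores, 0.70))  # hypothetical 70% target
```

Fixing the target before data collection, as the text requires, means `target_share` is an input decided in the plan rather than something tuned after the scores are known.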

F. Sampling: Identify a sampling strategy for each measure. The sampling decision informs the collection plan for your assessment activities and will help to ensure that data collection is structured. It is not necessary to select a statistically representative student sample, although you may choose to do so. At CAC, if 200 students typically enroll in the target course each term, a sample of 15% or 30 students across all sections would serve as a representative sample. Likewise, a high enrollment course of over 800 students could rely on a sample of 100 students. It is important that you collect and analyze data from a group of students that is reasonably representative of the group of program majors about whom inferences will be drawn. You should not focus on Honors sections; nor should you systematically exclude them.

As you plan your sampling strategy, think about the number of students who will be included in the data collection and the number of faculty involved. Also consider the complexity of the data and the time required of the faculty to assess the student artifact. The purpose of assessment is to provide information about the knowledge/skills of students. Describe your sampling and the number of students included as of the assessment date (this number may vary from the initial number of students enrolled on day one or day 45).

Suggestions for identifying and documenting a Sampling Strategy:

1. The sampling strategy statement should reflect a before-the-fact decision about how you will select a reasonably representative group of program students AND answer the following questions:

a. How many students will be included in the data collection?

b. Who are those students? (ID number, degree major/certificate)

c. What timeframe best fits securing artifacts demonstrating competency: data collection week?

d. Other unique parameters: multiple modalities, some students incarcerated, some accelerated sections.

2. Sampling facilitates the assessment process when programs have large numbers of students and it is not feasible to assess all students and evaluate the artifacts. CAC recommends assessors choose a sample based on a percentage of total students enrolled in the course as well as artifact size and complexity. To determine a sample size, consider:

The number of students enrolled in the target course that semester

Calculate 10%: For example, if 300 students are enrolled in all sections, assess 10% or 30 students drawn from a Random Sampling across a variety of sections (representative of the whole).
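The sample-size arithmetic above can be sketched as a small function. It assumes the rule "take the stated percentage of enrollment, but never fewer than 30 students and never more than are enrolled"; the function name and the way the percentage and minimum are combined are illustrative assumptions, since this Guide quotes both 10% and 15% in different places.

```python
def sample_size(enrollment, percent=10, minimum=30):
    """Suggest a sample size from total enrollment across all sections.

    Assumption for illustration: use `percent` of enrollment (rounded up),
    raise it to `minimum` if it falls short, and cap it at enrollment.
    """
    if enrollment < 0:
        raise ValueError("enrollment cannot be negative")
    by_percent = (enrollment * percent + 99) // 100  # integer ceiling
    return min(enrollment, max(minimum, by_percent))


print(sample_size(300))              # 10% of 300
print(sample_size(200, percent=15))  # 15% of 200
print(sample_size(120))              # 10% would be 12, so the minimum applies
print(sample_size(20))               # fewer than 30 enrolled: assess everyone
```

Integer arithmetic is used for the percentage so that the result does not depend on floating-point rounding.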

Types of Sampling

Simple Random: Randomly select a certain number of students/artifacts.

Stratified: Sort students into homogeneous groups, then select a random sample from each group. This is useful when some groups are underrepresented.

Systematic: Select every Nth artifact from an organized list (for example, every 7th).

Cluster: Randomly select clusters/groups and evaluate the assignments of all the students in those randomly selected clusters/groups.
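The four sampling types can be sketched in a few lines using Python's standard `random` module. The roster layout (student ID paired with a section code), the function names, and the seed are hypothetical choices made for this example.

```python
import random


def simple_random(roster, n, rng):
    """Simple random: pick n students, each with an equal chance, no repeats."""
    return rng.sample(roster, n)


def systematic(roster, step):
    """Systematic: take every step-th entry of an ordered list (7th, 14th, ...)."""
    return roster[step - 1::step]


def stratified(roster, group_of, n_per_group, rng):
    """Stratified: sort students into groups, then sample randomly within each."""
    groups = {}
    for student in roster:
        groups.setdefault(group_of(student), []).append(student)
    picked = []
    for name in sorted(groups):
        members = groups[name]
        picked.extend(rng.sample(members, min(n_per_group, len(members))))
    return picked


def cluster(roster, group_of, n_clusters, rng):
    """Cluster: randomly pick whole groups and keep every student in them."""
    names = sorted({group_of(s) for s in roster})
    chosen = set(rng.sample(names, n_clusters))
    return [s for s in roster if group_of(s) in chosen]


def section_of(student):
    return student[1]


# Made-up roster: 300 students spread evenly over 10 sections.
roster = [(f"ID{i:04d}", f"SEC{i % 10:02d}") for i in range(300)]
rng = random.Random(2012)  # fixed seed so the draw can be repeated

print(len(simple_random(roster, 30, rng)))
print(len(systematic(roster, 10)))
print(len(stratified(roster, section_of, 3, rng)))
print(len(cluster(roster, section_of, 1, rng)))
```

With this roster each approach yields 30 students, but they differ in who is eligible: the stratified draw guarantees every section is represented, while the cluster draw evaluates one whole section.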


Steps A through F culminate in your Assessment Plan. Congratulations!

ASSESSMENT PROCESS STEPS:

1. Courses selected to assess through Lottery system (February)

2. Academic Leadership informed of Courses drawn in Lottery (February)

3. Instructional Team notified by Director of Student Learning Assessment (after instructors finalized)

4. Course Assessment Leader (CAL) selected at first meeting or based on experience

5. CAL attends training

6. Course Assessment Team (CAT) meets: review GEO Course Map, Institutional GEO Rubric and course assignments/exams to select best artifact of student learning

7. CAT identifies existing course student artifact/assignment/exam or creates 2 exam questions

8. CAT determines random sample (30 or 10% of total enrollment), testing parameters and timeline

9. CAT Instructors grade the assignment/exam then submit with student ID # to CAL (black out names and photocopy); BB courses may use EAC or follow a different process for encoding authentic student ID # on artifact

10. CAT Evaluation team of 3 reviews rubric, scores 2 student samples, and shares results and rationale to norm evaluation scores

11. Two evaluators score student work using GEO Rubric; third evaluator determines the score if no consensus

12. Raw scores are reported by CAL to IPR using the GEO Raw Data Submission form and Cover Sheet

13. IPR uses SPSS to analyze Raw Data and returns a Data Report to CAL for team analysis of results

14. CAL, Instructional Team, cross-discipline colleagues and students review the results and available reports to identify one key improvement to help students learn

15. Team meets to write analysis report, to identify CQIs, to vote on improvement for implementation, to create an implementation plan, to identify and request essential resources, and to write GEO Assessment Executive Report

16. CAL shares report with GEO Subcommittee chair and Division chair then submits approved Report to C&SLA Director

17. C&SLA posts Reports to BB: Evidence of Student Success site and emails CAC community

18. C&SLA Director forwards reports to Accreditation and Quality Initiatives Director with results, CQI support needed, and resources needed

19. CAC Community acts on and supports proposed improvements to student learning

20. Reports are formatted for HLC, Assessment Academy, AQIP, Quality Matters, CAC and others

21. GEO Results impact Program Review, Operational Plans, Budget Requests, program initiatives, curriculum modifications and pedagogical modifications

22. CELEBRATE! Recognition of Successes by CAC

23. Continue the GEO Assessment Process Cycle


COLLECT DATA


You have completed the complex and time-consuming work of planning your assessment activities. The outcomes, measures, performance criteria, and sampling strategies you identified were both the data collection plan for this phase of your assessment work as well as the foundation for the interpretation and decision-making phases that follow.

Your team should decide, in advance, who will serve as the Course Assessment Leader and be responsible for data collection and submission to Institutional Planning and Research (IPR). Will a support person coordinate meetings, assemble materials, and deliver data packets? CAC commonly uses Excel spreadsheets to record student scores and to analyze data. Depending upon your assessment instrument, the spreadsheet may be simple or complex, i.e. designed to capture results from several different types of assessments. Be careful! Keep it simple.

Your assessment plan points to the specific courses and timeframes in the term when students have developed knowledge/skills and experience applying that knowledge. The assignments/exams should measure student learning near the conclusion of the course. Be sure to notify instructors well in advance of the collection point so that this important step will not be overlooked. Timing and preparation are essential.

The Course Assessment Leader serves as the point person for collection of all artifacts and ensures student data is removed except for the CAC student identification number. A Norming Session or refresher on how to use the various instruments occurs before the actual assessment of the student work. Using the agreed-upon Scoring Data Collection form customized for the General Education Outcome Standards and types of assessments conducted, the Institutional Rubric, and a sample artifact, each member of the team of at least three scores the artifact individually. All scores must be whole numbers on the Institutional Rubric: 0, 1, 2, or 3.

Norming:

Each evaluator uses a sticky note to record the artifact score and attaches it to the back of the page. If multiple questions are scored, include the question number and the score on the sticky note. The second evaluator scores the artifact before viewing the score from evaluator one. After the first two samples have been scored by both evaluators, they convene to share and discuss their scores and the rationale behind determining each score.

After norming has occurred, the evaluators score all the artifacts for the GEO Standard(s). If this becomes a lengthy process, take a break every hour and Norm another student sample to fine tune evaluators’ scoring judgments.

If the artifact is used for assessment of two or more GEO Objectives, the results are recorded and the CAL delivers the artifact set to the next CAL so that the second team of evaluators may conduct their evaluation.


Immediately after scoring occurs, the Course Assessment Leader completes the GEO Raw Data Submission Cover Sheet and submits the Raw Data Submission form with the assessment scores to IPR: ipr.ipr@centralaz.edu. Be sure to include all students’ ID numbers on the GEO Raw Data Submission form.

Scoring Sample (with team of three evaluators):

Evaluator One: score of 2

Evaluator Two: score of 1

Meet to discuss and reach consensus. If no consensus achieved, Evaluator Three scores the artifact.

Evaluator Three: score of 1

All three evaluators meet and attempt to reach consensus. If no consensus achieved, average the scores.

Total score value (2 + 1 + 1) = 4

Total divided by number of evaluators: 4/3 = 1.33

The overall score is rounded to the closest whole number, either down if less than .5 or up if .5 or higher, thus the student work would be given a score of “1” (one).
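The averaging fallback in the Scoring Sample can be sketched as follows. This covers only the last step, when the evaluators have met and could not reach consensus; the function name is hypothetical. Note that Python's built-in `round()` rounds exact halves to the nearest even number, so the sketch uses integer arithmetic to apply the "up if .5 or higher" rule stated above.

```python
def averaged_score(scores):
    """Average the evaluators' rubric scores and round half up to a whole number."""
    if not scores:
        raise ValueError("at least one evaluator score is required")
    if any(s not in (0, 1, 2, 3) for s in scores):
        raise ValueError("rubric scores must be whole numbers from 0 to 3")
    total, count = sum(scores), len(scores)
    # floor(total / count + 0.5), computed with integers to avoid float error
    return (2 * total + count) // (2 * count)


print(averaged_score([2, 1, 1]))  # 4/3 = 1.33, rounds down to 1
print(averaged_score([2, 2, 1]))  # 5/3 = 1.67, rounds up to 2
print(averaged_score([2, 3]))     # 2.5 rounds up to 3 (round(2.5) would give 2)
```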

IMPORTANT: Save all General Education Outcome Assessment related e-records, evaluation and analysis documents, and reports on a CAC share drive and on your jump drive.


INTERPRET RESULTS

In the data interpretation phase of your assessment work, program faculty will use the resulting Institutional Planning and Research Raw Data Report to further analyze their students’ learning data. The assessment data collected during the fall, scored by faculty experts, and processed by IPR led to this essential step. While reviewing the data, ask whether the scores achieved on the General Education Outcome assessment captured your students/program graduates’ knowledge/skill level. If the scores match your expectation, explain why. If they don’t, determine the factors that impacted the results and how those factors may be mitigated or eliminated to help improve student learning. Focus on each learning outcome and Standard. Typically, the IPR data results will require significant mining by the experts to find meaningful answers to key questions they pose about their students’ learning. This phase of evaluating the IPR Data Report and analyzing the data results within the context of the course leads to the action phase: the most important part of program assessment. This is the point where program faculty determine what the assessment data means and make decisions about how to use that information to improve student learning.

The Evaluation Team should review the IPR Data Report as soon as possible, ideally in January. Note that CAC uses only whole number scores to chart student progress toward GEO competency (a score of 2). However, your team will wish to note incremental improvement over time, i.e. pre- to post-test improvements, or review previous benchmark data to gauge improvement over a longer period of time based on the quality improvement measures implemented.

Remember, the purpose of assessment is not to penalize programs that may not have met all their outcomes or to reward those who did. The purpose is to provide an honest and accurate look at where we believe our students fully meet our learning expectations, where we’ve identified room for improvement, and the strategies we’ve identified to improve student learning.

The professional judgment of faculty is essential to interpreting the data. There is no “right” answer. The important thing is for program faculty to interpret the data about student learning and determine whether students have satisfactorily demonstrated the knowledge/skill of the MSLO and the GEO.

Qualitative Data

Determine which factors may have contributed to the findings or results you reported. Provide a brief discussion of components of the course/program which led to student strengths or to student challenges in learning. Were there pockets of students who seemed more challenged? What specific shared characteristics may have contributed to this? What does the data tell you about student learning in this course/program? You may discuss a recent course change that you believe helped to improve student learning related to the GEO. You may focus on aspects of the course which are particularly strong and should be highlighted. You might also believe that the assessment measure(s) and instrument(s) used were well-suited to the GEO and provide high-quality information.

Conversely, if you are dissatisfied with the data results, i.e. the data seem disconnected from accepted, standardized instructional methods and support structures, explain that weakness and provide a process solution. Perhaps the performance criteria did not really capture your students’ knowledge/skill. Are there foundational concepts or theories that students did not adequately apply near the end of the course? If so, at what point in the curriculum could that content have been more strongly emphasized? Was a standardized test used as one of your measures insufficiently related to your curriculum/MSLOs? Was the rubric set at an unrealistically high level? Program faculty, as the experts on the curriculum, are best suited to judge why student learning for the outcome did not meet expectations.


ACT ON RESULTS

How will program faculty use the assessment results? After the Evaluation Team reviews the assessment data and identifies likely factors that led to the observed results, this information should be used to make curriculum, assessment, or other program-related decisions aimed at the improvement of student learning.

Share your results with the respective Division, discuss your suggestions for improvement and solicit their ideas too. Jointly, determine the changes to be implemented during the coming year to improve student learning and provide a rationale for those changes. If these changes require budgetary restructuring, state those requirements.

If the assessment data indicates that course completers possess the GEO knowledge/skill expected, program faculty may determine that they have nonetheless identified opportunities for improvement in course content, instructional methods, assessment processes, or other program components that will be implemented during the next assessment cycle. Or they may conclude that the target number of students have achieved competency and no further action is needed at this time. At that point, they may decide to focus on a new assessment area such as creation of a capstone course.


If the data indicate that course completers do not possess the knowledge/skill of the GEO, program faculty should examine the factors they believe contributed to this result and identify any corrective measures to be taken, including:

Addition of course content

Addition of tutorials, assignments, or other learning activities designed to reinforce learning

Changes to the course sequence or prerequisites, and/or better alignment of the program curriculum

Require more time on task

Others, as identified by the program faculty


REPORT

Your Annual Assessment Report is due May 1st. At the conclusion of your Interpretation of the Results and initial vetting within the division, prepare an Executive Summary report using the GEO Assessment Executive Report Template (found on the C&SLA website and on Blackboard under: Evidence of Student Success). A sample is included in the Appendix on p.51.

Update your assessment plan to indicate the outcomes, measures, and performance criteria for the next assessment cycle. Assess your plan and your suggestions for improving student learning to build your next plan. When planning for assessment which may occur one or more years in the future, it is important to consider changes that may not produce results in the next cycle. Consider the case of a capstone project used to measure student learning on one or more GEOs. Program faculty may identify some weakness of students’ knowledge/skill on one of the Standards and implement instructional strategies in a foundation course where the concept is first introduced. If program majors typically take that foundation course in their first year, it may actually take the average student several years to reach the capstone project where the effect of that change can be measured.

Adequate planning by program faculty can avoid confusion about outcomes and measures to be considered across subsequent cycles. Staggered results serve to reinforce the iterative nature of assessment, as well as the need to study student learning across the full span of the curriculum.

Data Repository

When you are ready to submit documents and reports to the Assessment Repository (BB: Evidence of Student Success), please email your documents to the C&SLA office: Jennie.Voyce@centralaz.edu. The Assessment Repository includes the following:

Best Practices

Forms

Assessment Glossary of Terms

Meeting Minutes

Reports

Rubrics


GEO Assessment Process: Step-By-Step for Course Assessment Teams

A. Establish GEO Assessment Team members

Select evaluation team (3 content experts) and determine roles (for example, 1 Course Assessment Leader, 2 assessors)

Meet with Division Assessment Mentor

Select Course Assessment Leader: collector of student artifacts, raw data submitter, lead report writer

Determine training needs, set goals, meeting dates, and deadlines

Confirm selected target course points of assessment, instrument, methods, sample, align GEO objectives and standard(s) to MSLOs

B. Refer to CAC GEO Assessment Standardized Processes and Reports: Blackboard Evidence of Student Success Site or call 520-494-5911 or 5215 (C&SLA office)

B1. Review Existing Assessments

a. Evaluate instruments, process steps, student sampling methods, evaluation team expertise levels with GEO assessment

b. Consider the breadth and depth of data needed

c. Review results and determine data gaps or areas where additional data is needed to determine trends

B2. GEO Map the course (check ACRES to determine if this step is complete)

a. Align MSLOs with GEOs using GEO Map to determine assessable GEOs in the target course (If no GEO Map exists, submit one in ACRES using Expedited GEO form.)

b. Identify currently assessed GEOs and review results

B3. Stakeholder Analysis:

Collect or review information from stakeholders including Advisory Boards, Student Exit data, Employer data, Community/County focus group data, and Transfer Student success data (ASSIST).

a. Determine stakeholders’ needs

Internal - students, colleagues

External - employers, receiving institutions of higher education

b. Coordinate with GEO Subcommittee and division/department to determine GEO focus for target course

c. During the mapping process, modify course MSLOs if needed to better reflect teaching practices and content taught (submit in ACRES through standard curriculum process)

d. Refine Rubric

i. Adapt Institutional GEO Rubric to course and assessment instrument, if needed

e. Select Assessment Instrument (existing course artifact, specific test questions, paper, presentation, pre- and post-test. Share the GEO artifact with GEO colleagues who may be able to also use the artifact for their assessment.)

i. Improve tools, i.e. determine if question results in student demonstrating desired knowledge/skills (reword question if needed; document all improvements)

ii. Align questions to course MSLOs and GEO Standards

f. Define rubric terminology (if not already included in BB Glossary of Assessment Terms)

i. Submit new definitions to C&SLA to upload to BB Glossary

g. Select sample: course, sections, faculty, total student sample size

i. Communicate plan to all instructors who will administer tests or gather artifacts (Student artifacts may be projects/papers already required for the course which, after they are graded for the course, are also used for General Education Outcome Assessment. Some artifacts may be used to determine multiple Standards of student learning, such as Informational Literacy, Writing, and Critical Thinking.)

1. Random Sample: Based on a course with combined enrollment in all sections of 100 students, select every 3rd student on the rosters (roughly one-third of students) and collect 30 artifacts or samples of student work.

2. Samples represent: all CAC students, all sites, all instructors and a variety of modalities

C. Standardize assessment timeline/cycles

Pre-tests administered same day/week in all sections

Implement standardized guidelines for conducting tests/gathering artifacts

D. Gather artifacts (after grading is completed for the course)

E. Collect Data

A. Conduct GEO Evaluation

i. Collect data at various agreed-upon points of a student’s progress through the curriculum. Identify specific points where measures of student learning will occur.

ii. Alert appropriate course and program faculty regarding data collection and activities for which they are responsible.

Identify point person to send originals to C&SLA or make copies and send them to C&SLA

C&SLA will expunge/black out names and all identifiers

C&SLA will send originals back to point person to return to instructor(s) in a brown envelope labeled with instructor’s name, course title, section #

F. Evaluation Team Training:

A. Determine and discuss competency criteria (Level 2)

Conduct initial score Norming session (repeat as needed every hour)

Score two artifacts, discuss how/why score was determined based on the rubric

If two scores differ widely, use third evaluator to determine final score.

G. Submit GEO Raw Data form with scores to Institutional Planning and Research office for processing: ipr.ipr@centralaz.edu

H.

Institutional Planning and Research analyzes data and returns Data Report to Course Assessment Leader

A.

Submit Raw Data Scores to IPR using Raw Data Submission form; be sure to include student CAC identification number.

B.

Course Assessment Leader will receive Data Report from IPR.

C.

Course Assessment Leader calls a meeting to include program faculty and division chair to review results and to consider what information about student learning they yield.

D.

Based on the data collected, the IPR report, and further data analysis, the faculty determine where the opportunities lie to make changes that can improve student learning. What has worked particularly well that can be reinforced or expanded? What assessment changes can be made to yield better information about student learning?

Look for trends

Consider areas to improve

Provide rationale for conclusions: qualitative data

Decide what to implement

Create report: GEO Assessment Executive Report with statistics and analysis, including next steps and how the data will be used to support improvement decisions

I.

Benchmarking

The IPR office is responsible for Benchmarking: creating an internal benchmark report of graduating student cohorts, tracking individual student learning over time, gauging improvements in student learning from term to term, determining overall success in achieving CAC General Education Outcomes goals, and creating external benchmarks with peer institutions to determine how well CAC graduates are prepared for their future career pathways.

J.

Report processes, analysis, and results following standardized processes

Submit the GEO Assessment Executive Report to the SLA Director. Summarize the data collected on each outcome and standard, explain what the data tell you about student learning, and the actions which will be taken as a result.

Prepare reports for HLC/Assessment Academy, Accreditors and CAC

Due every six months (January and June)

Use standard reporting of statistical/qualitative data

Give monthly minutes and updates to C&SLA office for posting to “Evidence of Student Success” Blackboard site

Provide the CAC Assessment Committee and the GEO Executive Subcommittee with monthly reports on:

Project status

Results with statistics and narrative

Evidence of Student Learning

Results used to make decisions: Identify improvements to support greater Student Learning

Process improvement recommendations: embed in Operational Plans when appropriate, implement, and monitor results

K.

Assess, refine, and streamline processes:

Eliminate non-value added steps (simplify, eliminate redundancy)

Document and report assessment and process improvement(s)

Standardize cyclical, ongoing assessment(s)

Determine time, instrument(s), and population(s)

Use results to improve student learning

Thank you!

Our scholarly assessment of student learning contributes to the long-term success of our graduates and our College!

Appendix A

Sample Forms with Instructions

[Figure: assessment process steps: Plan Assessment, Collect Data, Interpret Results, Act on Results, Report]

Download the following forms from the Curriculum and Student Learning Assessment website:

Student Learning Assessment Tools: http://www.centralaz.edu/Home/About_Central/Curriculum_Development/Student_Learning_Assessment_Tools_and_Forms.htm

Expedited GEO Map Attachment Form in ACRES

GEO Program Map Sample: GEO Program/Degree/Certificate Map Template

GEO Course Map Sample: GEO Course Map Template

CAC Institutional GEO Rubric: http://www.centralaz.edu/Home/About_Central/Curriculum_Development/Student_Learning_Assessment_Tools_and_Forms.htm

Raw Data Submission Cover Sheet

GEO Raw Data Submission Form

Data Report Sample (generated by IPR based on the Raw Data Submission)

GEO Assessment Executive Report

GEO Assessment Executive Report Sample (created after initial data analysis)

Expedited GEO Map Attachment Form

In ACRES, under MY FORMS, select Expedited GEO Map Attachment. Use the drop-down box on the far right to select Department, then enter the course prefix, number, and title.

Expedited GEO Map Attachment

* Department:

* Prefix:

* Number:

* Title:

* General Education Outcomes: Check all relevant GEOs: All; Communication; Critical Thinking and Analytical Reasoning; Cultural and Artistic Heritage; Individual and Social Responsibility; Informational/Technological Literacy; Mathematical/Scientific Inquiry and Analysis

* GEO Map: Complete a GEO Course or Program Map Form (download form here). Choose the appropriate form and save it to your hard drive or USB flash drive. Fill it out, resave it and attach the completed form by clicking on "Add Attachment" below.

Add Attachment: Document, Document Name, File Type *

Attach your GEO Map and submit. ACRES approval chain includes Division Chair.

GEO Program Map: GEO Program/Degree/Certificate Map Template

Note: The Corrections A.A.S. Degree includes the core courses as well as the AGEC courses.

For additional GEO Mapping instructions, see GEO Course Map Step-by-step at: http://www.centralaz.edu/Home/About_Central/Curriculum_Development/Student_Learning_Assessment_Tools_and_Forms.htm

GEO Course Map: GEO Course Map Template

Note: Each GEO Objective is now numbered and the Standards below each are lettered A through F.

Institutional GEO Rubric:

Institutional GEO Rubric

Note: Each GEO Objective is now numbered and the Standards below each are lettered A through F.

GEO RAW DATA SUBMISSION COVER SHEET

Instructions: The Course Assessment Leader completes one GEO Raw Data Submission cover sheet for all sections of the course. Submit only the Raw Data score agreed upon by the Evaluation Team for each student and standard.

Submit the cover sheet and all GEO Raw Data forms to ipr.ipr@centralaz.edu. Keep a copy for your records.

(Check pages 12-13 of the GEO Assessment Process Guide for the deadlines.)

GEO Assessment Course Assessment Leader Name and Contact Information

Date Submitted to Institutional Planning and Research

GEO Objective(s)

GEO Standard(s)

Course Prefix and Number

Course Title

Number of CRNs Assessed

CRN(s)

Term (ex: Fall 2013)

Date of Assessment in course

Number of Assessors

Names of Assessors

Number of Students Assessed

Number of Students in Sample

CAC Institutional GEO Rubric F.2011 Used?

Number of Artifact Evaluators

Names of Evaluators

Instrument/Artifact Type Legend

1 = Final Exam

2 = Midterm Exam

3 = Paper

4 = Pre-Test

5 = Post-Test

6 = Presentation

7 = Project

8 = Quiz

9 = Research Paper

10 = Other (write in):

SPECIAL NOTES:

GEO ASSESSMENT RAW DATA SUBMISSION FORM *

Course Prefix/Number

Course Title

CRNs

Total Students Enrolled in all CRNs

Number of Students in Assessment Sample

GEO Objective

GEO Standard**

Specify AGEC(s)

Student Artifact Title

Student Artifact Type***

Semester/Year

Class Assessment Date

Submitted By

Phone/Email

Form Completion Date

*Instructions

1. Submit one form per course.

2. For additional Standards, use additional tabs; double-click a tab to retitle it.

3. Enter the total number of students enrolled in all course sections and the number of students included in the sample (30 students or 10% of total enrolled).

4. Course Assessment Leader submits all forms to IPR, ipr.ipr@centralaz.edu and cc: mary.menzel@centralaz.edu.

5. Refer to Institutional GEO Rubric on C&SLA website to review GEO Standards score descriptions.

6. Authentic Student ID Numbers are required. (Do not use generic Bb ID numbers.)

7. For multiple Standards assessed, you may copy cells 4c through 18c and paste them into additional tabs B, C, D, etc. However, be sure to update the Standard and artifact assessed.

8. For questions, call the Curriculum and Student Learning Assessment Office at (520) 494-5593 or 5215. Improvement suggestions are welcome.

Student ID    Score    Notes
880XXXXXX     2        See example above…

Data Report Sample

**Institutional GEO Standard Code

Numbers refer to Objectives; letters refer to Standards.

1A: Writing Structure, Organization & Awareness

1B: Writing Conventions

1C: Effective Reading

1D: Spoken Disclosure & Nonverbal Messages

1E: Listening & Comprehension

2A: Analysis of Issues

2B: Development & Evaluation

2C: Assessment

3A: Cultural Literacy

3B: Global Perspective

3C: Artistic Heritage

4A: Personal Integrity & Core Values

4B: Personal Well-Being

4C: Perspectives of Others

4D: Contribute to Larger Community

5A: Access of Information

5B: Evaluation of Information

5C: Use of Information

5D: Knowledge of Technology

5E: Impact of Technology

5F: Use of Technology

6A: Scientific Concepts

6B: Scientific Methods

6C: Scientific Principles

6D: Mathematical Model Development

6E: Mathematical Computation

6F: Mathematical Interpretation
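
Because each code pairs an Objective number with a Standard letter, a submitted code can be checked before it is entered on the Raw Data form. The Python sketch below is one possible way to do that; the function name `parse_standard_code` and the lookup table name are hypothetical, and the letter ranges are taken only from the code list above.

```python
# Highest Standard letter listed for each Objective in the code list above.
LAST_STANDARD = {1: "E", 2: "C", 3: "C", 4: "D", 5: "F", 6: "F"}


def parse_standard_code(code):
    """Split a code such as '5C' into (objective_number, standard_letter).

    Raises ValueError if the code does not appear in the list above.
    """
    cleaned = code.strip().upper()
    if len(cleaned) != 2 or not cleaned[0].isdigit() or not cleaned[1].isalpha():
        raise ValueError(f"Not a GEO Standard code: {code!r}")
    objective, standard = int(cleaned[0]), cleaned[1]
    last = LAST_STANDARD.get(objective)
    if last is None or not ("A" <= standard <= last):
        raise ValueError(f"Unknown GEO Standard code: {code!r}")
    return objective, standard


print(parse_standard_code("5c"))  # Objective 5, Standard C (Use of Information)
```

A code outside the listed ranges, such as "2D", raises a `ValueError` in this sketch rather than being silently accepted.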

***Instrument/Artifact Type Legend

1 = Final Exam

2 = Midterm Exam

3 = Paper

4 = Pre-Test

5 = Post-Test

6 = Presentation

7 = Project

8 = Quiz

9 = Research Paper

10 = Other (write in):

GEO Assessment Executive Report

Date Submitted:

Course and Title:

Term and Year of Assessment:

GEO Outcome(s) and Standard(s) Assessed:

Division/Program:

Report Author/CAL and Contact Information (email and phone):

Assessment Activities (Explain the assessment method. Make sure to include the following: course(s) in which artifacts were collected; artifact(s) type/kind; when and how the artifacts were collected; sample size; training method of data collection; evaluation rubrics; other information specific to your actual process.):

Level of Faculty Engagement (Names and number of faculty submitting assessment artifacts and participating in assessment activities. Also indicate whether faculty are full-time or part-time):