Dissertation Defense Multiple Alternative Sentence Compressions


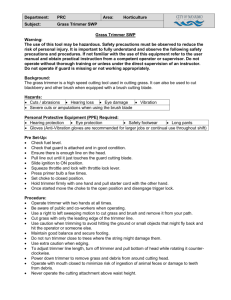

University of Maryland College Park
Department of Computer Science
Dissertation Defense

Multiple Alternative Sentence Compressions as a Tool for Automatic Summarization Tasks
David Zajic
November 28, 2006

Talk Roadmap
• Problem Description
• Contributions and Hypotheses
• Automatic Summarization under MASC framework
  – Single Document Summarization and Evaluation
    • HMM Hedge, Trimmer, Topiary
  – Extension to Multi-document Summarization
• Collaboration and Novel Genres
• Summary and Conclusion
• Future Work

Problem Description
• Automatic Summarization
  – Distillation of important information from a source into an abridged form

Problem Description: Abstractive vs. Extractive
• Abstractive Summarization: new text generated by the summarizer
• Extractive Summarization: select sentences with important content from the document
• Challenges of Extractive Summarization
  – Sentences contain mixture of relevant, nonrelevant information
  – Sentences partially redundant to rest of summary

Problem Description: Summarization Tasks
• Single Document Summarization
  – Very short: Headline Generation
  – Single sentence
  – 75 characters
• Query-focused Multi-Document Summarization
  – Multiple sentences
  – 100 to 250 words

Problem Description: Headline Generation
• Newspaper Headlines
  – Natural example of human summarization
  – Three criteria for a good headline:
    • Summarize a story
    • Make people want to read it
    • Fit in specified space
  – Headlinese: compressed form of English

Problem Description: Headline Types
• Eye-Catcher
  – Under God Under Fire
• Indicative
  – Pledge of Allegiance
• Informative
  – U.S. Court Decides Pledge of Allegiance Unconstitutional

Problem Description: Sentence Compression
• Selecting words in order from a sentence
  – Or window of words
• Feasibility Studies
  – Humans can almost always do this for written news
  – Bias for words from within a single sentence
  – Bias for words early in document

Problem Description: Sentence Compression
• Single-candidate
  – Generates single compression of sentence
• Multi-candidate
  – Generates multiple compressions of sentence
  – Schizophrenia patients whose medication couldn't stop the imaginary voices in their heads gained some relief after researchers repeatedly sent a magnetic field into a small area of their brains.
  – Schizophrenia patients gained some relief after researchers repeatedly sent magnetic field into small area of brains.
  – Schizophrenia patients gained some relief.
  – researchers repeatedly sent magnetic field into small area of brains

Problem Description: Potential of Compression
• Sentence compression can reduce size of sentences while preserving relevance.
• Subject was shown 103 sentences, relevant to 39 queries, asked to make relevance judgments on 430 compressed versions
• Potential for 16.7% reduction by word count, 17.6% reduction by characters, with no loss of relevance

Problem Description: Single Document
• A newspaper editor was found dead in a hotel room in this Pacific resort city a day after his paper ran articles about organized crime and corruption in the city government. The body of the editor, Misael Tamayo Hernández, of the daily El Despertar de la Costa, was found early Friday with his hands tied behind his back in a room at the Venus Motel…

Problem Description: Single Document (alternative headlines for the lead sentence)
• Newspaper editor found dead in Pacific resort city
• Paper ran articles about corruption in government
• Hernández, Zihuatanjo: Newspaper editor found dead in Pacific resort city
• Newspaper Editor Killed in Mexico
  – (A) Newspaper Editor (was) killed in Mexico

Problem Description: Multi-Document
• Gunmen killed a prominent Shiite politician
• The killing of a prominent Shiite politician by gunmen appeared to have been a sectarian assassination.
• The killing appeared to have been a sectarian assassination.
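Several of the compressions in the Problem Description are formed purely by selecting words in order from the source sentence. The following Python sketch checks that property for a candidate. The function names and the tokenization (lowercasing, letters, digits, and apostrophes only) are assumptions made for illustration, and the sketch ignores the morphological variation that the compression methods allow.

```python
import re


def tokenize(text):
    """Lowercase the text and keep runs of letters, digits, and apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())


def is_in_order_selection(candidate, source):
    """Return True if the candidate's words appear in the source in the same order."""
    remaining = iter(tokenize(source))
    # `tok in remaining` consumes the iterator up to the first match,
    # so later words can only match later positions in the source.
    return all(tok in remaining for tok in tokenize(candidate))


source = (
    "Schizophrenia patients whose medication couldn't stop the imaginary "
    "voices in their heads gained some relief after researchers repeatedly "
    "sent a magnetic field into a small area of their brains."
)

print(is_in_order_selection("Schizophrenia patients gained some relief.", source))
print(is_in_order_selection("relief gained patients", source))
```

The first call prints `True` and the second prints `False`, because the second candidate reuses source words out of order.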
Automatic Evaluation of Summarization
• Recall-Oriented Understudy for Gisting Evaluation (Rouge) (Lin 2004)
  – Rouge Recall: ratio of matching candidate n-gram count to reference n-gram count
• Rouge parameters for high correlation with human judgments (Lin 2004)
  – Rouge with unigrams (R1) for headline generation
  – Rouge with bigrams (R2) for multi-sentence summaries (single- and multi-document)

Contributions
• Multiple Alternative Sentence Compressions (MASC) Summarization Framework
• Sentence compression methodologies
  – HMM Hedge
  – Trimmer
• Topiary Headline Generation

Contributions: MASC
• Framework for Automatic Text Summarization
• Generation of many compressions of source sentences to serve as candidates
• Select from candidates using weighted features to generate summary
• Environment for testing hypotheses

Contributions: Sentence Compression
• Underlying technique: select words in order, with morphological variation
• HMM Hedge (Headline Generation). Statistical method, uses language models to mimic Headlinese
• Trimmer. Syntactic method, uses syntactic parse-and-trim rules to mimic Headlinese

Contributions: Topiary
• Headline Generation technique
  – Combines fluent text with topic terms
  – Highest scoring system in Document Understanding Conference 2004 (DUC2004)
    • Most recent evaluation of headline generation

Hypotheses
1. Extractive summarization systems can achieve higher Rouge scores by using larger pools of candidates.
2. Extractive summarization systems can achieve higher Rouge scores by giving the sentence selector appropriate sets of features.
3. For Headline Generation, a combination of fluent text and topics is better than either alone.

General Architecture
[Diagram: Document → Sentence Selection (Lead Sentence or First N Sentences) → Sentences → Sentence Compression (HMM Hedge, Trimmer, Topiary) → Candidates → Candidate Selection (Feature Weights, Maximal Marginal Relevance) → Summary]

Sentence Selection
• Select sentences to be compressed
• Single document: Lead sentence
  – First non-trivial sentence of document
• Multi-document: First 5 sentences of each document

HMM Hedge Architecture
[Diagram: a Sentence, Verb Tags from a Part-of-speech Tagger, a Headline Language Model, and a General Language Model feed HMM Hedge, which outputs Candidates]

HMM Hedge: Noisy Channel Model
• Underlying method: select words in order
• Sentences are observed data
• Headlines are unobserved data
• Noisy channel adds words to headlines to create sentences (Knight and Marcu 2000, 2002; Banko et al. 2000; Turner and Charniak 2005)
• Headline: President signed legislation
• Sentence: On Tuesday the President signed the controversial legislation at a private ceremony

HMM Hedge: HMM for Generating Stories from Headlines
• H states emit headline words
• G states emit non-headline words
• Path through HMM corresponds to a headline

HMM Hedge
$$\arg\max_H P(H \mid S) = \arg\max_H \frac{P(S \mid H)\, P(H)}{P(S)}$$
$$P(H) \approx P(h_1 \mid \mathrm{start})\, P(h_2 \mid h_1) \cdots P(\mathrm{end} \mid h_m)$$
$$P(S \mid H) \approx P(g_1)\, P(g_2) \cdots P(g_n)$$

HMM Hedge
• Model parameters used to mimic Headlinese
  – Penalty for clumps of contiguous words
  – Penalty for large gaps between clumps
  – Word position penalty
• Viterbi decoder constraints
  – Require a verb
  – Constrain word length

HMM Hedge: Multi-candidate Compression
• Calculate best compression at each word length from 5 to 15 words
• Calculate 5-best compressions
• 5 x 11 = 55 compressions per sentence

HMM Hedge: Examples
• Schizophrenia patients whose medication couldn't stop the imaginary voices in their heads gained some relief after researchers repeatedly sent a magnetic field into a small area of their brains.
• Schizophrenia couldn't stop their heads (5)
• Schizophrenia couldn't stop in their heads some relief after researchers (10)
• Schizophrenia patients whose medication couldn't stop the voices in their heads some relief after researchers (15)

HMM Hedge: Candidate Selection
• Linear combination of features
  – Bigram probability of headline, P(H)
  – Unigram probability of non-headline words, P(S|H)
  – Number of clumps
  – Length of headline in words, chars

[Figure: HMM Hedge Sentence Selection. Rouge 1 by selected sentence (Sent 1 to Sent 5).]

[Figure: HMM Hedge Sentence Boundary vs Word Window. Rouge 1 by window size (1 sentence; 10, 20, 30, 40, 50, 60 words).]

[Figure: HMM Hedge Multi-Candidate. Rouge 1 by N-best candidates (1-best to 5-best).]

Trimmer Architecture
[Diagram: a Sentence goes to a Parser (Parses) and an Entity Tagger (Entity Tags); both feed Trimmer, which outputs Candidates]

Trimmer
• Underlying method: select words in order
• Parse and Trim
• Rules come from study of Headlinese
  – Some syntactic structures are far less common in headlines than in story sentences.

| Phenomenon | Headlines | Lead Sent |
|---|---|---|
| Preposed adjunct | 0% | 2.7% |
| Conjoined VP | 3% | 27% |

[Figure: Trimmer: Mask operation]

[Figure: Trimmer: Mask Outside]

Trimmer Algorithm
• Find all instances of applicable Trimmer rules
• Apply rule instances one at a time until the desired length is reached

Trimmer Rule: Root S
• Select the lowest leftmost S which has NP and VP children, in that order.
• [S [S [NP Rebels] [VP agree to talks with government]] officials said Tuesday.]
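The Root S rule above can be sketched in Python as a search over a bracketed parse. This is a minimal illustration, not the Trimmer implementation: the tuple-based tree encoding and the function names are assumptions, and "lowest leftmost" is interpreted here as the deepest qualifying S node, with ties broken in favor of the leftmost.

```python
# A parse node is (label, children); each child is a node or a word string.


def leaves(node):
    """Flatten a node into its words, left to right."""
    if isinstance(node, str):
        return [node]
    _, children = node
    return [word for child in children for word in leaves(child)]


def has_np_then_vp(children):
    """True if an NP child is followed (not necessarily adjacently) by a VP child."""
    labels = [child[0] for child in children if not isinstance(child, str)]
    return "NP" in labels and "VP" in labels[labels.index("NP") + 1:]


def find_root_s(tree):
    """Return the deepest S with NP and VP children; leftmost wins on ties."""
    best = (-1, None)

    def visit(node, depth):
        nonlocal best
        if isinstance(node, str):
            return
        label, children = node
        # Strict '>' keeps the first (leftmost) node found at a given depth.
        if label == "S" and has_np_then_vp(children) and depth > best[0]:
            best = (depth, node)
        for child in children:
            visit(child, depth + 1)

    visit(tree, 0)
    return best[1]


sentence = ("S", [
    ("S", [
        ("NP", ["Rebels"]),
        ("VP", ["agree", "to", "talks", "with", "government"]),
    ]),
    "officials", "said", "Tuesday.",
])

root = find_root_s(sentence)
print(" ".join(leaves(root)))
```

On the slide's example the outer S has no NP child of its own, so the inner S is chosen and the attribution "officials said Tuesday." is dropped.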
Trimmer Rule: Preposed Adjunct (Preamble Rule)
• Remove [YP …] preceding first NP inside chosen S
• [S [PP According to a now-finalized blueprint described by U.S. officials and other sources] [NP the Bush administration] [VP plans to take complete, unilateral control of a post-Saddam Hussein Iraq]]

Trimmer Rule: Conjunction
• From [X][CC][X], remove either [X][CC] or [CC][X]
• [S Illegal fireworks [VP [VP injured hundreds of people] [CC and] [VP started six fires.]]]
• [S A company offering blood cholesterol tests in grocery stores says [S [S medical technology has outpaced state laws,] [CC but] [S the state says the company doesn’t have the proper licenses.]]]

Multi-candidate Trimmer
• After each rule application, current state of parse is a candidate
• Multi-candidate Trimmer rules
  – Root S
  – Preamble
  – Conjunction

Multi-candidate Trimmer Rule: Root-S
• [S1 [S2 The latest flood crest, the eighth this summer, passed Chongqing in southwest China], and [S3 waters were rising in Yichang, in central China’s Hubei province, on the middle reaches of the Yangtze], state television reported Sunday.]
• Single-candidate version would choose only S2. Multi-candidate Root-S generates all three choices.
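A rough Python sketch of the multi-candidate Root-S idea follows: rather than committing to one S node, every S with NP and VP children becomes a candidate. The tree encoding and function names are hypothetical. The slide gives only the S1/S2/S3 bracketing, so the NP/VP splits below are assumed, and the sentence is abbreviated.

```python
# A parse node is (label, children); each child is a node or a word string.


def words(node):
    """Flatten a node into its words, left to right."""
    if isinstance(node, str):
        return [node]
    _, children = node
    return [w for child in children for w in words(child)]


def has_np_then_vp(children):
    """True if an NP child is followed by a VP child."""
    labels = [child[0] for child in children if not isinstance(child, str)]
    return "NP" in labels and "VP" in labels[labels.index("NP") + 1:]


def root_s_candidates(node):
    """Yield the text of every S with NP and VP children, outermost first."""
    if isinstance(node, str):
        return
    label, children = node
    if label == "S" and has_np_then_vp(children):
        yield " ".join(words(node))
    for child in children:
        yield from root_s_candidates(child)


# Abbreviated version of the flood-crest sentence; NP/VP splits are assumed.
s2 = ("S", [("NP", ["The", "latest", "flood", "crest"]),
            ("VP", ["passed", "Chongqing"])])
s3 = ("S", [("NP", ["waters"]),
            ("VP", ["were", "rising", "in", "Yichang"])])
s1 = ("S", [s2, "and", s3,
            ("NP", ["state", "television"]),
            ("VP", ["reported", "Sunday"])])

candidates = list(root_s_candidates(s1))
for text in candidates:
    print(text)
```

Each of the three S nodes yields one candidate, which then competes in candidate selection instead of being discarded up front.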
Multi-candidate Trimmer Rule: Preposed Adjunct
[Figure: Trimmer: Preamble Rule]

Multi-candidate Trimmer Rule: Conjunction
• [S Illegal fireworks [VP [VP injured hundreds of people] [CC and] [VP started six fires.]]]
• Illegal fireworks injured hundreds of people
• Illegal fireworks started six fires

Trimmer Candidate Selection
• Baseline LUL: select longest version under limit
• Linear Combination of Features
  – L: Length in characters or words
  – R: Counts of rule applications
  – C: Centrality

[Figure: Trimmer: Multi-candidate Rule. Number of candidates generated by multi-candidate rule set (None, R, P, C, R,P, R,C, P,C, R,P,C).]

[Figure: Trimmer: Candidate Counts. Rouge 1 by candidate count (5000 to 14000).]

[Figure: Trimmer: Candidate Rules. Rouge 1 by candidate selection feature set (LUL, L, R, C, LR, LC, RC, LRC), one series per multi-candidate rule set (No Multi-Candidate, R, P, C, R,P, R,C, P,C, R,P,C).]

[Figure: Trimmer: Candidate Selection Features. Rouge 1 by multi-candidate rule set (None, R, P, C, R,P, R,C, P,C, R,P,C), one series per feature set (LUL, L, R, C, LR, LC, RC, LRC).]

Topiary
• Combines topic terms and fluent text
  – Fluent text comes from Trimmer
  – Topics come from Unsupervised Topic Detection (UTD)
  – High-scoring system at DUC2004
    • Most recent large-scale evaluation of Headline Generation systems

Topiary Architecture
[Diagram: a Sentence goes to a Parser (Parses) and an Entity Tagger (Entity Tags); the Document goes to Topic Assignment (Topic Terms); all feed Topiary, which outputs Candidates]

Topiary: Single-candidate Algorithm
1. Adjust length threshold to make room for highest scoring non-redundant topic term
   • “Osama”, 75 char → 69 char
2. While fluent text above adjusted length threshold
   1. Apply a Trimmer rule
   2. Adjust length threshold to make room for highest scoring non-redundant topic term
3. Fill remaining space with topic terms

[Figure: Topiary: DUC2004. Rouge 1 for DUC2004 submitted systems and peers, with Humans, Topiary, and Baseline marked.]

[Figure: DUC2004: First 75, Topiary, Trimmer, UTD. Rouge score by Rouge metric (Rouge 1 to Rouge 4) for First 75 chars, Topiary, Trimmer, and UTD.]

Topiary: Multi-candidate Algorithm
• Generate Multi-candidate Trimmer candidates
• Generate Topic Terms
• Combine each Trimmer candidate with each Topic Term. Fill empty space with all combinations of Topic Terms

Topiary: Multi-candidate Examples
• Document APW19990519.0113 Topics
  – STUDY 1.168
  – SCHIZOPHRENIA 0.315
  – BRAIN 0.144
  – DOCTORS 0.074
• Schizophrenia patients whose medication couldn't stop the imaginary voices
• STUDY Schizophrenia patients whose medication couldn't stop the imaginary v
• DOCTORS STUDY BRAIN Schizophrenia patients gained some relief.

Topiary Candidate Selection
• Linear Combination of Features
  – L: Length in characters or words
  – R: Counts of rule applications
  – C: Centrality
  – T: Topic counts, sum of scores

[Figure: Topiary DUC2004 Evaluation. Rouge score by Rouge metric (Rouge 1 to Rouge 4) for First 75 chars, Trimmer, UTD, Topiary, and MC Topiary.]

Multi-Document Summarization: Candidate Selection
• Maximal Marginal Relevance (MMR) (Carbonell and Goldstein, 1998)
1. Score all candidates with linear combination of static and dynamic features
2. While summary not full
   1. Add highest-scoring candidate to summary
   2. Remove other compressions of its source sentence from the pool
   3. Recalculate dynamic features, rescore candidates

Multi-Document Summarization: Candidate Selection Features
• Static Features
  – Sentence Position
  – Relevance
  – Centrality
  – Compression-specific features
• Dynamic Features
  – Redundancy
  – Count of summary candidates from source document

Relevance and Centrality
• Candidate Query Relevance: Matching score between candidate and query
• Document Query Relevance: Lucene similarity score between document and query
• Candidate Centrality: Average Lucene similarity of candidate to other sentences in document
• Document Centrality: Average Lucene similarity of document to other documents in cluster

Redundancy: Weighted Word Overlap
$$P(w \mid S) = \frac{\mathrm{count}(w, S)}{\mathrm{size}(S)} \qquad P(w \mid G) = \frac{\mathrm{count}(w, G)}{\mathrm{size}(G)}$$
• redundancy(C, S) is computed over the words $w \in C$ from the per-word term $\lambda P(w \mid S) + (1 - \lambda) P(w \mid G)$
• λ estimates ratio of topic-specific words in summary to size of summary.
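The selection loop and the redundancy feature above can be sketched together in Python. This is an illustration under stated assumptions, not the MASC implementation: the function names, the negative redundancy weight, and the word-count limit are hypothetical, and because the operator that combines the per-word terms is not recoverable from the slide text, the sketch simply sums them.

```python
from collections import Counter


def redundancy(candidate, summary_words, general_counts, lam=0.5):
    """Sum over candidate words of lam*P(w|S) + (1-lam)*P(w|G).

    ASSUMPTION: the per-word terms are summed; the slide's operator is unknown.
    """
    summary_counts = Counter(summary_words)
    summary_size = len(summary_words)
    general_size = sum(general_counts.values())
    total = 0.0
    for w in candidate:
        p_summary = summary_counts[w] / summary_size if summary_size else 0.0
        p_general = general_counts[w] / general_size if general_size else 0.0
        total += lam * p_summary + (1 - lam) * p_general
    return total


def select(candidates, static_scores, general_counts, max_words,
           redundancy_weight=-1.0, lam=0.5):
    """Greedy selection: rescore with the dynamic feature after every pick."""
    pool = dict(candidates)  # candidate id -> (source sentence id, words)
    chosen, summary_words = [], []
    while pool:
        def score(cid):
            return static_scores[cid] + redundancy_weight * redundancy(
                pool[cid][1], summary_words, general_counts, lam)

        best = max(pool, key=score)
        source, words = pool[best]
        if len(summary_words) + len(words) > max_words:
            del pool[best]  # does not fit; drop it and try the next best
            continue
        chosen.append(best)
        summary_words.extend(words)
        # Remove the other compressions of the same source sentence.
        pool = {cid: c for cid, c in pool.items() if c[0] != source}
    return chosen


# Hypothetical toy data: general-language counts, candidates, static scores.
general_counts = Counter({"the": 6, "killed": 2, "politician": 1, "gunmen": 1})
candidates = {
    "a1": ("a", ["gunmen", "killed", "politician"]),
    "a2": ("a", ["gunmen", "killed"]),
    "b1": ("b", ["politician", "killed"]),
    "c1": ("c", ["storm", "hit", "coast"]),
}
static_scores = {"a1": 2.0, "a2": 1.0, "b1": 1.5, "c1": 1.4}

selected = select(candidates, static_scores, general_counts, max_words=6)
print(selected)
```

In this toy run `b1` has a higher static score than `c1`, but once `a1` is in the summary the overlap penalty pushes `b1` below `c1`, so the result is `['a1', 'c1']`.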
[Figure: DUC 2006 Evaluation. Rouge score by Rouge metric (R1 Recall, R2 Recall) for Trimmer and HMM.]

Collaboration under MASC
• University of Maryland and Institute for Defense Analyses Center for Computing Sciences (IDA/CCS) collaborated on a submission to DUC2006
• Sentence compression: Trimmer, CCS shallow compression
• Sentence selection features: Relevance, Centrality, Omega Estimated Oracle Score

Collaboration under MASC
• University of Maryland, Carnegie Mellon University and IBM collaborated on GALE Rosetta Team Distillation Evaluation
• Trimmer was a component of the system for generating snippets

Broadcast News
• 560 broadcast news transcripts
  – ABC, CNN, NBC, PRI, VOA
  – January to June 1998, October to December 2000
  – Transcribed by BBN’s BYBLOS Large Vocabulary Continuous Speech Recognition (Colthurst et al. 2000)
• Baseline (first 75), Multi-candidate Trimmer, Multi-candidate Topiary

[Figure: Broadcast News Evaluation. Rouge 1 by system (Baseline, Trimmer, Topiary).]

[Figure: Broadcast News Trimmer Feature Sets. Rouge 1 by feature set (LUL, L, R, C, LR, LC, RC, LRC) for First 1 and First 5.]

Hypothesis No. 1
• Extractive summarization systems can achieve higher Rouge scores by using larger pools of candidates.
  – HMM Hedge, Single-document. Rouge-1 recall increases as number of candidates increases
  – Trimmer, Single-document. Rouge-1 increases with greater use of multi-candidate rules
  – Topiary, Single-document. Multi-candidate Topiary scores significantly higher for Rouge 2 than single-candidate Topiary.

Hypothesis No. 2
• Extractive summarization systems can achieve higher Rouge scores by giving the sentence selector appropriate sets of features.
  – Trimmer, Single-document. Rouge-1 increases with larger set of features

Hypothesis No. 3
• For Headline Generation, combination of fluent text and topics better than either alone
  – Topiary scored significantly higher than Trimmer and UTD

Contributions
• Multiple Alternative Sentence Compressions Summarization Framework
• Sentence compression methodologies
  – HMM Hedge
  – Trimmer
• Topiary Headline Generation
• Use of MASC framework improves performance across summarization tasks and compression sources
• Fluent and informative summaries can be constructed by selecting words in order from sentences. Verified by a human study.
• Headlines combining fluent text and topic terms score better than either alone

Future Work
• Enhance redundancy score with paraphrase detection (Ibrahim et al. 2003, Shen et al. 2006)
• Anaphora resolution in candidates (LingPipe tools)
• Expand candidate pool by sentence merging (Jing & McKeown 2000)
• Sentence ordering in multi-sentence summaries (Radev 1999, Barzilay 2002, Lapata 2003, Okazaki 2004, Conroy et al. 2006)

End

Feasibility Study
• Three subjects
• 56 AP newswire stories from TIPSTER corpus
• Construct headlines by selecting words in order from the stories
• Task could be done for 53 prose stories, not for 3 non-prose stories
• Only 7 went beyond 60th word

Feasibility Study
• Two subjects
• 73 AP newswire stories from TIPSTER corpus
• Construct headlines by selecting words in order from the stories, allowing morphological variation

[Figure: HMM Hedge. Rouge 1 scores by N-best at each length (1 to 5); series: R1 Recall and R1 Precision for 1, 2, and 3 sentences.]

HMM Hedge
• Features of the linear combination scoring function (5-fold cross validation), given as (default weight, optimized weight for fold A)
  – Word position sum (-0.05, 1.72)
  – Small gaps (-0.01, 1.02)
  – Large gaps (-0.05, 3.70)
  – Clumps (-0.05, -0.17)
  – Sentence position (0, -945)
  – Length in words (1, 42)
  – Length in characters (1, 85)
  – Unigram probability of story words (1, 1.03)
  – Bigram probability of headline words (1, 1.51)
  – Emit probability of headline words (1, 3.60)

HMM Hedge: Multi-Document Summarization
[Diagram: Document, Headline Language Model, General Language Model, and Verb Tags from a Part of Speech Tagger feed HMM Hedge, which outputs Candidates with HMM Features; URA, using the URA Index and an optional Query, adds URA Features; Selection applies Feature Weights to produce the Summary]

Topiary Evaluation

| Rouge Metric | Topiary | Multi-Candidate Topiary |
|---|---|---|
| Rouge-1 Recall | 0.25027 | 0.26490 |
| Rouge-2 Recall | 0.06484 | 0.08168* |
| Rouge-3 Recall | 0.02130 | 0.02805 |
| Rouge-4 Recall | 0.00717 | 0.01105 |
| Rouge-L | 0.20063 | 0.22283* |
| Rouge-W1.2 | 0.11951 | 0.13234* |

Redundancy: Intuition
• Consider a summary about earthquakes
• “Generated” by topic: Earthquake, seismic, Richter scale
• “Generated” by general language: Dog, under, during
• Sentences with many words “generated” by the topic are redundant

Trimmer

| System | R1 Recall | R1 Prec. | R2 Recall | R2 Prec. |
|---|---|---|---|---|
| Trimmer MultiDoc | 0.38198 | 0.37617 | 0.08051 | 0.07922 |
| HMM MultiDoc | 0.37404 | 0.37405 | 0.07884 | 0.07887 |

Evaluation
• Human extrinsic evaluation of HMM, Trimmer, Topiary and First 75
• LDC agreement: ~20x increase in speed. Some loss of accuracy.
• Relevance Prediction
• Baseline First 75 char, hard to beat
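The Rouge recall figures reported in the tables above follow the definition given earlier in the talk: the ratio of matching candidate n-gram count to reference n-gram count. A minimal Python sketch of that ratio is shown here. It is a simplification, not the official Rouge toolkit: it assumes a single reference, whitespace tokenization, and clipped counts, and treating "Newspaper Editor Killed in Mexico" as the reference headline is an assumption made for the example.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate, reference, n=1):
    """Matching n-grams (clipped by candidate counts) over reference n-grams."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, cand[gram]) for gram, count in ref.items())
    return matched / total


reference = "Newspaper Editor Killed in Mexico"
candidate = "Newspaper editor found dead in Pacific resort city"

r1 = rouge_n_recall(candidate, reference, n=1)
r2 = rouge_n_recall(candidate, reference, n=2)
print(r1, r2)
```

Here three of the five reference unigrams and one of the four reference bigrams appear in the candidate, giving 0.6 and 0.25.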