part8-graphs

advertisement

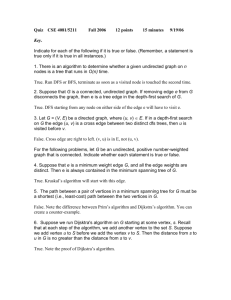

CSE 326: Data Structures

Part 8

Graphs

Henry Kautz

Autumn Quarter 2002

Outline

• Graphs (TO DO: READ WEISS CH 9)
• Graph Data Structures
• Graph Properties
• Topological Sort
• Graph Traversals

– Depth First Search

– Breadth First Search

– Iterative Deepening Depth First

• Shortest Path Problem

– Dijkstra’s Algorithm

Graph ADT

Graphs are a formalism for representing

relationships between objects

– a graph G is represented as

G = (V, E)

• V is a set of vertices

• E is a set of edges

– operations include:
• iterating over vertices
• iterating over edges
• iterating over vertices adjacent to a specific vertex
• asking whether an edge exists connecting two vertices

Example (vertices Han, Luke, Leia):
V = {Han, Leia, Luke}
E = {(Luke, Leia), (Han, Leia), (Leia, Han)}

What Graph is THIS?

ReferralWeb

(co-authorship in scientific papers)

Biological Function Semantic Network

Graph Representation 1:

Adjacency Matrix

A |V| x |V| array in which an element (u, v)

is true if and only if there is an edge from u to v

[Figure: adjacency matrix with rows and columns labeled Han, Luke, Leia]

Runtime:
• iterate over vertices
• iterate over edges
• iterate edges adj. to vertex
• edge exists?

Space requirements:

Graph Representation 2:

Adjacency List

A |V|-ary list (array) in which each entry stores a

list (linked list) of all adjacent vertices

[Figure: adjacency list with entries for Han, Luke, Leia]

Runtime:
• iterate over vertices
• iterate over edges
• iterate edges adj. to vertex
• edge exists?

Space requirements:
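The two representations can be compared side by side. The following is a minimal sketch, not code from the course: it reuses the Han/Leia/Luke example from the Graph ADT slide, and the helper names (`build_matrix`, `build_adj_list`, `has_edge_matrix`, `has_edge_list`) are made up for illustration.

```python
# Vertices and edges from the Graph ADT example; all function names are hypothetical.
vertices = ["Han", "Leia", "Luke"]
edges = [("Luke", "Leia"), ("Han", "Leia"), ("Leia", "Han")]
index = {v: i for i, v in enumerate(vertices)}  # vertex name -> row/column number


def build_matrix(vertices, edges):
    """|V| x |V| table: matrix[u][v] is True iff there is an edge u -> v."""
    n = len(vertices)
    matrix = [[False] * n for _ in range(n)]
    for u, v in edges:
        matrix[index[u]][index[v]] = True
    return matrix


def build_adj_list(vertices, edges):
    """One list per vertex holding the vertices it has an edge to."""
    adj = {v: [] for v in vertices}
    for u, v in edges:
        adj[u].append(v)
    return adj


matrix = build_matrix(vertices, edges)
adj = build_adj_list(vertices, edges)


def has_edge_matrix(u, v):
    return matrix[index[u]][index[v]]  # a single array lookup


def has_edge_list(u, v):
    return v in adj[u]  # scans only u's own list
```

The matrix always stores |V|² entries, while the lists store one entry per edge, which is why the list form tends to suit sparse graphs.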

Directed vs. Undirected Graphs

• In directed graphs, edges have a specific direction
[Figure: directed graph on Han, Luke, Leia]
• In undirected graphs, they don’t (edges are two-way)
[Figure: undirected graph on Han, Luke, Leia]
• Vertices u and v are adjacent if (u, v) ∈ E

Graph Density

A sparse graph has O(|V|) edges

A dense graph has Θ(|V|²) edges

Anything in between is either sparsish or densy depending on the context.

Weighted Graphs

Each edge has an associated weight or cost.

[Figure: ferry-route graph on Clinton, Mukilteo, Kingston, Edmonds, Bainbridge, Seattle, and Bremerton, with edge weights 20, 30, 35, and 60]

There may be more information in the graph as well.

Paths and Cycles

A path is a list of vertices {v1, v2, …, vn} such that (vi, vi+1) ∈ E for all 1 ≤ i < n.

A cycle is a path that begins and ends at the same

node.

[Figure: graph on Chicago, Seattle, Salt Lake City, San Francisco, and Dallas]

p = {Seattle, Salt Lake City, Chicago, Dallas, San Francisco, Seattle}

Path Length and Cost

Path length: the number of edges in the path

Path cost: the sum of the costs of each edge

[Figure: the city graph (Chicago, Seattle, Salt Lake City, San Francisco, Dallas) with edge costs between 2 and 3.5]

length(p) = 5

cost(p) = 11.5

Connectivity

Undirected graphs are connected if there is a path between

any two vertices

Directed graphs are strongly connected if there is a path from

any one vertex to any other

Directed graphs are weakly connected if there is a path

between any two vertices, ignoring direction

A complete graph has an edge between every pair of vertices

Trees as Graphs

• Every tree is a graph with

some restrictions:

– the tree is directed

– there are no cycles (directed

or undirected)

– there is a directed path from

the root to every node

[Figure: example graph on nodes A–J, with one edge marked “BAD!”]

Directed Acyclic Graphs (DAGs)

DAGs are directed graphs with no cycles.

If the program call graph is a DAG, then all procedure calls can be in-lined.

Trees ⊂ DAGs ⊂ Graphs

[Figure: call graph on main(), mult(), add(), access(), read()]

Application of DAGs:

Representing Partial Orders

[Figure: DAG of trip tasks: reserve flight, call taxi, pack bags, taxi to airport, check in airport, locate gate, take flight]

Topological Sort

Given a graph, G = (V, E), output all the vertices

in V such that no vertex is output before any other

vertex with an edge to it.

[Figure: DAG of trip tasks: reserve flight, call taxi, taxi to airport, pack bags, check in airport, take flight, locate gate]

Topo-Sort Take One

Label each vertex’s in-degree (# of inbound edges)
While there are vertices remaining
    Pick a vertex with in-degree of zero and output it
    Reduce the in-degree of all vertices adjacent to it
    Remove it from the list of vertices

runtime:

Topo-Sort Take Two

Label each vertex’s in-degree
Initialize a queue (or stack) to contain all in-degree zero vertices
While there are vertices remaining in the queue
    Remove a vertex v with in-degree of zero and output it
    Reduce the in-degree of all vertices adjacent to v
    Put any of these with new in-degree zero on the queue

runtime:
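The queue-based version above can be sketched in Python as follows. This is an illustrative sketch rather than the course's code: the function name `topo_sort` and the dictionary-of-lists graph format are assumptions, and the cycle check at the end is an addition that the pseudocode does not include.

```python
from collections import deque


def topo_sort(adj):
    """adj maps every vertex to the list of vertices it has an edge to."""
    # Label each vertex's in-degree.
    indegree = {v: 0 for v in adj}
    for v in adj:
        for w in adj[v]:
            indegree[w] += 1

    # Start with all in-degree-zero vertices.
    queue = deque(v for v in adj if indegree[v] == 0)
    order = []
    while queue:
        v = queue.popleft()
        order.append(v)
        for w in adj[v]:
            indegree[w] -= 1
            if indegree[w] == 0:
                queue.append(w)

    # Added check (not in the pseudocode): leftover vertices mean a cycle.
    if len(order) != len(adj):
        raise ValueError("graph has a cycle, so no topological order exists")
    return order
```

With adjacency lists, each vertex is enqueued once and each edge is examined once, so this sketch runs in O(|V| + |E|) time.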

Recall: Tree Traversals

[Figure: tree rooted at a, with nodes a–l]

Pre-order traversal: abfgkcdhilje

Depth-First Search

• Pre/Post/In – order traversals are examples of

depth-first search

– Nodes are visited deeply on the left-most branches

before any nodes are visited on the right-most branches

• Visiting the right branches deeply before the left would still be

depth-first! Crucial idea is “go deep first!”

• Difference in pre/post/in-order is how some computation (e.g.

printing) is done at current node relative to the recursive calls

• In DFS the nodes “being worked on” are kept on

a stack

Iterative Version DFS

Pre-order Traversal

Push root on a Stack
Repeat until Stack is empty:
    Pop a node
    Process it
    Push its children on the Stack
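A minimal Python sketch of the stack-based pre-order traversal follows. The name `preorder` and the `children` dictionary are assumptions; the example tree in the usage note is reconstructed from the queue trace on the Breadth-First Search slide, so treat its shape as inferred.

```python
def preorder(children, root):
    """Iterative pre-order traversal; children maps a node to its child list."""
    stack = [root]
    visited_order = []
    while stack:
        node = stack.pop()
        visited_order.append(node)  # "process" the node
        # Push children right-to-left so the left-most child is popped first.
        stack.extend(reversed(children.get(node, [])))
    return visited_order
```

For the tree `{"a": ["b","c","d","e"], "b": ["f","g"], "d": ["h","i","j"], "g": ["k"], "i": ["l"]}`, the result spells out `abfgkcdhilje`. Pushing the children in their natural order instead would still be depth-first, just right-to-left.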

Level-Order Tree Traversal

• Consider the task of traversing a tree level by level from top to bottom (alphabetic order)
• Is this also DFS?

[Figure: tree with nodes a–l, labeled in level order]

Breadth-First Search

• No! Level-order traversal is an example of Breadth-First

Search

• BFS characteristics

– Nodes being worked on maintained in a FIFO Queue, not a stack

– Iterative style procedures often easier to design than recursive

procedures

Put root in a Queue
Repeat until Queue is empty:
    Dequeue a node
    Process it
    Add its children to the queue

QUEUE (contents at each step, for the tree with nodes a–l):
a
bcde
cdefg
defg
efghij
fghij
ghij
hijk
ijk
jkl
kl
l

Graph Traversals

• Depth first search and breadth first search also work for

arbitrary (directed or undirected) graphs

– Must mark visited vertices so you do not go into an infinite

loop!

• Either can be used to determine connectivity:

– Is there a path between two given vertices?

– Is the graph (weakly) connected?

• Important difference: Breadth-first search always finds

a shortest path from the start vertex to any other (for

unweighted graphs)

– Depth first search may not!
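The points above can be sketched for an arbitrary graph as follows. This is an illustrative sketch: the function name `bfs_shortest_path` and the dictionary-of-lists graph format are assumptions. The `previous` dictionary doubles as the set of marked (visited) vertices.

```python
from collections import deque


def bfs_shortest_path(adj, start, goal):
    """Return a fewest-edges path from start to goal, or None if unreachable."""
    previous = {start: None}  # also serves as the "visited" marking
    queue = deque([start])
    while queue:
        v = queue.popleft()
        if v == goal:
            # Walk the previous-pointers back to the start.
            path = []
            while v is not None:
                path.append(v)
                v = previous[v]
            return path[::-1]
        for w in adj.get(v, []):
            if w not in previous:  # skip vertices that are already marked
                previous[w] = v
                queue.append(w)
    return None
```

Because vertices are marked when first enqueued, each vertex enters the queue at most once, even when the graph contains cycles.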

Demos on Web Page

DFS

BFS

Is BFS the Hands Down Winner?

• Depth-first search

– Simple to implement (implicit or explicit stack)

– Does not always find shortest paths

– Must be careful to “mark” visited vertices, or you could

go into an infinite loop if there is a cycle

• Breadth-first search

– Simple to implement (queue)

– Always finds shortest paths

– Marking visited nodes can improve efficiency, but even without doing so the search is guaranteed to reach the goal whenever one is reachable

Space Requirements

Consider space required by the stack or queue…

• Suppose

– G is known to be at distance d from S

– Each vertex n has k out-edges

– There are no (undirected or directed) cycles

• BFS queue will grow to size k^d

– Will simultaneously contain all nodes that are at

distance d (once last vertex at distance d-1 is expanded)

– For k=10, d=15, size is 1,000,000,000,000,000

DFS Space Requirements

• Consider DFS, where we limit the depth of the search

to d

– Force a backtrack at d+1

– When visiting a node n at depth d, stack will contain
• (at most) k-1 siblings of n
• parent of n
• siblings of parent of n
• grandparent of n
• siblings of grandparent of n …

• DFS stack grows at most to size dk

– For k=10, d=15, size is 150

– Compare with BFS 1,000,000,000,000,000
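The two figures can be checked with a few lines of arithmetic. This is only a sketch of the estimates on these slides (uniform out-degree k, goal at depth d, no cycles); the function names are made up.

```python
def bfs_queue_size(k, d):
    """All vertices at distance d sit in the queue at once: k**d."""
    return k ** d


def dfs_stack_size(k, d):
    """At most k entries (a node plus its siblings) per level, over d levels."""
    return d * k


k, d = 10, 15
bfs_size = bfs_queue_size(k, d)
dfs_size = dfs_stack_size(k, d)
```

The BFS figure grows exponentially in d while the DFS figure grows linearly, which is the whole argument for depth-limited search on very large graphs.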

Conclusion

• For very large graphs – DFS is hugely more

memory efficient, if we know the distance to the

goal vertex!

• But suppose we don’t know d. What is the

(obvious) strategy?

Iterative Deepening DFS

IterativeDeepeningDFS(vertex s, g){
    for (i=1;true;i++)
        if DFS(i, s, g) return;
}

// Also need to keep track of path found
bool DFS(int limit, vertex s, g){
    if (s==g) return true;
    if (limit-- <= 0) return false;
    for (n in children(s))
        if (DFS(limit, n, g)) return true;
    return false;
}
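A runnable Python sketch of the same idea is shown below, including the path bookkeeping that the comment above asks for. The names `depth_limited` and `iterative_deepening` are hypothetical, and the `max_limit` cap is an assumption added so that the sketch terminates when no goal is reachable; the pseudocode above loops forever in that case.

```python
def depth_limited(children, s, g, limit, path):
    """DFS from s that gives up below depth `limit`; records the path found."""
    path.append(s)
    if s == g:
        return True
    if limit > 0:
        for n in children.get(s, []):
            if depth_limited(children, n, g, limit - 1, path):
                return True
    path.pop()  # s is not on a path to g within this limit
    return False


def iterative_deepening(children, s, g, max_limit=50):
    """Try limits 0, 1, 2, ...; max_limit is an added safety cap (assumption)."""
    for limit in range(max_limit + 1):
        path = []
        if depth_limited(children, s, g, limit, path):
            return path
    return None
```

No vertices are marked as visited here: the depth limit alone keeps each pass finite, even on graphs with cycles.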

Analysis of Iterative Deepening

• Even without “marking” nodes as visited,

iterative-deepening DFS never goes into an

infinite loop

– For very large graphs, memory cost of keeping track of

visited vertices may make marking prohibitive

• Work performed with limit < actual distance to G

is wasted – but the wasted work is usually small

compared to amount of work done during the last

iteration

Asymptotic Analysis

• There are “pathological” graphs for which iterative deepening is bad:

[Figure: a single chain of n = d vertices from S to G]

Iterative Deepening DFS = 1 + 2 + 3 + … + n = O(n²)

BFS = O(n)

A Better Case

Suppose each vertex n has k out-edges, no cycles

• Bounded DFS to level i reaches k^i vertices

• Iterative Deepening DFS(d) = $\sum_{i=1}^{d} k^i = O(k^d)$

• BFS = $O(k^d)$

(ignore low order terms!)

(More) Conclusions

• To find a shortest path between two nodes in an unweighted graph, use either BFS or Iterated DFS

• If the graph is large, Iterated DFS typically uses

much less memory

– Later we’ll learn about heuristic search algorithms,

which use additional knowledge about the problem

domain to reduce the number of vertices visited

Single Source, Shortest Path for

Weighted Graphs

Given a graph G = (V, E) with edge costs c(e), and a vertex s ∈ V, find the shortest (lowest cost) path from s to every vertex in V

• Graph may be directed or undirected
• Graph may or may not contain cycles
• Weights may be all positive or not
• What is the problem if graph contains cycles whose total cost is negative?

The Trouble with

Negative Weighted Cycles

[Figure: weighted graph on vertices A–E with edge weights 2, -5, 1, 2, and 10]

Edsger Wybe Dijkstra

(1930-2002)

• Invented concepts of structured programming,

synchronization, weakest precondition, and "semaphores"

for controlling computer processes. The Oxford English

Dictionary cites his use of the words "vector" and "stack" in

a computing context.

• Believed programming should be taught without computers

• 1972 Turing Award

• “In their capacity as a tool, computers will be but a ripple on

the surface of our culture. In their capacity as intellectual

challenge, they are without precedent in the cultural history

of mankind.”

Dijkstra’s Algorithm for

Single Source Shortest Path

• Classic algorithm for solving shortest path in

weighted graphs (with only positive edge weights)

• Similar to breadth-first search, but uses a priority

queue instead of a FIFO queue:

– Always select (expand) the vertex that has a lowest-cost

path to the start vertex

– a kind of “greedy” algorithm

• Correctly handles the case where the lowest-cost

(shortest) path to a vertex is not the one with

fewest edges

Pseudocode for Dijkstra

Initialize the cost of each vertex to ∞
cost[s] = 0;
heap.insert(s);
While (! heap.empty())
    n = heap.deleteMin()
    For (each vertex a which is adjacent to n along edge e)
        if (cost[n] + edge_cost[e] < cost[a]) then
            cost[a] = cost[n] + edge_cost[e]
            previous_on_path_to[a] = n;
            if (a is in the heap) then heap.decreaseKey(a)
            else heap.insert(a)
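The pseudocode translates to Python roughly as follows. One substitution to note: Python's `heapq` module has no `decreaseKey`, so this sketch pushes a fresh entry whenever a cost improves and skips stale entries when they are popped. That is a common workaround, not the exact data structure described on the slide. The function name and the graph format (`adj[n]` is a list of `(neighbor, edge_cost)` pairs) are assumptions.

```python
import heapq


def dijkstra(adj, s):
    """Return (cost, previous) dictionaries for shortest paths from s.

    Assumes all edge costs are non-negative.
    """
    cost = {s: 0}
    previous = {s: None}
    heap = [(0, s)]
    known = set()  # vertices whose shortest-path cost is final
    while heap:
        c, n = heapq.heappop(heap)
        if n in known:
            continue  # stale entry left behind by an earlier improvement
        known.add(n)
        for a, w in adj.get(n, []):
            new_cost = c + w
            if new_cost < cost.get(a, float("inf")):
                cost[a] = new_cost
                previous[a] = n
                heapq.heappush(heap, (new_cost, a))  # stands in for decreaseKey
    return cost, previous
```

With this variant the heap may hold up to |E| entries, so the bound becomes O(|E| log |E|), which is still O(|E| log |V|) since |E| ≤ |V|².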

Important Features

• Once a vertex is removed from the heap, the cost of the shortest path to that node is known

• While a vertex is still in the heap, another shorter

path to it might still be found

• The shortest path itself from s to any node a can

be found by following the pointers stored in

previous_on_path_to[a]

Dijkstra’s Algorithm in Action

[Figure: weighted graph on vertices A–H, with a table (vertex / known / cost) to fill in for A through H]

Demo: Dijkstra’s

Data Structures

for Dijkstra’s Algorithm

|V| times: Select the unknown node with the lowest cost (findMin/deleteMin): O(log |V|)

|E| times: a’s cost = min(a’s old cost, …) (decreaseKey): O(log |V|)

runtime: O(|E| log |V|)

CSE 326: Data Structures

Lecture 8.B

Heuristic Graph Search

Henry Kautz

Winter Quarter 2002

Homework Hint - Problem 4

$(a + b) \bmod p = \big((a \bmod p) + (b \bmod p)\big) \bmod p$ (final mod in case the sum is ≥ p)

$(c\,(a \bmod p)) \bmod p = (c\,a) \bmod p$

Let $(b_k b_{k-1} b_{k-2} \ldots b_1)$ be the interpretation of a bit string as a binary number. Then:

$(b_k b_{k-1} b_{k-2} \ldots b_1) = b_k 2^{k-1} + (b_{k-1} b_{k-2} \ldots b_1)$

You can turn in a final version of your answer to

problem 4 without penalty on Wednesday.

Outline

• Best First Search

• A* Search

• Example: Plan Synthesis

• This material is NOT in Weiss, but is important for

both the programming project and the final exam!

Huge Graphs

• Consider some really huge graphs…

– All cities and towns in the World Atlas

– All stars in the Galaxy

– All ways 10 blocks can be stacked

Huh???

Implicitly Generated Graphs

• A huge graph may be implicitly specified by rules for

generating it on-the-fly

• Blocks world:

– vertex = relative positions of all blocks

– edge = robot arm stacks one block

[Figure: block configurations joined by edges labeled stack(blue,table), stack(green,blue), stack(blue,red), and stack(green,red)]

Blocks World

• Source = initial state of the blocks

• Goal = desired state of the blocks

• Path source to goal = sequence of actions

(program) for robot arm!

• n blocks ⇒ n^n vertices

• 10 blocks ⇒ 10 billion vertices!

Problem: Branching Factor

• Cannot search such huge graphs exhaustively.

Suppose we know that goal is only d steps away.

• Dijkstra’s algorithm is basically breadth-first

search (modified to handle arc weights)

• Breadth-first search (or for weighted graphs,

Dijkstra’s algorithm) – If out-degree of each node

is 10, potentially visits 10^d vertices

– 10 step plan = 10 billion vertices visited!

An Easier Case

• Suppose you live in Manhattan; what do you do?

[Figure: Manhattan street grid, 50th–52nd St by 2nd–10th Ave, with start S and goal G]

Best-First Search

• The Manhattan distance (Δx + Δy) is an estimate

of the distance to the goal

– a heuristic value

• Best-First Search

– Order nodes in priority to minimize estimated distance

to the goal h(n)

• Compare: BFS / Dijkstra

– Order nodes in priority to minimize distance from the

start

Best First in Action

• Suppose you live in Manhattan; what do you do?

[Figure: Manhattan street grid, 50th–52nd St by 2nd–10th Ave, with start S and goal G]

Problem 1: Led Astray

• Eventually will expand vertex to get back on the

right track

[Figure: Manhattan street grid, 50th–52nd St by 2nd–10th Ave, with start S and goal G]

Problem 2: Optimality

• With Best-First Search, are you guaranteed a

shortest path is found when

– goal is first seen?

– when goal is removed from priority queue (as with

Dijkstra?)

Sub-Optimal Solution

• No! Goal is by definition at distance 0: will be

removed from priority queue immediately, even if

a shorter path exists!

[Figure: street grid, 51st–52nd St by 4th–9th Ave, with S, G, a 5-block edge, and vertices labeled h=5, h=4, h=2, and h=1]

Synergy?

• Dijkstra / Breadth First guaranteed to find optimal

solution

• Best First often visits far fewer vertices, but may

not provide optimal solution

– Can we get the best of both?

A* (“A star”)

• Order vertices in priority queue to minimize

(distance from start) + (estimated distance to goal)

f(n) = g(n) + h(n)

f(n) = priority of a node

g(n) = true distance from start

h(n) = heuristic distance to goal

Optimality

• Suppose the estimated distance (h) is

always less than or equal to the true distance to the

goal

– heuristic is a lower bound on true distance

• Then: when the goal is removed from the priority

queue, we are guaranteed to have found a shortest

path!
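A minimal A* sketch on a street-grid-like graph is shown below. Everything in it is illustrative: `a_star` and `grid_neighbors` are made-up names, the grid with blocked cells is an invented example rather than the Manhattan figure from the slides, and stale heap entries are skipped instead of using `decreaseKey`. The Manhattan distance never overestimates the true distance on a unit-cost 4-connected grid, so it satisfies the lower-bound condition above.

```python
import heapq


def a_star(neighbors, h, start, goal):
    """neighbors(n) yields (m, edge_cost); h(n) must never overestimate."""
    g = {start: 0}  # best known distance from start
    previous = {start: None}
    heap = [(h(start), start)]  # ordered by f(n) = g(n) + h(n)
    while heap:
        f, n = heapq.heappop(heap)
        if f > g[n] + h(n):
            continue  # stale entry: a cheaper route to n was found later
        if n == goal:
            path = []
            while n is not None:
                path.append(n)
                n = previous[n]
            return g[goal], path[::-1]
        for m, w in neighbors(n):
            new_g = g[n] + w
            if new_g < g.get(m, float("inf")):
                g[m] = new_g
                previous[m] = n
                heapq.heappush(heap, (new_g + h(m), m))
    return None


def grid_neighbors(rows, cols, walls):
    """4-connected unit-cost grid; cells listed in `walls` are blocked."""
    def neighbors(cell):
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in walls:
                yield (nr, nc), 1
    return neighbors
```

Setting `h` to a function that always returns 0 turns this into Dijkstra's algorithm, which is one way to see A* as a generalization of it.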

Problem 2 Revisited

[Figure: street grid, 50th–52nd St by 4th–9th Ave, with S, G, and a 5-block edge on 52nd St]

vertex        g(n)  h(n)  f(n)
52nd & 9th    0     5     5

Problem 2 Revisited

[Figure: street grid, 50th–52nd St by 4th–9th Ave, with S, G, and a 5-block edge on 52nd St]

vertex        g(n)  h(n)  f(n)
52nd & 4th    5     2     7
51st & 9th    1     4     5

Problem 2 Revisited

[Figure: street grid, 50th–52nd St by 4th–9th Ave, with S, G, and a 5-block edge on 52nd St]

vertex        g(n)  h(n)  f(n)
52nd & 4th    5     2     7
51st & 8th    2     3     5
50th & 9th    2     5     7

Problem 2 Revisited

[Figure: street grid, 50th–52nd St by 4th–9th Ave, with S, G, and a 5-block edge on 52nd St]

vertex        g(n)  h(n)  f(n)
52nd & 4th    5     2     7
51st & 7th    3     2     5
50th & 9th    2     5     7
50th & 8th    3     4     7

Problem 2 Revisited

[Figure: street grid, 50th–52nd St by 4th–9th Ave, with S, G, and a 5-block edge on 52nd St]

vertex        g(n)  h(n)  f(n)
52nd & 4th    5     2     7
51st & 6th    4     1     5
50th & 9th    2     5     7
50th & 8th    3     4     7
50th & 7th    4     3     7

Problem 2 Revisited

[Figure: street grid, 50th–52nd St by 4th–9th Ave, with S, G, and a 5-block edge on 52nd St]

vertex        g(n)  h(n)  f(n)
52nd & 4th    5     2     7
51st & 5th    5     0     5
50th & 9th    2     5     7
50th & 8th    3     4     7
50th & 7th    4     3     7

Problem 2 Revisited

[Figure: street grid, 50th–52nd St by 4th–9th Ave, with S, G, and a 5-block edge on 52nd St]

vertex        g(n)  h(n)  f(n)
52nd & 4th    5     2     7
50th & 9th    2     5     7
50th & 8th    3     4     7
50th & 7th    4     3     7

DONE!

What Would Dijkstra Have

Done?

[Figure: street grid, 47th–52nd St by 4th–9th Ave, with S, G, and a 5-block edge on 52nd St]

Proof of A* Optimality

• A* terminates when G is popped from the heap.

• Suppose G is popped but the path found isn’t optimal:

priority(G) > optimal path length c

• Let P be an optimal path from S to G, and let N be the last vertex on that path that has been visited but not yet popped.

There must be such an N, otherwise the optimal path would have been found.

priority(N) = g(N) + h(N) ≤ c

• So N should have popped before G can pop. Contradiction.

[Figure: S, the non-optimal path to G, the portion of the optimal path found so far (ending at N), and the undiscovered portion of the shortest path]

What About Those Blocks?

• “Distance to goal” is not always physical distance

• Blocks world:

– distance = number of stacks to perform

– heuristic lower bound = number of blocks out of place

# out of place = 2, true distance to goal = 3

3-Blocks State Space Graph

[Figure: state-space graph of the 13 configurations of blocks A, B, C, each labeled with its heuristic value h (from h=0 at the goal to h=3); start and goal states marked]

3-Blocks Best First Solution

[Figure: the same 13-state graph with h values, showing the path found by best-first search from start to goal]

3-Blocks BFS Solution

[Figure: the same 13-state graph with h values, showing the BFS solution; legend: expanded, but not in solution]

3-Blocks A* Solution

[Figure: the same 13-state graph with h values, showing the A* solution; legend: expanded, but not in solution]

Other Real-World Applications

• Route finding – computer networks, airline

route planning

• VLSI layout – cell layout and channel routing

• Production planning – “just in time” optimization

• Protein sequence alignment

• Many other “NP-Hard” problems

– A class of problems for which no exact polynomial time algorithms are known, so heuristic search is the best we can hope for

Coming Up

• Other graph problems

– Connected components

– Spanning tree

CSE 326: Data Structures

Part 8.C

Spanning Trees and More

Henry Kautz

Autumn Quarter 2002

Today

• Incremental hashing
• MazeRunner project
• Longest Path?
• Finding Connected Components
– Application to machine vision
• Finding Minimum Spanning Trees
– Yet another use for union/find

Incremental Hashing

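$$h(a_1 \ldots a_n) = \Big(\sum_{i=1}^{n} c^{\,n-i} a_i\Big) \bmod p$$

$$\begin{aligned}
h(a_2 \ldots a_{n+1}) &= \Big(\sum_{i=2}^{n+1} c^{\,n+1-i} a_i\Big) \bmod p \\
&= \Big(a_{n+1} + \sum_{i=2}^{n} c^{\,n+1-i} a_i\Big) \bmod p \\
&= \Big(a_{n+1} - c^{n} a_1 + c^{n} a_1 + \sum_{i=2}^{n} c^{\,n+1-i} a_i\Big) \bmod p \\
&= \Big(a_{n+1} - c^{n} a_1 + c \sum_{i=1}^{n} c^{\,n-i} a_i\Big) \bmod p \\
&= \Big((a_{n+1} \bmod p) - (c^{n} a_1 \bmod p) + c\,\Big(\sum_{i=1}^{n} c^{\,n-i} a_i \bmod p\Big)\Big) \bmod p \\
&= \big(a_{n+1} - c^{n} a_1 + c\,h(a_1 \ldots a_n)\big) \bmod p
\end{aligned}$$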
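The incremental update can be checked numerically against the direct definition. The sketch below assumes the hash is the polynomial h(a_1…a_n) = (Σ c^(n−i) a_i) mod p; the function names and the sample constants (c = 256, p = 1,000,003) are made up for illustration.

```python
def direct_hash(a, c, p):
    """h(a_1..a_n) = (sum of c**(n-i) * a_i) mod p, with a given as a list."""
    n = len(a)
    # a[i] is a_{i+1}, so its coefficient is c**(n - 1 - i).
    return sum(pow(c, n - 1 - i, p) * a[i] for i in range(n)) % p


def slide_hash(h, old, new, n, c, p):
    """Hash of the window shifted by one: drop `old` (a_1), append `new` (a_{n+1})."""
    # Python's % returns a non-negative result for positive p, so the
    # subtraction needs no extra correction here.
    return (new - pow(c, n, p) * old + c * h) % p


def rolling_hashes(a, n, c, p):
    """Hashes of every length-n window of a, computed incrementally."""
    h = direct_hash(a[:n], c, p)
    hashes = [h]
    for i in range(n, len(a)):
        h = slide_hash(h, a[i - n], a[i], n, c, p)
        hashes.append(h)
    return hashes
```

Each update costs O(1) arithmetic operations (given c^n mod p, which could be precomputed once), instead of the O(n) work of rehashing the whole window.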

Maze Runner

•DFS, iterated DFS, BFS, best-first, A*

•Crufty old C++ code from fresh clean Java code

•Win fame and glory by writing a nice real-time maze visualizer

[Figure: sample ASCII maze input with header line “20 15”, drawn with +, -, and | characters, with one cell marked * and one marked X]

Java Note

Java (prior to version 5) lacks enumerated constants…

enum {DOG, CAT, MOUSE} animal;

animal a = DOG;

Static constants not type-safe…

static final int DOG = 1;

static final int CAT = 2;

static final int BLUE = 1;

int favoriteColor = DOG;

Amazing Java Trick

public final class Animal {
    private Animal() {}
    public static final Animal DOG = new Animal();
    public static final Animal CAT = new Animal();
}

public final class Color {
    private Color() {}
    public static final Color BLUE = new Color();
}

Animal x = Animal.DOG;
Animal y = Color.BLUE; // Gives compile-time error!

Longest Path Problem

• Given a graph G=(V,E) and vertices s, t

• Find a longest simple path (no repeating vertices)

from s to t.

• Does “reverse Dijkstra” work?

Dijkstra

Initialize the cost of each vertex to ∞
cost[s] = 0;
heap.insert(s);
While (! heap.empty())
    n = heap.deleteMin()
    For (each vertex a which is adjacent to n along edge e)
        if (cost[n] + edge_cost[e] < cost[a]) then
            cost[a] = cost[n] + edge_cost[e]
            previous_on_path_to[a] = n;
            if (a is in the heap) then heap.decreaseKey(a)
            else heap.insert(a)

Reverse Dijkstra

Initialize the cost of each vertex to −∞
cost[s] = 0;
heap.insert(s);
While (! heap.empty())
    n = heap.deleteMax()
    For (each vertex a which is adjacent to n along edge e)
        if (cost[n] + edge_cost[e] > cost[a]) then
            cost[a] = cost[n] + edge_cost[e]
            previous_on_path_to[a] = n;
            if (a is in the heap) then heap.increaseKey(a)
            else heap.insert(a)

Does it Work?

[Figure: graph on vertices s, a, b, t with edge weights 5, 3, 6, and 1]

Problem

• No clear stopping condition!

• How many times could a vertex be inserted in the

priority queue?

– Exponential!

– Not a “good” algorithm!

• Is there a better one?

Counting Connected Components

Initialize the cost of each vertex to ∞
Num_cc = 0
While there are vertices of cost ∞ {
    Pick an arbitrary such vertex S, set its cost to 0
    Find paths from S
    Num_cc ++ }

Using DFS

Set each vertex to “unvisited”
Num_cc = 0
While there are unvisited vertices {
    Pick an arbitrary such vertex S
    Perform DFS from S, marking vertices as visited
    Num_cc ++ }

Complexity = O(|V|+|E|)
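The DFS-based count can be sketched as follows, assuming an undirected graph stored as a dictionary that lists every vertex's neighbors (each edge therefore appears in both endpoints' lists). The function name is hypothetical, and an explicit stack is used in place of recursion.

```python
def count_components(adj):
    """Count connected components of an undirected graph given as adjacency lists."""
    visited = set()
    num_cc = 0
    for s in adj:
        if s in visited:
            continue
        # s is an unvisited vertex: it starts a new component.
        num_cc += 1
        visited.add(s)
        stack = [s]
        while stack:
            v = stack.pop()
            for w in adj[v]:
                if w not in visited:
                    visited.add(w)
                    stack.append(w)
    return num_cc
```

Every vertex is marked once and every adjacency-list entry is scanned once, which matches the O(|V| + |E|) bound above.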


Using Union / Find

Put each node in its own equivalence class
Num_cc = 0
For each edge E = <x,y>
    Union(x,y)
Return number of equivalence classes

Complexity = O(|V|+|E| ack(|E|,|V|))

Machine Vision: Blob Finding

[Figure: image whose blobs are labeled 1 through 5]

Blob Finding

• Matrix can be considered an efficient

representation of a graph with a very regular

structure

• Cell = vertex

• Adjacent cells of same color = edge between

vertices

• Blob finding = finding connected components

Tradeoffs

• Both DFS and Union/Find approaches are

(essentially) O(|E|+|V|) = O(|E|) for binary images

• For each component, DFS (“recursive labeling”)

can move all over the image – entire image must

be in main memory

• Better in practice: row-by-row processing

– localizes accesses to memory

– typically 1-2 orders of magnitude faster!

High-Level Blob-Labeling

• Scan through image left/right and top/bottom
• If a cell is same color as (connected to) cell to right or below, then union them
• Give the same blob number to cells in each equivalence class

Blob-Labeling Algorithm

Put each cell <x,y> in its own equivalence class
For each cell <x,y>
    if color[x,y] == color[x+1,y] then
        Union( <x,y>, <x+1,y> )
    if color[x,y] == color[x,y+1] then
        Union( <x,y>, <x,y+1> )
label = 0
For each root <x,y>
    blobnum[x,y] = ++ label;
For each cell <x,y>
    blobnum[x,y] = blobnum[ Find(<x,y>) ]
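A compact Python sketch of the blob-labeling pass is given below. It is illustrative only: `label_blobs` is a made-up name, the image is assumed to be a list of rows of color values, and the union/find uses path halving without union-by-rank to keep the example short. It also adds the bounds checks that the pseudocode leaves implicit.

```python
def label_blobs(color):
    """Return a grid of blob numbers for a rectangular grid of cell colors."""
    rows, cols = len(color), len(color[0])
    parent = {(r, c): (r, c) for r in range(rows) for c in range(cols)}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(x, y):
        rx, ry = find(x), find(y)
        if rx != ry:
            parent[rx] = ry

    # Union each cell with its same-colored right and lower neighbors.
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols and color[r][c] == color[r][c + 1]:
                union((r, c), (r, c + 1))
            if r + 1 < rows and color[r][c] == color[r + 1][c]:
                union((r, c), (r + 1, c))

    # Number the roots in scan order and copy each root's number to its cells.
    labels = {}
    blobnum = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            root = find((r, c))
            if root not in labels:
                labels[root] = len(labels) + 1
            blobnum[r][c] = labels[root]
    return blobnum
```

Both passes walk the image row by row, which is the memory-access pattern the Tradeoffs slide recommends.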

Spanning Tree

Spanning tree: a subset of the edges from a connected graph

that…

… touches all vertices in the graph (spans the graph)

… forms a tree (is connected and contains no cycles)

[Figure: example graph with edge costs 4, 7, 9, 1, 2, and 5]

Minimum spanning tree: the spanning tree with the least total

edge cost.

Applications of Minimal

Spanning Trees

• Communication networks

• VLSI design

• Transportation systems

Kruskal’s Algorithm for

Minimum Spanning Trees

A greedy algorithm:

Initialize all vertices to unconnected
While there are still unmarked edges
    Pick a lowest cost edge e = (u, v) and mark it
    If u and v are not already connected, add e to the minimum spanning tree and connect u and v

Sound familiar? (Think maze generation.)
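Kruskal's algorithm can be sketched in a few lines of Python. This sketch sorts the edge list up front rather than repeatedly calling `deleteMin` on a heap, which gives the same order of edges; the function name and the `(cost, u, v)` edge format are assumptions, and the union/find is a bare-bones version with path halving only.

```python
def kruskal(vertices, edges):
    """edges is a list of (cost, u, v); returns the edges of a minimum spanning tree."""
    parent = {v: v for v in vertices}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    tree = []
    for cost, u, v in sorted(edges):  # lowest-cost edge first
        ru, rv = find(u), find(v)
        if ru != rv:  # u and v are not already connected
            parent[ru] = rv
            tree.append((cost, u, v))
    return tree
```

On a connected graph the result has |V| − 1 edges; on a disconnected one it is a minimum spanning forest.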

Kruskal’s Algorithm in Action (1/5 through 5/5)

[Figure sequence, five frames: weighted graph on vertices A–H with edge costs 1, 1, 2, 2, 2, 2, 3, 4, 4, 7, 8, 9, and 10; successive frames show edges being added until the algorithm completes in frame 5/5]

Why Greediness Works

Proof by contradiction that Kruskal’s finds a minimum

spanning tree:

• Assume another spanning tree has lower cost than

Kruskal’s.

• Pick an edge e1 = (u, v) in that tree that’s not in

Kruskal’s.

• Consider the point in Kruskal’s algorithm where u’s set

and v’s set were about to be connected. Kruskal selected

some edge to connect them: call it e2 .

• But, e2 must have at most the same cost as e1 (otherwise

Kruskal would have selected it instead).

• So, swap e2 for e1 (at worst keeping the cost the same)

• Repeat until the tree is identical to Kruskal’s, where the

cost is the same or lower than the original cost:

contradiction!


Data Structures

for Kruskal’s Algorithm

Once: Initialize heap of edges… (buildHeap)

|E| times: Pick the lowest cost edge… (findMin/deleteMin)

|E| times: If u and v are not already connected… …connect u and v. (union)

runtime: |E| + |E| log |E| + |E| ack(|E|,|V|) = O(|E| log |E|)

Prim’s Algorithm

• Can also find Minimum Spanning Trees using a variation of Dijkstra’s algorithm:
    Pick an initial node
    Until graph is connected:
        Choose edge (u,v) which is of minimum cost among edges where u is in tree but v is not
        Add (u,v) to the tree
• Same “greedy” proof, same asymptotic complexity
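A minimal sketch of Prim's algorithm follows, assuming an undirected graph stored as `adj[u] = [(v, cost), ...]` with each edge listed from both endpoints. The function name is hypothetical, and as in the Dijkstra sketch, edges that lead to a vertex already in the tree are skipped when popped rather than removed from the heap.

```python
import heapq


def prim(adj, start):
    """Return the tree edges (cost, u, v) grown outward from start."""
    in_tree = {start}
    heap = [(w, start, v) for v, w in adj[start]]
    heapq.heapify(heap)
    tree = []
    while heap and len(in_tree) < len(adj):
        w, u, v = heapq.heappop(heap)  # cheapest edge leaving the tree so far
        if v in in_tree:
            continue  # both endpoints are already in the tree
        in_tree.add(v)
        tree.append((w, u, v))
        for x, wx in adj[v]:
            if x not in in_tree:
                heapq.heappush(heap, (wx, v, x))
    return tree
```

The only real difference from the Dijkstra sketch is the priority: the cost of the single connecting edge rather than the total path cost from the start.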

Coming Up

• Application: Sentence Disambiguation
• All-pairs Shortest Paths
• NP-Complete Problems
• Advanced topics
– Quad trees
– Randomized algorithms

Sentence Disambiguation

• A person types a message on their cell phone

keypad. Each button can stand for three different

letters (e.g. “1” is a, b, or c), but the person does not

explicitly indicate which letter is meant. (Words are

separated by blanks – the “0” key.)

• Problem: How can the system determine what

sentence was typed?

– My Nokia cell phone does this!

• How can this problem be cast as a shortest-path

problem?

Sentence Disambiguation as

Shortest Path

Idea:

• Possible words are vertices

• Directed edge between adjacent possible words

• Weight on edge from W1 to W2 is probability that

W2 appears adjacent to W1

– Probabilities over what?! Some large archive (corpus)

of text

– “Word bi-gram” model

• Find the most probable path through the graph

[Figure: lattice of candidate words W11 … W43, with directed edges between candidates for adjacent word positions]

Technical Concerns

• Isn’t “most probable” actually longest (most

heavily weighted) path?!

• Shouldn’t we be multiplying probabilities, not

adding them?!

$P(\#\, w_1 w_2 w_3\, \#) = P(w_1 \mid \#)\; P(w_2 \mid w_1)\; P(w_3 \mid w_2)\; P(\# \mid w_3)$

Logs to the Rescue

• Make weight on edge from W1 to W2 be

- log P(W2 | W1)

• Logs of probabilities are never positive (log P ≤ 0), so take negative logs

• The lower the probability, the larger the negative

log! So this is shortest path

• Adding logs is the same as multiplying the

underlying quantities
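The log trick can be checked with a few made-up numbers. In this sketch the bigram probabilities are invented for illustration and `path_weight` is a hypothetical helper name.

```python
import math


def path_weight(probabilities):
    """Sum of -log P over the edges of a path (the shortest-path cost)."""
    return sum(-math.log(p) for p in probabilities)


# Invented bigram probabilities along two candidate word sequences.
likely = [0.5, 0.25, 0.4]
unlikely = [0.5, 0.01, 0.4]

likely_cost = path_weight(likely)
unlikely_cost = path_weight(unlikely)

# Undoing the log recovers the product of the probabilities.
recovered = math.exp(-likely_cost)
```

The more probable sequence gets the smaller total weight, so a standard shortest-path algorithm finds it, and every edge weight is non-negative, which is what Dijkstra's algorithm requires.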

To Think About

• This really works in practice – 99% accuracy!

• Cell phone memory is limited – how can we use as

little storage as possible?

• How can the system customize itself to a user?

Question

Which graph algorithm is asymptotically

better:

• Θ(|V||E| log|V|)

• Θ(|V|³)

All Pairs Shortest Path

• Suppose you want to compute the length of the

shortest paths between all pairs of vertices in a

graph…

– Run Dijkstra’s algorithm (with priority queue)

repeatedly, starting with each node in the graph:

– Complexity in terms of V when graph is dense:

Dynamic Programming Approach

$D_{k,i,j}$ = distance from $v_i$ to $v_j$ that uses only $v_1, v_2, \ldots, v_k$ as intermediates

Note that the path for $D_{k,i,j}$ either does not use $v_k$, or merges the paths $v_i \to v_k$ and $v_k \to v_j$

$$D_{k,i,j} = \min\{\, D_{k-1,i,j},\; D_{k-1,i,k} + D_{k-1,k,j} \,\}$$

Floyd-Warshall Algorithm

// C – adjacency matrix representation of graph
//     C[i][j] = weighted edge i->j, or ∞ if none
// D – computed distances
FW(int n, double C[][], double D[][]){
    for (i = 0; i < n; i++){
        for (j = 0; j < n; j++)
            D[i][j] = C[i][j];
        D[i][i] = 0.0;
    }
    for (k = 0; k < n; k++)
        for (i = 0; i < n; i++)
            for (j = 0; j < n; j++)
                if (D[i][k] + D[k][j] < D[i][j])
                    D[i][j] = D[i][k] + D[k][j];
}

Run time =

How could we compute the paths?
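A runnable Python version is sketched below. It also shows one common answer to the question of how to compute the paths: keep a next-hop table `nxt` alongside the distances. The table, the `path` helper, and all names here are additions for illustration and are not taken from the slides.

```python
INF = float("inf")


def floyd_warshall(C):
    """C[i][j] is the weight of edge i->j, or INF if there is none."""
    n = len(C)
    D = [row[:] for row in C]
    # nxt[i][j] = first vertex after i on the best known path from i to j.
    nxt = [[j if C[i][j] != INF else None for j in range(n)] for i in range(n)]
    for i in range(n):
        D[i][i] = 0
        nxt[i][i] = i
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if D[i][k] + D[k][j] < D[i][j]:
                    D[i][j] = D[i][k] + D[k][j]
                    nxt[i][j] = nxt[i][k]  # head toward k first
    return D, nxt


def path(nxt, i, j):
    """Reconstruct the vertex sequence from i to j, or None if unreachable."""
    if nxt[i][j] is None:
        return None
    p = [i]
    while i != j:
        i = nxt[i][j]
        p.append(i)
    return p
```

The three nested loops over n vertices give Θ(|V|³) time regardless of how many edges the graph has.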