Detecting Faces in Images: A Survey

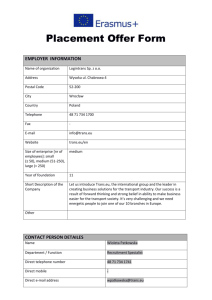

IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 24, NO. 1, JANUARY 2002
Ming-Hsuan Yang, Member, IEEE, David J. Kriegman, Senior Member, IEEE, Narendra Ahuja, Fellow, IEEE

Given a single image, identify all image regions that contain a face, regardless of
▪ its 3D position,
▪ its orientation, and
▪ the lighting conditions.
Categorize and evaluate different algorithms.

Knowledge-based methods: encode human knowledge of what constitutes a typical face (usually, the relationships between facial features).
Feature-invariant approaches: aim to find structural features of a face that exist even when the pose, viewpoint, or lighting conditions vary.
Template matching methods: several standard patterns are stored to describe the face as a whole or the facial features separately.
Appearance-based methods: the models (or templates) are learned from a set of training images that capture the representative variability of facial appearance.

Appearance-based methods learn appearance "templates" from examples in images, using statistical analysis and machine learning: train a classifier using positive (and usually negative) examples of faces.
▪ Representation
▪ Preprocessing
▪ Train a classifier
▪ Search strategy
▪ Postprocessing
▪ View-based

Image or feature vector: random variable x, with class-conditional densities p(x | face) and p(x | nonface).
▪ x is high-dimensional and p(x | ·) is multimodal
▪ There are no natural parameterized forms
▪ Use empirically validated parametric or nonparametric approximations

Appearance-based methods covered:
▪ Neural network: multilayer perceptrons
▪ Principal Component Analysis (PCA), factor analysis
▪ Mixture of PCA, mixture of factor analyzers
▪ Support vector machine (SVM)
▪ Distribution-based method
▪ Naïve Bayes classifier
▪ Hidden Markov model
▪ Sparse network of winnows (SNoW)
▪ Kullback relative information
▪ Inductive learning: C4.5
▪ AdaBoost
▪ …

Face images can be linearly encoded using a modest number of basis images [Kirby and Sirovich].
Principal Component Analysis (PCA):
▪ each m x n image is treated as a vector of m*n values; there are N samples
▪ minimize the mean square error between the projection of the training images onto the subspace and the original images
▪ K basis vectors, K << N

Eigenfaces: Matthew Turk and Alex Pentland, J. Cognitive Neuroscience, 1991

Classification can be expensive: it requires a big search (e.g., nearest neighbors) or storing large PDFs.
Suppose the data points (shown in orange) lie close to a line. Idea: fit a line; the classifier measures the distance to the line.
Convert x into v1, v2 coordinates.
What does the v2 coordinate measure?
▪ the distance to the line
▪ use it for classification: it is near 0 for the orange points
What does the v1 coordinate measure?
▪ the position along the line
▪ use it to specify which orange point it is
CSE 576, Spring 2008, Face Recognition and Detection

Dimensionality reduction
▪ We can represent the orange points with only their v1 coordinates (since the v2 coordinates are all essentially 0)
▪ This makes it much cheaper to store and compare points
▪ This matters even more for higher-dimensional problems

Consider the variation along direction v among all of the orange points:
What unit vector v minimizes var? What unit vector v maximizes var?
Solution (A denotes the scatter matrix of the mean-centered data points):
▪ v1 is the eigenvector of A with the largest eigenvalue
▪ v2 is the eigenvector of A with the smallest eigenvalue

Suppose each data point is N-dimensional. The same procedure applies. The eigenvectors of A define a new coordinate system:
▪ the eigenvector with the largest eigenvalue captures the most variation among the training vectors x
▪ the eigenvector with the smallest eigenvalue has the least variation
We can compress the data using the top few eigenvectors:
▪ this corresponds to choosing a "linear subspace"
▪ points are represented on a line, plane, or "hyper-plane"
▪ these eigenvectors are known as the principal components

An image is a point in a high-dimensional space:
▪ an N x M image is a point in $\mathbb{R}^{NM}$
▪ we can define vectors in this space as we did in the 2D case

The set of faces is a "subspace" of the set of images. We can find the best subspace using PCA. This is like fitting a "hyper-plane" to the set of faces:
▪ spanned by vectors v1, v2, ..., vK
▪ any face x is approximated as $x \approx \bar{x} + a_1 v_1 + a_2 v_2 + \dots + a_K v_K$

PCA extracts the eigenvectors of A. This gives a set of vectors v1, v2, v3, ... Each vector is a direction in face space.
▪ what do these look like?

The eigenfaces v1, ..., vK span the space of faces. A face x is converted to eigenface coordinates by $a_i = (x - \bar{x}) \cdot v_i$ for $i = 1, \dots, K$.

Algorithm
1. Process the image database (a set of images with labels):
   ▪ run PCA to compute the eigenfaces
   ▪ calculate the K coefficients for each image
2. Given a new image x (to be recognized), calculate its K coefficients
3. Detect whether x is a face
4. If it is a face, who is it?
   ▪ find the closest labeled face in the database
   ▪ nearest neighbor in K-dimensional space

[Figure: eigenvalues plotted against index i, up to NM, with the cutoff K marked]
How many eigenfaces to use?
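The eigenface steps above (compute the principal directions of the training set, then express an image by its K coefficients) can be sketched on toy data. This is a minimal illustration, not the implementation behind the slides: the function names are hypothetical, the "images" are three-pixel vectors, and power iteration with deflation stands in for a proper eigen-solver. The returned eigenvalues are the quantities whose decay bears on how many eigenfaces to keep.

```python
import math

def mean_vector(samples):
    """Component-wise mean of equal-length vectors."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]

def scatter_matrix(samples, mean):
    """A = sum over samples of (x - mean)(x - mean)^T."""
    d = len(mean)
    a = [[0.0] * d for _ in range(d)]
    for x in samples:
        c = [xi - mi for xi, mi in zip(x, mean)]
        for i in range(d):
            for j in range(d):
                a[i][j] += c[i] * c[j]
    return a

def top_eigenpairs(a, k, iterations=200):
    """Up to k largest (eigenvalue, eigenvector) pairs of a symmetric PSD matrix.

    Power iteration with deflation (an assumption made for brevity). It relies
    on the uniform start vector not being orthogonal to the eigenvector sought.
    """
    d = len(a)
    a = [row[:] for row in a]  # work on a copy; deflation modifies it
    pairs = []
    for _ in range(k):
        v = [1.0 / math.sqrt(d)] * d
        for _step in range(iterations):
            w = [sum(a[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(wi * wi for wi in w))
            if norm < 1e-12:  # nothing left to extract
                return pairs
            v = [wi / norm for wi in w]
        lam = sum(v[i] * a[i][j] * v[j] for i in range(d) for j in range(d))
        pairs.append((lam, v))
        for i in range(d):  # deflate: remove the component just found
            for j in range(d):
                a[i][j] -= lam * v[i] * v[j]
    return pairs

def eigen_coordinates(x, mean, basis):
    """Coefficients a_i = (x - mean) . v_i for each basis vector v_i."""
    return [sum((xi - mi) * vi for xi, mi, vi in zip(x, mean, v)) for v in basis]
```

For real images the scatter matrix is far too large for this approach; the sketch is only meant to make the projection step concrete.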
Look at the decay of the eigenvalues:
▪ the eigenvalue tells you the amount of variance "in the direction" of that eigenface
▪ ignore eigenfaces with low variance

[Sung and Poggio, 94]
Learn the distribution of image patterns of one object class from positive and negative examples.
Distribution-based models for face/nonface patterns:
▪ 19x19 image, i.e., a 361-dimensional vector
▪ K-means: 6 face clusters, 6 nonface clusters
▪ multidimensional Gaussian per cluster: mean and covariance matrix
Multilayer perceptron classifier.

[Sung and Poggio, 94] Preprocessing
▪ Masking: reduces the unwanted background noise in a face pattern
▪ Illumination gradient correction: fit the best brightness plane and subtract it from the window to reduce heavy shadows caused by extreme lighting angles
▪ Histogram equalization: compensates for imaging effects due to changes in illumination and different camera input gains

[Sung and Poggio, 94]
Compute the distances of a sample to all the face and nonface clusters:
▪ Within-subspace distance (D1): Mahalanobis distance of the projected sample to the cluster center
▪ Distance to the subspace (D2): distance of the sample to the subspace

[Sung and Poggio, 94] Distance measure

[Sung and Poggio, 94]
Feature vector for each sample: a vector of distance measurements to all clusters.
Multilayer perceptron classifier, trained from a database of 47,316 patterns:
▪ 4,150 face patterns: easy to collect
▪ nonface patterns: hard to get a representative sample
▪ bootstrap method: selectively adds images to the training set as training progresses
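The two-part distance described above (D1 within a cluster's subspace, D2 from the sample to that subspace) can be sketched as follows. This is a simplified reading of the slide text: D1 is taken as a plain Mahalanobis distance over the retained eigen-directions, the cluster's eigenvectors and eigenvalues are assumed to be given, and the function name is hypothetical.

```python
import math

def two_part_distance(x, center, basis, eigenvalues):
    """Return (D1, D2) of sample x relative to one cluster.

    basis: orthonormal eigenvectors of the cluster covariance (assumed given)
    eigenvalues: variance along each basis vector, in the same order
    """
    diff = [xi - ci for xi, ci in zip(x, center)]
    # Coordinates of the sample inside the cluster's subspace.
    coords = [sum(d * v for d, v in zip(diff, vec)) for vec in basis]
    # D1: Mahalanobis distance of the projected sample to the cluster center.
    d1 = math.sqrt(sum(c * c / lam for c, lam in zip(coords, eigenvalues)))
    # D2: Euclidean distance from the sample to its projection on the subspace.
    residual_sq = sum(d * d for d in diff) - sum(c * c for c in coords)
    d2 = math.sqrt(max(residual_sq, 0.0))
    return d1, d2
```

With 6 face and 6 nonface clusters, calling this once per cluster would give the 12 distance pairs that make up the feature vector fed to the multilayer perceptron.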
Positive examples:
▪ get as much variation as possible
▪ manually crop and normalize each face image to a standard size (e.g., 19x19)
▪ create virtual examples [Sung and Poggio 94]
Negative examples:
▪ a fuzzy idea: any images that do not contain faces
▪ a large image subspace
▪ bootstrapping [Sung and Poggio 94]

Creating virtual examples [Sung and Poggio 94]: a simple and very effective method
▪ randomly mirror, rotate, translate, and scale face samples by small amounts
▪ increases the number of training examples
▪ makes the detector less sensitive to alignment error

Bootstrapping [Sung and Poggio, 94]
1. Start with a small set of nonface examples in the training set
2. Train an MLP classifier with the current training set
3. Run the learned face detector on a sequence of random images
4. Collect all the nonface patterns that the current system wrongly classifies as faces (i.e., false positives)
5. Add these nonface patterns to the training set
6. Go to Step 2, or stop if satisfied
This improves the system performance greatly.

(B. Moghaddam and A. Pentland)
PCA decomposition into the principal subspace and its orthogonal complement:
▪ distance in feature space: measured within the principal subspace
▪ distance from feature space: measured in the orthogonal complement, which is discarded in standard PCA
Learn local features: model their density with a multivariate Gaussian or a mixture of Gaussians.
Detect by maximum likelihood.

[Yang et al. 00] Factor Analysis (FA)
▪ A generative method that performs clustering and dimensionality reduction within each cluster
▪ Models the covariance structure of high-dimensional data using a small number of latent variables
▪ Similar to PCA, but differs in that the data density is normalized along the principal component subspace and the model is robust to independent noise in the features
▪ Able to detect faces under wide variations

[Yang et al. 00]
▪ Use a mixture model to detect faces in different poses
▪ Use EM to estimate all the parameters in the mixture model
▪ See also [Moghaddam and Pentland 97] on using a probabilistic Gaussian mixture for object localization

[Yang et al. 00] Fisher linear discriminant (FLD)
▪ Projects the high-dimensional image space to a low-dimensional space
▪ Provides a better projection than PCA for pattern classification, since it aims to find the most discriminant projection direction
▪ Outperforms the Eigenface method on several databases

[Yang et al. 00]
▪ Apply a self-organizing map (SOM) to cluster faces/nonfaces and thereby obtain labels for the samples
▪ Apply FLD to find the optimal projection matrix for maximal separation
▪ Estimate the class-conditional density for detection
Pipeline: unlabeled face and nonface samples → SOM → face/nonface prototypes generated by SOM → FLD → class-conditional density → maximum likelihood estimation

Neural networks: feasibility of training a system to capture the complex class-conditional density of face patterns.
Hierarchical neural networks [Agui et al. 1992]:
▪ First stage: two parallel subnetworks whose inputs are intensity values from the original image and intensity values from the image filtered with a 3x3 Sobel filter
▪ Second stage: inputs are the outputs from the subnetworks and extracted feature values
▪ Works only if the faces all have the same size
Vaillant et al.
Examples of face/nonface images: 20x20 pixels. Two neural networks:
▪ A: trained to find approximate locations of faces at some scale (selects candidates)
▪ B: trained to determine the exact position of faces at some scale (verifies)

[Burel and Carel, 94]
▪ Compress the examples using a SOM
▪ A multilayer perceptron learns the compressed examples for face/background classification
Detection:
▪ scan each image at various resolutions
▪ normalize each location and size to a standard size
▪ classify the normalized window with an MLP

Autoassociative networks
▪ With multiple layers, they perform nonlinear principal component analysis
▪ Different autoassociative networks are used: one to detect frontal-view faces, one to detect faces turned up to 60° to the left/right
▪ A gating network assigns weights to the frontal/side face detectors in an ensemble of autoassociative networks

[Lin et al. 1997] Probabilistic decision-based neural network (PDBNN)
▪ Similar to a radial basis function network, with modified learning rules and a probabilistic interpretation
▪ Extract feature vectors based on intensity and edge information from a region that contains the eyebrows, eyes, and nose
▪ Feed the two vectors to the PDBNN and use a fusion of the outputs to classify

Rowley et al.
▪ Train multiple multilayer perceptrons with different receptive fields [Rowley and Kanade 96]
▪ Merge the overlapping detections within one network
▪ Train an arbitration network to combine the results from different networks
▪ Requires finding the right neural network architecture (number of layers, hidden units, etc.) and parameters (learning rate, etc.)

H. Rowley, S. Baluja, and T. Kanade
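Several of the detectors above scan the image at multiple resolutions and classify each fixed-size window. A minimal sketch of that search strategy follows; it only enumerates candidate windows and leaves out resampling and the classifier itself. The function name, the step of 2 pixels, and the scale factor of 1.2 are illustrative assumptions, not values taken from the cited papers.

```python
def pyramid_windows(width, height, window=20, step=2, scale=1.2):
    """Yield (x, y, size) boxes, in original-image coordinates, for a
    fixed window slid over successively downscaled copies of the image."""
    factor = 1.0
    while width / factor >= window and height / factor >= window:
        scaled_w, scaled_h = int(width / factor), int(height / factor)
        for y in range(0, scaled_h - window + 1, step):
            for x in range(0, scaled_w - window + 1, step):
                # Map the window back to the original resolution.
                yield (int(x * factor), int(y * factor), int(round(window * factor)))
        factor *= scale
```

Each yielded box would be cropped, resized to the standard input size (20x20 here), preprocessed, and passed to the classifier. The number of windows grows quickly as the step and scale factor shrink, which is one reason the number of scanned windows differs so much between methods.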
Merging overlapping detections within one network [Rowley and Kanade 96]

Arbitration among multiple networks:
▪ AND operator
▪ OR operator
▪ Voting
▪ Arbitration network

Support vector machines (SVMs)
▪ A paradigm to train polynomial function, neural network, or radial basis function (RBF) classifiers
▪ Most methods for training a classifier (e.g., Bayesian, neural networks, RBF) are based on minimizing the training error
▪ SVMs operate on structural risk minimization, which aims to minimize an upper bound on the expected generalization error

▪ Find the optimal separating hyperplane, constructed from the support vectors [Vapnik 95]
▪ Maximize the distance between the separating hyperplane and the data points closest to it (large margin classifier)
▪ Formulated as a quadratic programming problem
▪ Kernel functions give nonlinear SVMs

[Osuna et al. 97]
▪ Adopts an architecture similar to [Sung and Poggio 94], with an SVM classifier
▪ Pros: good recognition rate with theoretical support
▪ Cons: time consuming in training and testing; need to pick the right kernel

▪ Training: solve a complex quadratic optimization problem. Speed-up: Sequential Minimal Optimization (SMO) [Platt 99]
▪ Testing: the number of support vectors may be large, which means many kernel computations. Speed-up: reduced set of support vectors [Romdhani et al. 01]
▪ Variants: component-based SVM [Heisele et al. 01], which learns components and their geometric configuration and is less sensitive to pose variation

SNoW [Yang et al. 00]
▪ A sparse network of linear functions that uses the Winnow update rule
▪ Online, mistake-driven algorithm
▪ Attribute (feature) efficiency
▪ Allocation of nodes and links is data driven; complexity depends on the number of active features
▪ Allows tasks to be combined hierarchically
▪ Multiplicative learning rule

SNoW [Yang et al. 00]: a multiplicative weight update algorithm
▪ Pros: online feature selection [Yang et al. 00]; detects faces with different features and expressions, in different poses, and under different lighting conditions
▪ Cons: needs a more powerful feature representation
▪ Similar performance, but computationally more efficient
▪ Has also been applied to object recognition [Yang et al. 02]

Schneiderman and Kanade, 98
▪ Estimate the joint probability of local appearance and position at multiple resolutions
▪ Local patterns are more distinctive: for example, intensity patterns around the eyes are much more distinctive
▪ Learn the distribution by parts using a naïve Bayes classifier
▪ Provides a better estimate of the conditional density functions
▪ Provides a functional form of the posterior probability that captures the joint statistics of local appearance and position

Schneiderman and Kanade, 98
▪ At each scale, a face image is decomposed into 4 subregions
▪ The subregions are then projected to a lower-dimensional space (PCA) and quantized into a finite set of patterns
▪ The statistics of each projected subregion are estimated from the projected samples to encode local appearance

Schneiderman and Kanade, 98
▪ Apply the Bayes decision rule
▪ Further decompose the appearance into space, frequency, and orientation
▪ A wavelet representation is also used for general object recognition [H. Schneiderman and T. Kanade, 00]
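The naïve Bayes decision described above can be illustrated with a small likelihood-ratio sketch. It assumes each window has already been reduced to one quantized pattern index per subregion, so that position is encoded by the subregion index; the table layout, the add-one smoothing, and the function names are illustrative assumptions rather than details of the cited work.

```python
import math
from collections import Counter

def estimate_tables(samples, n_regions, n_patterns):
    """Estimate P(pattern | region) for one class, with add-one smoothing."""
    tables = []
    for region in range(n_regions):
        counts = Counter(sample[region] for sample in samples)
        total = len(samples) + n_patterns
        tables.append([(counts[q] + 1) / total for q in range(n_patterns)])
    return tables

def log_likelihood_ratio(sample, face_tables, nonface_tables):
    """Sum over subregions of log P(q | region, face) / P(q | region, nonface).

    Treating the subregions as independent is the naive Bayes assumption.
    """
    return sum(
        math.log(face_tables[region][q] / nonface_tables[region][q])
        for region, q in enumerate(sample)
    )

def is_face(sample, face_tables, nonface_tables, log_threshold=0.0):
    """Bayes decision rule: declare a face when the ratio exceeds a threshold
    (the threshold absorbs the prior odds; 0.0 corresponds to equal priors)."""
    return log_likelihood_ratio(sample, face_tables, nonface_tables) > log_threshold
```

Raising `log_threshold` trades detections for fewer false positives, which is the usual way such a detector is tuned.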
Schneiderman and Kanade, 98
▪ Extended to detect faces in different poses with multiple detectors
▪ Each detector specializes in a view: frontal, left pose, and right pose
▪ [Mikolajczyk et al. 01] extend the approach to detect faces from side pose to frontal view

Schneiderman and Kanade, 98
▪ Able to detect profile faces [Schneiderman and Kanade 98]
▪ Extended to detect cars [Schneiderman and Kanade 00]

Hidden Markov model (HMM)
Assumptions of an HMM:
▪ patterns can be characterized as a parametric random process
▪ the parameters can be estimated in a precise, well-defined manner
Developing an HMM:
▪ the hidden states need to be decided
▪ learn the transition probabilities between states from examples; each example is represented as a sequence of observations
▪ maximize the probability of observing the training data by adjusting the parameters (Viterbi segmentation and Baum-Welch algorithms)

Face pattern:
▪ several regions (eyes, nose, mouth, forehead, chin)
▪ observe these regions in an appropriate order (top to bottom, left to right)
▪ the aim is to associate facial regions with the states of a continuous-density hidden Markov model

▪ Observation vectors: scan the window vertically with P pixels of overlap
▪ Five hidden states
▪ The boundaries between strips of pixels are represented by probabilistic transitions between states
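The observation sequence described above, obtained by scanning the window vertically in overlapping strips, can be sketched as follows. The strip height and the function name are illustrative assumptions; only the overlap of P pixels comes from the slide, and the raw pixel values stand in for whatever features the original system extracted from each strip.

```python
def observation_strips(window, strip_height, overlap):
    """Split a window (a list of pixel rows) into horizontal strips,
    scanned top to bottom, where consecutive strips share `overlap` rows.
    Each strip is flattened into one observation vector."""
    if not 0 <= overlap < strip_height:
        raise ValueError("overlap must satisfy 0 <= overlap < strip_height")
    step = strip_height - overlap
    strips = []
    for top in range(0, len(window) - strip_height + 1, step):
        rows = window[top:top + strip_height]
        strips.append([pixel for row in rows for pixel in row])
    return strips
```

The resulting sequence is what a top-to-bottom HMM with five states (for example forehead, eyes, nose, mouth, chin) would be trained on and scored against.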
Contextual constraints in a face pattern:
▪ a small neighborhood of pixels
Markov random field (MRF):
▪ a convenient and consistent way to model context-dependent entities such as image pixels and correlated features
▪ achieved by characterizing mutual influences using conditional MRF distributions
Using Kullback relative information, find the Markov process that maximizes the information-based discrimination between the two classes, and apply it to detection.

Kullback relative information (T. Cover and J. Thomas, 91)
Probability functions:
▪ p(x): the template is a face
▪ q(x): the template is a nonface
Training database used to estimate the distributions (using histograms):
▪ Face: 100 individuals x 9 views
▪ Nonface: 143,000 nonface templates

Select the most informative pixels (MIP):
▪ maximize the Kullback relative information between p(x) and q(x)
▪ the MIP distribution focuses on the eye and mouth regions and avoids the nose area
Use the MIP to obtain linear features for classification and representation [Fukunaga and Koontz].
Detect faces:
▪ pass a window over the input image
▪ compute the distance from face space (DFFS) [Pentland et al, 94]
▪ if DFFS-Face < DFFS-Nonface, a face is assumed to exist within the window

Colmenarez and Huang, 97
▪ Apply Kullback relative information to maximize the information-based discrimination between positive and negative examples of faces
▪ Use a family of discrete Markov processes to model the face and background patterns and to estimate the probability model
▪ Treat learning as optimization: select the Markov process that maximizes the information-based discrimination between the two classes
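The idea of ranking pixels by Kullback relative information between the face distribution p and the nonface distribution q can be illustrated with a simplified sketch. It treats each pixel independently, with one intensity histogram per pixel and class, whereas the methods above model dependencies with Markov processes; the function names and data layout are assumptions made for illustration.

```python
import math

def kl_divergence(p, q):
    """Kullback relative information sum_x p(x) * log(p(x) / q(x)).

    Assumes q(x) > 0 wherever p(x) > 0 (e.g., smoothed histograms).
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def most_informative_pixels(face_hists, nonface_hists, count):
    """Return the indices of the `count` pixels whose face and nonface
    intensity histograms differ most, most informative first."""
    scores = [kl_divergence(p, q) for p, q in zip(face_hists, nonface_hists)]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:count]
```

Pixels whose intensity distribution is the same for faces and nonfaces score zero and are dropped first.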
Qian and Huang, 97
▪ Combine view-based and model-based approaches
▪ Use a visual-attention algorithm to reduce the search space by selecting important image regions
▪ Detect faces in the selected regions using a combination of template matching and feature matching, a hierarchical Markov random field, and maximum a posteriori estimation

Inductive learning
▪ Learning by example: a system tries to induce a general rule from a set of observed instances
▪ Algorithms: ID3 (Quinlan, 1986), C4.5 (Quinlan, 1993), FOIL (Quinlan, 1990)
▪ http://sifter.org/~brandyn/InductiveLearning.html
▪ http://www.iiia.csic.es/Projects/FedLearn/OO-Induction.html

J. Huang et al. 96
Learn a decision tree from positive and negative examples of face patterns.
Training example:
▪ 8x8 pixel window
▪ represented by a vector of 30 attributes
▪ composed of the entropy, mean, and standard deviation of the pixel intensity values
C4.5 builds a classifier as a decision tree:
▪ leaves indicate class identity
▪ nodes specify tests to perform on a single attribute
The learned decision tree is then used to decide whether a face exists in the input example.
Results: localization accuracy rate of 96% on a set of 2,340 frontal face images in the FERET data set.

N. Duta and A.K. Jain, ICPR, 1998
▪ Learn the face concept using Mitchell's Find-S algorithm
▪ The distribution of face patterns P(x | face) can be approximated by a set of Gaussian clusters
▪ For a face instance x, $\mathrm{Dis}(x, c_i) \le k \cdot \max_j \mathrm{Dis}(x_j, c_i)$, with $0 \le k \le 1$
▪ Apply the Find-S algorithm to learn the thresholding distance such that faces and nonfaces can be differentiated
Several distinct characteristics:
▪ First, it does not use negative (nonface) examples
▪ Second, only the central portion of a face is used for training
▪ Third, feature vectors consist of images with 32 intensity levels or textures, while some other methods use full-scale intensity values as inputs
Detection rate of 90 percent on the first CMU data set.

Training process is essential: benchmark data sets

Face image databases: FERET database
▪ consists of monochrome images taken in different frontal views and in left and right profiles
▪ used to assess the strengths and weaknesses of different face recognition approaches
▪ since each image consists of an individual on a uniform and uncluttered background, it is not suitable for face detection benchmarking

ftp://whitechapel.media.mit.edu/pub/images/
▪ 16 people
▪ images are taken in frontal view with slight variability in head orientation (tilted upright, right, and left) on a cluttered background

http://www.uk.research.att.com/facedatabase.html
▪ formerly known as the Olivetti database
▪ 10 images for each of 40 distinct subjects
▪ different times, lighting, facial expressions, and facial details

▪ Cropped, masked frontal face images
▪ taken under a wide variety of light sources
▪ used to study face recognition under varying illumination conditions

http://vision.ucsd.edu/~leekc/ExtYaleDatabase/Yale%20Face%20Database.htm
▪ 5,760 single-light-source images of 10 subjects, each seen under 576 viewing conditions (9 poses x 64 illumination conditions)
▪ for every subject in a particular pose, an image with ambient (background) illumination was also captured
▪ the total number of images is in fact 5,760 + 90 = 5,850
▪ the total size of the compressed database is about 1 GB
▪ Developed for access control experiments using multimodal inputs
▪ Contains sequences of face images of 37 people
▪ Five sequences for each subject were taken over one week
▪ Each image sequence contains images from right profile (-90 degrees) to left profile (90 degrees), taken while the subject counts from "0" to "9" in their native language

▪ 564 images of 20 people with varying pose
▪ The images of each subject cover a range of poses from right profile to frontal views

A. Martinez and R. Benavente, 1998
▪ 3,276 color images of 126 people (70 males + 56 females) in frontal view
▪ Designed for face recognition experiments under several mixing factors, such as facial expressions, illumination conditions, and occlusions
▪ Has also been applied to image and video indexing and retrieval
▪ All the faces appear with different facial expressions (neutral, smile, anger, and scream), illumination (left light source, right light source, and sources from both sides), and occlusion (wearing sunglasses or a scarf)
▪ Taken during two sessions separated by two weeks, with the same camera setup under tightly controlled conditions of illumination and pose

http://web.mit.edu/emeyers/www/face_databases.html
▪ The abovementioned databases are designed mainly to measure the performance of face recognition methods and, thus, each image contains only one individual
▪ They are best used as training sets rather than test sets

K.-K. Sung and T. Poggio, 96 & 98
▪ First, 301 frontal and near-frontal mugshots of 71 different people: high-quality digitized images with a fair amount of lighting variation
▪ Second, 23 images with a total of 149 face patterns.
Most of these images have a complex background, with faces taking up only a small part of the image area.

▪ Some images are scanned from newspapers and, thus, have low resolution
▪ Most faces in the images are upright and frontal, though some appear in different poses

http://vasc.ri.cmu.edu/NNFaceDetector/
▪ 130 images with a total of 507 frontal faces
▪ Also includes the 23 images of the second data set used by [Sung and Poggio, 1998]
▪ Most images contain more than one face on a cluttered background
▪ A good test set for assessing algorithms that detect upright frontal faces

http://vasc.ri.cmu.edu/NNFaceDetector/
▪ Some images contain hand-drawn cartoon faces
▪ Most images contain more than one face, and the face size varies significantly

▪ For detecting 2D faces with frontal pose and rotation in the image plane
▪ 50 images with a total of 223 faces, of which 210 are at angles > 10 degrees

Schneiderman and Kanade, 00
▪ 208 images
▪ Each image contains faces with facial expressions and in profile views

▪ A common test bed for direct benchmarking of face detection and recognition algorithms
▪ 300 digital photos captured at a variety of resolutions
▪ Face size ranges from as small as 13x13 pixels to as large as 300x300 pixels

Performance of several appearance-based face detection methods on two standard data sets: Test Set 1 (125 images with 483 faces) and Test Set 2 (23 images with 136 faces).
▪ The methods were not all tested on the same test set
Appearance-based face detection methods: the number and variety of training examples have a direct effect on the classification performance.

Factors that complicate comparison:
▪ Training time and execution time
▪ The number of scanning windows varies a lot
▪ Different criteria are adopted in reporting the detection rates: a loose criterion may declare all the faces "successful" detections, while a stricter one would declare most of them nonfaces
▪ The evaluation criteria may and should depend on the purpose of the detector
▪ Required computational resources, particularly time and memory

A collection of sample face detection codes and evaluation tools: http://vision.ai.uiuc.edu/mhyang/face-detection-survey.html

Conclusions
▪ Provide a comprehensive survey of research on face detection
▪ Provide some structural categories for the methods described in over 150 papers
▪ It is imprudent to explicitly declare which methods indeed have the lowest error rates
▪ The community needs to consider systematic performance evaluation more seriously

▪ The class of faces admits a great deal of shape, color, and albedo variability due to differences between individuals, nonrigidity, facial hair, glasses, and makeup
▪ Images are formed under variable lighting and 3D pose and may have cluttered backgrounds

Paul A. Viola and Michael J. Jones, Intl. J. Computer Vision 57(2), 137–154, 2004 (originally in CVPR 2001)
(slides adapted from Bill Freeman, MIT 6.869, April 2005)

Training data:
▪ 5,000 faces (frontal)
▪ $10^8$ nonfaces
▪ Faces are normalized for scale and translation
Many variations:
▪ across individuals
▪ illumination
▪ pose (rotation both in plane and out of plane)

▪ Feature set (huge: about 16M features)
▪ Efficient feature selection using AdaBoost
▪ New image representation: the integral image
▪ Cascaded classifier for rapid detection
Fastest known face detector for grayscale images.

"Rectangle filters"
▪ Similar to Haar wavelets
▪ Differences between sums of pixels in adjacent rectangles

Integral image
▪ Each entry is a partial sum of the pixels above and to the left
▪ The sum over any rectangle D is computed from four corner values: D = 1 + 4 - (2 + 3)
▪ Also known as summed area tables [Crow84] and boxlets [Simard98]

A perceptron over the selected features yields a sufficiently powerful classifier. Use AdaBoost to efficiently choose the best features:
▪ add a new $h_i(x)$ at each round
▪ each $h_i(x_k)$ is a "decision stump": $h_i(x) = a$ if $x < \theta$ and $h_i(x) = b$ if $x > \theta$, where $a = E_w(y\,[x < \theta])$ and $b = E_w(y\,[x > \theta])$

For each round of boosting:
▪ Evaluate each rectangle filter on each example
▪ Sort the examples by filter values
▪ Select the best threshold for each filter (minimum error); use the sorting to quickly scan for the optimal threshold
▪ Select the best filter/threshold combination
▪ The weight is a simple function of the error rate
▪ Reweight the examples
(There are many tricks to make this more efficient.)

Friedman, J., Hastie, T. and Tibshirani, R., Additive Logistic Regression: a Statistical View of Boosting
http://www-stat.stanford.edu/~hastie/Papers/boost.ps
"We show that boosting fits an additive logistic regression model by stagewise optimization of a criterion very similar to the log-likelihood, and present likelihood based alternatives. We also propose a multi-logit boosting procedure which appears to have advantages over other methods proposed so far."

Cascade: given a nested set of classifier hypothesis classes, apply computational risk minimization.

▪ Speed is proportional to the average number of features computed per sub-window
▪ On the MIT+CMU test set, an average of 9 features (out of 6,061) are computed per sub-window
▪ On a 700 MHz Pentium III, a 384x288 pixel image takes about 0.067 seconds to process (15 fps)
▪ Roughly 15 times faster than Rowley-Baluja-Kanade and 600 times faster than Schneiderman-Kanade

Fastest known face detector for gray images. Three contributions with broad applicability:
▪ the cascaded classifier yields rapid classification
▪ AdaBoost serves as an extremely efficient feature selector
▪ rectangle features + integral image can be used for rapid image analysis

Informal study by Andrew Gallagher, CMU, for CMU 16-721 Learning-Based Methods in Vision, Spring 2007
▪ For the Viola-Jones algorithm, the OpenCV implementation was used (< 2 seconds per image)
▪ For Schneiderman and Kanade, Object Detection Using the Statistics of Parts [IJCV'04], the www.pittpatt.com demo was used (about 10-15 seconds per image, including web transmission)
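The integral image and rectangle features recapped above can be sketched in a few lines. The padding row and column, the function names, and the particular two-rectangle layout (left half minus right half) are illustrative choices, not details taken from the Viola-Jones paper.

```python
def integral_image(img):
    """Return a (h+1) x (w+1) table where ii[y][x] is the sum of all
    pixels above and to the left of (x, y), exclusive. The extra zero
    row and column avoid special cases at the image border."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle with top-left corner (x, y), using four
    lookups: D = 1 + 4 - (2 + 3) in the corner numbering of the slide."""
    return ii[y][x] + ii[y + h][x + w] - (ii[y][x + w] + ii[y + h][x])

def two_rect_feature(ii, x, y, w, h):
    """Left half minus right half of a w x h window (w must be even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

Because every rectangle sum costs four lookups regardless of its size, a feature can be evaluated at any scale in constant time, which is what makes evaluating a few features per sub-window so cheap.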
[Figure panels: Schneiderman-Kanade; Viola-Jones]

Lin Liang (1), Hong Chen (2), Ying-Qing Xu (1), Heung-Yeung Shum (1)
(1) Microsoft Research Asia, (2) Xi'an Jiaotong University, China
Training data include 92 pairs of original facial images and the corresponding exaggerated caricatures drawn by an artist.

[Figure panels: original image; unexaggerated sketch; exaggerated caricature (apply to the image); caricature by the artist]