TEXTRUNNER

1. Banko, M., Cafarella, M. J., Soderland, S., Broadhead, M., & Etzioni, O. (2007). Open Information Extraction from the Web. Proceedings of the 20th International Joint Conference on Artificial Intelligence (IJCAI 2007).

2. Cafarella, M. J., Banko, M., & Etzioni, O. (2006). Relational Web Search. UW CSE Tech Report 2006-04-02.

3. Yates, A., & Etzioni, O. (2007). Unsupervised Resolution of Objects and Relations on the Web. NAACL-HLT 2007.

Turing Center

Computer Science and Engineering

University of Washington

Reporter: Yi-Ting Huang

Date: 2009/9/4

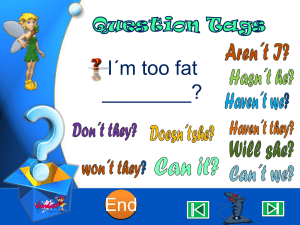

PART 1. Query

Qualified List Queries

Relationship Queries

Unnamed-Item Queries

Factoid Queries


Relationship Queries


Factoid Queries


Qualified List Queries


PART 2. Retrieval

$T_n = (e_i, r, e_j)$


PART 3. Clustering


Figure: system overview

• PART 2: corpus (input) → Learner / Extractor → a set of raw triples → Assessor → a structured set of extractions (output)

• PART 3: clustering, applied to the output of the Assessor

• PART 1: Build inverted index → Query Processing; input: Relationship, Factoid, Qualified List, and Unnamed-Item Queries; output: a subset of extractions
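The "Build inverted index" and "Query Processing" steps can be illustrated with a minimal sketch. It assumes each extraction is a plain string triple (e_i, r, e_j), tokenizes on whitespace, and answers a query by intersecting the posting lists of its terms. `build_index`, `answer`, and the toy triples are hypothetical, not TextRunner's code.

```python
from collections import defaultdict

def build_index(triples):
    """Map each lowercased token to the ids of the triples that contain it."""
    index = defaultdict(set)
    for tid, triple in enumerate(triples):
        for part in triple:                      # e_i, r, e_j
            for token in part.lower().split():
                index[token].add(tid)
    return index

def answer(index, triples, query):
    """Return the triples that contain every query term (AND semantics)."""
    terms = query.lower().split()
    if not terms:
        return []
    ids = set.intersection(*(index.get(t, set()) for t in terms))
    return [triples[i] for i in sorted(ids)]

# Toy extractions, for illustration only.
TRIPLES = [
    ("Michael Jackson", "is known as", "the king of pop"),
    ("Elvis Presley", "is known as", "the king of rock and roll"),
    ("Taipei", "is the capital of", "Taiwan"),
]

index = build_index(TRIPLES)
print(answer(index, TRIPLES, "king of pop"))
```

A real index would also rank the matching triples instead of returning them in id order.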


Spreading Activation Search

• Spreading activation is a technique that has been used to perform associative retrieval of nodes in a graph.

Figure: activation spreads outward from the source node A (100%) to B and C (80%), then D and E (50%), then F and G (20%); a decay factor reduces it at each step.
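A minimal sketch of spreading activation, assuming a single constant decay factor per hop and a hypothetical cut-off below which activation stops spreading. The percentages in the figure do not fall by one fixed ratio, so the slide's exact rule may differ; the graph edges below are also assumed.

```python
from collections import deque

def spread_activation(graph, source, decay=0.8, threshold=0.1):
    """Spread activation outward from `source`, multiplying by `decay` per hop.

    graph maps each node to its neighbours. A node keeps the highest activation
    that reaches it, and activation that would fall below `threshold` is not
    passed on.
    """
    activation = {source: 1.0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        passed = activation[node] * decay
        if passed < threshold:
            continue
        for nbr in graph.get(node, ()):
            if passed > activation.get(nbr, 0.0):
                activation[nbr] = passed
                queue.append(nbr)
    return activation

# Hypothetical undirected edges arranged like the figure's layers:
# A -> {B, C} -> {D, E} -> {F, G}.
GRAPH = {
    "A": ["B", "C"], "B": ["A", "D"], "C": ["A", "E"],
    "D": ["B", "F"], "E": ["C", "G"], "F": ["D"], "G": ["E"],
}

for node, value in sorted(spread_activation(GRAPH, "A").items()):
    print(node, round(value, 3))
```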


PART 1: Scoring Based on Spreading Activation Search

• Search query terms: $Q = \{q_0, q_1, \ldots, q_{n-1}\}$

e.g. "king of pop": $Q = \{q_0 = \text{king}, q_1 = \text{of}, q_2 = \text{pop}\}$

• $\mathrm{TextHit}(n_i, q_j) = 1$ if node $n_i$ contains query term $q_j$, and $0$ otherwise.

$\mathrm{TextHit}(e, q_j) = 1$ if edge $e$ contains query term $q_j$, and $0$ otherwise.

• Decay factor: a value between 0 and 1.


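Given $Q = \{q_0, q_1, \ldots, q_{n-1}\}$, the output is a ranked list:

$T_1 = \{q_0 q_1 \ldots, e_1, n_{12}\}$

$T_2 = \{q_0 q_1 \ldots, e_2, n_{22}\}$

$T_3 = \{q_0 q_1 \ldots, e_3, n_{32}\}$

…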
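A sketch of how TextHit could produce a ranked list of triples. The scoring rule here, summing TextHit over a triple's two nodes and its edge, is a hypothetical simplification that leaves out the decay factor, and "contains" is read as a case-insensitive whole-token match.

```python
def text_hit(label, term):
    """TextHit: 1 if the node or edge label contains the query term, else 0.

    "Contains" is read here as a case-insensitive whole-token match.
    """
    return 1 if term.lower() in label.lower().split() else 0

def score(triple, terms):
    """Hypothetical score: total TextHit over the two nodes and the edge."""
    return sum(text_hit(part, q) for part in triple for q in terms)

def rank(triples, query):
    """Return matching triples, best first; sorted() is stable, so ties keep input order."""
    terms = query.lower().split()
    scored = [(score(t, terms), t) for t in triples]
    return [t for s, t in sorted(scored, key=lambda pair: -pair[0]) if s > 0]

# Toy extractions, for illustration only.
TRIPLES = [
    ("Taipei", "is the capital of", "Taiwan"),
    ("Elvis Presley", "is known as", "the king of rock and roll"),
    ("Michael Jackson", "is known as", "the king of pop"),
]

for t in rank(TRIPLES, "king of pop"):
    print(t, score(t, ["king", "of", "pop"]))
```

Stopwords such as "of" still earn a hit here, which is why the Taipei triple appears at the bottom of the list; a fuller scorer would likely down-weight them.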


PART 2. Input & Output

• Input (corpus):

– a corpus of 9 million Web pages

– containing 133 million sentences

• Output:

– a set of 60.5 million extracted tuples

– an extraction rate of 2.2 tuples per sentence


Learner

Figure: training examples → dependency parser → heuristic rules → positive or negative ("false") examples → Learner


Dependency parser

• Dependency parsers locate instances of semantic relationships between words, forming a directed graph that connects all words in the input:

– the subject relation (John ← hit)

– the object relation (hit → ball)

– phrasal modification (hit → with → bat)
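The three relations above can be written down as a small hand-built dependency graph. The sketch stores head → dependent edges with Stanford-style labels (an assumption; no parser is run) and counts how many edges separate two words, which is one plausible way to check how closely two noun phrases are connected.

```python
from collections import deque

# Toy dependency graph for "John hit the ball with a bat", written as
# head -> [(dependent, relation)] edges (hand-built, not parser output).
DEPS = {
    "hit": [("John", "nsubj"), ("ball", "dobj"), ("with", "prep")],
    "ball": [("the", "det")],
    "with": [("bat", "pobj")],
    "bat": [("a", "det")],
}

def path_length(deps, a, b):
    """Number of dependency edges between words a and b (direction ignored)."""
    adj = {}
    for head, children in deps.items():
        for child, _ in children:
            adj.setdefault(head, set()).add(child)
            adj.setdefault(child, set()).add(head)
    dist = {a: 0}
    queue = deque([a])
    while queue:                      # breadth-first search
        node = queue.popleft()
        if node == b:
            return dist[node]
        for nxt in adj.get(node, ()):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return None                       # b is not connected to a

print(path_length(DEPS, "John", "bat"))
```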


S=(Mary is a good student who lives in Taipei.)

T=(Mary/NP1 is/VB a good student/NP2 who/PP lives/VB in/PP Taipei/NP3.)

$e_1$ is an NP, e.g. Mary

e.g. Taipei

e.g. who lives in

e.g. positive / negative

e.g. $|R| = 3 < M$

e.g. lives/VB

e.g. (Mary, Taipei, T)


S=(Mary is a good student who lives in Taipei.)

T=(Mary/NP1 is/VB a good student/NP2 who/PP lives/VB in/PP Taipei/NP3.)

A=(Mary/NP1 is/VB a good student/NP2 who/PP lives/VB in/PP Taipei/NP3.)

e.g. Mary is the subject

e.g. Mary is the head

e.g. (Mary, Taipei, T)

e.g. if “Taipei” is the object of a PP,
    then if “Taipei” is a valid semantic role,
        then positive
        else negative
    else positive, $R = \mathrm{normalize}(R)$

e.g. lives → live
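The labeling rule above, sketched in Python. The bound `M = 5`, the lemma table used by `normalize`, and the two boolean flags (which a real system would derive from the parse) are all hypothetical; the sketch only mirrors the branch structure on the slide, plus the $|R| < M$ length check.

```python
from dataclasses import dataclass

M = 5  # hypothetical maximum relation length, in tokens

# Toy stand-in for real lemmatization ("lives" -> "live").
LEMMAS = {"lives": "live", "lived": "live"}

@dataclass
class Candidate:
    e_i: str
    relation: list[str]         # words between the two noun phrases (R)
    e_j: str
    e_j_is_pp_object: bool      # e.g. "Taipei" is the object of "in"
    valid_semantic_role: bool   # assumed to be decided elsewhere

def normalize(relation):
    return [LEMMAS.get(w.lower(), w.lower()) for w in relation]

def label(c):
    """Return (is_positive, relation) following the rule on the slide."""
    if len(c.relation) >= M:                  # require |R| < M
        return False, c.relation
    if c.e_j_is_pp_object and not c.valid_semantic_role:
        return False, c.relation
    return True, normalize(c.relation)        # positive: R = normalize(R)

example = Candidate("Mary", ["who", "lives", "in"], "Taipei", True, True)
print(label(example))
```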


Learner

• Naive Bayes classifier

• $T = (e_i, r_{i,j}, e_j)$

Features include

– the presence of part-of-speech tag sequences in the relation $r_{i,j}$,

– the number of tokens in $r_{i,j}$,

– the number of stopwords in $r_{i,j}$,

– whether or not an object $e$ is found to be a proper noun,

– the part-of-speech tag to the left of $e_i$,

– the part-of-speech tag to the right of $e_j$.
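A toy version of the Learner: the features listed above, encoded as discrete values and fed to a categorical Naive Bayes classifier with add-one smoothing. The feature encoding, stopword list, and four training examples are made up for illustration and are not TextRunner's.

```python
import math
from collections import Counter, defaultdict

STOPWORDS = {"a", "an", "the", "of", "in", "who", "is"}  # toy list

def features(rel_words, rel_tags, e_j, left_tag, right_tag):
    """Discrete features modeled on the slide's list (hypothetical encoding)."""
    return {
        "tag_seq": " ".join(rel_tags),                           # POS sequence in r_ij
        "n_tokens": len(rel_words),                              # tokens in r_ij
        "n_stop": sum(w.lower() in STOPWORDS for w in rel_words),
        "e_j_proper": e_j[:1].isupper(),                         # crude proper-noun test
        "left_tag": left_tag,                                    # POS left of e_i
        "right_tag": right_tag,                                  # POS right of e_j
    }

class NaiveBayes:
    """Categorical Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, X, y):
        self.classes = sorted(set(y))
        self.prior = Counter(y)
        self.n = len(y)
        self.counts = defaultdict(Counter)   # (class, feature) -> value counts
        self.values = defaultdict(set)       # feature -> values seen in training
        for x, c in zip(X, y):
            for f, v in x.items():
                self.counts[(c, f)][v] += 1
                self.values[f].add(v)
        return self

    def log_posterior(self, x, c):
        lp = math.log(self.prior[c] / self.n)
        for f, v in x.items():
            k = len(self.values[f]) + 1      # seen values plus one "unseen" bucket
            lp += math.log((self.counts[(c, f)][v] + 1) / (self.prior[c] + k))
        return lp

    def predict(self, x):
        return max(self.classes, key=lambda c: self.log_posterior(x, c))

X = [
    features(["lives", "in"], ["VBZ", "IN"], "Taipei", "START", "."),
    features(["works", "at"], ["VBZ", "IN"], "Google", "START", "."),
    features(["is", "a", "good", "student", "who", "lives", "in"],
             ["VBZ", "DT", "JJ", "NN", "WP", "VBZ", "IN"], "Taipei", "START", "."),
    features(["and", "the"], ["CC", "DT"], "dog", "VBD", "NN"),
]
y = [True, True, False, False]
model = NaiveBayes().fit(X, y)
print(model.predict(features(["lives", "in"], ["VBZ", "IN"], "Paris", "START", ".")))
```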


Unsupervised Resolution of Objects

and Relations on the Web

Alexander Yates, Oren Etzioni

Turing Center

Computer Science and Engineering

University of Washington

Proceedings of NAACL HLT 2007

Research Motivation

• Web Information Extraction (WIE) systems extract assertions that describe a relation and its arguments from Web text.

– (is capital of, D.C., United States)

– (is capital city of, Washington, U.S.), which describes the same relationship as above but contains a different name for the relation and for each argument.

• We refer to the problem of identifying synonymous object and relation names as Synonym Resolution (SR).


Research purpose

• We present RESOLVER, a novel, domain-independent, unsupervised synonym resolution system that applies to both objects and relations.

• RESOLVER clusters co-referential names together using a probabilistic model informed by string similarity and the similarity of the assertions containing the names.


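Figure: RESOLVER overview. Input: a set of extractions from the Assessor → String Similarity Model (SSM) and Extracted Shared Property Model (ESP) → Combine Evidence → Clustering → output: a structured subset of extractions.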


String Similarity Model (SSM)

• $T = (s, r, o)$:

– $s$ and $o$ are object strings;

– $r$ is a relation string;

– $(r, o)$ is a property of $s$;

– $(s, o)$ is an instance of $r$.

• If $s_1$ and $s_2$ are object strings, $\mathrm{sim}(s_1, s_2)$ is based on Monge-Elkan string similarity.

• If $s_1$ and $s_2$ are relation strings, $\mathrm{sim}(s_1, s_2)$ is based on Levenshtein string distance.

• For $T_i = (s_i, r, o)$ and $T_j = (s_j, r, o)$, $R_{i,j}$ is either $R^t_{i,j}$ ($s_i$ and $s_j$ are co-referential) or $R^f_{i,j}$ (they are not).
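Monge-Elkan similarity averages, over the tokens of one string, the best match against any token of the other. The inner token similarity is not specified here, so this sketch assumes Python's `difflib.SequenceMatcher.ratio()`. The measure is asymmetric, and the sketch returns a raw similarity, not SSM's probability.

```python
from difflib import SequenceMatcher

def token_sim(a, b):
    """Inner similarity between two tokens (difflib ratio, an assumption here)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def monge_elkan(s1, s2, inner=token_sim):
    """Average, over tokens of s1, of the best inner similarity to any token of s2."""
    t1, t2 = s1.split(), s2.split()
    if not t1 or not t2:
        return 0.0
    return sum(max(inner(a, b) for b in t2) for a in t1) / len(t1)

print(monge_elkan("D.C.", "Washington D.C."), monge_elkan("Washington D.C.", "D.C."))
```

A symmetric variant could average the two directions, if the application needs sim(s1, s2) = sim(s2, s1).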


Levenshtein string distance

• Food → Good: distance 1 (substitute "F" with "G")

• God → Good: distance 1 (insert "o")
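These distances can be checked with the standard dynamic-programming Levenshtein algorithm, using unit cost for insertion, deletion, and substitution and keeping only one previous row of the table.

```python
def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))            # distances from "" to prefixes of b
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,               # delete ca
                           cur[j - 1] + 1,            # insert cb
                           prev[j - 1] + (ca != cb))) # substitute (free if equal)
        prev = cur
    return prev[-1]

print(levenshtein("Food", "Good"), levenshtein("God", "Good"))
```

For relation strings, the same function gives, e.g., a distance of 5 between "is capital of" and "is capital city of".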


Extracted Shared Property Model (ESP)

• $T = (s, r, o)$:

– $s$ and $o$ are object strings;

– $r$ is a relation string;

– $(r, o)$ is a property of $s$.

• Example pair $(s_i, s_j)$: $s_i$ = Mars, $s_j$ = red planet

– (Mars, lacks, ozone layer)

– (red planet, lacks, ozone layer)

– They share four properties: $k = 4$.

• Figure: $|U_i| = P_i = 3500$; $|E_i| = n_i = 659$ (Mars); $|E_j| = n_j = 26$ (red planet); $k = 4$ shared properties.


• Balls-and-urns abstraction

• ESP uses a pair of urns, containing $P_i$ and $P_j$ balls respectively, for the two strings $s_i$ and $s_j$. Some subset of the $P_i$ balls has exactly the same labels as an equal-sized subset of the $P_j$ balls. Let the size of this subset be $S_{i,j}$.

• $S_{i,j} = \min(P_i, P_j)$ if $R^t_{i,j}$; $S_{i,j} < \min(P_i, P_j)$ if $R^f_{i,j}$.


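$S_{i,j} = U_i \cap U_j, \quad K = E_i \cap E_j, \quad |K| = k$

$F_i = (E_i \cap S_{i,j}) \setminus K, \quad |F_i| = r$

$F_j = (E_j \cap S_{i,j}) \setminus K, \quad |F_j| = s$

$|U_i| = P_i, \quad |E_i| = n_i, \quad |U_j| = P_j, \quad |E_j| = n_j$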
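Under these definitions, the chance that the two draws share exactly k labels follows from counting. Pick K from the S_{i,j} shared labels, pick disjoint F_i and F_j (sizes r and s) from the remaining shared labels, and fill the rest of E_i and E_j from labels outside S_{i,j}. The sketch implements that count and turns it into P(R^t_{i,j} | k) by assuming a uniform prior over S_{i,j}. Treat the prior, and the premise that P_i and P_j are known, as illustrative assumptions rather than the paper's exact procedure.

```python
from fractions import Fraction
from math import comb

def esp_likelihood(k, n_i, n_j, P_i, P_j, S):
    """P(k | n_i, n_j, P_i, P_j, S): chance that draws of n_i and n_j balls share k labels.

    S is the number of labels the two urns have in common (S_ij);
    r = |F_i| and s = |F_j| are summed out. Assumes n_i <= P_i and n_j <= P_j.
    """
    if not k <= S <= min(P_i, P_j):
        return Fraction(0)
    ways = 0
    for r in range(n_i - k + 1):
        for s in range(n_j - k + 1):
            ways += (comb(S - k, r + s) * comb(r + s, r)   # disjoint F_i, F_j inside S minus K
                     * comb(P_i - S, n_i - k - r)          # rest of E_i outside S
                     * comb(P_j - S, n_j - k - s))         # rest of E_j outside S
    return Fraction(comb(S, k) * ways, comb(P_i, n_i) * comb(P_j, n_j))

def esp_posterior(k, n_i, n_j, P_i, P_j):
    """P(R^t | k), i.e. P(S = min(P_i, P_j) | k), under an assumed uniform prior over S."""
    p_min = min(P_i, P_j)
    evidence = sum(esp_likelihood(k, n_i, n_j, P_i, P_j, S) for S in range(p_min + 1))
    return esp_likelihood(k, n_i, n_j, P_i, P_j, p_min) / evidence

print(float(esp_posterior(8, 10, 10, 20, 20)), float(esp_posterior(1, 10, 10, 20, 20)))
```

Exact `Fraction` arithmetic keeps small examples precise; at the scale of the Mars example (n_i = 659) the double loop gets slow, and a log-space version would be more practical.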


Balls-and-urns abstraction


Combine Evidence


e1=(dog, live, house)

e2=(puppy, live, house)

e3=(cat, live, house)

e4=(cat, live, home)

e5=(kitty, live, home)

Elements[dog]=1 Elements[1]=dog

Elements[live]=2 Elements[2]=live

Elements[house]=3 Elements[3]=house

Elements[puppy]=4 Elements[4]=puppy

Elements[cat]=5 Elements[5]=cat

Elements[home]=6 Elements[6]=home

Elements[kitty]=7 Elements[7]=kitty

Max=50

Round 1

Index[live house]=(1,4,5, live house)

Index[live home]=(5,7, live home)

Index[cat live]=(3,6, cat live)

Sim(1,4)

Sim(1,5)

Sim(4,5)

Sim(5,7)

Sim(3,6)


Elements[dog]=1 Elements[1]=dog+puppy

Elements[live]=2 Elements[2]=live

Elements[house]=3 Elements[3]=house

Elements[puppy]=1 Elements[4]=puppy

Elements[cat]=5 Elements[5]=cat

Elements[home]=6 Elements[6]=home

Elements[kitty]=7 Elements[7]=kitty

Max=50

Round 1

Index[live house]=(1,4,5, live house)

Index[live home]=(5,7, live home)

Index[cat live]=(3,6, cat live)

Sim(1,4)

Sim(1,5)

Sim(4,5)

Sim(5,7)

Sim(3,6)

UsedCluster={}


Elements[dog]=1 Elements[1]=dog+puppy

Elements[live]=2 Elements[2]=live

Elements[house]=3 Elements[3]=house+home

Elements[puppy]=1 Elements[4]=puppy

Elements[cat]=5 Elements[5]=cat+kitty

Elements[home]=3 Elements[6]=home

Elements[kitty]=5 Elements[7]=kitty

Max=50

Round 1

Index[live house]=(1,4,5, live house)

Index[live home]=(5,7, live home)

Index[cat live]=(3,6, cat live)

Sim(1,4) UsedCluster={(1,4), (5,7), (3,6)}

Sim(1,5)

Sim(4,5)

Sim(5,7)

Sim(3,6)


Elements[dog]=1 Elements[1]=dog+puppy

Elements[live]=2 Elements[2]=live

Elements[house]=3 Elements[3]=house+home

Elements[puppy]=1 Elements[4]=puppy

Elements[cat]=5 Elements[5]=cat+kitty

Elements[home]=3 Elements[6]=home

Elements[kitty]=5 Elements[7]=kitty

Max=50

Round 2

Index[live house]=(1,1,5, live house)

Index[live home]=(5,5, live home)

Index[cat live]=(3,3, cat live)

UsedCluster={}


Elements[dog]=1 Elements[1]=dog+puppy+cat

Elements[live]=2 Elements[2]=live

Elements[house]=3 Elements[3]=house+home

Elements[puppy]=1 Elements[4]=puppy

Elements[cat]=1 Elements[5]=cat+kitty

Elements[home]=3 Elements[6]=home

Elements[kitty]=5 Elements[7]=kitty

Max=50

Round 2

Index[live house]=(1,5, live house)

Index[live home]=(5, live home)

Index[cat live]=(3, cat live)

Sim(1,5) UsedCluster={(1,5)}


Elements[dog]=1 Elements[1]=dog+puppy+cat

Elements[live]=2 Elements[2]=live

Elements[house]=3 Elements[3]=house+home

Elements[puppy]=1 Elements[4]=puppy

Elements[cat]=1 Elements[5]=cat+kitty

Elements[home]=3 Elements[6]=home

Elements[kitty]=5 Elements[7]=kitty

Max=50

Round 2

Index[live house]=(1,5, live house)

Index[live home]=(5, live home)

Index[cat live]=(3, cat live)

Sim(1,5) UsedCluster={(1,5)}

(dog, puppy, cat)(live,house)

(cat, kitty)(live,home)

(cat, live)(house, home)
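The clustering walkthrough above can be condensed into a sketch. Each round builds an index from shared properties to clusters, compares only clusters that co-occur in an index list, and merges the best-scoring pairs, letting each cluster take part in at most one merge per round (the role UsedCluster plays on the slides). The index keys, the threshold, the `toy_sim` table, and the reading of Max (lists with more than Max entries are skipped) are assumptions. With this toy table the sketch stops after round 2 with {cat, kitty}, {dog, puppy}, {home, house}; it does not reproduce the slides' round-2 merge of cat into the dog cluster, whose similarity scores are not given.

```python
from itertools import combinations

def resolver_cluster(triples, sim, threshold=0.5, max_size=50):
    """Round-based greedy merging of argument strings (simplified sketch).

    Returns (clusters, rounds). `sim` scores two clusters (sets of strings).
    """
    strings = sorted({s for s, _, _ in triples} | {o for _, _, o in triples})
    rep = {x: x for x in strings}          # string -> id of its current cluster
    members = {x: {x} for x in strings}    # cluster id -> member strings
    rounds = 0
    while True:
        rounds += 1
        # Index: each property lists the clusters that have it. Subjects share
        # (r, o) like Index[live house]; objects share (s, r) like Index[cat live].
        index = {}
        for s, r, o in triples:
            index.setdefault(("subj", r, o), set()).add(rep[s])
            index.setdefault(("obj", s, r), set()).add(rep[o])
        # Only lists with 2..max_size clusters produce candidate pairs.
        candidates = set()
        for ids in index.values():
            if 2 <= len(ids) <= max_size:
                candidates.update(combinations(sorted(ids), 2))
        scored = sorted(((sim(members[a], members[b]), a, b) for a, b in candidates),
                        key=lambda t: (-t[0], t[1], t[2]))
        used = set()                        # like UsedCluster: one merge per cluster per round
        merged = False
        for score, a, b in scored:
            if score <= threshold or a in used or b in used:
                continue
            members[a] |= members.pop(b)    # fold cluster b into cluster a
            for x in members[a]:
                rep[x] = a
            used.update((a, b))
            merged = True
        if not merged:
            return sorted(sorted(m) for m in members.values()), rounds

# Hypothetical similarity: 1.0 if the clusters contain a listed synonym pair.
SYNONYMS = {frozenset(p) for p in [("dog", "puppy"), ("cat", "kitty"), ("house", "home")]}

def toy_sim(c1, c2):
    return 1.0 if any(frozenset((x, y)) in SYNONYMS for x in c1 for y in c2) else 0.0

TRIPLES = [("dog", "live", "house"), ("puppy", "live", "house"), ("cat", "live", "house"),
           ("cat", "live", "home"), ("kitty", "live", "home")]

clusters, rounds = resolver_cluster(TRIPLES, toy_sim)
print(clusters, rounds)
```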


Experiment

• Dataset:

– 9797 distinct object strings

– 10151 distinct relation strings

• Metrics:

– Measure the precision by manually labeling all of the clusters.

– Measure the recall.

• The top 200 object strings formed 51 clusters, with an average cluster size of 2.9.

• The relation strings formed 110 clusters, with an average cluster size of 4.9.


Result

• CSM had particular trouble with lower-frequency strings, judging far too many of them to be co-referential on too little evidence.

• Extraction errors

• Multiple word senses


Function Filtering

• (Richmond, capital of, Virginia)

(Charleston, capital of, West Virginia)

• If $\mathrm{sim}(y_1, y_2)$ exceeds a threshold, but there exist a one-to-one relation $f$ and extractions $f(x_1, y_1)$ and $f(x_2, y_2)$ with $x_1 \ne x_2$, then $y_1$ and $y_2$ are not merged.

• It requires as input the set of functional and one-to-one relations in the data.
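The one-to-one case of this rule can be sketched as a check run on a candidate pair (y1, y2) whose similarity is assumed to have already exceeded the threshold. The helper `function_filter` and the extractions are hypothetical, and first arguments are compared as exact strings, so two spellings of the same x would wrongly block a merge.

```python
def function_filter(y1, y2, extractions, one_to_one):
    """True if merging y1 and y2 should be blocked.

    For a relation f assumed to be one-to-one, extractions f(x1, y1) and
    f(x2, y2) with no x in common imply y1 != y2.
    """
    for f in one_to_one:
        xs1 = {x for x, rel, y in extractions if rel == f and y == y1}
        xs2 = {x for x, rel, y in extractions if rel == f and y == y2}
        if xs1 and xs2 and xs1.isdisjoint(xs2):
            return True
    return False

EXTRACTIONS = [
    ("Richmond", "capital of", "Virginia"),
    ("Charleston", "capital of", "West Virginia"),
    ("Richmond", "capital of", "VA"),
]
ONE_TO_ONE = {"capital of"}

print(function_filter("Virginia", "West Virginia", EXTRACTIONS, ONE_TO_ONE))
print(function_filter("Virginia", "VA", EXTRACTIONS, ONE_TO_ONE))
```

Here "Virginia" and "West Virginia" are kept apart because their capitals differ, while "Virginia" and "VA" share "Richmond" and pass the filter.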


Web Hitcounts

• While names for two similar objects may often appear together in the same sentence, it is relatively rare for two different names of the same object to appear in the same sentence.

• Coordination-Phrase Filter searches


Experiment


Conclusion

• This study showed how TEXTRUNNER automatically extracts information from the Web, and how the RESOLVER system finds clusters of co-referential object names in the relations with a precision of 78% and a recall of 68% with the aid of CPF.


Comments

• The assumptions of the ESP model

• How to use TextRunner in my research

– Find relations

– Use TextRunner for query expansion
