PowerPoint

advertisement

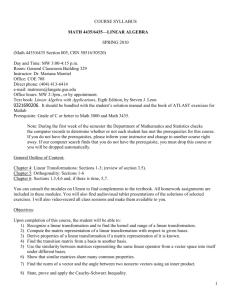

Lecture XXVII

Orthonormal Bases and Projections

Suppose that a set of vectors $\{x_1,\ldots,x_r\}$ forms a basis for some subspace $S$ of $R^m$, where $r \le m$. For mathematical simplicity, we may want to form an orthonormal basis for this space. One way to form such a basis is Gram-Schmidt orthonormalization. In this procedure, we generate a new set of mutually orthogonal vectors $\{y_1,\ldots,y_r\}$ and then normalize them into orthonormal vectors $\{z_1,\ldots,z_r\}$.

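The Gram-Schmidt process is:

$$y_1 = x_1$$

$$y_2 = x_2 - \frac{x_2'y_1}{y_1'y_1}\,y_1$$

$$y_3 = x_3 - \frac{x_3'y_1}{y_1'y_1}\,y_1 - \frac{x_3'y_2}{y_2'y_2}\,y_2$$

and so on, each $y_i$ being $x_i$ minus its projections onto $y_1,\ldots,y_{i-1}$. The orthonormal vectors are then

$$z_i = \frac{y_i}{(y_i'y_i)^{1/2}}$$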

Example

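$$x_1 = \begin{bmatrix}1\\3\\4\end{bmatrix}, \qquad x_2 = \begin{bmatrix}9\\7\\16\end{bmatrix}$$

$$y_1 = x_1, \qquad y_2 = x_2 - \frac{x_2'y_1}{y_1'y_1}\,y_1 = \begin{bmatrix}9\\7\\16\end{bmatrix} - \frac{94}{26}\begin{bmatrix}1\\3\\4\end{bmatrix} = \begin{bmatrix}70/13\\-50/13\\20/13\end{bmatrix}$$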

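The vectors can then be normalized to unit length. First, however, we can test for orthogonality:

$$y_1'y_2 = \begin{bmatrix}1 & 3 & 4\end{bmatrix}\begin{bmatrix}70/13\\-50/13\\20/13\end{bmatrix} = \frac{70 - 150 + 80}{13} = 0$$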
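The process and the worked example can be reproduced with a minimal sketch in plain Python. It uses `fractions.Fraction` so that $y_2$ comes out exactly as $(70/13, -50/13, 20/13)'$; the helper names (`dot`, `gram_schmidt`, `normalize`) are illustrative and assume the input vectors are linearly independent. Only the final normalization needs floating point, because of the square root.

```python
from fractions import Fraction
import math


def dot(u, v):
    """Inner product u'v."""
    return sum(a * b for a, b in zip(u, v))


def gram_schmidt(xs):
    """Classical Gram-Schmidt: y_i = x_i - sum_j (x_i'y_j / y_j'y_j) y_j.

    Returns the orthogonal (not yet normalized) vectors y_1, ..., y_r as
    exact Fractions. Assumes the x_i are linearly independent.
    """
    ys = []
    for x in xs:
        y = [Fraction(v) for v in x]
        for prev in ys:
            coef = dot(x, prev) / dot(prev, prev)
            y = [yi - coef * pi for yi, pi in zip(y, prev)]
        ys.append(y)
    return ys


def normalize(y):
    """z_i = y_i / (y_i'y_i)^(1/2); the square root forces floating point."""
    length = math.sqrt(dot(y, y))
    return [float(v) / length for v in y]


# The two vectors from the example.
x1 = [1, 3, 4]
x2 = [9, 7, 16]
y1, y2 = gram_schmidt([x1, x2])
z1, z2 = normalize(y1), normalize(y2)

print(y2)           # [Fraction(70, 13), Fraction(-50, 13), Fraction(20, 13)]
print(dot(y1, y2))  # 0
```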

Theorem 2.13 Every $r$-dimensional vector space, except the zero-dimensional space $\{0\}$, has an orthonormal basis.

Theorem 2.14 Let $\{z_1,\ldots,z_r\}$ be an orthonormal basis for some vector subspace $S$ of $R^m$. Then each $x \in R^m$ can be expressed uniquely as

$$x = u + v$$

where $u \in S$ and $v$ is a vector that is orthogonal to every vector in $S$.

Definition 2.10 Let $S$ be a vector subspace of $R^m$. The orthogonal complement of $S$, denoted $S^\perp$, is the collection of all vectors in $R^m$ that are orthogonal to every vector in $S$. That is, $S^\perp = \{x : x \in R^m \text{ and } x'y = 0 \text{ for all } y \in S\}$.

Theorem 2.15 If $S$ is a vector subspace of $R^m$, then its orthogonal complement $S^\perp$ is also a vector subspace of $R^m$.

Projection Matrices

The orthogonal projection of an $m \times 1$ vector $x$ onto a vector space $S$ can be expressed in matrix form.

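Let $\{z_1,\ldots,z_r\}$ be any orthonormal basis for $S$, and let $\{z_1,\ldots,z_m\}$ be an orthonormal basis for $R^m$ that extends it. Any vector $x$ can be written as:

$$x = \alpha_1 z_1 + \cdots + \alpha_r z_r + \alpha_{r+1} z_{r+1} + \cdots + \alpha_m z_m = u + v$$

Aggregating $\alpha = (\alpha_1', \alpha_2')'$, where $\alpha_1 = (\alpha_1, \ldots, \alpha_r)'$ and $\alpha_2 = (\alpha_{r+1}, \ldots, \alpha_m)'$, and assuming a similar decomposition of $Z = [Z_1 \; Z_2]$, the vector $x$ can be written as: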

$$x = Z\alpha = Z_1\alpha_1 + Z_2\alpha_2$$

$$u = Z_1\alpha_1$$

$$v = Z_2\alpha_2$$

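Given orthogonality, we know that $Z_1'Z_1 = I_r$ and $Z_1'Z_2 = (0)$, and so

$$Z_1Z_1'x = Z_1Z_1'\begin{bmatrix}Z_1 & Z_2\end{bmatrix}\begin{bmatrix}\alpha_1\\ \alpha_2\end{bmatrix} = Z_1\begin{bmatrix}I_r & (0)\end{bmatrix}\begin{bmatrix}\alpha_1\\ \alpha_2\end{bmatrix} = Z_1\alpha_1 = u$$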

Theorem 2.17 Suppose the columns of the $m \times r$ matrix $Z_1$ form an orthonormal basis for the vector space $S$, which is a subspace of $R^m$. If $x \in R^m$, the orthogonal projection of $x$ onto $S$ is given by $Z_1Z_1'x$.
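Theorem 2.17 can be checked numerically with a small sketch. The orthonormal basis $Z_1$ and the vector $x$ below are made-up illustrative values (rational, so the arithmetic stays exact), and the list-of-rows helpers `matmul` and `transpose` belong to the sketch rather than to any library. It forms $u = Z_1Z_1'x$ and $v = x - u$ and confirms that $Z_1'v = 0$.

```python
from fractions import Fraction as F


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


# Hypothetical example: the columns of Z1 are an orthonormal basis for a
# two-dimensional subspace S of R^3 (rational entries keep arithmetic exact).
Z1 = [[F(1), F(0)],
      [F(0), F(3, 5)],
      [F(0), F(4, 5)]]
x = [[F(2)], [F(1)], [F(7)]]  # an arbitrary column vector in R^3

P = matmul(Z1, transpose(Z1))                  # projection matrix Z1 Z1'
u = matmul(P, x)                               # component of x in S
v = [[xi[0] - ui[0]] for xi, ui in zip(x, u)]  # component orthogonal to S

print(matmul(transpose(Z1), Z1))  # should be I_r
print(matmul(transpose(Z1), v))   # should be zero: v is orthogonal to S
```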

Projection matrices divide the space into the subspace spanned by a set of vectors and a set of deviations orthogonal to that subspace. The Gram-Schmidt process yields one such separation.

In general, if we define the $m \times r$ matrix $X_1 = (x_1, \ldots, x_r)$ and let the $r \times r$ matrix $A$ be the linear transformation of $X_1$ that produces an orthonormal basis, so that:

$$Z_1 = X_1A$$

we are left with the result that:

$$Z_1'Z_1 = A'X_1'X_1A = I_r$$

Given that the matrix $A$ is nonsingular, this implies $X_1'X_1 = (A')^{-1}A^{-1} = (AA')^{-1}$, so $AA' = (X_1'X_1)^{-1}$. The projection matrix that maps any vector $x$ onto the space spanned by $X_1$ then becomes:

$$P_S = Z_1Z_1' = X_1AA'X_1' = X_1(X_1'X_1)^{-1}X_1'$$
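As a check on this formula, the sketch below builds $P_S$ for the example vectors $x_1 = (1,3,4)'$ and $x_2 = (9,7,16)'$ in two ways: as $X_1(X_1'X_1)^{-1}X_1'$, and as $Z_1Z_1'$ from the Gram-Schmidt vectors $y_1, y_2$ (using $z_iz_i' = y_iy_i'/(y_i'y_i)$ to stay exact). The closed-form `inverse_2x2` helper is an illustrative assumption that only covers $r = 2$.

```python
from fractions import Fraction as F


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def inverse_2x2(M):
    """Inverse of a nonsingular 2 x 2 matrix (enough for r = 2 here)."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def scaled_outer(y):
    """y y' / (y'y), which equals z z' for z = y / (y'y)^(1/2)."""
    yy = sum(t * t for t in y)
    return [[a * b / yy for b in y] for a in y]


# X1 holds x1 and x2 from the Gram-Schmidt example as its columns.
X1 = [[F(1), F(9)],
      [F(3), F(7)],
      [F(4), F(16)]]
X1t = transpose(X1)
P_S = matmul(matmul(X1, inverse_2x2(matmul(X1t, X1))), X1t)  # X1 (X1'X1)^{-1} X1'

# The same projection from the orthogonal vectors y1, y2 of that example.
y1 = [F(1), F(3), F(4)]
y2 = [F(70, 13), F(-50, 13), F(20, 13)]
Z1Z1t = [[p + q for p, q in zip(r1, r2)]
         for r1, r2 in zip(scaled_outer(y1), scaled_outer(y2))]

print(P_S == Z1Z1t)  # True
```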

Ordinary least squares is also a spanning decomposition. In the traditional linear model:

$$y = Xb + \varepsilon$$

$$\hat y = X\hat b$$

Within this formulation, $b$ is chosen to minimize the sum of squared errors between $y$ and the estimated $y$:

$$(y - Xb)'(y - Xb)$$

This problem amounts to minimizing the distance between the observed $y$ and the plane $Xb$ spanned by the columns of $X$, and the closest point on that plane is the orthogonal projection of $y$. If $X$ has full column rank, the projection matrix is $X(X'X)^{-1}X'$, and the projection becomes:

$$Xb = X(X'X)^{-1}X'y$$

Premultiplying each side by $X'$ yields:

$$X'Xb = X'X(X'X)^{-1}X'y$$

and premultiplying both sides by $(X'X)^{-1}$ gives:

$$b = (X'X)^{-1}X'X(X'X)^{-1}X'y$$

$$b = (X'X)^{-1}X'y$$
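A minimal sketch of this formula on a small made-up data set (four observations, an intercept column, and one regressor; the numbers are purely illustrative). It computes $b = (X'X)^{-1}X'y$ exactly with `Fraction` and confirms that the residuals $y - Xb$ are orthogonal to the columns of $X$, which is the orthogonality the projection argument relies on. The helpers are the sketch's own, not a library API.

```python
from fractions import Fraction as F


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def inverse_2x2(M):
    """Inverse of a nonsingular 2 x 2 matrix (two regressors here)."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical data: intercept column plus one regressor.
X = [[F(1), F(1)],
     [F(1), F(2)],
     [F(1), F(3)],
     [F(1), F(4)]]
y = [[F(2)], [F(3)], [F(5)], [F(6)]]

Xt = transpose(X)
b = matmul(inverse_2x2(matmul(Xt, X)), matmul(Xt, y))  # b = (X'X)^{-1} X'y
fitted = matmul(X, b)                                  # Xb = X(X'X)^{-1}X'y
residuals = [[yi[0] - fi[0]] for yi, fi in zip(y, fitted)]

print(b)                      # [[Fraction(1, 2)], [Fraction(7, 5)]]
print(matmul(Xt, residuals))  # X'(y - Xb): zero, residuals orthogonal to X
```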

An idempotent matrix is any square matrix $A$ such that $AA = A$.

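Note that the sum of squared errors under the projection can be expressed as:

$$\begin{aligned}
SSE &= (y - Xb)'(y - Xb) \\
&= \left(y - X(X'X)^{-1}X'y\right)'\left(y - X(X'X)^{-1}X'y\right) \\
&= \left[\left(I_n - X(X'X)^{-1}X'\right)y\right]'\left[\left(I_n - X(X'X)^{-1}X'\right)y\right] \\
&= y'\left(I_n - X(X'X)^{-1}X'\right)'\left(I_n - X(X'X)^{-1}X'\right)y
\end{aligned}$$

where $n$ is the number of observations.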

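In general, the matrix $I_n - X(X'X)^{-1}X'$ is an idempotent matrix, meaning that it satisfies $AA = A$:

$$\begin{aligned}
\left(I_n - X(X'X)^{-1}X'\right)\left(I_n - X(X'X)^{-1}X'\right)
&= I_n - X(X'X)^{-1}X' - X(X'X)^{-1}X' + X(X'X)^{-1}X'X(X'X)^{-1}X' \\
&= I_n - 2X(X'X)^{-1}X' + X(X'X)^{-1}X' \\
&= I_n - X(X'X)^{-1}X'
\end{aligned}$$

where the second step uses $X'X(X'X)^{-1} = I$.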
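The idempotency of $I_n - X(X'X)^{-1}X'$, together with its symmetry, can be checked exactly on a hypothetical design matrix (an intercept plus one regressor, chosen only for illustration). The list-of-rows helpers are the sketch's own, not a library API.

```python
from fractions import Fraction as F


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def inverse_2x2(M):
    """Inverse of a nonsingular 2 x 2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical n = 4 design matrix.
X = [[F(1), F(1)],
     [F(1), F(2)],
     [F(1), F(3)],
     [F(1), F(4)]]
Xt = transpose(X)
P = matmul(matmul(X, inverse_2x2(matmul(Xt, X))), Xt)  # X(X'X)^{-1}X'
n = len(X)
M = [[F(int(i == j)) - P[i][j] for j in range(n)] for i in range(n)]  # I_n - P

print(matmul(M, M) == M)  # True: M is idempotent
print(M == transpose(M))  # True: M is symmetric
```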

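Thus, because $I_n - X(X'X)^{-1}X'$ is symmetric as well as idempotent, the SSE can be expressed as:

$$SSE = y'\left(I_n - X(X'X)^{-1}X'\right)y = y'y - y'X(X'X)^{-1}X'y = v'v$$

which is the sum of the squared orthogonal errors $v = \left(I_n - X(X'X)^{-1}X'\right)y$ from the regression.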
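The three expressions for the SSE can be compared on a small hypothetical data set (an intercept plus one regressor, four made-up observations). In exact arithmetic, $y'(I_n - X(X'X)^{-1}X')y$, $y'y - y'X(X'X)^{-1}X'y$, and $v'v$ agree.

```python
from fractions import Fraction as F


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def inverse_2x2(M):
    """Inverse of a nonsingular 2 x 2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical data.
X = [[F(1), F(1)],
     [F(1), F(2)],
     [F(1), F(3)],
     [F(1), F(4)]]
y = [[F(2)], [F(3)], [F(5)], [F(6)]]

Xt, yt = transpose(X), transpose(y)
P = matmul(matmul(X, inverse_2x2(matmul(Xt, X))), Xt)  # X(X'X)^{-1}X'
n = len(X)
M = [[F(int(i == j)) - P[i][j] for j in range(n)] for i in range(n)]  # I_n - P

sse_quadratic = matmul(matmul(yt, M), y)[0][0]                      # y'(I_n - P)y
sse_difference = matmul(yt, y)[0][0] - matmul(matmul(yt, P), y)[0][0]  # y'y - y'Py
v = matmul(M, y)                                                    # residuals
sse_residuals = matmul(transpose(v), v)[0][0]                       # v'v

print(sse_quadratic, sse_difference, sse_residuals)  # 1/5 1/5 1/5
```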

Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors (or, more appropriately, latent roots and characteristic vectors) are defined by the solution of

$$Ax = \lambda x$$

for a nonzero $x$. Mathematically, we can solve for the eigenvalue $\lambda$ by rearranging the terms:

$$Ax - \lambda x = 0$$

$$(A - \lambda I)x = 0$$

Because $x$ is nonzero, $A - \lambda I$ must be singular. Solving for $\lambda$ then involves solving the characteristic equation

$$|A - \lambda I| = 0$$

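Again using the matrix in the previous example:

$$\left|\begin{bmatrix}1 & 9 & 5\\ 3 & 7 & 8\\ 2 & 3 & 5\end{bmatrix} - \lambda\begin{bmatrix}1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{bmatrix}\right| = \begin{vmatrix}1-\lambda & 9 & 5\\ 3 & 7-\lambda & 8\\ 2 & 3 & 5-\lambda\end{vmatrix} = -5 + 14\lambda + 13\lambda^2 - \lambda^3 = 0$$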
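For a $3 \times 3$ matrix, expanding $|A - \lambda I|$ gives $-\lambda^3 + \operatorname{tr}(A)\lambda^2 - c\lambda + |A|$, where $c$ is the sum of the three principal $2 \times 2$ minors. The sketch below uses that identity to recover the coefficients for this example, then looks for sign changes of the cubic on an integer grid and refines each bracket by bisection. The search range and tolerance are arbitrary choices of the sketch, and a grid search like this can miss two roots that fall in the same unit interval.

```python
def char_poly_3x3(A):
    """Coefficients (c3, c2, c1, c0) with |A - lam*I| = c3*lam^3 + c2*lam^2 + c1*lam + c0."""
    trace = A[0][0] + A[1][1] + A[2][2]
    minors = ((A[0][0] * A[1][1] - A[0][1] * A[1][0])
              + (A[0][0] * A[2][2] - A[0][2] * A[2][0])
              + (A[1][1] * A[2][2] - A[1][2] * A[2][1]))
    det = (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
           - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
           + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))
    return (-1, trace, -minors, det)


def evaluate(coeffs, lam):
    """Evaluate the cubic at lam using Horner's rule."""
    c3, c2, c1, c0 = coeffs
    return ((c3 * lam + c2) * lam + c1) * lam + c0


def real_roots(coeffs, lo=-50, hi=50, tol=1e-12):
    """Bracket sign changes on the integer grid lo..hi, then bisect each bracket."""
    roots = []
    for k in range(lo, hi):
        left, right = float(k), float(k + 1)
        f_left = evaluate(coeffs, left)
        f_right = evaluate(coeffs, right)
        if f_left == 0:
            roots.append(left)
        elif f_left * f_right < 0:
            while right - left > tol:
                mid = (left + right) / 2
                f_mid = evaluate(coeffs, mid)
                if f_left * f_mid <= 0:
                    right = mid
                else:
                    left, f_left = mid, f_mid
            roots.append((left + right) / 2)
    return roots


A = [[1, 9, 5],
     [3, 7, 8],
     [2, 3, 5]]
coeffs = char_poly_3x3(A)
print(coeffs)  # (-1, 13, 14, -5)
roots = real_roots(coeffs)
print(roots)
```

The three roots found sum to $\operatorname{tr}(A) = 13$, as the eigenvalues of any square matrix must.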

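In general, the characteristic equation of an $m \times m$ matrix has $m$ roots. Some of these roots may be the same, and some may be complex. In the above case, however, all three roots are real and distinct: one lies between $-2$ and $-1$, one between $0$ and $1$, and one between $13$ and $14$. Turning to another example:

$$A = \begin{bmatrix}5 & -3 & 3\\ 4 & -2 & 3\\ 4 & -4 & 5\end{bmatrix}, \qquad \lambda \in \{1, 2, 5\}$$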

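The eigenvectors are then determined by the linear dependence in the $A - \lambda I$ matrix. Taking the last example with $\lambda = 1$:

$$A - \lambda I = \begin{bmatrix}4 & -3 & 3\\ 4 & -3 & 3\\ 4 & -4 & 4\end{bmatrix}$$

Obviously, the first and second rows are linearly dependent. The reduced system then implies that $(A - I)x = 0$ as long as $x_1 = 0$ and $x_2 = x_3$, so $x = (0, 1, 1)'$ (or any nonzero multiple of it) is an eigenvector for $\lambda = 1$.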
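When $\lambda$ is a simple root, $A - \lambda I$ has rank 2, so for a $3 \times 3$ matrix its null space is spanned by the cross product of any two linearly independent rows. The sketch below uses that shortcut for the example matrix with rows $(5, -3, 3)$, $(4, -2, 3)$, $(4, -4, 5)$, scales each eigenvector so its first nonzero entry is 1, and checks $Ax = \lambda x$ for $\lambda = 1, 2, 5$. The helper names are illustrative, and the shortcut does not handle repeated roots.

```python
from fractions import Fraction
from itertools import combinations


def cross(u, v):
    """Cross product of two 3-vectors; the result is orthogonal to both."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def eigenvector_3x3(A, lam):
    """Eigenvector of a 3 x 3 matrix A for the eigenvalue lam.

    Assumes A - lam*I has rank 2 (a simple root), so its null space is
    spanned by the cross product of two linearly independent rows.
    """
    M = [[A[i][j] - (lam if i == j else 0) for j in range(3)] for i in range(3)]
    for r1, r2 in combinations(M, 2):
        x = cross(r1, r2)
        if any(x):  # skip pairs of linearly dependent rows
            first = next(c for c in x if c != 0)
            return [Fraction(c, first) for c in x]  # first nonzero entry -> 1
    raise ValueError("A - lam*I has rank below 2")


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


A = [[5, -3, 3],
     [4, -2, 3],
     [4, -4, 5]]
for lam in (1, 2, 5):
    x = eigenvector_3x3(A, lam)
    assert matvec(A, x) == [lam * c for c in x]  # A x = lam x
    print(lam, x)
```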

Theorem 11.5.1 For any symmetric matrix $A$, there exists an orthogonal matrix $H$ (that is, a square matrix satisfying $H'H = I$) such that:

$$H'AH = L$$

where $L$ is a diagonal matrix. The diagonal elements of $L$ are called the characteristic roots (or eigenvalues) of $A$. The $i$th column of $H$ is called the characteristic vector (or eigenvector) of $A$ corresponding to the $i$th characteristic root of $A$.

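Once we know that a symmetric matrix has a full set of orthonormal eigenvectors, the proof follows directly from the definition of eigenvalues. Letting $H$ be a matrix with these eigenvectors in its columns, it is obvious that

$$AH = HL$$

and therefore

$$H'AH = H'HL = L$$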
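Theorem 11.5.1 is stated for any symmetric matrix; in the $2 \times 2$ case a single plane rotation is enough to find $H$, and repeating such rotations is the idea behind the Jacobi eigenvalue method. The sketch below picks the angle $\theta$ with $\tan 2\theta = 2a_{12}/(a_{11} - a_{22})$, which zeroes the off-diagonal element of $H'AH$. The example matrix and helper names are illustrative choices.

```python
import math


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def jacobi_rotation_2x2(A):
    """Rotation H = [[c, -s], [s, c]] with H'AH diagonal, for symmetric 2 x 2 A.

    theta satisfies tan(2*theta) = 2*a12 / (a11 - a22); atan2 keeps the
    correct quadrant and also covers the case a11 == a22.
    """
    a11, a12, a22 = A[0][0], A[0][1], A[1][1]
    theta = 0.5 * math.atan2(2 * a12, a11 - a22)
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


A = [[2.0, 1.0],
     [1.0, 2.0]]
H = jacobi_rotation_2x2(A)
L = matmul(matmul(transpose(H), A), H)  # diagonal, with the eigenvalues 3 and 1
HtH = matmul(transpose(H), H)           # identity, up to rounding
print(L)
```

For larger symmetric matrices, the Jacobi method sweeps such rotations over the off-diagonal pairs repeatedly until the off-diagonal elements are negligible.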

Kronecker Products

Two special matrix operations that you will encounter are the Kronecker product and the vec(·) operator.

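The Kronecker product of two matrices multiplies each element of the first matrix by the entire second matrix:

$$A \otimes B = \begin{bmatrix}a_{11}B & a_{12}B & \cdots & a_{1n}B\\ a_{21}B & a_{22}B & \cdots & a_{2n}B\\ \vdots & \vdots & \ddots & \vdots\\ a_{m1}B & a_{m2}B & \cdots & a_{mn}B\end{bmatrix}$$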

The vec(·) operator then stacks the columns of a matrix on top of one another.
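A minimal sketch of both operators on matrices stored as lists of rows; `kron` and `vec` are illustrative names rather than a particular library's API. For an $m \times n$ matrix $A$ and a $p \times q$ matrix $B$, $A \otimes B$ is $mp \times nq$, and entry $(i, j)$ comes from block $(\lfloor i/p \rfloor, \lfloor j/q \rfloor)$.

```python
def kron(A, B):
    """Kronecker product: block (i, j) of A (x) B is a_ij * B.

    For an m x n matrix A and a p x q matrix B the result is mp x nq.
    """
    p, q = len(B), len(B[0])
    return [[A[i // p][j // q] * B[i % p][j % q]
             for j in range(len(A[0]) * q)]
            for i in range(len(A) * p)]


def vec(A):
    """Stack the columns of A on top of one another (returned as a flat list)."""
    return [A[i][j] for j in range(len(A[0])) for i in range(len(A))]


A = [[1, 2],
     [3, 4]]
B = [[0, 5],
     [6, 7]]
print(kron(A, B))  # [[0, 5, 0, 10], [6, 7, 12, 14], [0, 15, 0, 20], [18, 21, 24, 28]]
print(vec(A))      # [1, 3, 2, 4]
```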