Lecture_7_May11


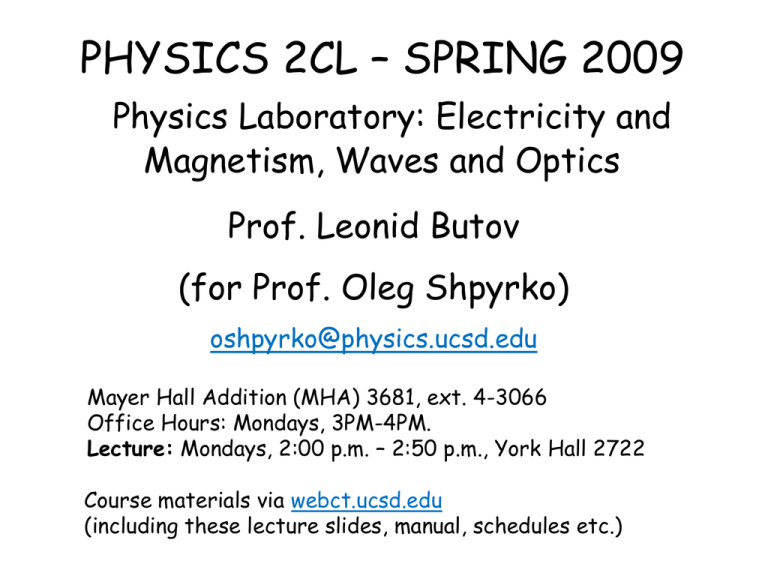

PHYSICS 2CL – SPRING 2009
Physics Laboratory: Electricity and Magnetism, Waves and Optics

Prof. Leonid Butov (for Prof. Oleg Shpyrko)
oshpyrko@physics.ucsd.edu
Mayer Hall Addition (MHA) 3681, ext. 4-3066
Office Hours: Mondays, 3PM-4PM
Lecture: Mondays, 2:00 p.m. – 2:50 p.m., York Hall 2722
Course materials via webct.ucsd.edu (including these lecture slides, manual, schedules etc.)

Today’s Plan: Chi-squared, least-squares fitting
Next week: Review Lecture (Prof. Shpyrko is back)

Long-term course schedule

| Week | Lecture | Topic | Experiment |
|------|---------|-------|------------|
| 1 | Mar. 30 | Introduction | NO LABS |
| 2 | Apr. 6 | Error propagation; Oscilloscope; RC circuits | 0 |
| 3 | Apr. 13 | Normal distribution; RLC circuits | 1 |
| 4 | Apr. 20 | Statistical analysis, t-values | 2 |
| 5 | Apr. 27 | Resonant circuits | 3 |
| 6 | May 4 | Review of Expts. 4, 5, 6 and 7 | 4, 5, 6 or 7 |
| 7 | May 11 | Least squares fitting, χ² test | 4, 5, 6 or 7 |
| 8 | May 18 | Review Lecture | 4, 5, 6 or 7 |
| 9 | May 25 | No Lecture (UCSD Holiday: Memorial Day) | NO LABS, Formal Reports Due |
| 10 | June 1 | Final Exam | NO LABS |

Schedule available on WebCT

Labs Done This Quarter

0. Using lab hardware & software
1. Analog Electronic Circuits (resistors/capacitors)
2. Oscillations and Resonant Circuits (1/2)
3. Resonant circuits (2/2)

This week’s lab(s), 3 out of 4:

4. Refraction & Interference with Microwaves
5. Magnetic Fields
6. LASER diffraction and interference
7. Lenses and the human eye

LEAST SQUARES FITTING (Ch.8)

Purpose:

1) Agreement with theory?
2) Parameters of $y = f(x)$, for example $y(x) = Bx$

[Figure: plot of y(x) versus x for y(x) = Bx]

LINEAR FIT

$y(x) = A + Bx$, where $A$ is the intercept with the y axis and $B$ is the slope, $B = \tan\theta$.

[Figure: straight line y(x) versus x, showing the intercept A and the angle θ]

LINEAR FIT

Data points: $(x_1, y_1), (x_2, y_2), \dots, (x_6, y_6)$.

Two candidate lines of the form $y(x) = A + Bx$: $y = -2 + 2x$ and $y = 9 + 0.8x$.

[Figure: the six data points with both candidate lines]

Assumptions:

1) $\delta x_j \ll \delta y_j$; take $\delta x_j = 0$
2) $y_j$ are normally distributed
3) $\sigma_j$ is the same for all $y_j$

LINEAR FIT: $y(x) = A + Bx$

Method of linear regression, aka the least-squares fit.

Quality of the fit:

$$\sum_j \left[ y_j - y_{\mathrm{fit},j} \right]^2$$

[Figure: data points and fitted line, with the residuals $y_3 - y_{\mathrm{fit},3}$ and $y_4 - y_{\mathrm{fit},4}$ marked]

Minimize:

$$\sum_j \left[ y_j - (A + B x_j) \right]^2$$

What about error bars? Not all data points are created equal!

[Figure: data points with error bars of different sizes]

Weight-adjusted average

Reminder: typically the average value of $x$ is given as

$$\bar{x} = \frac{\sum_i x_i}{N} = \frac{x_1 + x_2 + \dots + x_N}{N}$$

Sometimes we want to weight data points with some “weight factors” $w_1$, $w_2$, etc.:

$$\bar{x} = \frac{\sum_i w_i x_i}{\sum_i w_i} = \frac{w_1 x_1 + w_2 x_2 + \dots + w_N x_N}{w_1 + w_2 + \dots + w_N}$$

You already KNOW this, e.g. your grade:

$$\mathrm{GRADE} = \frac{20\% \cdot \mathrm{Formal} + 12\% \cdot \sum \mathrm{LABS} + 20\% \cdot \mathrm{FINAL}}{20\% + 12\% \cdot 5 + 20\%}$$

(Weights: 20 for Final Exam, 20 for Formal Report, and 12 for each of 5 labs; lowest score gets dropped.)

More precise data points should carry more weight!
Idea: weight the points with ~ the inverse of their error bar.

Weight-adjusted average: How do we average values with different uncertainties?

Student A measured resistance 100 ± 1 Ω ($x_1 = 100\ \Omega$, $\sigma_1 = 1\ \Omega$)
Student B measured resistance 105 ± 5 Ω ($x_2 = 105\ \Omega$, $\sigma_2 = 5\ \Omega$)

In this case, calculate for $i = 1, 2$:

$$\bar{x} = \frac{w_1 x_1 + w_2 x_2}{w_1 + w_2}$$

with “statistical” weights:

$$w_1 = \frac{1}{\sigma_1^2}, \qquad w_2 = \frac{1}{\sigma_2^2}$$

BOTTOM LINE: More precise measurements get weighted more heavily!
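As a small illustration of the weight-adjusted average, the sketch below applies the statistical weights $w_i = 1/\sigma_i^2$ to the two resistance measurements quoted above. The function name `weighted_average` is a hypothetical helper chosen for this example, not something from the course materials.

```python
def weighted_average(values, sigmas):
    """Weighted mean of `values` with statistical weights w_i = 1 / sigma_i**2."""
    if len(values) != len(sigmas) or not values:
        raise ValueError("values and sigmas must be non-empty and of equal length")
    weights = [1.0 / s**2 for s in sigmas]
    weighted_sum = sum(w * x for w, x in zip(weights, values))
    return weighted_sum / sum(weights)


# Student A: 100 +/- 1 ohm, Student B: 105 +/- 5 ohm (values from the slide)
x_best = weighted_average([100.0, 105.0], [1.0, 5.0])
x_plain = (100.0 + 105.0) / 2  # unweighted average, for comparison

print(f"weighted average:   {x_best:.2f} ohm")
print(f"unweighted average: {x_plain:.2f} ohm")
```

The weights are 1 and 1/25, so the result is 104.2 / 1.04 ≈ 100.19 Ω, much closer to Student A's more precise value than the plain average of 102.5 Ω.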
χ² TEST for FIT (Ch.12)

$$\chi^2 = \sum_{j=1}^{N} \frac{\left( y_j - f(x_j) \right)^2}{\sigma_j^2}$$

How good is the agreement between theory and data?

[Figure: data points with error bars and the fitted curve, y(x) versus x]

If the fit is good, each term is of order 1, so

$$\chi^2 \approx \frac{N \sigma_y^2}{\sigma_y^2} = N$$

Reduced chi-squared:

$$\tilde{\chi}^2 = \frac{\chi^2}{d} \approx 1$$

$d = N - c$ is the number of degrees of freedom, where

- $N$ is the number of data points
- $c$ is the number of parameters calculated from data (the number of constraints)

(Example: You can always draw a perfect line through 2 points.)

LEAST SQUARES FITTING

Data $x_j$, $y_j$; model $y = f(x)$, in general

$$y(x) = A + Bx + Cx^2 + \exp(-Dx) + \ln(Ex) + \dots$$

1. Write down $\chi^2 = \sum_{j=1}^{N} \dfrac{\left( y_j - f(x_j) \right)^2}{\sigma_j^2}$
2. Minimize $\chi^2$: $\dfrac{\partial \chi^2}{\partial A} = 0$, $\dfrac{\partial \chi^2}{\partial B} = 0$, …
3. Solve for $A$ in terms of $x_j$, $y_j$; $B$ in terms of $x_j$, $y_j$; …
4. Calculate $\chi_0^2$
5. Calculate $d$ and $\tilde{\chi}_0^2 = \chi_0^2 / d$
6. Determine the probability for $\tilde{\chi}^2 \ge \tilde{\chi}_0^2$

Usually a computer program (for example Origin) can minimize $\chi^2$ as a function of the fitting parameters (a multi-dimensional landscape) by the method of steepest descent.

Think about rolling a bowling ball in some energy landscape until it settles at the lowest point.

[Figure: χ² versus fitting parameter space, showing the best fit (lowest χ²) and a local minimum]

Sometimes the fit gets “stuck” in a local minimum like this one. Solution? Give it a “kick” by resetting one of the fitting parameters and trying again.

Example: fitting data points to y = A*cos(Bx), “perfect” fit.

Example: fitting data points to y = A*cos(Bx), fit “stuck” in a local minimum of the χ² landscape.

Next on PHYS 2CL: Monday, May 18, Review Lecture
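The fitting steps listed in this lecture (write down χ², set its derivatives with respect to A and B to zero, then compute d and the reduced χ²) can be sketched for the straight-line case $y = A + Bx$. This is a minimal sketch, not the course's or Origin's procedure: the function name `fit_line_chi2` and the data values are hypothetical, and the closed-form expressions are the standard weighted least-squares solution with weights $w_j = 1/\sigma_j^2$. Step 6 (the probability) is not covered here.

```python
def fit_line_chi2(x, y, sigma):
    """Weighted least-squares fit of y = A + B*x.

    Solves d(chi2)/dA = 0 and d(chi2)/dB = 0 in closed form, where
    chi2 = sum(((y_j - (A + B*x_j)) / sigma_j)**2).
    Returns (A, B, chi2, d, reduced_chi2) with d = N - 2.
    """
    n = len(x)
    if not (n == len(y) == len(sigma)) or n < 3:
        raise ValueError("need equal-length inputs with at least 3 points")
    w = [1.0 / s**2 for s in sigma]  # statistical weights
    sw = sum(w)
    swx = sum(wi * xi for wi, xi in zip(w, x))
    swy = sum(wi * yi for wi, yi in zip(w, y))
    swxx = sum(wi * xi * xi for wi, xi in zip(w, x))
    swxy = sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
    delta = sw * swxx - swx**2
    A = (swxx * swy - swx * swxy) / delta
    B = (sw * swxy - swx * swy) / delta
    chi2 = sum(wi * (yi - (A + B * xi)) ** 2 for wi, xi, yi in zip(w, x, y))
    d = n - 2  # two parameters (A, B) were calculated from the data
    return A, B, chi2, d, chi2 / d


# Hypothetical data: six points with equal error bars (not from the lecture)
x_data = [0.0, 5.0, 10.0, 15.0, 20.0, 25.0]
y_data = [-1.0, 8.5, 17.0, 29.5, 37.0, 49.0]
sigma_data = [2.0] * 6

A, B, chi2, d, chi2_red = fit_line_chi2(x_data, y_data, sigma_data)
print(f"A = {A:.3f}, B = {B:.3f}")
print(f"chi2 = {chi2:.3f}, d = {d}, reduced chi2 = {chi2_red:.3f}")
```

A reduced χ² near 1 suggests the line and the error bars are consistent with the data. For nonlinear models such as y = A*cos(Bx) there is no closed form, which is why an iterative minimizer (and sometimes a restart from new starting parameters) is needed.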