Mining Airfare Data to Minimize Ticket Purchase Price

Mining Airfare Data to

Minimize Ticket Purchase Price

Oren Etzioni

Craig Knoblock

Alex Yates

Rattapoom Tuchinda

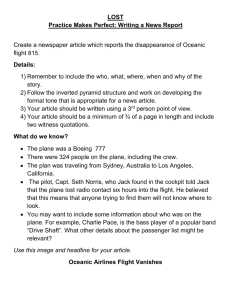

Outline

Introduction and Motivation

Data Mining Methods for Airfare Data

Experiments

Conclusion

Introduction

Corporations often use complex polices to vary product prices over time.

Airline industry often use dynamic pricing strategies to maximize its revenue. (based on seasons, seats available, pricing of other airlines … )

In 1988, Bell Laboratories patented a mathematical formula that could perform rapid calculations on fare problems with thousands of variables.

Introduction Cont.

Airlines have learned to more effectively price their product, instead of attacking each other to gain market share.

Today, airlines use sophisticated software to track their competitors ’ pricing history and propose adjustments that optimize their overall revenue.

Pricing Strategies

Flights depart around holidays or weekends appear to change more.

7 and 14 day “ advance purchase ”

(airlines usually increase ticket price two weeks before departure date and ticket prices are at a maximum on departure dates.)

Weekend Boosts

Example

Price change over time for American Airlines flight #192:223, LAX-BOS, departing on Jan. 2.

(This example shows the rapid price change in the days priori to the New Year)

Categories of airlines

Big players (United Airlines, American

Airlines … )

Smaller airlines which concentrate on selling low-price tickets ( Air Trans,

Southwest)

Different types of tickets (economic, business, first class, restricted or unrestricted)

Is Airfare Prediction Possible?

Airlines have tons of historical data.

Airlines have many fare classes for seats on the same flight, use different sales channels

(e.g., travel agents, priceline.com

… )

Airfare prices information become increasingly available on the Web.

But some information is not available (e.g., # of unsold seats in a particular flight).

Motivation

The goal of this paper is to learn whether to buy a ticket or wait at a particular time point, for a particular flight, given the current price history recorded.

Advisor Model

Consumer wants to buy a ticket.

Hamlet:

‘

buy

’

(this is a good price).

Or:

‘

wait

’

(a better price will emerge).

Notify consumer when price drops.

Will Flights sell out?

Watch the number of empty seats.

Upgrade to business class.

Place on another flight

In our experiment: upgrades were sufficient

Flight Data

Airlines typically user the same flight number

(e.g., NW17) to refer to multiple flights with the same route that depart at the same time on different dates.

In this paper, a particular flight is referred to a combination of its flight number and date.

In the training dataset, same class is the set of states with the same flight number and the same hours but different department dates.

Data Set

Used Fetch.com

’ s data collection infrastructure.

Collected over 12,000 price observations:

41 day period, every 3 hours.

Lowest available fare for a one-week roundtrip.

LAX-BOS and SEA-IAD.

6 airlines including American, United, etc.

Learning Task Formulation

Input: price observation data (built a flight data collection agent that runs at a scheduled internal, extracts the pricing data, and stores the results in a database.

Algorithm: label observations; run learner-- Hamlet

Output: for each purchase point buy VS wait

Formulation cont.

Want to use the latest information.

Run learner daily to produce new model.

Learner is trained on data gathered to date .

Learned policy is a collection of these models.

Our experiments evaluate the savings on the flights.

Candidate Approaches

By hand: an expert looks at the data.

Time series:

Not effective at price jumps!

Reinforcement learning: Q-learning.

Used in computational finance.

Rule learning: Ripper, …

RIPPER

RIPPER (Repeated Incremental Pruning to

Produce Error Reduction) is an efficient rule learning algorithm that can process noisy datasets containing thousands of examples.

First, the algorithm partition examples into a growing set and a pruning set. Next, a rule is grown by adding and pruning features.

Repeat the process until the rule set output by RIPPER.

RIPPER is suitable to handle two-class learning problem.

RIPPER Cont.

In this study, features include price, airline, route, hours-before-takeoff, etc.

Learned 20-30 rules …

IF hours before takeoff

252 AND price

2223

AND route

LAX BOS THEN wait .

Simple Time Series

Predict price using a fixed window of observations weighted by α .

k price

We used a linearly increasing function for α

The time series model makes its decision based on a one-step prediction of the ticket price change:

IF P t+1

> P t

THEN buy , ELSE wait .

p t

1

i k

1

( i ) p t

k

i i k

1

( i )

Reinforcement Learning

Reinforcement learning is learning what to do---how to map situations to actions---so as to maximize a numerical reward signal.

Supervised learning is learning from examples provided by some knowledgeable external supervisor.

Q-learning

Q learning is a method addressing the task of

Reinforcement Learning. The main idea is to approximate the function assigning each stateaction pair the highest possible payoff.

Q denote a transition function, mapping each state-action pair to a successor state

S denote a finite set of states

A denote a finite set of actions

R denote a reward function

Q-learning Cont.

Standard Q-Learning formula:

Q

max a

Q

price

Q

a

, s

Q

300000 if max

Q b , s

w , flight sells

s

otherwise.

out after s .

s ’ is the state resulting from taking action a in state s.

r is the discount factor for future rewards, in this paper, r=1

Hand-Crafted Rule

A fairly simple policy consulted with travel agents, using it to compare with other data mining algorithms

IF ExpPrice

THEN

(s

0

,t

0

)< CurPrice AND s wait ELSE buy

0

>7 days

*ExpPrice (s

0

,t

0

) denotes the average over all

MinPrice (s,t) for flights in the training set with that flight number, where MinPrice (s,t) is the minimum price of that flight over the interval starting from s days before departure up until time t

Hamlet

Stacking with three base learners:

Ripper (e.g., R= wait )

Time series

Q-learning (e.g., Q= buy )

Using multiple data mining methods to combine the outputs

Output: classifies each purchase point as ‘ buy ’ or ‘ wait ’ .

A Sample Rule Generated by Hamlet

IF hours-before-takeoff>=480

AND airline=

United

AND price>=360

AND TS=

buy

AND QL=

wait

THEN wait

* TS is the output of the Time Series algorithm,

QL is the output of Q-Learning.

Ticket Purchasing Simulation

Real price data (collected from the Web)

Simulated passengers (a passenger is a person wanting to buy a ticket on a particular flight at a particular date and time)

Hamlet run once per day (training data: all data gathered in the “ past ” )

Saving Experiments

Savings for a simulated passenger is the difference between the price of a ticket at the earliest purchase point and the price of the ticket at the point when the predictive model recommends buying.

Net savings is savings net of both losses and upgrade costs.

Effectiveness

HAMLET ’ s savings that were 61.8% of optimal!

Savings: buy immediately VS Hamlet.

Optimal: buy at the best possible time

(knowing the future price information)

Savings by Method

Savings over “ buy now ” .

Penalty for sell out = upgrade cost.

Total ticket cost is $4,579,600.

Net Savings by Method

$400,000

$300,000

$200,000

$100,000

$0

-$100,000

-$200,000

-$300,000

-$400,000

-$500,000

Legend:

Time Series

Q-Learning

By Hand

Ripper

Hamlet

Optimal

Savings by Method Cont.

Table below shows the savings, losses, upgrade costs, and net savings achieved in the simulation by each predictive model

Method Savings Losses Upgrade Cost % Upgrades Net Savings % Savings % of Optimal

Optimal $320,572 $0 $0 0% $320,572 7.0% 100.0%

By hand $228,318 $35,329

Ripper $211,031 $4,689

$22,472

$33,340

0.36%

0.45%

$170,517

$173,002

3.8%

3.8%

53.2%

54.0%

Time Series $269,879 $6,138 $693,105

Q-learning $228,663 $46,873 $29,444

Hamlet $244,868 $8,051 $38,743

33.00%

0.49%

0.42%

-$429,364

$152,364

$198,074

-9.5%

3.4%

4.4%

-134.0%

47.5%

61.8%

Sensitivity Analysis

Varying two key parameters to test the robustness of the results to changes in simulation:

Change the distribution of passengers requesting flight tickets (e.g., uniform, linear decrease/increase) to check the performance on multiple flights in three hour interval.

Allow a passenger to purchase a ticket at any time during a three hour internal (e.g., specifies fly in the morning, afternoon or evening)

$400,000

$300,000

$200,000

$100,000

$0

-$100,000

-$200,000

-$300,000

Sensitivity Analysis Cont.

Method

Optimal

By hand

Ripper

Time Series

Q-Learning

Hamlet

Interval Savings

Net Savings % of Optimal % upgrades

$323,802 100.0% 0.0%

$163,523

$173,234

-$262,749

$149,587

$191,647

55.5%

53.5%

-81.1%

46.2%

59.2%

0.0%

0.0%

6.3%

0.2%

0.1%

Legend:

Time Series

Q-Learning

By Hand

Ripper

Hamlet

Optimal

Upgrade Penalty

Most algorithms avoided the costly upgrades almost all the time. (% Upgrades as a fraction of the number of test passengers –

4488 of them)

Method

Optimal

By hand

Ripper

Time Series

Q-learning

Hamlet

Upgrade Cost % Upgrades

$0 0%

$22,472

$33,340

$693,105

$29,444

$38,743

0.36%

0.45%

33.00%

0.49%

0.42%

Discussion

76% of the time --- no savings possible.

(Prices never dropped from the earliest purchase point until the flight departed)

Uniform distribution over 21 days.

33% of the passengers arrived in the last week.

No passengers arrived 28 days before.

Simulation understates possible savings!

Savings on “ Feasible ” Flights

Comparison of Net Savings (as a percent of total ticket price) on Feasible Flights

(price saving is possible)

Method

Optimal

By hand

Ripper

Time Series

Q-learning

Hamlet

Net Savings

30.6%

21.8%

20.1%

25.8%

21.8%

23.8%

Related Work

Trading agent competition.

Auction strategies

Temporal data mining.

Time Series.

Computational finance.

Future Work

More tests are necessary: international, multi-routes, hotels, etc.

Cost sensitive learning

Additional base learners

Bagging/boosting

Refined predictions

Commercialization: patent, license.

Conclusions

Dynamic pricing is prevalent.

Price mining a-la-Hamlet is feasible.

Price drops can be surprisingly predictable.

Need additional studies and algorithms.

Great potential to help consumers!

?

But …

Airlines may introduce noise into their pricing patterns to fool a price miner.

Demand and supply of seats are uncertain. (Good prediction based on good assumptions)

Who can earn most benefit in the end?

(Airlines, consumers, price miner?)

John Nash said …

It ’ s a GAME

!

References

Fast Effective Rule Induction, In A. Prieditis and S.

Russell, editors, Proc of the 12th ICML , 1995.

A General Method for making classifiers costsensitive, In Proc. of Fifth ACM SIGKDD , 1999.

Reinforcement Learning: An Introduction. MIT Press,

Cambridge, MA, 1998.

The Analysis of Time Series: An Introduction.

Chapman and Hall, London, UK, 1989.

“ Airlines Rely on Technology To Manipulate Fare

Structure “— By Scott Mccartney, Wall Street Jounal,

November 3, 1997.

End