Project 2 Overview (Threads in Practice)

Thread Implementation and Scheduling

CSE451

Andrew Whitaker

Scheduling: A Few Lectures Ago…

Scheduler decides when to run runnable threads

[Figure: runnable threads 1 to 5 feed the scheduler, which hands the CPU a run order such as {4, 2, 3, 5, 1, 3, 4, 5, 2, …}]

Programs should make no assumptions about the scheduler

Scheduler is a “black box”

This Lecture: Digging into the Black Box

Previously, we glossed over how the next process or thread to run is chosen

“some thread from the ready queue”

This decision is called scheduling

scheduling is policy

context switching is mechanism

We will focus on CPU scheduling

But, we will touch on network, disk scheduling

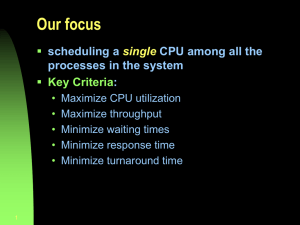

Scheduling goals

Maximize CPU utilization

Maximize throughput

Req/sec

Minimize latency

Average turnaround time

Time from request submission to completion

Average response time

Time from request submission till first result

Favor some particular class of requests

Priorities

Avoid starvation

These goals can conflict!

Context

Goals are different across applications

I/O Bound

Web server, database (maybe)

CPU bound

Calculating

Interactive system

The ideal scheduler works well across a wide range of scenarios

Preemption

Non-preemptive: Once a thread is given the processor, it has it for as long as it wants

A thread can give up the processor with Thread.yield or a blocking I/O operation

Preemptive: A long-running thread can have the processor taken away

Typically, this is triggered by a timer interrupt

To Preempt or not to Preempt?

Non-preemptive schedulers are subject to starvation, e.g., when one thread runs while (true) { /* do nothing */ } and never yields

Non-preemptive schedulers are easier to implement

Non-preemptive scheduling is easier to program

Fewer scheduler interleavings => fewer race conditions

Non-preemptive scheduling can perform better

Fewer locks => better performance

Preemption in Linux

User-mode code can always be preempted

Linux 2.4 (and before): the kernel was non-preemptive

Any thread/process running in the kernel runs to completion

Kernel is “trusted”

Linux 2.6: kernel is preemptive

Unless holding a lock

Linux 2.6 Preemption Details

Each task's thread_info structure contains a preempt_count variable

Incremented when grabbing a lock

Decremented when releasing a lock

A thread in the kernel can be preempted iff preempt_count == 0
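The counting rule above can be modeled in a few lines. This is a toy sketch, not kernel code: the class KernelThreadModel and its method names are hypothetical, and it only illustrates why nested locks need a counter rather than a flag.

```java
// Toy model of the rule "preemptible iff preempt_count == 0".
// KernelThreadModel and its methods are hypothetical names for illustration.
class KernelThreadModel {
    private int preemptCount = 0;

    // Grabbing a lock increments the counter, which disables preemption.
    void acquireLock() {
        preemptCount++;
    }

    // Releasing a lock decrements the counter.
    void releaseLock() {
        if (preemptCount == 0) {
            throw new IllegalStateException("no lock held");
        }
        preemptCount--;
    }

    // The thread may be preempted only when it holds no locks.
    boolean isPreemptible() {
        return preemptCount == 0;
    }
}
```

With two nested locks held, releasing one still leaves the count at 1, so the thread stays non-preemptible until the last lock is released.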

Algorithm #1: FCFS/FIFO

First-come first-served / First-in first-out (FCFS/FIFO)

Jobs are scheduled in the order that they arrive

Like “real-world” scheduling of people in lines

Supermarkets, bank tellers, McD’s, Starbucks …

Typically non-preemptive

no context switching at supermarket!

FCFS example

Suppose the duration of A is 5, and the durations of B and C are each 1. What is the average turnaround time for schedules 1 and 2 (assuming all jobs arrive at roughly the same time)?

[Figure: timelines of two schedules. Schedule 1 runs Job A, then B, then C; schedule 2 runs B, then C, then Job A]

Schedule 1: (5+6+7)/3 = 18/3 = 6

Schedule 2: (1+2+7)/3 = 10/3 ≈ 3.3
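The arithmetic above can be checked with a short sketch. It assumes all jobs arrive at time 0 and run to completion in the listed order; FcfsTurnaround and averageTurnaround are hypothetical names introduced here.

```java
// Average turnaround time for non-preemptive, in-order execution,
// assuming every job arrives at time 0 (hypothetical helper).
class FcfsTurnaround {
    static double averageTurnaround(int[] durations) {
        long clock = 0; // time at which the current job finishes
        long total = 0; // sum of all completion times
        for (int d : durations) {
            clock += d;
            total += clock;
        }
        return (double) total / durations.length;
    }

    public static void main(String[] args) {
        // Schedule 1: A (5), B (1), C (1)
        System.out.println(averageTurnaround(new int[] {5, 1, 1}));
        // Schedule 2: B (1), C (1), A (5)
        System.out.println(averageTurnaround(new int[] {1, 1, 5}));
    }
}
```

Running the long job last lowers the average because the two short jobs no longer pay for A's five time units.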

Analysis FCFS

+ No starvation (assuming tasks terminate)

- Average response time can be lousy

Small requests wait behind big ones

- FCFS may result in poor overlap of CPU and I/O activity

I/O devices are left idle during long CPU bursts

FCFS at Amazon: Head-of-line Blocking

Clients and servers at Amazon communicate over HTTP

HTTP is a request/reply protocol which does not allow for re-ordering

Problem: Short requests can get stuck behind a long request

Called “head-of-line blocking”

[Figure: requests B and C queued with long-running Job A]

Algorithm #2: Shortest Job First

Choose the job with the smallest service requirement

Variant: shortest processing time first (SPT)

Provably optimal with respect to average waiting time (when all jobs are available at the same time)
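A small sketch makes the waiting-time claim concrete. It assumes all jobs are available at time 0 and that exact durations are known; SjfWaiting, averageWait, and sjfOrder are hypothetical names.

```java
import java.util.Arrays;

// Compares average waiting time for an arbitrary order against SJF order,
// assuming all jobs arrive at time 0 (hypothetical helper class).
class SjfWaiting {
    // Average time each job waits before it starts, for jobs run back to back.
    static double averageWait(int[] order) {
        long clock = 0;
        long totalWait = 0;
        for (int d : order) {
            totalWait += clock; // this job waited for everything before it
            clock += d;
        }
        return (double) totalWait / order.length;
    }

    // SJF order: durations sorted ascending (the caller's array is not modified).
    static int[] sjfOrder(int[] durations) {
        int[] sorted = durations.clone();
        Arrays.sort(sorted);
        return sorted;
    }
}
```

For durations {5, 1, 1}, arrival order gives an average wait of 11/3, while the SJF order {1, 1, 5} gives (0 + 1 + 2)/3 = 1.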

SJF drawbacks

It’s non-preemptive

… but there’s a preemptive version – SRPT (Shortest Remaining Processing Time first) – that accommodates arrivals

Starvation

Short jobs continually crowd out long jobs

Possible solution: aging

How do we know processing times?

In practice, we must estimate

Longest Job First?

Why would a company like Amazon favor Longest Job First?

Algorithm #3: Priority

Assign priorities to requests

Choose request with highest priority to run next

If tie, use another scheduling algorithm to break it (e.g., FCFS)

To implement SJF, priority = expected length of CPU burst

Abstractly modeled (and usually implemented) as multiple “priority queues”

Put a ready request on the queue associated with its priority
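The "one queue per priority" model can be sketched as follows. This is a minimal illustration, not any particular kernel's implementation: MultiQueueScheduler is a hypothetical name, level 0 is assumed to be the highest priority, and requests are plain strings. Ties within a level fall out as FCFS because each level is a FIFO queue.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// One FIFO queue per priority level; level 0 is the highest priority
// (an assumption of this sketch).
class MultiQueueScheduler {
    private final List<ArrayDeque<String>> levels = new ArrayList<>();

    MultiQueueScheduler(int numLevels) {
        for (int i = 0; i < numLevels; i++) {
            levels.add(new ArrayDeque<>());
        }
    }

    // Put a ready request on the queue associated with its priority.
    void enqueue(String request, int level) {
        levels.get(level).addLast(request);
    }

    // Returns the oldest request at the highest non-empty level,
    // or null if every queue is empty.
    String pickNext() {
        for (ArrayDeque<String> queue : levels) {
            if (!queue.isEmpty()) {
                return queue.pollFirst();
            }
        }
        return null;
    }
}
```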

Priority drawbacks

How are you going to assign priorities?

Starvation

If there is an endless supply of high-priority jobs, no low-priority job will ever run

Solution: “age” threads over time

Increase priority as a function of accumulated wait time

Decrease priority as a function of accumulated processing time

Many ugly heuristics have been explored in this space
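One possible aging heuristic, shown only as an illustration: the weights and the linear form are assumptions made for this sketch, not values taken from any real scheduler, and Aging and effectivePriority are hypothetical names.

```java
// A linear aging heuristic: priority rises with time spent waiting
// and falls with CPU time consumed. Larger result = more urgent.
class Aging {
    // Hypothetical tuning constants; real systems choose these empirically.
    static final double WAIT_WEIGHT = 0.5;
    static final double CPU_WEIGHT = 1.0;

    static double effectivePriority(int basePriority, long waitedTicks, long cpuTicks) {
        return basePriority + WAIT_WEIGHT * waitedTicks - CPU_WEIGHT * cpuTicks;
    }
}
```

Under these weights, a base-priority-1 job that has waited 20 ticks (effective priority 11) overtakes a fresh base-priority-5 job, so it cannot starve indefinitely.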

Priority Inversion

A low-priority task acquires a resource (e.g., lock) needed by a high-priority task

Can have catastrophic effects (see Mars Pathfinder)

Solution: priority inheritance

Temporarily “loan” some priority to the lock-holding thread
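The priority "loan" can be sketched with a single-threaded model. InheritanceLock and Task are hypothetical names, larger numbers are assumed to mean higher priority, and no real blocking or synchronization is performed; the sketch only tracks how priorities change.

```java
// Single-threaded model of priority inheritance (no real blocking).
class InheritanceLock {
    static final class Task {
        final String name;
        final int basePriority; // larger = more urgent (assumption)
        int effectivePriority;

        Task(String name, int basePriority) {
            this.name = name;
            this.basePriority = basePriority;
            this.effectivePriority = basePriority;
        }
    }

    private Task holder;

    // Returns true if the lock was acquired. Otherwise the caller would block,
    // and the current holder inherits the caller's priority if it is higher.
    boolean tryAcquire(Task t) {
        if (holder == null) {
            holder = t;
            return true;
        }
        if (t.effectivePriority > holder.effectivePriority) {
            holder.effectivePriority = t.effectivePriority; // loan priority
        }
        return false;
    }

    // Releasing the lock ends the loan and restores the base priority.
    void release(Task t) {
        if (holder != t) {
            throw new IllegalStateException("not the holder");
        }
        t.effectivePriority = t.basePriority;
        holder = null;
    }
}
```

While the loan is in effect, medium-priority tasks can no longer preempt the lock holder, which is what bounds the high-priority task's wait.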

Algorithm #4: RR

Round Robin scheduling (RR)

Ready queue is treated as a circular FIFO queue

Each request is given a time slice, called a quantum

Request executes for duration of quantum, or until it blocks

Advantages:

Simple

No need to set priorities, estimate run times, etc.

No starvation

Great for timesharing
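A minimal round-robin simulation, under simplifying assumptions: all requests arrive at time 0, none block, and context switches cost nothing. RoundRobin and completionTimes are hypothetical names.

```java
import java.util.ArrayDeque;

// Simulates round robin over a circular FIFO ready queue.
class RoundRobin {
    // Returns the completion time of each job, indexed like the input array.
    static int[] completionTimes(int[] durations, int quantum) {
        if (quantum <= 0) {
            throw new IllegalArgumentException("quantum must be positive");
        }
        int[] remaining = durations.clone();
        int[] done = new int[durations.length];
        ArrayDeque<Integer> ready = new ArrayDeque<>();
        for (int i = 0; i < durations.length; i++) {
            ready.addLast(i);
        }
        int clock = 0;
        while (!ready.isEmpty()) {
            int job = ready.pollFirst();
            int slice = Math.min(quantum, remaining[job]); // run for one quantum at most
            clock += slice;
            remaining[job] -= slice;
            if (remaining[job] > 0) {
                ready.addLast(job); // not finished: back of the queue
            } else {
                done[job] = clock;
            }
        }
        return done;
    }
}
```

For durations {5, 1, 1} and a quantum of 1, the short jobs finish at times 2 and 3 and the long job at 7. With a quantum larger than every job, the schedule degenerates to FCFS.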

RR Issues

What do you set the quantum to be?

No value is “correct”

If small, then context switch often, incurring high overhead

If large, then response time degrades

Turnaround time can be poor

Compared to FCFS or SJF

All jobs treated equally

If I run 100 copies of SETI@home, it degrades your service

Need priorities to fix this…
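The quantum trade-off can be put in rough numbers. The sketch below assumes every quantum is followed by one context switch of fixed cost; QuantumOverhead and overheadFraction are hypothetical names, and the figures in the note are illustrative rather than measured.

```java
// Fraction of CPU time spent context switching, assuming each quantum
// is followed by exactly one switch of fixed cost.
class QuantumOverhead {
    static double overheadFraction(double quantumMs, double switchMs) {
        return switchMs / (quantumMs + switchMs);
    }
}
```

With a hypothetical 1 ms switch cost, a 1 ms quantum wastes half the CPU, while a 99 ms quantum wastes 1% but makes a request at the back of a long queue wait much longer for its first slice.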

Combining algorithms

In practice, any real system uses some sort of hybrid approach, with elements of FCFS, SPT, RR, and Priority

Example: multi-level queue scheduling

Use a set of queues, corresponding to different priorities

Priority scheduling across queues

Round-robin scheduling within a queue

Problems:

How to assign jobs to queues?

How to avoid starvation?

How to keep the user happy?

UNIX scheduling: Multi-level Feedback Queues

Segregate processes according to their CPU utilization

Highest priority queue has shortest CPU quantum

Processes begin in the highest priority queue

Priority scheduling across queues, RR within

Process with highest priority always runs first

Processes with same priority scheduled RR

Processes dynamically change priority

Increases over time if process blocks before end of quantum

Decreases if process uses entire quantum

Goals:

Reward interactive behavior over CPU hogs

Interactive jobs typically have short bursts of CPU
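The feedback rule can be sketched as below. This is an illustration only: FeedbackQueues is a hypothetical class, level 0 is assumed to be the highest priority, the base quantum of 10 and the doubling per level are made-up values, and a priority change is modeled as a move of one level.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Multi-level feedback queues: priority scheduling across levels,
// FIFO (round robin) within a level. Level 0 is the highest priority.
class FeedbackQueues {
    private final List<ArrayDeque<String>> levels = new ArrayList<>();

    FeedbackQueues(int numLevels) {
        for (int i = 0; i < numLevels; i++) {
            levels.add(new ArrayDeque<>());
        }
    }

    // Processes begin in the highest-priority queue.
    void admit(String process) {
        levels.get(0).addLast(process);
    }

    // Highest priority has the shortest quantum; values are assumptions.
    int quantumFor(int level) {
        return 10 << level;
    }

    // Level of the highest-priority non-empty queue, or -1 if all are empty.
    int highestReadyLevel() {
        for (int i = 0; i < levels.size(); i++) {
            if (!levels.get(i).isEmpty()) {
                return i;
            }
        }
        return -1;
    }

    // Removes and returns the process at the head of the given level.
    String dispatch(int level) {
        return levels.get(level).pollFirst();
    }

    // Requeues a process after it ran at the given level and returns its new level:
    // demoted if it used its whole quantum, promoted if it blocked early.
    int requeue(String process, int level, boolean usedFullQuantum) {
        int next = usedFullQuantum
                ? Math.min(level + 1, levels.size() - 1)
                : Math.max(level - 1, 0);
        levels.get(next).addLast(process);
        return next;
    }
}
```

A CPU hog drifts toward the lowest level, where it gets long but infrequent quanta, while an interactive process that keeps blocking early stays near level 0.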

Multi-processor Scheduling

Each processor is scheduled independently

Two implementation choices

Single, global ready queue

Per-processor run queue

Increasingly, per-processor run queues are favored (e.g., Linux 2.6)

Promotes processor affinity (better cache locality)

Decreases demand for (slow) main memory

What Happens When a Queue is Empty?

Tasks migrate from busy processors to idle processors

Book talks about push vs pull migration

Java 1.6 support: double-ended queue (java.util.Deque), with thread-safe implementations such as java.util.concurrent.LinkedBlockingDeque

Use a bounded buffer per consumer

If nothing in a consumer’s queue, steal work from somebody else
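A minimal work-stealing sketch using the bounded, thread-safe java.util.concurrent.LinkedBlockingDeque. The class WorkStealing and the method nextTask are hypothetical names, and stealing from the tail of the victim's queue is a common convention assumed here, not something the slides specify.

```java
import java.util.List;
import java.util.concurrent.LinkedBlockingDeque;

// Each consumer owns a bounded deque; an idle consumer steals from others.
class WorkStealing {
    // Returns the next task for this consumer, or null if no work exists anywhere.
    static <T> T nextTask(LinkedBlockingDeque<T> own, List<LinkedBlockingDeque<T>> others) {
        T task = own.pollFirst(); // prefer local work (non-blocking)
        if (task != null) {
            return task;
        }
        for (LinkedBlockingDeque<T> victim : others) {
            task = victim.pollLast(); // steal from the opposite end
            if (task != null) {
                return task;
            }
        }
        return null;
    }
}
```

A bounded buffer per consumer would be created with new LinkedBlockingDeque<>(capacity). Taking local work from the head and stolen work from the tail keeps the owner and the thief working at opposite ends of the queue.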