Data Checking Processes - Past - Higher Education Statistics Agency

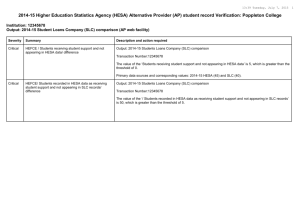

HESA for Planners

Objectives
• Identify best practice around quality assurance and use of data
• Improve our understanding of check documentation and how it can be utilised
• Introduce the downloadable files and how they can be used
• Outline the future information landscape
• Learn from each other

“You never finish HESA, you abandon it”
• Utilisation of time – ‘opportunity cost’
• Efficient and cost-effective procedures
• Best practice
• Collaborative approach to data
• Resource
• Systems that work for the organisation

The institutional perspective: HESA Student Return
Paul Cotter, UWE
18th September 2012

Topics for today…
1. Background
2. The HESA Team
3. Data Checking Process – Past
4. Data Checking Process – Present + Future?
5. Managing HESA “High Season”
6. Headlines + Conclusions

Background
• HESA Student Return historically administered within a traditional Academic Registry service
• Following a series of restructures, HESA responsibility moved to the Business Intelligence and Planning function at UWE which was later subsumed within a larger “super service”
• Major initiatives ongoing designed to:
  1. Make best use of data checking opportunities throughout the academic year
  2. Minimise impact of workload peak during the HESA “high season” (August to October)
To deliver high quality HESA student return without the stress, anxiety and excessive hours traditionally associated with this activity!

HESA Student Return Team
• Vice Chancellor (Formal Sign-Off)
• Head of Planning (Oversight/Scrutiny, Local Sign-Off)
• External Reporting Manager (Operational Ownership, HESA Expert)
• External Reporting (Planning) Assistants x2

Data Checking Processes - Past
• Go back 5+ years and “early” data checking efforts were undertaken by one Academic Registry staff member (limited effectiveness, no shared ownership and no shared best practice, huge workload “peak” between August and October)
• More recently, data quality activity prior to August focused on locally ‘policed’ data checking on the basis of a UWE-wide “data calendar” and associated guidance
• HESA team leader would chair a group of ‘data managers’ from across the university to enforce standard practice in terms of academic infrastructure and student record keeping
• This process was inclusive (UWE-wide) and in keeping with a devolved administrative structure, but it could not guarantee conformity and left a significant volume of amendments to be processed post August 1st (ineffective, too devolved… insufficient resources to ‘police’ 30,000 student records and variation in practice)

Data Checking Processes – Present + Future
• Major operational restructure leading to centralisation of administrative activities; efficiency savings required efficient solutions
• Ownership of student record quality with centralised Student Records team; data checking activity maintained and monitored by the HESA team
• Standardized, automated data checking reporting systems:
  1. Backed up by “signed off” data checking process and clear/up-to-date online documentation accessible by all stakeholders
  2. Policy of continuous data quality monitoring to “flatten” workload peaks
  3. Proactively optimise data accuracy by using ‘beta’ systems prior to 1st August
  4. “Service level agreement” required between Student Records and HESA teams to clarify responsibilities, deadlines, etc.

Managing HESA “High Season”
• Documentation of the entire HESA process
• Test documentation and processes through internal audit to legitimize approach
• Attend HESA briefings and pay attention to communications to ensure that all system developments for future returns are dealt with asap
• Hit the button on 1st August with the most accurate, conforming raw student record dataset possible
• Work through your pre-agreed task list as systematically as possible
• Make use of reconciliation tools to scrutinize accuracy and consistency

Headlines + Conclusions
• Proactive investment in data checking processes/tools and co-ordination of activity will pay dividends
• Viewing HESA as a long term ‘project’ will deliver short and long term benefits:
  1. Increased confidence in underlying student record quality
  2. Minimising “high season” stress
  3. Clear audit trail for future reference

HESA for Planners, 18 September 2012
The HESA Integration Group
Dr Nicky Kemp, Director of Policy and Planning, University of Bath

Role of Policy and Planning
• Accountable to Council for risk management process
• Accountable to Audit Committee for data verification process
• Accountable to Vice-Chancellor for the accuracy of data returns
• Accountable to colleagues for guidance and interpretation

HESA Integration Group
Who’s who?
• Policy & Planning
• Finance
• Human Resources
• Student Records
• Estates
• Computing Services

HESA Integration Group
Responsibilities:
• OPP: Institutional Profile, KIS, HEBCIS
• Finance: HESA FSR; TRAC
• HR: HESA Staff Return
• SREO: HESA Student Record (NSS, DLHE); HESES
• Estates: Estates Management Statistics

What we do
HESA Integration Group
Activities:
• Co-ordinate annual returns
• Plan future returns
• Consider implications for related activity
• Undertake horizon scanning

Data quality and use
• Consistency
• Funding & regulation
• Visibility

Verification
• Consistent, documented verification process
• Provision for internal ‘external’ scrutiny
• Timely preparation of data submissions and their scrutiny
• Credibility checks of data

Using check documentation

What is check documentation?
• Check doc is an Excel workbook which displays the raw data in a series of tables
• Key tool for quality assurance used by HESA and the HEI
• Produced after a successful commit/test commit
One of many tools for QA – should not be used in isolation!

Why use check documentation?
• Check documentation gives an overview of the submitted data
• Comparison feature also useful for later commits/test commits to monitor changes
• Identify and explain/resolve anomalies in the data
• Cannot rely solely on HESA to check the data

Understanding check documentation
• Use the check documentation guide as a starting point
• Use last year’s data for comparison

Using check documentation
Check doc contains the definitions and populations needed to recreate an item and identify students

Other commit stage reports
• HIN report: reports any cases where the year-on-year link has been broken
• Exception report: reports any commit stage validation errors and warnings which should be reviewed by the HEI

Minerva
…is the data query database operated by HESA
• During data collection HESA (and HEFCE) raise queries through Minerva and institutions answer them
• These responses are then reviewed and stored for future use by HESA and the institution

Contextual information in Minerva
• At the request of the National Planners Group HESA have begun working on a ‘public’ version of Minerva
• Designed to give users of the data additional context
• In November HESA will publish a query to Minerva to which HEIs can add notes about their institution, e.g. ‘we have lots of franchise students’, ‘we recently opened a new department’
• HESA will not interact with what is added
• Will remain open throughout the year to add to
• HESA will extract and send the information to accompany data requests

Using downloadable files

Downloadable files
• Data Supply (Core, subject, cost centre, module and qualifications on entry tables)
• NSS inclusion (person and subject) and exclusion files
• POPDLHE
• TQI/UNISTATS
• All available after every successful full and test commit

Using downloadable files
• The files should be utilised to:
  – Carry out additional DQ checks
  – Benchmarking
  – Planning/forecasting
  – Improve efficiency (recreating data from scratch unnecessary)
• The files include derived fields and groupings not otherwise found within the data

League tables
• Student staff ratios by institution and cost centre
• First degree (full-time for Guardian) qualifiers by institution and league table subject group
• Average total tariff scores on entry for first year, first degree students by institution and league table subject group
• Data is restricted to tariffable qualifications on entry (QUALENT3 = P41, P42, P46, P47, P50, P51, P53, P62, P63, P64, P65, P68, P80, P91) (Times applies ‘under 21’ restriction, Guardian applies ‘full-time’ restriction)
• Full-time, first degree, UK domiciled leavers by Institution, League table subject, Activity
• Full-time, first degree, UK domiciled leavers entering employment
• Graduate employment/Non graduate employment/Unknown
• Positive destinations/Negative destinations
• Expenditure on academic departments (Guardian)
• Expenditure on academic services (Guardian, Times, CUG)
• Expenditure on staff and student facilities (Times, CUG)

There is no smoking gun
“Firstly, you need a team with the skills and motivation to succeed. Secondly, you need to understand what you want to achieve. Thirdly, you need to understand where you are now. Then, understand ‘aggregation of marginal gains’. Put simply… how small improvements in a number of different aspects of what we do can have a huge impact to the overall performance of the team.”
Dave Brailsford, Performance Director of British Cycling

Demonstration….

HESA reporting and the new information landscape: the road ahead

(Interim) Regulatory Partnership Group
• Established by HEFCE and SLC
• Includes: HESA, QAA, OFFA, OIA
• Observers: UUK, GuildHE, NUS, UCAS
• To advise on and oversee the transition to the new regulatory and funding systems for higher education in England
• Projects A and B

D-BIS White Paper
• Para 6.22 of White Paper
• A new system that:
  – Meets the needs of a wider group of users
  – Reduces duplication
  – Results in timelier and more relevant data
• Also work with other government departments
  – Secure buy-in to reducing the burden

Project B
• Re-design the HE data and information landscape
• Feasibility and impact analysis
• Roadmap for future development
• Focus on data about courses, students and graduates
• Report to RPG June 2012

Engagement
• HEFCE, UCAS, SLC, AHUA, BUFDG, SFC, HEFCW, WG, SROC, UCISA, ARC, Scot-ARC, ISB, OFFA, SAAS, HEBRG, CHRE, QAA, SPA, JISC, UKBA, ISB-NHS, HO, BIS, IA, DS, LRS, AOC, UUK, GuildHE, NPG, SLTN, DH
• Workshops, webinars, web site and newsletters

HEBRG survey of data collection
• Variety of responses from institutions
• Some references to Data Supply Registers..
• …but comparison with other responses suggests these registers are incomplete
• Some further research in this area, especially around PSRBs…
• …still much to do to get a complete picture at a sector level

What is our aim?
Create a sector-wide information database by…
…attempting to fit a single, rigid data model onto an HE sector that is diverse and dynamic

Data model

It is all about translation
• From HEIs’ internal data language to an external data language
• The extent to which these match
• The variety of external languages that an HEI has to work with
• A different journey for each institution
• Issues of lexicon as well as data definitions…

HE lifecycle

Collection processes (eg HESA)
• Annual, retrospective, detailed collection
• Core dataset for funding, policy and public information
• Demanding quality standards
• Submission ratio = submitted records / final records

[Chart: Student Record submission ratio, 2004 to 2011]

Evidence (or anecdote) from the KIS
• New requirement – high profile
• Data spread across institutions
  – No documentation
  – Little/no control
  – No standardisation/comparability
  – Variable quality
  – Variable approaches to storage
• …being assembled and managed in spreadsheets

Friday 27 November 2009
A YORKSHIRE university facing major job cuts made an accounting blunder which left it with £20m less than it expected at the end of the last financial year.
Two separate errors in spreadsheets at Leeds University came to light when the institution was left with "significantly" less than it had expected. The mistakes were also carried forward in financial forecasts over a five-year planning period up to 2013, meaning a miscalculation of around £100m.
Bosses at Leeds University insisted last night that the mistakes were not responsible for the institution now looking to making savings of £35m because of cuts in higher education funding.
A spokesman said: "The two are not connected. The £20m forecasting error is irrelevant to the economies exercise because it isn't money we ever had – it's just a spreadsheet error."

Spreadsheets
• Often created by people who don’t understand principles of sound data management
• Conflate data and algorithms
• Almost impossible to QA
• Spread and mutate like a virus
Search “Ray Panko spreadsheets”

Findings – data collectors
• Very broad range of organisations, collecting for many different purposes
• Low awareness of what each other is collecting
• Inconsistent definitions and terminology
• Patchy approach to data standards adoption
• No holistic view of the system
• No mechanism or forum to bring these bodies together

Findings – HE providers
• Few HE providers have a complete picture of their data reporting requirements
• Weaknesses in data management
• Uncoordinated responses to (uncoordinated) requests for data

Findings - technology
• It is cheap and plentiful
• It works
• It is an enabler…
• ….if we could become more joined up in other ways

Recommendations to RPG
• Governance for data and information exchange across the sector
• Development of a common data language
  – Data model, lexicon, thesaurus
• Inventory of data collections
• Specific data standards work
  – JACS
  – Unique Learner Number

Issues for institutions
• Find out what data you are supplying
  – Who is supplying it?
  – How is it being generated?
  – Where is it going?
  – How is it being used?
• Data management
  – What data does your organisation need?
  – What control and governance does it have?
  – Where and how is it stored?

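The questions listed under "Issues for institutions" could be recorded in a simple data supply register. The following is a minimal sketch only: the class name, field names and example entries are hypothetical illustrations, not a HESA or HEFCE specification. It stores one entry per data collection and reports which questions are still unanswered.

```python
from dataclasses import dataclass, fields
from typing import Dict, List, Optional


@dataclass
class DataSupply:
    """One row of a hypothetical institutional data supply register."""
    collection: str                      # what data is being supplied
    supplier: Optional[str] = None       # who is supplying it?
    generated_by: Optional[str] = None   # how is it being generated?
    recipient: Optional[str] = None      # where is it going?
    used_for: Optional[str] = None       # how is it being used?
    stored_in: Optional[str] = None      # where and how is it stored?


def missing_answers(entry: DataSupply) -> List[str]:
    """Return the names of the questions still unanswered for this entry."""
    return [f.name for f in fields(entry)
            if getattr(entry, f.name) in (None, "")]


def incomplete(register: List[DataSupply]) -> Dict[str, List[str]]:
    """Map each collection that has gaps to its unanswered questions."""
    gaps = {entry.collection: missing_answers(entry) for entry in register}
    return {name: missing for name, missing in gaps.items() if missing}


# Illustrative entries only; the values are made up for this example.
register = [
    DataSupply("HESA Student Record",
               supplier="Student Records",
               generated_by="student record system extract",
               recipient="HESA",
               used_for="funding, policy and public information",
               stored_in="student record system"),
    DataSupply("KIS", recipient="HESA", stored_in="spreadsheets"),
]

for name, missing in incomplete(register).items():
    print(name, "is missing:", ", ".join(missing))
```

A register like this only helps if every data supply has an entry, which is the gap noted above for Data Supply Registers; the sketch flags missing answers within an entry, not missing entries.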
Where are we now
• Planning a programme of work to take the recommendations forward
• Steering Group
  – Chaired by Sir Tim Wilson
• UK dimension
• Details will be posted on the RPG web pages: www.hefce.ac.uk/about/intro/wip/rpg/

Seize the moment
• Technologies and standards
• Change
  – System-wide
  – Key stakeholders
• Political will and profile

HESA for Planners (Student Record) Seminar, September 2012
HEFCE’s verification work on the HESA 2011-12 student record

The aim of this session is to advise you:
• why we are doing this;
• why it is so important now;
• areas we will be looking at; and
• what we are expecting of institutions during the HESA data verification period.

Context
Moving from a funding system that was insensitive:
• +/-5% tolerance band;
• Dampening in the system;
• Second chances.
This system was predicated on dampening and stability.

Context (cont.)
Moving to a system where “every student counts”. Whether we are looking at:
• Student Number Control;
• Phase-out funding;
• New funding in the new regime.
Every bit of FTE has a monetary value attached to it.

Key areas we will be looking at
In general the areas will be the same as last year. We will be looking at the robustness of the data affecting the following areas of our funding:
• ABB+ qualifications on entry data (replacing the AAB+ from last year’s exercise);
• Research Degree Programme supervision fund allocations;
• Student Opportunity:
  – Widening participation;
  – Teaching enhancement and student success (Improving retention);
• Teaching Funding – fund-out rates;
• Student Number Control;
• National Scholarship Programme.

Why HEFCE and not HESA?
Long term advantage to institutions
Ultimately it must be HEFCE because when it comes to funding decisions, based on the data:
• It will have been HEFCE that made judgments on data and on responses to queries;
• HEFCE has an understanding of the funding.
During the verification process we will have raised queries and understood responses with reference to the funding consequences. We will have decided whether to probe further in the time available.
Further down the line, if institutions have given HESA explanations we may not agree with, there can be issues. It is better to get the data right BEFORE submission.

Demands made of student data
These have changed over the years.
From about 1994-95 to the early 2000s, student records were, in the main, statistical records that were required to be about right.
In the last 5 years or so, the requirements made of student data have meant that greater accuracy in individual student data is a requirement.
HEFCE’s involvement in the verification work is aiding this move towards greater accuracy AT THE POINT OF SUBMISSION.
We have seen the fruit of our involvement in the 2010-11 verification work: if the same thresholds were used for the Funding and Monitoring Data exercise as were used for the 2009-10 reconciliation exercise, HALF the number of institutions would have been selected.

Methodology this year
As stated, similar areas will be reviewed as last year. Detailed guidance is on our website together with a copy of the full list of potential queries. These can be found at: http://www.hefce.ac.uk/whatwedo/invest/institns/funddataaudit/dataverification/
The query sheet indicates which queries will be raised by HESA and which by HEFCE. HESA queries are all based on warnings or checkdoc items. HEFCE queries are all based on IRIS outputs.
This session will go through the areas to be reviewed, and what we are expecting of institutions during the 2011-12 HESA Student collection period.

Use of Minerva
Following feedback from institutions last year, all queries will be raised through Minerva. This is a pilot exercise to see if it works better than last year.
Queries raised by HESA that HEFCE is interested in responses to will be highlighted by HESA in their Minerva queries.
HEFCE will review the responses and follow these up if required. HEFCE will need to be satisfied with the response to the query before it is archived.
Areas that HESA is not specifically interested in, but that HEFCE is because of the potential impact on funding, will also be raised in Minerva, specifically identified as a HEFCE query. HEFCE will review the responses to these queries to ensure they are content.
All this is within the confines of the requirement to sign off the data as fit for purpose by the HESA deadline of 01 November 2012.

Engagement of the sector in the verification process
We appreciate the HESA collection process is iterative. We do not expect data to be in its final condition when we first review it. However, we believe it useful to engage early in the process, to highlight areas where data, if they remain as they are when we review them, could have funding implications.
We very much urge institutions to engage with our queries as early as possible, so that any issues are identified and corrections made in plenty of time for sign-off. This helps ensure the institution receives the correct level of funding based on data as submitted initially, and not following amendments down the line.

IRIS outputs
We will briefly go through the outputs to show where the queries relating to the IRIS outputs come from, where to look for reasons for the outputs, and what areas of data need reviewing to ensure they contain genuine data and not errors that need amending before sign-off.

Finally….
Any questions?
Thank you for listening
a.beresford@hefce.ac.uk