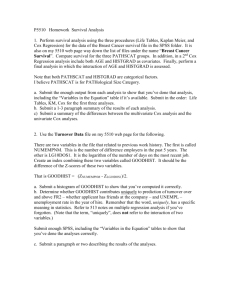

Introduction to Biostatistics for Clinical

advertisement

Introduction to Biostatistics

for Clinical and Translational

Researchers

KUMC Departments of Biostatistics & Internal Medicine

University of Kansas Cancer Center

FRONTIERS: The Heartland Institute of Clinical and Translational Research

Course Information

Jo A. Wick, PhD

Office Location: 5028 Robinson

Email: jwick@kumc.edu

Lectures are recorded and posted at

http://biostatistics.kumc.edu under ‘Educational

Opportunities’

Hypothesis Testing

Continued

Inferences on Time-to-Event

Survival Analysis is the class of statistical

methods for studying the occurrence (categorical)

and timing (continuous) of events.

The event could be

development of a disease

response to treatment

relapse

death

Survival analysis methods are most often applied

to the study of deaths.

Inferences on Time-to-Event

Survival Time: the time from a well-defined point in

time (time origin) to the occurrence of a given

event.

Survival data includes:

a time

an event ‘status’

any other relevant subject characteristics

Inferences on Time-to-Event

In most clinical studies the length of study period is

fixed and the patients enter the study at different

times.

Lost-to-follow-up patients’ survival times are measured

from the study entry until last contact (censored

observations).

Patients still alive at the termination date will have

survival times equal to the time from the study entry until

study termination (censored observations).

When there are no censored survival times, the set

is said to be complete.

Functions of Survival Time

Let T = the length of time until a subject

experiences the event.

The distribution of T can be described by several

functions:

Survival Function: the probability that an individual

survives longer than some time, t:

S(t) = P(an individual survives longer than t)

= P(T > t)

Functions of Survival Time

If there are no censored observations, the survival

function is estimated as the proportion of patients

surviving longer than time t:

# of patients surviving longer than t

Sˆ (t ) =

total # of patients

Functions of Survival Time

Density Function: The survival time T has a

probability density function defined as the limit of

the probability that an individual experiences the

event in the short interval (t, t + t) per unit width

t:

P an individual dying in the interval (t, t + t )

f (t ) = lim

t 0

t

Functions of Survival Time

Survival density function:

Functions of Survival Time

If there are no censored observations, f(t) is

estimated as the proportion of patients

experiencing the event in an interval per unit width:

# of patients dying in the interval beginning at time t

fˆ(t )

total # of patients interval width

The density function is also known as the

unconditional failure rate.

Functions of Survival Time

Hazard Function: The hazard function h(t) of

survival time T gives the conditional failure rate.

It is defined as the probability of failure during a

very small time interval, assuming the individual

has survived to the beginning of the interval:

P an individual of age t fails in the time interval (t , t + t )

h(t ) lim

t 0

t

Functions of Survival Time

The hazard is also known as the instantaneous

failure rate, force of mortality, conditional mortality

rate, or age-specific failure rate.

The hazard at any time t corresponds to the risk of

event occurrence at time t:

For example, a patient’s hazard for contracting influenza

is 0.015 with time measured in months.

What does this mean? This patient would expect to

contract influenza 0.015 times over the course of a month

assuming the hazard stays constant.

Functions of Survival Time

If there are no censored observations, the hazard

function is estimated as the proportion of patients

dying in an interval per unit time, given that they

have survived to the beginning of the interval:

# of patients dying in the interval beginning at time t

hˆ(t ) =

# of patients surviving at t interval width

=

# of patients dying per unit time in the interval

# of patients surviving at t

Estimation of S(t)

Product-Limit Estimates (Kaplan-Meier): most

widely used in biological and medical applications

Life Table Analysis (actuarial method): appropriate

for large number of observations or if there are

many unique event times

Methods for Comparing S(t)

If your question looks like: “Is the time-to-event

different in group A than in group B (or C . . . )?”

then you have several options, including:

Log-rank Test: weights effects over the entire observation

equally—best when difference is constant over time

Weighted log-rank tests:

• Wilcoxon Test: gives higher weights to earlier effects—better for

detecting short-term differences in survival

• Tarome-Ware: a compromise between log-rank and Wilcoxon

• Peto-Prentice: gives higher weights to earlier events

• Fleming-Harrington: flexible weighting method

Inferences for Time-to-Event

Example: survival in squamous cell carcinoma

A pilot study was conducted to compare

Accelerated Fractionation Radiation Therapy

versus Standard Fractionation Radiation Therapy

for patients with advanced unresectable squamous

cell carcinoma of the head and neck.

The researchers are interested in exploring any

differences in survival between the patients treated

with Accelerated FRT and the patients treated with

Standard FRT.

Inferences for Time-to-Event

H0: S1(t) = S2(t) for all t

Overall Survival by Treatment

H1: S1(t) ≠ S2(t) for at least one t

1.00

AFRT

SFRT

Survival Probability

0.75

0.50

0.25

0.00

0

12

24

36

48

60

72

Survival Time (months)

84

96

108

120

Squamous Cell Carcinoma

Gender

Male

Female

Age

Median

Range

Primary Site

Larynx

Oral Cavity

Pharynx

Salivary Glands

Stage

III

IV

Tumor Stage

T2

T3

T4

AFRT

SFRT

28 (97%)

1 (3%)

16 (100%)

0

61

30-71

65

43-78

3 (10%)

6 (21%)

20 (69%)

0

4 (25%)

1 (6%)

10 (63%)

1 (6%)

4 (14%)

25 (86%)

8 (50%)

8 (50%)

3 (10%)

8 (28%)

18 (62%)

2 (12%)

7 (44%)

7 (44%)

Squamous Cell Carcinoma

Median Survival Time:

AFRT: 18.38 months (2 censored)

SFRT: 13.19 months (5 censored)

Overall Survival by Treatment

1.00

AFRT

SFRT

Survival Probability

0.75

0.50

0.25

0.00

0

12

24

36

48

60

72

Survival Time (months)

84

96

108

120

Squamous Cell Carcinoma

Overall Survival by Treatment

Log-Rank1.00

test p-value= 0.5421

AFRT

SFRT

Survival Probability

0.75

0.50

0.25

0.00

0

12

24

36

48

60

72

Survival Time (months)

84

96

108

120

Squamous Cell Carcinoma

Staging of disease is also prognostic for survival.

Shouldn’t we consider the analysis of the survival

of these patients by stage as well as by treatment?

Squamous Cell Carcinoma

Overall Survival by Treatment and Stage

1.00

AFRT/Stage 3

AFRT/Stage 4

SFRT/Stage 3

SFRT/Stage 4

Median Survival Time:

AFRT Stage 3: 77.98 mo.

AFRT Stage 4: 16.21 mo.

SFRT Stage 3: 19.34 mo.

SFRT Stage 4: 8.82 mo.

Survival Probability

0.75

0.50

0.25

0.00

0

12

24

36

48

60

72

Survival Time (Months)

Log-Rank test p-value = 0.0792

84

96

108

120

Inferences on Time-to-Event

Concerns a response that is both categorical

(event?) and continuous (time)

There are several nonparametric methods that can

be used—choice should be based on whether you

anticipate a short-term or long-term benefit.

Log-rank test is optimal when the survival curves

are approximately parallel.

Weight functions should be chosen based on

clinical knowledge and should be pre-specified.

What about adjustments?

There may be other predictors or explanatory

variables that you believe are related to the

response other than the actual factor (treatment) of

interest.

Regression methods will allow you to incorporate

these factors into the test of a treatment effect:

Logistic regression: when y is categorical and nominal

binary

Multinomial logistic regression: when y is categorical

with more than 2 nominal categories

Ordinal logistic regression: when y is categorical and

ordinal

What about adjustments?

There may be other predictors or explanatory

variables that you believe are related to the

response other than the actual factor (treatment) of

interest.

Regression methods will allow you to incorporate

these factors into the test of a treatment effect:

Linear regression: when y is continuous and the factors

are a combination of categorical and continuous (or just

continuous)

Two- and three-way ANOVA: when y is continuous and

the factors are all categorical

What about adjustments?

There may be other predictors or explanatory

variables that you believe are related to the

response other than the actual factor (treatment) of

interest.

Regression methods will allow you to incorporate

these factors into the test of a treatment effect:

Cox regression: when y is a time-to-event outcome

Linear Regression

The relationship between two variables may be

one of functional dependence—that is, the

magnitude of one of the variables (dependent) is

assumed to be determined by the magnitude of the

second (independent), whereas the reverse is not

true.

Blood pressure and age

Dependent does not equate to ‘caused by’

Linear Regression

In it’s most basic form, linear regression is a

probabilistic model that accounts for unexplained

variation in the relationship between two variables:

y = Deterministic Component + Random Error

= mx + b + ε

= β0 + β1x + ε

This model is referred to as simple linear

regression.

10

Simple Linear Regression

yy ==0.78

0 +1x+ +

0.89

0 x+ε

8

y = β0 + β1x + ε

4

y

6

y response variable

x explanatory variable

β0 intercept

2

β1 slope

0

ε 'error'

0

2

4

6

x

8

10

Arm Circumference and Height

Data on anthropomorphic measures from a

random sample of 150 Nepali children up to 12

months old

What is the relationship between average arm

circumference and height?

Data:

Arm circumference: x = 12.4cm

Height: x = 61.6cm

s = 1.5cm

R = (7.3cm,15.6cm)

s = 6.3cm

R = (40.9cm,73.3cm)

Arm Circumference and Height

Treat height as continuous when estimating the

relationship

Linear regression is a potential option--it allows us

to associate a continuous outcome with a

continuous predictor via a linear relationship

The line estimates the mean value of the outcome for

each continuous value of height in the sample used

Makes a lot of sense, but only if a line reasonably

describes the relationship

Visualizing the Relationship

Scatterplot

Visualizing the Relationship

Does a line reasonably describe the general shape

of the relationship?

We can estimate a line using a statistical software

package

The line we estimate will be of the form:

ŷ = β0 + β1x

Here,ŷ is the average arm circumference for a

group of children all of the same height, x

Arm Circumference and Height

Arm Circumference and Height

Arm Circumference and Height

How do we interpret the estimated slope?

The average change in arm circumference for a one-unit

(1 cm) increase in height

The mean difference in arm circumference for two groups

of children who differ by one unit (1 cm) in height

These results estimate that the mean difference in

arm circumferences for a one centimeter difference

in height is 0.16 cm, with taller children having

greater average arm circumference

Arm Circumference and Height

What is the estimated mean difference in arm

circumference for children 60 cm versus 50 cm

tall?

Arm Circumference and Height

Our regression results only apply to the range of

observed data

Arm Circumference and Height

How do we interpret the estimated intercept?

The estimated y when x = 0--the estimated mean arm

circumference for children 0 cm tall.

Does this make sense given our sample?

Frequently, the scientific interpretation of the

intercept is meaningless.

It is necessary for fully specifying the equation of a

line.

Arm Circumference and Height

X = 0 isn’t even on the graph

Inferences using Linear

Regression

H0: β1 = 0 versus

H1: β1 > 0 (strong positive linear relationship)

or H1: β1 < 0 (strong negative linear relationship)

or H1: β1 ≠ 0 (strong linear relationship)

Test statistic: t (df = n – 2)

ˆ 1

t

sˆ

1

x x y y

i

i

s

xi x

xi x

2

2

Notes

Linear regression performed with a single predictor

(one x) is called simple linear regression.

Correlation is a measure of the strength of the linear

relationship between two continuous outcomes.

Linear regression with more than one predictor is

called multiple linear regression.

y = β0 + β1x1 + β1x1 +

+ βk xk + ε

Multiple Linear Regression

For the ith x:

H0: βi = 0

H1: βi ≠ 0

Test statistic: t (df = 1)

For all x:

H0: βi = 0 for all i

H1: βi ≠ 0 for at least one i

Test statistic: F (df1 = k, df2 = n – (k + 1))

Multiple Linear Regression

How do we interpret the estimate of βi?

βi is called a partial regression coefficient and can be

thought of as conditional slope—it is the rate of change of

y for every unit change in xi if all other x’s are held

constant.

It is sometimes said that βi is a measure of the

relationship between y and xi after ‘controlling for’ the

remaining x’s—that is, it is a measure of the extent to

which y is related to xi after removing the effects of the

other x’s.

Regression Plane

With one predictor, the relationship is described by

a line.

With two predictors, the relationship is estimated

by a plane in 3D.

Linear Correlation

Linear regression assumes the linear dependence

of one variable y (dependent) on a second variable

x (independent).

Linear correlation also considers the linear

relationship between two continuous outcomes but

neither is assumed to be functionally dependent

upon the other.

Interest is primarily in the strength of association, not in

describing the actual relationship.

Scatterplot

48

Correlation

Pearson’s Correlation Coefficient is used to

quantify the strength.

r

x x y y

x x y y

2

2

Note: If sample size is small or data is non-normal,

use non-parametric Spearman’s coefficent.

49

Correlation

r>0

r<0

r=0

Business Statistics, 4e, by Ken Black. © 2003 John Wiley & Sons.

3-56

Inferences on Correlation

H0: ρ = 0 (no linear association) versus

H1: ρ > 0 (strong positive linear relationship)

or H1: ρ < 0 (strong negative linear relationship)

or H1: ρ ≠ 0 (strong linear relationship)

Test statistic: t (df = 2)

Correlation

52

Correlation

* Excluding France

53

Logistic Regression

When you are interested in describing the relationship

between a dichotomous (categorical, nominal)

outcome and a predictor x, logistic regression is

appropriate.

ln

= β0 + β1x + ε

1

Pr y = 1

Conceptually, the method is the same as linear

regression MINUS the assumption of y being

continuous.

Logistic Regression

Interpretation of regression coefficients is not

straight-forward since they describe the

relationship between x and the log-odds of y = 1.

We often use odds ratios to determine the

relationship between x and y.

Death

A logistic regression model was used to describe

the relationship between treatment and death:

Y = {died, alive}

X = {intervention, standard of care}

ln

= β0 + β1x + ε

1

Pr y = death

if intervention

1

x=

2 if standard of care

Death

β1 was estimated to be 0.69. What does this

mean?

If you exponentiate the estimate, you get the odds ratio

relating treatment to the probability of death!

exp(0.69) = 0.5—when treatment involves the

intervention, the odds of dying decrease by 50% (relative

to standard of care).

Notice the negative sign—also indicates a decrease in

the chances of death, but difficult to interpret without

transformation.

Death

β1 was estimated to be 0.41. What does this

mean?

If you exponentiate the estimate, you get the odds ratio

relating treatment to the probability of death!

exp(0.41) = 1.5—when treatment involves the

intervention, the odds of dying increase by 50% (relative

to standard of care).

Notice the positive sign—also indicates an increase in the

chances of death, but difficult to interpret without

transformation.

Logistic Regression

What about when x is continuous?

Suppose x is age and y is still representative of

death during the study period.

ln

= β0 + β1x + ε

1

Pr y = death

x = baseline age in years

Death

β1 was estimated to be 0.095. What does this

mean?

If you exponentiate the estimate, you get the odds ratio

relating age to the probability of death!

exp(0.095) = 1.1—for every one-year increase in age, the

odds of dying increase by 10%.

Notice the positive sign—also indicates a decrease in the

chances of death, but difficult to interpret without

transformation.

Multiple Logistic Regression

In the same way that linear regression can

incorporate multiple x’s, logistic regression can

relate a categorical y response to several

independent variables.

Interpretation of partial regression coefficients is

the same.

Cox Regression

Cox regression and logistic regression are very

similar

Both are trying to describe a yes/no outcome

Cox regression also attempts to incorporate the timing of

the outcome in the modeling

Cox vs Logistic Regression

Distinction between rate and proportion:

Incidence (hazard) rate: number of “events” per

population at-risk per unit time (or mortality rate, if

outcome is death)

Cumulative incidence: proportion of “events” that occur

in a given time period

63

Cox vs Logistic Regression

Distinction between hazard ratio and odds ratio:

Hazard ratio: ratio of incidence rates

Odds ratio: ratio of proportions

Logistic regression aims to estimate the odds ratio

Cox regression aims to estimate the hazard ratio

By taking into account the timing of events, more

information is collected than just the binary yes/no.

64

Publication Bias

From: Publication bias: evidence of delayed publication in a cohort study of clinical research projects BMJ 1997;315:640-645 (13 September)

65

Publication Bias

Table 4 Risk factors for time to publication using univariate Cox regression analysis

Characteristic

# not published

# published

Hazard ratio (95% CI)

Null

29

23

1.00

Non-significant

trend

16

4

0.39 (0.13 to 1.12)

Significant

47

99

2.32 (1.47 to 3.66)

From: Publication bias: evidence of delayed publication in a cohort study of clinical research projects BMJ 1997;315:640-645 (13 September)

Interpretation: Significant results have a 2fold higher incidence of publication compared

to null results.

Cox Regression

Cox Regression is what we call semiparametric

Kaplan-Meier is nonparametric

There are also parametric methods which assume the

distribution of survival times follows some type of

probability model (e.g., exponential)

Can accommodate both discrete and continuous

measures of event times.

Can accommodate multiple x’s.

Easy to incorporate time-dependent covariates—

covariates that may change in value over the

course of the observation period

Clinical Trials &

Design of Experiments

Random Samples

The Fundamental Rule of Using Data for

Inference requires the use of random sampling or

random assignment.

Random sampling or random assignment ensures

control over “nuisance” variables.

We can randomly select individuals to ensure that

the population is well-represented.

Equal sampling of males and females

Equal sampling from a range of ages

Equal sampling from a range of BMI, weight, etc.

Random Samples

Randomly assigning subjects to treatment levels to

ensure that the levels differ only by the treatment

administered.

weights

ages

risk factors

Nuisance Variation

Nuisance variation is any undesired sources of

variation that affect the outcome.

Can systematically distort results in a particular

direction—referred to as bias.

Can increase the variability of the outcome being

measured—results in a less powerful test because of too

much ‘noise’ in the data.

Example: Albino Rats

It is hypothesized that exposing albino rats to

microwave radiation will decrease their food

consumption.

Intervention: exposure to radiation

Levels exposure or non-exposure

Levels 0, 20000, 40000, 60000 uW

Measurable outcome: amount of food consumed

Possible nuisance variables: sex, weight,

temperature, previous feeding experiences

Experimental Design

Statistical analysis, no matter how intricate, cannot

rescue a poorly designed study.

No matter how efficient, statistical analysis cannot

be done overnight.

A researcher should plan and state what they are

going to do, do it, and then report those results.

Be transparent!

Experimental Design

Types of data collected in a clinical trial:

Treatment – the patient’s assigned treatment and actual

treatment received

Response – measures of the patient’s response to

treatment including side-effects

Prognostic factors (covariates) – details of the patient’s

initial condition and previous history upon entry into the

trial

Experimental Design

Three basic types of outcome data:

Qualitative – nominal or ordinal, success/failure, CR, PR,

Stable disease, Progression of disease

Quantitative – interval or ratio, raw score, difference,

ratio, %

Time to event – survival or disease-free time, etc.

Experimental Design

Formulate statistical hypotheses that are germane

to the scientific hypothesis.

Determine:

experimental conditions to be used (independent

variable(s))

measurements to be recorded

extraneous conditions to be controlled (nuisance

variables)

Experimental Design

Specify the number of subjects required and the

population from which they will be sampled.

Power, Type I & II errors

Specify the procedure for assigning subjects to the

experimental conditions.

Determine the statistical analysis that will be

performed.

Experimental Design

Considerations:

Does the design permit the calculation of a valid estimate

of treatment effect?

Does the data-collection procedure produce reliable

results?

Does the design possess sufficient power to permit and

adequate test of the hypotheses?

Experimental Design

Considerations:

Does the design provide maximum efficiency within the

constraints imposed by the experimental situation?

Does the experimental procedure conform to accepted

practices and procedures used in the research area?

• Facilitates comparison of findings with the results of other

investigations

Threats to Valid Inference

Statistical Conclusion Validity

• Low statistical power - failing to reject a false hypothesis because

of inadequate sample size, irrelevant sources of variation that are

not controlled, or the use of inefficient test statistics.

• Violated assumptions - test statistics have been derived

conditioned on the truth of certain assumptions. If their tenability is

questionable, incorrect inferences may result.

Many methods are based on approximations to a

normal distribution or another probability

distribution that becomes more accurate as sample

size increases—using these methods for small

sample sizes may produce unreliable results.

Threats to Valid Inference

Statistical Conclusion Validity

Reliability of measures and treatment implementation.

Random variation in the experimental setting and/or

subjects.

• Inflation of variability may result in not rejecting a false hypothesis

(loss of power).

Threats to Valid Inference

Internal Validity

Uncontrolled events - events other than the

administration of treatment that occur between the time

the treatment is assigned and the time the outcome is

measured.

The passing of time - processes not related to treatment

that occur simply as a function of the passage of time that

may affect the outcome.

Threats to Valid Inference

Internal Validity

Instrumentation - changes in the calibration of a

measuring instrument, the use of more than one

instrument, shifts in subjective criteria used by observers,

etc.

• The “John Henry” effect - compensatory rivalry by subjects

receiving less desirable treatments.

• The “placebo” effect - a subject behaves in a manner consistent

with his or her expectations.

Threats to Valid Inference

External Validity—Generalizability

Reactive arrangements - subjects who are aware that

they are being observed may behave differently that

subjects who are not aware.

Interaction of testing and treatment - pretests may

sensitize subjects to a topic and enhance the

effectiveness of a treatment.

Threats to Valid Inference

External Validity—Generalizability

Self-selection - the results may only generalize to

volunteer populations.

Interaction of setting and treatment - results obtained in a

clinical setting may not generalize to the outside world.

Clinical Trials—Purpose

Prevention trials look for more effective/safer

ways to prevent a disease in individuals who have

never had it, or to prevent a disease from recurring

in individuals who have.

Screening trials attempt to identify the best

methods for detecting diseases or health

conditions.

Diagnostic trials are conducted to distinguish

better tests or procedures for diagnosing a

particular disease or condition.

Clinical Trials—Purpose

Treatment trials assess experimental treatments,

new combinations of drugs, or new approaches to

surgery or radiation therapy for efficacy and safety.

Quality of life (supportive care) trials explore

means to improve comfort and quality of life for

individuals with chronic illness.

Classification according to the U.S. National Institutes of Health

Clinical Trials—Phases

Pre-clinical studies involve in vivo and in vitro

testing of promising compounds to obtain

preliminary efficacy, toxicity, and pharmacokinetic

information to assist in making decisions about

future studies in humans.

Clinical Trials—Phases

Phase 0 studies are exploratory, first-in-human

trials, that are designed to establish very early on

whether the drug behaves in human subjects as

was anticipated from preclinical studies.

Typically utilizes N = 10 to 15 subjects to assess

pharmacokinetics and pharmacodynamics.

Allows the go/no-go decision usually made from animal

studies to be based on preliminary human data.

Clinical Trials—Phases

Phase I studies assess the safety, tolerability,

pharmacokinetics, and pharmacodynamics of a

drug in healthy volunteers (industry standard) or

patients (academic/research standard).

Involves dose-escalation studies which attempt to identify

an appropriate therapeutic dose.

Utilizes small samples, typically N = 20 to 80 subjects.

Clinical Trials—Phases

Phase II studies assess the efficacy of the drug

and continue the safety assessments from phase I.

Larger groups are usually used, N = 20 to 300.

Their purpose is to confirm efficacy (i.e., estimation of

effect), not necessarily to compare experimental drug to

placebo or active comparator.

Clinical Trials—Phases

Phase III studies are the definitive assessment of

a drug’s effectiveness and safety in comparison

with the current gold standard treatment.

Much larger sample sizes are utilized, N = 300 to 3,000,

and multiple sites can be used to recruit patients.

Because they are quite an investment, they are usually

randomized, controlled studies.

Clinical Trials—Phases

Phase IV studies are also known as post-

marketing surveillance trials and involve the

ongoing or long-term assessment of safety in

drugs that have been approved for human use.

Detect any rare or long-term adverse effects in a much

broader patient population

The Size of a Clinical Trial

Lasagna’s Law

Once a clinical trial has started, the number of suitable

patients dwindles to a tenth of what was calculated before

the trial began.

The Size of a Clinical Trial

“How many patients do we need?”

Statistical methods can be used to determine the

required number of patients to meet the trial’s

principal scientific objectives.

Other considerations that must be accounted for

include availability of patients and resources and

the ethical need to prevent any patient from

receiving inferior treatment.

We want the minimum number of patients required to

achieve our principal scientific objective.

The Size of a Clinical Trial

Estimation trials involve the use of point and

interval estimates to describe an outcome of

interest.

Hypothesis testing is typically used to detect a

difference between competing treatments.

The Size of a Clinical Trial

Type I error rate (α): the risk of concluding a

significant difference exists between treatments

when the treatments are actually equally effective.

Type II error rate (β): the risk of concluding no

significant difference exists between treatments

when the treatments are actually different.

The Size of a Clinical Trial

Power (1 – β): the probability of correctly detecting

a difference between treatments—more commonly

referred to as the power of the test.

Truth

Conclusion

H1

H0

H1

H0

1–β

β

α

1–α

The Size of a Clinical Trial

Setting three determines the fourth:

For the chosen level of significance (α), a clinically

meaningful difference (δ) can be detected with a

minimally acceptable power (1 – β) with n subjects.

Depending on the nature of the outcome, the same

applies: For the chosen level of significance (α), an

outcome can be estimated within a specified margin of

error (ME) with n subjects.

Example: Detecting a Difference

The Anturane Reinfarction Trial Research Group

(1978) describe the design of a randomized

double-blind trial comparing anturan and placebo

in patients after a myocardial infarction.

What is the main purpose of the trial?

What is the principal measure of patient outcome?

How will the data be analyzed to detect a treatment

difference?

What type of results does one anticipate with standard

treatment?

How small a treatment difference is it important to detect

and with what degree of uncertainty?

Example: Detecting a Difference

Primary objective: To see if anturan is of value in

preventing mortality after a myocardial infarction.

Primary outcome: Treatment failure is indicated by

death within one year of first treatment (0/1).

Data analysis: Comparison of percentages of

patients dying within first year on anturan (π1)

versus placebo (π2) using a χ2 test at the α = 0.05

level of significance.

Example: Detecting a Difference

Expected results under placebo: One would

expect about 10% of patients to die within a year

(i.e., π2 = .1).

Difference to detect (δ): It is clinically interesting

to be able to determine if anturan can halve the

mortality—i.e., 5% of patients die within a year—

and we would like to be 90% sure that we detect

this difference as statistically significant.

Example: Detecting a Difference

We have:

H0: π1 = π2 versus H1: π1 π2 (two-sided test)

α = 0.05

1 – β = 0.90

δ = π2 – π1 = 0.05

The estimate of power for this test is a function of

sample size:

1 P z

z 2 SE

P z

p1q1 n1 p2 q2 n2

z 2 SE

p1q1 n1 p2 q2 n2

Example: Detecting a Difference

1β

β

1α

α/2

zα/2

Reject H0

Conclude difference

α/2

zα/2

Fail to reject H0

Conclude no

difference

Reject H0

Conclude difference

Example: Detecting a Difference

Assuming equal sample sizes, we can solve for n:

where

z 2 2 pq z p1q1 p2 q2

n

2

p1 p2

p

2

q 1 p

Example: Detecting a Difference

z 2 2 pq z p1q1 p2 q2

n

2

2

1.96 2 0.925 0.075 1.282 0.95 0.05 0.90 0.1

0.0025

1.96 0.373 1.282 0.371

0.0025

582.5

2

n = 583 patients per group is required

2

Power and Sample Size

n is roughly inversely proportional to δ2; for fixed α and

β, halving the difference in rates requiring detection

results in a fourfold increase in sample size.

n depends on the choice of β such that an increase in

power from 0.5 to 0.95 requires around 3 times the

number of patients.

Reducing α from 0.05 to 0.01 results in an increase in

sample size of around 40% when β is around 10%.

Using a one-sided test reduces the required sample

size.

Example: Detecting a Difference

Primary objective: To see if treatment A increases

outcome W.

Primary outcome: The primary outcome, W, is

continuous.

Data analysis: Comparison of mean response of

patients on treatment A (μ1) versus placebo (μ2)

using a two-sided t-test at the α = 0.05 level of

significance.

Example: Detecting a Difference

Expected results under placebo: One would

expect a mean response of 10 (i.e., μ2 = 10).

Difference to detect (δ): It is clinically interesting to

be able to determine if treatment A can increase

response by 10%—i.e., we would like to see a

mean response of 11 (10 + 1) in patients getting

treatment A and we would like to be 80% sure that

we detect this difference as statistically significant.

Example: Detecting a Difference

We have:

H0: μ1 = μ2 versus H1: μ1 μ2 (two-sided test)

α = 0.05

1 – β = 0.80

δ=1

For continuous outcomes we need to determine

what difference would be clinically meaningful, but

specified in the form of an effect size which takes

into account the variability of the data.

Example: Detecting a Difference

Effect size is the difference in the means divided

by the standard deviation, usually of the control or

comparison group, or the pooled standard

deviation of the two groups

d

where

1 2

12 22

n1 n2

Example: Detecting a Difference

1β

β

1α

α/2

zα/2

Reject H0

Conclude difference

α/2

zα/2

Fail to reject H0

Conclude no

difference

Reject H0

Conclude difference

Example: Detecting a Difference

Power Calculations an interesting interactive

web-based tool to show the relationship between

power and the sample size, variability, and

difference to detect.

A decrease in the variability of the data results in

an increase in power for a given sample size.

An increase in the effect size results in a decrease

in the required sample size to achieve a given

power.

Increasing α results in an increase in the required

sample size to achieve a given power.

Additional Slides

Common Inferential Designs

Comparing 2 independent percentages

χ2 test, Fisher’s Exact test, logistic regression

Comparing 2 independent means

2-sample t-test, multiple regression, analysis of

covariance

Comparing two independent distributions

Wilcoxon Rank-Sum test, Kolmogorov-Smirnov test

Comparing two independent time-to-event variables

Logrank test, Wilcoxon test, Cox proportional-hazards

regression

Estimation of Effect

For a dichotomous (yes/no) outcome

Estimate margin of error within a certain bound

Two or Multiple stage designs (Gehan, Simon, and

others)

Bayesian designs (Simon, Thall and others)

Exact binomial probabilities

Estimation of Effect

For a continuous outcome

Estimate margin of error within a certain bound

Is the magnitude of change above or below a

certain threshold with a given confidence

Detecting a Difference

Two-Arm studies: dichotomous or polytomous

outcome

2 × c Chi-square test (Fisher’s Exact test)

Mantel-Haenzsel

Logistic Regression

Generalized linear model

GEE or GLIMM if longitudinal

Detecting a Difference

Two-Arm studies: continuous outcome

Two-sample t-test (Wilcoxon rank sum)

Linear regression

General linear model

Mixed linear models for longitudinal data

Detecting a Difference

Time to event outcome

Log-rank test

Generalized Wilcoxon test

Likelihood ratio test

Cox proportional hazards regression

Detecting a Difference

Multiple-Arm studies: dichotomous or polytomous

outcome

r × c Chi-square test (Fisher’s Exact test)

Mantel-Haenzsel

Logistic Regression

Generalized linear model

GEE or GLIMM if longitudinal

Detecting a Difference

Multiple-Arm studies: continuous outcome

ANOVA (Kruskal-Wallis test)

Linear Regression – Analysis of Covariance

Multi-factorial designs

Mixed linear models for longitudinal data

Prognostic Factors

It is reasonable and sometimes essential to collect

information of personal characteristics and past

history at baseline when enrolling patient’s onto a

clinical trial.

These variables allow us to determine how

generalizable the results are.

Prognostic Factors

Prognostic factors know to be related to the

desired outcome of the clinical trial must be

collected and in some cases randomization should

be stratified upon these variables.

Many baseline characteristics may not be known to

be related to outcome, but may be associated with

outcome for a given trial.

Comparable Treatment Groups

All baseline prognostic and descriptive factors of

interest should be summarized between the

treatment groups to insure that they are

comparable between treatments. It is generally

recommended that these be descriptive

comparisons only, not inferential

Note: Just because a factor is balanced does

not mean it will not affect outcome and vice versa.

Subgroup Analysis

Does response differ for differing types of patients?

This is a natural question to ask.

To answer this question one should test to see if

the factor that determines type of patient interacts

with treatment.

Separate significance tests for different subgroups

do not provide direct evidence of whether a

prognostic factor affects the treatment difference:

a test for interaction is much more valid.

Tests for interactions may also be designed a

priori.

Adjusting for Covariates

Quantitative Response: Multiple Regression

Qualitative Response: Multiple Logistic

Regression

Time-to-event Response: Cox Proportional

Hazards Regression

Multiplicity of Data

Multiple Treatments – the number of possible

treatment comparisons increases rapidly with the

number of treatments. (Newman-Keuls, Tukey’s

HSD or other adjustment should be designed)

Multiple end-points – there may be multiple ways

to evaluate how a patient responds. (Bonferroni

adjustment, Multivariate test, combined score, or

reduce number of primary end-points)

Multiplicity of Data

Repeated Measurements – patient’s progress may

be recorded at several fixed time points after the

start of treatment. One should aim for a single

summary measure for each patient outcome so

that only one significance test is necessary.

Subgroup Analyses – patients may be grouped into

subgroups and each subgroup may be analyzed

separately.

Interim Analyses – repeated interim analyses may

be performed after accumulating data while the

trial is in progress.

Incidence and Prevalence

An incidence rate of a disease is a rate that is

measured over a period of time; e.g., 1/100

person-years.

For a given time period, incidence is defined as:

# of newly - diagnosed cases of disease

# of individuals at risk

Only those free of the disease at time t = 0 can be

included in numerator or denominator.

Incidence and Prevalence

A prevalence ratio is a rate that is taken at a

snapshot in time (cross-sectional).

At any given point, the prevalence is defined as

# with the illness

# of individuals in population

The prevalence of a disease includes both new

incident cases and survivors with the illness.

Incidence and Prevalence

Prevalence is equivalent to incidence multiplied by

the average duration of the disease.

Hence, prevalence is greater than incidence if the

disease is long-lasting.

Measurement Error

To this point, we have assumed that the outcome

of interest, x, can be measured perfectly.

However, mismeasurement of outcomes is

common in the medical field due to fallible tests

and imprecise measurement tools.

Diagnostic Testing

Sensitivity and Specificity

Sensitivity of a diagnostic test is the probability that

the test will be positive among people that have the

disease.

P(T+| D+) = TP/(TP + FN)

Sensitivity provides no information about people that

do not have the disease.

Specificity is the probability that the test will be

negative among people that are free of the disease.

Pr(T|D) = TN/(TN + FP)

Specificity provides no information about people that

have the disease.

Prevalence

SN == 56/70

SP

24/30

= =30/100

= 0.80

0.80 = 0.30

Healthy

Diseased

Non-Diseased

Diseased

Diseased

Non-Diseased

Positive Diagnosis

Negative Diagnosis

Diagnosed positive

A perfect diagnostic test has

SN = SP = 1

Healthy

Diseased

Non-Diseased

Diseased

Positive Diagnosis

Negative Diagnosis

A 100% inaccurate diagnostic

test has SN = SP = 0

Healthy

Diseased

Non-Diseased

Diseased

Positive Diagnosis

Negative Diagnosis

Sensitivity and Specificity

Example: 100 HIV+ patients are given a new

diagnostic test for rapid diagnosis of HIV, and 80 of

these patients are correctly identified as HIV+

What is the sensitivity of this new diagnostic test?

Example: 500 HIV patients are given a new

diagnostic test for rapid diagnosis of HIV, and 50 of

these patients are incorrectly specified as HIV+

What is the specificity of this new diagnostic test?

(Hint: How many of these 500 patients are correctly

specified as HIV?)

Positive and Negative Predictive

Value

Positive predictive value is the probability that a person

with a positive diagnosis actually has the disease.

Pr(D+|T+) = TP/(TP + FP)

This is often what physicians want-patient tests

positive for the disease; does this patient actually have

the disease?

Negative predictive value is the probability that a

person with a negative test does not have the disease.

Pr(D-|T-) = TN/(TN + FN)

This is often what physicians want-patient tests

negative for the disease; is this patient truly disease

free?

NPV

56/62 == 0.63

0.90

PPV == 24/38

Healthy

Diseased

Non-Diseased

Diseased

Diseased

Non-Diseased

Positive Diagnosis

Negative Diagnosis

Diagnosed positive

A perfect diagnostic test has

PPV = NPV = 1

Healthy

Diseased

Non-Diseased

Diseased

Positive Diagnosis

Negative Diagnosis

A 100% inaccurate diagnostic

test has PPV = NPV = 0

Healthy

Diseased

Non-Diseased

Diseased

Positive Diagnosis

Negative Diagnosis

PPV and NPV

Example: 50 patients given a new diagnostic test

for rapid diagnosis of HIV test positive, and 25 of

these patients are actually HIV+.

What is the PPV of this new diagnostic test?

Example: 200 patients given a new diagnostic test

for rapid diagnosis of HIV test negative, but 2 of

these patients are actually HIV+.

What is the NPV of this new diagnostic test? (Hint:

How many of these 200 patients testing negative for

HIV are truly HIV?)