outcomes assessment via rubrics: a pilot study in an mis course as a

advertisement

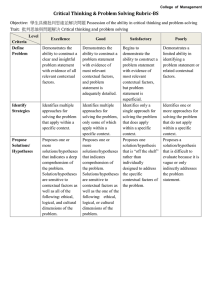

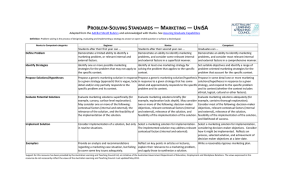

OUTCOMES ASSESSMENT VIA RUBRICS: A PILOT STUDY IN AN MIS COURSE AS A PRECURSOR TO A MULTIPLE MEASURE APPROACH

By W. R. Eddins, York College of Pennsylvania
George Strouse, York College of Pennsylvania
At NABET 2012

Outcomes Assessment with Rubrics
• Our previous research in Outcomes Assessment (OA) focused on inputs and outputs.
• This paper adds a process dimension to OA: a service-system-oriented approach.
• Activities related to Service System Development:
  ◦ Analysis and design
  ◦ Development activities
  ◦ Integration
  ◦ Verification and validation
• Experimental design
• Findings
• Conclusion

Previous Research
• A Revised Collaboration Learning Scale: A Pilot Study in an MIS Course (2011)
  ◦ Subjects: students (10)
  ◦ Independent variables: RIPLS, tests, quizzes, and case projects
  ◦ Dependent variables: Likert scale and grades
  ◦ Significant findings: collaboration improved, and case study grades improved
• Using the MSLQ to Measure Collaboration and Critical Thinking in an MIS Course (2010)
  ◦ Subjects: students (14)
  ◦ Independent variables: MSLQ, tests, quizzes, and case projects
  ◦ Dependent variables: Likert scale and grades
  ◦ Significant findings: peer learning improved, and quiz and case study grades improved

Service System Oriented Approach
• Capability Maturity Model Integration for Services (CMMI-SVC)
  ◦ Forrester et al. (2011)
  ◦ SEI (http://www.sei.cmu.edu/)
  ◦ Maturity levels: initial, managed, defined, quantitatively managed, and optimizing
• Service System Development definition (p. 561)
  ◦ "The purpose of Service System Development (SSD) is to analyze, design, develop, integrate, verify, and validate service systems, including service system components, to satisfy existing or anticipated service agreements."

Analysis & Design and Use Cases
Apply Rubric: Each faculty member will use the system to apply the rubric to student responses, both pre and post.
The faculty member will cycle through the set of student responses to tests, case projects, or other assessment instruments, and select the appropriate milestone for each rubric criterion. While the faculty member cycles through the complete set of responses, the system will not display the student identifier or the type of response (pre or post).

Analysis & Design and Data Model

Developed using VS (Visual Studio), C# & Access

Integration
• Sponsor: Dean of Academic Affairs
• Assessment Program Learning Committee
• Association of American Colleges and Universities VALUE Rubrics
• Professionalism and problem solving in the syllabus of IFS305-Management Information Systems, included in:
  ◦ Vision statement of the department
  ◦ Implemented in student memos

Verification and Validation
• AACU’s problem solving rubric
• Face and content validity?

| Criterion | Capstone 4 | Milestone 3 | Milestone 2 | Benchmark 1 |
|---|---|---|---|---|
| Define Problem | Demonstrates the ability to construct a clear and insightful problem statement with evidence of all relevant contextual factors. | Demonstrates the ability to construct a problem statement with evidence of most relevant contextual factors, and problem statement is adequately detailed. | Begins to demonstrate the ability to construct a problem statement with evidence of most relevant contextual factors, but problem statement is superficial. | Demonstrates a limited ability in identifying a problem statement or related contextual factors. |
| Propose Solutions/Hypotheses | Proposes one or more solutions/hypotheses that indicates a deep comprehension of the problem. Solution/hypotheses are sensitive to contextual factors as well as all of the following: ethical, logical, and cultural dimensions of the problem. | Proposes one or more solutions/hypotheses that indicates comprehension of the problem. Solutions/hypotheses are sensitive to contextual factors as well as the one of the following: ethical, logical, or cultural dimensions of the problem. | Proposes one solution/hypothesis that is “off the shelf” rather than individually designed to address the specific contextual factors of the problem. | Proposes a solution/hypothesis that is difficult to evaluate because it is vague or only indirectly addresses the problem statement. |
| Implement Solution | Implements the solution in a manner that addresses thoroughly and deeply multiple contextual factors of the problem. | Implements the solution in a manner that addresses multiple contextual factors of the problem in a surface manner. | Implements the solution in a manner that addresses the problem statement but ignores relevant contextual factors. | Implements the solution in a manner that does not directly address the problem statement. |

Verification and Validation (continued)
• Adds a "process" approach to validation with a focus on:
  ◦ Establishing course/program quality goals
  ◦ Monitoring goal/level attainment
  ◦ Applying statistical process control to:
    ◦ Identify current performance levels
    ◦ Establish acceptable levels of performance
    ◦ Compare current performance to goals
    ◦ Identify process areas to improve
    ◦ Develop a historical perspective
    ◦ Analyze and report performance measures and goal attainment

Experimental Design
• Summer pilot study
• Subjects: faculty who teach the course
• Independent variable: AACU’s rubric
• Dependent variable: Likert-type scale
• Assessment of student memos
• Pre/post treatment

Findings
• Comparison of pre/post scores showed:
  ◦ A significant difference
  ◦ Support for content validity
• Inter-rater comparison showed:
  ◦ No significant difference
  ◦ Support for item reliability

Conclusion
• Multi-measure approach
• Addressing the effort issue:
  ◦ Random selection (not the entire class)
  ◦ Selection of independent variables depends upon:
    ◦ Faculty interest
    ◦ Past variables
• Hopefully, the tool can:
  ◦ Ease effort considerations
  ◦ Structure the process
• The future for the tool

Questions?
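The "Apply Rubric" use case hides the student identifier and the pre/post label while faculty cycle through responses. The conclusion also suggests scoring a random selection of students rather than the entire class, to reduce effort. The following is a minimal Python sketch of that workflow under stated assumptions; it is not the authors' VS/C#/Access tool. `Response`, `build_blind_queue`, `record_score`, and `unblind` are hypothetical names. Sampling whole students, so that each sampled student contributes both a pre and a post memo, is also an assumption.

```python
"""Sketch of a blinded 'Apply Rubric' workflow; all names are hypothetical."""
import random
from dataclasses import dataclass

# Criteria and levels follow the AACU problem-solving rubric shown in the slides.
CRITERIA = ("Define Problem", "Propose Solutions/Hypotheses", "Implement Solution")
LEVELS = range(1, 5)  # 1 = Benchmark, 2-3 = Milestones, 4 = Capstone


@dataclass(frozen=True)
class Response:
    student_id: str
    phase: str  # "pre" or "post"
    text: str


def build_blind_queue(responses, sample_size, seed=None):
    """Randomly sample students and shuffle both of their responses together.

    Returns (queue, key): queue holds (token, text) pairs that carry neither the
    student ID nor the pre/post phase; key maps each token back to
    (student_id, phase) so scores can be unblinded after rating.
    """
    rng = random.Random(seed)
    students = sorted({r.student_id for r in responses})
    chosen = set(rng.sample(students, min(sample_size, len(students))))
    picked = [r for r in responses if r.student_id in chosen]
    rng.shuffle(picked)  # interleave pre and post so order reveals nothing
    queue, key = [], {}
    for i, r in enumerate(picked):
        token = f"R{i:03d}"
        queue.append((token, r.text))
        key[token] = (r.student_id, r.phase)
    return queue, key


def record_score(scores, token, criterion, level):
    """Store the milestone a rater selected for one criterion of one response."""
    if criterion not in CRITERIA:
        raise ValueError(f"unknown criterion: {criterion}")
    if level not in LEVELS:
        raise ValueError(f"level must be 1-4, got {level}")
    scores.setdefault(token, {})[criterion] = level


def unblind(scores, key):
    """Map each token's scores back to (student_id, phase) after rating ends."""
    return {key[token]: levels for token, levels in scores.items()}


if __name__ == "__main__":
    memos = [
        Response("s1", "pre", "memo 1"), Response("s1", "post", "memo 2"),
        Response("s2", "pre", "memo 3"), Response("s2", "post", "memo 4"),
        Response("s3", "pre", "memo 5"), Response("s3", "post", "memo 6"),
    ]
    queue, key = build_blind_queue(memos, sample_size=2, seed=42)
    scores = {}
    for token, text in queue:  # the rater sees only the token and the text
        record_score(scores, token, "Define Problem", 3)
    print(unblind(scores, key))
```

Keeping the token-to-student key separate from the queue means the blinding holds for the whole scoring pass. The scores are joined back to pre/post only for the analysis step.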
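The findings report a significant pre/post difference but do not name the test that was used. Assuming a paired t-test on each sampled student's pre and post rubric levels, the statistic can be computed as follows. `paired_t` is a hypothetical helper, and the scores in the example are invented for illustration.

```python
"""Paired t statistic for pre/post rubric scores (assumed test; hypothetical data)."""
import math
import statistics


def paired_t(pre, post):
    """Return (t, df) for the post - pre differences of matched responses."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are equal; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


if __name__ == "__main__":
    # Invented rubric levels (1-4) for five sampled students.
    pre = [2, 2, 3, 1, 2]
    post = [3, 3, 3, 2, 4]
    t, df = paired_t(pre, post)
    print(f"t = {t:.2f}, df = {df}")  # compare |t| with a t table at df degrees of freedom
```

With these inputs the differences are [1, 1, 0, 1, 2], giving t = √10 ≈ 3.16 with 4 degrees of freedom. `scipy.stats.ttest_rel(post, pre)` returns the same statistic together with a p-value. The same helper could be applied to two raters' scores on the same memos for the inter-rater comparison, where a small |t| is consistent with "no significant difference". Because rubric levels are ordinal, a Wilcoxon signed-rank test, or Cohen's kappa for agreement, are common alternatives.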
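The continued verification and validation slide proposes applying statistical process control to identify current performance levels and compare them with goals, but it does not name a chart type. One common choice when there is a single summary value per term, such as a course's mean rubric score, is an individuals chart. Its limits sit at the mean ± 2.66 × the average moving range. The sketch below assumes that chart type. `individuals_chart`, `meets_goal`, and the per-term scores are hypothetical.

```python
"""Individuals (X) control chart for per-term rubric means (assumed chart type)."""
import statistics

# Individuals chart constant for moving ranges of span 2: 3 / d2, with d2 = 1.128.
E2 = 2.66


def individuals_chart(values):
    """Return (center, lcl, ucl, flagged) for per-term summary scores.

    flagged lists the indexes of terms that fall outside the control limits.
    """
    if len(values) < 2:
        raise ValueError("need at least two terms of data")
    center = statistics.mean(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    mr_bar = statistics.mean(moving_ranges)
    lcl, ucl = center - E2 * mr_bar, center + E2 * mr_bar
    flagged = [i for i, v in enumerate(values) if v < lcl or v > ucl]
    return center, lcl, ucl, flagged


def meets_goal(values, goal):
    """Compare the current process level (the center line) with a quality goal."""
    center, _, _, _ = individuals_chart(values)
    return center >= goal


if __name__ == "__main__":
    # Hypothetical mean rubric scores (1-4 scale) for five terms.
    terms = [2.5, 2.7, 2.6, 2.8, 2.6]
    center, lcl, ucl, flagged = individuals_chart(terms)
    print(f"center={center:.2f} limits=({lcl:.2f}, {ucl:.2f}) flagged={flagged}")
    print("meets goal of 2.5:", meets_goal(terms, 2.5))
```

In practice the limits would usually be set from a stable baseline period and then held fixed while later terms are plotted against them. Recomputing the limits with each new point, as this short sketch does, lets an unusual term widen its own limits.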