GP-Music - Computer Science and Engineering

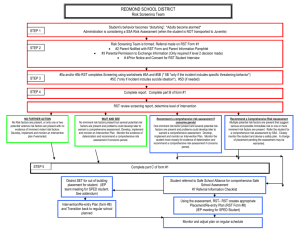

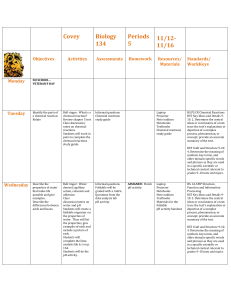

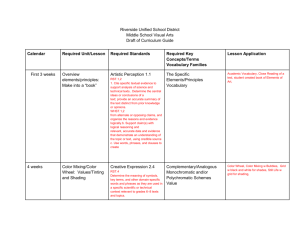

Genetic Programming System for Music Generation With Automated Fitness Raters

What is Genetic Programming (GP)?
• An evolutionary algorithm-based methodology inspired by biological evolution
• Each new generation of solutions is created from the previous generation
• Three steps:
  o Mutation: change random bits
  o Crossover: exchange parts of two parents
  o Selection: get rid of bad examples

An Example: 8-Queen Problem
• Fitness function: how good is each string? Here, fitness is the number of non-attacking pairs of queens (at most 28 for eight queens).
• Selection: choose parents randomly, with probability proportional to fitness.
• Crossover: make new children from the parents by choosing a cut point and swapping the halves.
• Mutation: change random bits with low probability.

Back to the GP-Music System
• An interactive system that lets users evolve short musical sequences.
• Focuses on creating short melodic sequences. It does not attempt to evolve polyphony or the actual waveforms of the instruments; it creates only a sequence of notes and pauses.
• Uses the XM (Extended Module) format, which stores musical pieces rather than straight digital audio.

Function and Terminal Sets
• Function set: play_two (2 arguments), add_space (1 argument), play_twice (1 argument), shift_up (1 argument), shift_down (1 argument), mirror (1 argument), play_and_mirror (1 argument)
• Terminal set:
  o Notes: C-4, C#4, D-4, D#4, E-4, F-4, F#4, G-4, G#4, A-5, A#5, B-5
  o Pseudo-chords: C-Chord, D-Chord, E-Chord, F-Chord, G-Chord, A-Chord, B-Chord
  o Other: RST (indicates one beat without a note)
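The 8-queen example earlier in this section uses all three operators, and a short sketch makes them concrete. Note that this is a plain genetic algorithm over fixed-length strings (each position holds the row of the queen in that column, so the "bits" are digits 0 to 7), not tree-based GP. The population size, mutation rate, and generation limit are illustrative assumptions, and the +1 added to each fitness value exists only so the roulette-wheel weights can never sum to zero.

```python
import random

N = 8
MAX_FITNESS = N * (N - 1) // 2  # 28 pairs, all non-attacking, for a solution

def fitness(board):
    """Count non-attacking pairs; board[c] is the row of the queen in column c."""
    attacking = 0
    for i in range(N):
        for j in range(i + 1, N):
            same_row = board[i] == board[j]
            same_diagonal = abs(board[i] - board[j]) == j - i
            if same_row or same_diagonal:
                attacking += 1
    return MAX_FITNESS - attacking

def crossover(a, b, rng):
    """Choose a cut point and swap the halves, producing two children."""
    cut = rng.randrange(1, N)
    return a[:cut] + b[cut:], b[:cut] + a[cut:]

def mutate(board, rng, rate=0.05):
    """With low probability, replace each position with a random row."""
    return [rng.randrange(N) if rng.random() < rate else row for row in board]

def evolve(pop_size=100, generations=500, seed=0):
    """Run the GA; pop_size is assumed to be even (children come in pairs)."""
    rng = random.Random(seed)
    population = [[rng.randrange(N) for _ in range(N)] for _ in range(pop_size)]
    for _ in range(generations):
        scores = [fitness(b) for b in population]
        if max(scores) == MAX_FITNESS:
            break
        # Fitness-proportional (roulette-wheel) selection of parents.
        parents = rng.choices(population, weights=[s + 1 for s in scores], k=pop_size)
        population = []
        for a, b in zip(parents[0::2], parents[1::2]):
            for child in crossover(a, b, rng):
                population.append(mutate(child, rng))
    return max(population, key=fitness)

best = evolve()
print(best, fitness(best))
```

A board scoring 28 is a valid placement; whether a given seed reaches it within the generation limit is not guaranteed.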
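Before evolution starts, GP needs an initial population of random programs built from the function and terminal sets listed above. The sketch below uses the common "grow" initialization method, which is our assumption (the slides do not say how GP-Music initializes its population). Programs are nested tuples `(function_name, child, ...)` with terminal strings as leaves; `max_depth`, `p_terminal`, and the helper names are hypothetical.

```python
import random

# Function set with arities, as listed on the slide.
FUNCTION_ARITY = {
    "play_two": 2, "add_space": 1, "play_twice": 1, "shift_up": 1,
    "shift_down": 1, "mirror": 1, "play_and_mirror": 1,
}
NOTES = ["C-4", "C#4", "D-4", "D#4", "E-4", "F-4", "F#4",
         "G-4", "G#4", "A-5", "A#5", "B-5"]
PSEUDO_CHORDS = ["C-Chord", "D-Chord", "E-Chord", "F-Chord",
                 "G-Chord", "A-Chord", "B-Chord"]
TERMINALS = NOTES + PSEUDO_CHORDS + ["RST"]

def grow(max_depth, rng, p_terminal=0.3):
    """Random tree: a terminal string, or (function_name, child, ...)."""
    if max_depth == 0 or rng.random() < p_terminal:
        return rng.choice(TERMINALS)
    name = rng.choice(sorted(FUNCTION_ARITY))
    children = tuple(grow(max_depth - 1, rng, p_terminal)
                     for _ in range(FUNCTION_ARITY[name]))
    return (name,) + children

def depth(tree):
    """Number of function levels above the deepest terminal."""
    if isinstance(tree, str):
        return 0
    return 1 + max(depth(child) for child in tree[1:])

def to_sexpr(tree):
    """Render a tree in the Lisp-style notation used for GP programs."""
    if isinstance(tree, str):
        return tree
    return "(" + " ".join([tree[0]] + [to_sexpr(c) for c in tree[1:]]) + ")"

rng = random.Random(42)
population = [grow(4, rng) for _ in range(5)]
for tree in population:
    print(to_sexpr(tree))
```

Because every function node draws exactly as many children as its arity, each generated tree is syntactically valid by construction; only its musical quality is left to the rater.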
Pseudo-chords expand to the following note sequences:

| Pseudo-Chord | Corresponding Note Sequence |
|---|---|
| C-Chord | C-4, E-4, G-4 |
| D-Chord | D-4, F#4, A-5 |
| E-Chord | E-4, G#4, B-5 |
| F-Chord | F-4, A-5, C-5 |
| G-Chord | G-4, B-5, D-5 |
| A-Chord | A-5, C#5, E-5 |
| B-Chord | B-5, D#5, F#5 |

Sample Music Program

(shift-down (add-space (play-and-mirror (play-two (play-two (play-two (play-two B-5 B-5) (shift-down A-5)) (shift-down A-5)) F-4))))

The interpreter evaluates the program tree bottom-up:
• (play-two B-5 B-5) → B-5,B-5
• each (shift-down A-5) → G-4
• next play-two → B-5,B-5,G-4
• next play-two → B-5,B-5,G-4,G-4
• outermost play-two (with F-4) → B-5,B-5,G-4,G-4,F-4
• play-and-mirror → B-5,B-5,G-4,G-4,F-4,F-4,G-4,G-4,B-5,B-5
• add-space → B-5,RST,B-5,RST,G-4,RST,G-4,RST,F-4,RST,F-4,RST,G-4,RST,G-4,RST,B-5,RST,B-5,RST
• shift-down (root, final output) → A-5,RST,A-5,RST,F-4,RST,F-4,RST,E-4,RST,E-4,RST,F-4,RST,F-4,RST,A-5,RST,A-5,RST

Each node in the tree passes a string of notes up to its parent, which then modifies it. In this way a complete sequence of notes is built up, and the final string is returned by the root node.

Fitness Selection
A human using the system is asked to rate the musical sequences created in each generation of the GP process.

User Bottleneck
A user can rate only a small number of sequences in one sitting, which limits the number of individuals and generations that can be used. To get around this, the GP-Music System takes the rating data from a user's run and uses it to train a neural-network-based automatic rater.
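The sample program can be checked with a small interpreter. In this sketch, play-two, add-space, play-and-mirror, and shift-down behave as the sample program's output implies; the behaviour of play-twice (repeat) and mirror (reverse) is only inferred from their names. Shifting is assumed to move each natural note one diatonic step along the slides' note labels (where the octave number changes at A), clamping at the ends of a hypothetical `LADDER` and leaving sharps and RST unchanged. Pseudo-chords expand to the sequences in the table, and function names may be written with hyphens or underscores.

```python
import re

# Hypothetical diatonic ladder of natural notes, using the slides' labels
# (the octave number changes at A, so A-5 comes right after G-4).
LADDER = ["C-4", "D-4", "E-4", "F-4", "G-4", "A-5", "B-5",
          "C-5", "D-5", "E-5", "F-5", "G-5"]

# Pseudo-chord expansions, from the table.
CHORDS = {
    "C-Chord": ["C-4", "E-4", "G-4"], "D-Chord": ["D-4", "F#4", "A-5"],
    "E-Chord": ["E-4", "G#4", "B-5"], "F-Chord": ["F-4", "A-5", "C-5"],
    "G-Chord": ["G-4", "B-5", "D-5"], "A-Chord": ["A-5", "C#5", "E-5"],
    "B-Chord": ["B-5", "D#5", "F#5"],
}

def shift(seq, step):
    """Move each natural note `step` places along LADDER, clamping at the ends.
    Sharps and RST are left unchanged (an assumption)."""
    out = []
    for note in seq:
        if note in LADDER:
            i = min(max(LADDER.index(note) + step, 0), len(LADDER) - 1)
            out.append(LADDER[i])
        else:
            out.append(note)
    return out

FUNCTIONS = {
    "play-two": lambda a, b: a + b,                             # a followed by b
    "add-space": lambda a: [x for n in a for x in (n, "RST")],  # rest after each note
    "play-twice": lambda a: a + a,                              # assumed: repeat
    "shift-up": lambda a: shift(a, +1),
    "shift-down": lambda a: shift(a, -1),
    "mirror": lambda a: a[::-1],                                # assumed: reverse
    "play-and-mirror": lambda a: a + a[::-1],                   # a, then a reversed
}

def parse(text):
    """Parse a Lisp-style program into nested lists; leaves stay strings."""
    tokens = re.findall(r"[()]|[^\s()]+", text)

    def read(pos):
        if tokens[pos] != "(":
            return tokens[pos], pos + 1
        node, pos = [tokens[pos + 1]], pos + 2
        while tokens[pos] != ")":
            child, pos = read(pos)
            node.append(child)
        return node, pos + 1

    return read(0)[0]

def evaluate(tree):
    """Evaluate bottom-up: each node returns a list of notes to its parent."""
    if isinstance(tree, str):
        return CHORDS.get(tree, [tree])  # a note, RST, or a pseudo-chord
    name, *args = tree
    return FUNCTIONS[name.replace("_", "-")](*(evaluate(a) for a in args))

PROGRAM = ("(shift-down (add-space (play-and-mirror (play-two (play-two "
           "(play-two (play-two B-5 B-5) (shift-down A-5)) (shift-down A-5)) F-4))))")
print(",".join(evaluate(parse(PROGRAM))))
```

Running it prints A-5,RST,A-5,RST,F-4,RST,F-4,RST,E-4,RST,E-4,RST,F-4,RST,F-4,RST,A-5,RST,A-5,RST, matching the interpreter output for the sample program.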
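In GP-Music the three operators act on program trees rather than strings, and fitness comes from whoever is rating. A sketch of one generation step, assuming programs are nested tuples: subtree crossover grafts a random subtree of one parent into a random point of the other, mutation replaces a random subtree with a random terminal, and parents are picked by tournament selection (a swapped-in scheme; the slides do not state which selection method GP-Music uses). The `rate` argument stands for whatever supplies fitness, either a human's ratings or a trained auto-rater; `toy_rater` and the small `TERMINALS` subset are hypothetical placeholders, not a musical judgement.

```python
import random

TERMINALS = ["C-4", "E-4", "G-4", "A-5", "RST"]  # small illustrative subset

def paths(tree, prefix=()):
    """Yield the path (tuple of child indices) of every node in a tuple tree."""
    yield prefix
    if not isinstance(tree, str):
        for i, child in enumerate(tree[1:], start=1):
            yield from paths(child, prefix + (i,))

def get(tree, path):
    for i in path:
        tree = tree[i]
    return tree

def replace(tree, path, new):
    """Return a copy of `tree` with the node at `path` replaced by `new`."""
    if not path:
        return new
    i = path[0]
    return tree[:i] + (replace(tree[i], path[1:], new),) + tree[i + 1:]

def crossover(a, b, rng):
    """Subtree crossover: graft a random subtree of b onto a random point of a."""
    return replace(a, rng.choice(list(paths(a))), get(b, rng.choice(list(paths(b)))))

def mutate(tree, rng):
    """Simple mutation: replace a random subtree with a random terminal."""
    return replace(tree, rng.choice(list(paths(tree))), rng.choice(TERMINALS))

def tournament(population, scores, rng, k=3):
    """Pick the best-scoring of k randomly chosen individuals."""
    contenders = rng.sample(range(len(population)), k)
    return population[max(contenders, key=lambda i: scores[i])]

def next_generation(population, rate, rng, p_mutate=0.1):
    """One generational step; `rate` scores a program (human or auto-rater)."""
    scores = [rate(t) for t in population]
    children = []
    for _ in range(len(population)):
        mother = tournament(population, scores, rng)
        father = tournament(population, scores, rng)
        child = crossover(mother, father, rng)
        if rng.random() < p_mutate:
            child = mutate(child, rng)
        children.append(child)
    return children

def leaves(tree):
    return [tree] if isinstance(tree, str) else [x for c in tree[1:] for x in leaves(c)]

def toy_rater(tree):
    # Hypothetical stand-in for a human or neural rater: rewards note variety.
    return len(set(leaves(tree)) - {"RST"})

rng = random.Random(0)
population = [("play_two", "C-4", "RST"), ("mirror", ("play_two", "E-4", "G-4")),
              ("add_space", "A-5"), ("play_and_mirror", ("shift_up", "C-4"))]
for _ in range(5):
    population = next_generation(population, toy_rater, rng)
print(population)
```

Swapping `toy_rater` for a function that asks a person, or for a trained network, changes nothing else in the loop; that separation is what lets an automatic rater relieve the user bottleneck.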
Neural Networks
• A trainable mathematical model for finding decision boundaries
• Inspired by biological neurons:
  o Neurons collect input from receptors and from other neurons
  o If enough stimulus is collected, the neuron fires
• The inputs to an artificial neuron are input variables or the outputs of other neurons
• The output is an "activation" determined by the weighted inputs

Back Propagation Algorithm
• We have some error, and we want to assign "blame" for it proportionally to the various weights in the network.
• We can compute the error derivative for the last layer.
• Then we distribute the "blame" to the previous layer.

Backprop Training
• Start with random weights.
• Run the network forward.
• Calculate the error based on the outputs.
• Propagate the error backward.
• Update the weights.
• Repeat with the next training example until the network stops improving.
  o Cross-validation data is very important here: it is what shows whether the network is still improving on data it was not trained on.

In the auto-rater network, each top-level node has 'Level Spread' connections to lower-level nodes. The weights on these connections are all shared, so the weight on the first input to each upper-level node is identical. Each lower-level node affects two upper-level nodes.

Auto Rater Runs
• The weights and biases of the auto-rater network trained for 850 cycles were used in several runs of the GP-Music System.
• To evaluate how well the auto-rater works in larger runs, runs with 100 and 500 sequences per generation over 50 generations were made. The resulting best individuals are shown in the figures below.

[Figure: best individual, 50 generations, 100 individuals per generation]
[Figure: best individual, 50 generations, 500 individuals per generation]

Future Work
• It would be interesting to analyze the weights learned by the network to see what sort of features it is looking for.
• It may also be possible to improve the structure of the auto-raters themselves by feeding them extra information or by modifying their topology.

Reference
This research was done by:
• Brad Johanson, Stanford University, Rains Apt. 9A, 704 Campus Dr., Stanford, CA 94305, bjohanso@stanford.edu
• Riccardo Poli, School of Computer Science, The University of Birmingham, Birmingham B15 2TT, R.Poli@cs.bham.ac.uk
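The backprop training steps from the Neural Networks section can be sketched for a tiny fully connected network. This is a generic illustration, not the GP-Music auto-rater: the 2-3-1 sigmoid topology, squared-error loss, learning rate, toy dataset (label 1 when x + y > 1), and the early-stopping rule (stop once validation error has not improved for `patience` epochs, then keep the best weights) are all assumptions. Weights are updated after each training example, matching the "repeat with the next training example" step, and the held-out set plays the role of the cross-validation data.

```python
import copy
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TinyNet:
    """Fully connected n_in -> n_hidden -> 1 network with sigmoid units."""

    def __init__(self, n_in, n_hidden, rng):
        # Start with small random weights; the last weight in each row is a bias.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                   for _ in range(n_hidden)]
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]

    def forward(self, x):
        xb = list(x) + [1.0]
        h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1]
        y = sigmoid(sum(w * v for w, v in zip(self.w2, h + [1.0])))
        return h, y

    def train_step(self, x, target, lr):
        h, y = self.forward(x)  # run the network forward
        # Error derivative at the output unit for the loss 0.5 * (y - target)^2.
        delta_out = (y - target) * y * (1.0 - y)
        # Assign "blame" to each hidden unit in proportion to its outgoing weight.
        delta_h = [delta_out * self.w2[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
        # Gradient-descent weight updates.
        for j, hj in enumerate(h + [1.0]):
            self.w2[j] -= lr * delta_out * hj
        xb = list(x) + [1.0]
        for j, row in enumerate(self.w1):
            for i, xi in enumerate(xb):
                row[i] -= lr * delta_h[j] * xi

def mse(net, data):
    return sum((net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

def train(net, train_data, val_data, rng, lr=0.5, max_epochs=200, patience=10):
    """Per-example backprop with early stopping on held-out validation data."""
    best_loss = mse(net, val_data)
    best_weights = copy.deepcopy((net.w1, net.w2))
    stale = 0
    for _ in range(max_epochs):
        rng.shuffle(train_data)
        for x, t in train_data:
            net.train_step(x, t, lr)
        loss = mse(net, val_data)
        if loss < best_loss:
            best_loss, stale = loss, 0
            best_weights = copy.deepcopy((net.w1, net.w2))
        else:
            stale += 1
            if stale >= patience:  # stopped improving on validation data
                break
    net.w1, net.w2 = best_weights
    return best_loss

rng = random.Random(0)

def make_data(n):
    """Toy data (hypothetical): label 1.0 when x + y > 1, else 0.0."""
    points = [(rng.random(), rng.random()) for _ in range(n)]
    return [(p, 1.0 if p[0] + p[1] > 1 else 0.0) for p in points]

train_data, val_data = make_data(60), make_data(30)
net = TinyNet(2, 3, rng)
initial = mse(net, val_data)
best = train(net, train_data, val_data, rng)
print(f"validation MSE: {initial:.3f} -> {best:.3f}")
```

Keeping the best-validation weights rather than the last ones is one common way to act on the cross-validation advice; the slides do not say which stopping rule GP-Music used.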