Slides for week 9

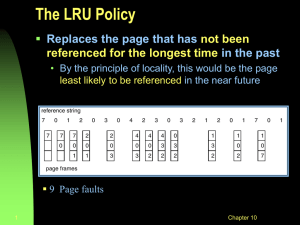

First-In, First-Out (FIFO)
• Remove the oldest page
• Old pages may be heavily used
  • Age is determined by the loading time, not by the time of the last reference
  • E.g., pages holding the process table or the scheduler
• Pure FIFO is rarely used
• Improvement: second chance algorithm

Second Chance Algorithm
• Idea: look for an old page that has not been referenced recently
• Algorithm: inspect the R bit of the oldest page before removing it
  • R = 0: throw the page away
  • R = 1: clear the R bit and move the page to the tail of the list
[Figure: page list ordered by load time, from the page loaded first (A, load time 0) to the most recently loaded page (load time 18). After its second chance, A sits at the tail with load time 20 and is treated like a newly loaded page.]

Clock Page Algorithm
• Moving pages around in the second chance algorithm is inefficient
• Keep all page frames on a circular list
• A hand points to the oldest page
• A variation of the second chance algorithm
[Figure: pages A through H arranged in a circle, with the hand pointing at the oldest page]

Least Recently Used (LRU)
• Observation: pages heavily used in the last few instructions will probably be heavily used again in the next few instructions
• Remove the page that has been unused for the longest time
• Maintain a list of pages?
  • Most recently used page at the front
  • Least recently used page at the rear
  • The list must be updated on every memory reference: very costly!

LRU: Hardware Solution
• A global hardware counter is automatically incremented after each instruction
• A 64-bit local counter per page table entry
• The local counter is set to the value of the global counter when the page is referenced
• When a page fault occurs, examine all the local counters; the page with the lowest value is the least recently used one

Software LRU
• NFU (Not Frequently Used)
  • Each page has a software counter, initially zero
  • At every clock interrupt, add the R bit to the counter, then clear the R bit
  • The page with the lowest counter value is removed
• Problem: NFU never forgets anything, which hurts recently used pages
  • A page that was heavily used earlier but is no longer referenced keeps a high counter value, so it is not removed
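The NFU bookkeeping and its "never forgets" weakness can be sketched in a few lines of Python. This is a minimal illustration, not an OS implementation: the page names `old` and `new`, the tick counts, and the helper names `nfu_tick` and `nfu_victim` are all assumptions made up for the example.

```python
def nfu_tick(counters, r_bits):
    """One clock interrupt of NFU: add each R bit to its counter, then clear it."""
    for page in counters:
        counters[page] += r_bits[page]
        r_bits[page] = 0


def nfu_victim(counters):
    """Return the page with the lowest counter value."""
    return min(counters, key=counters.get)


# Hypothetical workload: page "old" is referenced in each of the first 50
# ticks and never again; page "new" is referenced in each of the next 5 ticks.
counters = {"old": 0, "new": 0}
r_bits = {"old": 0, "new": 0}

for _ in range(50):
    r_bits["old"] = 1  # the hardware would set R on a reference
    nfu_tick(counters, r_bits)

for _ in range(5):
    r_bits["new"] = 1
    nfu_tick(counters, r_bits)

victim = nfu_victim(counters)
print(counters, "-> evict", victim)
```

Under these assumptions NFU picks `new`, the page in active use, because the stale page still carries its old count.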
• Improvement: aging
  • Counters are shifted right 1 bit before the R bit is added in
  • The R bit is added to the leftmost bit
  • The page with the lowest counter value is removed

Example: counters before and after clock tick 1

| Page | R bit before tick | Counter before tick | R bit after tick | Counter after tick |
|------|-------------------|---------------------|------------------|--------------------|
| 0    | 0                 | 11110000            | 0                | 01111000           |
| 1    | 1                 | 01100000            | 0                | 10110000           |
| 2    | 1                 | 00100000            | 0                | 10010000           |
| 3    | 0                 | 01000000            | 0                | 00100000           |
| 4    | 0                 | 10110000            | 0                | 01011000           |
| 5    | 0                 | 01010001            | 0                | 00101000           |

After this tick, page 3 has the lowest counter value and would be removed.

Aging vs. LRU
• In aging, there is no information about which page was referenced last (or first) during the interval between clock ticks
  • Example: the clock interval is 10 seconds. One page is referenced at the 3rd second, another at the 7th second. Aging does not know which page was referenced first.
• Aging keeps only a limited history
  • An 8-bit counter keeps the history of only 8 clock ticks
  • Referenced 9 ticks ago or 1000 ticks ago: no difference

Working Set & Thrashing
• Working set: the set of pages currently used by a process
• If the entire working set is in main memory, there are few page faults until the process moves to its next phase
• If only part of the working set is in main memory, there are many page faults and execution is slow
• Like the page table, each process has its own working set
• Thrashing: a program causing page faults every few instructions

Working Set Model: Avoid Thrashing
• Demand paging
  • Pages are loaded on demand
  • Lots of page faults when a process starts
• Working set model: bring the working set into memory before running a process
  • Keep track of working sets
  • Pre-paging: before running a process, load its working set

The Function of Working Set
• Working set (WS): at time t, the set of pages used by the k most recent memory references
• The set changes slowly in time, i.e., the content of the working set is not sensitive to k
• E.g., a loop that refers to 10 pages and has 1,000 instructions per iteration: if the loop executes 1,000 times, the working set is the same 10 pages for k = 1,000 up to k = 1,000,000
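A small sketch of the working set definition, assuming a scaled-down version of the loop example: 10 pages touched in round-robin order, 100 references per iteration, 50 iterations. The function name `working_set` and the trace are hypothetical; only the definition (pages used by the k most recent references at time t) comes from the slide.

```python
def working_set(refs, t, k):
    """Pages used by the k most recent references, up to and including index t."""
    start = max(0, t - k + 1)
    return set(refs[start:t + 1])


# Hypothetical trace: one loop iteration makes 100 references that cycle
# through pages 0..9; the loop runs 50 times (5,000 references in total).
one_iteration = [i % 10 for i in range(100)]
refs = one_iteration * 50

t = len(refs) - 1
sizes = {k: len(working_set(refs, t, k)) for k in (1, 5, 10, 100, 5000)}
print(sizes)
```

Once k is large enough to cover all 10 pages of the loop, increasing k further (here from 10 up to 5,000) leaves the working set unchanged, which is the insensitivity to k described above.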
[Figure: w(k, t), the size of the working set at time t, plotted against k]

Maintaining WS: A Simple Way
• Store page numbers in a shift register of length k; on every memory reference:
  • Shift the register left one position
  • Insert the most recently referenced page number on the right
• The set of k page numbers in the register is the working set
[Figure: the register holds p1 p2 … pk, where p1 is the eldest page. After page (k+1) is referenced it holds p2 p3 … p(k+1), where p(k+1) is the most recent page.]

Approximating Working Set
• Maintaining the shift register is expensive
• Alternative: use the execution time (τ) instead of the number of references
  • E.g., the set of pages used during the last τ = 100 msec of execution time
  • τ is usually larger than the interval between clock interrupts

WS Page Replacement Algorithm
• Basic idea: find a page that is not in the WS and evict it
• The pages in the WS are the most likely to be used next
• On every page fault, the page table is scanned to look for a suitable page to evict
• Prerequisites: each entry contains (at least) two items, the time the page was last used and the R (referenced) bit
  • The hardware sets the R bit each time a page is used
  • Software clears the R bit periodically, at every clock interrupt

Algorithm Details
[Figure not recovered]

WSClock Algorithm
• The basic working set page replacement algorithm scans the entire page table on every page fault: costly!
• Improvement: clock algorithm + working set information
• A circular list of pages, initially empty. When a page is loaded, it is added to the list, so the pages form a ring.
• Based on: time of last use, R and M bits
• Widely used

Algorithm Details
• When a page fault occurs, check the page pointed to by the clock hand
• If the page is not in the working set:
  • Clean (not modified): use it
  • Dirty: schedule a write-back to disk, move the hand one page ahead, and keep searching
  • The OS is responsible for the write-back, and it clears the M bit once the page has been written back
• If the hand comes back to its starting point:
  • Some write-backs were scheduled: keep moving until a clean page is found (it must be outside the working set)
  • No write-backs were scheduled: just grab a clean page, or the current page

Example
[Figure: WSClock example in four panels. The current virtual time is 2204 and τ = 200. Each entry on the ring shows its time of last use and R bit (M bit not shown). In panels 1 and 2, the entry under the hand (2014, R = 1) has its R bit cleared. In panels 3 and 4, an old entry (1213, R = 0) is replaced by a new page with time of last use 2204 and R = 1.]

Summary

| Algorithm     | Comment                                         |
|---------------|-------------------------------------------------|
| Optimal       | Not implementable, good as benchmark            |
| NRU           | Very crude                                      |
| FIFO          | Might throw out important pages                 |
| Second chance | Big improvement over FIFO                       |
| Clock         | Realistic                                       |
| LRU           | Excellent, but difficult to implement exactly   |
| NFU           | Fairly crude approximation to LRU               |
| Aging         | Efficient algorithm that approximates LRU well  |
| Working set   | Somewhat expensive to implement                 |
| WSClock       | Good efficient algorithm                        |

Outline
• Basic memory management
• Swapping
• Virtual memory
• Page replacement algorithms
• Modeling page replacement algorithms
• Design issues for paging systems
• Implementation issues
• Segmentation

Motivation
• The preceding algorithms, such as LRU and WSClock, are based on empirical studies
  • E.g., more page frames are better
• No theoretical analysis
  • Given the number of page frames, how many page faults are expected?
  • To reduce the page fault rate to a certain level, how many page frames are required?

More Page Frames, Fewer Page Faults?
• Not always the case: Belady's anomaly
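• Example: 5 virtual pages and 3 or 4 page frames
• Page reference string: 0 1 2 3 0 1 4 0 1 2 3 4
• FIFO is used

FIFO with 3 page frames: 9 page faults

| Reference  | 0 | 1 | 2 | 3 | 0 | 1 | 4 | 0 | 1 | 2 | 3 | 4 |
|------------|---|---|---|---|---|---|---|---|---|---|---|---|
| Youngest   | 0 | 1 | 2 | 3 | 0 | 1 | 4 | 4 | 4 | 2 | 3 | 3 |
|            |   | 0 | 1 | 2 | 3 | 0 | 1 | 1 | 1 | 4 | 2 | 2 |
| Oldest     |   |   | 0 | 1 | 2 | 3 | 0 | 0 | 0 | 1 | 4 | 4 |
| Page fault | P | P | P | P | P | P | P |   |   | P | P |   |

FIFO with 4 page frames: 10 page faults

| Reference  | 0 | 1 | 2 | 3 | 0 | 1 | 4 | 0 | 1 | 2 | 3 | 4 |
|------------|---|---|---|---|---|---|---|---|---|---|---|---|
| Youngest   | 0 | 1 | 2 | 3 | 3 | 3 | 4 | 0 | 1 | 2 | 3 | 4 |
|            |   | 0 | 1 | 2 | 2 | 2 | 3 | 4 | 0 | 1 | 2 | 3 |
|            |   |   | 0 | 1 | 1 | 1 | 2 | 3 | 4 | 0 | 1 | 2 |
| Oldest     |   |   |   | 0 | 0 | 0 | 1 | 2 | 3 | 4 | 0 | 1 |
| Page fault | P | P | P | P |   |   | P | P | P | P | P | P |

Model of Paging System
• Three elements of the system:
  • Page reference string
  • Page replacement algorithm
  • Number of page frames (size of main memory): m
• M: the array keeping track of the state of memory
  • |M| = n, the number of virtual pages
  • |top part| = m, recording the pages in main memory
    • An empty entry is a free page frame
  • |bottom part| = n - m, storing the pages that have been referenced but have been paged out

How M Works
• Initially empty
• When a page is referenced and it is not in memory (i.e., not in the top part of M), a page fault occurs
  • If there is an empty entry in the top part, the page is loaded into it; otherwise
  • a page is moved from the top part to the bottom part
• Pages in both parts may be separately rearranged

An Example With LRU Algorithm
• When a page is referenced, move it to the top entry in M
• All pages above the just-referenced page move down one position
• Keep the most recently referenced page at the top

LRU example with m = 4 page frames and n = 8 virtual pages: 11 page faults

| Reference string | 0 | 2 | 1 | 3 | 5 | 4 | 6 | 3 | 7 | 4 | 7 | 3 | 3 | 5 | 5 | 3 | 1 | 1 | 1 | 7 | 1 | 3 | 4 | 1 |
|------------------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Top part         | 0 | 2 | 1 | 3 | 5 | 4 | 6 | 3 | 7 | 4 | 7 | 3 | 3 | 5 | 5 | 3 | 1 | 1 | 1 | 7 | 1 | 3 | 4 | 1 |
| Top part         |   | 0 | 2 | 1 | 3 | 5 | 4 | 6 | 3 | 7 | 4 | 7 | 7 | 3 | 3 | 5 | 3 | 3 | 3 | 1 | 7 | 1 | 3 | 4 |
| Top part         |   |   | 0 | 2 | 1 | 3 | 5 | 4 | 6 | 3 | 3 | 4 | 4 | 7 | 7 | 7 | 5 | 5 | 5 | 3 | 3 | 7 | 1 | 3 |
| Top part         |   |   |   | 0 | 2 | 1 | 3 | 5 | 4 | 6 | 6 | 6 | 6 | 4 | 4 | 4 | 7 | 7 | 7 | 5 | 5 | 5 | 7 | 7 |
| Bottom part      |   |   |   |   | 0 | 2 | 1 | 1 | 5 | 5 | 5 | 5 | 5 | 6 | 6 | 6 | 4 | 4 | 4 | 4 | 4 | 4 | 5 | 5 |
| Bottom part      |   |   |   |   |   | 0 | 2 | 2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 6 | 6 | 6 | 6 | 6 | 6 | 6 | 6 |
| Bottom part      |   |   |   |   |   |   | 0 | 0 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| Bottom part      |   |   |   |   |   |   |   |   | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Page faults      | P | P | P | P | P | P | P |   | P |   |   |   |   | P |   |   | P |   |   |   |   |   | P |   |

Stack Algorithms
• Algorithms that have the property M(m, r) ⊆ M(m+1, r), where m is the number of page frames and r is an index into the reference string
• M(m, r) is the set of pages in the top part of M at index r; m is the size of the top part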
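The inclusion property M(m, r) ⊆ M(m+1, r) can be checked mechanically on a concrete reference string. The sketch below is an illustration under stated assumptions: `simulate` and `has_stack_property` are hypothetical helper names, and the check covers only the one reference string from the FIFO example above, so a `True` result is evidence for that string rather than a proof.

```python
from collections import deque


def simulate(refs, frames, policy):
    """Run FIFO or LRU; return (faults, snapshots).

    snapshots[r] is the set of pages resident after reference r, i.e. M(frames, r).
    """
    memory = deque()  # leftmost entry is the next victim
    faults = 0
    snapshots = []
    for page in refs:
        if page in memory:
            if policy == "lru":
                # A hit refreshes recency under LRU; FIFO ignores hits.
                memory.remove(page)
                memory.append(page)
        else:
            faults += 1
            if len(memory) == frames:
                memory.popleft()
            memory.append(page)
        snapshots.append(set(memory))
    return faults, snapshots


def has_stack_property(refs, m, policy):
    """Check M(m, r) <= M(m+1, r) for every index r of this reference string."""
    _, small = simulate(refs, m, policy)
    _, large = simulate(refs, m + 1, policy)
    return all(a <= b for a, b in zip(small, large))


refs = [0, 1, 2, 3, 0, 1, 4, 0, 1, 2, 3, 4]
fifo3, _ = simulate(refs, 3, "fifo")
fifo4, _ = simulate(refs, 4, "fifo")
lru3, _ = simulate(refs, 3, "lru")
lru4, _ = simulate(refs, 4, "lru")

print("FIFO faults:", fifo3, fifo4, "inclusion holds:", has_stack_property(refs, 3, "fifo"))
print("LRU faults:", lru3, lru4, "inclusion holds:", has_stack_property(refs, 3, "lru"))
```

On this string FIFO reproduces the 9 and 10 page faults from the tables and fails the inclusion check, while LRU passes it.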
• E.g., M(4, 1) is the top part of M after the first reference when there are 4 page frames
• If main memory is increased by one page frame and the process is re-executed, then at every point during the execution all the pages that were present in memory in the first run are also present in the second run
• Stack algorithms do not suffer from Belady's anomaly
• LRU is a stack algorithm, but FIFO is not

An Observation
[Figure: the LRU stack for the reference string 0 2 1 3 5 4 6 3 7 4 7 3 3 5 5 3 1 1 1 7 1 3 4 1, with M(4, r) and M(5, r) marked, shown beside the FIFO tables (youngest to oldest) for the reference string 0 1 2 3 0 1 4 0 1 2 3 4 with 3 page frames (9 page faults) and 4 page frames (10 page faults)]

Allocating Memory for Processes
• Process A has a page fault…
• Local page replacement replaces A's page with the lowest age (A5); global page replacement replaces the page with the lowest age overall (B3)

| Page                     | A0 | A1 | A2 | A3 | A4 | A5 | B0 | B1 | B2 | B3 | B4 | B5 | B6 | C1 | C2 | C3 |
|--------------------------|----|----|----|----|----|----|----|----|----|----|----|----|----|----|----|----|
| Age                      | 10 | 7  | 5  | 4  | 6  | 3  | 9  | 4  | 6  | 2  | 5  | 6  | 12 | 3  | 5  | 6  |
| Local page replacement   | A0 | A1 | A2 | A3 | A4 | A6 | B0 | B1 | B2 | B3 | B4 | B5 | B6 | C1 | C2 | C3 |
| Global page replacement  | A0 | A1 | A2 | A3 | A4 | A5 | B0 | B1 | B2 | A6 | B4 | B5 | B6 | C1 | C2 | C3 |

Local/Global Page Replacement
• Local page replacement: every process gets a fixed fraction of memory
  • Thrashing is possible even if plenty of free page frames are available
  • Memory is wasted if the working set shrinks
• Global page replacement: page frames are dynamically allocated among the processes
  • Generally works better
  • But how to determine how many page frames to assign to each process?
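The difference between the two policies can be sketched with the age values from the Allocating Memory for Processes slide. This is a minimal illustration that assumes the page with the lowest age value is the victim and that the first letter of a page name identifies its owning process; `choose_victim` is a hypothetical helper, not part of any real kernel API.

```python
# Age values copied from the slide; the page naming scheme is an assumption.
ages = {
    "A0": 10, "A1": 7, "A2": 5, "A3": 4, "A4": 6, "A5": 3,
    "B0": 9, "B1": 4, "B2": 6, "B3": 2, "B4": 5, "B5": 6, "B6": 12,
    "C1": 3, "C2": 5, "C3": 6,
}


def choose_victim(ages, faulting_process, policy):
    """Pick the page with the lowest age among the allowed candidates."""
    if policy == "local":
        # Only pages owned by the faulting process may be replaced.
        candidates = [p for p in ages if p.startswith(faulting_process)]
    else:
        # Global: any page in memory may be replaced.
        candidates = list(ages)
    return min(candidates, key=ages.get)


local_victim = choose_victim(ages, "A", "local")
global_victim = choose_victim(ages, "A", "global")
print("local:", local_victim, "global:", global_victim)
```

With process A faulting, the local policy gives up one of A's own frames, while the global policy takes a frame from process B, which changes how many frames each process holds.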
Assign Page Frames to Processes
• Allocate each process an equal share
  • E.g., 1000 frames and 10 processes: each process gets 100 frames
  • But this assigns 100 frames even to a 10-page process!
• Dynamically adjust the number of frames
  • Based on program size
  • Based on page fault frequency (PFF)
[Figure: page faults/sec plotted against the number of frames assigned, with levels A and B marked]

Page Size
• Arguments for a small page size
  • Internal fragmentation: on average, half of the final page of a process is empty and wasted
  • p: page size, so p/2 of memory is wasted for each segment
  • The size of a process could be smaller than one page
• Arguments for a large page size
  • Small pages mean a large page table and more overhead
  • s: size of the process, e: size of a page table entry; the overhead of the page table entries is s*e/p
• Total overhead = s*e/p + p/2
• Setting the derivative with respect to p, -s*e/p^2 + 1/2, to zero gives the optimum: p = sqrt(2*s*e)

Shared Pages for Programs
• Processes running the same program should share its pages
  • Avoid two copies of the same page
• Keep the shared pages when a process is swapped out or terminates
  • Identify the shared pages
  • Costly if all the page tables have to be searched

Cleaning Dirty Pages
• Writing back dirty pages when free pages are needed is costly!
  • Processes are blocked and have to wait
  • Overhead of process switching
• Keep enough clean pages for paging
  • Background paging daemon: evicts pages
  • Keep the page content so that it can be reused
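The page-size trade-off from the Page Size slide (total overhead = s*e/p + p/2, minimized at p = sqrt(2*s*e)) can be checked numerically. The values below, a 1 MiB process and 8-byte page table entries, are assumptions chosen for illustration, and the function names are hypothetical.

```python
import math


def total_overhead(s, e, p):
    """Page table overhead (s*e/p) plus internal fragmentation (p/2), in bytes."""
    return s * e / p + p / 2


def optimal_page_size(s, e):
    """Page size that minimizes total_overhead, from p = sqrt(2*s*e)."""
    return math.sqrt(2 * s * e)


s = 1 << 20  # assumed process size: 1 MiB
e = 8        # assumed page table entry size: 8 bytes

p_opt = optimal_page_size(s, e)
for p in (2048, 4096, 8192):
    print(p, total_overhead(s, e, p))
print("optimal page size:", p_opt)
```

Under these assumptions the optimum is a 4 KiB page, and both halving and doubling the page size increase the total overhead.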