Lecture 9 Supervised Learning

Supervised learning in high-throughput data

General considerations

Dimension reduction with outcome variables

Classification models

General considerations

This is the common structure of microarray gene expression data

from a simple cross-sectional case-control design.

Data from other high-throughput technology are often similar.

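| | Control 1 | Control 2 | …… | Control 25 | Disease 1 | Disease 2 | …… | Disease 40 |
|---|---|---|---|---|---|---|---|---|
| Gene 1 | 9.25 | 9.77 | …… | 9.4 | 8.58 | 5.62 | …… | 6.88 |
| Gene 2 | 6.99 | 5.85 | …… | 5 | 5.14 | 5.43 | …… | 5.01 |
| Gene 3 | 4.55 | 5.3 | …… | 4.73 | 3.66 | 4.27 | …… | 4.11 |
| Gene 4 | 7.04 | 7.16 | …… | 6.47 | 6.79 | 6.87 | …… | 6.45 |
| Gene 5 | 2.84 | 3.21 | …… | 3.2 | 3.06 | 3.26 | …… | 3.15 |
| Gene 6 | 6.08 | 6.26 | …… | 7.19 | 6.12 | 5.93 | …… | 6.44 |
| Gene 7 | 4 | 4.41 | …… | 4.22 | 4.42 | 4.09 | …… | 4.26 |
| Gene 8 | 4.01 | 4.15 | …… | 3.45 | 3.77 | 3.55 | …… | 3.82 |
| Gene 9 | 6.37 | 7.2 | …… | 8.14 | 5.13 | 7.06 | …… | 7.27 |
| Gene 10 | 2.91 | 3.04 | …… | 3.03 | 2.83 | 3.86 | …… | 2.89 |
| Gene 11 | 3.71 | 3.79 | …… | 3.39 | 5.15 | 6.23 | …… | 4.44 |
| …… | …… | …… | …… | …… | …… | …… | …… | …… |
| Gene 50000 | 3.65 | 3.73 | …… | 3.8 | 3.87 | 3.76 | …… | 3.62 |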

Fisher Linear Discriminant Analysis

Find the lower-dimension space where the classes are most

separated.

In the projection, two goals are to be fulfilled:

(1) Maximize between-class distance

(2) Minimize within-class scatter

Maximize this function over all non-zero vectors w:

$$J(w) = \frac{w^t S_b w}{w^t S_w w}$$

The numerator measures the between-class distance; the denominator measures the within-class scatter.

Fisher Linear Discriminant Analysis

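In the two-class case, we are projecting to a line to find the best separation:

$$J(w) = \frac{(w^t \bar{x}_1 - w^t \bar{x}_2)^2}{w^t S_{pooled} w}$$

Maximization yields:

$$w^t = (\bar{x}_1 - \bar{x}_2)^t S_{pooled}^{-1}$$

Figure: the two classes projected onto a line, with mean1, mean2, and the decision boundary marked.

Decision boundary:

$$(\bar{x}_1 - \bar{x}_2)^t S_{pooled}^{-1} x = \frac{1}{2} (\bar{x}_1 - \bar{x}_2)^t S_{pooled}^{-1} (\bar{x}_1 + \bar{x}_2)$$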
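The two-class rule can be sketched numerically. The following is a minimal illustration, assuming NumPy, simulated two-dimensional data, and more samples than variables so that the pooled covariance is invertible (which does not hold for raw p >> N expression data). The function name `fisher_lda_two_class` is hypothetical.

```python
import numpy as np

def fisher_lda_two_class(X1, X2):
    """Return the Fisher direction w and threshold c for two classes.

    X1, X2: arrays of shape (n1, p) and (n2, p), one sample per row.
    A point x is assigned to class 1 when w @ x >= c.
    """
    m1, m2 = X1.mean(axis=0), X2.mean(axis=0)
    n1, n2 = len(X1), len(X2)
    # Pooled within-class covariance with n1 + n2 - 2 degrees of freedom.
    S1 = np.cov(X1, rowvar=False)
    S2 = np.cov(X2, rowvar=False)
    S_pooled = ((n1 - 1) * S1 + (n2 - 1) * S2) / (n1 + n2 - 2)
    # w = S_pooled^{-1} (m1 - m2); solve() avoids forming the inverse.
    w = np.linalg.solve(S_pooled, m1 - m2)
    # Threshold: projection of the midpoint between the class means.
    c = w @ (m1 + m2) / 2.0
    return w, c

# Simulated data (an assumption for illustration, not from the lecture).
rng = np.random.default_rng(0)
X1 = rng.normal(loc=[2.0, 0.0], scale=1.0, size=(50, 2))
X2 = rng.normal(loc=[-2.0, 0.0], scale=1.0, size=(50, 2))
w, c = fisher_lda_two_class(X1, X2)
pred1 = X1 @ w >= c          # True means "assigned to class 1"
pred2 = X2 @ w >= c
accuracy = (pred1.sum() + (~pred2).sum()) / (len(X1) + len(X2))
```

By construction the projected class means fall on opposite sides of the threshold `c`, since `w @ (m1 - m2)` is a positive quadratic form.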

EDR space

Now we start talking about regression. The data are $\{x_i, y_i\}$.

Is dimension reduction on the X matrix alone helpful here? Possibly, if the dimension reduction preserves the essential structure of Y|X. This is questionable, because a reduction based on X alone never uses Y.

Effective Dimension Reduction --- reduce the

dimension of X without losing information which is

essential to predict Y.

EDR space

The model: Y is predicted by a set of linear

combinations of X.
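For reference, the model can be sketched in the notation used in these slides (g and β1, ……, βK). The noise term ε, taken to be independent of X, is an assumption borrowed from the usual SIR formulation and is not stated here.

```latex
Y = g\left(\beta_1^t X,\ \beta_2^t X,\ \ldots,\ \beta_K^t X,\ \epsilon\right)
```

Under this form, Y depends on the p-dimensional X only through the K projections, so K < p is what makes the reduction worthwhile.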

If g() is known, this is not very different from a generalized linear model.

For dimension reduction purposes, is there a scheme that can work on almost any g(), without knowledge of its actual form?

EDR space

The general model encompasses many models as special

cases:

EDR space

Under this general model,

The space B generated by β1, β2, ……, βK is

called the e.d.r. space.

Reducing to this sub-space causes no loss of

information regarding predicting Y.

Similar to factor analysis, the subspace B is

identifiable, but the vectors aren’t.

Any non-zero vector in the e.d.r. space is called

an e.d.r. direction.

EDR space

This equation assumes almost the weakest form, to

reflect the hope that a low-dimensional projection of

a high-dimensional regressor variable contains most

of the information that can be gathered from a

sample of modest size.

It doesn't impose any structure on how the projected regressor variables affect the output variable.

Most regression models assume K=1, plus additional

structures on g().

EDR space

The philosophical point of Sliced Inverse Regression:

the estimation of the projection directions can be a

more important statistical issue than the estimation of

the structure of g() itself.

After finding a good e.d.r. space, we can project the data to this smaller space. Then we are in a better position to identify what should be pursued further: model building, response surface estimation, cluster analysis, heteroscedasticity analysis, variable selection, ……

SIR

Sliced Inverse Regression.

In regular regression, our interest is the conditional

density h(Y|X). Most important is E(Y|X) and var(Y|X).

SIR treats Y as the independent variable and X as the dependent variable.

Given Y=y, what values will X take?

This takes us from a p-dimensional problem (subject

to curse of dimensionality) back to a 1-dimensional

curve-fitting problem:

E(Xi|Y), i=1,…, p

SIR

Compute the covariance matrix of the slice means of X, weighted by the slice sizes.

Find the SIR directions by conducting the eigenvalue decomposition of this matrix with respect to the sample covariance of the Xi's.
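The eigen-decomposition step can be sketched as follows. This is a minimal illustration, assuming NumPy, equal-size slices on the sorted response, and n > p so that the sample covariance of X is invertible. The names `sir_directions`, `sigma_eta` and `sigma_x` are hypothetical, and the data are simulated with a single true direction.

```python
import numpy as np

def sir_directions(X, y, n_slices=10, n_directions=1):
    """Estimate e.d.r. directions by Sliced Inverse Regression.

    X: (n, p) predictors; y: (n,) responses.
    Returns (eigenvalues, directions) with directions as columns.
    """
    n, p = X.shape
    x_bar = X.mean(axis=0)
    sigma_x = np.cov(X, rowvar=False)

    # Slice the observations by sorted response into near-equal groups.
    slices = np.array_split(np.argsort(y), n_slices)

    # Covariance of the slice means of X, weighted by slice proportions.
    sigma_eta = np.zeros((p, p))
    for idx in slices:
        d = X[idx].mean(axis=0) - x_bar
        sigma_eta += (len(idx) / n) * np.outer(d, d)

    # Solve sigma_eta b = lambda sigma_x b by whitening with the Cholesky
    # factor of sigma_x, which gives a symmetric eigenproblem.
    L = np.linalg.cholesky(sigma_x)
    L_inv = np.linalg.inv(L)
    evals, V = np.linalg.eigh(L_inv @ sigma_eta @ L_inv.T)
    top = np.argsort(evals)[::-1][:n_directions]
    return evals[top], L_inv.T @ V[:, top]

# Simulated example: Y depends on X only through one direction b_true.
rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 5))
b_true = np.array([1.0, 1.0, 0.0, 0.0, 0.0]) / np.sqrt(2.0)
y = (X @ b_true) ** 3 + 0.1 * rng.normal(size=2000)
evals, B = sir_directions(X, y)
b_hat = B[:, 0] / np.linalg.norm(B[:, 0])
cosine = abs(b_hat @ b_true)   # near 1 when the direction is recovered
```

Note that the function g (here a cube) is never used by the estimator; only the ordering of y enters, through the slicing.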

SIR

An example response

surface found by SIR.

PLS

Finding latent factors in X that can predict Y.

X is multi-dimensional, Y can be either a random

variable or a random vector.

The model will look like:

where Tj is a linear combination of X

PLS is suitable for handling the p >> N situation.

PLS

Data:

Goal:

PLS

Solution:

$a_{k+1}$ is the (k+1)th eigenvector of

Alternatively,

The PLS components minimize

Can be solved by iterative regression.
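One common iterative formulation for a single response is the NIPALS-style PLS1 algorithm. The sketch below is an assumption about what "iterative regression" refers to, not a reconstruction of the slide's formulas. It assumes NumPy, and the function name `pls1_coefficients` is hypothetical.

```python
import numpy as np

def pls1_coefficients(X, y, n_components):
    """Regression coefficients (for centered X) from PLS1 with deflation."""
    Xk = X - X.mean(axis=0)
    yk = y - y.mean()
    W, P, q = [], [], []
    for _ in range(n_components):
        # Weight vector: direction in X most covarying with the residual y.
        w = Xk.T @ yk
        w = w / np.linalg.norm(w)
        t = Xk @ w                    # latent score T_j, a combination of X
        tt = t @ t
        p = Xk.T @ t / tt             # regress each column of X on t
        q_k = (yk @ t) / tt           # regress y on t
        Xk = Xk - np.outer(t, p)      # deflate X
        yk = yk - q_k * t             # deflate y
        W.append(w)
        P.append(p)
        q.append(q_k)
    W, P, q = np.array(W).T, np.array(P).T, np.array(q)
    # Map the latent-factor regression back to the original variables.
    return W @ np.linalg.solve(P.T @ W, q)

# Simulated p >> N example (an assumption for illustration).
rng = np.random.default_rng(1)
X_wide = rng.normal(size=(20, 200))
y_wide = X_wide[:, 0] - X_wide[:, 1] + 0.1 * rng.normal(size=20)
coef = pls1_coefficients(X_wide, y_wide, n_components=3)
y_fit = y_wide.mean() + (X_wide - X_wide.mean(axis=0)) @ coef
```

When N > p and all p components are kept, this procedure reproduces the ordinary least-squares fit, which is a convenient sanity check.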

PLS

Example: PLS vs. PCA in regression:

Y is related to X1

Classification Tree

An example classification tree.

Classification Trees

Every split (mostly binary) should increase node purity.

The drop in impurity is the criterion for variable selection at each split.

The tree should not be overly complex; it may need to be pruned.

Classification tree

Issues:

How many splits should be allowed at a node?

Which property to use at a node?

When to stop splitting a node and declare it a “leaf”?

How to adjust the size of the tree?

Tree size <-> model complexity.

Too large a tree: overfitting.

Too small a tree: fails to capture the underlying structure.

How to assign the classification decision at each leaf?

Missing data?

Classification Tree

Binary split.

Classification Tree

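To decide which split criterion to use, we need a measure of node impurity. Below, $P(\omega_j)$ is the fraction of samples at node N that belong to class j.

Entropy:

$$i(N) = -\sum_j P(\omega_j) \log_2 P(\omega_j)$$

Misclassification:

$$i(N) = 1 - \max_j P(\omega_j)$$

Gini impurity:

$$i(N) = \sum_{i \neq j} P(\omega_i) P(\omega_j) = 1 - \sum_j P^2(\omega_j)$$

(Expected error rate if the class label is assigned at random according to the class proportions at the node.)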
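The three impurity measures are easy to compare numerically. The following is a small sketch using only the Python standard library; the function names are hypothetical, and each function takes the list of class proportions at a node.

```python
import math

def entropy(probs):
    """Entropy impurity in bits; terms with zero probability contribute 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def misclassification(probs):
    """Error rate when the node predicts its most frequent class."""
    return 1.0 - max(probs)

def gini(probs):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    return 1.0 - sum(p * p for p in probs)

balanced = [0.5, 0.5]   # maximally impure two-class node
pure = [1.0, 0.0]       # all samples from one class
```

All three measures are zero at a pure node and largest when the classes are equally mixed; they differ in how sharply they penalize intermediate mixtures.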

Classification Tree

Growing the tree.

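Greedy search: at every step, choose the query that decreases the impurity as much as possible.

$$\Delta i(N) = i(N) - P_L \, i(N_L) - (1 - P_L) \, i(N_R)$$

Here $P_L$ is the fraction of samples at node N sent to the left child $N_L$, and $N_R$ is the right child.

For a real-valued predictor, gradient descent may be used to find the optimal cut value.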
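The greedy step for one real-valued predictor can be sketched as follows. Instead of gradient descent, this illustration swaps in an exhaustive scan over midpoints between consecutive sorted values, a simpler alternative that is feasible for small nodes. It uses Gini impurity and only the standard library; `best_split` and `gini_from_labels` are hypothetical names.

```python
from collections import Counter

def gini_from_labels(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(values, labels):
    """Return (cut, impurity_drop) maximizing the drop in Gini impurity.

    Candidate cuts are midpoints between consecutive distinct values.
    Returns (None, 0.0) if no split reduces the impurity.
    """
    parent = gini_from_labels(labels)
    pairs = sorted(zip(values, labels), key=lambda pair: pair[0])
    n = len(pairs)
    best_cut, best_drop = None, 0.0
    for k in range(1, n):
        if pairs[k - 1][0] == pairs[k][0]:
            continue  # cannot cut between identical values
        left = [lab for _, lab in pairs[:k]]
        right = [lab for _, lab in pairs[k:]]
        p_left = k / n
        drop = (parent
                - p_left * gini_from_labels(left)
                - (1 - p_left) * gini_from_labels(right))
        if drop > best_drop:
            best_cut = (pairs[k - 1][0] + pairs[k][0]) / 2
            best_drop = drop
    return best_cut, best_drop

# Toy node: one predictor that separates the classes perfectly.
values = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
labels = ["control"] * 3 + ["disease"] * 3
cut, drop = best_split(values, labels)
```

In a full tree this search would be repeated over every candidate predictor at the node, and the predictor and cut with the largest drop would define the split.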

When to stop?

- Stop when reduction in impurity is smaller than a threshold.

- Stop when the leaf node is too small.

- Stop when a global criterion is met: $\alpha \cdot \text{size} + \sum_{\text{leaf nodes}} i(N)$

- Hypothesis testing.

- Cross-validation.

- Fully grow and then prune.

Classification Tree

Pruning the tree.

- Merge leaves when the resulting increase in impurity is not severe.

- Cost-complexity pruning allows elimination of a branch in a single step.

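When priors and costs are present, adjust training by adjusting the Gini impurity:

$$i(N) = \sum_{ij} \lambda_{ij} P(\omega_i) P(\omega_j)$$

where $\lambda_{ij}$ is the cost associated with confusing classes i and j.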

Assigning class label to a leaf.

- No prior: take the class with highest frequency at the node.

- With prior: weigh the frequency by prior.

- With loss function: always minimize the loss.
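Assigning a label under a loss function can be sketched as choosing the class with the smallest expected loss at the leaf. The example below uses only the standard library. The function name `leaf_label`, the class names, and the cost values are hypothetical, and the class proportions are assumed to be already weighted by priors if priors are in use.

```python
def leaf_label(class_probs, loss=None):
    """Pick the label that minimizes expected loss at a leaf.

    class_probs: dict mapping class -> proportion at the leaf.
    loss: dict mapping (true_class, predicted_class) -> cost. A missing
        entry, or loss=None, means 0 for a correct label and 1 otherwise.
    """
    def cost(true, pred):
        default = 0.0 if true == pred else 1.0
        if loss is None:
            return default
        return loss.get((true, pred), default)

    def expected_loss(pred):
        return sum(p * cost(true, pred) for true, p in class_probs.items())

    return min(class_probs, key=expected_loss)

probs = {"control": 0.7, "disease": 0.3}
# Hypothetical costs: missing a disease case is 5x worse than a false alarm.
costs = {("disease", "control"): 5.0, ("control", "disease"): 1.0}
label_plain = leaf_label(probs)          # majority vote under 0-1 loss
label_cost = leaf_label(probs, costs)    # expected losses: 1.5 vs 0.7
```

With 0-1 loss the rule reduces to the majority class; with asymmetric costs the minority class can win, as it does here.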

Classification Tree

Choice of features.

Classification Tree

Multivariate tree.

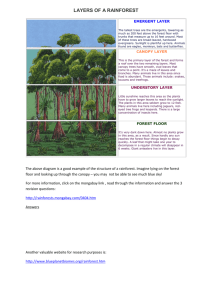

Bootstrapping

Directly assess uncertainty from the training data.

Basic thinking: assuming the empirical distribution of the data approximates the true underlying density, resampling from the data will give us an idea of the uncertainty caused by sampling.

Bagging

“Bootstrap aggregation.”

Resample the training dataset.

Build a prediction model on each resampled dataset.

Average the prediction.

$$\hat{f}_{bag}(x) = \frac{1}{B} \sum_{b=1}^{B} \hat{f}^{*b}(x)$$

It is a Monte Carlo estimate of $E_{\hat{P}} \hat{f}^{*}(x)$, where $\hat{P}$ is the empirical distribution putting equal probability 1/N on each of the data points.

Bagging only differs from the original estimate $\hat{f}(x)$ when f() is a non-linear or adaptive function of the data. When f() is a linear function of the data, the bagged estimate converges to the original estimate as B grows.

Trees are good candidates for bagging: each bootstrap tree will differ in structure.
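The resample, fit, and average steps can be sketched with a deliberately tiny base learner. The example below uses a one-split regression stump as a stand-in for a full tree, and only the standard library. The names `fit_stump` and `bagged_predictor` and the toy data are hypothetical.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Fit a one-split regression stump by least squares; return a predictor."""
    pairs = sorted(zip(xs, ys))
    best = None
    for k in range(1, len(pairs)):
        if pairs[k - 1][0] == pairs[k][0]:
            continue  # cannot cut between identical x values
        left = [y for _, y in pairs[:k]]
        right = [y for _, y in pairs[k:]]
        m_left, m_right = mean(left), mean(right)
        sse = (sum((y - m_left) ** 2 for y in left)
               + sum((y - m_right) ** 2 for y in right))
        if best is None or sse < best[0]:
            cut = (pairs[k - 1][0] + pairs[k][0]) / 2
            best = (sse, cut, m_left, m_right)
    if best is None:              # all x identical: predict the mean
        m = mean(ys)
        return lambda x: m
    _, cut, m_left, m_right = best
    return lambda x: m_left if x <= cut else m_right

def bagged_predictor(xs, ys, n_boot=50, seed=0):
    """Average stumps fitted on bootstrap resamples of the training data."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]   # sample with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)

# Toy step function: the response jumps from 0 to 1 at x = 10.
xs = [float(i) for i in range(20)]
ys = [0.0 if x < 10 else 1.0 for x in xs]
bagged = bagged_predictor(xs, ys)
```

Each bootstrap stump places its cut at a slightly different point near the jump, so the averaged prediction is smoother around x = 10 than any single stump.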

Bagging trees

Bagged trees are of different structure.

Bagging trees

Error curves.

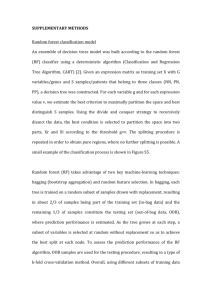

Random Forest

Bagging can be seen as a method to reduce variance of an

estimated prediction function. It mostly helps high-variance,

low-bias classifiers.

Comparatively, boosting builds weak classifiers one by one, allowing the collection to evolve in the right direction.

Random forest is a substantial modification to bagging –

build a collection of de-correlated trees.

- Similar performance to boosting

- Simpler to train and tune compared to boosting

Random Forest

The intuition – the average of random variables.

B i.i.d. random variables, each with variance $\sigma^2$: the mean has variance $\sigma^2 / B$.

B i.d. (identically distributed, but not necessarily independent) random variables, each with variance $\sigma^2$ and pairwise correlation $\rho$: the mean has variance $\rho \sigma^2 + \frac{1 - \rho}{B} \sigma^2$.

Bagged trees are i.d. samples.

Random forest aims at reducing the correlation to reduce variance. This is achieved by randomly selecting the candidate variables at each split.
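As a worked step, the variance of the mean of B identically distributed variables with common variance σ² and pairwise correlation ρ follows from expanding the covariance terms. The symbols $X_b$, $\sigma^2$ and $\rho$ are notation assumed here.

```latex
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B} X_b\right)
  = \frac{1}{B^2}\left[\sum_{b=1}^{B}\operatorname{Var}(X_b)
      + \sum_{b \neq b'} \operatorname{Cov}(X_b, X_{b'})\right]
  = \frac{1}{B^2}\left[B\sigma^2 + B(B-1)\rho\sigma^2\right]
  = \rho\sigma^2 + \frac{1-\rho}{B}\sigma^2
```

Setting ρ = 0 recovers σ²/B. As B grows the second term vanishes and the variance levels off at ρσ², so adding more trees cannot help beyond that floor; only lowering ρ can.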

Random Forest

Example comparing RF to boosted trees.

Random Forest

Benefit of RF – out of bag (OOB) sample cross validation error.

For sample i, find its RF error from only trees built from samples where

sample i did not appear.

The OOB error rate is close to N-fold cross validation error rate.

Unlike many other nonlinear estimators, RF can be fit in a single sequence, with the OOB error computed along the way. Stop growing the forest when the OOB error stabilizes.
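The OOB bookkeeping can be sketched independently of the tree-growing details. The example below assumes a bagged ensemble with a one-split classification stump standing in for a full randomized tree, and uses only the standard library. The names `oob_error` and `fit_stump` and the toy data are hypothetical.

```python
import random
from collections import Counter

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_stump(xs, ys):
    """Depth-1 tree on one predictor: majority label on each side of a cut."""
    pairs = sorted(zip(xs, ys), key=lambda pair: pair[0])
    best = None
    for k in range(1, len(pairs)):
        if pairs[k - 1][0] == pairs[k][0]:
            continue
        left = [lab for _, lab in pairs[:k]]
        right = [lab for _, lab in pairs[k:]]
        l_lab, r_lab = majority(left), majority(right)
        errors = (sum(lab != l_lab for lab in left)
                  + sum(lab != r_lab for lab in right))
        if best is None or errors < best[0]:
            best = (errors, (pairs[k - 1][0] + pairs[k][0]) / 2, l_lab, r_lab)
    if best is None:
        lab = majority(ys)
        return lambda x: lab
    _, cut, l_lab, r_lab = best
    return lambda x: l_lab if x <= cut else r_lab

def oob_error(xs, ys, fit, n_trees=100, seed=0):
    """Error rate where each sample is voted on only by trees that never saw it."""
    rng = random.Random(seed)
    n = len(xs)
    votes = [Counter() for _ in range(n)]
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        in_bag = set(idx)
        predict = fit([xs[i] for i in idx], [ys[i] for i in idx])
        for i in range(n):
            if i not in in_bag:               # sample i is out of bag here
                votes[i][predict(xs[i])] += 1
    scored = [i for i in range(n) if votes[i]]
    wrong = sum(votes[i].most_common(1)[0][0] != ys[i] for i in scored)
    return wrong / len(scored)

xs = [float(i) for i in range(30)]
ys = ["control" if x < 15 else "disease" for x in xs]
err = oob_error(xs, ys, fit_stump)
```

Because the votes accumulate tree by tree, the OOB error can be tracked as the ensemble grows without setting aside a separate validation set.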

Random Forest

Variable importance – find the most relevant predictors.

At every split of every tree, a variable contributes to the improvement of the impurity measure.

Accumulating the reduction of i(N) for every variable gives a measure of the relative importance of the variables.

The predictors that appear most often at split points, and lead to the largest reduction of impurity, are the ones that are important.

Another method: permute the values of a predictor in the OOB samples of every tree; the resulting decrease in prediction accuracy is also a measure of importance. Accumulate it over all trees.
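The permutation idea can be sketched for a single fitted predictor and a single held-out sample set. In a random forest the same computation would be repeated on each tree's OOB samples and accumulated, which is omitted here. The function name `permutation_importance`, the toy data, and the toy classifier are hypothetical, and only the standard library is used.

```python
import random

def permutation_importance(predict, rows, labels, column, seed=0):
    """Drop in accuracy after shuffling one predictor column.

    predict: function mapping a row (list of values) to a label.
    rows: held-out samples, e.g. the OOB samples of one tree.
    """
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == t for r, t in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    shuffled = [row[column] for row in rows]
    rng.shuffle(shuffled)                      # break the link to the label
    permuted = [row[:column] + [v] + row[column + 1:]
                for row, v in zip(rows, shuffled)]
    return baseline - accuracy(permuted)

# Toy data: the label depends on column 0 only; column 1 is irrelevant.
rows = [[float(i - 20) + 0.5, float(i % 3)] for i in range(40)]
labels = [row[0] > 0 for row in rows]

def toy_classifier(row):
    return row[0] > 0

imp_relevant = permutation_importance(toy_classifier, rows, labels, column=0)
imp_irrelevant = permutation_importance(toy_classifier, rows, labels, column=1)
```

Shuffling the irrelevant column leaves every prediction unchanged, so its importance is exactly zero, while shuffling the relevant column drives accuracy toward chance.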
