Differences-in-Differences and A (Very) Brief Introduction to

Regression

Discontinuity/Event Studies

Methods of Economic

Investigation

Lecture 21

Last Time

Non-Stationarity

Orders of Integration

Differencing

Unit Root Tests

Estimating Causality in Time Series

A brief introduction to forecasting

Impulse Response Functions

Today’s Class

Returning to Causal Effects

Brief return to Impulse Response Functions

Event Studies/Regression Discontinuity

Testing for Structural Breaks

What happens when there’s a “shock”?

Source: Cochrane, QJE (1994)

Impulse Response Function and Causality

Impulse Response Function:

Can look at whether, starting at time t, there was a change

Don’t know if shock (or treatment) was

independent.

The issue is the counterfactual

What would have happened in the future if the

shock had not occurred

OR

What would the past have looked like, in a world

where the treatment existed

Return to Selection Bias

Back to old selection bias problem:

Shock occurs in time t and we observe a

change in y

Maybe y would have changed anyway at time t

$E[Y_t \mid \text{shock} = 1] - E_{t-1}[Y_t \mid \text{shock} = 0]$

$= \{E[Y_t \mid \text{shock} = 1] - E[Y_t \mid \text{shock} = 0]\}$

$+ \{E[Y_t \mid \text{shock} = 0] - E_{t-1}[Y_t \mid \text{shock} = 0]\}$

The issue is that “shocks”/treatments are

not randomly assigned to a time period
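A small numeric sketch of the decomposition above may help. All numbers are hypothetical and chosen only for illustration: `forecast_no_shock` stands in for E_{t-1}[Y_t | shock = 0], and `y_no_shock` for the counterfactual E[Y_t | shock = 0], which is never observed in practice.

```python
# Hypothetical values (assumptions for illustration, not from the lecture)
forecast_no_shock = 10.0   # E_{t-1}[Y_t | shock = 0]: what we expected at t-1
y_no_shock = 10.5          # E[Y_t | shock = 0]: y would have moved anyway
y_shock = 12.5             # E[Y_t | shock = 1]: what we observe after the shock

naive = y_shock - forecast_no_shock        # observed change around the shock
causal = y_shock - y_no_shock              # first term: the causal effect
selection = y_no_shock - forecast_no_shock # second term: change that occurs anyway

print(f"naive = {naive}, causal = {causal}, selection bias = {selection}")
```

The naive comparison equals the causal effect only when the second term is zero, which is what random assignment of the shock to a time period would deliver.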

Basic Idea

Sometimes something changes sharply

with time, e.g.

your sentence for a criminal offence is higher if

you are above a certain age (an ‘adult’)

The interest rate changes suddenly/surprisingly

after a meeting

There is a change in the CEO/manager at a

firm

Do outcomes also change sharply?

Not just time series

Doesn’t have to be time; it could be

some other dimension with a

discontinuous change

You get a scholarship if you get above a certain

mark in an exam,

you get given remedial education if you get below

a certain level,

a policy is implemented if it gets more than 50% of

the vote in a ballot,

All these are potential applications of the

‘regression discontinuity’ design

Treatment Assignment

assignment to treatment (T) depends in a

discontinuous way on some observable variable t

simplest form has assignment to treatment being based

on t being above some critical value t0

t0 is the “discontinuity” or “break date”

method of assignment to treatment is the very

opposite to that in random assignment

it is a deterministic function of some observable variable.

assignment to treatment is as ‘good as random’ in the

neighbourhood of the discontinuity

The basic idea: there is no reason outcomes should be

discontinuous but for the treatment assignment rule

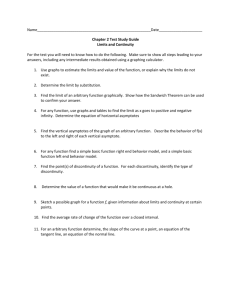

Basics of Estimation

Suppose average outcome in absence of

treatment conditional on t is:

$E[Y \mid t, T = 0] = g_0(t)$

Suppose average outcome with treatment

conditional on t is:

$E[Y \mid t, T = 1] = g_1(t)$

Treatment effect conditional on t is

$g_1(t) - g_0(t)$

This is ‘full outcomes’ approach

How can we estimate this?

Basic idea is to compare outcomes just to

the left and right of discontinuity i.e. to

compare:

$E[Y \mid t_0 < t \le t_0 + \delta] - E[Y \mid t_0 - \delta \le t \le t_0]$

As δ→0 this comes to:

$g_1(t_0) - g_0(t_0)$

i.e. treatment effect at t = t0
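The comparison of outcomes just to the left and right of the discontinuity can be sketched as a difference in means within a window of width δ. The function name `rd_difference_in_means`, the simulated data, and the choice δ = 0.02 are all assumptions made for illustration.

```python
import numpy as np

def rd_difference_in_means(t, y, t0, delta):
    """Mean outcome just to the right of t0 minus mean outcome just to the left."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    right = (t > t0) & (t <= t0 + delta)    # treated side of the window
    left = (t >= t0 - delta) & (t <= t0)    # untreated side of the window
    if not right.any() or not left.any():
        raise ValueError("no observations on one side of t0 within the window")
    return y[right].mean() - y[left].mean()

# Simulated data (an assumption, not from the lecture): true jump of 1.0 at t0 = 0.5
rng = np.random.default_rng(0)
n = 20_000
t = rng.uniform(0.0, 1.0, n)
t0, true_jump = 0.5, 1.0
y = 2.0 * t + true_jump * (t > t0) + rng.normal(0.0, 0.1, n)

estimate = rd_difference_in_means(t, y, t0, delta=0.02)
print(f"estimated treatment effect at t0: {estimate:.3f}")
```

With a narrow window only a small share of the sample is used, which is the precision cost discussed next.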

Comments

Want to compare outcomes that are

just on either side of the discontinuity

difference in means between these two groups

is an estimate of the treatment effect at the

discontinuity

says nothing about the treatment effect away

from the discontinuity

An important assumption is that the underlying

effect of t on outcomes is continuous, so the only

reason for discontinuity is treatment effect

Now introduce treatment

[Figure: two panels plotting E(y│t) against t, each with t0 marked. Panel titles: “World with No Treatment” and “World with Treatment”; β is labelled at t0.]

The procedure in practice

If we take the process described above literally,

we should choose a value of δ that is very

small

This will result in a small number of

observations

Estimate may be consistent but precision will

be low

desire to increase the sample size leads one to

choose a larger value of δ

Dangers

If δ is not very small then we may not

estimate just the treatment effect

Remember the picture

As one increases δ the measure of the

treatment effect will get larger. This is

spurious so what should one do about it?

The basic idea is that one should control for

the underlying outcome functions.
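The danger can be seen in a simulation. The sketch below assumes an outcome that rises linearly in t with a true jump of 1.0 at t0; the data-generating process and the values of δ are hypothetical. It computes the simple difference in means for increasingly wide windows, without controlling for the underlying outcome function.

```python
import numpy as np

# Simulated data (assumed for illustration): outcome rises with t, true jump 1.0 at t0
rng = np.random.default_rng(42)
n = 50_000
t = rng.uniform(0.0, 1.0, n)
t0, true_jump = 0.5, 1.0
y = 2.0 * t + true_jump * (t > t0) + rng.normal(0.0, 0.1, n)

def window_difference(delta):
    """Difference in mean outcomes within delta of t0 (right minus left)."""
    right = (t > t0) & (t <= t0 + delta)
    left = (t >= t0 - delta) & (t <= t0)
    return y[right].mean() - y[left].mean()

deltas = [0.05, 0.2, 0.4]
estimates = [window_difference(d) for d in deltas]
for d, est in zip(deltas, estimates):
    print(f"delta = {d:.2f}: estimate = {est:.3f} (true jump = {true_jump})")
```

In this setup the wider windows attribute part of the upward slope in t to the treatment, so the estimate moves further from the true jump as δ grows.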

If underlying relationship linear

If the linear relationship is the correct

specification then one could estimate the

ATE simply by estimating the regression:

$y = \beta_0 + \beta_1 T + \beta_2 t + \varepsilon$

where T is an indicator which is 1 if t > t0

no good reason to assume relationship is

linear

this may cause problems
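As a minimal sketch of the linear specification, the regression of y on a constant, the treatment indicator T and t can be estimated by ordinary least squares. The simulated data and coefficient values are assumptions; the estimate is only as good as the linearity assumption that the slide questions.

```python
import numpy as np

# Simulated data (hypothetical): linear in t with a true treatment effect of 0.8
rng = np.random.default_rng(1)
n = 5_000
t = rng.uniform(0.0, 1.0, n)
t0 = 0.5
T = (t > t0).astype(float)                 # indicator: 1 if t > t0
y = 1.0 + 0.8 * T + 2.0 * t + rng.normal(0.0, 0.2, n)

# OLS of y on [1, T, t]; the coefficient on T estimates the jump at t0
X = np.column_stack([np.ones(n), T, t])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
beta0, beta1, beta2 = coef
print(f"estimated treatment effect (coefficient on T): {beta1:.3f}")
```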

Suppose true relationship is:

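[Figure: E(y│t) plotted against t, showing the outcome functions g0(t) and g1(t), with t0 marked.]

Observed relationship between E(y) and t

[Figure: E(y│t) plotted against t, showing g0(t) and g1(t), with t0 marked.]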

Splines

Doesn’t need to be only a level shift

Maybe the parameters all change

Can test changes in the slope and intercept

with interactions in the usual OLS model

Things are trickier in non-linear models

Depends heavily on the correct specification of

the underlying function

Splines allow you to choose a certain type

of function (e.g. linear, quadratic) and

then test if the parameters in the model

changed at the break date t0
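Testing for a change in both intercept and slope with interactions can be sketched as follows. The example assumes a piecewise-linear model in (t − t0), simulated data, and a standard F-test comparing the model with interactions against the model with no break; none of these choices come from the lecture itself.

```python
import numpy as np

def ols_rss(X, y):
    """Return OLS coefficients and the residual sum of squares."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return coef, float(resid @ resid)

# Simulated data (hypothetical): intercept shifts by 0.8 and slope by 1.5 at t0
rng = np.random.default_rng(2)
n = 2_000
t = rng.uniform(0.0, 1.0, n)
t0 = 0.5
x = t - t0                                  # centre t at the break
T = (t > t0).astype(float)
y = 1.0 + 0.5 * x + T * (0.8 + 1.5 * x) + rng.normal(0.0, 0.2, n)

# Unrestricted: intercept and slope may both change at t0 (interaction T * x)
X_unrestricted = np.column_stack([np.ones(n), T, x, T * x])
# Restricted: no break at all
X_restricted = np.column_stack([np.ones(n), x])

coef, rss_u = ols_rss(X_unrestricted, y)
_, rss_r = ols_rss(X_restricted, y)

q = 2                                       # restrictions: no level shift, no slope change
k = X_unrestricted.shape[1]
f_stat = ((rss_r - rss_u) / q) / (rss_u / (n - k))
print(f"level shift = {coef[1]:.3f}, slope change = {coef[3]:.3f}, F = {f_stat:.1f}")
```

Centring t at t0 makes the coefficient on T the jump at the break itself rather than at t = 0.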

Non-Linear Relationship

one would want to control for a different

relationship between y and t for the

treatment and control groups

Another problem is that the outcome

functions might not be linear in t

it could be quadratic or something else.

Discontinuity may not be in the level, it may

be in the underlying function

The trade-offs

Choosing a value of δ:

A larger δ means more precision from a larger

sample size

but a greater risk of misspecification of the underlying

outcome function

Choosing a more flexible underlying functional form:

the cost is some precision

intuitively a flexible functional form can get

closer to approximating a discontinuity in the

outcomes

In practice

it is usual for the researcher to summarize

all the data in a graph

Should be able to see a change in the outcome at t0

get some idea of the appropriate functional

forms and how wide a window should be

chosen.

It is always a good idea to investigate the

sensitivity of estimates to alternative

specifications.
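One way to investigate sensitivity is to re-estimate the jump over a grid of window widths and polynomial orders. The helper `rd_estimate`, the grid values, and the simulated quadratic outcome function below are assumptions made for this sketch.

```python
import numpy as np

def rd_estimate(t, y, t0, delta, order):
    """Jump at t0 from a polynomial of the given order fitted separately on each side."""
    keep = np.abs(t - t0) <= delta          # observations inside the window
    x = t[keep] - t0
    T = (x > 0).astype(float)
    columns = [np.ones_like(x), T]
    for p in range(1, order + 1):
        columns += [x**p, T * x**p]         # polynomial term and its interaction with T
    X = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(X, y[keep], rcond=None)
    return coef[1]                          # coefficient on T: jump at t0

# Simulated data (hypothetical): quadratic outcome function, true jump of 0.5
rng = np.random.default_rng(7)
n = 20_000
t = rng.uniform(0.0, 1.0, n)
t0, true_jump = 0.5, 0.5
y = (t - t0) + 4.0 * (t - t0) ** 2 + true_jump * (t > t0) + rng.normal(0.0, 0.1, n)

results = {
    (delta, order): rd_estimate(t, y, t0, delta, order)
    for delta in (0.1, 0.2, 0.4)
    for order in (0, 1, 2)
}
for (delta, order), est in results.items():
    print(f"delta = {delta:.1f}, order = {order}: estimate = {est:.3f}")
```

Order 0 is the plain difference in means. In this simulated example it drifts away from the true jump as the window widens, while the quadratic specification stays close to it.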

Breaks at an unknown date

So far, we’ve assumed that we know when the

break in the series occurred but sometimes we

don’t

Suppose we are interested in the relationship

between x and y, before and after some date τ

yt = xt’β1 + εt , t = 1,…,τ

= xt’β2 + εt , t = τ+1,…,T

Assume the x’s are stationary and weakly exogenous and

the ε’s are serially uncorrelated and homoskedastic.

Want to test H0: β1=β2 against β1≠β2

If τ is known: this is well defined

If τ is unknown, and especially if we’re not sure τ exists,

then the null is not well defined

What to do?

(You don’t need to know this for the exam)

In the case where the break date τ is unknown, use the LR

statistic, maximised over the candidate break dates:

$QLR_T = \max_{\tau \in \{\tau_{\min}, \ldots, \tau_{\max}\}} F_T(\tau)$

When τ is unknown, the standard assumptions used to show that

the LR statistic is asymptotically χ2 are not valid here

Andrews (1993) showed that under appropriate

regularity conditions, the QLR statistic has a

“nonstandard limiting distribution”:

$QLR_T \xrightarrow{D} \sup_{r \in [r_{\min}, r_{\max}]} \frac{B_k(r)' B_k(r)}{r(1 - r)}$

Distribution with unknown break

(You don’t need to know this for the exam)

The limiting distribution is a functional of a “Brownian Bridge” and

critical values are calculated as a

function of rmin and rmax

The applied researcher has to choose rmin and

rmax without much guidance.

Think of rmin as the minimum proportion of the sample

that can be in the first subsample

Think of 1 – rmax as the minimum proportion of the

sample that can be in the second subsample.
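The statistic itself is simple to compute, even though its distribution is not. The sketch below takes the maximum of the break-test F-statistics over all candidate break dates between rmin and rmax of the sample. The function name `qlr_statistic`, the trimming values 0.15 and 0.85, and the simulated data are assumptions. The code uses the F form of the statistic and does not compute critical values, which would have to come from the nonstandard distribution described above.

```python
import numpy as np

def rss(X, y):
    """Residual sum of squares from an OLS fit of y on X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return float(resid @ resid)

def qlr_statistic(X, y, r_min=0.15, r_max=0.85):
    """Largest break-test F-statistic over candidate break dates, and its date."""
    n, k = X.shape
    rss_restricted = rss(X, y)              # no break: one beta for the whole sample
    first = int(np.ceil(r_min * n))         # smallest allowed first subsample
    last = int(np.floor(r_max * n))         # largest allowed first subsample
    best_f, best_tau = -np.inf, None
    for tau in range(first, last + 1):      # tau = number of obs before the break
        rss_unrestricted = rss(X[:tau], y[:tau]) + rss(X[tau:], y[tau:])
        f = ((rss_restricted - rss_unrestricted) / k) / (rss_unrestricted / (n - 2 * k))
        if f > best_f:
            best_f, best_tau = f, tau
    return best_f, best_tau

# Simulated data (hypothetical): slope changes from 0.5 to 1.5 after observation 120
rng = np.random.default_rng(3)
n, true_break = 200, 120
x = rng.normal(0.0, 1.0, n)
slope = np.where(np.arange(n) < true_break, 0.5, 1.5)
y = 1.0 + slope * x + rng.normal(0.0, 0.5, n)
X = np.column_stack([np.ones(n), x])

qlr, break_hat = qlr_statistic(X, y)
print(f"QLR = {qlr:.1f}, estimated break after observation {break_hat}")
```

The trimming keeps enough observations in each subsample to estimate both sets of coefficients.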

An example - 1

Effect of quarterly earnings announcement

on Market Returns (MacKinlay, 1997)

Outcome: “Abnormal Returns”

Testing for a break

Issues

How big a window should we choose?

Wider window might allow more volatility

which makes it harder to detect jumps

Narrower window has few observations

reducing our ability to detect a small effect

How to model abnormal returns

Different ways to model how expectations of

returns form

This is akin to considering functional form
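As one illustration of modelling expected returns, the sketch below assumes a market model: the stock's return is regressed on the market return over an estimation window before the event, and abnormal returns are the differences between actual and predicted returns in the event window. The market model, the window lengths, the function name `abnormal_returns`, and the simulated returns are all assumptions for illustration and may differ from the specification used in the example.

```python
import numpy as np

def abnormal_returns(stock, market, event_idx, est_len=120, half_window=10):
    """Abnormal and cumulative abnormal returns around event_idx under a market model."""
    start = event_idx - half_window - est_len
    if start < 0 or event_idx + half_window >= len(stock):
        raise ValueError("not enough data around the event")
    est = slice(start, event_idx - half_window)                       # estimation window
    win = slice(event_idx - half_window, event_idx + half_window + 1) # event window
    X = np.column_stack([np.ones(est_len), market[est]])
    (alpha, beta), *_ = np.linalg.lstsq(X, stock[est], rcond=None)
    ar = stock[win] - (alpha + beta * market[win])                    # actual minus expected
    return ar, ar.cumsum()

# Simulated daily returns (hypothetical): a 5% jump on the announcement day
rng = np.random.default_rng(5)
n, event_idx = 300, 200
market = rng.normal(0.0005, 0.01, n)
stock = 0.0002 + 1.2 * market + rng.normal(0.0, 0.005, n)
stock[event_idx] += 0.05

ar, car = abnormal_returns(stock, market, event_idx)
print(f"abnormal return on event day: {ar[10]:.4f}, cumulative over window: {car[-1]:.4f}")
```

Changing `est_len` or `half_window` is the event-study analogue of changing δ: it trades the number of observations against the risk of picking up unrelated movements.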

An Example – 2 (Micro Example)

Lemieux and Milligan “Incentive Effects of

Social Assistance: A regression

discontinuity approach”, Journal of

Econometrics, 2008

In Quebec before 1989 childless benefit

recipients received higher benefits when they

reached their 30th birthday

Does this affect employment rates?

The Picture

The Estimates

Issues

What window to choose

Close to 30 years old? Not many people on

social assistance

Note that the more flexible is the

underlying relationship between

employment rate and age, the less precise

is the estimate

Underlying function can explain more jumps if

it’s got more curvature

Splines can also explain a lot.

Next Time

Multivariate time series

Cointegration

State-space form

Multiple/Simultaneous Equation Models