Driven By Data: Teacher Training Workshop

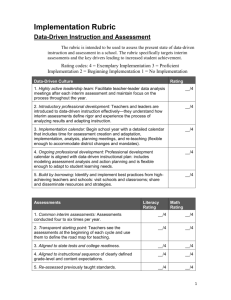

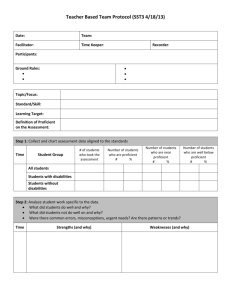

Data-Driven Instruction & Assessment
Paul Bambrick-Santoyo

P1 [Figure: NY State Public School ELA 4th Performance vs. Free-Reduced Rates. Vertical axis: Pct. Proficient (10% to 100%); horizontal axis: Pct. Free-Reduced Lunch (10% to 100%).]

P2 [Figure: NY State Public School ELA 4th Performance vs. Free-Reduced Rates. Vertical axis: Pct. Proficient (10% to 100%); horizontal axis: Pct. Free-Reduced Lunch (10% to 100%).]

P3 Case Study: Springsteen Charter School, Part 1
• What did Jones do well in his attempt to improve mathematics achievement?
• What went wrong in his attempt to do data-driven decision making?
• As the principal at Springsteen, what would be your FIRST STEPS in the upcoming year to respond to this situation?

P4 Man on Fire:
• What were the key moments in Creasy’s attempt to help the girl (Pita)?
• What made Creasy’s analysis effective?

P5 ASSESSMENT ANALYSIS I
PART 1—GLOBAL IMPRESSIONS:
Global conclusions you can draw from the data:
• How well did the class do as a whole?
• What are the strengths and weaknesses in the standards: where do we need to work the most?
• How did the class do on old vs. new standards? Are they forgetting or improving on old material?
• How were the results in the different question types (multiple choice vs. open-ended, reading vs. writing)?
• Who are the strong/weak students?

P6 ASSESSMENT ANALYSIS II
PART 2—DIG IN:
• “Squint:” Bombed questions—did students all choose same wrong answer? Why or why not?
• Compare similar standards: Do results in one influence the other?
• Break down each standard: Did they do similarly on every question or were some questions harder? Why?
• Sort data by students’ scores: Are there questions that separate proficient / non-proficient students?
• Look horizontally by student: Are there any anomalies occurring with certain students?

P7 Teacher-Principal Role Play
ROLE-PLAY ANALYSIS:
• What did you learn about the teachers?
• How did the interim assessment and analysis template change the dynamic of a normal teacher/principal conversation?
• By using this particular assessment and analysis template, what decisions did the principal make about what was important for the student learning at his/her school?

P8 Videos of Teacher-Principal Conference
North Star Assessment Analysis Meetings

P9 Impact of Data-Driven Decision Making
State Test & TerraNova Results 2003-2008

P10 ASSESSMENT GOALS 2003-2007
SAME OVERARCHING GOALS:
• Achieve academic excellence for every student
• Prepare every student for college
SPECIFIC ACHIEVEMENT GOALS:
• MS: 15-point growth in students proficient/higher at each grade level (30% in 5th grade to 90% in 8th grade)
• Long-term: 90/90/90 school

P11 Comparison of 02-03 to 03-04: How one teacher improved

5th Grade 2002-2003 -- Percentage at or above national avg (TerraNova, N=43 students)

| | 2002 5th Grade Pre-Test | 2003 5th grade | CHANGE |
|---|---|---|---|
| Reading | 36.6% | 40.5% | + 3.9 |
| Language | 34.1% | 40.5% | + 6.3 |

5th Grade 2003-2004 -- Percentage at or above national avg (TerraNova, N=42 students)

| | 2003 5th Grade Pre-Test | 2004 5th grade | CHANGE |
|---|---|---|---|
| Reading | 31.0% | 52.4% | + 21.4 |
| Language | 21.4% | 47.6% | + 26.2 |

P12 Comparison of 02-03 to 03-04: How 2nd teacher improved

6th Grade 2002-2003 -- Percentage at or above grade level (TerraNova, N=43 students)

| | 2002 6th Grade Pre-Test | 2003 6th grade | CHANGE |
|---|---|---|---|
| Reading | 53.7% | 29.3% | - 24.4 |
| Language | 51.2% | 48.8% | - 2.4 |

6th Grade 2003-2004 -- Percentage at or above grade level (TerraNova, N=42 students)

| | 2003 5th grade | 2004 6th grade | CHANGE |
|---|---|---|---|
| Reading | 40.5% | 44.2% | + 3.7 |
| Language | 40.5% | 79.1% | + 38.6 |

P13 North Star Academy: NJ State Test Results 2009

P14 NJASK 8—DOWNTOWN MS LITERACY

P15 NJASK 8—DOWNTOWN MS MATH

P16 North Star Middle Schools: Setting the Standard

P17 North Star Elementary: Exploding Expectations
2008-09 TerraNova Exam: Kindergarten--Median National Percentile
[Figure: bar chart of kindergarteners’ median national percentile in Reading, Language, and Math, comparing the K pre-test with the end of kindergarten. Values shown: K pre-test 42.6%, 29.3%, 27.5%; Kindergarten 95.3%, 96.7%, 97.4%.]

P18 HIGH SCHOOL HSPA—ENGLISH
Comparative Data from 2008 HSPA Exam

P19 HIGH SCHOOL HSPA—MATH
Comparative Data from 2008 HSPA Exam

P20 NEW JERSEY HSPA—ENGLISH PROFICIENCY

P21 NEW JERSEY HSPA—MATH PROFICIENCY

P22 Ft. Worthington: Turnaround Through Transparency

P23 Monarch Academy: Vision and Practice

P24

P25 Quick-Write Reflection
• From what you know right now, what are the most important things you would need to launch a data-driven instructional model in your school?

P26 THE FOUR KEYS:
DATA-DRIVEN INSTRUCTION AT ITS ESSENCE:
ASSESSMENTS
ANALYSIS
ACTION
in a Data-driven CULTURE

P27 Power of the Question
Analysis of Assessment Items

P28
1. 50% of 20:
2. 67% of 81:
3. Shawn got 7 correct answers out of 10 possible answers on his science test. What percent of questions did he get correct?
4. J.J. Redick was on pace to set an NCAA record in career free throw percentage. Leading into the NCAA tournament in 2004, he made 97 of 104 free throw attempts. What percentage of free throws did he make?
5. J.J. Redick was on pace to set an NCAA record in career free throw percentage. Leading into the NCAA tournament in 2004, he made 97 of 104 free throw attempts. In the first tournament game, Redick missed his first five free throws. How far did his percentage drop from before the tournament game to right after missing those free throws?
6. J.J. Redick and Chris Paul were competing for the best free-throw shooting percentage. Redick made 94% of his first 103 shots, while Paul made 47 out of 51 shots.
• Which one had a better shooting percentage?
• In the next game, Redick made only 2 of 10 shots while Paul made 7 of 10 shots. What are their new overall shooting percentages? Who is the better shooter?
• Jason argued that if Paul and J.J. each made the next ten shots, their shooting percentages would go up the same amount. Is this true? Why or why not?

P29
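As a quick arithmetic check on items 4 and 5 above, the short Python sketch below works through the free-throw percentages. It is an illustration only, not part of the workshop materials: the helper name `percent` is hypothetical, and it assumes the five missed shots in item 5 simply add five attempts with no additional makes.

```python
def percent(made: int, attempts: int) -> float:
    """Return made/attempts expressed as a percentage."""
    return 100.0 * made / attempts


# Item 4: 97 makes in 104 attempts before the tournament.
before = percent(97, 104)

# Item 5 (assumption): five more attempts, all missed, so makes stay at 97.
after = percent(97, 104 + 5)

# The drop is measured in percentage points.
drop = before - after

print(f"before: {before:.1f}%  after: {after:.1f}%  drop: {drop:.1f} points")
```

The same helper could be reused for the comparisons in item 6, where the point of the question is that adding identical makes to different numbers of attempts changes the two percentages by different amounts.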
ASSESSMENT BIG IDEAS:
Standards (and objectives) are meaningless until you define how to assess them.
Because of this, assessments are the starting point for instruction, not the end.

P30 POWER OF THE QUESTION—READING:
LITTLE RED RIDING HOOD:
1. What is the main idea?
2. This story is mostly about:
A. Two boys fighting
B. A girl playing in the woods
C. Little Red Riding Hood’s adventures with a wolf
D. A wolf in the forest
3. This story is mostly about:
A. Little Red Riding Hood’s journey through the woods
B. The pain of losing your grandmother
C. Everything is not always what it seems
D. Fear of wolves

P31 ASSESSMENT BIG IDEAS:
In an open-ended question, the rubric defines the rigor.
In a multiple choice question, the options define the rigor.

P32 POWER OF THE QUESTION—GRAMMAR/WRITING
SUBJECT-VERB AGREEMENT
• He _____________ (run) to the store.
• Michael _____________ (be) happy yesterday at the party.
• Find the subject-verb agreement mistake in this sentence:
• Find the grammar mistake in this sentence:
• Find the six grammar and/or punctuation mistakes in this paragraph:

P33 STARTING WITH THE OBJECTIVE:
FOUR DIFFERENT OBJECTIVES FOR SCIENTIFIC METHOD:
1. Review the steps of the Scientific Method
2. Understand the Scientific Method
3. Define the steps of the Scientific Method
4. Use the Scientific Method in an experiment
SMALL GROUP REFLECTION:
What are the differences between each objective?
Think of the simplest and most complex way you could assess each objective. Does it change the rigor of the objective?
P34 ASSESSMENTS:
PRINCIPLES FOR EFFECTIVE ASSESSMENTS:
COMMON INTERIM:
• At least quarterly
• Common across all teachers of the same grade level
TRANSPARENT STARTING POINT:
• Teachers see the assessments in advance
• The assessments define the roadmap for teaching

P35 ASSESSMENTS:
PRINCIPLES FOR EFFECTIVE ASSESSMENTS:
ALIGNED TO:
• State test (format, content, & length)
• Instructional sequence (curriculum)
• College-ready expectations
RE-ASSESSES:
• Standards that appear on the first interim assessment appear again on subsequent interim assessments

P36 ASSESSMENTS: Writing
• RUBRIC: Take a good one, tweak it, and stick with it
• ANCHOR PAPERS: Write/acquire model papers for Proficient and Advanced Proficient that will be published throughout the school & used by teachers
• GRADING CONSENSUS: Grade MANY student papers together to build consensus around expectations with the rubric
• DRAFT WRITING VS. ONE-TIME DEAL: Have a balance

P37 THE FOUR KEYS:
ASSESSMENTS (Interim, Transparent, Aligned, Reassess)
ANALYSIS
ACTION
in a Data-driven CULTURE

P38 Quiz Enhancement—Reflection:
Personal Reflection
• What was hard for me about this exercise (if anything)?
• What are my big takeaways for leading quality assessments in my school?
• What questions do I have and what things do I want to learn to be an even more effective leader in this area?

P39 THE FOUR KEYS:
ASSESSMENTS (Interim, Transparent, Aligned, Reassess)
ANALYSIS
ACTION
in a Data-driven CULTURE

P40

P41 THE FOUR KEYS:
ASSESSMENTS (Interim, Transparent, Aligned, Reassess)
ANALYSIS
ACTION
in a Data-driven CULTURE

P42 Analysis, Revisited
Moving from the “What” to the “Why”

P43 Man on Fire:
• What made Creasy’s analysis effective?
• After a solid analysis, what made Creasy’s action plan effective?
P44 ANALYSIS:
• IMMEDIATE: Ideal 48 hrs, max 1 wk turnaround
• USER-FRIENDLY: Data reports are short but include analysis at question level, standards level and overall
• TEACHER-OWNED analysis
• TEST-IN-HAND analysis: Teacher & instructional leader together
• DEEP: Moves beyond “what” to “why”

P45 THE FOUR KEYS:
ASSESSMENTS (Interim, Transparent, Aligned, Reassess)
ANALYSIS (Quick, User-friendly, Teacher-owned, Test-in-hand, Deep)
ACTION
in a Data-driven CULTURE (Leadership, PD, Calendar, Build by Borrowing)

P46 Drawing Exercise Reflection:
• What made your second round so much more effective?
• Based on this experience, what is important to be an effective teacher at re-teaching and achieving mastery?

P47 Mr. Holland’s Opus:
• What made the difference? How did Lou Russ finally learn to play the drum?
• What changed Mr. Holland’s attitude and actions?

P48 ACTION:
• PLAN new lessons based on data analysis
• ACTION PLAN: Implement what you plan (dates, times, standards & specific strategies)
• ONGOING ASSESSMENT: In-the-moment checks for understanding to ensure progress
• ACCOUNTABILITY: Observe changes in lesson plans, classroom observations, in-class assessments
• ENGAGED STUDENTS: Know end goal, how they did, and what actions they’re taking to improve

P49 THE FOUR KEYS:
ASSESSMENTS (Interim, Transparent, Aligned, Reassess)
ANALYSIS (Quick, User-friendly, Teacher-owned, Test-in-hand, Deep)
ACTION (Action Plan, Ongoing, Accountability, Engaged)
in a Data-driven CULTURE

P50 DATA-DRIVEN CULTURE:
• ACTIVE LEADERSHIP TEAM: Teacher-leader data analysis meetings; maintain focus
• INTRODUCTORY PD: What (assessments) and how (analysis and action)
• CALENDAR: Done in advance with built-in time for assessment, analysis, and action (flexible)

P51 DATA-DRIVEN CULTURE:
• ONGOING PD: Aligned with data-driven calendar: flexible to adapt to student learning needs
• BUILD BY BORROWING: Identify and implement best practices from high-achieving teachers and schools

P52 THE FOUR KEYS:
ASSESSMENTS (Interim, Transparent, Aligned, Reassess)
ANALYSIS (Quick, User-friendly, Teacher-owned, Test-in-hand, Deep)
ACTION (Action Plan, Ongoing, Accountability, Engaged)
in a Data-driven CULTURE (Leadership, PD, Calendar, Build by Borrowing)

P53 Increasing Rigor Using Data-Driven Best Practices:
Review “Increasing Rigor” Document:
• Put a question mark next to activities that you want to understand more deeply in order to implement effectively.
• Put a star next to activities that sound particularly doable for you that you want to implement on a regular basis in your classroom.
Lesson Plan Enhancement:
• Make changes to your lesson plan given this list: Choose the particular enhancements that will help this particular lesson.

P54 Results Meeting Protocol
Effective Group Meeting Strategy

P55 ACTION: RESULTS MEETING
50 MIN TOTAL
• IDENTIFY ROLES: Timer, facilitator, recorder (2 min)
• IDENTIFY OBJECTIVE to focus on (2 min or given)
• WHAT WORKED SO FAR (5 min)
  • [Or: What teaching strategies did you try so far]
• CHIEF CHALLENGES (5 min)
• BRAINSTORM proposed solutions (10 min)
  • [See protocol on next page]
• REFLECTION: Feasibility of each idea (5 min)
• CONSENSUS around best actions (15 min)
  • [See protocol on next page]
• PUT IN CALENDAR: When will the tasks happen? When will the teaching happen? (10 min)

P56 RESULTS MEETING STRUCTURE:
PROTOCOLS FOR BRAINSTORMING/CONSENSUS
PROTOCOL FOR BRAINSTORMING:
• Go in order around the circle: Each person has 30 seconds to share a proposal.
• If you don’t have an idea, say “Pass.”
• No judgments should be made; if you like the idea, when it’s your turn simply say, “I would like to add to that idea by…”
• Even if 4-5 people pass in a row, keep going for the full brainstorming time.
PROTOCOL FOR REFLECTION:
• 1 minute—silent personal/individual reflection on the list: what is doable and what isn’t for each person.
• Go in order around the circle once: Depending on size of group each person has 30-60 seconds to share their reflections.
• If a person doesn’t have a thought to share, say “Pass” and come back to that person later.
• No judgments should be made.

P57 RESULTS MEETING STRUCTURE:
PROTOCOLS FOR BRAINSTORMING/CONSENSUS
PROTOCOL FOR CONSENSUS/ACTION PLAN:
• ID key actions from brainstorming that everyone will agree to implement.
• Make actions as specific as possible within the limited time.
• ID key student/teacher guides or tasks needed to be done to be ready to teach—ID who will do each task.
• Spend remaining time developing concrete elements of lesson plan:
  • Do Now’s
  • Teacher guides (e.g., what questions to ask the students or how to structure the activity)
  • Student guides
  • HW, etc.
NOTE: At least one person (if not two) should be recording everything electronically to send to the whole group

P58 KEY TIPS TO MAKING RESULTS MEETING PRODUCTIVE:
• GET SPECIFIC to the assessment question itself: We can teach 10 lessons on this standard. What’s the set of lessons these students need based on the data?
• AVOID PHILOSOPHICAL DEBATES about theories of Math/Literacy: Focus on the small, specific challenge of the moment. That’s where the change will begin!
• IF GROUP IS TOO LARGE: After presenter is done, split into two groups. You’ll generate more ideas and you can share your conclusions/action plans at the end.

P59 TOPIC CHOICES FOR RESULTS MEETING:
1. K-2: TerraNova Challenging Questions (# 47 is counter-example: a question where students performed very well)
2. 4-6: State Test Challenging Questions/Standards

P60 Dodge Academy: Turnaround Through Transparency

P61 DATA-DRIVEN RESULTS: Greater Newark Academy Charter School
8th Grade GEPA Results

| Year Tested | Language Arts: % Proficient / Adv Proficient | Mathematics: % Proficient / Adv Proficient |
|---|---|---|
| GNA 2004 | 46.3 | 7.3 |

P62 DATA-DRIVEN RESULTS: Greater Newark Academy Charter School
8th Grade GEPA Results

| Year Tested | Language Arts: % Proficient / Adv Proficient | Mathematics: % Proficient / Adv Proficient |
|---|---|---|
| GNA 2004 | 46.3 | 7.3 |
| GNA 2005 | 63.2 | 26.3 |

P63 DATA-DRIVEN RESULTS: Greater Newark Academy Charter School
8th Grade GEPA Results

| Year Tested | Language Arts: % Proficient / Adv Proficient | Mathematics: % Proficient / Adv Proficient |
|---|---|---|
| GNA 2004 | 46.3 | 7.3 |
| GNA 2005 | 63.2 | 26.3 |
| GNA 2006 | 73.5 | 73.5 |

P64 DATA-DRIVEN RESULTS: Greater Newark Academy Charter School
8th Grade GEPA Results

| Year Tested | Language Arts: % Proficient / Adv Proficient | Mathematics: % Proficient / Adv Proficient |
|---|---|---|
| GNA 2004 | 46.3 | 7.3 |
| GNA 2005 | 63.2 | 26.3 |
| GNA 2006 | 73.5 | 73.5 |
| GNA 2007 | 80.1 | 81.8 |

P65 DATA-DRIVEN RESULTS: Greater Newark Academy Charter School
8th Grade GEPA Results

| Year Tested | Language Arts: % Proficient / Adv Proficient | Mathematics: % Proficient / Adv Proficient |
|---|---|---|
| GNA 2004 | 46.3 | 7.3 |
| GNA 2005 | 63.2 | 26.3 |
| GNA 2006 | 73.5 | 73.5 |
| GNA 2007 | 80.1 | 81.8 |
| Difference 2004-07 | + 33.8 | + 74.5 |
| Newark Schools 2006 | 54.5 | 41.5 |
| NJ Statewide 2006 | 82.5 | 71.3 |

P66 Greater Newark Charter: Achievement by Alignment

P67 Chicago International Charter School: Winning Converts

P68 Morell Park Elementary School: Triumph in Planning

P69 Thurgood Marshall Academy Charter High School: Teachers & Leaders Together

P70 REAL QUOTES FROM OUR CHILDREN…
“The teachers use the assessments to become better teachers. They see what they didn’t teach very well and re-teach so we can learn it better. So we end up learning more.”
“I like the assessments because they help me know what I need to work on.
Every time I have something new to learn, and my teacher pushes me to keep learning those new things.”
“My teacher would do anything to help us understand. He knows that science can be a hard subject so he will teach and re-teach the lesson until everyone gets it.”

P71 REAL QUOTES FROM OUR CHILDREN II…
“Mr. G always accepts nothing less than each student’s personal perfection. He is constantly telling us that we owe ourselves only our best work. If you are not understanding something from class, he will make sure you get it before the day is over. He makes sure to come in early in the morning and stays hours after school so that we are able to go to him with anything we need.”
“Ms. J is a special teacher because she wakes up the power that we all have in ourselves. She has taught us writing skills that are miles ahead from where we started because she cares about our future.”

P72 Burning Questions
Data-Driven Instruction & Assessment
Paul Bambrick-Santoyo

P73 Conclusions
Data-Driven Instruction & Assessment
Paul Bambrick-Santoyo

P74